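- Server-side Troubleshooting

- Port Already in Use

- Page does not reflect the upgrade after the web process is upgraded

- JMS server fails to start

- Wrong SERVER IP prevents zmcServer from starting

- Wrong registration code (license) causes server process failures

- mongoDB process exits abnormally and fails to restart

- mongoDB fails to start with "Address already in use for socket"

- zmcServer reports mongodb: can't say something

- Web account locked after too many wrong password attempts

- Error when accessing the [Daemon Manager] page

- SMS modem reports gnu.io.NoSuchPortException

- Common errors when integrating with the SMS center

- Common errors when integrating with email

- Wrong PATH setting: the user cannot find the required commands and zmcServer fails to start

- HeartBeat not exists,maybe disconnect to server!

- Resource names shown as numbers in TopTen

- Too many CLOSE_WAIT connections trigger alarm 31040

- Daemon Manager shows 0 client processes

- Northbound task generates a CSV whose field order differs from the order entered on the page

- mongodb startup failure prevents alarms from being generated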

- Client-side Troubleshooting

- zmcDaemon process log reports: Call Time out

- agentQueue shows a repeating selector problem

- Client reports: Java heap space out of memory

- Fail to get script resource error

- IODectect module reports a null pointer error

- Probe task running error ( 31040 ) alarm

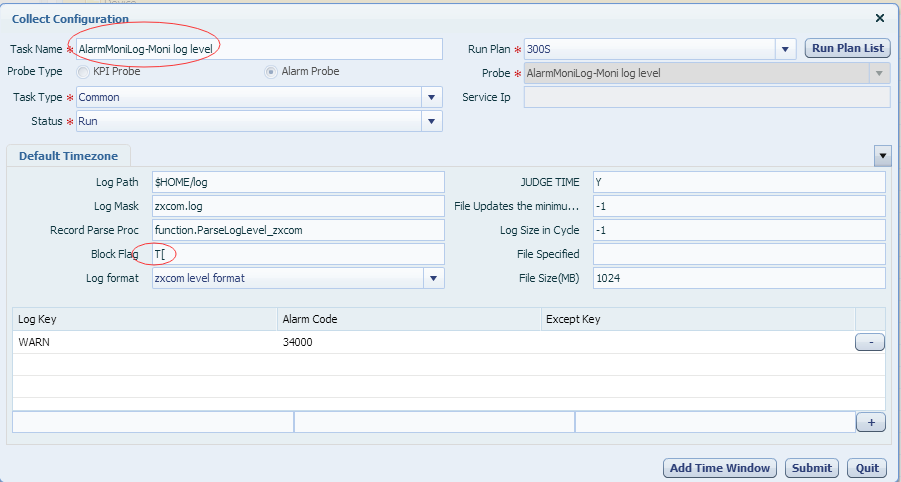

- The block flag error and cause buffer upper 10k lines error

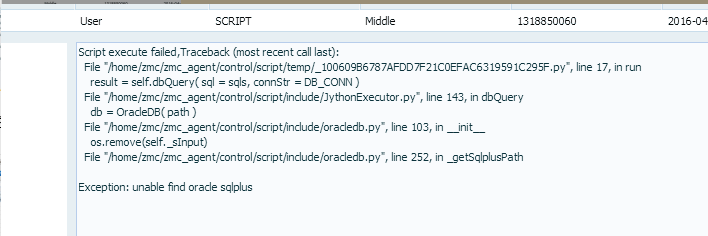

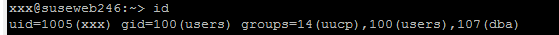

- KPI task reports Unable find oracle sqlplus

- IOError: [Errno 13] Permission denied: ‘/ztesoft/ocs/zmc_agent/control/probedata/temp/NM_CDR_xxx

- RuntimeError: SpaceInfo::_diskIO4LinuxIORW(): execute [sar -d 1 3] result is unexcept format

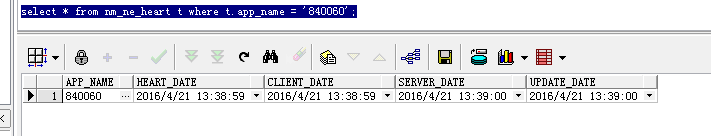

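- KPI_220 missing alarm

- KPI_228 missing alarm

- Wrong permissions on generated files prevent reading KPI data: Permission denied

- A script-type task triggered by Task stays in the RUNNING state after execution

- NE(xxx) HeartBeat not exists,maybe disconnect to server!

- Probe execution errors contain garbled characters

- Probe troubleshooting

- Defaults requiretty //this line must be commented out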

- Appendix

- Revision History

ZSmart Monitoring & Control

Troubleshooting Manual

Contents

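1 Server-side Troubleshooting

1.1 Port Already in Use

1.1.1 Symptom

1.1.2 Troubleshooting Steps

1.1.3 Resolution

1.2 Page does not reflect the upgrade after the web process is upgraded

1.2.1 Symptom

1.2.2 Resolution

1.3 JMS server fails to start

1.3.1 Symptom

1.3.2 Troubleshooting Steps

1.3.3 Resolution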

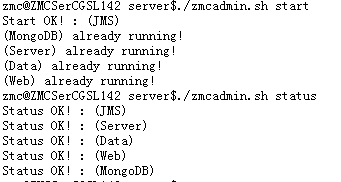

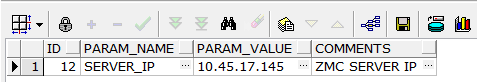

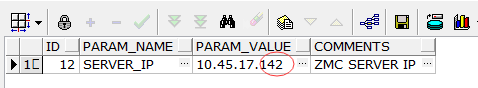

1.4 Wrong SERVER IP prevents zmcServer from starting

1.4.1 Symptom

1.4.2 Resolution

1.5 Wrong registration code (license) causes server process failures

1.5.1 Symptom

1.5.2 Resolution

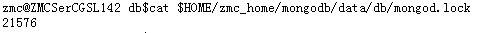

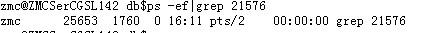

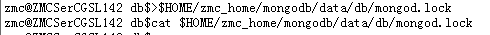

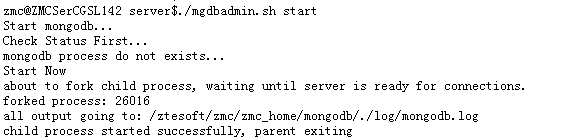

1.6 mongoDB process exits abnormally and fails to restart

1.6.1 Symptom

1.6.2 Resolution

1.7 zmcServer reports mongodb: can't say something

1.7.1 Symptom

1.7.2 Troubleshooting Steps

1.7.3 Resolution

1.8 Web account locked after too many wrong password attempts

1.8.1 Symptom

1.8.2 Resolution

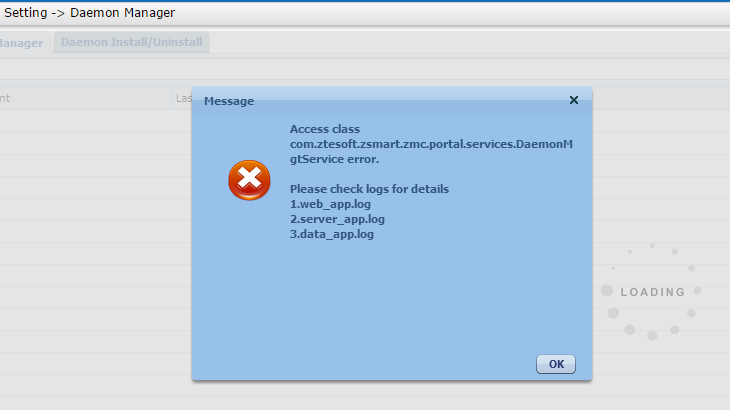

1.9 Error when accessing the [Daemon Manager] page

1.9.1 Symptom

1.9.2 Resolution

1.10 SMS modem reports gnu.io.NoSuchPortException

1.10.1 Symptom

1.10.2 Resolution

1.11 Common errors when integrating with the SMS center

1.11.1 Connection refused

1.11.2 Odd-length string error

1.11.3 Execute SMPPHelper end with False error

1.12 Common errors when integrating with email

1.12.1 Could not connect to SMTP host

1.12.2 535 5.7.8 Error: authentication failed: authentication failure error

1.12.3 550 5.7.1 Client does not have permissions to send as this sender error

2 Client-side Troubleshooting

2.1 zmcDaemon process log reports: Call Time out

2.1.1 Symptom

2.1.2 Resolution

2.2 agentQueue shows a repeating selector problem

2.2.1 Symptom

2.2.2 Resolution

2.3 Client reports: Java heap space out of memory

2.3.1 Symptom

2.3.2 Resolution

2.4 Fail to get script resource error

2.4.1 Symptom

2.4.2 Resolution

2.5 IODectect module reports a null pointer error

2.5.1 Symptom

2.5.2 Resolution

2.6 Probe task running error ( 31040 ) alarm

2.6.1 Symptom

2.6.2 Resolution

2.7 The block flag error and cause buffer upper 10k lines error

2.7.1 Symptom

2.7.2 Resolution

3 Appendix

3.1 SMPP Error Codes

3.2 SMPP Number Type (TON)

3.3 SMPP Numbering Plan (NPI)

Revision History

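Server-side Troubleshooting

Port Already in Use

The ZMC server consists of several processes, including JMS, MongoDB, zmcServer, zmcData and zmcWeb, each of which listens on one or more ports when it starts. If a port cannot be bound, the corresponding process either fails to start or starts with impaired functionality. The example below walks through a JMS server port conflict.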

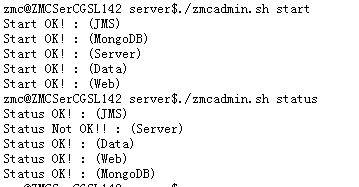

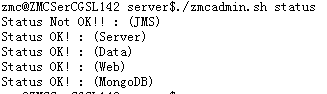

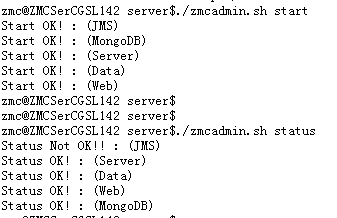

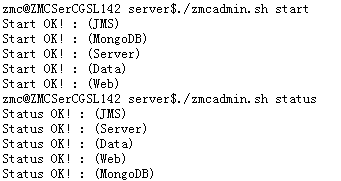

Symptom

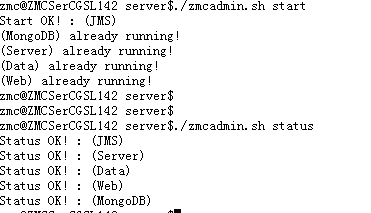

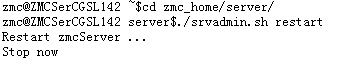

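Start the ZMC server:

After startup, query the process status again; JMS has failed to start:

Troubleshooting Steps

Check the logs

Check the JMS log $HOME/zmc_home/jms_provider/data/activemq.log, which contains the following error: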

2016-02-15 11:11:07,674 | ERROR | Failed to start Apache ActiveMQ (localhost, ID:ZMCSerCGSL142-54417-1455505867475-0:1). Reason: java.io.IOException: Transport Connector could not be registered in JMX: Failed to bind to server socket: tcp://0.0.0.0:61616?maximumConnections=1000&wireformat.maxFrameSize=104857600 due to: java.net.BindException: Address already in use | org.apache.activemq.broker.BrokerService | main

java.io.IOException: Transport Connector could not be registered in JMX: Failed to bind to server socket: tcp://0.0.0.0:61616?maximumConnections=1000&wireformat.maxFrameSize=104857600 due to: java.net.BindException: Address already in use

at org.apache.activemq.util.IOExceptionSupport.create(IOExceptionSupport.java:27)

at org.apache.activemq.broker.BrokerService.registerConnectorMBean(BrokerService.java:1977)

at org.apache.activemq.broker.BrokerService.startTransportConnector(BrokerService.java:2468)

at org.apache.activemq.broker.BrokerService.startAllConnectors(BrokerService.java:2385)

at org.apache.activemq.broker.BrokerService.doStartBroker(BrokerService.java:684)

at org.apache.activemq.broker.BrokerService.startBroker(BrokerService.java:642)

at org.apache.activemq.broker.BrokerService.start(BrokerService.java:578)

at org.apache.activemq.xbean.XBeanBrokerService.afterPropertiesSet(XBeanBrokerService.java:58)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.invokeCustomInitMethod(AbstractAutowireCapableBeanFactory.java:1546)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.invokeInitMethods(AbstractAutowireCapableBeanFactory.java:1487)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.initializeBean(AbstractAutowireCapableBeanFactory.java:1419)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:518)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:455)

at org.springframework.beans.factory.support.AbstractBeanFactory$1.getObject(AbstractBeanFactory.java:293)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:222)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:290)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:192)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.preInstantiateSingletons(DefaultListableBeanFactory.java:585)

at org.springframework.context.support.AbstractApplicationContext.finishBeanFactoryInitialization(AbstractApplicationContext.java:895)

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:425)

at org.apache.xbean.spring.context.ResourceXmlApplicationContext.

at org.apache.xbean.spring.context.ResourceXmlApplicationContext.

at org.apache.activemq.xbean.XBeanBrokerFactory$1.

at org.apache.activemq.xbean.XBeanBrokerFactory.createApplicationContext(XBeanBrokerFactory.java:102)

at org.apache.activemq.xbean.XBeanBrokerFactory.createBroker(XBeanBrokerFactory.java:66)

at org.apache.activemq.broker.BrokerFactory.createBroker(BrokerFactory.java:71)

at org.apache.activemq.broker.BrokerFactory.createBroker(BrokerFactory.java:54)

at org.apache.activemq.console.command.StartCommand.startBroker(StartCommand.java:115)

at org.apache.activemq.console.command.StartCommand.runTask(StartCommand.java:74)

at org.apache.activemq.console.command.AbstractCommand.execute(AbstractCommand.java:57)

at org.apache.activemq.console.command.ShellCommand.runTask(ShellCommand.java:148)

at org.apache.activemq.console.command.AbstractCommand.execute(AbstractCommand.java:57)

at org.apache.activemq.console.command.ShellCommand.main(ShellCommand.java:90)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.activemq.console.Main.runTaskClass(Main.java:262)

at org.apache.activemq.console.Main.main(Main.java:115)

Caused by: java.io.IOException: Failed to bind to server socket: tcp://0.0.0.0:61616?maximumConnections=1000&wireformat.maxFrameSize=104857600 due to: java.net.BindException: Address already in use

at org.apache.activemq.util.IOExceptionSupport.create(IOExceptionSupport.java:33)

at org.apache.activemq.transport.tcp.TcpTransportServer.bind(TcpTransportServer.java:138)

at org.apache.activemq.transport.tcp.TcpTransportFactory.doBind(TcpTransportFactory.java:60)

at org.apache.activemq.transport.TransportFactory.bind(TransportFactory.java:124)

at org.apache.activemq.broker.TransportConnector.createTransportServer(TransportConnector.java:310)

at org.apache.activemq.broker.TransportConnector.getServer(TransportConnector.java:136)

at org.apache.activemq.broker.TransportConnector.asManagedConnector(TransportConnector.java:105)

at org.apache.activemq.broker.BrokerService.registerConnectorMBean(BrokerService.java:1972)

… 41 more

Caused by: java.net.BindException: Address already in use

at java.net.PlainSocketImpl.socketBind(Native Method)

at java.net.PlainSocketImpl.bind(PlainSocketImpl.java:359)

at java.net.ServerSocket.bind(ServerSocket.java:319)

at java.net.ServerSocket.

at javax.net.DefaultServerSocketFactory.createServerSocket(ServerSocketFactory.java:170)

at org.apache.activemq.transport.tcp.TcpTransportServer.bind(TcpTransportServer.java:134)

… 47 more

The log shows an Address already in use error while binding to 0.0.0.0:61616, which means the port is already held by another process.

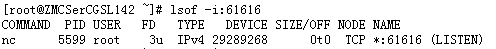

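Identify the process holding the port

Use lsof -i:PORT to find out which process holds the port. Run it as root: as an ordinary user, lsof only reports that user's own processes, and the process holding the port often belongs to a different user.

lsof -i:61616

Look up the process by its PID:

ps -ef|grep 5599

In this example, the process was started deliberately to occupy port 61616.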
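Before starting or restarting the server, the same kind of conflict can be spotted up front. A minimal Python sketch, not part of ZMC: the helper name `port_in_use` is illustrative, and the port list assumes the defaults seen in this manual's logs (61616 for JMS, 13567 for MongoDB, 1099 for the RMI registry). It tries to bind each port the way a server would, so a port is reported busy only when another socket already holds it. Unlike `lsof`, it cannot tell you which process that is.

```python
import errno
import socket

# Ports seen in this manual's examples (assumed defaults; check your own config).
ZMC_PORTS = {"JMS": 61616, "MongoDB": 13567, "RMI registry": 1099}


def port_in_use(port, host="0.0.0.0"):
    """Return True if binding host:port fails with EADDRINUSE."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Servers usually set SO_REUSEADDR too, so sockets lingering in
        # TIME_WAIT are not counted; an active listener still makes bind() fail.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. EACCES for ports below 1024 as a non-root user
    return False


if __name__ == "__main__":
    for name, port in ZMC_PORTS.items():
        state = "IN USE" if port_in_use(port) else "free"
        print(f"{name:<13} {port:>5}  {state}")
```

If a port is reported as in use, go back to `lsof` (as root) to find the owning process.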

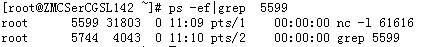

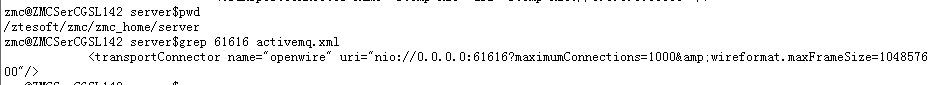

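Resolution

Once you have found the process holding the port, decide how to proceed. If it is a critical system process or a business process, the only option is to change the configuration of JMS (or whichever ZMC process is affected) so that it uses a port not yet in use on this host.

For JMS, change the port number in zmchome/server/activemq.xml: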

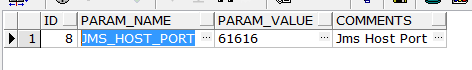

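Also update the corresponding field in the system_params table in the database:

Then restart the JMS process.

If the process holding the port is unimportant, or is a hung JMS process itself, kill it and then restart the JMS server process:

kill 5599

zmcadmin.sh start

The process now starts normally.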

Page does not reflect the upgrade after the web process is upgraded

Symptom

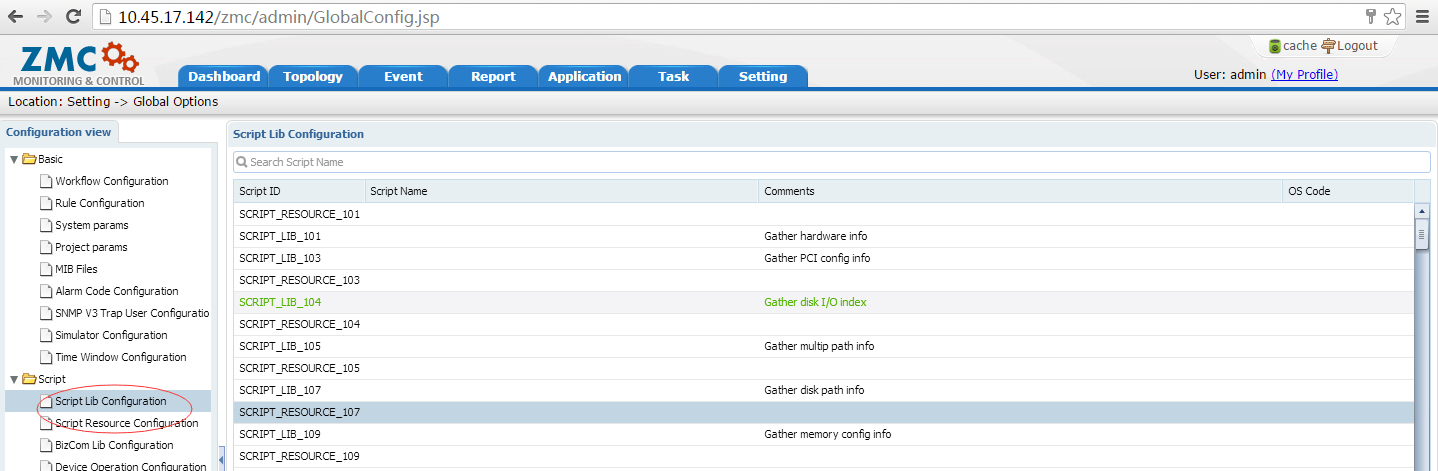

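After a system upgrade, some functions change and the pages look different, yet the browser still shows the old version. For example, after upgrading from v8.0.20 to v8.0.21, [Script Lib Configuration] and [Script Resource Configuration] are merged into one page, but Chrome still shows the old interface:

Opening the page from another machine or another browser shows that it has in fact been updated:

Resolution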

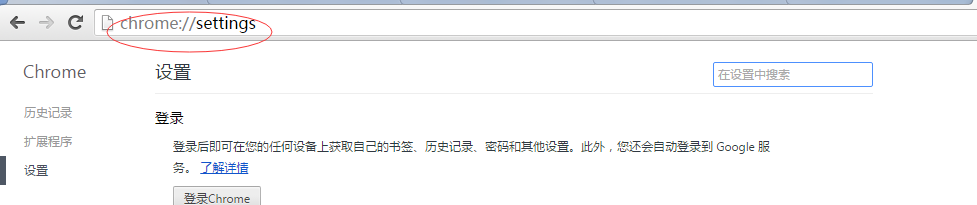

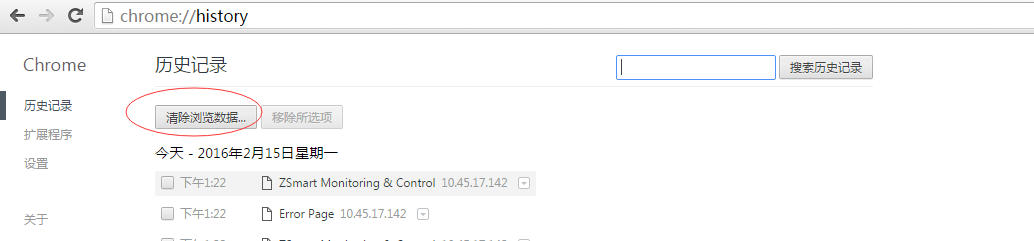

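Clear the browser's cached data. For Chrome:

In [Settings],

select [History],

click [Clear browsing data],

and clear the cached files.

Refresh the page; it now shows the upgraded version:

If pages report errors after an on-site upgrade, or the pages do not match the back-end programs, clearing the browser cache should be the first thing to try.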

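JMS server fails to start

Symptom

After starting the ZMC server processes, a status query shows that the JMS process did not start.

Troubleshooting Steps

Check the logs

Check the Java version by running java -version as the zmc user; version 1.6 or later is required.

Check the JMS log $HOME/zmc_home/jms_provider/data/activemq.log; it has not been updated.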
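The Java version check can also be scripted. A minimal Python sketch, assuming `java` is on the zmc user's PATH; the function names are illustrative. Java 8 and earlier report themselves as `1.x` (the logs in this manual show `1.6.0_22`), while Java 9 and later report `9`, `11` and so on, so the major version has to be normalised before comparing it with the 1.6 requirement.

```python
import re
import subprocess

_VERSION_RE = re.compile(r'version "(\d+)(?:\.(\d+))?')


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output."""
    match = _VERSION_RE.search(version_output)
    if match is None:
        raise ValueError("no version string found")
    first = int(match.group(1))
    # Legacy numbering: "1.6.0_22" means Java 6.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def installed_java_major():
    # `java -version` writes its banner to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)
```

For example, `java_major_version('java version "1.6.0_22"')` returns 6, which satisfies the "1.6 or later" requirement.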

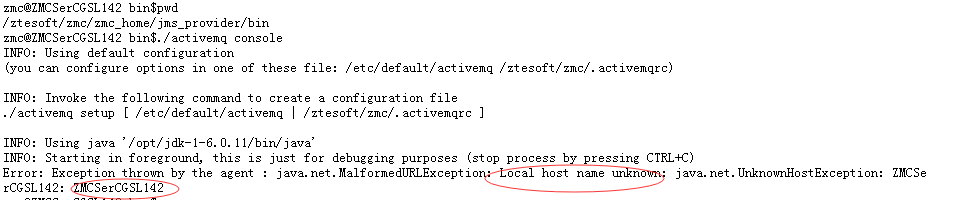

Run the test command line

In $HOME/zmchome/jms_provider/bin, run:

./activemq console

The output shows that the host name ZMCSerCGSL142 (the name of the ZMC server host itself) cannot be resolved.
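The same lookup can be checked before starting ActiveMQ. A minimal Python sketch; the helper name `resolve` is illustrative. It asks the system resolver (normally `/etc/hosts` first, then DNS) for the address of the local host name, which is the lookup that failed in this example.

```python
import socket


def resolve(hostname):
    """Return the IPv4 address for hostname, or None if it cannot be resolved."""
    try:
        return socket.gethostbyname(hostname)
    except socket.gaierror:
        return None


if __name__ == "__main__":
    name = socket.gethostname()  # e.g. ZMCSerCGSL142 in the example above
    address = resolve(name)
    if address is None:
        print(f"{name} does not resolve; add it to /etc/hosts as root")
    else:
        print(f"{name} -> {address}")
```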

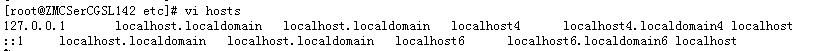

Resolution

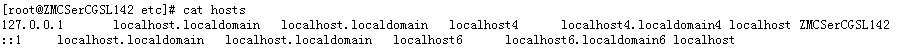

As root, add the host name ZMCSerCGSL142 to /etc/hosts:

Before:

After:

Start the JMS server process again:

The process starts successfully.

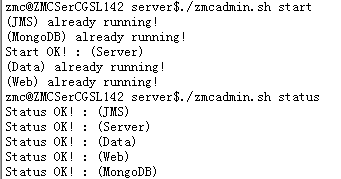

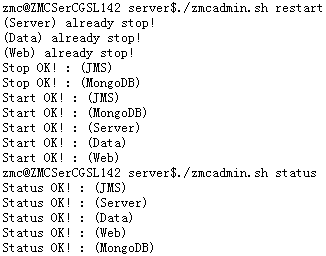

Wrong SERVER IP prevents zmcServer from starting

Symptom

Resolution

Check the log $HOME/zmc_home/server/logs/server_app.log:

7831 2016-02-15 15:02:08.386 [MainThread] ERROR c.z.z.z.r.b.ZMCServer—> SERVER IP 10.45.17.145 not exits on this server, system exit

7831 2016-02-15 15:02:08.386 [MainThread] ERROR c.z.z.z.s.m.ServerStarter—> Self configuration verify failed, exit

The log shows SERVER IP 10.45.17.145, whereas the host's actual SERVER_IP is 10.45.17.142. Correct the record in the database:

SELECT * FROM System_Params t WHERE t.param_value='10.45.17.145' FOR UPDATE;

Change it to:

Start the ZMC server processes again:

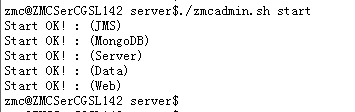

Startup succeeds.
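Before editing the database, you can confirm which address actually belongs to the host. A minimal Python sketch that approximates zmcServer's start-up check (it is not the product's own code, and `ip_is_local` is an illustrative name): binding a socket to an address only succeeds when that address is configured on a local interface.

```python
import errno
import socket


def ip_is_local(ip):
    """Return True if ip is configured on one of this host's interfaces.

    Caveat: if the sysctl net.ipv4.ip_nonlocal_bind is enabled, bind()
    accepts any address and this check always returns True.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((ip, 0))  # port 0: let the kernel pick any free port
        except OSError as exc:
            if exc.errno == errno.EADDRNOTAVAIL:
                return False
            raise
    return True


if __name__ == "__main__":
    for candidate in ("10.45.17.145", "10.45.17.142"):
        print(candidate, "local" if ip_is_local(candidate) else "NOT on this host")
```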

Wrong registration code (license) causes server process failures

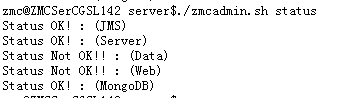

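Symptom

After the ZMC server processes are started, the status shows them running normally:

Logging in to the server web page fails. Checking the processes again:

shows that zmcWeb and zmcData have gone down.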

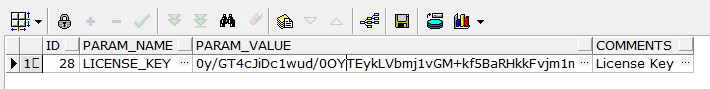

Resolution

Check the zmcData log $HOME/zmc_home/server/logs/data_app.log:

43565 2016-02-15 15:10:39.062 [main] ERROR c.z.z.z.c.u.SeqUtils—> System Project Id or License can not be empty, please check install manual book

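The log says that the Project Id or License is empty.

The Project Id is embedded in the license, so check whether the license record in system_params is correct. If it is not, replace it with the official license issued when the version was requested:

SELECT * FROM System_Params t WHERE t.param_name='LICENSE_KEY' FOR UPDATE;

After updating the license, restart the processes:

Startup succeeds and the web page can be accessed normally.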

mongoDB process exits abnormally and fails to restart

Symptom

Starting the mongoDB process reports an error:

zmc@ZMCSerCGSL142 server$./mgdbadmin.sh start

Start mongodb…

Check Status First…

mongodb process do not exists…

Start Now

about to fork child process, waiting until server is ready for connections.

forked process: 21736

all output going to: /ztesoft/zmc/zmc_home/mongodb/./log/mongodb.log

ERROR: child process failed, exited with error number 100

Resolution

Check the log $HOME/zmchome/mongodb/log/mongodb.log:

Mon Feb 15 15:47:42.354 [initandlisten] options: { config: “mongodb.conf”, dbpath: “./data/db”, fork: “true”, logappend: “true”, logpath: “./log/mongodb.log”, nojournal: “true”, port: 3567 }

**

Unclean shutdown detected.

Please visit http://dochub.mongodb.org/core/repair for recovery instructions.

*

Mon Feb 15 15:47:42.421 [initandlisten] exception in initAndListen: 12596 old lock file, terminating

Mon Feb 15 15:47:42.421 dbexit:

Mon Feb 15 15:47:42.421 [initandlisten] shutdown: going to close listening sockets…

Mon Feb 15 15:47:42.421 [initandlisten] shutdown: going to flush diaglog…

Mon Feb 15 15:47:42.421 [initandlisten] shutdown: going to close sockets…

Mon Feb 15 15:47:42.421 [initandlisten] shutdown: waiting for fs preallocator…

Mon Feb 15 15:47:42.421 [initandlisten] shutdown: closing all files…

Mon Feb 15 15:47:42.421 [initandlisten] closeAllFiles() finished

Mon Feb 15 15:47:42.421 dbexit: really exiting now

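The log shows that an old lock file was not cleaned up. When mongoDB shuts down normally it empties the lock file automatically, but if the process was killed forcibly with kill -9, the lock file must be cleaned up by hand.

View the lock file:

cat $HOME/zmc_home/mongodb/data/db/mongod.lock

Check whether the process recorded in it has exited:

ps -ef|grep 21576|grep -v grep

The process no longer exists, so the file can be emptied:

>$HOME/zmc_home/mongodb/data/db/mongod.lock

Start mongoDB again:

Startup succeeds.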
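The manual procedure above (read the PID from `mongod.lock`, make sure that process is gone, then empty the file) can be wrapped in a helper so that the lock is never cleared under a running mongod. A minimal Python sketch; the function names are illustrative and the default path is the one used in this section, which may differ on your host.

```python
import os

LOCK_FILE = os.path.expandvars("$HOME/zmc_home/mongodb/data/db/mongod.lock")


def pid_alive(pid):
    """Signal 0 only checks that the process exists; nothing is delivered."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # exists, but is owned by another user
    return True


def clear_stale_lock(path=LOCK_FILE):
    """Empty the lock file if the PID it records is no longer running.

    Returns True if the file was cleared, False if it was left alone.
    """
    with open(path) as fh:
        content = fh.read().strip()
    if not content:
        return False  # already empty: nothing to clean
    pid = int(content)
    if pid > 0 and pid_alive(pid):
        return False  # mongod is still running: never touch its lock
    # Same effect as `>mongod.lock` in the shell.
    open(path, "w").close()
    return True
```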

mongoDB fails to start with "Address already in use for socket"

Symptom

* SERVER RESTARTED *

Thu Nov 3 18:58:55.843 [initandlisten] MongoDB starting : pid=26263 port=13567 dbpath=/home/zmc/zmc_home/mongodb/./data/db 64-bit host=zmc

Thu Nov 3 18:58:55.844 [initandlisten] db version v2.4.10

Thu Nov 3 18:58:55.844 [initandlisten] git version: e3d78955d181e475345ebd60053a4738a4c5268a

Thu Nov 3 18:58:55.844 [initandlisten] build info: Linux ip-10-2-29-40 2.6.21.7-2.ec2.v1.2.fc8xen #1 SMP Fri Nov 20 17:48:28 EST 2009 x86_64 BOOST_LIB_VERSION=1_49

Thu Nov 3 18:58:55.844 [initandlisten] allocator: tcmalloc

Thu Nov 3 18:58:55.844 [initandlisten] options: { config: “mongodb.conf”, dbpath: “./data/db”, fork: “true”, logappend: “true”, logpath: “./log/mongodb.log”, nojournal: “true”, port: 13567 }

Thu Nov 3 18:58:55.879 [FileAllocator] allocating new datafile /home/zmc/zmc_home/mongodb/./data/db/local.ns, filling with zeroes…

Thu Nov 3 18:58:55.879 [FileAllocator] creating directory /home/zmc/zmc_home/mongodb/./data/db/_tmp

Thu Nov 3 18:58:55.890 [FileAllocator] done allocating datafile /home/zmc/zmc_home/mongodb/./data/db/local.ns, size: 16MB, took 0.005 secs

Thu Nov 3 18:58:55.906 [FileAllocator] allocating new datafile /home/zmc/zmc_home/mongodb/./data/db/local.0, filling with zeroes…

Thu Nov 3 18:58:55.914 [FileAllocator] done allocating datafile /home/zmc/zmc_home/mongodb/./data/db/local.0, size: 64MB, took 0.007 secs

Thu Nov 3 18:58:55.915 [initandlisten] ERROR: listen(): bind() failed errno:98 Address already in use for socket: 0.0.0.0:13567

Thu Nov 3 18:58:55.915 [initandlisten] ERROR: addr already in use

Thu Nov 3 18:58:55.915 [initandlisten] now exiting

Thu Nov 3 18:58:55.915 dbexit:

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: going to close listening sockets…

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: going to flush diaglog…

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: going to close sockets…

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: waiting for fs preallocator…

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: closing all files…

Thu Nov 3 18:58:55.915 [initandlisten] closeAllFiles() finished

Thu Nov 3 18:58:55.915 [initandlisten] shutdown: removing fs lock…

Thu Nov 3 18:58:55.915 dbexit: really exiting now

Troubleshooting Steps

Run lsof | grep 13567 to find the process holding port 13567.

Kill that process with kill -9 PID.

Restart mongoDB.

The problem is resolved.

zmcServer reports mongodb: can't say something

Symptom

After zmcServer starts normally, the following error appears in $HOME/zmc_home/server/logs/server_app.log:

3915495 2016-02-15 16:30:13.017 [Log Sample Thread] ERROR c.z.z.z.l.a.LogAnalyzer—>

com.mongodb.MongoException$Network: can’t say something

at com.mongodb.DBTCPConnector.say(DBTCPConnector.java:194) [mongo-2.10.1.jar:na]

at com.mongodb.DBTCPConnector.say(DBTCPConnector.java:155) [mongo-2.10.1.jar:na]

at com.mongodb.DBApiLayer$MyCollection.insert(DBApiLayer.java:270) [mongo-2.10.1.jar:na]

at com.mongodb.DBApiLayer$MyCollection.insert(DBApiLayer.java:226) [mongo-2.10.1.jar:na]

at com.mongodb.DBCollection.insert(DBCollection.java:147) [mongo-2.10.1.jar:na]

at com.mongodb.DBCollection.insert(DBCollection.java:132) [mongo-2.10.1.jar:na]

at com.ztesoft.zsmart.zmc.core.access.MongodbClient.insertDBObjectList(MongodbClient.java:62) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.log.analyzer.LogSample.load(LogSample.java:146) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.log.analyzer.LogAnalyzer.startExactSample(LogAnalyzer.java:59) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.log.analyzer.LogAnalyzer.access$100(LogAnalyzer.java:25) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.log.analyzer.LogAnalyzer$1.run(LogAnalyzer.java:174) [zmc_modules.jar:na]

at java.lang.Thread.run(Thread.java:679) [na:1.6.0_22]

Caused by: java.io.IOException: couldn’t connect to [/10.45.17.142:13567] bc:java.net.ConnectException: Connection refused

at com.mongodb.DBPort._open(DBPort.java:214) [mongo-2.10.1.jar:na]

at com.mongodb.DBPort.go(DBPort.java:107) [mongo-2.10.1.jar:na]

at com.mongodb.DBPort.go(DBPort.java:84) [mongo-2.10.1.jar:na]

at com.mongodb.DBPort.say(DBPort.java:79) [mongo-2.10.1.jar:na]

at com.mongodb.DBTCPConnector.say(DBTCPConnector.java:181) [mongo-2.10.1.jar:na]

… 11 common frames omitted

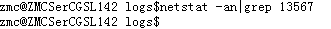

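Troubleshooting Steps

Check whether the port is listening

The log shows that zmcServer connects to mongoDB on port 13567.

netstat -an|grep 13567

Port 13567 is not in the listening state.

Check which ports mongoDB is listening on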

zmc@ZMCSerCGSL142 logs$ps -ef|grep mongodb

zmc 26016 1 0 16:14 ? 00:00:03 ./bin/mongod -f mongodb.conf

zmc 32177 32688 0 16:52 pts/0 00:00:00 grep mongodb

zmc@ZMCSerCGSL142 logs$lsof -p 26016|grep LISTEN

mongod 26016 zmc 7u IPv4 29507523 0t0 TCP :oap (LISTEN)

mongod 26016 zmc 9u IPv4 29507527 0t0 TCP :tram (LISTEN)

zmc@ZMCSerCGSL142 logs$grep oap /etc/services

soap-beep 605/tcp # SOAP over BEEP

soap-beep 605/udp # SOAP over BEEP

netconfsoaphttp 832/tcp # NETCONF for SOAP over HTTPS

netconfsoaphttp 832/udp # NETCONF for SOAP over HTTPS

netconfsoapbeep 833/tcp # NETCONF for SOAP over BEEP

netconfsoapbeep 833/udp # NETCONF for SOAP over BEEP

loaprobe 1634/tcp # Log On America Probe

loaprobe 1634/udp # Log On America Probe

oap 3567/tcp # Object Access Protocol

oap 3567/udp # Object Access Protocol

oap-s 3568/tcp # Object Access Protocol over SSL

oap-s 3568/udp # Object Access Protocol over SSL

m-oap 5567/tcp # Multicast Object Access Protocol

m-oap 5567/udp # Multicast Object Access Protocol

soap-http 7627/tcp # SOAP Service Port

soap-http 7627/udp # SOAP Service Port

oap-admin 8567/tcp # Object Access Protocol Administration

oap-admin 8567/udp # Object Access Protocol Administration

trisoap 10200/tcp # Trigence AE Soap Service

trisoap 10200/udp # Trigence AE Soap Service

MOS-soap 10543/tcp # MOS SOAP Default Port

MOS-soap 10543/udp # MOS SOAP Default Port

MOS-soap-opt 10544/tcp # MOS SOAP Optional Port

MOS-soap-opt 10544/udp # MOS SOAP Optional Port

amt-soap-http 16992/tcp # Intel(R) AMT SOAP/HTTP

amt-soap-http 16992/udp # Intel(R) AMT SOAP/HTTP

amt-soap-https 16993/tcp # Intel(R) AMT SOAP/HTTPS

amt-soap-https 16993/udp # Intel(R) AMT SOAP/HTTPS

zmc@ZMCSerCGSL142 logs$grep tram /etc/services

tram 4567/tcp # TRAM

tram 4567/udp # TRAM

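The output shows that mongoDB is listening on ports 3567 and 4567. lsof displays them by their /etc/services names, oap and tram (lsof -P prints the port numbers directly); 4567 is mongod's HTTP status interface, which listens on the main port plus 1000. Neither matches port 13567, which zmcServer requires.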

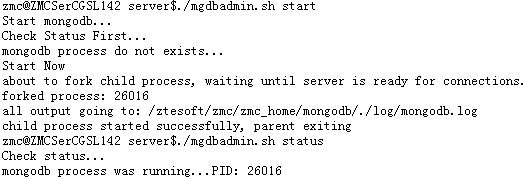

Resolution

Change the port in the mongoDB configuration file from 3567 to 13567 and restart mongoDB:

zmc@ZMCSerCGSL142 mongodb$pwd

/ztesoft/zmc/zmc_home/mongodb

zmc@ZMCSerCGSL142 mongodb$vi mongodb.conf

port=13567

dbpath=./data/db

logpath=./log/mongodb.log

logappend=true

fork=true

nojournal=true

zmc@ZMCSerCGSL142 server$./mgdbadmin.sh stop

Stop mongodb…

Kill PID : 26016

zmc@ZMCSerCGSL142 server$./mgdbadmin.sh start

Start mongodb…

Check Status First…

mongodb process do not exists…

Start Now

about to fork child process, waiting until server is ready for connections.

forked process: 858

all output going to: /ztesoft/zmc/zmc_home/mongodb/./log/mongodb.log

child process started successfully, parent exiting

server_app.log no longer reports new mongoDB connection errors.
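A mismatch like this can be caught before start-up by comparing the port in `mongodb.conf` with the port zmcServer expects (13567 in this manual's logs). A minimal Python sketch, assuming the simple `key=value` format shown above; `read_conf` and `check_port` are illustrative names. When no port is set, mongod falls back to its default of 27017.

```python
def read_conf(path):
    """Parse a key=value mongodb.conf (blank lines and # comments ignored)."""
    settings = {}
    with open(path) as fh:
        for raw in fh:
            line = raw.split("#", 1)[0].strip()
            if not line or "=" not in line:
                continue
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def check_port(path, expected_port=13567):
    """Return (ok, configured_port) for the mongod port setting."""
    configured = int(read_conf(path).get("port", 27017))  # 27017: mongod default
    return configured == expected_port, configured
```

Against the original configuration (`port=3567`), `check_port` returns `(False, 3567)`; after the fix it returns `(True, 13567)`.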

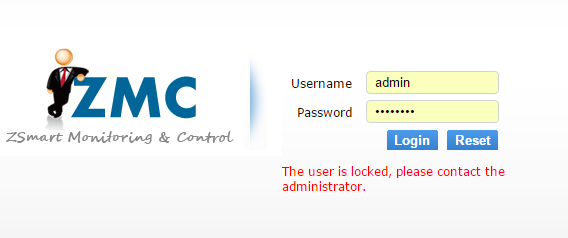

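Web account locked after too many wrong password attempts

Symptom

Too many attempts with a wrong password have locked the user account used to log in to ZMC Web:

Resolution

In the database, update the corresponding record in the sys_user table: set the account state to EFFECT and reset the failure count to 0:

UPDATE sys_user SET STATE='EFFECT',FAIL_TIMES=0 WHERE user_name='admin';

Once the database update is committed, refresh the web page and log in with the correct user name and password.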
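The same statement can be run from a script, with the user name passed as a bound parameter instead of edited into the SQL by hand. A minimal Python sketch using an in-memory SQLite table as a stand-in for `sys_user`: only the three columns named above are assumed, and the `LOCKED` state value and the `unlock_user` name are hypothetical. Against the real database you would use that database's own Python driver, whose placeholder syntax may differ from SQLite's `?`.

```python
import sqlite3


def unlock_user(conn, user_name):
    """Reset a locked ZMC Web account; returns the number of rows updated."""
    cur = conn.execute(
        "UPDATE sys_user SET STATE = 'EFFECT', FAIL_TIMES = 0 WHERE user_name = ?",
        (user_name,),
    )
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Stand-in schema: only the columns used by the UPDATE above.
    conn.execute("CREATE TABLE sys_user (user_name TEXT, STATE TEXT, FAIL_TIMES INTEGER)")
    conn.execute("INSERT INTO sys_user VALUES ('admin', 'LOCKED', 5)")
    print(unlock_user(conn, "admin"))  # 1
    print(conn.execute("SELECT STATE, FAIL_TIMES FROM sys_user").fetchone())  # ('EFFECT', 0)
```

A return value of 0 means no account with that name exists, which is worth checking before assuming the unlock worked.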

Error when accessing the [Daemon Manager] page

Symptom

Resolution

As the error message suggests, check the log $HOME/zmc_home/server/logs/web_app.log:

3061337 2016-02-17 15:44:49.967 [Schedule_Worker_High_Slave] ERROR c.z.z.z.c.a.RMIClient—> Fail to create remote object,exception :

java.rmi.ConnectException: Connection refused to host: 10.45.17.142; nested exception is:

java.net.ConnectException: Connection refused

at sun.rmi.transport.tcp.TCPEndpoint.newSocket(TCPEndpoint.java:619) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPChannel.createConnection(TCPChannel.java:216) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPChannel.newConnection(TCPChannel.java:202) [na:1.6.0_22]

at sun.rmi.server.UnicastRef.newCall(UnicastRef.java:340) [na:1.6.0_22]

at sun.rmi.registry.RegistryImpl_Stub.lookup(Unknown Source) [na:1.6.0_22]

at java.rmi.Naming.lookup(Naming.java:101) [na:1.6.0_22]

at com.ztesoft.zsmart.zmc.core.access.RMIClient.createRemoteObject(RMIClient.java:55) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.housekeeping.bll.AutoRefreshCacheJob.reloadCache(AutoRefreshCacheJob.java:67) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.housekeeping.bll.AutoRefreshCacheJob.perform(AutoRefreshCacheJob.java:52) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.core.scheduler.Slave.run(Worker.java:137) [zmc_core.jar:na]

Caused by: java.net.ConnectException: Connection refused

at java.net.PlainSocketImpl.socketConnect(Native Method) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:327) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:193) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:180) [na:1.6.0_22]

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:384) [na:1.6.0_22]

at java.net.Socket.connect(Socket.java:546) [na:1.6.0_22]

at java.net.Socket.connect(Socket.java:495) [na:1.6.0_22]

at java.net.Socket.

at java.net.Socket.

at sun.rmi.transport.proxy.RMIDirectSocketFactory.createSocket(RMIDirectSocketFactory.java:40) [na:1.6.0_22]

at sun.rmi.transport.proxy.RMIMasterSocketFactory.createSocket(RMIMasterSocketFactory.java:146) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPEndpoint.newSocket(TCPEndpoint.java:613) [na:1.6.0_22]

… 9 common frames omitted

The log shows that the web process failed when calling RMI.

Check the zmcServer log $HOME/zmc_home/server/logs/server_app.log, which contains the following:

22246 2016-02-17 16:07:24.354 [MainThread] ERROR c.z.z.z.s.m.ServerStarter—> ======ZMC Server launchs [error]======

com.ztesoft.zsmart.core.exception.BaseAppException: [RemoteException] null

at com.ztesoft.zsmart.zmc.core.access.RMIServer.start(RMIServer.java:64) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.server.main.ServerStarter.main(ServerStarter.java:249) [zmc_server.jar:na]

Caused by: java.rmi.server.ExportException: Port already in use: 1099; nested exception is:

java.net.BindException: Address already in use

at sun.rmi.transport.tcp.TCPTransport.listen(TCPTransport.java:328) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport.exportObject(TCPTransport.java:236) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPEndpoint.exportObject(TCPEndpoint.java:411) [na:1.6.0_22]

at sun.rmi.transport.LiveRef.exportObject(LiveRef.java:147) [na:1.6.0_22]

at sun.rmi.server.UnicastServerRef.exportObject(UnicastServerRef.java:207) [na:1.6.0_22]

at sun.rmi.registry.RegistryImpl.setup(RegistryImpl.java:120) [na:1.6.0_22]

at sun.rmi.registry.RegistryImpl.

at java.rmi.registry.LocateRegistry.createRegistry(LocateRegistry.java:203) [na:1.6.0_22]

at com.ztesoft.zsmart.zmc.core.access.RMIServer.start(RMIServer.java:44) [zmc_core.jar:na]

… 1 common frames omitted

Caused by: java.net.BindException: Address already in use

at java.net.PlainSocketImpl.socketBind(Native Method) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.bind(AbstractPlainSocketImpl.java:353) [na:1.6.0_22]

at java.net.ServerSocket.bind(ServerSocket.java:336) [na:1.6.0_22]

at java.net.ServerSocket.

at java.net.ServerSocket.

at sun.rmi.transport.proxy.RMIDirectSocketFactory.createServerSocket(RMIDirectSocketFactory.java:45) [na:1.6.0_22]

at sun.rmi.transport.proxy.RMIMasterSocketFactory.createServerSocket(RMIMasterSocketFactory.java:351) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPEndpoint.newServerSocket(TCPEndpoint.java:667) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport.listen(TCPTransport.java:317) [na:1.6.0_22]

… 9 common frames omitted

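Checking the processes shows that zmcServer failed to start:

server_app.log shows that the cause is port 1099 already being in use. After resolving this as described in [Port Already in Use], the fault is cleared.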

SMS modem reports gnu.io.NoSuchPortException

Symptom

When testing the SMS modem, clicking [Test] in the web interface returns a failure. $HOME/zmc_home/server/logs/web_app.log contains the following:

1942213 2016-02-18 09:52:29.723 [RMI TCP Connection(29)-127.0.0.1] ERROR c.z.z.z.s.SMSmodem—> Comm4AT Open error:[Traceback (most recent call last):

File “/ztesoft/zmc/zmc_home/server/script/SMSmodem.py”, line 113, in Open

self.hSerial.open()

File “/ztesoft/zmc/zmc_home/server/script/serial/serialjava.py”, line 64, in open

portId = comm.CommPortIdentifier.getPortIdentifier(self._port)

NoSuchPortException: gnu.io.NoSuchPortException

]

At the same time, $HOME/zmc_home/server/logs/zmcServer.nohup.out also reports an error:

check_group_uucp(): error testing lock file creation Error details:Permission deniedcheck_lock_status: No permission to create lock file.

please see: How can I use Lock Files with rxtx? in INSTALL

Resolution

Fixing "No permission to create lock file"

The zmc user must belong to the lock or uucp group (uucp on SUSE, lock on Red Hat-family systems). To add the group:

usermod -A uucp zmc (SUSE)

usermod -aG lock zmc (Red Hat)

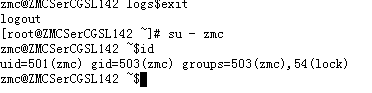

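In this example the operating system is CGSL, so the Red Hat command applies:

usermod -aG lock zmc

Log out of the current session, log in again, and check the current user's information: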

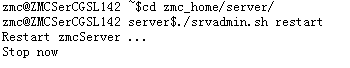

Restart the zmcServer process.

Test again: zmcServer.nohup.out no longer reports the error, so this problem is solved.
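Group membership can also be checked from a script. A minimal Python sketch using the standard `pwd` and `grp` modules (Unix only); `user_in_group` is an illustrative name. It reads the user and group databases, so it reflects `usermod` immediately. Running processes, including zmcServer, only receive the new group after a fresh login and a restart, which is why the steps above log out first.

```python
import grp
import pwd


def user_in_group(user, group):
    """True if user is a member of group, as primary or supplementary group."""
    try:
        group_entry = grp.getgrnam(group)
        user_entry = pwd.getpwnam(user)
    except KeyError:  # unknown user or unknown group
        return False
    return user_entry.pw_gid == group_entry.gr_gid or user in group_entry.gr_mem


if __name__ == "__main__":
    for g in ("lock", "uucp", "dialout"):
        print(g, user_in_group("zmc", g))
```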

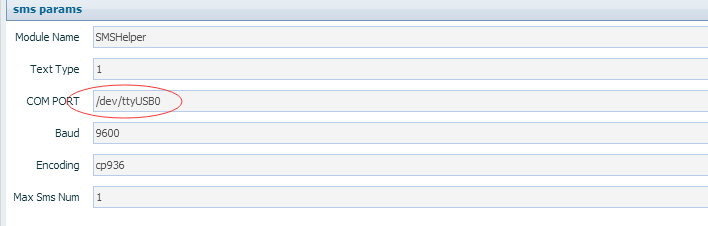

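Fixing NoSuchPortException

Check the port configured in the web interface:

On the ZMC server, check whether this device exists:

ls -ltr /dev/ttyUSB0

The device exists, but only root and members of the dialout group can read and write it.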

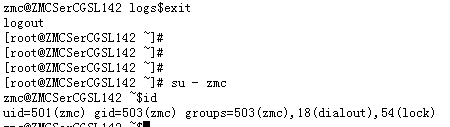

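Add the zmc user to the dialout group:

usermod -aG dialout zmc

Log out of the current session, log in again, and check which groups the user belongs to:

Restart the zmcServer process:

Test again: the port-not-found error is gone, and server_app.log now reports the error below. It is caused by no SIM card being inserted in the SMS modem and can be ignored here.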

24190 2016-02-18 16:06:08.445 [RMI TCP Connection(6)-127.0.0.1] WARN c.z.z.z.s.SMSmodem—> Failed to receive msg,because it is COM => Error.vtResult[1]=[AT+CMGF=1
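Before testing from the web page again, you can confirm that the zmc user can actually open the serial device. A minimal Python sketch; `/dev/ttyUSB0` is the device from this example and `describe_device` is an illustrative name. `os.access` checks against the real UID and GIDs of the calling process, so run it as zmc from a fresh login.

```python
import os
import stat


def describe_device(path="/dev/ttyUSB0"):
    """Return a short report on whether the current user can read/write path."""
    if not os.path.exists(path):
        return f"{path}: does not exist (check the port configured in the web UI)"
    mode = stat.filemode(os.stat(path).st_mode)
    if os.access(path, os.R_OK | os.W_OK):
        return f"{path}: {mode} read/write OK"
    return f"{path}: {mode} no read/write permission (check group membership)"


if __name__ == "__main__":
    print(describe_device())
```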

Common errors when integrating with the SMS center

Connection refused

Clicking the SMPP [Test] button returns a failure, and $HOME/zmchome/server/logs/server_app.log contains the following error:

1898634 2016-02-18 16:39:56.084 [RMI TCP Connection(18)-127.0.0.1] WARN c.z.z.z.s.TSMPPModem—> Socket Error, errmsg:error(111, ‘Connection refused’)

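For this problem, check whether the ZMC host can connect to the SMS center's port. On the ZMC server, run:

telnet SMSC_IP PORT

If telnet fails, verify the following:

1) Network connectivity: whether the route from the ZMC host to the SMS center host is working.

2) Whether a firewall blocks the port. The firewall may be the ZMC server's own configuration (iptables), a firewall in the network between the ZMC server and the SMS center, or the SMS center's own firewall configuration.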
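Where `telnet` is not installed on the ZMC host, the same reachability test takes a few lines of Python. A minimal sketch; `can_connect` is an illustrative name. `socket.create_connection` performs a plain TCP connect, so success, "connection refused" and a timeout correspond to what `telnet` would show. A timeout often points to a firewall silently dropping packets; a refusal means nothing is listening or something actively rejects the connection.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Try a TCP connect; return (True, "connected") or (False, reason)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except socket.timeout:
        return False, "timed out (often a firewall silently dropping packets)"
    except ConnectionRefusedError:
        return False, "refused (nothing listening, or a firewall rejecting)"
    except OSError as exc:
        return False, f"error: {exc}"
```

For example, `can_connect("172.25.121.100", 6501)` would test the SMSC address and port that appear in the SMPP log later in this chapter.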

Odd-length string error

Clicking the SMPP [Test] button returns a failure, and $HOME/zmc_home/server/logs/server_app.log contains the following error:

5967274 2016-02-18 17:47:44.724 [RMI TCP Connection(55)-127.0.0.1] ERROR c.z.z.z.s.TSMPPModem—> error at SendMSG:[Traceback (most recent call last):

File “/ztesoft/zmc/zmc_home/server/script/SMPPHelper.py”, line 491, in run

res = self.SendMSG(self.sStaff,self.sMsg,self.nMaxCount)

File “/ztesoft/zmc/zmc_home/server/script/SMPPHelper.py”, line 441, in SendMSG

if not self.Init():

File “/ztesoft/zmc/zmc_home/server/script/SMPPHelper.py”, line 219, in Init

(retFlag,retMsg) = self.login()

File “/ztesoft/zmc/zmc_home/server/script/SMPPHelper.py”, line 146, in login

(retFlag, retMsg) =self.send(smppMsg,True)

File “/ztesoft/zmc/zmc_home/server/script/SMPPHelper.py”, line 296, in send

data = smppMsg.pack()

File “/ztesoft/zmc/zmc_home/server/script/smpplib/smpp.py”, line 144, in pack

self.packBody()

File “/ztesoft/zmc/zmc_home/server/script/smpplib/smpp.py”, line 204, in packBody

self._Body = PACK_STR(self._SystemId , 16 ) \

TypeError: Odd-length string

]

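The log shows that the failure happens during login, while the SMPP BIND_TX message is being packed. This is usually caused by a badly formatted parameter; in practice, the field most likely to be wrong is the protocol version.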

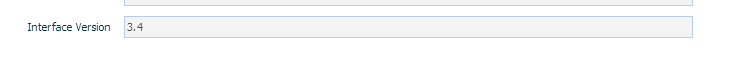

Check the protocol version field of the SMPP channel in the web interface:

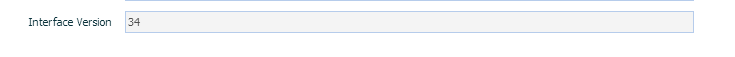

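The configured value is 3.4, which is invalid. Although the protocol version is SMPP 3.4, this field must be entered as 34 (digits only, with an even number of digits).

Change the field to the correct value:

Click [Cache] to refresh the configuration and test again; the test now succeeds.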
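The reason `3.4` fails while `34` works becomes clearer from the BIND body in the DEBUG log under "Execute SMPPHelper end with False" (`444353534d5300646373000034000000`). There the interface version travels as the single byte `0x34`, so the field appears to be treated as a hex string. A minimal Python sketch of that encoding, assuming the product's `PACK_STR` behaves the same way (its source is not shown here); the function names are illustrative and the field order follows the SMPP v3.4 bind PDU. In Python 3, `binascii.unhexlify` raises `binascii.Error: Odd-length string`, the counterpart of the Jython `TypeError` above.

```python
import binascii


def c_octet_string(value):
    """SMPP C-Octet String: ASCII text terminated by a NUL byte."""
    return value.encode("ascii") + b"\x00"


def bind_body(system_id, password, system_type, interface_version,
              addr_ton=0, addr_npi=0, address_range=""):
    """Build the body of a bind_* PDU in SMPP v3.4 field order."""
    return (
        c_octet_string(system_id)
        + c_octet_string(password)
        + c_octet_string(system_type)
        # Hex text -> one byte: "34" -> b"\x34"; "3.4" has odd length and fails.
        + binascii.unhexlify(interface_version)
        + bytes([addr_ton, addr_npi])
        + c_octet_string(address_range)
    )
```

With the values from that log, `bind_body("DCSSMS", "dcs", "", "34").hex()` gives `444353534d5300646373000034000000`, while `bind_body("DCSSMS", "dcs", "", "3.4")` raises `binascii.Error`.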

Execute SMPPHelper end with False error

If the test fails while integrating with the SMS center, check $HOME/zmc_home/server/logs/server_app.log:

18132173 2016-01-11 10:33:09.464 [RMI TCP Connection(10)- 172.25.127.154] DEBUG c.z.z.z.c.s.j.JythonScript—> Execute SMPPHelper start

18133499 2016-01-11 10:33:10.790 [RMI TCP Connection(10)- 172.25.127.154] DEBUG c.z.z.z.c.s.j.JythonScript—> Execute SMPPHelper end with False

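Because this log is not detailed enough, configure logback to raise the log level of the SMPP execution code to DEBUG.

Edit $HOME/zmc_home/server/logback_server.xml and add the following line just before the end of the file:

After the change, trigger the test again; the log now reads: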

216875 2016-01-11 13:16:20.831 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.c.s.j.JythonScript—> Execute SMPPHelper start

216883 2016-01-11 13:16:20.839 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> run smpphelper

216885 2016-01-11 13:16:20.841 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> Init TSMPPModem. HostIp=[172.25.121.100],HostPort=[6501],SystemId=[DCSSMS],Password=[dcs],CharCode=[2],SourceAddr=[85366032232300004002],SourceAddrTon=[0],DestAddrTon=[0],sLocalIp=[],sLocalPort=[]

216885 2016-01-11 13:16:20.841 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> initParams smpphelper

216886 2016-01-11 13:16:20.842 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> SendMSG params:66972272 111 2

216886 2016-01-11 13:16:20.842 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> recStaff = [66972272],message = [111]

216896 2016-01-11 13:16:20.852 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> Send PDU to socket buffer,Msg:

CommandLen :32

CommandId :BIND_TRANCEIVER

CommandStatus :E_SUCCESS

SequenceNumber:1

Body :444353534d5300646373000034000000

216899 2016-01-11 13:16:20.855 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> Receive PDU

CommandLen :16

CommandId :BIND_TRANCEIVER_RESP

CommandStatus :UNKNOWN_STATUS_0000000f

SequenceNumber:1

Body :

216899 2016-01-11 13:16:20.855 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> MsgAck:

CommandLen :16

CommandId :BIND_TRANCEIVER_RESP

CommandStatus :UNKNOWN_STATUS_0000000f

SequenceNumber:1

Body :

216900 2016-01-11 13:16:20.856 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> Login Failed,Return flag:false, Msg:

216901 2016-01-11 13:16:20.857 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> login….error

216901 2016-01-11 13:16:20.857 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> _Status is not BIND_TX ._Status=[0]

217902 2016-01-11 13:16:21.858 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.s.TSMPPModem—> SendMSG res:[false]

217902 2016-01-11 13:16:21.858 [RMI TCP Connection(10)-172.25.127.154] DEBUG c.z.z.z.c.s.j.JythonScript—> Execute SMPPHelper end with False

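The log above shows that sending fails at the BIND_TRANCEIVER (bind_transceiver) step with status 0x0000000F. According to the SMPP error codes in the appendix, this means one of the login parameters is wrong:

| Error | Code | Description |
|---|---|---|
| ESME_RINVSYSID | 0x0F | Invalid System ID (login/bind failed - invalid username / system id) |

The most likely cause is a wrong username (System ID). Ask the SMSC side to confirm which parameter we are sending incorrectly.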
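Before contacting the SMSC, you can decode the hex Body of the bind PDU to see exactly which System ID and password were sent. The Python sketch below assumes the logged Body is the raw SMPP 3.4 bind body without the 16-byte header (consistent with CommandLen 32 = 16 + 16 in the log); the status table only covers a few codes from the appendix. The body carries the password in clear text, so mask it before sharing logs.

```python
# Sketch: decode the hex "Body" of an SMPP 3.4 bind PDU as logged by TSMPPModem
# and map a command_status value to its name from the appendix table.
# Assumption: the logged Body is the raw PDU body without the 16-byte header.

SMPP_STATUS = {
    0x00: "ESME_ROK",
    0x0B: "ESME_RINVDSTADR",
    0x0D: "ESME_RBINDFAIL",
    0x0E: "ESME_RINVPASWD",
    0x0F: "ESME_RINVSYSID",
}


def _read_cstring(data: bytes, pos: int) -> tuple:
    """Read a NUL-terminated C-Octet String starting at pos; return (text, next_pos)."""
    end = data.index(b"\x00", pos)
    return data[pos:end].decode("ascii"), end + 1


def decode_bind_body(hex_body: str) -> dict:
    """Split a bind_transmitter/receiver/transceiver body into its fields."""
    data = bytes.fromhex(hex_body)
    system_id, pos = _read_cstring(data, 0)
    password, pos = _read_cstring(data, pos)
    system_type, pos = _read_cstring(data, pos)
    interface_version, addr_ton, addr_npi = data[pos], data[pos + 1], data[pos + 2]
    address_range, _ = _read_cstring(data, pos + 3)
    return {
        "system_id": system_id,
        "password": password,
        "system_type": system_type,
        "interface_version": interface_version,
        "addr_ton": addr_ton,
        "addr_npi": addr_npi,
        "address_range": address_range,
    }


def status_name(status: int) -> str:
    """Return the symbolic name, or the UNKNOWN_STATUS_xxxxxxxx form the log uses."""
    return SMPP_STATUS.get(status, f"UNKNOWN_STATUS_{status:08x}")


if __name__ == "__main__":
    fields = decode_bind_body("444353534d5300646373000034000000")
    print(fields["system_id"], hex(fields["interface_version"]))  # DCSSMS 0x34
    print(status_name(0x0F))                                      # ESME_RINVSYSID
```

In this example the system_id decodes to DCSSMS, which matches SystemId=[DCSSMS] in the init log line, so ZMC is sending the configured value; the SMSC side then has to confirm whether that is the ID it expects.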

If the BIND succeeds but SUBMIT_SM fails, as in the following example:

164053 2016-01-11 16:01:17.216 [RMI TCP Connection(6)-10.145.113.30] DEBUG c.z.z.z.s.TSMPPModem—> Send PDU to socket buffer,Msg:

CommandLen :52

CommandId :SUBMIT_SM

CommandStatus :E_SUCCESS

SequenceNumber:2

Body :SRC:179179,DEST:59355882,MSG: Test

164066 2016-01-11 16:01:17.229 [RMI TCP Connection(6)-10.145.113.30] DEBUG c.z.z.z.s.TSMPPModem—> Receive PDU

CommandLen :16

CommandId :SUBMIT_SM_RESP

CommandStatus :UNKNOWN_STATUS_0000000b

SequenceNumber:2

Body :

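The response status is 0x0000000B, which corresponds to:

| Error | Code | Description |
|---|---|---|
| ESME_RINVDSTADR | 0x0B | Invalid destination address (recipient/destination phone number is not valid) |

The most likely cause is a wrong type of number (TON) or numbering plan indicator (NPI) for the destination number.

NPI for Dest Address: the numbering plan of the destination number. The recommended value is 1; see the appendix 【SMPP numbering plan indicator】.

Dest Address Ton: the type of number of the destination number. The recommended value is 1; see the appendix 【SMPP type of number】.

Debugging SMPP delivery can run into many problems. If all other parameters are correct, try different combinations of the destination number with and without the country code, the trunk (long-distance) prefix, and so on.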
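The combinations can be listed systematically and tried one at a time. This is a sketch: the TON values (0 = Unknown, 1 = International, 2 = National) and NPI 1 = ISDN/E.164 come from SMPP 3.4, while the function name, the country code and the trunk prefix are hypothetical inputs that must come from the SMSC operator.

```python
# Sketch: candidate destination settings to try when SUBMIT_SM returns 0x0B.
# SMPP 3.4 values: TON 0 = Unknown, 1 = International, 2 = National; NPI 1 = ISDN (E.164).
# country_code and trunk_prefix are site-specific, hypothetical inputs.


def dest_candidates(number: str, country_code: str, trunk_prefix: str = "") -> list:
    """Return (dest_addr_ton, dest_addr_npi, destination_addr) tuples, most likely first."""
    national = number
    if trunk_prefix and national.startswith(trunk_prefix):
        national = national[len(trunk_prefix):]   # strip the long-distance prefix
    international = country_code + national
    candidates = [
        (1, 1, international),   # recommended TON/NPI = 1/1, with the country code
        (2, 1, national),        # national format, without the country code
        (0, 1, international),
        (0, 1, national),
    ]
    if trunk_prefix:
        candidates.append((0, 1, trunk_prefix + national))
    unique = []
    for c in candidates:          # drop duplicates but keep the order
        if c not in unique:
            unique.append(c)
    return unique


if __name__ == "__main__":
    # Example values only; use the real country code of the SMSC network.
    for ton, npi, addr in dest_candidates("59355882", country_code="853"):
        print(f"Dest Address Ton={ton} NPI={npi} address={addr}")
```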

Common email integration errors

Could not connect to SMTP host

When testing SMTP, the web page reports a failure, and $HOME/zmc_home/server/logs/server_app.log contains the following:

19508 2016-02-19 15:36:09.159 [RMI TCP Connection(6)-127.0.0.1] ERROR c.z.z.z.c.u.EmailUtils—> Send email error!

com.ztesoft.zsmart.core.exception.BaseAppException: Could not connect to SMTP host: 127.0.0.1, port: 25

at com.ztesoft.zsmart.core.exception.ExceptionHandler.publish(ExceptionHandler.java:260) [core_V8.0.5.jar:na]

at com.ztesoft.zsmart.core.exception.ExceptionHandler.publish(ExceptionHandler.java:155) [core_V8.0.5.jar:na]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:224) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:117) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.monisvr.bll.rules.MailRule.triggerMsg(MailRule.java:376) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.server.rmiservice.NMSMgtServiceImpl.triggerMsg(NMSMgtServiceImpl.java:151) [zmc_server.jar:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) [na:1.6.0_22]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) [na:1.6.0_22]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) [na:1.6.0_22]

at java.lang.reflect.Method.invoke(Method.java:616) [na:1.6.0_22]

at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:322) [na:1.6.0_22]

at sun.rmi.transport.Transport$1.run(Transport.java:177) [na:1.6.0_22]

at java.security.AccessController.doPrivileged(Native Method) [na:1.6.0_22]

at sun.rmi.transport.Transport.serviceCall(Transport.java:173) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:553) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:808) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:667) [na:1.6.0_22]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110) [na:1.6.0_22]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603) [na:1.6.0_22]

at java.lang.Thread.run(Thread.java:679) [na:1.6.0_22]

Caused by: javax.mail.MessagingException: Could not connect to SMTP host: 127.0.0.1, port: 25

at com.sun.mail.smtp.SMTPTransport.openServer(SMTPTransport.java:1972) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.protocolConnect(SMTPTransport.java:642) [mail.jar:1.4.5]

at javax.mail.Service.connect(Service.java:295) [mail.jar:1.4.5]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:196) [zmc_core.jar:na]

… 17 common frames omitted

Caused by: java.net.ConnectException: Connection refused

at java.net.PlainSocketImpl.socketConnect(Native Method) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:327) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:193) [na:1.6.0_22]

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:180) [na:1.6.0_22]

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:384) [na:1.6.0_22]

at java.net.Socket.connect(Socket.java:546) [na:1.6.0_22]

at java.net.Socket.connect(Socket.java:495) [na:1.6.0_22]

at com.sun.mail.util.SocketFetcher.createSocket(SocketFetcher.java:319) [mail.jar:1.4.5]

at com.sun.mail.util.SocketFetcher.getSocket(SocketFetcher.java:233) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.openServer(SMTPTransport.java:1938) [mail.jar:1.4.5]

… 20 common frames omitted

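Troubleshoot this the same way as the 【Connect refused】 problem for the SMS center: check that the IP address and port are correct and that the network path is reachable.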
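The Caused by: java.net.ConnectException: Connection refused line means the TCP connection itself was rejected, before any SMTP exchange. A plain TCP connect test from the ZMC server host, ideally run as the server user, is the Python equivalent of telnet <host> 25. This is a sketch; pass the host and port configured in ZMC.

```python
import socket


def smtp_port_reachable(host: str, port: int = 25, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened (like `telnet host port`)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:          # refused, timed out, no route, DNS failure, ...
        return False


if __name__ == "__main__":
    # 127.0.0.1:25 is the address from the log above; use the host/port configured in ZMC.
    print(smtp_port_reachable("127.0.0.1", 25))
```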

535 5.7.8 Error: authentication failed: authentication failure

When testing SMTP, the web page reports a failure, and $HOME/zmc_home/server/logs/server_app.log contains the following:

609587 2016-02-19 15:45:59.238 [RMI TCP Connection(13)-127.0.0.1] ERROR c.z.z.z.c.u.EmailUtils—> Send email error!

javax.mail.AuthenticationFailedException: 535 5.7.8 Error: authentication failed: authentication failure

at com.sun.mail.smtp.SMTPTransport$Authenticator.authenticate(SMTPTransport.java:823) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.authenticate(SMTPTransport.java:756) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.protocolConnect(SMTPTransport.java:673) [mail.jar:1.4.5]

at javax.mail.Service.connect(Service.java:317) [mail.jar:1.4.5]

at javax.mail.Service.connect(Service.java:176) [mail.jar:1.4.5]

at javax.mail.Service.connect(Service.java:125) [mail.jar:1.4.5]

at javax.mail.Transport.send0(Transport.java:194) [mail.jar:1.4.5]

at javax.mail.Transport.send(Transport.java:124) [mail.jar:1.4.5]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:190) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:117) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.monisvr.bll.rules.MailRule.triggerMsg(MailRule.java:376) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.server.rmiservice.NMSMgtServiceImpl.triggerMsg(NMSMgtServiceImpl.java:151) [zmc_server.jar:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) [na:1.6.0_22]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) [na:1.6.0_22]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) [na:1.6.0_22]

at java.lang.reflect.Method.invoke(Method.java:616) [na:1.6.0_22]

at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:322) [na:1.6.0_22]

at sun.rmi.transport.Transport$1.run(Transport.java:177) [na:1.6.0_22]

at java.security.AccessController.doPrivileged(Native Method) [na:1.6.0_22]

at sun.rmi.transport.Transport.serviceCall(Transport.java:173) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:553) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:808) [na:1.6.0_22]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:667) [na:1.6.0_22]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110) [na:1.6.0_22]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603) [na:1.6.0_22]

at java.lang.Thread.run(Thread.java:679) [na:1.6.0_22]

This error usually means that the user name or password used to log in to the mail server is wrong. Set them to the correct values.
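To check the credentials outside ZMC, Python's standard smtplib can attempt the same login. This is a sketch: whether the server requires STARTTLS, and which port it uses, are assumptions that must be matched to the SMTP settings in ZMC.

```python
import smtplib


def check_smtp_login(host: str, port: int, user: str, password: str,
                     use_starttls: bool = False, timeout: float = 10.0) -> tuple:
    """Try to log in to the SMTP server; return (ok, message)."""
    try:
        with smtplib.SMTP(host, port, timeout=timeout) as smtp:
            if use_starttls:
                smtp.starttls()              # only if the server requires TLS
            smtp.login(user, password)
            return True, "login succeeded"
    except smtplib.SMTPAuthenticationError as exc:
        # A 535 reply lands here: the user name or password is wrong.
        return False, f"authentication failed ({exc.smtp_code}): check user name/password"
    except (smtplib.SMTPException, OSError) as exc:
        # Connection refused, timeouts, AUTH not supported, and similar problems.
        return False, f"SMTP/connection error: {exc}"
```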

550 5.7.1 Client does not have permissions to send as this sender

When testing SMTP, the web page reports a failure, and $HOME/zmc_home/server/logs/server_app.log contains the following:

5270772 2015-12-12 13:30:29.052 [RMI TCP Connection(61)-10.45.17.121] ERROR c.z.z.z.c.u.EmailUtils—> Send email error!

com.sun.mail.smtp.SMTPSendFailedException: 550 5.7.1 Client does not have permissions to send as this sender

at com.sun.mail.smtp.SMTPTransport.issueSendCommand(SMTPTransport.java:2114) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.mailFrom(SMTPTransport.java:1618) [mail.jar:1.4.5]

at com.sun.mail.smtp.SMTPTransport.sendMessage(SMTPTransport.java:1119) [mail.jar:1.4.5]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:198) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.core.utils.EmailUtils.sendEmail(EmailUtils.java:117) [zmc_core.jar:na]

at com.ztesoft.zsmart.zmc.monisvr.bll.rules.MailRule.triggerMsg(MailRule.java:376) [zmc_modules.jar:na]

at com.ztesoft.zsmart.zmc.server.rmiservice.NMSMgtServiceImpl.triggerMsg(NMSMgtServiceImpl.java:151) [zmc_server.jar:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) [na:1.6.0_45]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39) [na:1.6.0_45]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25) [na:1.6.0_45]

at java.lang.reflect.Method.invoke(Method.java:597) [na:1.6.0_45]

at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:303) [na:1.6.0_45]

at sun.rmi.transport.Transport$1.run(Transport.java:159) [na:1.6.0_45]

at java.security.AccessController.doPrivileged(Native Method) [na:1.6.0_45]

at sun.rmi.transport.Transport.serviceCall(Transport.java:155) [na:1.6.0_45]

at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:535) [na:1.6.0_45]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:790) [na:1.6.0_45]

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:649) [na:1.6.0_45]

at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:895) [na:1.6.0_45]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:918) [na:1.6.0_45]

at java.lang.Thread.run(Thread.java:662) [na:1.6.0_45]

Caused by: com.sun.mail.smtp.SMTPSenderFailedException: 550 5.7.1 Client does not have permissions to send as this sender

at com.sun.mail.smtp.SMTPTransport.mailFrom(SMTPTransport.java:1625) [mail.jar:1.4.5]

... 19 common frames omitted

This error is caused by an incorrect Send Mail Box parameter. Some SMTP servers are strict about the sender, so this parameter cannot be an arbitrary value: it must have the form <user name used to log in to the mail server>@<domain>.
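The rule above can be checked mechanically before saving the configuration. This is a sketch that assumes the SMTP login name and the mail domain are known; strict servers may also accept configured aliases, which this check ignores. The function names are hypothetical.

```python
def expected_sender(login_user: str, domain: str) -> str:
    """'<login user>@<domain>', or the login user itself if it is already an address."""
    return login_user if "@" in login_user else f"{login_user}@{domain}"


def sender_matches_login(send_mail_box: str, login_user: str, domain: str) -> bool:
    """Check the Send Mail Box value against the login account.

    The local part is compared exactly; the domain is compared case-insensitively.
    """
    local, _, dom = send_mail_box.strip().partition("@")
    exp_local, _, exp_dom = expected_sender(login_user, domain).partition("@")
    return local == exp_local and dom.lower() == exp_dom.lower()
```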

zmcServer fails to start because PATH is misconfigured and the user cannot find a required command

Symptoms:

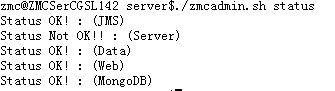

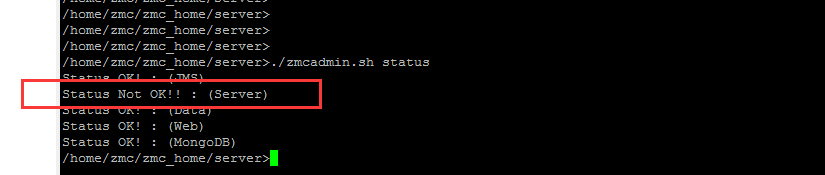

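1. Run ./zmcadmin.sh status, as shown in the figure.

2. Check /logs/server_app.log, as shown in the figure.

Troubleshooting:

1. Check /logs/zmcServer.nohup.out. If it reports /bin/sh: ip: command not found, run ip address as the server user; it fails with the same message.

Note: the ip command is only used on Linux; other systems use netstat. Follow whatever zmcServer.nohup.out reports.

2. Check the user's environment file; depending on the OS version it is either .bash_profile or .profile. Check whether PATH contains /usr/sbin. If it does not, check how PATH is defined: if PATH is defined only once, it should have the form PATH=$PATH:XXX:XXX:XXX; if it is defined several times, make sure each later definition ends with :$PATH so that earlier entries are kept.

3. After editing, run source .bash_profile (or source .profile).

4. Run ./srvadmin.sh start.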
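The PATH check in step 2 can be scripted. This sketch takes the PATH string as the server user sees it after sourcing the profile; the default required list follows the text (/usr/sbin) and can be extended. shutil.which with an explicit path argument reports where, if anywhere, a command such as ip would be found.

```python
import os
import shutil


def missing_path_dirs(path_value: str, required=("/usr/sbin",)) -> list:
    """Return the required directories that are absent from a PATH string."""
    entries = {os.path.normpath(p) for p in path_value.split(os.pathsep) if p}
    return [d for d in required if os.path.normpath(d) not in entries]


def command_on_path(command: str, path_value: str):
    """Full path of command as the shell would find it with this PATH, or None."""
    return shutil.which(command, path=path_value)


if __name__ == "__main__":
    path = os.environ.get("PATH", "")
    print("missing:", missing_path_dirs(path))
    print("ip ->", command_on_path("ip", path))
```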

HeartBeat not exists,maybe disconnect to server!

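Symptom:

The server and client processes are running normally, but the alarm list shows the client alarm NE(sdunicom_51.90-zmc:28106) HeartBeat not exists,maybe disconnect to server!

Solution:

Check whether an agent is installed under the user that runs the server. If not, deploy one.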

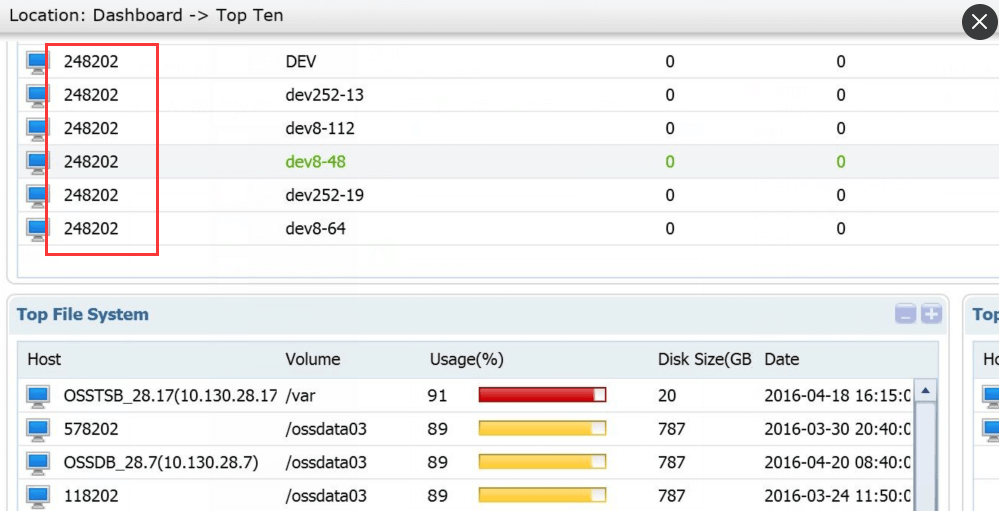

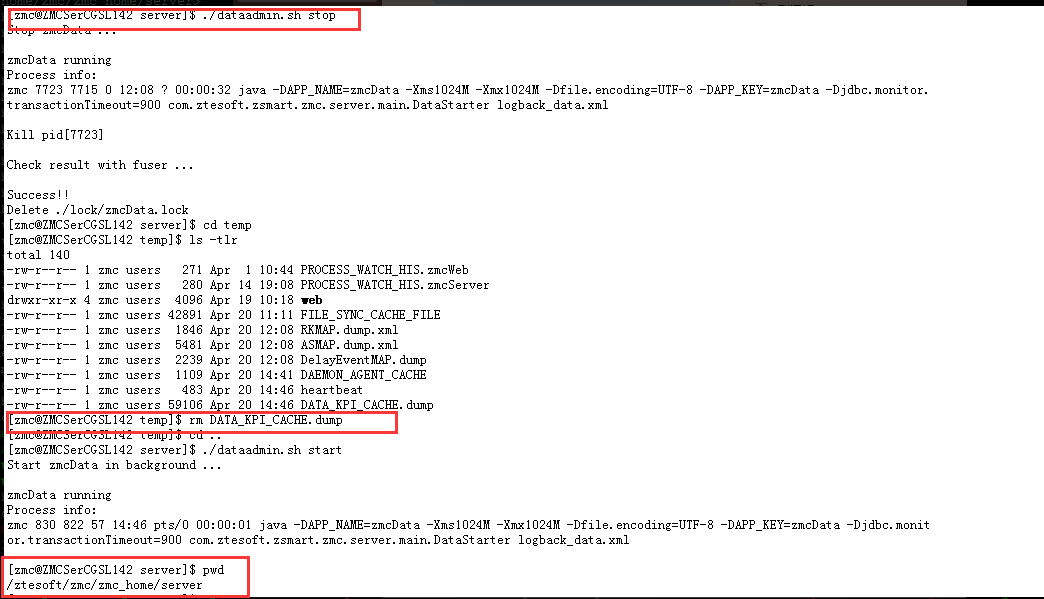

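Resource names displayed as numbers in TopTen

Symptom:

Solution:

- Identify where the data comes from; the handling differs by source.

1. Check the collection time of the data in TopTen. If it is long ago, the data is probably stale (garbage) data. If it is recent or still being updated, the probe feeding TopTen is probably configured under a service.

2. Run select t.app_env_id, t.create_date from KPI_xxx t where t.res_inst_id = '<id shown on the page>'; to check the KPI collection time. If it is not updating, the data is stale. If it keeps updating, check the NM_TASK_LIB table.

3. Run select t.res_inst_type, t.res_inst_id from NM_TASK_LIB t where t.app_env_id = '<value found in step 2>';. If a row of type service exists, move the probe to the correct resource. If no rows are returned, the data is confirmed stale.

- Clearing stale data

1. Stop the zmcData process.

2. Delete the DATA_KPI_CACHE.dump file under /zmc_home/server/temp.

3. Restart the zmcData process.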


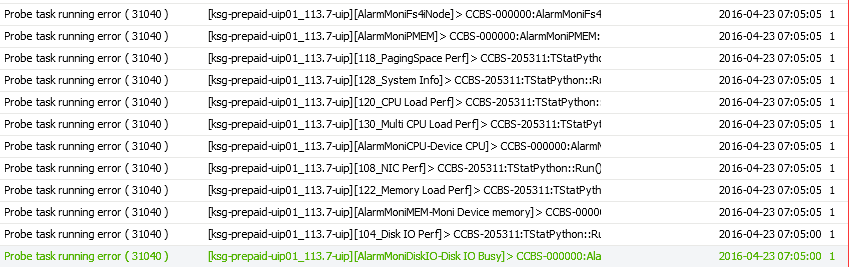

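Too many CLOSE_WAIT connections cause 31040 alarms

Symptom analysis:

The same client raises several 31040 alarms within a short period.

agent_probe_app.log contains many errors where commands return no usable result: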

138525055 2016-04-23 07:05:00.479 [MainThread] ERROR c.z.z.z.a.p.ProbeMgr—> > CCBS-000000:AlarmMoniDiskIO::Run() TASK[148035-6268035] This task with error:

File “/ztesoft/uip/zmc_agent/control/probe/agent/component/AlarmMoniDiskIO.py”, line 42, in Run

devIO = spaceInfo.getDiskIORatio()

File “/ztesoft/uip/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 923, in getDiskIORatio

info = self._diskIO4LinuxIORW()

File “/ztesoft/uip/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 969, in _diskIO4LinuxIORW

raise RuntimeError, sMessageErr

RuntimeError: SpaceInfo::_diskIO4LinuxIORW(): execute [sar -d 1 3] result is unexcept format

138530087 2016-04-23 07:05:05.511 [MainThread] ERROR c.z.z.z.a.p.ProbeMgr—> > CCBS-205311:TStatPython::Run() TASK[148035-6408035] Python=[statistics.HostServ.HostServ.SwapDetailInfo],Message=[

File “/ztesoft/uip/zmc_agent/control/probe/agent/component/StatPython.py”, line 92, in Run

StatValues = apply(self.FuncInst,[self.ClassInst])

File “/ztesoft/uip/zmc_agent/control/probe/agent/statistics/HostServ.py”, line 368, in SwapDetailInfo

return impl.GetSwapSpaceDetail()

File “/ztesoft/uip/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 76, in GetSwapSpaceDetail

info = self._swap4free()

File “/ztesoft/uip/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 214, in _swap4free

raise RuntimeError, sMessageErr

RuntimeError: SpaceInfo::_swap4free(): execute [free -m] result is unexcept format

]

The underlying error can be found in agent_app.log:

138829979 2016-04-23 07:10:05.403 [MainThread] WARN c.z.z.z.c.s.Command—> Command[/oracle/product/112/bin/sqlplus -S </ztesoft/uip/zmc_agent/control/probe/agent/statistics/../../../probedata/temp/sqlplus.20160423071005.0.259189543309.sql] meet some errors:[Command [sh, -c, /oracle/product/112/bin/sqlplus -S </ztesoft/uip/zmc_agent/control/probe/agent/statistics/../../../probedata/temp/sqlplus.20160423071005.0.259189543309.sql] Execute Error: Cannot run program “sh”: java.io.IOException: error=24, Too many open files],if these are not important,just ignore them.

Solution:

1. Run netstat -an | grep CLOSE_WAIT | wc -l. If there is a large number of CLOSE_WAIT connections, go to step 2.

2. Stop probes 554, 592, 594 and 596.
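Note that grep WAIT also counts TIME_WAIT and FIN_WAIT connections, which are normal in small numbers. Counting connections per state separates them. This sketch assumes the Linux netstat -an layout, where the state is the last column of tcp lines.

```python
from collections import Counter

# TCP states as printed by Linux netstat -an (last column of tcp/tcp6 lines).
TCP_STATES = {
    "ESTABLISHED", "SYN_SENT", "SYN_RECV", "FIN_WAIT1", "FIN_WAIT2", "TIME_WAIT",
    "CLOSE", "CLOSE_WAIT", "LAST_ACK", "LISTEN", "CLOSING",
}


def count_tcp_states(netstat_output: str) -> Counter:
    """Count TCP connections per state in `netstat -an` output."""
    counts = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        if fields and fields[0].startswith("tcp") and fields[-1] in TCP_STATES:
            counts[fields[-1]] += 1
    return counts


if __name__ == "__main__":
    import subprocess
    out = subprocess.run(["netstat", "-an"], capture_output=True, text=True).stdout
    print(count_tcp_states(out).most_common())
```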

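Daemon Manager shows 0 client processes

Symptom:

Daemon Manager page list:

Corresponding client alarm in the alarm list:

Solution:

Check whether the client processes zmcAgent and zmcDaemon exist. If neither exists, start them. If zmcAgent is missing and several zmcDaemon processes are running, stop all zmcDaemon processes and then restart zmcDaemon and zmcAgent.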
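The decision above can be expressed as a small helper over the output of ps -ef for the client user. This is a sketch: it assumes the process command lines contain the strings zmcAgent and zmcDaemon, and the helper names are hypothetical.

```python
def count_processes(ps_output: str, name: str) -> int:
    """Count ps lines whose command line mentions name, ignoring the grep itself."""
    return sum(1 for line in ps_output.splitlines()
               if name in line and "grep" not in line)


def daemon_manager_action(ps_output: str) -> str:
    """Map the zmcAgent/zmcDaemon process counts to the action described above."""
    agents = count_processes(ps_output, "zmcAgent")
    daemons = count_processes(ps_output, "zmcDaemon")
    if agents == 0 and daemons == 0:
        return "start zmcDaemon and zmcAgent"
    if agents == 0:
        # The text covers several zmcDaemon instances; treating a single
        # instance the same way is an assumption of this sketch.
        return "stop all zmcDaemon, then restart zmcDaemon and zmcAgent"
    return "no action from this check"
```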

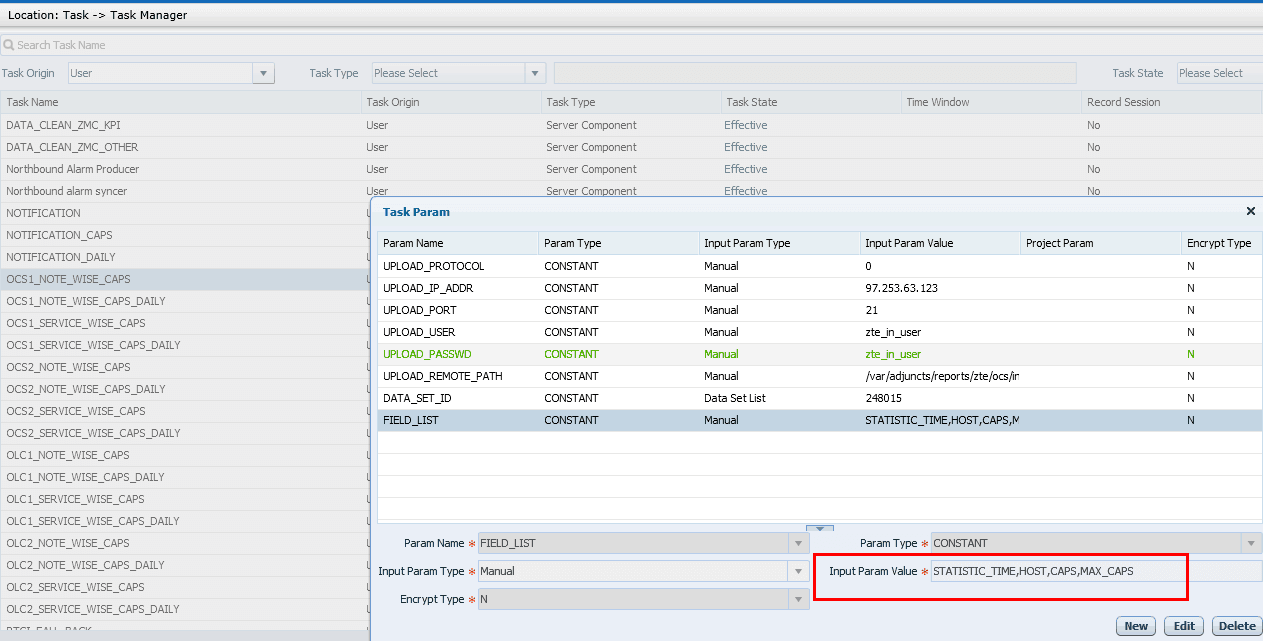

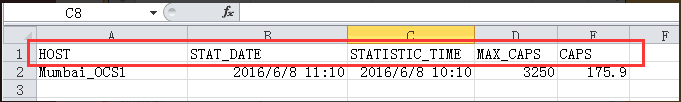

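The field sequence in the CSV generated by a northbound task does not match the order entered on the page

Symptom:

Solution:

When entering the parameters on the page, add a SORT_LIST sorting parameter in the format "field1:Order,field2:Order", where Order is 1 for ascending and -1 for descending. For example, "C1:1,C2:-1" sorts by C1 ascending, then by C2 descending.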
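The SORT_LIST semantics can be illustrated as a multi-key stable sort. This sketch only shows what the format means; how ZMC applies it internally is not described here, and the function names are hypothetical. Sorting by the last key first and the first key last works because Python's sort is stable, including with reverse=True.

```python
from operator import itemgetter


def parse_sort_list(spec: str) -> list:
    """Parse 'C1:1,C2:-1' into [('C1', 1), ('C2', -1)]."""
    keys = []
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue
        field, _, order = item.rpartition(":")
        if not field or order not in ("1", "-1"):
            raise ValueError(f"bad SORT_LIST item: {item!r}")
        keys.append((field, int(order)))
    return keys


def sort_rows(rows: list, spec: str) -> list:
    """Apply the keys from last to first so the first key ends up dominant."""
    for field, order in reversed(parse_sort_list(spec)):
        rows = sorted(rows, key=itemgetter(field), reverse=(order == -1))
    return rows
```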

Alarms cannot be generated because MongoDB fails to start

Starting ZMC as root causes MongoDB to fail

server_app.log contains the following:

8640 2017-10-22 17:04:13.262 [MongoDB Cleaner] ERROR c.z.z.z.s.b.SyslogMongo—> Failed to clear MongoDB,error:

8656 2017-10-22 17:04:13.278 [MongoDB Cleaner] ERROR c.z.z.z.s.b.SyslogMongo—> null

com.mongodb.MongoWriteException: not authorized to remove from SYSLOG_SECURITY.SYSLOG_DATA

at com.mongodb.MongoCollectionImpl.executeSingleWriteRequest(MongoCollectionImpl.java:523) ~[mongo-java-driver-3.3.0.jar:na]

Solution:

Stop the ZMC services as root, delete the related log files and data files, then switch to the zmc user and start the services.

Client troubleshooting

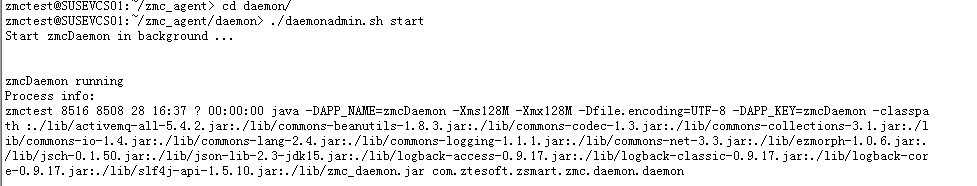

zmcDaemon log reports: Call Time out

Symptom

Solution

Check the zmcDaemon log on the client

Log in to 10.45.16.68 as zmctest and check $HOME/zmc_agent/daemon/logs/daemon_app.log. It contains the following errors:

120617 2016-02-23 09:46:12.160 [main] ERROR c.z.z.z.d.b.MessageBroker—> Call Time out 21E9F4929D284C2DB3C61260E28C2738

120617 2016-02-23 09:46:12.160 [main] ERROR c.z.z.z.d.daemon—>

java.lang.NullPointerException: null

at com.ztesoft.zsmart.zmc.daemon.bll.FileSync.sync(FileSync.java:56) [zmc_daemon.jar:na]

at com.ztesoft.zsmart.zmc.daemon.daemon.main(daemon.java:90) [zmc_daemon.jar:na]

This log suggests that JMS messages intended for this process may have been consumed first by another process.

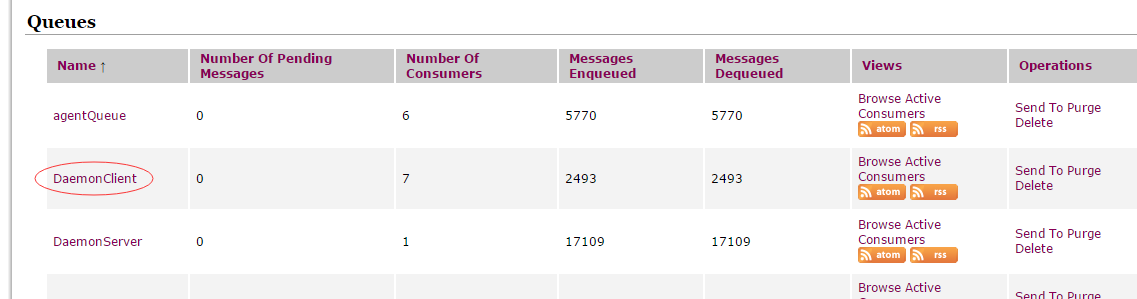

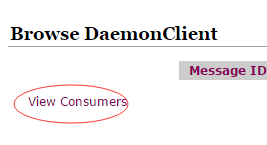

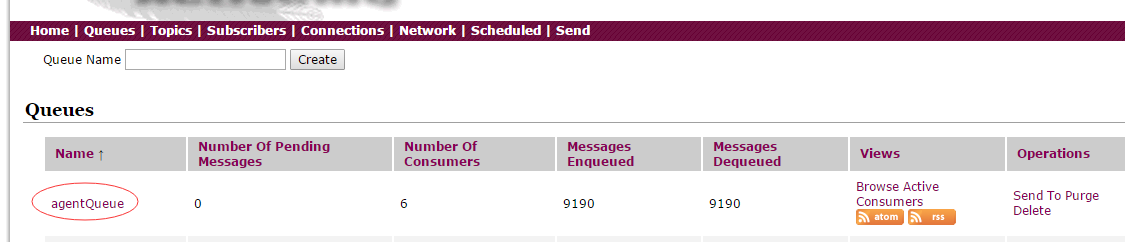

Check the JMS message queues

Open the JMS web console on the server:

http://<server IP>:8161

Click the 【Manage ActiveMQ broker】 link:

Select the 【Queues】 tab:

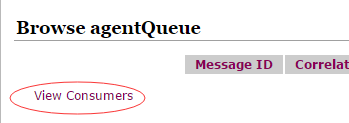

Click the 【DaemonClient】 link:

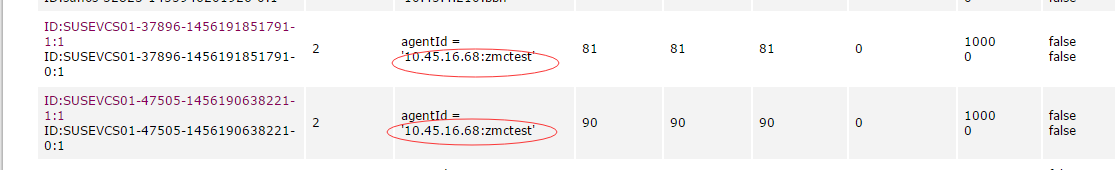

Click the 【View Consumers】 link:

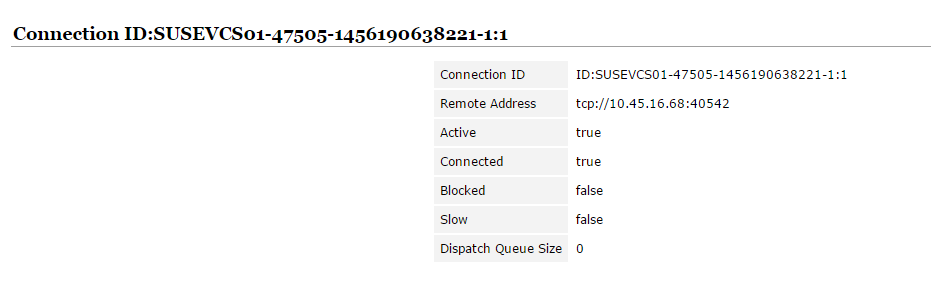

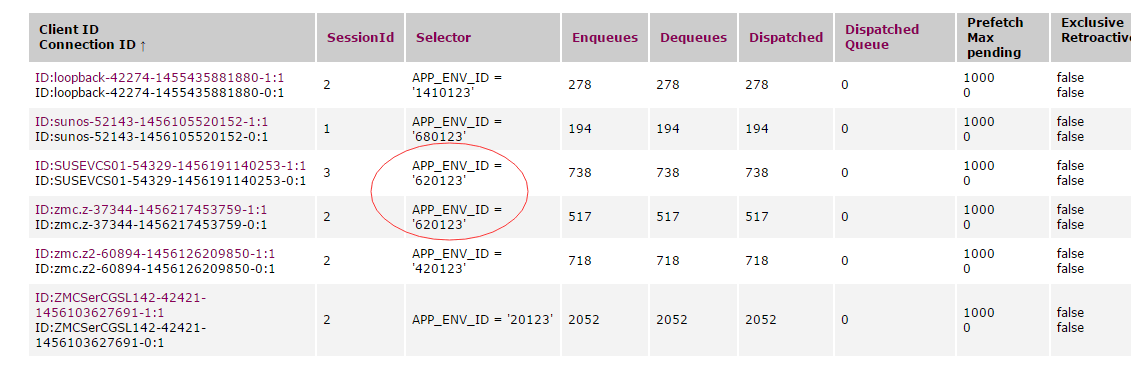

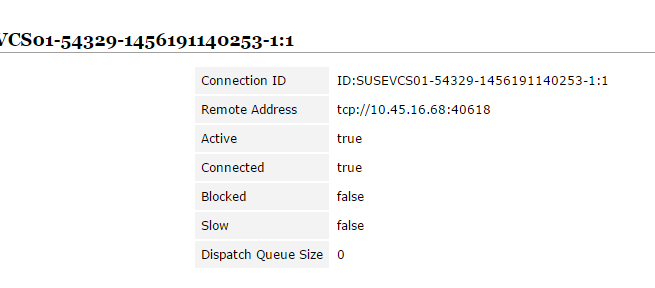

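The page above shows two consumers with the same agentId.

Click each ID link in the first column to view the connection details:

The figures show that the connections use ports 40714 and 40542.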

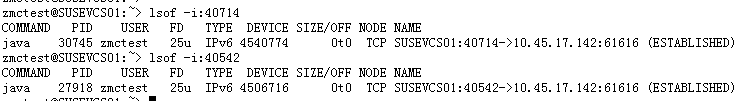

Find the processes by port on the client

Run the following two commands to get the process information:

lsof -i:40714

lsof -i:40542
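When several ports have to be checked, the PIDs can be extracted from the lsof output. This sketch assumes the default lsof column layout (COMMAND PID USER ...); run it as root so that other users' processes are visible. The exit status of lsof is not checked, since an empty result simply yields no PIDs.

```python
import subprocess


def pids_from_lsof(lsof_output: str) -> list:
    """Extract unique PIDs (second column) from `lsof -i:<port>` output."""
    pids = []
    for line in lsof_output.splitlines()[1:]:      # the first line is the header
        fields = line.split()
        if len(fields) > 1 and fields[1].isdigit() and int(fields[1]) not in pids:
            pids.append(int(fields[1]))
    return pids


def pids_on_ports(ports) -> dict:
    """Run lsof for each port and collect the PIDs that own it."""
    result = {}
    for port in ports:
        proc = subprocess.run(["lsof", f"-i:{port}"], capture_output=True, text=True)
        result[port] = pids_from_lsof(proc.stdout)
    return result


if __name__ == "__main__":
    print(pids_on_ports([40714, 40542]))
```

Two different PIDs whose command is a zmcDaemon of the same user point to duplicate daemon processes.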

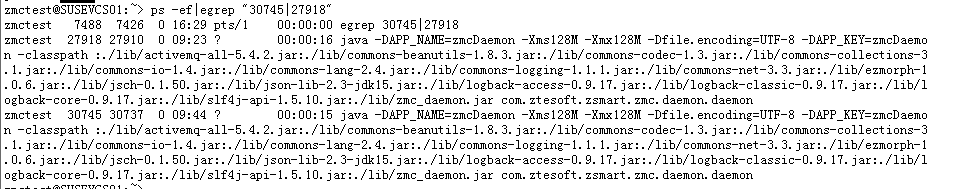

Get the process details by PID:

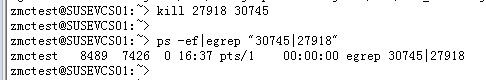

Two zmcDaemon processes are running under the zmctest user. Stop both of them and start the process again:

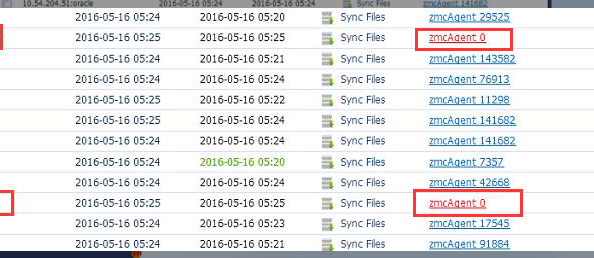

agentQueue repeating selector problem

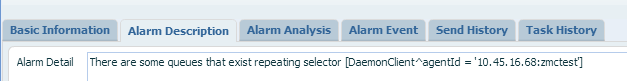

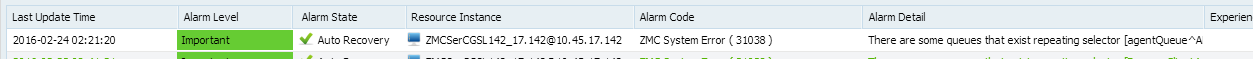

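Symptom

The symptom is similar to the zmcDaemon case above. The alarm page shows the following alarm:

Details:

Solution

Check the JMS message queues

Open the JMS web console on the server:

http://<server IP>:8161

Go to the Queues page:

Click the 【agentQueue】 link:

Click 【View Consumers】: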

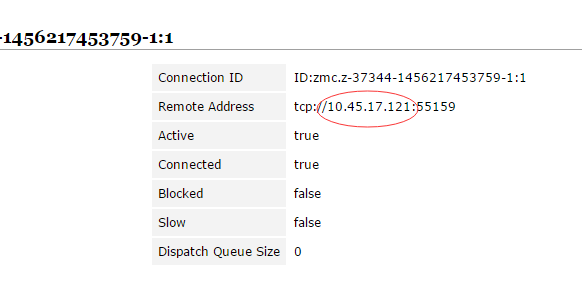

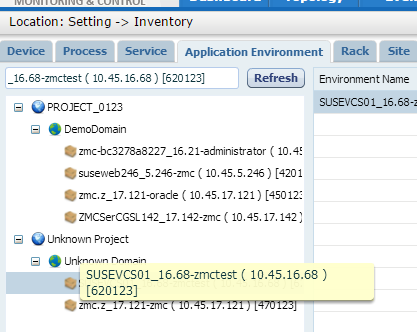

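Following the alarm, search for app_env_id 620123 among the consumers. There are two records; open the ID link of each:

The connection details show that the second connection comes from 10.45.17.121, while the Inventory in the ZMC web UI shows that 620123 is the app env on the 16.68 machine:

Find and stop the wrong process to fix the problem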

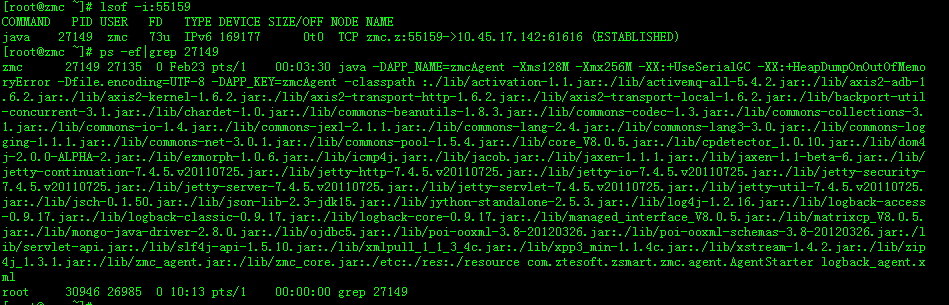

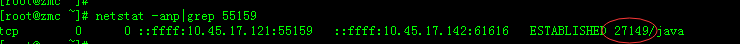

Log in to 17.121 as root and find the process that owns port 55159:

It is the zmcAgent process of the zmc user.

Note: the process can also be found with netstat -anp | grep 55159.

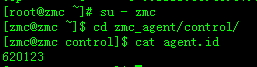

Check the app env id used by this process:

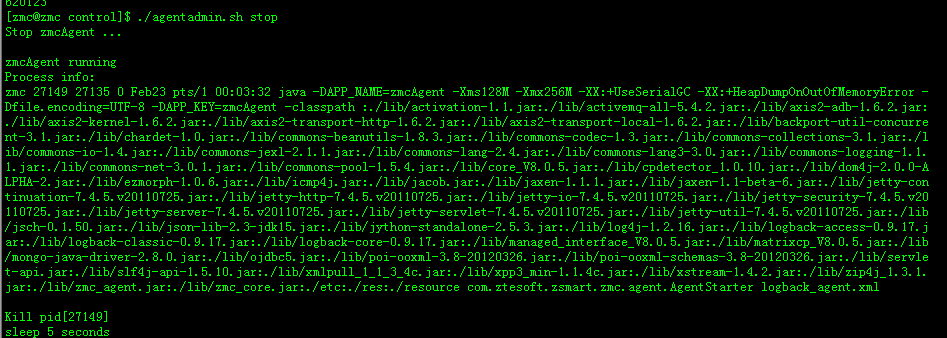

The content of this agent.id file is wrong. Stop zmcAgent, delete agent.id, and restart the process so that it registers again:

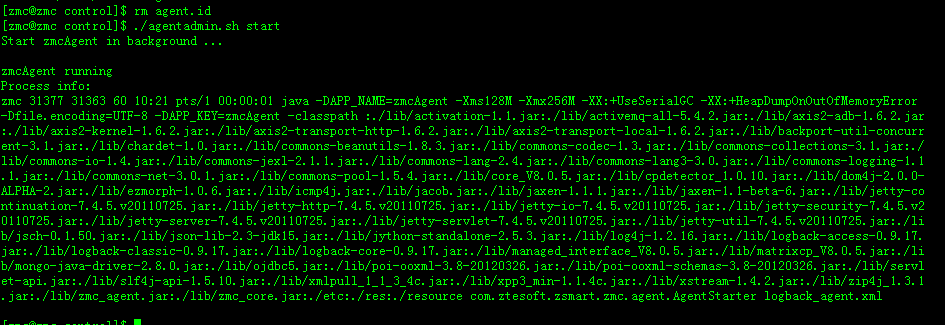

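After a while, check agent.id again:

Its content has been updated.

The alarm also clears automatically:

Client error: Java heap space out of memory

Symptom

zmcAgent exits abnormally, and $HOME/zmc_agent/control/logs/zmcAgent.nohup.out shows the following: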

java.lang.OutOfMemoryError: Java heap space

at org.python.core.PyString.str_mod(PyString.java:748)

at org.python.core.PyString.mod(PyString.java:743)

at org.python.core.PyObject.basicmod(PyObject.java:2673)

at org.python.core.PyObject.mod(PyObject.java:2659)

at StatFile$py.CodePos$4(/home/zmctest/zmcagent/control/probe/agent/component/StatFile.py:215)

at StatFile$py.callfunction(/home/zmctest/zmcagent/control/probe/agent/component/StatFile.py)

at org.python.core.PyTableCode.call(PyTableCode.java:165)

at org.python.core.PyBaseCode.call(PyBaseCode.java:134)

at org.python.core.PyFunction.call(PyFunction.java:317)

at org.python.core.PyMethod.call(PyMethod.java:109)

at StatFile$py.Run$5(/home/zmctest/zmcagent/control/probe/agent/component/StatFile.py:320)

at StatFile$py.callfunction(/home/zmctest/zmc_agent/control/probe/agent/component/StatFile.py)

at org.python.core.PyTableCode.call(PyTableCode.java:165)

at org.python.core.PyBaseCode.call(PyBaseCode.java:301)

at org.python.core.PyBaseCode.call(PyBaseCode.java:194)

at org.python.core.PyFunction.__call(PyFunction.java:387)

at org.python.core.PyMethod.instancemethod___call(PyMethod.java:220)

at org.python.core.PyMethod.__call(PyMethod.java:211)

at org.python.core.PyMethod.__call(PyMethod.java:201)

at org.python.core.PyMethod.__call(PyMethod.java:196)

at org.python.core.PyObject._jcallexc(PyObject.java:3502)

at org.python.core.PyObject._jcall(PyObject.java:3534)

at org.python.proxies.StatFile$TStatFile$8.Run(Unknown Source)

at com.ztesoft.zsmart.zmc.agent.probe.bll.ProbeTask.run(ProbeTask.java:253)

at com.ztesoft.zsmart.zmc.agent.probe.ProbeMgr.run(ProbeMgr.java:132)

at java.lang.Thread.run(Thread.java:662)

java.lang.OutOfMemoryError: Java heap space

Solution

Add APP_XMS_SIZE_zmcAgent and APP_XMX_SIZE_zmcAgent to $HOME/zmc_agent/zmc.profile to increase the heap size as appropriate, for example:

APP_XMS_SIZE_zmcAgent=256M

APP_XMX_SIZE_zmcAgent=384M

export APP_XMS_SIZE_zmcAgent

export APP_XMX_SIZE_zmcAgent

After saving the changes, start the zmcAgent process.
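Before restarting, it is worth checking that the two values are well formed and that the initial size does not exceed the maximum, since a JVM refuses to start when the initial heap is larger than the maximum heap. This sketch assumes the profile values are passed to the JVM as -Xms/-Xmx, which the variable names suggest but this section does not state.

```python
import re

_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_jvm_size(value: str) -> int:
    """Convert a JVM size such as '256M' or '1g' to bytes."""
    m = re.fullmatch(r"(\d+)([KMG]?)", value.strip().upper())
    if not m:
        raise ValueError(f"not a JVM size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2)]


def check_heap(xms: str, xmx: str) -> None:
    """Raise ValueError if the initial heap would exceed the maximum heap."""
    if parse_jvm_size(xms) > parse_jvm_size(xmx):
        raise ValueError("initial heap (Xms) must not exceed maximum heap (Xmx)")


if __name__ == "__main__":
    check_heap("256M", "384M")   # the example values above pass
    print("heap settings OK")
```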

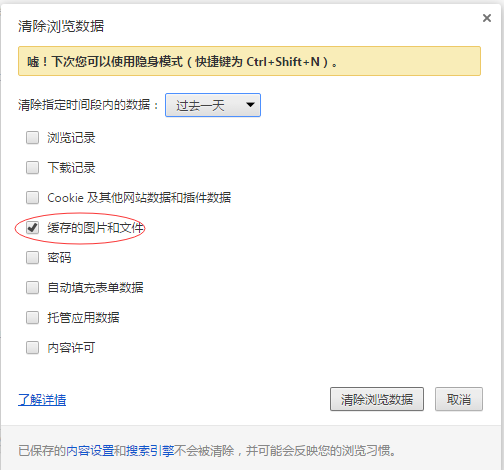

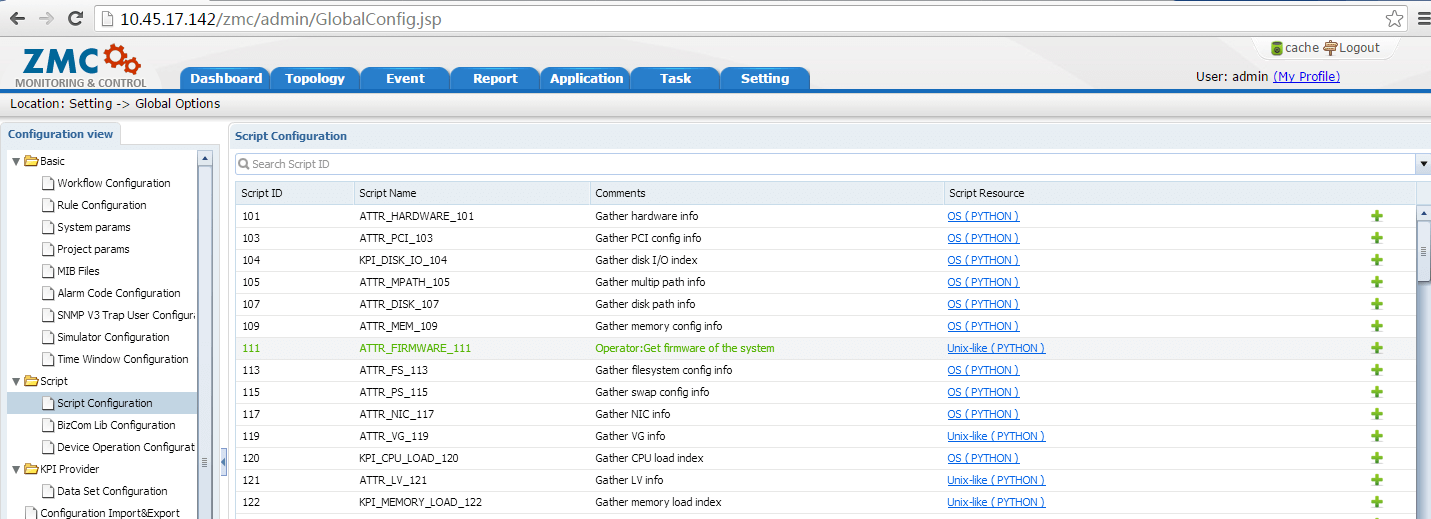

Fail to get script resource error

Symptom

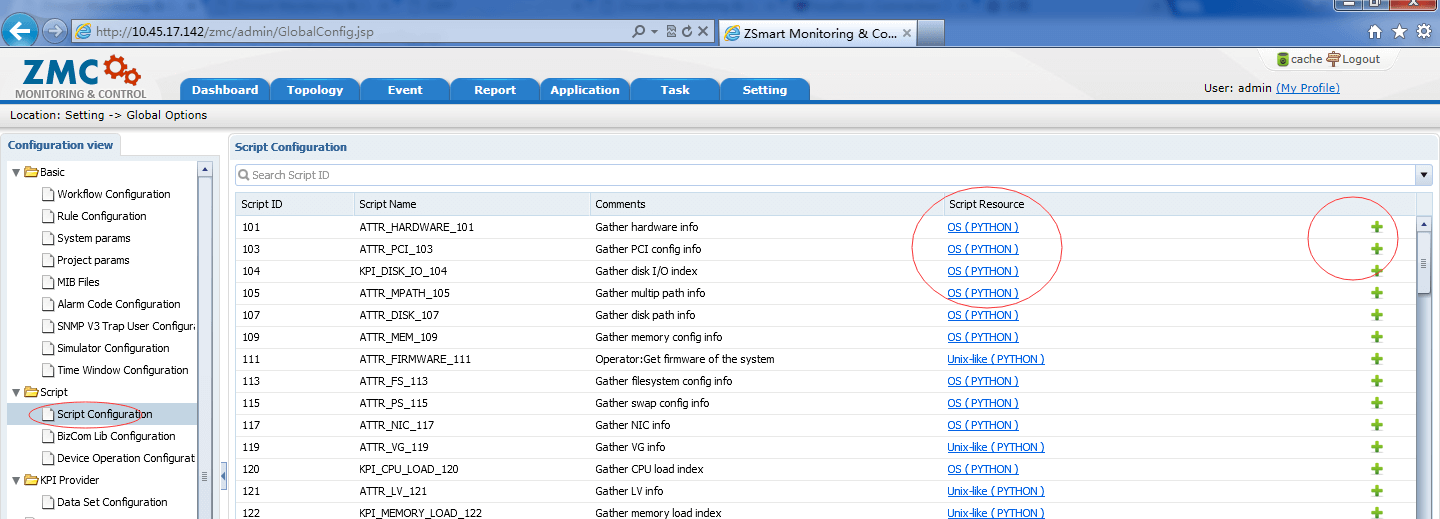

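A script is configured in 【Script Configuration】 and executed on the client through a Task.

When it runs, the task never reaches the client.

Solution

The server log $HOME/zmc_home/server/logs/server_app.log contains the following: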

63869921 2016-02-25 09:15:36.864 [pool-3-thread-1] DEBUG c.z.z.z.p.b.Processor—> Do Callback, Event = [INVOKE_AGENT_SCRIPT],UUID = [C9FB7FA7D5C1449EB2778BE82285B387],Data = [{SCRIPT_ID=210123, PARAMS_DICT={Z_ENCRYPTPARAMDB_CONN=/ZMC+DES/r7AexiJ6NJMYkhy5+oVc8mylI8v0l2jM, RES_INST_ID=620123, WORKFLOW_WRITE_SESSION=N, RES_INST_TYPE=APP_ENV}, TASK_INST_ID=2171030123, EXEC_RESULT=FAILED, PROC_INST_ID=201602250915363268, RES_INST_ID=620123, EXEC_LOG_CONTENT=Fail to get script resource, scriptId:210123, osVersionId:8, RES_INST_TYPE=APP_ENV, TASK_ID=210123}],Route = [4000000,6000000],CreateDate = [20160225091536]

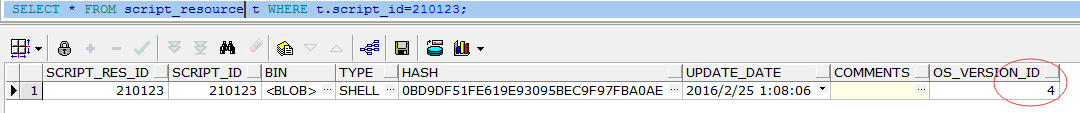

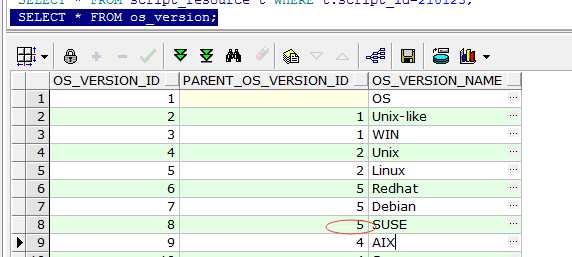

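Look up the script_resource record of script 210123 in the database:

The log shows that the client's os version id is 8, while the script is defined for os version 4. Check the os_version table to see whether 8 inherits from 4:

The records show that the parent of 8 is 5, and 5 and 4 are siblings, so 8 does not inherit from 4. The script's operating system type therefore has to be changed, for example to OS/Unix-like/SUSE.

Here we change it to Unix-like:

After refreshing the cache and testing again, the client receives the Task normally.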
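The inheritance check amounts to walking up the os_version parent chain from the client's version and seeing whether the script's version is on it. In this sketch the table is modeled as a child-to-parent dictionary; only the relation 8 → 5 comes from the example, and the other IDs are hypothetical.

```python
def os_ancestors(os_version_id, parent_of: dict) -> list:
    """Return [id, parent, grandparent, ...] by following parent_of (stops on cycles)."""
    chain, seen = [], set()
    current = os_version_id
    while current is not None and current not in seen:
        chain.append(current)
        seen.add(current)
        current = parent_of.get(current)
    return chain


def script_applies(script_os_id, client_os_id, parent_of: dict) -> bool:
    """A script defined for an OS version applies to that version and all its descendants."""
    return script_os_id in os_ancestors(client_os_id, parent_of)


if __name__ == "__main__":
    # Hypothetical tree: 3 is the common parent of the siblings 4 and 5.
    parent_of = {8: 5, 5: 3, 4: 3, 3: 1, 1: None}
    print(script_applies(4, 8, parent_of))   # False: 4 is a sibling branch
    print(script_applies(3, 8, parent_of))   # True: 3 is an ancestor of 8
```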

IODetect module reports NullPointerException

Symptom

The client's $HOME/zmc_agent/control/logs/agent_app.log is full of errors like:

9421444 2016-02-25 19:30:11.417 [IOPlugin] DEBUG c.z.z.z.a.c.IODetectMgr—> loadIOResource

9421444 2016-02-25 19:30:11.417 [IOPlugin] ERROR c.z.z.z.a.c.IODetectMgr—>

java.lang.NullPointerException: null

at com.ztesoft.zsmart.zmc.agent.component.IODetectMgr.loadIOResource(IODetectMgr.java:270) [zmc_agent.jar:na]

at com.ztesoft.zsmart.zmc.agent.component.IODetectMgr.run(IODetectMgr.java:292) [zmc_agent.jar:na]

at java.lang.Thread.run(Thread.java:662) [na:1.6.0_45]

9424445 2016-02-25 19:30:14.418 [IOPlugin] DEBUG c.z.z.z.a.c.IODetectMgr—> loadIOResource

9424445 2016-02-25 19:30:14.418 [IOPlugin] ERROR c.z.z.z.a.c.IODetectMgr—>

java.lang.NullPointerException: null

at com.ztesoft.zsmart.zmc.agent.component.IODetectMgr.loadIOResource(IODetectMgr.java:270) [zmc_agent.jar:na]

at com.ztesoft.zsmart.zmc.agent.component.IODetectMgr.run(IODetectMgr.java:292) [zmc_agent.jar:na]

Solution

The client's $HOME/zmc_agent/control/probedata directory contains no agent.xml file.

The ZMC server's $HOME/zmc_home/server/ftphome/agent_sync/control/probedata directory has no agent.xml either.

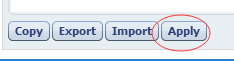

On the 【Setting】->【Agent Probe】 page, click 【Apply】 to regenerate agent.xml and synchronize it to all hosts:

The problem is resolved.

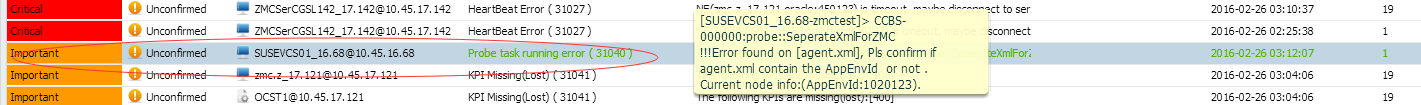

Probe task running error (31040) alarm

Symptom

After a new NE is added, the web UI raises a 31040 alarm:

The alarm details are:

[SUSEVCS01_16.68-zmctest]> CCBS-000000:probe::SeperateXmlForZMC

!!!Error found on [agent.xml], Pls confirm if agent.xml contain the AppEnvId or not .

Current node info:(AppEnvId:1020123).

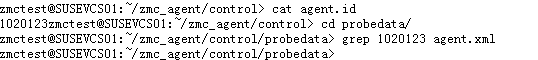

Solution

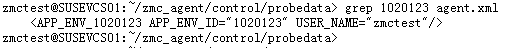

Log in to the NE and check whether agent.xml contains its app_env_id:

The result shows that agent.xml does not contain this app_env_id.

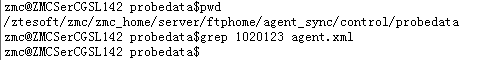

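Search for this app_env_id on the server:

It is not there either, which rules out delayed synchronization. The likely cause is that after the new NE registered, the server did not regenerate agent.xml in time, so the new NE has no configuration.

On the 【Setting】->【Agent Probe】 page, click 【Apply】 to regenerate agent.xml and synchronize it to all hosts:

The problem is resolved.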

The block flag error and cause buffer upper 10k lines error

Symptom

The ZMC UI shows the following alarm:

Details:

[SUSEVCS01_16.68-zmctest][AlarmMoniLog-Moni log key]> CCBS-201318:TAlarmMoniLog::Run() TASK[1020123-1510123] Parse File [/home/zmctest/log/zxcom.log] Error,Message=[

File “/home/zmctest/zmc_agent/control/probe/agent/component/AlarmMoniLog.py”, line 352, in Run

nNewPos = self.FileParser.ParseFile(sFileName, nPos,self.Log,self.ACMoudle+self.ACRun+18,self.sNEId,self.sTaskId)

File “/home/zmctest/zmc_agent/control/probe/agent/function/ParseFile.py”, line 282, in ParseFile

self.pReadFile.OpenFile(sFile, nPos)

File “/home/zmctest/zmc_agent/control/probe/agent/function/ParseFile.py”, line 76, in OpenFile

raise Exception(‘The block flag error and cause buffer upper 10k lines(%s)’%self.ifs.tell())

Exception: The block flag error and cause buffer upper 10k lines(74995975)

]

Solution

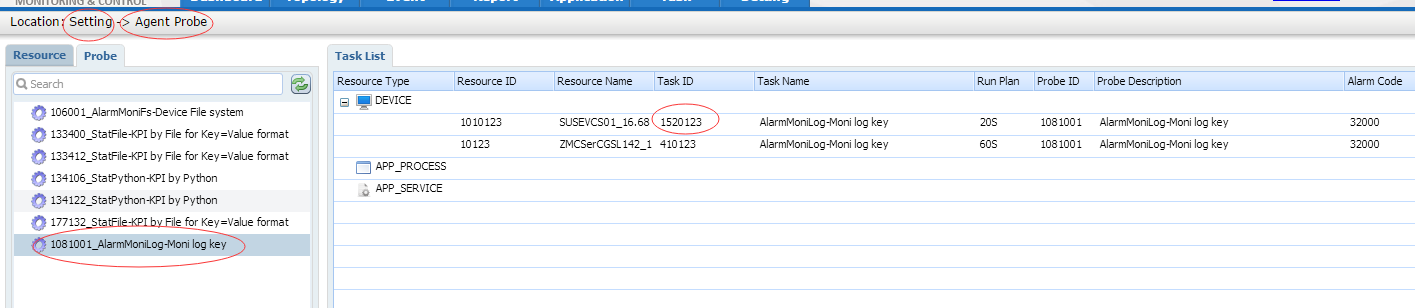

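A Probe task running error is usually raised when a component fails to execute. If the component's task ID appears in the log, use it to find the component's configuration in the web UI.

In this example, the log shows that the error was raised by the AlarmMoniLog-Moni log key component in TASK[1020123-1510123], i.e. task ID 1510123.

On the Probe tab of the 【Setting】->【Agent Probe】 page, find the Task under its component: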

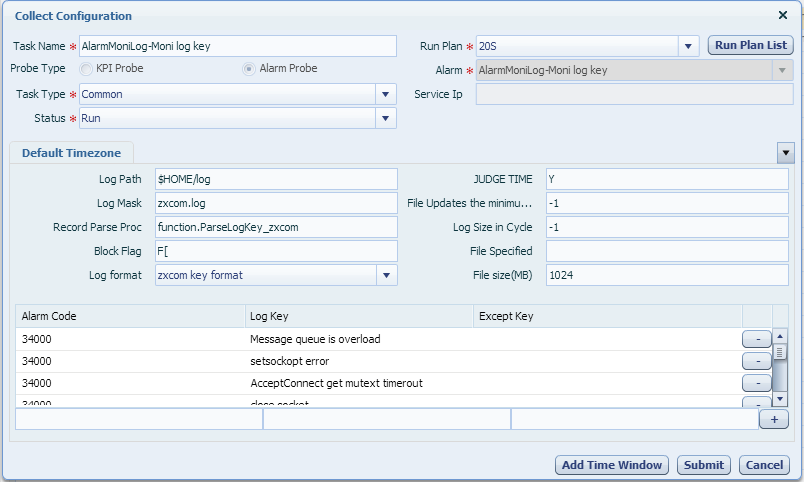

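Double-click the Task to see its configuration:

The error The block flag error and cause buffer upper 10k lines is generally caused by a wrong Block Flag setting in the log monitoring component.

The screenshot above shows that Block Flag is currently set to "F[", but the actual log looks like this: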

2016-02-01 21:00:58 T[NORMAL] [ zxinfunc.c:6507] C[0]> Proc [name: abnormal_cdr_treat 203,pid:2108] finished moduele registration

2016-02-01 21:00:58 T[NORMAL] [ pretreatWrap.c: 207] C[0]> abnormal_cdr_treat[203] InitAllProcessEvent Init Start …

2016-02-01 21:00:58 T[NORMAL] [ pretreatWrap.c: 243] C[0]> abnormal_cdr_treat[203] Change command to[CMD_NOTHING]

2016-02-01 21:01:08 T[ ERROR] [ zxmoni_lib.c: 526] C[1237]> PROC:TimingTask 204 PROC_PID:2035 stoped,restart the process

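The first line of each log entry does not contain "F[", so this setting cannot be used to collect zxcom.log in this example.

The correct configuration is the zxcom component under AlarmMoniLog-Moni log level, whose message delimiter is "T[". This string appears in the first line of every message, so it works as a delimiter.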
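The effect of the Block Flag can be reproduced with a simple record splitter: a new record starts at every line that contains the flag, and a record that keeps growing without meeting the flag eventually exceeds the buffer limit. This is a sketch of the idea only, not the agent's ParseFile implementation; the 10,000-line limit mirrors the error message.

```python
def split_records(lines, block_flag: str, max_buffer: int = 10_000) -> list:
    """Group log lines into records; a record starts at a line containing block_flag."""
    records, current = [], []
    for line in lines:
        if block_flag in line and current:
            records.append(current)   # the previous record is complete
            current = []
        current.append(line)
        if len(current) > max_buffer:
            raise ValueError(f"block flag {block_flag!r} not found within {max_buffer} lines")
    if current:
        records.append(current)
    return records


if __name__ == "__main__":
    log = [
        "2016-02-01 21:00:58 T[NORMAL] [ zxinfunc.c:6507] C[0]> Proc ... finished",
        "2016-02-01 21:00:58 T[NORMAL] [ pretreatWrap.c: 207] C[0]> Init Start",
        "2016-02-01 21:01:08 T[ ERROR] [ zxmoni_lib.c: 526] C[1237]> restart the process",
    ]
    print(len(split_records(log, "T[")))   # 3 records: one per message
    print(len(split_records(log, "F[")))   # 1 record: everything merged together
```

With "F[" every line piles into one record, which on a real zxcom.log grows past the buffer limit and produces the error above.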

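KPI task reports Unable find oracle sqlplus

Symptom:

The KPI script fails with the following error:

Solution:

- Check the agent user's environment variables and add:

export ORACLE_HOME=/oracle/product/xxx   # adjust to the actual path

export PATH=$ORACLE_HOME/bin:$PATH

- Run id; the agent user must belong to the dba group. If it does not, run usermod -A dba xxx as root (on systems whose usermod has no -A option, the equivalent is usermod -a -G dba xxx).

- Restart the client processes, both zmcAgent and zmcDaemon.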
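The three prerequisites can be checked in one pass before restarting. This sketch takes the environment and the user's group names as inputs so that it can be run against os.environ in the agent user's shell; it only checks for $ORACLE_HOME/bin/sqlplus and the dba group, as described above.

```python
import os


def oracle_prereq_problems(env, user_groups) -> list:
    """List what is missing for sqlplus to run, given an environment mapping and group names."""
    problems = []
    home = env.get("ORACLE_HOME")
    if not home:
        problems.append("ORACLE_HOME is not set")
    else:
        bin_dir = os.path.join(home, "bin")
        path_entries = {os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p}
        if os.path.normpath(bin_dir) not in path_entries:
            problems.append(f"{bin_dir} is not on PATH")
        if not os.access(os.path.join(bin_dir, "sqlplus"), os.X_OK):
            problems.append(f"{bin_dir}/sqlplus is missing or not executable")
    if "dba" not in user_groups:
        problems.append("user is not in the dba group")
    return problems


if __name__ == "__main__":
    import grp
    gids = set(os.getgroups()) | {os.getegid()}
    groups = {grp.getgrgid(g).gr_name for g in gids}
    print(oracle_prereq_problems(os.environ, groups) or "OK")
```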

IOError: [Errno 13] Permission denied: ‘/ztesoft/ocs/zmc_agent/control/probedata/temp/NM_CDR_xxx

Symptom:

[106FileSystem Perf]> CCBS-000000:TaskBase::SaveData() TASK[1049827-339827] Failed to Write Stat File. FILE=[/ztesoft/ocs/zmcagent/control/probedata/temp/NM_CDR_Stat_1049827_339827_20160411100503],error:

File “/ztesoft/ocs/zmc_agent/control/probe/agent/component/TaskStatBase.py”, line 124, in SaveData

ofs = _file(self.sStatFileName,’ab’)

File “/ztesoft/ocs/zmc_agent/control/probe/public/JFile.py”, line 22, in __init

self._file = PyFileWrapper(name,mode,buffsize)

IOError: [Errno 13] Permission denied: ‘/ztesoft/ocs/zmc_agent/control/probedata/temp/NM_CDR_Stat_1049827_339827_20160411100503’

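Solution:

1. Check permissions: as the client user, go to the directory in the error and run touch on a test file. If it fails, the user lacks permission on that directory. If it succeeds, go to step 2.

2. Run df -i to check inode usage on that partition. When inode usage is 100%, no new files can be written even if disk space is still available. For help cleaning up inodes, contact the integration team.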
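Both checks can be done from Python as well: creating and removing a temporary file is equivalent to the touch test, and os.statvfs reports the same inode counters that df -i shows (total and free inodes). This is a sketch for Unix systems; some filesystems report no inode counts at all, in which case the function returns None.

```python
import os
import tempfile


def inode_usage_percent(path: str):
    """Percentage of inodes in use on the filesystem holding path, or None if not reported."""
    st = os.statvfs(path)
    if st.f_files == 0:          # some filesystems do not report inode counts
        return None
    return 100.0 * (st.f_files - st.f_ffree) / st.f_files


def can_create_file(directory: str) -> bool:
    """Equivalent of `touch` in directory: create a temporary file and remove it again."""
    try:
        fd, name = tempfile.mkstemp(dir=directory)
    except OSError:
        return False
    os.close(fd)
    os.unlink(name)
    return True


if __name__ == "__main__":
    target = "/tmp"   # replace with the directory from the error message
    print(can_create_file(target), inode_usage_percent(target))
```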

RuntimeError: SpaceInfo::_diskIO4LinuxIORW(): execute [sar -d 1 3] result is unexcept format

Symptom:

6344772 2016-04-11 15:25:03.390 [MainThread] ERROR c.z.z.z.a.p.ProbeMgr—> > CCBS-205311:TStatPython::Run() TASK[118119-18119] Python=[statistics.HostServ.HostServ.IOstatInfo],Message=[

File “/home/olcbl/zmc_agent/control/probe/agent/component/StatPython.py”, line 92, in Run

StatValues = apply(self.FuncInst,[self.ClassInst])

File “/home/olcbl/zmc_agent/control/probe/agent/statistics/HostServ.py”, line 412, in IOstatInfo

dInfo = impl.getDiskIORatio()

File “/home/olcbl/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 923, in getDiskIORatio

info = self._diskIO4LinuxIORW()

File “/home/olcbl/zmc_agent/control/probe/agent/function/SpaceInfo.py”, line 969, in _diskIO4LinuxIORW

raise RuntimeError, sMessageErr

RuntimeError: SpaceInfo::_diskIO4LinuxIORW(): execute [sar -d 1 3] result is unexcept format

Solution:

1. Run sar -d 1 3 on the client as the client user. If it fails, fix the user's permissions. If it works, go to step 2.

2. Add export LANGUAGE=en_US to zmc.profile and restart zmcAgent.
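To see whether the locale is what changes the sar output, run the same command with and without the variable and compare the results. This sketch sets only LANGUAGE=en_US, the variable named above; that the agent's parser presumably expects the English output format is an inference, not something this section states.

```python
import os
import subprocess


def run_with_english_messages(cmd, timeout: float = 30.0) -> subprocess.CompletedProcess:
    """Run cmd with LANGUAGE=en_US added to a copy of the current environment."""
    env = dict(os.environ)
    env["LANGUAGE"] = "en_US"   # same variable the fix adds to zmc.profile
    return subprocess.run(cmd, env=env, capture_output=True, text=True, timeout=timeout)


if __name__ == "__main__":
    plain = subprocess.run(["sar", "-d", "1", "3"], capture_output=True, text=True)
    english = run_with_english_messages(["sar", "-d", "1", "3"])
    print(plain.stdout[:300])
    print(english.stdout[:300])
```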

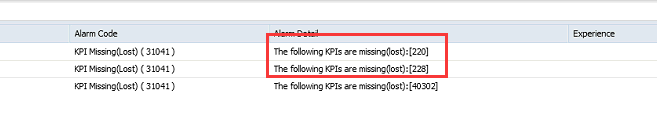

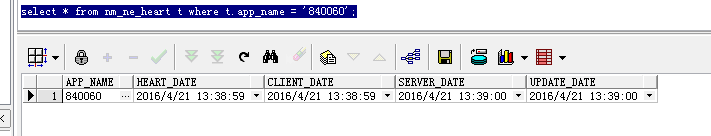

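KPI_220 missing alarm

Symptom:

Solution:

1. Check the status of the client and server processes.

2. Check the heartbeat between client and server:

select * from nm_ne_heart t where t.app_name = '<env id>';

3. If steps 1 and 2 are OK, check whether the monitored object is within the probe's scope. Probe 220 monitors archive logs, so it is only effective for objects that support log archiving; the rb database currently does not support archive logs.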

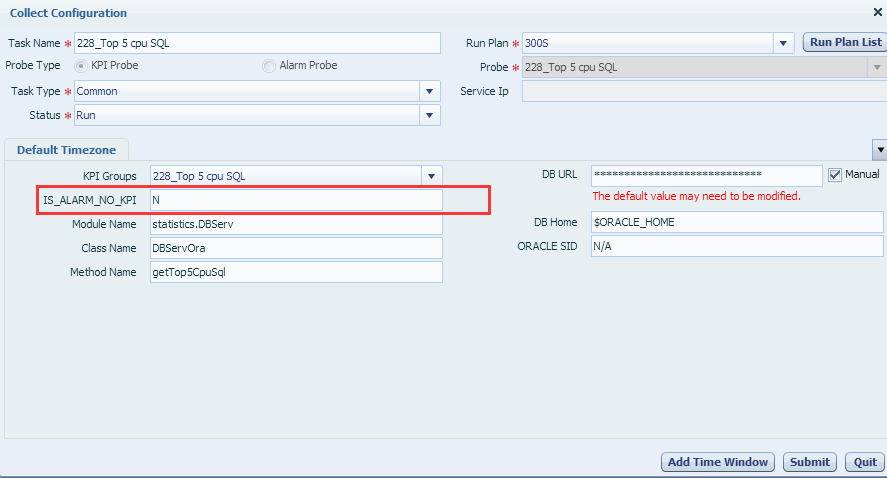

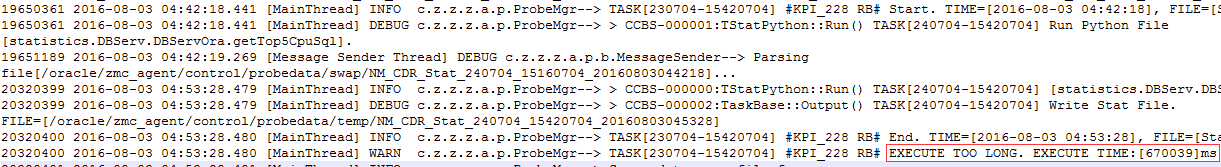

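KPI_228 missing alarm

Symptom:

Solution:

1. Check the status of the client and server processes.

2. Check the heartbeat between client and server:

select * from nm_ne_heart t where t.app_name = '<env id>';

3. If steps 1 and 2 are OK, look at probe 228. It monitors the CPU usage of Oracle processes and only collects data when usage exceeds 3%; below 3% nothing is collected. The server checks the KPI data every hour and raises an alarm when it finds none. Stopping the alarm takes two steps:

- Set the probe parameters.

- Configure an Integrity Rule for the task (see the related documentation).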

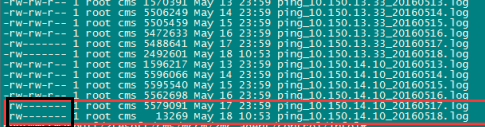

Generated files have wrong permissions, so KPI data cannot be read: Permission denied

Symptom:

2016-05-18 00:20:12.564 [MainThread] DEBUG c.z.z.z.c.a.JMSClient—> Send [{“srcModuleId”:0,”eventId”:7000001,”dstModuleId”:0,”data”:{“DATA3”:””,”DATA4”:””,”ALARMCODE”:”31040”,”DATA_1”:””,”DETAIL_INFO”:”[mccmapp01_14.7-cms][132_Ping Resp Stat]> CCBS-205318:TStatFile::Run() TASK[798148-2748148] Parse File [/ztesoft/cms/mccm/zmc_agent/control/info/ping_10.150.14.10_20160518.log] Error,Message=[\n File \”/ztesoft/cms/mccm/zmc_agent/control/probe/agent/component/StatFile.py\”, line 274, in Run\n nNewPos = self.FileParser.ParseFile(sFileName, nPos,self.Log,self.ACMoudle+self.ACRun+18,self.sNEId,self.sTaskId)\n File \”/ztesoft/cms/mccm/zmc_agent/control/probe/agent/function/ParseFile.py\”, line 220, in ParseFile\n self.pReadFile.OpenFile(sFile, nPos,nReadMax,True)\n File \”/ztesoft/cms/mccm/zmc_agent/control/probe/agent/function/ParseFile.py\”, line 55, in OpenFile\n self.ifs = _file(sFile,’rb’)\n File \”/ztesoft/cms/mccm/zmc_agent/control/probe/public/JFile.py\”, line 22, in __init\n self._file = PyFileWrapper(name,mode,buffsize)\nIOError: [Errno 13] Permission denied: ‘/ztesoft/cms/mccm/zmc_agent/control/info/ping_10.150.14.10_20160518.log’\n] “,”ALARM_INDEX_STR”:””,”DATA_2”:”mccmapp01_14.7-cms”,”EVENT_TIME”:”2016-05-18 00:20:12”,”TASK_ID”:”2748148”,”DATA

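Solution:

Wrong file permissions may be related to the umask. ZMC uses umask 0022.

Run umask to check the current value. If it is not 0022, add umask 0022 to zmc.profile and restart the processes.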
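The umask removes permission bits from the mode a program requests when it creates a file, so the resulting mode is requested & ~umask. This sketch assumes the common requested modes 0666 for files and 0777 for directories; the real mode requested by each ZMC process is not stated here.

```python
import os


def effective_mode(requested_mode: int, umask: int) -> int:
    """Permission bits a new file or directory actually gets: requested & ~umask."""
    return requested_mode & ~umask


def current_umask() -> int:
    """Read the process umask (os.umask sets a new value and returns the old one, so restore it)."""
    old = os.umask(0)
    os.umask(old)
    return old


if __name__ == "__main__":
    for mask in (0o022, 0o027, 0o077):
        files = effective_mode(0o666, mask)
        dirs = effective_mode(0o777, mask)
        print(f"umask {mask:04o}: files {files:o}, dirs {dirs:o}, others can read: {bool(files & 0o004)}")
    print(f"current umask: {current_umask():04o}")
```

With 0022, new files get 644 and stay readable by other accounts; with 0027 or 0077 they get 640 or 600, so a process running under another account gets Permission denied.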

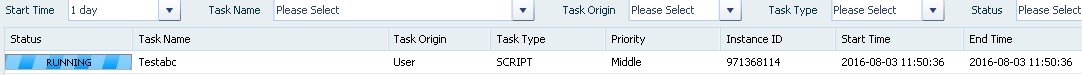

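A script-type task triggered by Task stays in RUNNING state

Symptom:

When the task is triggered, server_app.log contains only the following line, and the client never returns an execution result: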

1971046 2016-08-03 11:15:41.686 [pool-3-thread-1] DEBUG c.z.z.z.l.ScriptLib—> Finded resource id[18114] with [18114,5]

Solution:

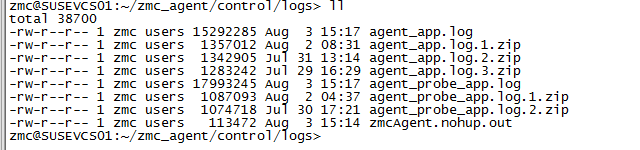

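1. On the affected client, run ll in the zmc_agent/control/logs directory to see when the logs were last updated.

2. If the logs have stopped updating for a long time, check zmcAgent.nohup.out to find out why the process hung.

3. Once the cause of the hang has been fixed, restart the agent and daemon processes.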
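Step 1 can be automated by listing the files in the log directory that have not been modified recently. This is a sketch; the 10-minute threshold is an assumption and should be set well above the shortest probe interval.

```python
import os
import time


def stale_logs(log_dir: str, max_idle_seconds: int = 600, now: float = None) -> list:
    """Return (file name, idle seconds) for files not modified within max_idle_seconds."""
    now = time.time() if now is None else now
    result = []
    with os.scandir(log_dir) as entries:
        for entry in entries:
            if entry.is_file():
                idle = now - entry.stat().st_mtime
                if idle > max_idle_seconds:
                    result.append((entry.name, int(idle)))
    return sorted(result, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    log_dir = os.path.expanduser("~/zmc_agent/control/logs")
    for name, idle in stale_logs(log_dir):
        print(f"{name}: no update for {idle} s")
```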

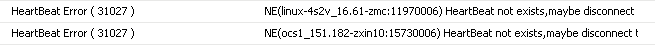

NE(xxx) HeartBeat not exists,maybe disconnect to server!

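Symptom:

This heartbeat alarm is raised when the nm_ne_heart heartbeat table is not being updated.

Solution:

1. Check the client's heartbeat records in zmc_agent/control/probedata/backup/NM_BAK_XXXXXX. Heartbeats are reported once a minute; look for gaps longer than 5 minutes between records. Large gaps are probably caused by probes that take too long to run.

2. Check the probe execution log: in zmc_agent/control/logs, run grep ' EXECUTE TOO LONG. ' agent_probe_app.log to find the probes that run too long, and consult the developers.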
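Finding the gaps in step 1 is a matter of sorting the heartbeat times and comparing neighbors. The format of the NM_BAK files is not described here, so this sketch takes already-extracted datetime values; the 5-minute threshold comes from the text.

```python
from datetime import datetime, timedelta


def heartbeat_gaps(timestamps, max_gap=timedelta(minutes=5)) -> list:
    """Return (previous, next, gap) for consecutive heartbeats further apart than max_gap."""
    ordered = sorted(timestamps)
    return [(a, b, b - a) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


if __name__ == "__main__":
    t0 = datetime(2016, 8, 3, 11, 0)
    beats = [t0 + timedelta(minutes=m) for m in (0, 1, 2, 9, 10)]
    for prev, nxt, gap in heartbeat_gaps(beats):
        print(f"{prev:%H:%M} -> {nxt:%H:%M}: {gap}")
```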

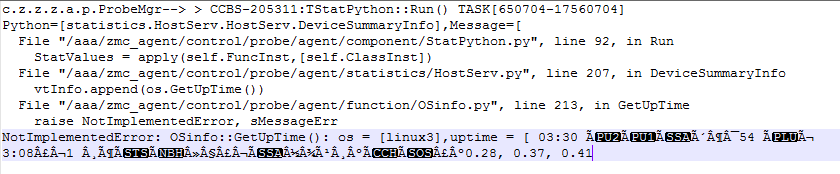

Probe error messages are garbled

Symptom:

Solution:

1) Add the following to zmc_agent/zmc.profile:

export LANG=en_US.utf8

2) Restart the agent process.

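Probe troubleshooting

AlarmMoniDmesg probe causes the account to be locked

Symptom

After ZMC starts, the account password gets locked. The system log (/var/log/messages) shows the following security alerts:

Jan 24 11:05:34 msc4uip7 sudo: pam_unix(sudo:auth): conversation failed

Jan 24 11:05:34 msc4uip7 sudo: pam_unix(sudo:auth): auth could not identify password for [uip]

Jan 24 11:05:34 msc4uip7 sudo: uip : command not allowed ; TTY=unknown ; PWD=/ztesoft/uip/zmc_agent/control ; USER=root ; COMMAND=/bin/cat /var/log/messages

The site had configured AlarmMoniDmesg-Monitor linux messages log but had not granted the corresponding sudo permission.

The configuration had been imported from a template rather than set up manually, so this step was skipped.

Solution:

Grant the permission:

sudoedit /etc/sudoers

Defaults requiretty   (this line must be commented out: #Defaults requiretty)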

zmc ALL=(ALL) NOPASSWD:/bin/cat /var/log/messages

KPI_404 probe collects no data

Symptom

The KPI_404 probe collects business data: Mediation exposes the CLT_DAILY_SUMMARY table for external systems to query and generate KPI data. In this case ZMC probe 440 collected no data even though the database table contained data; the collected values were discarded on the server.

server_app.log shows that collection succeeded but storing the results failed:

104072056 2017-06-23 18:36:01.496 [SendSMPPThread] DEBUG c.z.z.z.s.TSMPPModem—> Socket error when try to recv data,msg len is zero.

104072257 2017-06-23 18:36:01.697 [SendSMPPThread] DEBUG c.z.z.z.s.TSMPPModem—> Socket error when try to recv data,msg len is zero.

104072405 2017-06-23 18:36:01.845 [KPIProcessorThread] WARN c.z.z.z.m.b.KPIProcessor—> KpiValues is invalid and will be discard,NeId=388168,TaskId=4608168,KpiTableId=440,KpiValues[["25","1773","2841","33123","2841"]]

104072405 2017-06-23 18:36:01.845 [KPIProcessorThread] WARN c.z.z.z.m.b.KPIProcessor—> KpiValues is invalid and will be discard,NeId=388168,TaskId=4608168,KpiTableId=440,KpiValues[["30","1","","",""]]

Solution:

Possible causes:

1. The SQL result contains null values; wrap the nullable columns with nvl().

2. The KPI table columns do not match the SQL definition. Here the columns of the KPI_404 table did not match the defined SQL, probably because the corresponding columns were not added to the existing KPI table during a version upgrade.
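The two causes can be told apart by looking at the discarded rows. This sketch checks a row for empty values and for a column-count mismatch; the server's actual validation rules are not documented here, and the expected column count is a hypothetical input taken from the KPI table definition. Note that the first rejected row in the log has no empty values, which suggests the second cause.

```python
def kpi_row_problems(values, expected_columns: int) -> list:
    """Explain why a KPI row might be rejected: wrong column count or empty values."""
    problems = []
    if len(values) != expected_columns:
        problems.append(f"{len(values)} values but KPI table expects {expected_columns} columns")
    empty = [i for i, v in enumerate(values) if v is None or str(v).strip() == ""]
    if empty:
        problems.append(f"empty values at positions {empty} (wrap these columns with nvl())")
    return problems


if __name__ == "__main__":
    print(kpi_row_problems(["30", "1", "", "", ""], 5))
    print(kpi_row_problems(["25", "1773", "2841", "33123", "2841"], 6))   # hypothetical count
```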

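KPI_40302 collects voice CAPS = 0

Symptom

The KPI_482 probe collects QDG business data.

1. The raw traffic files are normal; filtering for IN-CAPS also shows normal data.

2. The KPI_40302 configuration is correct.

3. agent_probe_app.log.2017-04-07_1129 shows that KPI_482 processes the same file:

1257370514 2017-04-07 07:18:59.415 [MainThread] INFO c.z.z.z.a.p.ProbeMgr—> TASK[1278146-116278146] #482_QDG AGENT REFRESH STAT# Start. TIME=[2017-04-07 07:18:59], FILE=[StatFile]

4. The file mask of KPI_482 conflicts with that of 40302.

Solution:

The site had configured another KPI, #482_QDG AGENT REFRESH STAT#, whose directory and mask also matched the same traffic file (agentinfo_olc_caps_20170406), so KPI_40302 got no data. Removing one of them restored normal operation.

*Reminder: file masks must be precise; multiple probes must never read the same file.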
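Overlapping masks can be found before they cause trouble by matching every probe's directory and mask against the files on the host. This sketch assumes the masks behave like shell wildcards (fnmatch); ZMC's own mask syntax, such as the {YYYY} placeholders, may differ, and the directory in the example is hypothetical.

```python
import fnmatch
import os
from collections import defaultdict


def files_claimed_by_multiple_probes(probes: dict, filenames) -> dict:
    """probes: {probe name: (directory, mask)}; filenames: full paths present on the host."""
    claims = defaultdict(list)
    for path in filenames:
        directory, name = os.path.split(path)
        for probe, (probe_dir, mask) in probes.items():
            same_dir = os.path.normpath(directory) == os.path.normpath(probe_dir)
            if same_dir and fnmatch.fnmatchcase(name, mask):
                claims[path].append(probe)
    return {path: sorted(names) for path, names in claims.items() if len(names) > 1}


if __name__ == "__main__":
    probes = {
        "KPI_40302": ("/data/info", "agentinfo_olc_caps_*"),
        "#482_QDG AGENT REFRESH STAT#": ("/data/info", "agentinfo_olc*"),
    }
    files = ["/data/info/agentinfo_olc_caps_20170406", "/data/info/agentinfo_olc_20170406"]
    print(files_claimed_by_multiple_probes(probes, files))
```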

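KPI_40302 data blocked

Symptom

KPI_40302 is blocked although the heartbeat is normal.

At the site, the 40302 and 40304 KPI probes on OLC and PHUB were blocked. After each Agent restart they produced a large batch of KPI data at once, then worked normally for about 15 minutes (one KPI record every five minutes), and then blocked again without producing new KPI data.

First check the server heartbeat: click the icon in the upper-right corner to open MQ, refresh, and see whether Message Enqueued changes. Under normal conditions it should keep changing.

ZMC was deployed on a two-node cluster but actually ran on only one node; when the floating IP switched over, ZMC did not move and stayed on the original node.

Solution:

Root cause: the ZMC server's floating IP and processes did not match, because ZMC did not switch over with the cluster.

Fix: change the HA configuration in ZMC.

Set the isha system parameter to N and restart the server; a server resource is then registered automatically.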

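ParseLogKey_zxcom probe raises no keyword alarms

Symptom

The site reported that the ParseLogKey_zxcom probe raised no alarms for keywords in zxcom.log.

They suspected that ZMC could not monitor zxcom.log, because imanager raised alarms for it and ZMC did not.

The logs showed that two probes were configured to read the same log file (zxcom.log). After AlarmMoniLog_zxcom_F ran, the file pointer had already moved to the end, so AlarmMoniLog_zxcom_T actually read nothing.

Solution:

Remove one of the probes.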

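Wallscreen business display is jagged

Symptom

KPI collection is uneven, so the business display on the Wallscreen looks jagged.

Look for the KPI 400 data files in zmc_agent/control/probedata/backup. Data flows from raw data (traffic files) to KPI files to KPI tables, so first determine which stage has the problem. Note that the traffic files agentinfo_olc and agentinfo_olc_caps belong to KPI_40302 on the OLC host, not to KPI_400.

Check the other KPI configurations on this host and compare their PATH+MASK settings to see whether any of them may operate on the same file.

The check confirmed that the collection interval at the site was too short and too many probes were configured, so data was easily missed because of performance limits.

Solution

Adjust the probe execution interval, reduce the number of probes, and enable KPI Summary to aggregate the data.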

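AlarmMoniCDR cannot find the file

Symptom

The AlarmMoniCDR probe was configured with Path and Filename but collected no data.

Investigation showed that the probe's date parameter does not support yyyy; only {YYYY}-{MM}-{DD} is supported.

Solution

Edit the AlarmMoniCDR probe and change yyyy in the File Mask to YYYY. The fix was verified.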
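A small mask expander models the effect of an unsupported date token. The sketch assumes that the probe substitutes only the exact placeholders {YYYY}, {MM} and {DD} and leaves anything else literal. `expand_mask` and the CDR file name are hypothetical.

```python
import re
from datetime import date

# strftime codes for the only placeholders the probe supports.
PLACEHOLDERS = {"YYYY": "%Y", "MM": "%m", "DD": "%d"}
PLACEHOLDER_RE = re.compile(r"\{(YYYY|MM|DD)\}")


def expand_mask(mask, day):
    """Replace {YYYY}, {MM} and {DD} with the given date; leave the rest as-is."""
    return PLACEHOLDER_RE.sub(
        lambda match: day.strftime(PLACEHOLDERS[match.group(1)]), mask
    )


if __name__ == "__main__":
    day = date(2017, 4, 7)
    print(expand_mask("CDR_{YYYY}-{MM}-{DD}.dat", day))  # CDR_2017-04-07.dat
    print(expand_mask("CDR_{yyyy}-{MM}-{DD}.dat", day))  # CDR_{yyyy}-04-07.dat
```

The lowercase token survives literally, so the expanded name never matches a real file. This is consistent with the probe collecting no data.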

Appendix

SMPP error codes

| Error code | Value | Description |
|---|---|---|
| ESME_RINVMSGLEN | 0x01 | Invalid Message Length (sm_length parameter) |
| ESME_RINVCMDLEN | 0x02 | Invalid Command Length (command_length in SMPP PDU) |
| ESME_RINVCMDID | 0x03 | Invalid Command ID (command_id in SMPP PDU) |
| ESME_RINVBNDSTS | 0x04 | Incorrect BIND status for given command (example: trying to submit a message when bound only as a receiver) |
| ESME_RALYBND | 0x05 | ESME already in bound state (example: sending a second bind command during an existing SMPP session) |
| ESME_RINVPRTFLG | 0x06 | Invalid Priority Flag (priority_flag parameter) |
| ESME_RINVREGDLVFLG | 0x07 | Invalid Registered Delivery Flag (registered_delivery parameter) |
| ESME_RSYSERR | 0x08 | System Error (indicates server problems on the SMPP host) |
| ESME_RINVSRCADR | 0x0A | Invalid source address (sender/source address is not valid) |
| ESME_RINVDSTADR | 0x0B | Invalid destination address (recipient/destination phone number is not valid) |
| ESME_RINVMSGID | 0x0C | Message ID is invalid (error only relevant to query_sm, replace_sm, cancel_sm commands) |
| ESME_RBINDFAIL | 0x0D | Bind failed (login/bind failed: invalid login credentials or login restricted by IP address) |
| ESME_RINVPASWD | 0x0E | Invalid password (login/bind failed) |
| ESME_RINVSYSID | 0x0F | Invalid System ID (login/bind failed: invalid username / system id) |
| ESME_RCANCELFAIL | 0x11 | cancel_sm request failed |
| ESME_RREPLACEFAIL | 0x13 | replace_sm request failed |
| ESME_RMSGQFUL | 0x14 | Message Queue Full (This can indicate that the SMPP server has too many queued messages and temporarily cannot accept any more messages. It can also indicate that the SMPP server has too many messages pending for the specified recipient and will not accept any more messages for this recipient until it is able to deliver messages that are already in the queue to this recipient.) |
| ESME_RINVSERTYP | 0x15 | Invalid service_type value |
| ESME_RINVNUMDESTS | 0x33 | Invalid number_of_dests value in submit_multi request |
| ESME_RINVDLNAME | 0x34 | Invalid distribution list name in submit_multi request |
| ESME_RINVDESTFLAG | 0x40 | Invalid dest_flag in submit_multi request |
| ESME_RINVSUBREP | 0x42 | Invalid ‘submit with replace’ request (replace_if_present flag set) |
| ESME_RINVESMCLASS | 0x43 | Invalid esm_class field data |
| ESME_RCNTSUBDL | 0x44 | Cannot submit to distribution list (submit_multi request) |
| ESME_RSUBMITFAIL | 0x45 | Submit message failed |
| ESME_RINVSRCTON | 0x48 | Invalid Source address TON |
| ESME_RINVSRCNPI | 0x49 | Invalid Source address NPI |
| ESME_RINVDSTTON | 0x50 | Invalid Destination address TON |
| ESME_RINVDSTNPI | 0x51 | Invalid Destination address NPI |
| ESME_RINVSYSTYP | 0x53 | Invalid system_type field |
| ESME_RINVREPFLAG | 0x54 | Invalid replace_if_present flag |
| ESME_RINVNUMMSGS | 0x55 | Invalid number_of_messages parameter |
| ESME_RTHROTTLED | 0x58 | Throttling error (This indicates that you are submitting messages at a rate that is faster than the provider allows) |
| ESME_RINVSCHED | 0x61 | Invalid schedule_delivery_time parameter |
| ESME_RINVEXPIRY | 0x62 | Invalid validity_period parameter / Expiry time |
| ESME_RINVDFTMSGID | 0x63 | Invalid sm_default_msg_id parameter (this error can sometimes occur if the “Default Sender Address” field is blank in NowSMS) |
| ESME_RX_T_APPN | 0x64 | ESME Receiver Temporary App Error Code |
| ESME_RX_P_APPN | 0x65 | ESME Receiver Permanent App Error Code (the SMPP provider is rejecting the message due to a policy decision or message filter) |
| ESME_RX_R_APPN | 0x66 | ESME Receiver Reject Message Error Code (the SMPP provider is rejecting the message due to a policy decision or message filter) |
| ESME_RQUERYFAIL | 0x67 | query_sm request failed |
| ESME_RINVOPTPARSTREAM | 0xC0 | Error in the optional TLV parameter encoding |
| ESME_ROPTPARNOTALLWD | 0xC1 | An optional TLV parameter was specified which is not allowed |
| ESME_RINVPARLEN | 0xC2 | An optional TLV parameter has an invalid parameter length |
| ESME_RMISSINGOPTPARAM | 0xC3 | An expected optional TLV parameter is missing |
| ESME_RINVOPTPARAMVAL | 0xC4 | An optional TLV parameter is encoded with an invalid value |
| ESME_RDELIVERYFAILURE | 0xFE | Generic Message Delivery failure |
| ESME_RUNKNOWNERR | 0xFF | An unknown error occurred (indicates server problems on the SMPP host) |
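When an SMS center returns a numeric command_status, a lookup table saves scanning the list above by hand. The sketch below covers only a subset of the codes in the table; extend the dictionary as needed. ESME_ROK (0x00) is the standard SMPP "no error" value and does not appear in the table above. `describe_status` is a hypothetical helper.

```python
# Subset of the SMPP error codes listed in the table above.
SMPP_ERRORS = {
    0x01: "ESME_RINVMSGLEN",
    0x02: "ESME_RINVCMDLEN",
    0x03: "ESME_RINVCMDID",
    0x04: "ESME_RINVBNDSTS",
    0x05: "ESME_RALYBND",
    0x08: "ESME_RSYSERR",
    0x0A: "ESME_RINVSRCADR",
    0x0B: "ESME_RINVDSTADR",
    0x0D: "ESME_RBINDFAIL",
    0x0E: "ESME_RINVPASWD",
    0x0F: "ESME_RINVSYSID",
    0x14: "ESME_RMSGQFUL",
    0x45: "ESME_RSUBMITFAIL",
    0x48: "ESME_RINVSRCTON",
    0x49: "ESME_RINVSRCNPI",
    0x58: "ESME_RTHROTTLED",
    0x64: "ESME_RX_T_APPN",
    0x65: "ESME_RX_P_APPN",
    0x66: "ESME_RX_R_APPN",
    0xFE: "ESME_RDELIVERYFAILURE",
    0xFF: "ESME_RUNKNOWNERR",
}


def describe_status(status):
    """Map a command_status (int, or decimal / 0x-prefixed hex string) to its name."""
    if isinstance(status, str):
        text = status.strip()
        status = int(text, 16) if text.lower().startswith("0x") else int(text)
    if status == 0:
        return "ESME_ROK"
    return SMPP_ERRORS.get(status, f"not in table (0x{status:02X})")


if __name__ == "__main__":
    print(describe_status("0x0000000D"))  # ESME_RBINDFAIL
    print(describe_status(88))  # ESME_RTHROTTLED
```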

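SMPP type of number (TON)

addr_ton: specifies the type of number. It can be set to NULL if not needed.

Values:

0 Unknown: used when the user or network has no prior information about the numbering plan

1 International number

2 National number

3 Network-specific number

4 Subscriber number

5 Alphanumeric

6 Abbreviated number

7 Reserved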
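An addr_ton that does not match the sender address can lead to rejections such as ESME_RINVSRCADR (0x0A) or ESME_RINVSRCTON (0x48). One example is an alphabetic sender ID sent with TON 1. The sketch below is a pre-send check built on a heuristic: letters require TON 5, and a digits-only address must not use TON 5. Whether this heuristic matches a given SMS center's rules is an assumption. The enum mirrors the value list above; `check_source_address` and the sample addresses are hypothetical.

```python
from enum import IntEnum


class Ton(IntEnum):
    """addr_ton values from the list above (7 is reserved)."""
    UNKNOWN = 0
    INTERNATIONAL = 1
    NATIONAL = 2
    NETWORK_SPECIFIC = 3
    SUBSCRIBER = 4
    ALPHANUMERIC = 5
    ABBREVIATED = 6


def check_source_address(address, ton):
    """Return a list of likely problems with a source address / addr_ton pair."""
    if ton not in {member.value for member in Ton}:
        return [f"addr_ton {ton} is reserved or undefined"]
    has_letters = any(ch.isalpha() for ch in address)
    if has_letters and ton != Ton.ALPHANUMERIC:
        return ["address contains letters but addr_ton is not 5 (Alphanumeric)"]
    if not has_letters and ton == Ton.ALPHANUMERIC:
        return ["addr_ton is 5 (Alphanumeric) but the address is numeric"]
    return []


if __name__ == "__main__":
    print(check_source_address("ZMC", Ton.INTERNATIONAL))
    print(check_source_address("ZMC", Ton.ALPHANUMERIC))  # []
```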

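SMPP numbering plan indicator (NPI)

addr_npi: specifies the numbering plan. It can be set to NULL if not needed.

Values:

0 Unknown

1 ISDN/telephone numbering plan (E.164/E.163), valid for any entity (SC, MSC or MS)

2 Reserved

3 Data numbering plan (X.121)

4 Telex numbering plan

5-7 Reserved

8 National numbering plan

9 Private numbering plan

10 ERMES numbering plan (ETSI DE/PS 3 01-3)

11-15 Reserved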

Revision history

| Version | Date | Author | Reason for revision |
|---|---|---|---|
| 1.0 | 2016-2-14 | 游庆汉 | Document created |