Lab environment

1. 192.168.10.11: ES, Nginx

2. 192.168.10.12: Logstash, Nginx

Compiling and installing Nginx

yum install -y lrzsz wget gcc gcc-c++ make pcre pcre-devel zlib zlib-devel

cd /usr/local/src

wget http://nginx.org/download/nginx-1.14.2.tar.gz

tar xvf nginx-1.14.2.tar.gz

cd nginx-1.14.2

./configure --prefix=/usr/local/nginx && make && make install

Setting the Nginx environment variable

echo export PATH=\$PATH:/usr/local/nginx/sbin/ >> /etc/profile && source /etc/profile
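Running the line above more than once appends a duplicate entry to /etc/profile each time. A minimal sketch of an idempotent variant follows; the helper name `add_path_line` is hypothetical and not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an "export PATH" line to a profile file
# only if that exact line is not already present.
add_path_line() {
    local profile="$1" dir="$2"
    local line="export PATH=\$PATH:${dir}"
    # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
    grep -qxF -- "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}

# Usage (as root):
#   add_path_line /etc/profile /usr/local/nginx/sbin && source /etc/profile
```

Calling the helper twice with the same arguments leaves a single line in the file.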

Verifying the environment variable

nginx

nginx -V

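How Logstash and ES work together

1. Logstash can read log files and send their contents to ES.

2. Logstash is fairly heavyweight as a log collector, however; this will be optimized later.

Logstash configuration for sending logs to ES, in /usr/local/logstash-6.6.0/config/logstash.conf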

    input {
      file {
        path => "/usr/local/nginx/logs/access.log"
      }
    }
    output {
      elasticsearch {
        hosts => ["http://192.168.10.11:9200"]
      }
    }
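Before reloading, it can help to check that the file parses. Logstash provides a `--config.test_and_exit` flag for this. The sketch below wraps it in a small function; the `LOGSTASH_HOME` variable and the function name are assumptions for illustration, with the install path taken from this setup.

```shell
#!/usr/bin/env bash
# Assumed install location, matching the paths used in this walkthrough.
LOGSTASH_HOME="${LOGSTASH_HOME:-/usr/local/logstash-6.6.0}"

# Hypothetical helper: parse the given config file and exit without
# starting a pipeline. Returns non-zero if Logstash reports an error.
check_logstash_config() {
    local conf="${1:-$LOGSTASH_HOME/config/logstash.conf}"
    "$LOGSTASH_HOME/bin/logstash" -f "$conf" --config.test_and_exit
}

# Usage:
#   check_logstash_config && echo "config looks valid"
```

The check starts a JVM, so it typically takes several seconds to finish.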

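Reloading the configuration

Send signal 1 (SIGHUP) to the running Logstash process with kill -1 <process ID>; Logstash then reloads its pipeline configuration without restarting.

[root@server12 ~]# kill -1 $(ps aux | grep logstash | grep -v grep | awk '{print $2}')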
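The PID lookup in that one-liner can be split out and checked before any signal is sent. This is a sketch only; the function names are hypothetical, and the pattern matches any process whose command line mentions "logstash" (including, for example, a `tail -f` on a Logstash log or a script with "logstash" in its name), so review the PIDs first.

```shell
#!/usr/bin/env bash
# Reads `ps aux` output on stdin and prints the PID column for lines that
# mention logstash, skipping the grep/awk helper processes themselves.
logstash_pids() {
    awk '/logstash/ && !/grep|awk/ {print $2}'
}

# Sends SIGHUP (signal 1) to every matching process so that Logstash
# reloads its pipeline configuration.
reload_logstash() {
    local pids
    pids=$(ps aux | logstash_pids)
    if [ -z "$pids" ]; then
        echo "no logstash process found" >&2
        return 1
    fi
    # Intentionally unquoted: one argument per PID.
    kill -HUP $pids
}

# Usage:
#   ps aux | logstash_pids    # review the PIDs first
#   reload_logstash
```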

[root@server12 ~]# /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf

Sending Logstash logs to /usr/local/logstash-6.6.0/logs which is now configured via log4j2.properties

[2022-03-12T09:39:45,423][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2022-03-12T09:39:45,452][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.0"}

[2022-03-12T09:40:01,300][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2022-03-12T09:40:02,027][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/local/logstash-6.6.0/data/plugins/inputs/file/.sincedb_730aea1d074d4636ec2eacfacc10f882", :path=>["/var/log/secure"]}

[2022-03-12T09:40:02,152][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#

[2022-03-12T09:40:02,271][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2022-03-12T09:40:02,494][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2022-03-12T09:40:03,230][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

    {
           "message" => "Mar 12 09:40:32 jaking polkitd[728]: Registered Authentication Agent for unix-process:3489:2358070 (system bus name :1.81 [/usr/bin/pkttyagent --notify-fd 5 --fallback], object path /org/freedesktop/PolicyKit1/AuthenticationAgent, locale en_US.UTF-8)",
              "path" => "/var/log/secure",
        "@timestamp" => 2022-03-12T14:40:33.532Z,
              "host" => "server12",
          "@version" => "1"
    }
    {
           "message" => "Mar 12 09:40:32 jaking polkitd[728]: Unregistered Authentication Agent for unix-process:3489:2358070 (system bus name :1.81, object path /org/freedesktop/PolicyKit1/AuthenticationAgent, locale en_US.UTF-8) (disconnected from bus)",
              "path" => "/var/log/secure",
        "@timestamp" => 2022-03-12T14:40:33.590Z,
              "host" => "server12",
          "@version" => "1"
    }

[2022-03-12T09:54:45,112][WARN ][logstash.runner ] SIGHUP received.

[2022-03-12T09:54:46,564][INFO ][logstash.pipelineaction.reload] Reloading pipeline {"pipeline.id"=>:main}

[2022-03-12T09:54:46,633][INFO ][filewatch.observingtail ] QUIT - closing all files and shutting down.

[2022-03-12T09:54:47,316][INFO ][logstash.pipeline ] Pipeline has terminated {:pipeline_id=>"main", :thread=>"#

[2022-03-12T09:54:47,657][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2022-03-12T09:54:48,933][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.10.11:9200/]}}

[2022-03-12T09:54:49,521][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://192.168.10.11:9200/"}

[2022-03-12T09:54:49,631][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2022-03-12T09:54:49,648][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the type event field won't be used to determine the document _type {:es_version=>6}

[2022-03-12T09:54:49,718][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2022-03-12T09:54:49,756][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://192.168.10.11:9200"]}

[2022-03-12T09:54:49,789][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default

[2022-03-12T09:54:49,976][INFO ][logstash.outputs.elasticsearch] Installing elasticsearch template to _template/logstash

[2022-03-12T09:54:50,032][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/local/logstash-6.6.0/data/plugins/inputs/file/.sincedb_d2343edad78a7252d2ea9cba15bbff6d", :path=>["/usr/local/nginx/logs/access.log"]}

[2022-03-12T09:54:50,070][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#

[2022-03-12T09:54:50,137][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2022-03-12T09:54:50,185][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2022-03-12T09:54:52,502][WARN ][logstash.runner ] SIGHUP received.

[root@server11 ~]# tail -f /usr/local/nginx/logs/access.log

192.168.10.1 - jaking [12/Mar/2022:09:55:54 -0500] "POST /api/console/proxy?path=_aliases&method=GET HTTP/1.1" 200 214 "http://192.168.10.11/app/kibana" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36"

[root@server12 ~]# tail -f /usr/local/nginx/logs/access.log

192.168.10.1 - - [12/Mar/2022:10:24:05 -0500] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36"

192.168.10.1 - - [12/Mar/2022:10:24:05 -0500] "GET /favicon.ico HTTP/1.1" 404 571 "http://192.168.10.12/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36"

192.168.10.1 - - [12/Mar/2022:10:25:23 -0500] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36"

192.168.10.1 - - [12/Mar/2022:10:25:23 -0500] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36"

Prerequisites for Logstash log collection

1. The log file must be receiving new entries.

2. Logstash must be able to communicate with Elasticsearch.
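Both prerequisites can be checked from the Logstash host with curl. The following is a rough sketch: the function names and the `ES_URL` / `NGINX_URL` variables are assumptions for illustration, with defaults taken from the lab environment described earlier.

```shell
#!/usr/bin/env bash
# Assumed defaults based on the lab environment; override as needed.
ES_URL="${ES_URL:-http://192.168.10.11:9200}"
NGINX_URL="${NGINX_URL:-http://192.168.10.12/}"

# Succeeds only if the URL answers with a non-error HTTP status
# within 3 seconds (-f makes curl fail on HTTP 4xx/5xx).
es_reachable() {
    curl -fsS --max-time 3 -o /dev/null "${1:-$ES_URL}"
}

# Sends N requests to Nginx so that access.log receives new lines
# for Logstash to pick up.
generate_traffic() {
    local count="${1:-5}" i
    for i in $(seq 1 "$count"); do
        curl -s --max-time 3 -o /dev/null "${2:-$NGINX_URL}"
    done
}

# Usage:
#   es_reachable && echo "ES reachable"
#   generate_traffic 5
```

If `es_reachable` fails, check the ES bind address and any firewall between the two hosts before looking at Logstash itself.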

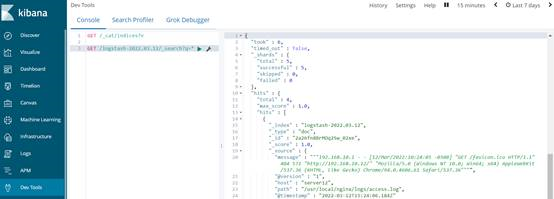

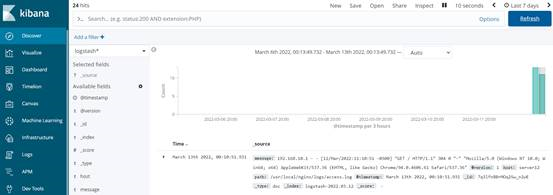

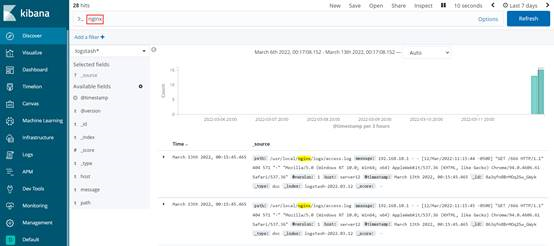

Kibana上查询数据

8. GET /logstash- 2022.03.12/_search?q=

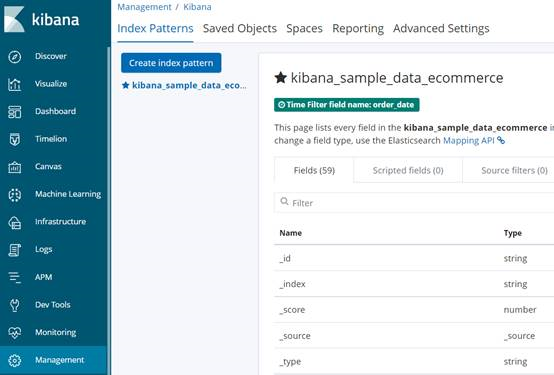

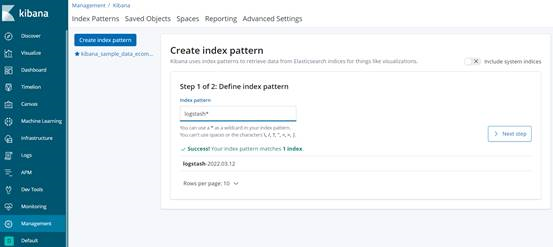

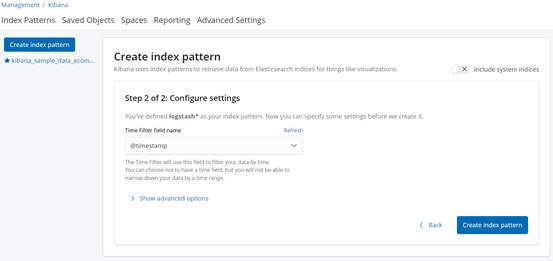

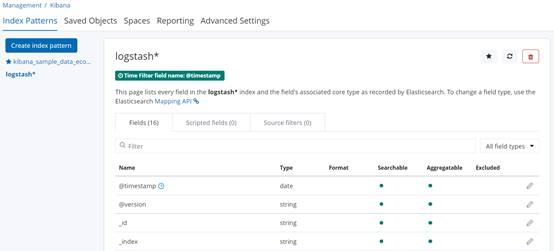

9. Kibana上创建索引直接查看日志

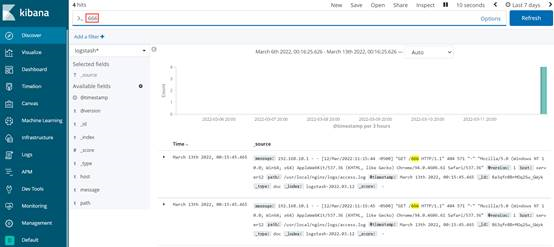

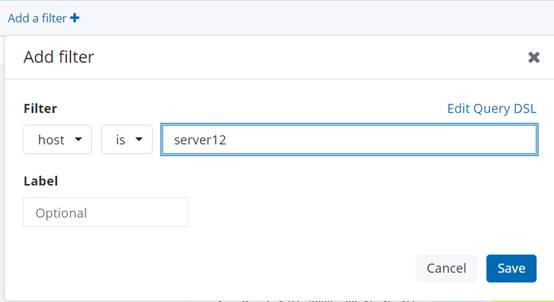

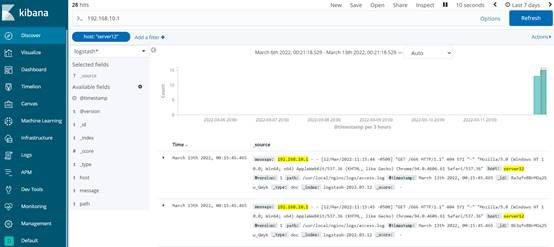

Simple queries in Kibana

1. Query by field: message: "nginx"

2. Query by field: select a field value in the results to filter on it
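The same searches can be run outside Kibana against the ES HTTP API. The sketch below assumes the default index naming of the Logstash elasticsearch output (logstash-YYYY.MM.dd, derived from the UTC @timestamp) and GNU date; the function names and the `ES_URL` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Assumed ES address from the lab environment.
ES_URL="${ES_URL:-http://192.168.10.11:9200}"

# Builds the daily index name for a date (GNU date syntax).
# -u because the index date follows the event's UTC @timestamp.
logstash_index() {
    date -u -d "${1:-now}" +logstash-%Y.%m.%d
}

# Runs a query-string search against one index and pretty-prints the JSON.
search_logs() {
    local index="$1" query="$2"
    curl -sS -G "$ES_URL/$index/_search" \
        --data-urlencode "q=$query" \
        --data-urlencode "pretty=true"
}

# Usage:
#   search_logs "$(logstash_index 2022-03-12)" 'message:"nginx"'
```

Because the index date is in UTC, an event logged late in the evening at -0500 lands in the next day's index.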

The ELK workflow

Logstash reads the logs -> ES stores the data -> Kibana displays it