Deployed services

1. 192.168.10.11: Kibana, ES

2. 192.168.10.12: Logstash, Filebeat, Redis

[root@server12 ~]# netstat -pantul | grep redis

tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 18312/redis-server

[root@server12 ~]# vim /usr/local/redis/conf/redis.conf

# Change "bind 127.0.0.1" to "bind 0.0.0.0"

bind 0.0.0.0

[root@server12 ~]# ps aux | grep redis

root 18312 0.1 0.2 145256 7524 ? Ssl 23:29 0:00 /usr/local/redis/bin/redis-server 127.0.0.1:6379

root 18323 0.0 0.0 112652 956 pts/0 R+ 23:40 0:00 grep --color=auto redis

[root@server12 ~]# kill 18312

[root@server12 ~]# ps aux | grep redis

root 18325 0.0 0.0 112652 956 pts/0 R+ 23:40 0:00 grep --color=auto redis

[root@server12 ~]# /usr/local/redis/bin/redis-server /usr/local/redis/conf/redis.conf

18326:C 14 Mar 23:40:47.295 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

18326:C 14 Mar 23:40:47.295 # Redis version=4.0.9, bits=64, commit=00000000, modified=0, pid=18326, just started

18326:C 14 Mar 23:40:47.295 # Configuration loaded

[root@server12 ~]# ps aux | grep redis

root 18327 0.0 0.2 145256 7592 ? Rsl 23:40 0:00 /usr/local/redis/bin/redis-server 0.0.0.0:6379

root 18332 0.0 0.0 112652 956 pts/0 R+ 23:40 0:00 grep --color=auto redis

[root@server12 ~]# netstat -pantul | grep 6379

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 18327/redis-server
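After the `bind` change and restart, netstat shows Redis listening on 0.0.0.0:6379. The usual way to confirm it is reachable from another host (for example server11) is `redis-cli -h 192.168.10.12 -a jaking ping`. Where redis-cli is not installed, the sketch below speaks the Redis wire protocol (RESP) directly over TCP. Assumptions: `jaking` is the `requirepass` value (the transcript only shows that `auth jaking` succeeds), and each short reply arrives in a single `recv` call, which is acceptable for a quick check but not for a real client. The function names are hypothetical.

```python
import socket
from typing import Optional


def encode_resp_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings, the format redis-cli sends."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


def remote_ping(host: str, port: int = 6379, password: Optional[str] = None,
                timeout: float = 3.0) -> bytes:
    """Optionally AUTH, then PING; return the raw reply to PING.

    Simplification: assumes each small reply fits in one recv() call.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        if password is not None:
            sock.sendall(encode_resp_command("AUTH", password))
            auth_reply = sock.recv(1024)
            if not auth_reply.startswith(b"+OK"):
                raise RuntimeError(f"AUTH failed: {auth_reply!r}")
        sock.sendall(encode_resp_command("PING"))
        return sock.recv(1024)


# Run from another host (needs network access to 192.168.10.12):
# print(remote_ping("192.168.10.12", password="jaking"))
```

If the server is reachable and the password matches, `remote_ping` should return `b"+PONG\r\n"`; a refused connection or timeout points at the bind setting, a firewall, or a Redis process that was not restarted.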

Configure Filebeat to write to Redis, adding Redis as a buffer after Filebeat

[root@server12 ~]# cat /usr/local/filebeat-6.6.0/filebeat.yml

filebeat.inputs:
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.json.log
  fields:
    type: access
  fields_under_root: true
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /var/log/secure
  fields:
    type: secure
  fields_under_root: true
output:
  logstash:
    hosts: ["192.168.10.12:5044"]

[root@server12 ~]# vim /usr/local/filebeat-6.6.0/filebeat.yml

filebeat.inputs:
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.json.log
  fields:
    type: access
  fields_under_root: true
output:
  redis:
    hosts: ["192.168.10.12"]
    port: 6379
    password: 'jaking'
    key: 'access'
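The Logstash filter configured later tests `[type] == "access"`. That works only because of `fields_under_root: true`: Filebeat then stores the custom `fields` as top-level keys of each event instead of nesting them under a `fields` object, and per the Filebeat docs a custom field overwrites a Filebeat field with the same name. Without the option, the Logstash condition would need `[fields][type]`. The sketch below models only that rule; `build_event` is a hypothetical helper, not Filebeat code, and real events carry many more fields.

```python
from typing import Any, Dict


def build_event(message: str, fields: Dict[str, Any], fields_under_root: bool) -> Dict[str, Any]:
    """Toy model of how Filebeat attaches custom `fields` to an event."""
    event: Dict[str, Any] = {"message": message}
    if fields_under_root:
        # Custom fields become top-level keys; on a name clash they overwrite.
        event.update(fields)
    else:
        # Default behaviour: custom fields are grouped under "fields".
        event["fields"] = dict(fields)
    return event


print(build_event("GET /index.html", {"type": "access"}, fields_under_root=True))
print(build_event("GET /index.html", {"type": "access"}, fields_under_root=False))
```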

[root@server12 ~]# ps aux | grep filebeat

root 11917 0.0 0.5 433804 17044 pts/0 Sl 22:10 0:00 /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml

root 18341 0.0 0.0 112652 960 pts/0 R+ 23:45 0:00 grep --color=auto filebeat

[root@server12 ~]# kill -9 11917

[root@server12 ~]# ps aux | grep filebeat

root 18343 0.0 0.0 112652 956 pts/0 R+ 23:45 0:00 grep --color=auto filebeat

[1]+ Killed nohup /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml >> /tmp/filebeat.log

[root@server12 ~]# ps aux | grep filebeat

root 18345 0.0 0.0 112652 960 pts/0 R+ 23:45 0:00 grep --color=auto filebeat

[root@server12 ~]# nohup /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml >> /tmp/filebeat.log &

[1] 18346

[root@server12 ~]# nohup: ignoring input and redirecting stderr to stdout

[root@server12 ~]# ps aux | grep filebeat

root 18346 0.6 0.5 289884 16420 pts/0 Sl 23:45 0:00 /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml

root 18354 0.0 0.0 112652 960 pts/0 R+ 23:45 0:00 grep --color=auto filebeat

[root@server12 ~]# tail -f /tmp/filebeat.log

2022-03-14T23:45:45.724+0800 INFO instance/beat.go:403 filebeat start running.

2022-03-14T23:45:45.724+0800 INFO registrar/registrar.go:134 Loading registrar data from /usr/local/filebeat-6.6.0/data/registry

2022-03-14T23:45:45.724+0800 INFO registrar/registrar.go:141 States Loaded from registrar: 3

2022-03-14T23:45:45.724+0800 WARN beater/filebeat.go:367 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2022-03-14T23:45:45.724+0800 INFO crawler/crawler.go:72 Loading Inputs: 1

2022-03-14T23:45:45.725+0800 INFO [monitoring] log/log.go:117 Starting metrics logging every 30s

2022-03-14T23:45:45.726+0800 INFO log/input.go:138 Configured paths: [/usr/local/nginx/logs/access.json.log]

2022-03-14T23:45:45.726+0800 INFO input/input.go:114 Starting input of type: log; ID: 4627602243620244963

2022-03-14T23:45:45.726+0800 INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

2022-03-14T23:46:15.727+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":10,"time":{"ms":11}},"total":{"ticks":20,"time":{"ms":23},"value":20},"user":{"ticks":10,"time":{"ms":12}}},"handles":{"limit":{"hard":4096,"soft":1024},"open":6},"info":{"ephemeral_id":"69f15581-fdf1-46fa-83a8-fc6b0ee285b1","uptime":{"ms":30016}},"memstats":{"gc_next":4200816,"memory_alloc":2400088,"memory_total":4416784,"rss":17068032}},"filebeat":{"events":{"added":1,"done":1},"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0}},"output":{"type":"redis"},"pipeline":{"clients":1,"events":{"active":0,"filtered":1,"total":1}}},"registrar":{"states":{"current":3,"update":1},"writes":{"success":1,"total":1}},"system":{"cpu":{"cores":1},"load":{"1":0.01,"15":0.12,"5":0.08,"norm":{"1":0.01,"15":0.12,"5":0.08}}}}}}

[root@server12 ~]# curl 127.0.0.1

<!DOCTYPE html>

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.


Commercial support is available at

nginx.com.

Thank you for using nginx.

[root@server12 ~]# /usr/local/redis/bin/redis-cli

127.0.0.1:6379> auth jaking

OK

127.0.0.1:6379> info

# Server

redis_version:4.0.9

redis_git_sha1:00000000

redis_git_dirty:0

redis_build_id:3455642c9da3b028

redis_mode:standalone

os:Linux 3.10.0-514.el7.x86_64 x86_64

arch_bits:64

multiplexing_api:epoll

atomicvar_api:atomic-builtin

gcc_version:4.8.5

process_id:18327

run_id:de00f8c60cd3aefb9570a0f1d44a217f33a98df5

tcp_port:6379

uptime_in_seconds:465

uptime_in_days:0

hz:10

lru_clock:3105744

executable:/usr/local/redis/bin/redis-server

config_file:/usr/local/redis/conf/redis.conf

# Clients

connected_clients:2

client_longest_output_list:0

client_biggest_input_buf:0

blocked_clients:0

# Memory

used_memory:871232

used_memory_human:850.81K

used_memory_rss:7774208

used_memory_rss_human:7.41M

used_memory_peak:871232

used_memory_peak_human:850.81K

used_memory_peak_perc:100.12%

used_memory_overhead:853232

used_memory_startup:786600

used_memory_dataset:18000

used_memory_dataset_perc:21.27%

total_system_memory:2985820160

total_system_memory_human:2.78G

used_memory_lua:37888

used_memory_lua_human:37.00K

maxmemory:0

maxmemory_human:0B

maxmemory_policy:noeviction

mem_fragmentation_ratio:8.92

mem_allocator:jemalloc-4.0.3

active_defrag_running:0

lazyfree_pending_objects:0

# Persistence

loading:0

rdb_changes_since_last_save:1

rdb_bgsave_in_progress:0

rdb_last_save_time:1647272447

rdb_last_bgsave_status:ok

rdb_last_bgsave_time_sec:-1

rdb_current_bgsave_time_sec:-1

rdb_last_cow_size:0

aof_enabled:0

aof_rewrite_in_progress:0

aof_rewrite_scheduled:0

aof_last_rewrite_time_sec:-1

aof_current_rewrite_time_sec:-1

aof_last_bgrewrite_status:ok

aof_last_write_status:ok

aof_last_cow_size:0

# Stats

total_connections_received:2

total_commands_processed:5

instantaneous_ops_per_sec:0

total_net_input_bytes:743

total_net_output_bytes:2846

instantaneous_input_kbps:0.00

instantaneous_output_kbps:0.00

rejected_connections:0

sync_full:0

sync_partial_ok:0

sync_partial_err:0

expired_keys:0

expired_stale_perc:0.00

expired_time_cap_reached_count:0

evicted_keys:0

keyspace_hits:0

keyspace_misses:0

pubsub_channels:0

pubsub_patterns:0

latest_fork_usec:0

migrate_cached_sockets:0

slave_expires_tracked_keys:0

active_defrag_hits:0

active_defrag_misses:0

active_defrag_key_hits:0

active_defrag_key_misses:0

# Replication

role:master

connected_slaves:0

master_replid:4f35949561f6716a4ee2196499885401a53bff9c

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:0

second_repl_offset:-1

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

# CPU

used_cpu_sys:0.28

used_cpu_user:0.19

used_cpu_sys_children:0.00

used_cpu_user_children:0.00

# Cluster

cluster_enabled:0

# Keyspace

db0:keys=2,expires=0,avg_ttl=0

127.0.0.1:6379>

127.0.0.1:6379> keys *

1) "name"

2) "access"

127.0.0.1:6379> type access

list

127.0.0.1:6379> type name

string

127.0.0.1:6379> LRANGE access 0 -1

1) "{\"@timestamp\":\"2022-03-14T15:47:25.733Z\",\"@metadata\":{\"beat\":\"filebeat\",\"type\":\"doc\",\"version\":\"6.6.0\"},\"offset\":99410,\"source\":\"/usr/local/nginx/logs/access.json.log\",\"input\":{\"type\":\"log\"},\"beat\":{\"name\":\"server12\",\"hostname\":\"server12\",\"version\":\"6.6.0\"},\"host\":{\"name\":\"server12\"},\"log\":{\"file\":{\"path\":\"/usr/local/nginx/logs/access.json.log\"}},\"message\":\"{\\\"@timestamp\\\":\\\"2022-03-14T23:47:19+08:00\\\",\\\"clientip\\\":\\\"127.0.0.1\\\",\\\"status\\\":200,\\\"bodysize\\\":612,\\\"referer\\\":\\\"-\\\",\\\"ua\\\":\\\"curl/7.29.0\\\",\\\"handletime\\\":0.000,\\\"url\\\":\\\"/index.html\\\"}\",\"prospector\":{\"type\":\"log\"},\"type\":\"access\"}"

127.0.0.1:6379>

127.0.0.1:6379> llen access

(integer) 1
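The list element is JSON inside JSON: the outer object is the Filebeat event, and its `message` field holds the nginx access-log line, which is itself a JSON string. Decoding it therefore takes two passes, which is what the Logstash `json` filter later does with `source => "message"`. The sketch below uses a simplified copy of the element above (several Filebeat metadata fields omitted); the names are illustrative only.

```python
import json
from typing import Any, Dict, Tuple

# The nginx log line, copied from the LRANGE output above.
NGINX_LINE = ('{"@timestamp":"2022-03-14T23:47:19+08:00","clientip":"127.0.0.1","status":200,'
              '"bodysize":612,"referer":"-","ua":"curl/7.29.0","handletime":0.000,"url":"/index.html"}')

# Simplified Filebeat event as stored in the Redis list (metadata fields trimmed).
STORED_ENTRY = json.dumps({
    "@timestamp": "2022-03-14T15:47:25.733Z",
    "source": "/usr/local/nginx/logs/access.json.log",
    "message": NGINX_LINE,
    "type": "access",
})


def decode_entry(entry: str) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (filebeat_event, parsed_access_log) for one list element."""
    event = json.loads(entry)               # first layer: the Filebeat event
    access = json.loads(event["message"])   # second layer: the nginx JSON line
    return event, access


event, access = decode_entry(STORED_ENTRY)
print(event["type"], access["clientip"], access["status"], access["url"])
# prints: access 127.0.0.1 200 /index.html
```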

Configure Logstash to read data from Redis

[root@server12 ~]# vim /usr/local/logstash-6.6.0/config/logstash.conf

input {
  redis {
    host => '192.168.10.12'
    port => 6379
    key => "access"
    data_type => "list"
    password => 'jaking'
  }
}
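Before Logstash starts, `llen access` returns 1: the event is waiting in the list. According to the plugin docs, Filebeat's redis output appends each event with RPUSH when the data type is `list` (its default), and the Logstash redis input with `data_type => "list"` takes events from the head of the same key (documented as BLPOP). Together they form a FIFO queue in which each event is consumed once, so once Logstash is running one would expect `llen access` to fall back to 0. The toy class below models only that queue behaviour; `ToyListKey` is a hypothetical name and not a Redis client.

```python
from collections import deque
from typing import Deque, Optional


class ToyListKey:
    """In-memory stand-in for one Redis list key such as 'access'."""

    def __init__(self) -> None:
        self._items: Deque[str] = deque()

    def rpush(self, *values: str) -> int:
        """Append at the tail, like RPUSH; returns the new length as Redis does."""
        self._items.extend(values)
        return len(self._items)

    def lpop(self) -> Optional[str]:
        """Remove from the head, like LPOP (non-blocking here); None when empty."""
        return self._items.popleft() if self._items else None

    def llen(self) -> int:
        return len(self._items)


queue = ToyListKey()
queue.rpush("event-1", "event-2", "event-3")  # Filebeat side: events queue up
first = queue.lpop()                          # Logstash side: oldest event first
while queue.lpop() is not None:               # Logstash drains the backlog
    pass
print(first, queue.llen())  # prints: event-1 0
```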

[root@server12 ~]# cat /usr/local/logstash-6.6.0/config/logstash.conf

#input {
#  beats {
#    host => '0.0.0.0'
#    port => 5044
#  }
#}

input {
  redis {
    host => '192.168.10.12'
    port => 6379
    key => "access"
    data_type => "list"
    password => 'jaking'
  }
}

filter {
  if [type] == "access" {
    json {
      source => "message"
      remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
    }
  }
}

output {
  if [type] == "access" {
    elasticsearch {
      hosts => ["http://192.168.10.11:9200"]
      index => "access-%{+YYYY.MM.dd}"
    }
  }
  else if [type] == "secure" {
    elasticsearch {
      hosts => ["http://192.168.10.11:9200"]
      index => "secure-%{+YYYY.MM.dd}"
    }
  }
}
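The filter and output sections can be read as: for `access` events, parse the JSON string in `message` into top-level fields (the `json` filter's default when no `target` is set), drop the listed fields, then write to an index named after the event's date. Two details are worth knowing. First, `%{+YYYY.MM.dd}` formats `@timestamp` in UTC, so with the +08:00 timestamps here an event logged before 08:00 local time lands in the previous day's index. Second, the `json` filter treats a parsed `@timestamp` specially and uses it as the event timestamp. The Python below is a simplified model of that behaviour, not Logstash's implementation: the helper names are hypothetical, and it keeps `@timestamp` as an ISO string, converting to UTC only when naming the index. Note also that the new filebeat.yml ships only the access input, so the `secure` branch receives nothing in this setup.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Optional

# Fields listed in remove_field in logstash.conf.
REMOVE_FIELDS = ["message", "@version", "path", "beat", "input", "log",
                 "offset", "prospector", "source", "tags"]


def apply_access_filter(event: Dict[str, Any]) -> Dict[str, Any]:
    """Model of: if [type] == "access" { json { source => "message" remove_field => [...] } }"""
    out = dict(event)
    if out.get("type") != "access":
        return out                           # other types pass through untouched
    out.update(json.loads(out["message"]))   # no target: parsed keys go to the top level
    for field in REMOVE_FIELDS:              # remove_field applies only after a successful parse
        out.pop(field, None)
    return out


def index_name(prefix: str, timestamp: str) -> str:
    """Model of "<prefix>-%{+YYYY.MM.dd}": the date comes from @timestamp in UTC."""
    ts = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


def route(event: Dict[str, Any]) -> Optional[str]:
    """Model of the output conditionals; None means no output matches."""
    if event.get("type") in ("access", "secure"):
        return index_name(event["type"], event["@timestamp"])
    return None


NGINX_LINE = ('{"@timestamp":"2022-03-14T23:47:19+08:00","clientip":"127.0.0.1","status":200,'
              '"bodysize":612,"referer":"-","ua":"curl/7.29.0","handletime":0.000,"url":"/index.html"}')
sample = {"@timestamp": "2022-03-14T15:47:25.733Z", "type": "access", "offset": 99410,
          "source": "/usr/local/nginx/logs/access.json.log", "message": NGINX_LINE}

parsed = apply_access_filter(sample)
print(route(parsed))  # prints: access-2022.03.14
```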

[root@server12 ~]# /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf

[2022-03-14T11:54:38,443][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://192.168.10.11:9200"]}

[2022-03-14T11:54:38,443][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2022-03-14T11:54:38,465][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2022-03-14T11:54:38,550][INFO ][logstash.inputs.redis ] Registering Redis {:identity=>"redis://

[2022-03-14T11:54:38,594][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#

[2022-03-14T11:54:38,918][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2022-03-14T11:54:39,991][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

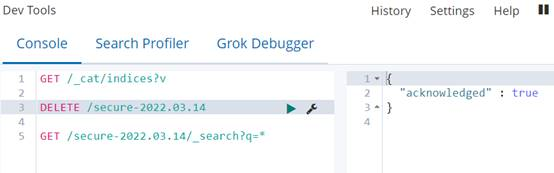

DELETE /access-2022.03.14

DELETE /secure-2022.03.14
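The two DELETE lines are Elasticsearch REST calls written in Kibana Dev Tools console syntax (an assumption based on their format; the surrounding screenshots are not reproduced in text). They remove the existing access and secure indices, presumably so that the Redis-based pipeline recreates them from scratch. From a shell the equivalent is `curl -X DELETE http://192.168.10.11:9200/access-2022.03.14`. For completeness, the sketch below builds the same requests with Python's standard library; `delete_index_request` is a hypothetical helper, and it only builds the requests, leaving the network call commented out.

```python
import urllib.request

ES_URL = "http://192.168.10.11:9200"  # Elasticsearch node from the service list at the top


def delete_index_request(index: str, base_url: str = ES_URL) -> urllib.request.Request:
    """Build (but do not send) the HTTP request equivalent to "DELETE /<index>"."""
    return urllib.request.Request(f"{base_url}/{index}", method="DELETE")


requests_to_send = [delete_index_request(name)
                    for name in ("access-2022.03.14", "secure-2022.03.14")]

# Sending requires network access to Elasticsearch, e.g.:
# for req in requests_to_send:
#     with urllib.request.urlopen(req) as resp:
#         print(req.full_url, resp.status, resp.read().decode())
```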

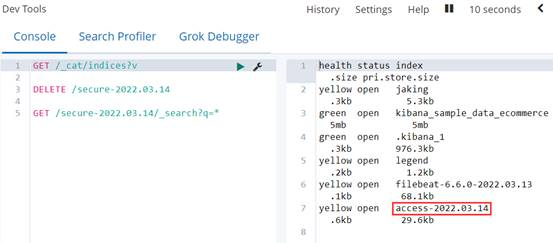

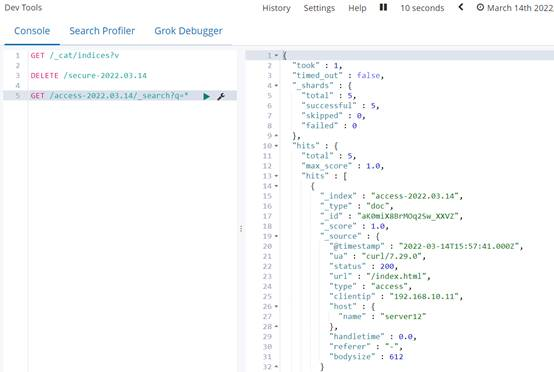

[root@server11 ~]# curl 192.168.10.12 # request the page several times

Architecture optimization

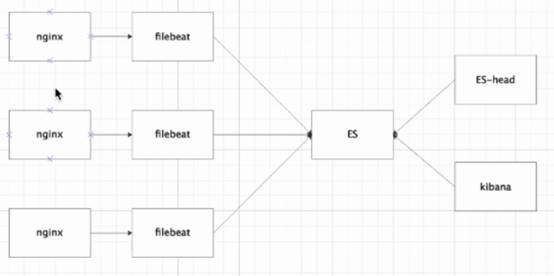

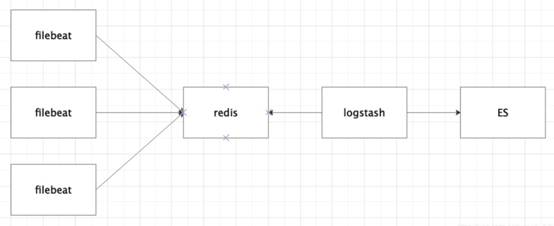

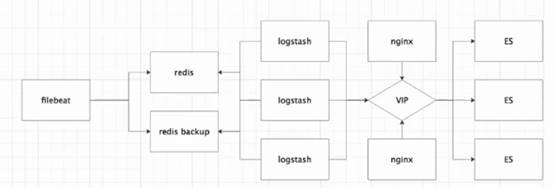

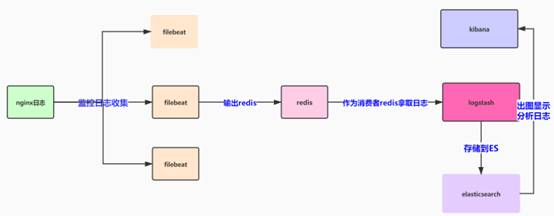

Filebeat (multiple hosts) -> Logstash

Filebeat (multiple hosts) -> Redis / Kafka -> Logstash (regex parsing) -> Elasticsearch (storage) -> Kibana (visualization)
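The point of putting Redis or Kafka between many Filebeat shippers and Logstash is that a burst of log events piles up in the buffer instead of overwhelming Logstash, and the backlog drains once traffic drops. The toy model below illustrates only that idea. The arrival counts and consumption rate are made-up numbers, not measurements, and `simulate_backlog` is a hypothetical helper.

```python
from typing import List


def simulate_backlog(arrivals_per_tick: List[int], consume_per_tick: int) -> List[int]:
    """Queue length after each tick, given events arriving from all Filebeat
    hosts and a fixed Logstash consumption rate (hypothetical numbers)."""
    backlog = 0
    history = []
    for arrived in arrivals_per_tick:
        backlog += arrived                          # shippers push into the buffer
        backlog -= min(consume_per_tick, backlog)   # Logstash drains what it can
        history.append(backlog)
    return history


print(simulate_backlog([10, 10, 0, 0, 0], consume_per_tick=5))  # prints: [5, 10, 5, 0, 0]
```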