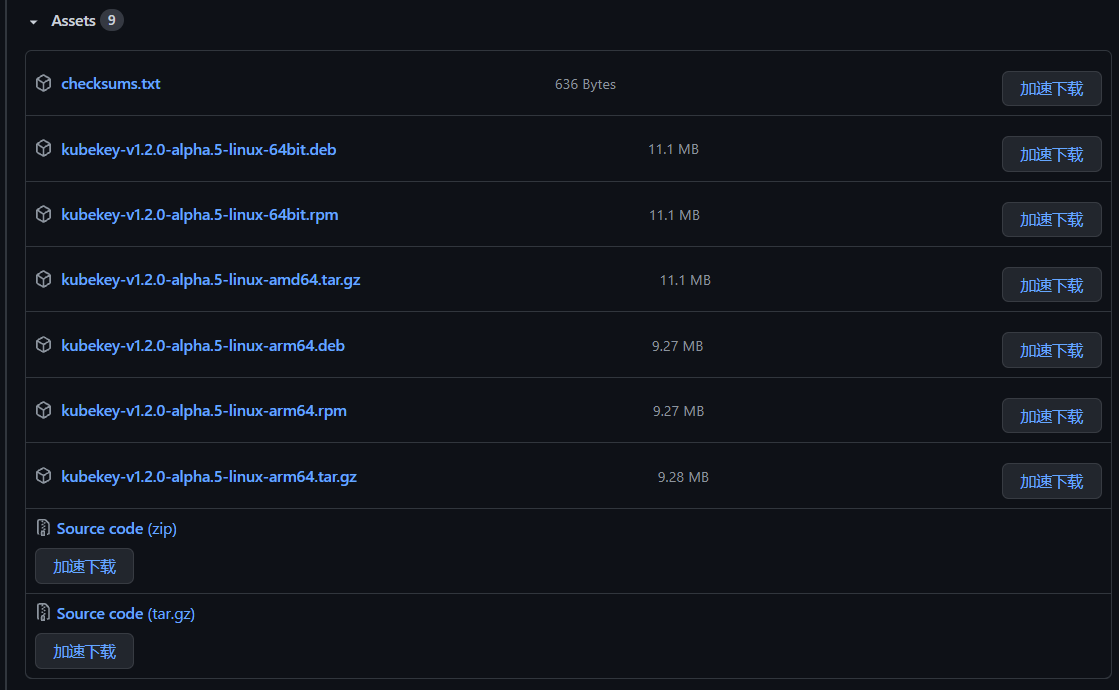

Download the kk RPM package

https://github.com/kubesphere/kubekey/releases/tag/v1.2.0-alpha.5

    [root@master ~]# rpm -ivh kubekey-v2.1.1-linux-64bit.rpm
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:kubekey-0:2.1.1-1                ################################# [100%]
    [root@master ~]# kk version
    version.BuildInfo{Version:"2.1.1", GitCommit:"b19724c7", GitTreeState:"", GoVersion:"go1.17.10"}
    [root@master ~]#
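
To confirm that the installed binary matches the release you downloaded, the version string can be pulled out of the `kk version` output. This is a minimal sketch: `parse_kk_version` is a hypothetical helper name, and the sample line is copied from the session above rather than read from a live `kk` binary.

```shell
#!/bin/sh
# parse_kk_version: read `kk version` output on stdin and print only the
# KubeKey version. The pattern is anchored at the start of the line so it
# does not match the later GoVersion:"..." field by mistake.
parse_kk_version() {
  sed -n 's/^version\.BuildInfo{Version:"\([^"]*\)".*/\1/p'
}

expected="2.1.1"   # assumption: the version of the RPM installed above

# Sample output from the session; on a real host use:
#   installed="$(kk version | parse_kk_version)"
sample='version.BuildInfo{Version:"2.1.1", GitCommit:"b19724c7", GitTreeState:"", GoVersion:"go1.17.10"}'
installed="$(printf '%s\n' "$sample" | parse_kk_version)"

if [ "$installed" = "$expected" ]; then
  echo "kk $installed matches the expected version"
else
  echo "kk reports '$installed', expected $expected"
fi
```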

Set the environment variable

export KKZONE=cn

    [root@riyimei home]# kk upgrade --with-kubesphere v3.2.0-alpha.0
    +---------+------+------+---------+----------+-------+-------+-----------+---------+------------+-------------+------------------+--------------+
    | name    | sudo | curl | openssl | ebtables | socat | ipset | conntrack | docker  | nfs client | ceph client | glusterfs client | time         |
    +---------+------+------+---------+----------+-------+-------+-----------+---------+------------+-------------+------------------+--------------+
    | riyimei | y    | y    | y       | y        |       | y     | y         | 19.03.7 |            |             |                  | CST 10:53:32 |
    +---------+------+------+---------+----------+-------+-------+-----------+---------+------------+-------------+------------------+--------------+
    Warning:
    An old Docker version may cause the failure of upgrade. It is recommended that you upgrade Docker to 20.10+ beforehand.
    Issue: https://github.com/kubernetes/kubernetes/issues/101056
    Cluster nodes status:
    NAME      STATUS   ROLES                         AGE    VERSION
    riyimei   Ready    control-plane,master,worker   102d   v1.20.4
    Upgrade Confirmation:
    kubernetes version: v1.20.4 to v1.21.5
    kubesphere version: v3.1.0 to v3.2.0-alpha.0
    Continue upgrading cluster? [yes/no]: yes
    INFO[10:53:35 CST] Get current version
    INFO[10:53:35 CST] Configuring operating system ...
    [riyimei 192.168.11.190] MSG:
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-arptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_local_reserved_ports = 30000-32767
    vm.max_map_count = 262144
    vm.swappiness = 1
    fs.inotify.max_user_instances = 524288
    INFO[10:53:40 CST] Upgrading kube cluster
    INFO[10:53:40 CST] Start Upgrade: v1.20.4 -> v1.21.5
    INFO[10:53:40 CST] Downloading Installation Files
    INFO[10:53:40 CST] Downloading kubeadm ...
    INFO[10:53:40 CST] Downloading kubelet ...
    INFO[10:53:41 CST] Downloading kubectl ...
    INFO[10:53:41 CST] Downloading helm ...
    INFO[10:53:42 CST] Downloading kubecni ...
    INFO[10:54:22 CST] Downloading etcd ...
    INFO[10:54:40 CST] Downloading docker ...
    INFO[10:55:43 CST] Downloading crictl ...
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.21.5
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.21.5
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.21.5
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.21.5
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.20.0
    [riyimei] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0
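
The pre-check warning above recommends Docker 20.10+ before upgrading. A minimal sketch of that comparison follows, assuming GNU `sort -V` is available. `version_ge` is a hypothetical helper name, and the hard-coded `current` value is the Docker version shown in the pre-check table, not a value read from a live daemon.

```shell
#!/bin/sh
# version_ge A B: succeed when version A is greater than or equal to B.
# Relies on `sort -V` (version sort) from GNU coreutils: if B sorts first
# (or the two are equal), then A >= B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="20.10.0"

# Value from the pre-check table; on a real host use:
#   current="$(docker version --format '{{.Server.Version}}')"
current="19.03.7"

if version_ge "$current" "$required"; then
  echo "Docker $current is new enough for the upgrade"
else
  echo "Docker $current is older than $required; upgrade Docker first"
fi
```

With the value from the table, the script takes the second branch, which matches the warning KubeKey printed.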

    # Add the completion script to your ~/.bashrc file
    echo 'source <(kubectl completion bash)' >>~/.bashrc
    # Add the completion script to the /etc/bash_completion.d directory
    kubectl completion bash >/etc/bash_completion.d/kubectl
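
Running the `echo ... >>~/.bashrc` command more than once appends the same line each time. One way to avoid duplicates is to append only when the line is missing. This is a sketch: `add_line_once` is a hypothetical helper, and the demo writes to a temporary file instead of the real `~/.bashrc`.

```shell
#!/bin/sh
# add_line_once LINE FILE: append LINE to FILE only if FILE does not
# already contain that exact line (grep -x: whole line, -F: fixed string).
add_line_once() {
  touch "$2"
  grep -qxF -- "$1" "$2" || printf '%s\n' "$1" >> "$2"
}

# Demo against a temporary file; for real use pass "$HOME/.bashrc".
demo_rc="$(mktemp)"
add_line_once 'source <(kubectl completion bash)' "$demo_rc"
add_line_once 'source <(kubectl completion bash)' "$demo_rc"
echo "lines in demo file: $(wc -l < "$demo_rc")"
rm -f "$demo_rc"
```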

k3s

    [root@riyimei ~]# ./kk create cluster --with-kubernetes v1.21.6-k3s --with-kubesphere v3.3.0-alpha.1
     _   __      _          _   __
    | | / /     | |        | | / /
    | |/ / _   _| |__   ___| |/ /  ___ _   _
    |    \| | | | '_ \ / _ \    \ / _ \ | | |
    | |\  \ |_| | |_) |  __/ |\  \  __/ |_| |
    \_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |
                                        __/ |
                                       |___/
    11:52:23 CST [GreetingsModule] Greetings
    11:52:23 CST message: [riyimei]
    Greetings, KubeKey!
    11:52:23 CST success: [riyimei]
    11:52:23 CST [K3sNodeBinariesModule] Download installation binaries
    11:52:23 CST message: [localhost]
    downloading amd64 k3s v1.21.6 ...
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 46.8M  100 46.8M    0     0  1012k      0  0:00:47  0:00:47 --:--:-- 1052k
    11:53:11 CST message: [localhost]
    downloading amd64 helm v3.6.3 ...
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 43.0M  100 43.0M    0     0  1006k      0  0:00:43  0:00:43 --:--:-- 1042k
    11:53:55 CST message: [localhost]
    downloading amd64 kubecni v0.9.1 ...
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 37.9M  100 37.9M    0     0   956k      0  0:00:40  0:00:40 --:--:-- 1055k
    11:54:36 CST message: [localhost]
    downloading amd64 etcd v3.4.13 ...
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 16.5M  100 16.5M    0     0  1011k      0  0:00:16  0:00:16 --:--:-- 1058k
    11:54:53 CST success: [LocalHost]
    11:54:53 CST [ConfigureOSModule] Prepare to init OS
    11:54:54 CST success: [riyimei]
    11:54:54 CST [ConfigureOSModule] Generate init os script
    11:54:54 CST success: [riyimei]
    11:54:54 CST [ConfigureOSModule] Exec init os script
    11:54:55 CST stdout: [riyimei]
    setenforce: SELinux is disabled
    Disabled
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-arptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_local_reserved_ports = 30000-32767
    vm.max_map_count = 262144
    vm.swappiness = 1
    fs.inotify.max_user_instances = 524288
    kernel.pid_max = 65535
    11:54:55 CST success: [riyimei]
    11:54:55 CST [ConfigureOSModule] configure the ntp server for each node
    11:54:55 CST skipped: [riyimei]
    11:54:55 CST [StatusModule] Get k3s cluster status
    11:54:55 CST success: [riyimei]
    11:54:55 CST [ETCDPreCheckModule] Get etcd status
    11:54:55 CST success: [riyimei]
    11:54:55 CST [CertsModule] Fetch etcd certs
    11:54:55 CST success: [riyimei]
    11:54:55 CST [CertsModule] Generate etcd Certs
    [certs] Generating "ca" certificate and key
    [certs] admin-riyimei serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost riyimei] and IPs [127.0.0.1 ::1 192.168.11.190]
    [certs] member-riyimei serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost riyimei] and IPs [127.0.0.1 ::1 192.168.11.190]
    [certs] node-riyimei serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost riyimei] and IPs [127.0.0.1 ::1 192.168.11.190]
    11:54:56 CST success: [LocalHost]
    11:54:56 CST [CertsModule] Synchronize certs file
    11:54:57 CST success: [riyimei]
    11:54:57 CST [CertsModule] Synchronize certs file to master
    11:54:57 CST skipped: [riyimei]
    11:54:57 CST [InstallETCDBinaryModule] Install etcd using binary
    11:54:58 CST success: [riyimei]
    11:54:58 CST [InstallETCDBinaryModule] Generate etcd service
    11:54:58 CST success: [riyimei]
    11:54:58 CST [InstallETCDBinaryModule] Generate access address
    11:54:58 CST success: [riyimei]
    11:54:58 CST [ETCDConfigureModule] Health check on exist etcd
    11:54:59 CST skipped: [riyimei]
    11:54:59 CST [ETCDConfigureModule] Generate etcd.env config on new etcd
    11:54:59 CST success: [riyimei]
    11:54:59 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd
    11:54:59 CST success: [riyimei]
    11:54:59 CST [ETCDConfigureModule] Restart etcd
    11:55:04 CST stdout: [riyimei]
    Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.
    11:55:04 CST success: [riyimei]
    11:55:04 CST [ETCDConfigureModule] Health check on all etcd
    11:55:04 CST success: [riyimei]
    11:55:04 CST [ETCDConfigureModule] Refresh etcd.env config to exist mode on all etcd
    11:55:04 CST success: [riyimei]
    11:55:04 CST [ETCDConfigureModule] Health check on all etcd
    11:55:04 CST success: [riyimei]
    11:55:04 CST [ETCDBackupModule] Backup etcd data regularly
    11:55:11 CST success: [riyimei]
    11:55:11 CST [InstallKubeBinariesModule] Synchronize k3s binaries
    11:55:14 CST success: [riyimei]
    11:55:14 CST [InstallKubeBinariesModule] Generate k3s killall.sh script
    11:55:15 CST success: [riyimei]
    11:55:15 CST [InstallKubeBinariesModule] Generate k3s uninstall script
    11:55:15 CST success: [riyimei]
    11:55:15 CST [InstallKubeBinariesModule] Chmod +x k3s script
    11:55:15 CST success: [riyimei]
    11:55:15 CST [K3sInitClusterModule] Generate k3s Service
    11:55:15 CST success: [riyimei]
    11:55:15 CST [K3sInitClusterModule] Generate k3s service env
    11:55:15 CST success: [riyimei]
    11:55:15 CST [K3sInitClusterModule] Enable k3s service
    11:55:29 CST success: [riyimei]
    11:55:29 CST [K3sInitClusterModule] Copy k3s.yaml to ~/.kube/config
    11:55:30 CST success: [riyimei]
    11:55:30 CST [K3sInitClusterModule] Add master taint
    11:55:30 CST skipped: [riyimei]
    11:55:30 CST [K3sInitClusterModule] Add worker label
    11:55:30 CST success: [riyimei]
    11:55:30 CST [StatusModule] Get k3s cluster status
    11:55:30 CST stdout: [riyimei]
    v1.21.6+k3s1
    11:55:30 CST stdout: [riyimei]
    K10d23736c832e37ae9fbe915cc4a0c35a0156ea207ad58471a2ab39e9adb6cf049::server:6543932a784c55afa18201a11417f297
    11:55:31 CST stdout: [riyimei]
    riyimei v1.21.6+k3s1 [map[address:192.168.11.190 type:InternalIP] map[address:riyimei type:Hostname]]
    11:55:31 CST success: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Generate k3s Service
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Generate k3s service env
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Enable k3s service
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Copy k3s.yaml to ~/.kube/config
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Synchronize kube config to worker
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Add master taint
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [K3sJoinNodesModule] Add worker label
    11:55:31 CST skipped: [riyimei]
    11:55:31 CST [DeployNetworkPluginModule] Generate calico
    11:55:31 CST success: [riyimei]
    11:55:31 CST [DeployNetworkPluginModule] Deploy calico
    11:55:33 CST stdout: [riyimei]
    configmap/calico-config created
    customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
    clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrole.rbac.authorization.k8s.io/calico-node created
    clusterrolebinding.rbac.authorization.k8s.io/calico-node created
    daemonset.apps/calico-node created
    serviceaccount/calico-node created
    deployment.apps/calico-kube-controllers created
    serviceaccount/calico-kube-controllers created
    Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget
    poddisruptionbudget.policy/calico-kube-controllers created
    11:55:33 CST success: [riyimei]
    11:55:33 CST [ConfigureKubernetesModule] Configure kubernetes
    11:55:33 CST success: [riyimei]
    11:55:33 CST [ChownModule] Chown user $HOME/.kube dir
    11:55:33 CST success: [riyimei]
    11:55:33 CST [SaveKubeConfigModule] Save kube config as a configmap
    11:55:33 CST success: [LocalHost]
    11:55:33 CST [AddonsModule] Install addons
    11:55:33 CST success: [LocalHost]
    11:55:33 CST [DeployStorageClassModule] Generate OpenEBS manifest
    11:55:34 CST success: [riyimei]
    11:55:34 CST [DeployStorageClassModule] Deploy OpenEBS as cluster default StorageClass
    11:55:35 CST success: [riyimei]
    11:55:35 CST [DeployKubeSphereModule] Generate KubeSphere ks-installer crd manifests
    11:55:35 CST success: [riyimei]
    11:55:35 CST [DeployKubeSphereModule] Apply ks-installer
    11:55:36 CST stdout: [riyimei]
    namespace/kubesphere-system created
    serviceaccount/ks-installer created
    customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created
    clusterrole.rbac.authorization.k8s.io/ks-installer created
    clusterrolebinding.rbac.authorization.k8s.io/ks-installer created
    deployment.apps/ks-installer created
    11:55:36 CST success: [riyimei]
    11:55:36 CST [DeployKubeSphereModule] Add config to ks-installer manifests
    11:55:36 CST success: [riyimei]
    11:55:36 CST [DeployKubeSphereModule] Create the kubesphere namespace
    11:55:36 CST success: [riyimei]
    11:55:36 CST [DeployKubeSphereModule] Setup ks-installer config
    11:55:37 CST stdout: [riyimei]
    secret/kube-etcd-client-certs created
    11:55:37 CST success: [riyimei]
    11:55:37 CST [DeployKubeSphereModule] Apply ks-installer
    11:55:38 CST stdout: [riyimei]
    namespace/kubesphere-system unchanged
    serviceaccount/ks-installer unchanged
    customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged
    clusterrole.rbac.authorization.k8s.io/ks-installer unchanged
    clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged
    deployment.apps/ks-installer unchanged
    clusterconfiguration.installer.kubesphere.io/ks-installer created
    11:55:38 CST success: [riyimei]
    #####################################################
    ###              Welcome to KubeSphere!           ###
    #####################################################
    Console: http://192.168.11.190:30880
    Account: admin
    Password: P@88w0rd
    NOTES:
      1. After you log into the console, please check the
         monitoring status of service components in
         "Cluster Management". If any service is not
         ready, please wait patiently until all components
         are up and running.
      2. Please change the default password after login.
    #####################################################
    https://kubesphere.io             2022-04-23 12:04:21
    #####################################################
    12:04:25 CST success: [riyimei]
    12:04:25 CST Pipeline[K3sCreateClusterPipeline] execute successful
    Installation is complete.
    Please check the result using the command:
        kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
    [root@riyimei ~]#

    [root@riyimei ~]# kubectl get pod -A
    NAMESPACE                      NAME                                               READY   STATUS    RESTARTS   AGE
    kube-system                    calico-kube-controllers-75ddb95444-ftxhh           1/1     Running   0          9m48s
    kube-system                    calico-node-g6pjb                                  1/1     Running   0          9m48s
    kube-system                    coredns-7448499f4d-c8jg7                           1/1     Running   0          9m48s
    kube-system                    openebs-localpv-provisioner-6c9dcb5c54-64zjb       1/1     Running   0          9m48s
    kube-system                    snapshot-controller-0                              1/1     Running   0          7m40s
    kubesphere-controls-system     default-http-backend-5bf68ff9b8-swxb2              1/1     Running   0          6m25s
    kubesphere-controls-system     kubectl-admin-6667774bb-kn659                      1/1     Running   0          81s
    kubesphere-monitoring-system   alertmanager-main-0                                2/2     Running   0          3m13s
    kubesphere-monitoring-system   kube-state-metrics-7bdc7484cf-wxwz6                3/3     Running   0          3m25s
    kubesphere-monitoring-system   node-exporter-27gvk                                2/2     Running   0          3m27s
    kubesphere-monitoring-system   notification-manager-deployment-8689b68cdc-s6cw5   2/2     Running   0          117s
    kubesphere-monitoring-system   notification-manager-operator-75fcc656f7-77ttx     2/2     Running   0          2m34s
    kubesphere-monitoring-system   prometheus-k8s-0                                   2/2     Running   0          3m11s
    kubesphere-monitoring-system   prometheus-operator-8955bbd98-fptsf                2/2     Running   0          3m28s
    kubesphere-system              ks-apiserver-777b6db954-fdc4z                      1/1     Running   0          102s
    kubesphere-system              ks-console-5bd87ccd4d-ppj4d                        1/1     Running   0          6m25s
    kubesphere-system              ks-controller-manager-68d86b6db6-vxlb4             1/1     Running   0          101s
    kubesphere-system              ks-installer-754fc84489-4xbvd                      1/1     Running   0          9m48s
    [root@riyimei ~]#
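
Scanning a long pod list by eye is error-prone, so a small filter over the `kubectl get pod -A` output can print only the pods that still need attention. This is a sketch under a few assumptions: `list_unready` is a hypothetical helper, it relies on the default column order shown above, and the second sample row uses a made-up `0/1 Pending` state for illustration (in the actual listing every pod is Running).

```shell
#!/bin/sh
# list_unready: read `kubectl get pod -A` output on stdin and print
# "namespace/name" for pods that are not fully ready.
# Assumes the default columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
# Completed pods are skipped, since finished jobs legitimately show 0/1.
list_unready() {
  awk 'NR > 1 && $4 != "Completed" {
         split($3, r, "/")
         if ($4 != "Running" || r[1] != r[2]) { print $1 "/" $2 }
       }'
}

# Sample input; on a live cluster use: kubectl get pod -A | list_unready
list_unready <<'EOF'
NAMESPACE           NAME                            READY   STATUS    RESTARTS   AGE
kube-system         calico-node-g6pjb               1/1     Running   0          9m48s
kubesphere-system   ks-apiserver-777b6db954-fdc4z   0/1     Pending   0          102s
EOF
# prints: kubesphere-system/ks-apiserver-777b6db954-fdc4z
```

An empty result means every listed pod is either Running with all containers ready or Completed.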