- Background

- Prepare the base cluster environment

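- Prepare the container runtime (Docker)

- Install kubeadm, kubelet and kubectl

- Prepare the kubeadm initialization environment

- Pull the required images on each node

- Initialize the master node

- Change three settings

- Join the worker nodes to the cluster

- Install the flannel network plugin

- Regenerate the cluster token if it has expired and a new worker node needs to join

- Check network connectivity

- Deploy the dashboard

- Done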

Background

Server configuration

| Node | Private IP | Public IP | Spec |
|---|---|---|---|
| ren | 10.0.4.17 | 1.15.230.38 | 4 vCPU, 8 GB |
| yan | 10.0.4.15 | 101.34.64.205 | 4 vCPU, 8 GB |
| bai | 192.168.0.4 | 106.12.145.172 | 2 vCPU, 8 GB |

Software versions

| Software | Version |
|---|---|
| centos | 7.6 |
| docker | 20.10.7 |
| kubelet | 1.20.9 |
| kubeadm | 1.20.9 |
| kubectl | 1.20.9 |

Image versions

| Image | Version |
|---|---|
| k8s.gcr.io/kube-apiserver | 1.20.9 |
| k8s.gcr.io/kube-controller-manager | 1.20.9 |
| k8s.gcr.io/kube-scheduler | 1.20.9 |
| k8s.gcr.io/kube-proxy | 1.20.9 |
| k8s.gcr.io/pause | 3.2 |
| k8s.gcr.io/etcd | 3.4.13-0 |
| k8s.gcr.io/coredns | 1.7.0 |

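Prepare the base cluster environment

Run on: all nodes. Working directory: /root/

Configure hostnames and SSH access between the nodes; see

Load kernel modules automatically at boot

Temporary setting (lost after a reboot)

    modprobe br_netfilter
    sysctl -p /etc/sysctl.conf

Persistent setting

Check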

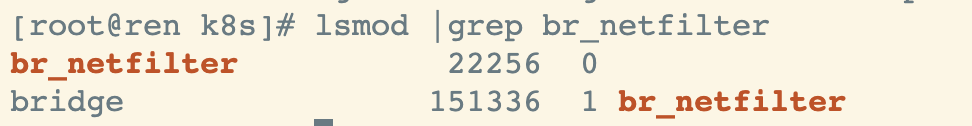

lsmod |grep br_netfilter

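Create rc.sysinit. Quote the EOF delimiter so that $file is written to the script literally; with an unquoted EOF the shell expands $file to an empty string while writing the file.

    cat > /etc/rc.sysinit <<'EOF'
    #!/bin/bash
    for file in /etc/sysconfig/modules/*.modules ; do
      [ -x $file ] && $file
    done
    EOF

Create br_netfilter.modules

    cat > /etc/sysconfig/modules/br_netfilter.modules <<EOF
    modprobe br_netfilter
    EOF

Make br_netfilter.modules executable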

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

After a reboot, check the loaded modules again

lsmod |grep br_netfilter

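Create the Kubernetes bridge configuration file

    cat > /root/k8s.conf <<EOF
    # pass bridged traffic through iptables/ip6tables
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    # enable IP forwarding
    net.ipv4.ip_forward = 1
    # disable IPv6
    net.ipv6.conf.all.disable_ipv6=1
    EOF

Copy the file into the system directory and apply it

    cp k8s.conf /etc/sysctl.d/k8s.conf
    sysctl -p /etc/sysctl.d/k8s.conf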
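To confirm that the settings took effect, read them back from /proc/sys. The sketch below does that. `check_k8s_sysctls` and the `PROC_SYS_ROOT` override are hypothetical names introduced here. The override exists only so the function can be tried against a fake directory tree.

```bash
#!/usr/bin/env bash
# Sketch: confirm that the kernel parameters from k8s.conf are active.
# check_k8s_sysctls and PROC_SYS_ROOT are illustrative names, not part of any tool.
check_k8s_sysctls() {
  local root="${PROC_SYS_ROOT:-/proc/sys}"   # hypothetical override, defaults to the real /proc/sys
  local failed=0 key path value
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    path="$root/$(tr . / <<<"$key")"
    if [[ ! -r "$path" ]]; then
      # the bridge keys only exist once br_netfilter is loaded
      echo "MISSING $key"
      failed=1
      continue
    fi
    value=$(<"$path")
    if [[ "$value" == "1" ]]; then
      echo "OK      $key = $value"
    else
      echo "WRONG   $key = $value (expected 1)"
      failed=1
    fi
  done
  return "$failed"
}
```

Run `check_k8s_sysctls` after `sysctl -p /etc/sysctl.d/k8s.conf`. It returns non-zero if any value is missing or not 1.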

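Set the time zone

    # set the system time zone to Asia/Shanghai
    timedatectl set-timezone Asia/Shanghai
    # keep the hardware clock in UTC (writes the current UTC time to it)
    timedatectl set-local-rtc 0
    # restart services that depend on the system time
    systemctl restart rsyslog
    systemctl restart crond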

Disable the mail service (postfix)

systemctl stop postfix && systemctl disable postfix

Configure rsyslogd, systemd and journald

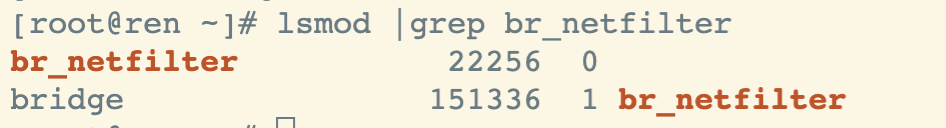

    mkdir /var/log/journal   # directory for persistent logs
    mkdir /etc/systemd/journald.conf.d

Create the journald configuration file

    cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
    [Journal]
    # persist logs to disk
    Storage=persistent
    # compress archived logs
    Compress=yes
    SyncIntervalSec=5m
    RateLimitInterval=30s
    RateLimitBurst=1000
    # cap total disk usage at 10G
    SystemMaxUse=10G
    # cap a single journal file at 200M
    SystemMaxFileSize=200M
    # keep logs for 2 weeks
    MaxRetentionSec=2week
    # do not forward logs to syslog
    ForwardToSyslog=no
    EOF

Restart journald to apply the configuration

systemctl restart systemd-journald

Prepare the ipvs prerequisites

modprobe br_netfilter

Create the ipvs.modules file

    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF

Make ipvs.modules executable and load the modules now

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

Check the loaded modules

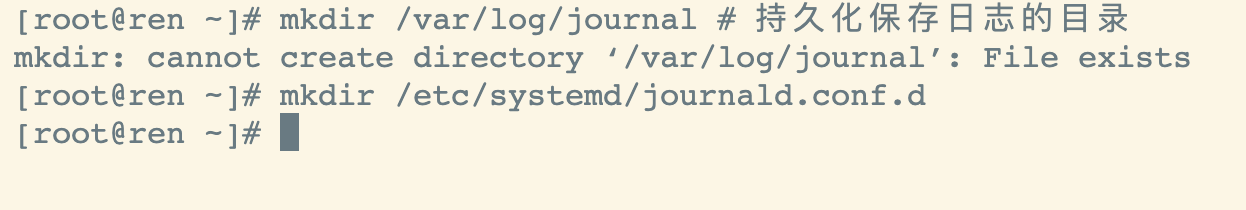

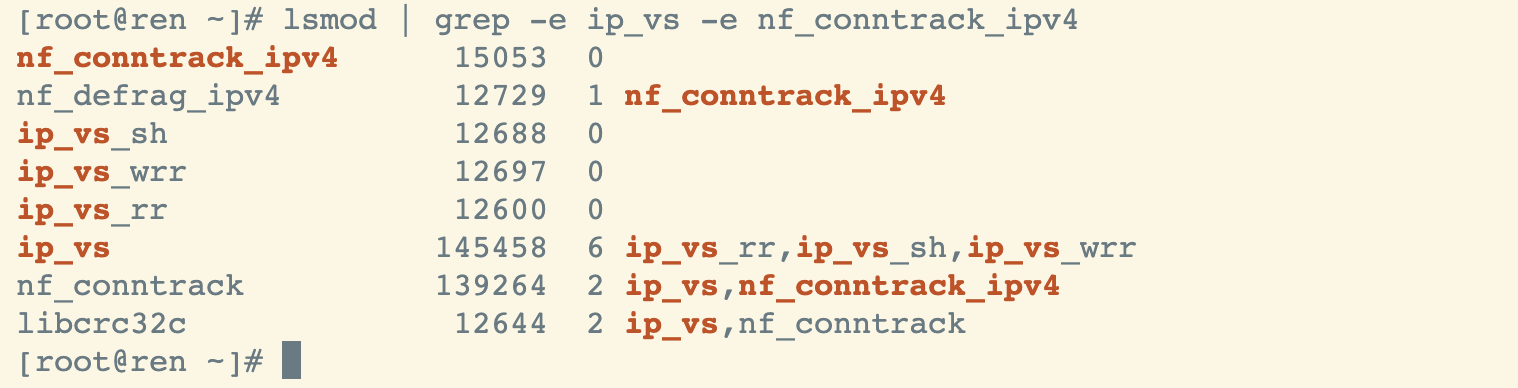

lsmod | grep -e ip_vs -e nf_conntrack_ipv4
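To check all the modules from this section in one pass (br_netfilter plus the ipvs set), compare the lsmod output against a list. `missing_modules` is a hypothetical helper. On kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so the list would need adjusting there. The CentOS 7.6 kernel used here still has the separate module.

```bash
#!/usr/bin/env bash
# Sketch: print every module name given as an argument that is absent from
# lsmod output read on stdin. missing_modules is an illustrative helper name.
missing_modules() {
  local loaded mod
  # lsmod prints a header line ("Module Size Used by"); keep column 1 of the rest
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "$@"; do
    grep -qx -- "$mod" <<<"$loaded" || echo "$mod"
  done
}
```

Usage: `lsmod | missing_modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4`. Empty output means every module is loaded.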

Disable swap

Turn off all swap devices immediately

swapoff -a

Edit /etc/fstab

    vi /etc/fstab
    # delete or comment out the line: /mnt/swap swap swap defaults 0 0
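Instead of editing /etc/fstab by hand, a small awk filter can comment out the swap entries. This is a sketch. `disable_swap_in_fstab` is a hypothetical helper that keeps a .bak copy. It comments out every active line whose filesystem type (third field) is swap.

```bash
#!/usr/bin/env bash
# Sketch: comment out every active swap entry in an fstab-style file.
# disable_swap_in_fstab is an illustrative helper; the path defaults to /etc/fstab.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" || return 1   # keep a backup of the original
  # Lines that are not already comments and whose 3rd field is "swap" get a leading '#'.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

You can try it on a copy first with `disable_swap_in_fstab /path/to/fstab.copy`, then run it without arguments. Run `swapoff -a` as above for the current boot.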

Edit /etc/sysctl.conf

echo vm.swappiness=0 >> /etc/sysctl.conf

Apply the change

sysctl -p

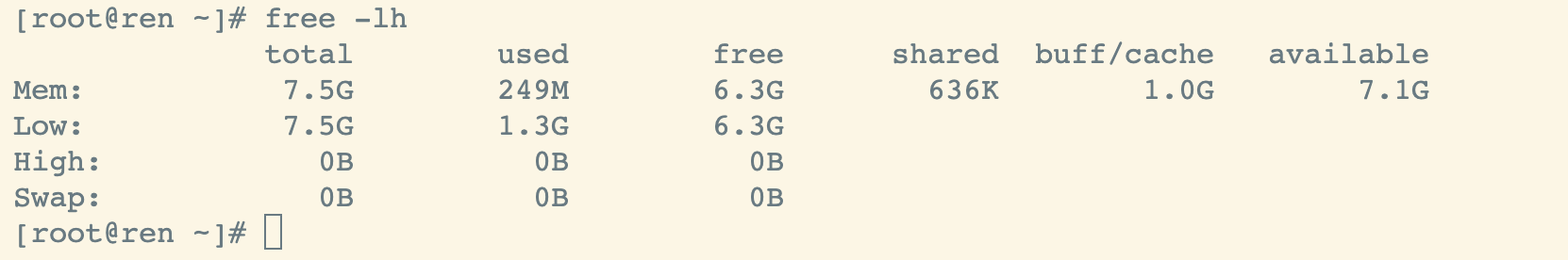

Verify

free -lh

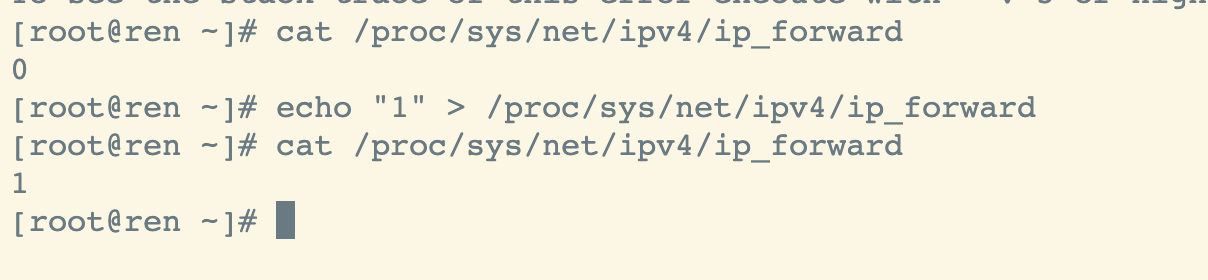

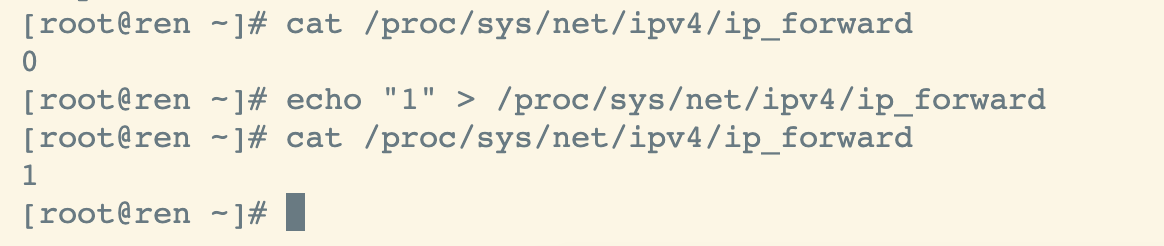

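Enable IPv4 forwarding

If you skip this step, kubeadm fails when it initializes the master node, with the error [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

    cat /proc/sys/net/ipv4/ip_forward
    echo "1" > /proc/sys/net/ipv4/ip_forward

Restarting the network service resets this setting; the cause is not yet clear.

    # service network restart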

Prepare the container runtime (Docker)

See:

Docker installation

Adjusting Docker's cgroup driver for Kubernetes

Upload the files

    # upload the files to /opt/software/docker
    cd /opt/software/docker
    tar xzvf docker-20.10.7.tgz
    chmod +x docker/*
    mv docker/* /usr/local/bin/

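Create the systemd unit file. Use > rather than >> so that re-running the command overwrites the file instead of appending a second copy. The -H tcp://0.0.0.0:2375 option exposes the Docker API without authentication on all interfaces, including the public IP. Drop it unless you need remote access.

    echo '[Unit]
    Description=Docker Application Container Engine
    Documentation=http://docs.docker.io
    After=network.target
    [Service]
    Environment="PATH=/usr/local/bin:/bin:/sbin:/usr/bin:/usr/sbin"
    ExecStart=/usr/local/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375
    ExecReload=/bin/kill -s HUP $MAINPID
    Restart=always
    RestartSec=5
    TimeoutSec=0
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    Delegate=yes
    KillMode=process
    [Install]
    WantedBy=multi-user.target' > /etc/systemd/system/docker.service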

Reload the configuration

    cd /usr/local/bin
    # reload unit files
    systemctl daemon-reload
    # start on boot
    systemctl enable docker.service
    # start now
    systemctl start docker.service
    # restart
    systemctl daemon-reload
    systemctl restart docker

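Add a Docker registry mirror (and switch the cgroup driver to systemd)

    # add a registry mirror and set the cgroup driver
    mkdir -p /etc/docker/
    touch /etc/docker/daemon.json
    cat > /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn/"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF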

Restart

    systemctl daemon-reload
    systemctl restart docker

Verify

docker info
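docker info prints a lot. Its --format flag can pull out just the cgroup driver, which confirms that native.cgroupdriver=systemd from daemon.json took effect. `check_docker_cgroup_driver` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: confirm that daemon.json's native.cgroupdriver=systemd took effect.
# check_docker_cgroup_driver is an illustrative helper name.
check_docker_cgroup_driver() {
  local driver
  if ! driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null); then
    echo "cannot query docker; is dockerd running?" >&2
    return 1
  fi
  if [[ "$driver" == "systemd" ]]; then
    echo "cgroup driver: systemd"
  else
    echo "cgroup driver is '$driver', expected systemd" >&2
    return 1
  fi
}
```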

安装Kubeadm、Kubelet、Kubectl

执行节点:所有节点 执行目录:/root/ 网络:yum在线安装

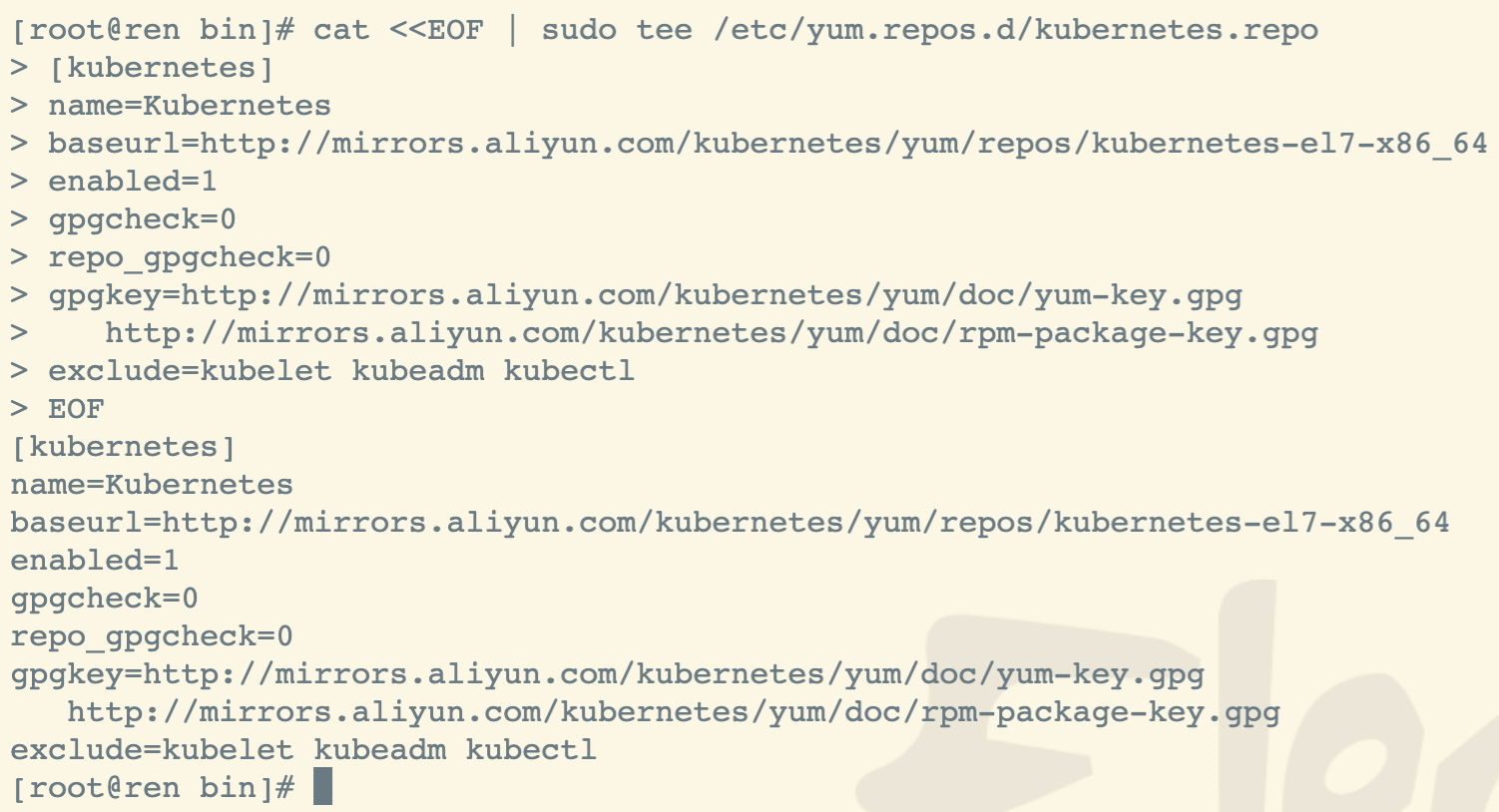

添加yum源

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo[kubernetes]name=Kubernetesbaseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64enabled=1gpgcheck=0repo_gpgcheck=0gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpghttp://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpgexclude=kubelet kubeadm kubectlEOF

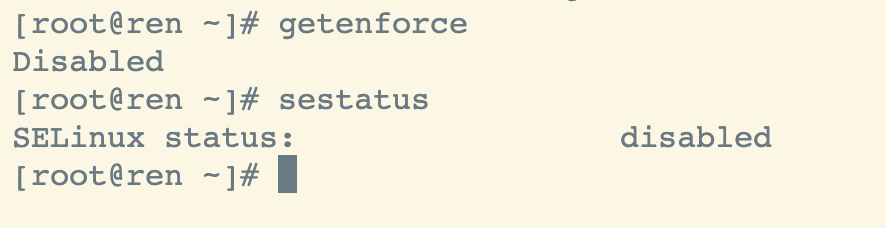

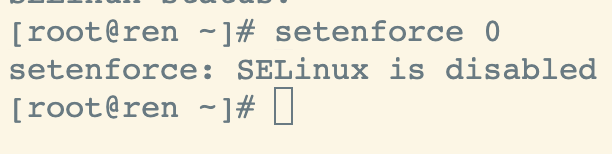

Disable SELinux

Check the current SELinux status

    getenforce
    sestatus

Disable temporarily

setenforce 0

Disable permanently

    vi /etc/sysconfig/selinux
    # replace SELINUX=enforcing with SELINUX=disabled
    # save the file, then reboot CentOS

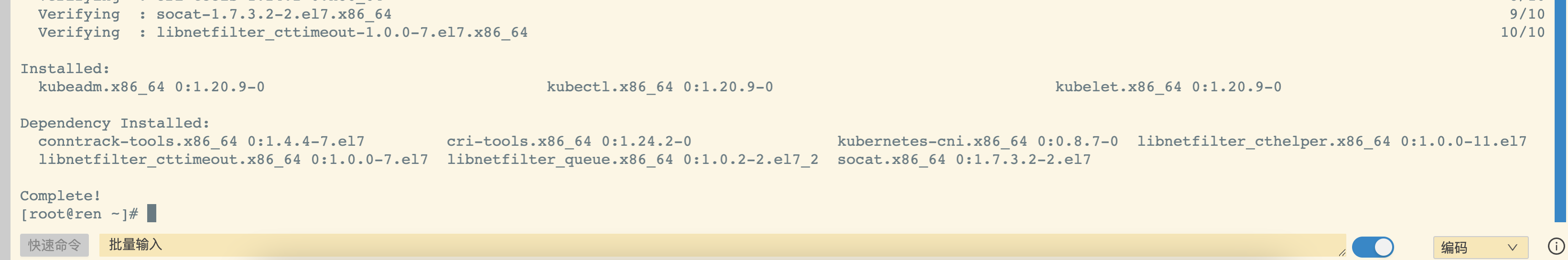
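To make the permanent change without an editor, use sed, with one caveat. On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. By default, GNU sed -i replaces a symlink with a regular file, so the change would not reach the real config. The sketch below resolves the link first. `disable_selinux_config` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: set SELINUX=disabled persistently without breaking the symlink.
# /etc/sysconfig/selinux normally points to /etc/selinux/config; resolve the
# link first, because "sed -i" on a symlink replaces it with a plain file.
# disable_selinux_config is an illustrative helper name.
disable_selinux_config() {
  local cfg
  cfg=$(readlink -f "${1:-/etc/selinux/config}") || return 1
  # Only the SELINUX= line changes; SELINUXTYPE= does not match "^SELINUX=".
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  grep '^SELINUX=' "$cfg"
}
```

setenforce 0 still covers the current boot. The file change applies after the reboot.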

Install kubelet, kubeadm and kubectl with yum

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

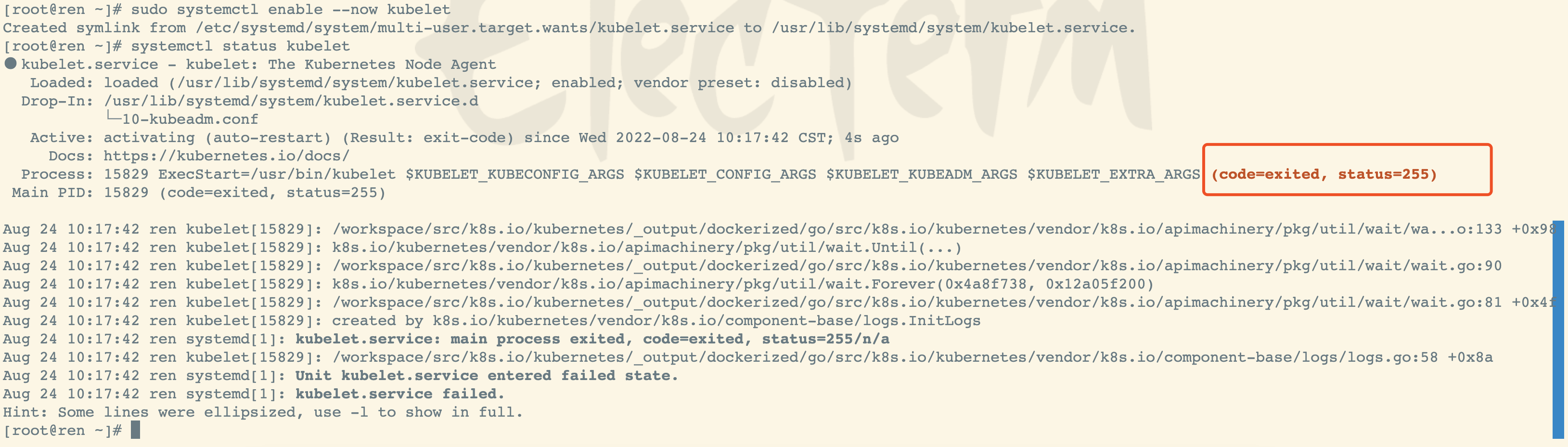

Enable kubelet at boot and start it now

sudo systemctl enable --now kubelet

Check the kubelet service

systemctl status kubelet

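kubelet now restarts every few seconds, as it waits in a crashloop for kubeadm to tell it what to do.

Create a virtual network interface

Do this on each node, substituting that node's public IP:

| Node | Private IP | Public IP | Spec |
|---|---|---|---|
| ren | 10.0.4.17 | 1.15.230.38 | 4 vCPU, 8 GB |
| yan | 10.0.4.15 | 101.34.64.205 | 4 vCPU, 8 GB |
| bai | 192.168.0.4 | 106.12.145.172 | 2 vCPU, 8 GB |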

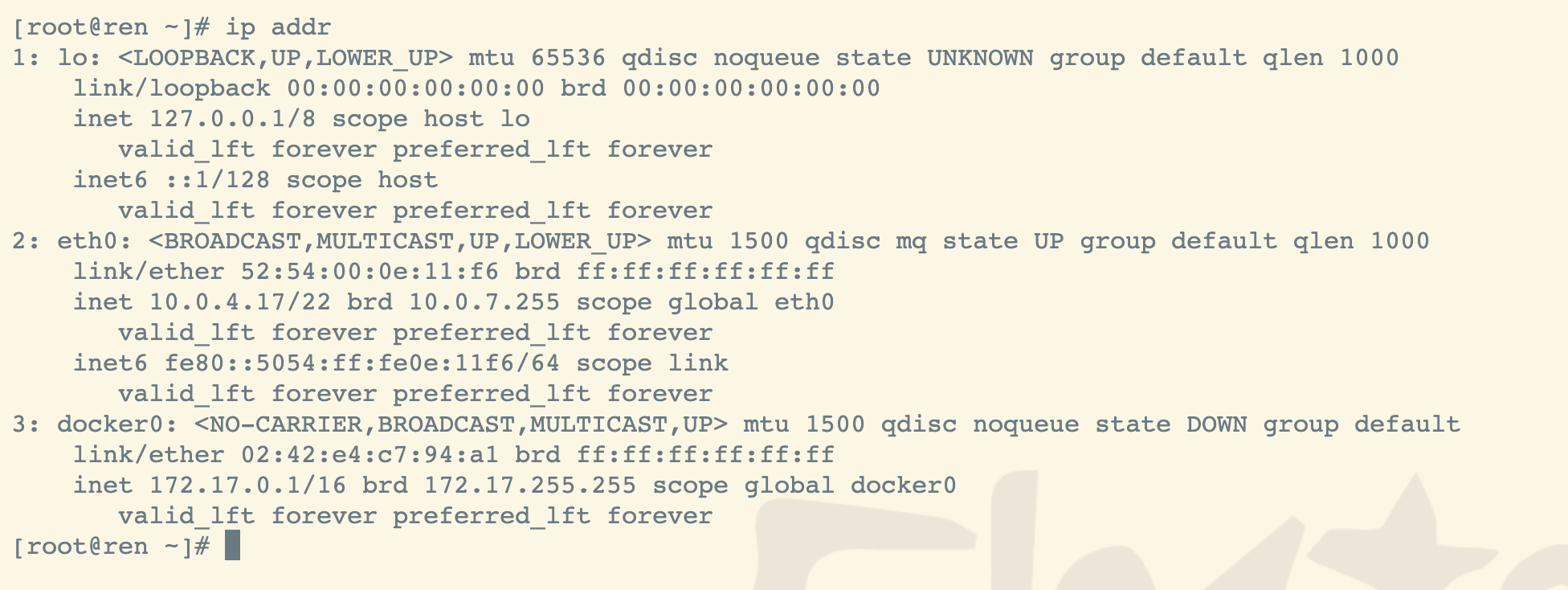

Check the network interface information

ip addr

Node ren

    cat > /etc/sysconfig/network-scripts/ifcfg-eth0:1 <<EOF
    BOOTPROTO=static
    DEVICE=eth0:1
    IPADDR=1.15.230.38
    PREFIX=32
    TYPE=Ethernet
    USERCTL=no
    ONBOOT=yes
    EOF

Node yan

    cat > /etc/sysconfig/network-scripts/ifcfg-eth0:1 <<EOF
    BOOTPROTO=static
    DEVICE=eth0:1
    IPADDR=101.34.64.205
    PREFIX=32
    TYPE=Ethernet
    USERCTL=no
    ONBOOT=yes
    EOF

Node bai

    cat > /etc/sysconfig/network-scripts/ifcfg-eth0:1 <<EOF
    BOOTPROTO=static
    DEVICE=eth0:1
    IPADDR=106.12.145.172
    PREFIX=32
    TYPE=Ethernet
    USERCTL=no
    ONBOOT=yes
    EOF

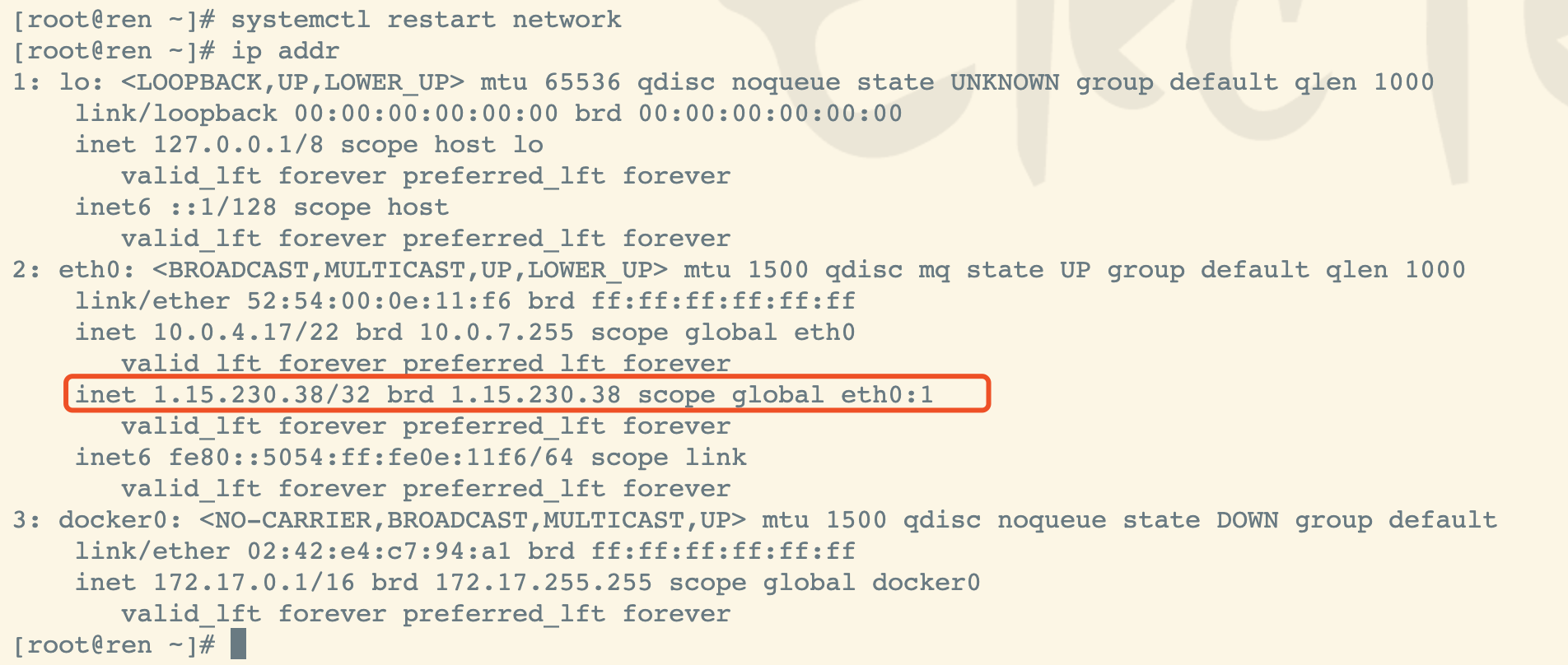
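The three ifcfg files differ only in IPADDR, so one template can generate all of them. A sketch follows. `public_ip_for` and `write_public_ip_alias` are hypothetical helpers. The hostname-to-IP mapping is copied from the server table in this article and assumes the hostnames ren, yan and bai were configured as described earlier.

```bash
#!/usr/bin/env bash
# Sketch: generate ifcfg-eth0:1 from one template instead of three heredocs.
# public_ip_for and write_public_ip_alias are illustrative helpers; the
# mapping below is the server table from this article.
public_ip_for() {
  case "$1" in
    ren) echo 1.15.230.38 ;;
    yan) echo 101.34.64.205 ;;
    bai) echo 106.12.145.172 ;;
    *)   return 1 ;;
  esac
}

write_public_ip_alias() {
  local ip="$1"
  local dir="${2:-/etc/sysconfig/network-scripts}"
  if [[ -z "$ip" ]]; then
    echo "usage: write_public_ip_alias PUBLIC_IP [DIR]" >&2
    return 2
  fi
  # unquoted EOF on purpose: $ip must be expanded into the file
  cat > "$dir/ifcfg-eth0:1" <<EOF
BOOTPROTO=static
DEVICE=eth0:1
IPADDR=$ip
PREFIX=32
TYPE=Ethernet
USERCTL=no
ONBOOT=yes
EOF
}
```

On each node, run `write_public_ip_alias "$(public_ip_for "$(hostname)")"`. If the hostname is not in the mapping, the call prints the usage message and writes nothing.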

Restart the network service

systemctl restart network

Check that the new IP has been added

ip addr

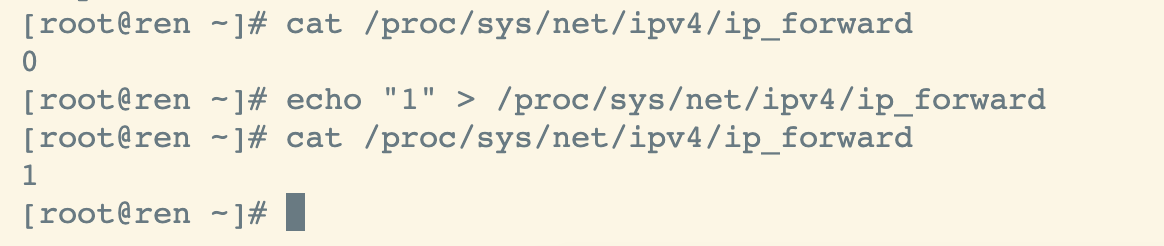

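Re-enable IPv4 forwarding

Restarting the network service resets this value, so set it again. If you skip this step, kubeadm fails when it initializes the master node, with the error [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

    cat /proc/sys/net/ipv4/ip_forward
    echo "1" > /proc/sys/net/ipv4/ip_forward

Modify the kubelet startup arguments

Note: this step is important. Without it, the node still registers in the cluster with its private IP.

    vi /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
    # append --node-ip=<public IP> to the end of the ExecStart line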

Full file for node ren. The EOF delimiter is quoted ('EOF') so that $KUBELET_KUBECONFIG_ARGS and the other variables are written to the file literally. With an unquoted EOF, the shell would expand them to empty strings.

    cat > /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf <<'EOF'
    # Note: This dropin only works with kubeadm and kubelet v1.11+
    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
    Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
    # This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
    EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
    # This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
    # the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
    EnvironmentFile=-/etc/sysconfig/kubelet
    ExecStart=
    ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --node-ip=1.15.230.38
    EOF

Full file for node yan

    cat > /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf <<'EOF'
    # Note: This dropin only works with kubeadm and kubelet v1.11+
    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
    Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
    # This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
    EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
    # This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
    # the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
    EnvironmentFile=-/etc/sysconfig/kubelet
    ExecStart=
    ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --node-ip=101.34.64.205
    EOF

Full file for node bai

    cat > /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf <<'EOF'
    # Note: This dropin only works with kubeadm and kubelet v1.11+
    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
    Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
    # This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
    EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
    # This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
    # the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
    EnvironmentFile=-/etc/sysconfig/kubelet
    ExecStart=
    ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --node-ip=106.12.145.172
    EOF

After you edit the drop-in, run systemctl daemon-reload so that systemd picks up the change.

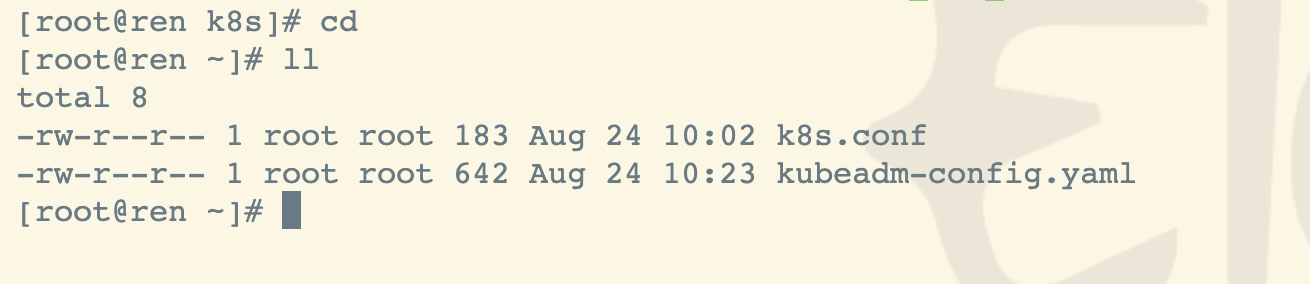
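The drop-in's own comment names /etc/sysconfig/kubelet as the place for KUBELET_EXTRA_ARGS. The sketch below uses that file instead, leaving 10-kubeadm.conf untouched. Use one approach or the other, not both. `set_kubelet_node_ip` is a hypothetical helper, and it replaces any existing KUBELET_EXTRA_ARGS value.

```bash
#!/usr/bin/env bash
# Sketch: pass --node-ip through KUBELET_EXTRA_ARGS instead of editing the drop-in.
# 10-kubeadm.conf already sources /etc/sysconfig/kubelet (EnvironmentFile=-...).
# set_kubelet_node_ip is an illustrative helper; it overwrites any existing
# KUBELET_EXTRA_ARGS value.
set_kubelet_node_ip() {
  local ip="$1" file="${2:-/etc/sysconfig/kubelet}"
  if [[ -z "$ip" ]]; then
    echo "usage: set_kubelet_node_ip PUBLIC_IP [FILE]" >&2
    return 2
  fi
  if [[ -f "$file" ]] && grep -q '^KUBELET_EXTRA_ARGS=' "$file"; then
    sed -i "s|^KUBELET_EXTRA_ARGS=.*|KUBELET_EXTRA_ARGS=--node-ip=$ip|" "$file"
  else
    echo "KUBELET_EXTRA_ARGS=--node-ip=$ip" >> "$file"
  fi
}
```

For example, on node ren run `set_kubelet_node_ip 1.15.230.38`, then `systemctl restart kubelet`.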

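Prepare the kubeadm initialization environment

Write the kubeadm-config.yaml file to prepare for initializing the master node

Run on: all nodes. Working directory: /root/

Add the configuration file, replacing the IPs below with your own:

    cat > /root/kubeadm-config.yaml <<EOF
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.20.9
    apiServer:
      certSANs:          # hostnames, IPs and VIPs of all kube-apiserver nodes
      - ren              # replace with your hostname
      - 1.15.230.38      # replace with your public IP
      - 10.0.4.17        # replace with your private IP
      - 10.96.0.1        # do not replace: cluster IP of the API service, used by some components
    controlPlaneEndpoint: 1.15.230.38:6443   # replace with your public IP
    networking:
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    ---
    # switch the kube-proxy mode to ipvs
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    # if kube-proxy refuses to start because of this feature gate, remove these two lines
    featureGates:
      SupportIPVSProxyMode: true
    mode: ipvs
    EOF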

Do not run the initialization yet. The following images are pulled from Google's registry, which is not reachable from mainland China:

    k8s.gcr.io/kube-apiserver:v1.20.9
    k8s.gcr.io/kube-controller-manager:v1.20.9
    k8s.gcr.io/kube-scheduler:v1.20.9
    k8s.gcr.io/kube-proxy:v1.20.9
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0

Download the images in advance

List the images to download

kubeadm config images list

Output:

    k8s.gcr.io/kube-apiserver:v1.20.9
    k8s.gcr.io/kube-controller-manager:v1.20.9
    k8s.gcr.io/kube-scheduler:v1.20.9
    k8s.gcr.io/kube-proxy:v1.20.9
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0

Write an image-pull script to distribute to each node

File path: /root/

The full file is below

or write it by hand:

    cat >/root/pull_k8s_images.sh << "EOF"
    #!/bin/bash
    set -o errexit
    set -o nounset
    set -o pipefail
    ## versions to download
    KUBE_VERSION=v1.20.9
    KUBE_PAUSE_VERSION=3.2
    ETCD_VERSION=3.4.13-0
    DNS_VERSION=1.7.0
    ## the original registry, blocked in mainland China
    GCR_URL=k8s.gcr.io
    ## the mirror registry to pull from (gotok8s also works)
    DOCKERHUB_URL=registry.cn-hangzhou.aliyuncs.com/google_containers
    ## image list
    images=(
      kube-proxy:${KUBE_VERSION}
      kube-scheduler:${KUBE_VERSION}
      kube-controller-manager:${KUBE_VERSION}
      kube-apiserver:${KUBE_VERSION}
      pause:${KUBE_PAUSE_VERSION}
      etcd:${ETCD_VERSION}
      coredns:${DNS_VERSION}
    )
    ## pull each image, re-tag it with the k8s.gcr.io name, then remove the mirror tag
    for imageName in "${images[@]}" ; do
      docker pull "$DOCKERHUB_URL/$imageName"
      docker tag "$DOCKERHUB_URL/$imageName" "$GCR_URL/$imageName"
      docker rmi "$DOCKERHUB_URL/$imageName"
    done
    EOF

Push the script to the other nodes

    scp /root/pull_k8s_images.sh root@yan:/root/
    scp /root/pull_k8s_images.sh root@bai:/root/

Pull the required images on each node

Run on: all nodes. Working directory: /root/

Make the image-pull script executable

chmod +x /root/pull_k8s_images.sh

Run the image-pull script

bash /root/pull_k8s_images.sh

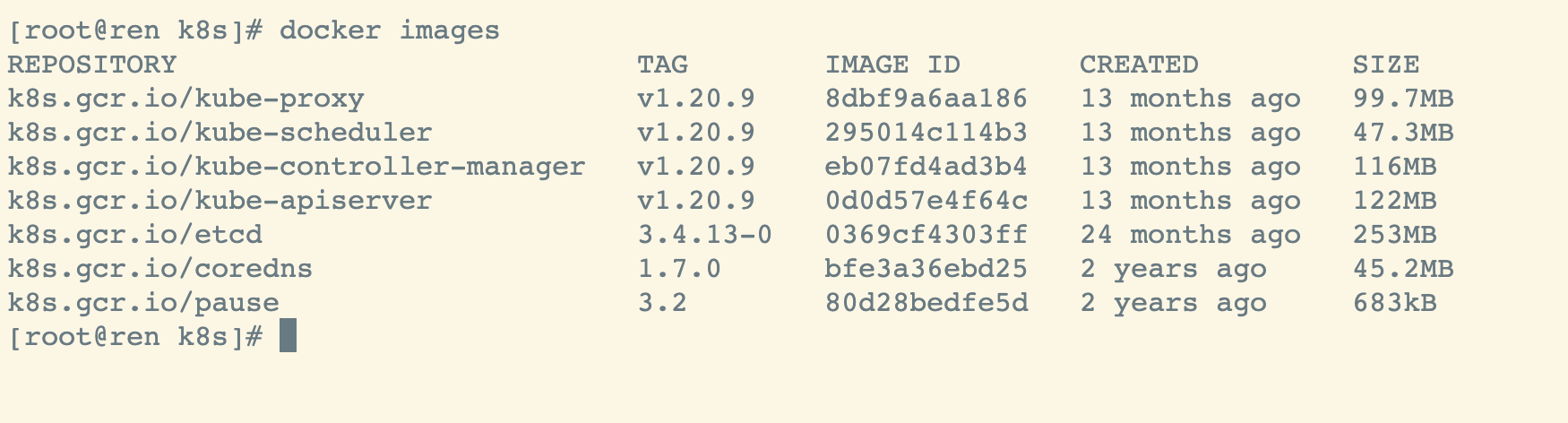

Verify

docker images

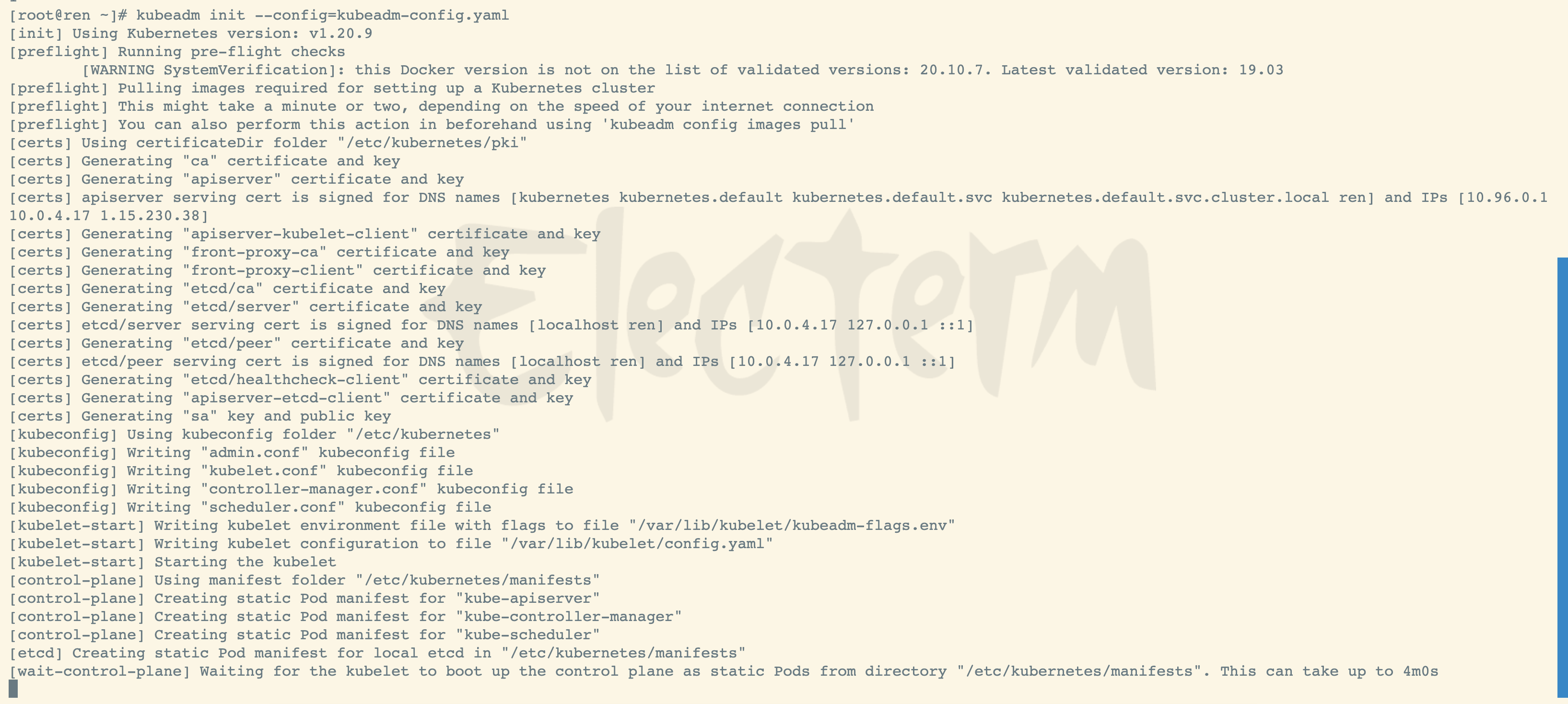

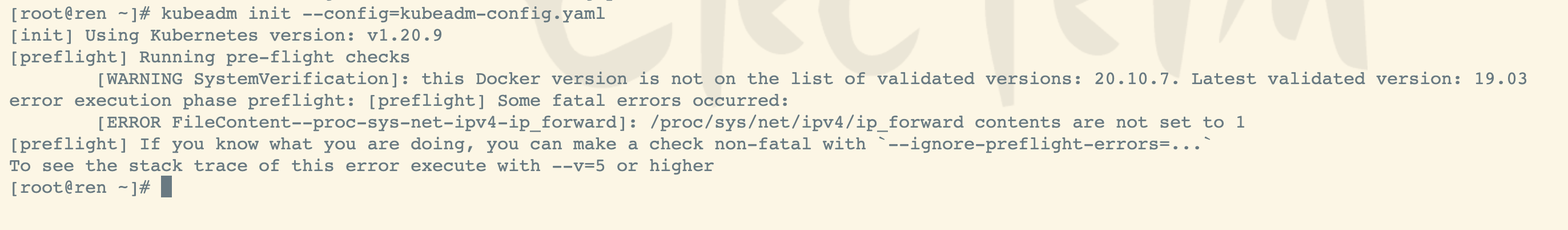
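Rather than reading the docker images listing by eye, compare it with what kubeadm expects. This sketch uses `kubeadm config images list --kubernetes-version` and `docker images --format`. `missing_images` and `check_k8s_images` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: list the control-plane images kubeadm expects that are not yet in
# the local Docker image store. missing_images / check_k8s_images are
# illustrative helper names.

# Pure helper: print the lines of list $1 that do not appear in list $2.
missing_images() {
  comm -23 <(printf '%s\n' "$1" | sort -u) <(printf '%s\n' "$2" | sort -u)
}

check_k8s_images() {
  local required present missing
  required=$(kubeadm config images list --kubernetes-version v1.20.9) || return 1
  present=$(docker images --format '{{.Repository}}:{{.Tag}}') || return 1
  missing=$(missing_images "$required" "$present")
  if [[ -n "$missing" ]]; then
    echo "missing images:"
    echo "$missing"
    return 1
  fi
  echo "all required images are present"
}
```

Run `check_k8s_images` on each node after the pull script finishes. Any image it lists still needs to be pulled and re-tagged.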

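Initialize the master node

Run on: master node. Working directory: /root/

If the machine has only 1 CPU core or 1 GB of memory, append --ignore-preflight-errors=all to the command, otherwise initialization fails. When this step succeeds it prints two important pieces of information (the kubeconfig setup commands and the kubeadm join command); save them.

Run kubeadm to initialize the cluster

kubeadm init --config=kubeadm-config.yaml

If IPv4 forwarding is not enabled, this fails with the error [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

The execution log is shown below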

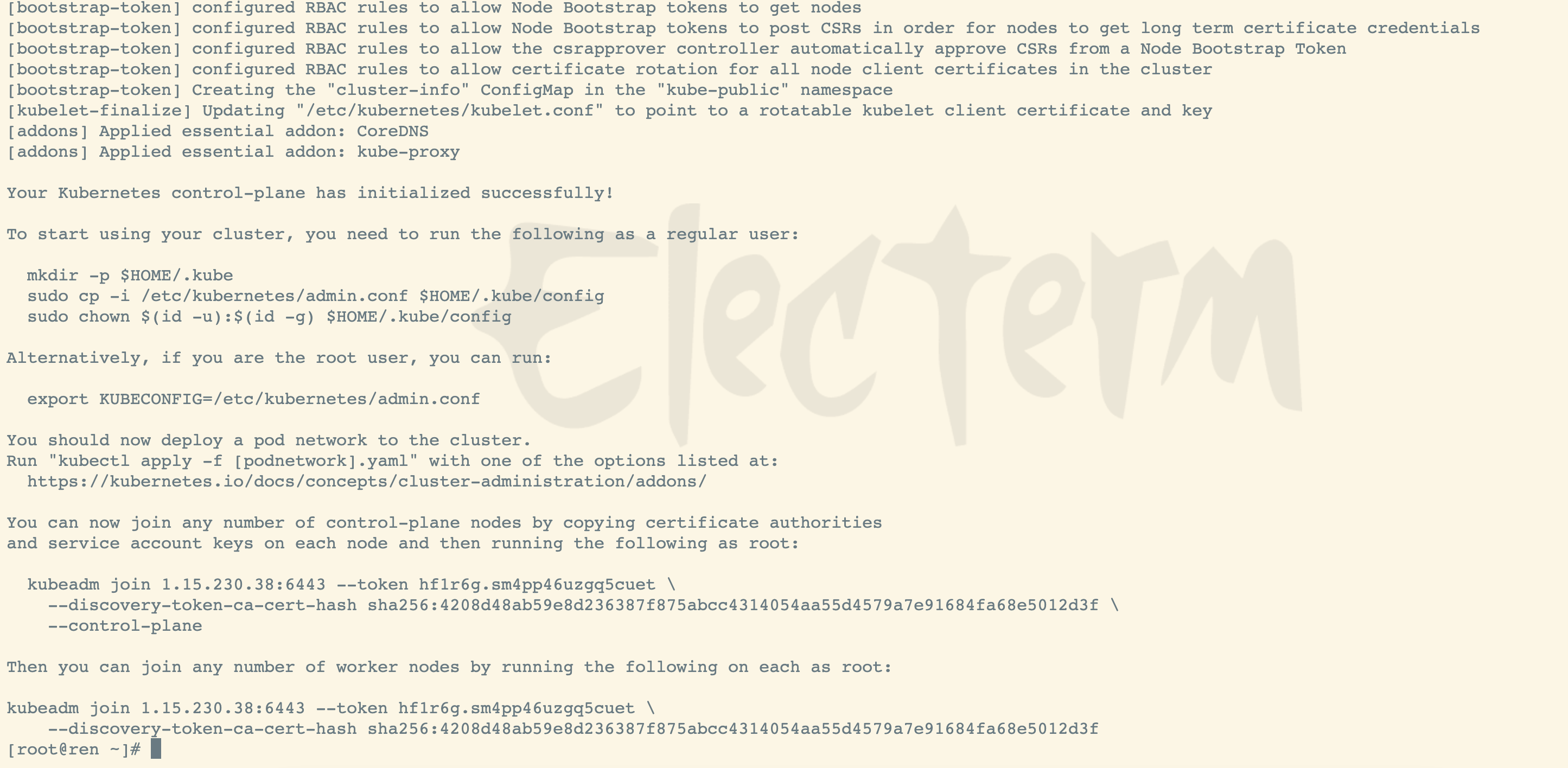

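After a successful initialization, kubeadm generates a kubeconfig file for sending requests to the API server

Follow the printed instructions:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

Copy the following command; you will use it later to join the worker nodes to the master:

    kubeadm join 1.15.230.38:6443 --token uifpif.fyr2s4f5gtemqgnn \
        --discovery-token-ca-cert-hash sha256:80accd0bf78574cd8e0df8b3d276e2a8c1453277b510eb02507f8e5a0675676e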

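Modify the kube-apiserver parameters

File path: /etc/kubernetes/manifests/kube-apiserver.yaml

The full file is below

cp kube-apiserver.yaml /etc/kubernetes/manifests/

or edit it by hand

Change three settings

Change the kube-apiserver.advertise-address.endpoint annotation, add --bind-address, and change --advertise-address:

vim /etc/kubernetes/manifests/kube-apiserver.yaml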

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 1.15.230.38:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=1.15.230.38
        - --bind-address=0.0.0.0
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --etcd-servers=https://127.0.0.1:2379
        - --insecure-port=0
        - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
        - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-allowed-names=front-proxy-client
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-username-headers=X-Remote-User
        - --secure-port=6443
        - --service-account-issuer=https://kubernetes.default.svc.cluster.local
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.96.0.0/12
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        image: k8s.gcr.io/kube-apiserver:v1.20.9
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 10.0.4.17
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        name: kube-apiserver
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 10.0.4.17
            path: /readyz
            port: 6443
            scheme: HTTPS
          periodSeconds: 1
          timeoutSeconds: 15
        resources:
          requests:
            cpu: 250m
        startupProbe:
          failureThreshold: 24
          httpGet:
            host: 10.0.4.17
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/pki
          name: etc-pki
          readOnly: true
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
      hostNetwork: true
      priorityClassName: system-node-critical
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/pki
          type: DirectoryOrCreate
        name: etc-pki
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
    status: {}

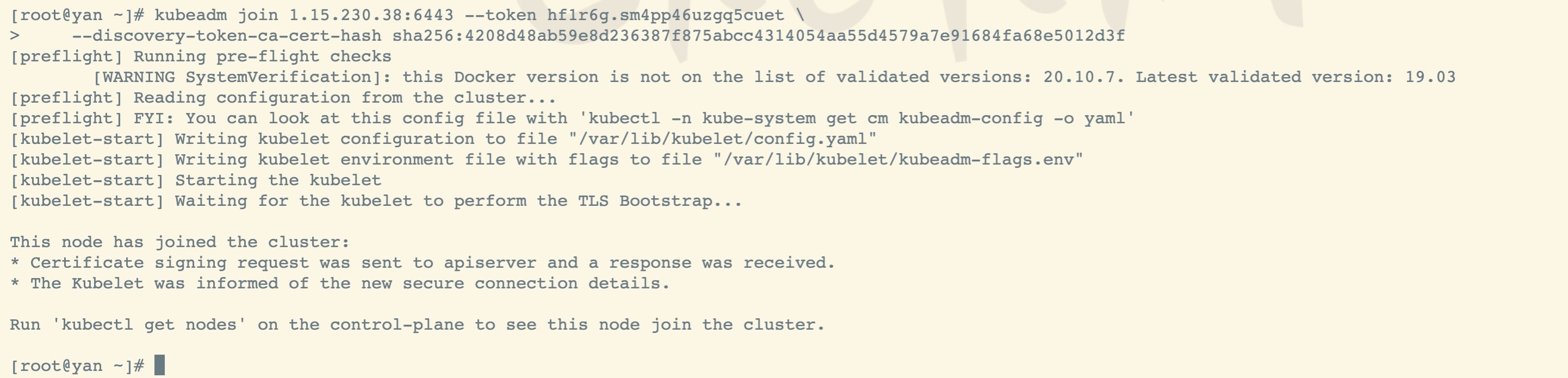

Join the worker nodes to the cluster

Run on: worker nodes. Working directory: /root/. Wait until the master's pods are ready before joining any worker.

Run the kubeadm join command

Use the join command you saved after initializing the master:

    kubeadm join 1.15.230.38:6443 --token uifpif.fyr2s4f5gtemqgnn \
        --discovery-token-ca-cert-hash sha256:80accd0bf78574cd8e0df8b3d276e2a8c1453277b510eb02507f8e5a0675676e

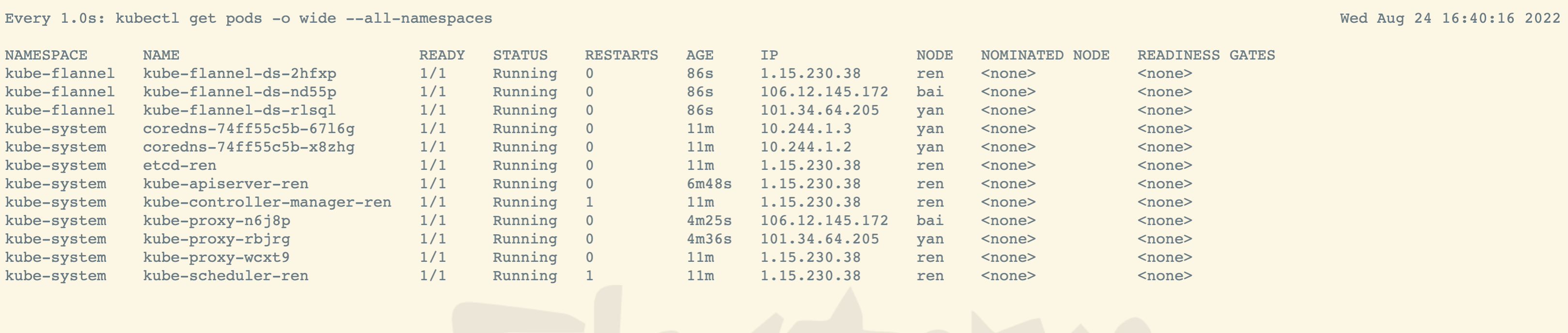

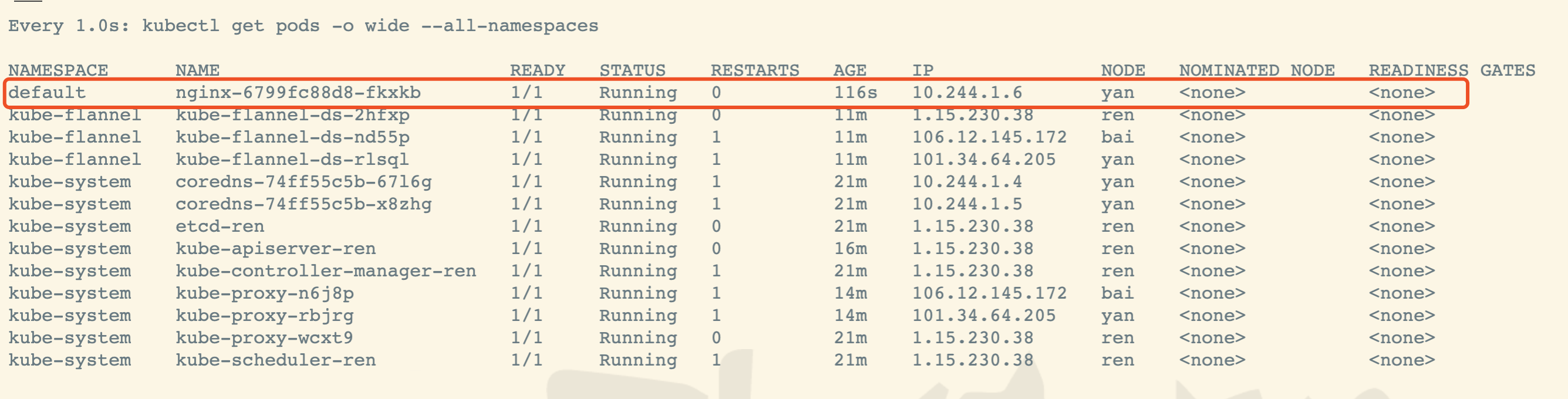

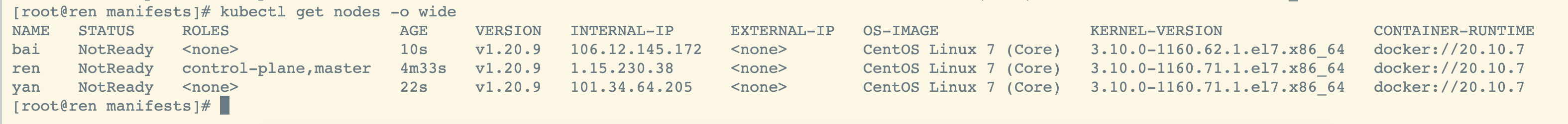

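Check the node status

kubectl get nodes -o wide

The nodes are NotReady because no network plugin has been installed yet.

    # watch the pods start up
    kubectl get pod -A -w
    # or
    watch -n 1 kubectl get pod -A
    # check the connection status of each node
    kubectl get pods -o wide --all-namespaces
    # or
    watch -n 1 kubectl get pods -o wide --all-namespaces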

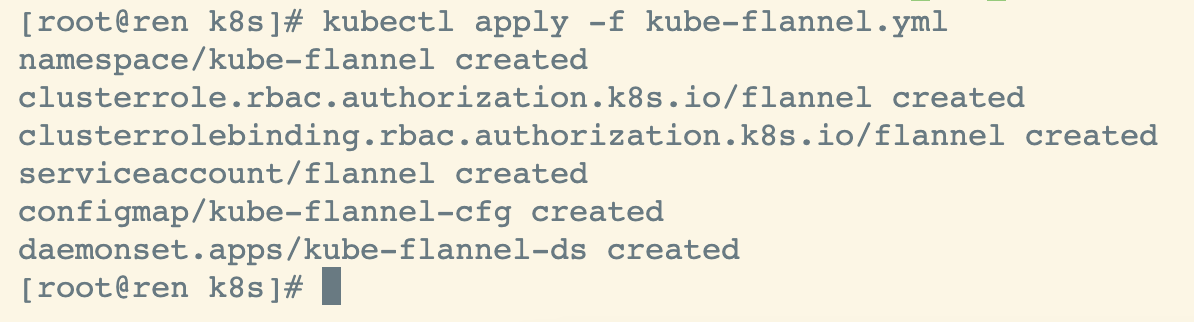

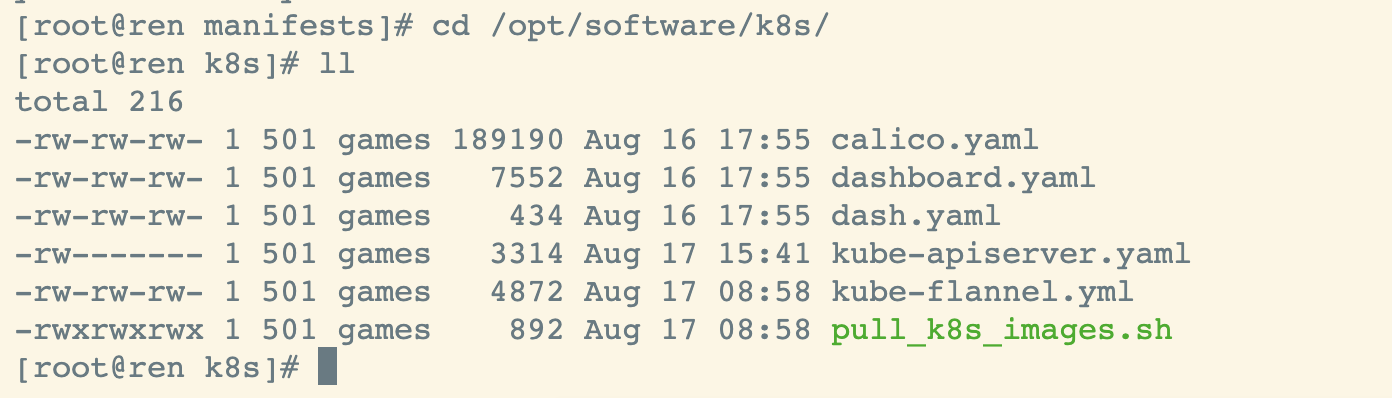

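Install the flannel network plugin

Run on: master node. Working directory: /opt/software/k8s/

Download the flannel YAML manifest

The full file is below

or write it by hand

Download

    cd /root/
    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Modify

There are two changes: add two arguments under args, and add one environment variable under env. The flannel DaemonSet runs with hostNetwork: true, so status.podIP resolves to the node's own IP. Because of --node-ip, kubelet registered that IP as the public IP.

    # 1) under args, add:
    args:
    - --public-ip=$(PUBLIC_IP)   # declare the public IP
    - --iface=eth0               # bind to this network interface
    # 2) under env, add:
    env:
    - name: PUBLIC_IP            # new environment variable
      valueFrom:
        fieldRef:
          fieldPath: status.podIP

Deploy flannel

kubectl apply -f kube-flannel.yml

Check the pod status again and wait for initialization to finish

    # watch the pods start up
    kubectl get pod -A -w
    # or
    watch -n 1 kubectl get pod -A
    # check the connection status of each node
    kubectl get pods -o wide --all-namespaces
    # or
    watch -n 1 kubectl get pods -o wide --all-namespaces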

If a pod is unhealthy, investigate it:

kubectl describe pod coredns-74ff55c5b-654lm -n kube-system

Cause: the master node has the file /run/flannel/subnet.env, but the worker nodes do not, so pod networking fails.

cat /run/flannel/subnet.env

Fix: copy the file from the master node to the worker nodes.

    scp /run/flannel/subnet.env root@yan:/run/flannel/
    scp /run/flannel/subnet.env root@bai:/run/flannel/

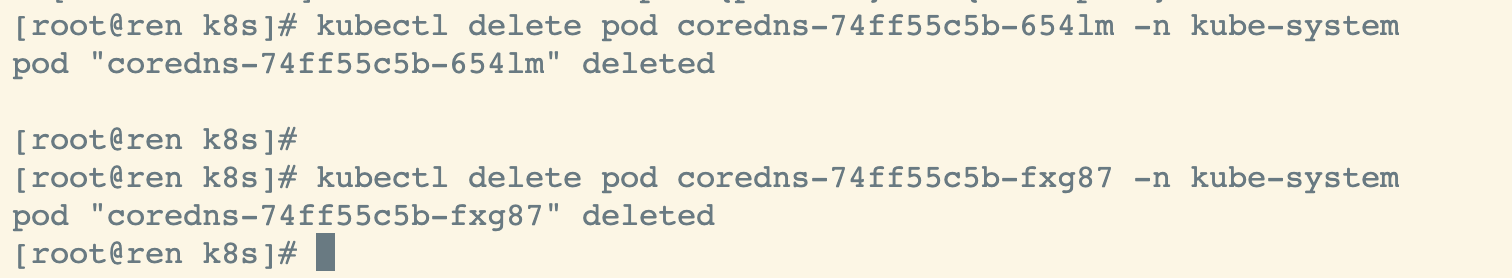

Delete the failing pods

    kubectl delete pod coredns-74ff55c5b-654lm -n kube-system
    kubectl delete pod coredns-74ff55c5b-fxg87 -n kube-system

Recreate the flannel pods

kubectl replace --force -f kube-flannel.yml

Wait until the master's pods are ready before joining any worker.

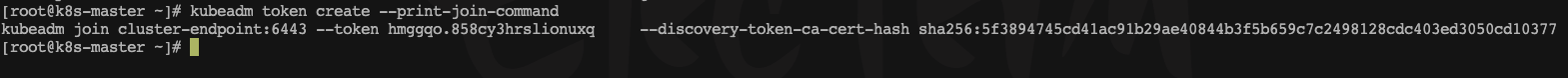

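Regenerate the cluster token if it has expired and a new worker node needs to join

Run on the master node. For a high-availability deployment, this is also the step where you use the command for adding another master (control-plane) node instead.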

kubeadm token create --print-join-command

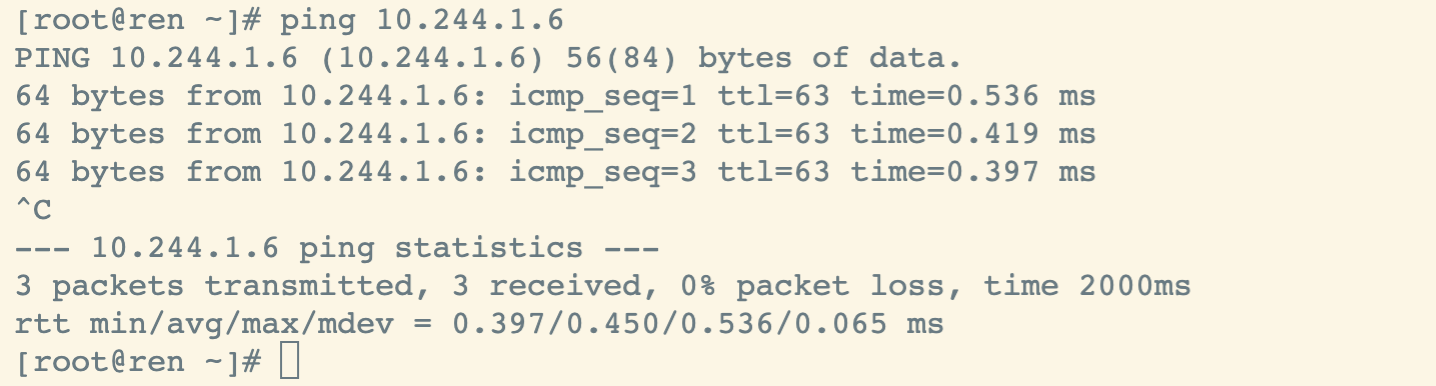
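The command printed by kubeadm token create --print-join-command already includes the CA hash. If you only have a bare token (for example from kubeadm token list), you can recompute the --discovery-token-ca-cert-hash value from the cluster CA. This sketch adapts the openssl pipeline from the kubeadm documentation, which hashes the CA's DER-encoded public key. `ca_cert_hash` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: recompute the value for --discovery-token-ca-cert-hash
# (sha256 of the CA's DER-encoded public key). ca_cert_hash is an
# illustrative name; the default path is where kubeadm writes the cluster CA.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master: `kubeadm join 1.15.230.38:6443 --token <token> --discovery-token-ca-cert-hash "sha256:$(ca_cert_hash)"`.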

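Check network connectivity

    # check that all pods are Ready
    kubectl get pods -o wide --all-namespaces
    ...
    # create a test pod
    kubectl create deployment nginx --image=nginx
    # look up the pod's IP
    kubectl get pods -o wide
    # from the master or another node, ping that IP to check that it is reachable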

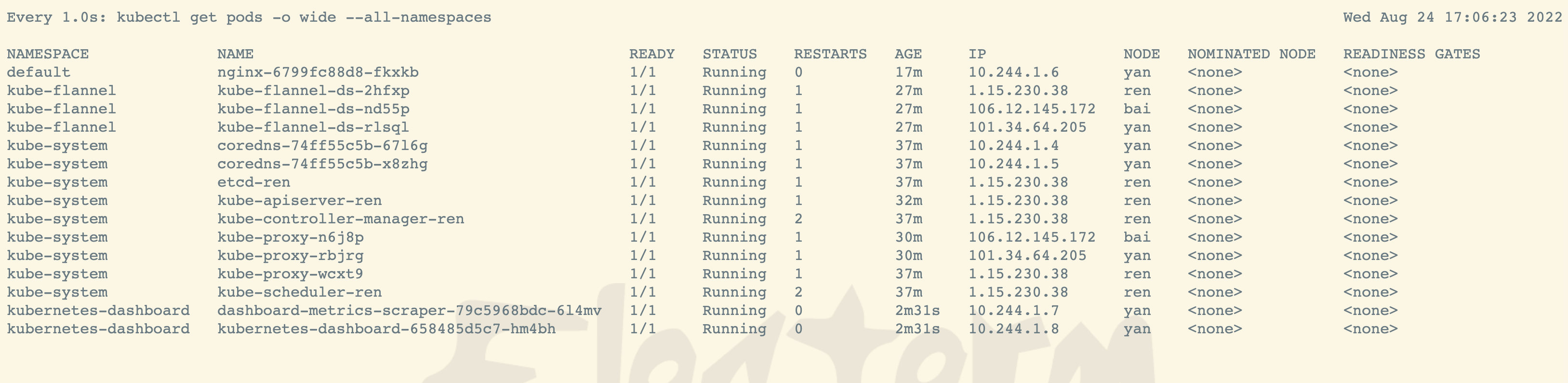

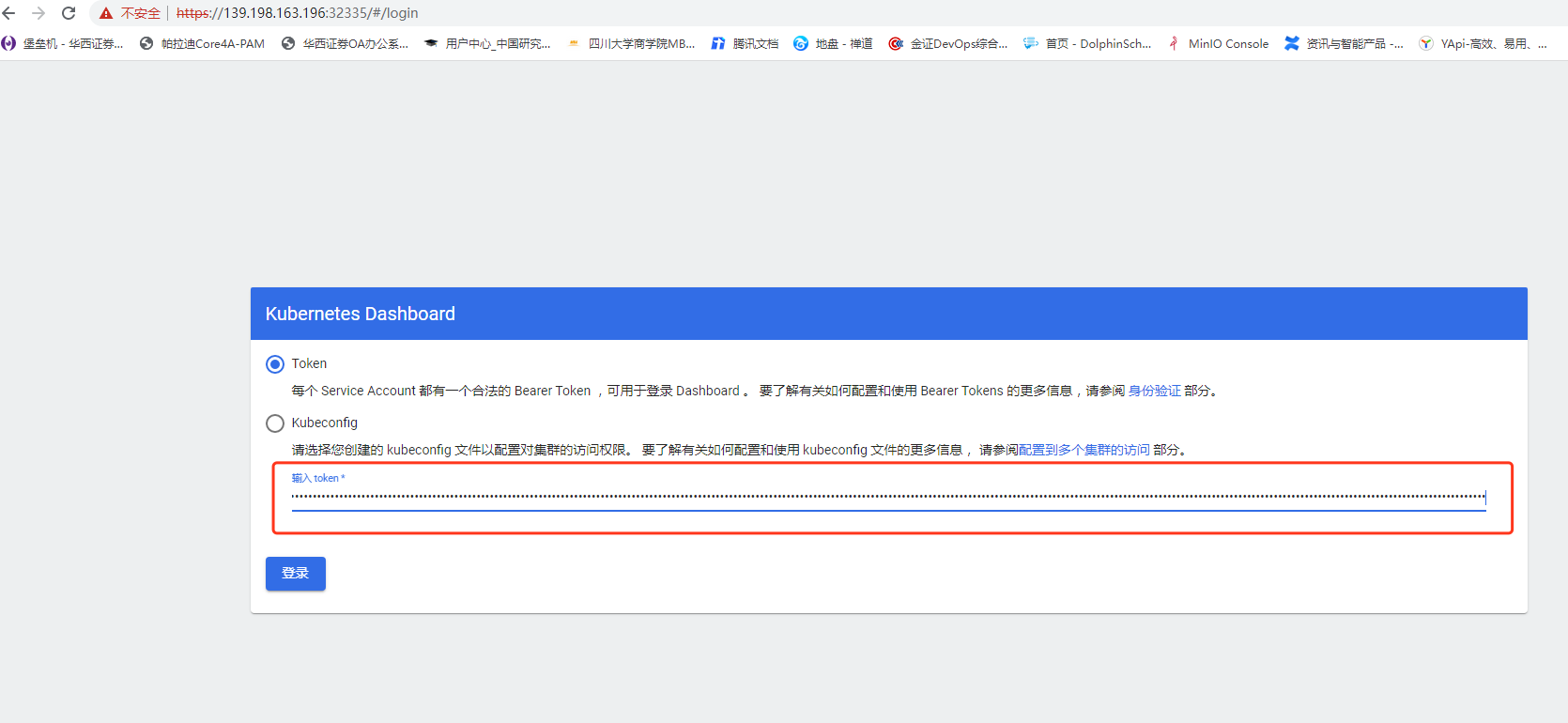

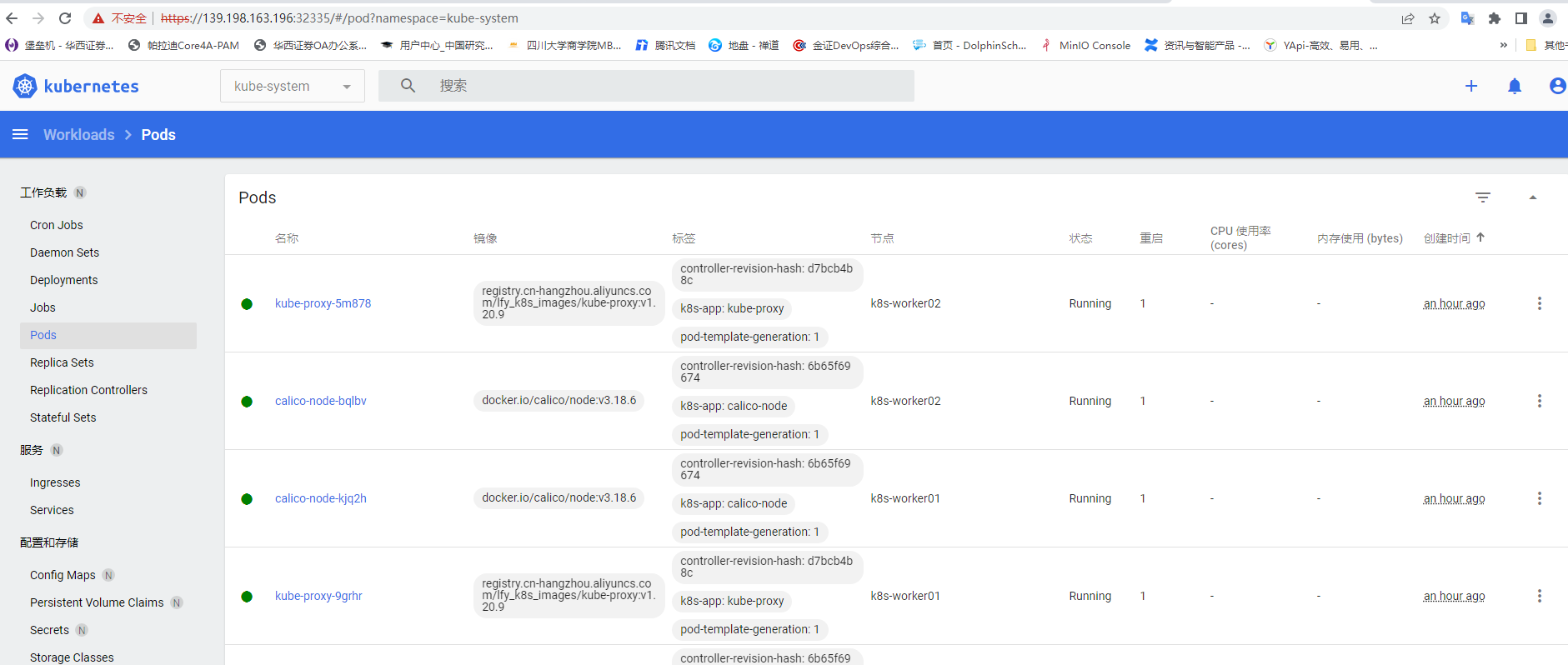
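You can repeat the manual ping for every pod of the test deployment. This sketch assumes three things. kubectl create deployment nginx labels its pods app=nginx. `ping_each` is a hypothetical helper. `PING` is a hypothetical override hook that defaults to ping -c 1 -W 2.

```bash
#!/usr/bin/env bash
# Sketch: ping every IP read from stdin (one per line) and report the result.
# ping_each and the PING override are illustrative, not part of kubectl.
ping_each() {
  local ip failed=0
  while read -r ip; do
    [[ -z "$ip" ]] && continue
    # ${PING:-...} is deliberately unquoted so the default splits into words
    if ${PING:-ping -c 1 -W 2} "$ip" > /dev/null 2>&1; then
      echo "reachable   $ip"
    else
      echo "unreachable $ip"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage, from any node: `kubectl get pods -l app=nginx -o jsonpath='{range .items[*]}{.status.podIP}{"\n"}{end}' | ping_each`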

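Deploy the dashboard

Run on: master node. Working directory: /root/

Install from YAML

The official Kubernetes web UI:

https://github.com/kubernetes/dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

If the file cannot be downloaded, install offline

The full file is below

dashboard.yaml

or write it by hand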

    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    apiVersion: v1
    kind: Namespace
    metadata:
      name: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-csrf
      namespace: kubernetes-dashboard
    type: Opaque
    data:
      csrf: ""
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-key-holder
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-settings
      namespace: kubernetes-dashboard
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    rules:
      # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
      - apiGroups: [""]
        resources: ["secrets"]
        resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
        verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
      - apiGroups: [""]
        resources: ["configmaps"]
        resourceNames: ["kubernetes-dashboard-settings"]
        verbs: ["get", "update"]
      # Allow Dashboard to get metrics.
      - apiGroups: [""]
        resources: ["services"]
        resourceNames: ["heapster", "dashboard-metrics-scraper"]
        verbs: ["proxy"]
      - apiGroups: [""]
        resources: ["services/proxy"]
        resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
        verbs: ["get"]
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    rules:
      # Allow Metrics Scraper to get metrics from the Metrics server
      - apiGroups: ["metrics.k8s.io"]
        resources: ["pods", "nodes"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
            - name: kubernetes-dashboard
              image: kubernetesui/dashboard:v2.3.1
              imagePullPolicy: Always
              ports:
                - containerPort: 8443
                  protocol: TCP
              args:
                - --auto-generate-certificates
                - --namespace=kubernetes-dashboard
                # Uncomment the following line to manually specify Kubernetes API server Host
                # If not specified, Dashboard will attempt to auto discover the API server and connect
                # to it. Uncomment only if the default does not work.
                # - --apiserver-host=http://my-address:port
              volumeMounts:
                - name: kubernetes-dashboard-certs
                  mountPath: /certs
                # Create on-disk volume to store exec logs
                - mountPath: /tmp
                  name: tmp-volume
              livenessProbe:
                httpGet:
                  scheme: HTTPS
                  path: /
                  port: 8443
                initialDelaySeconds: 30
                timeoutSeconds: 30
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          volumes:
            - name: kubernetes-dashboard-certs
              secret:
                secretName: kubernetes-dashboard-certs
            - name: tmp-volume
              emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "kubernetes.io/os": linux
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 8000
          targetPort: 8000
      selector:
        k8s-app: dashboard-metrics-scraper
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: dashboard-metrics-scraper
      template:
        metadata:
          labels:
            k8s-app: dashboard-metrics-scraper
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
        spec:
          containers:
            - name: dashboard-metrics-scraper
              image: kubernetesui/metrics-scraper:v1.0.6
              ports:
                - containerPort: 8000
                  protocol: TCP
              livenessProbe:
                httpGet:
                  scheme: HTTP
                  path: /
                  port: 8000
                initialDelaySeconds: 30
                timeoutSeconds: 30
              volumeMounts:
                - mountPath: /tmp
                  name: tmp-volume
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "kubernetes.io/os": linux
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          volumes:
            - name: tmp-volume
              emptyDir: {}

执行安装命令

kubectl apply -f dashboard.yaml

等待状态变为running

kubectl get pod -A

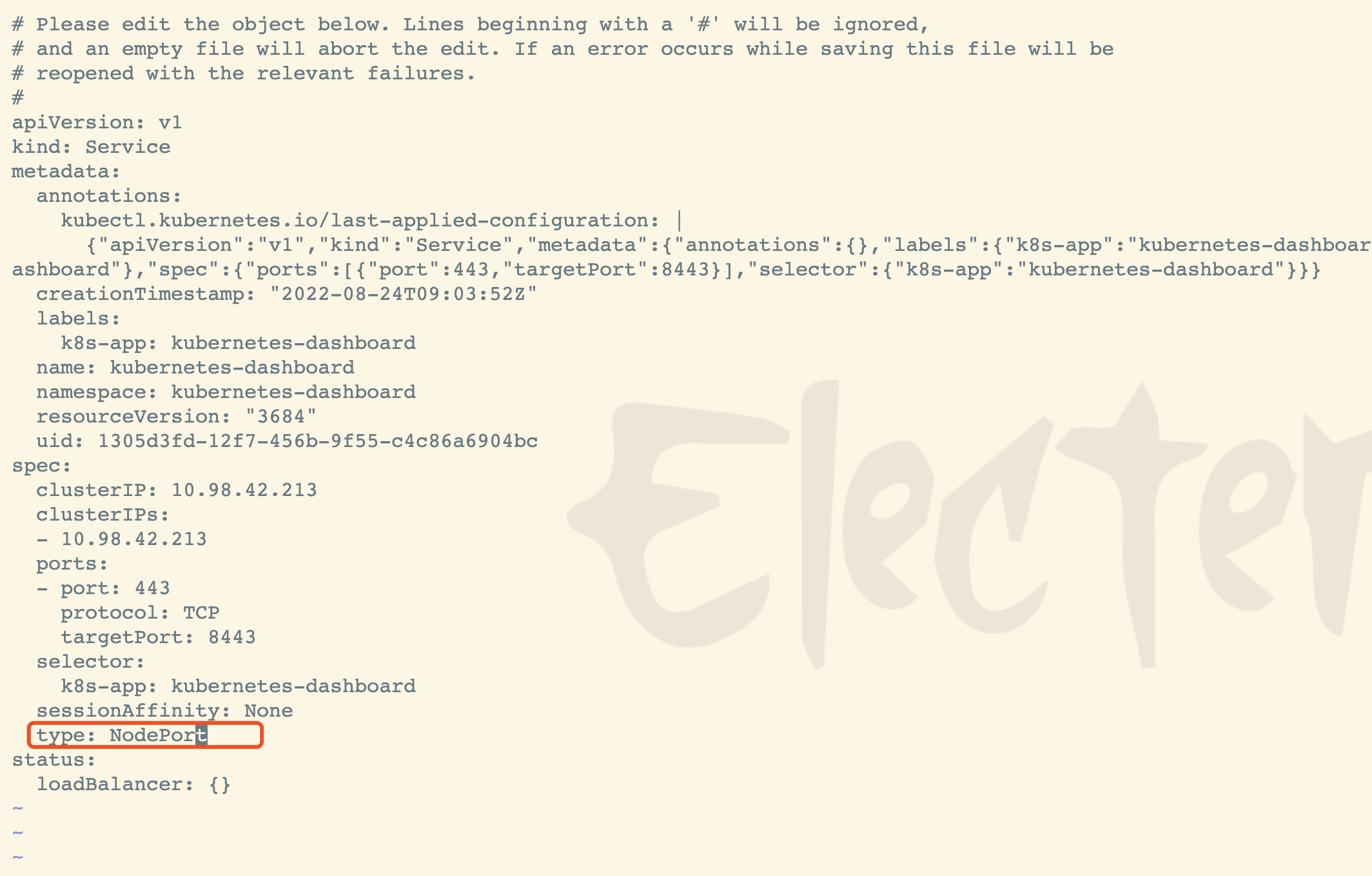

暴露端口

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

设置type

type: ClusterIP 改为 type: NodePort

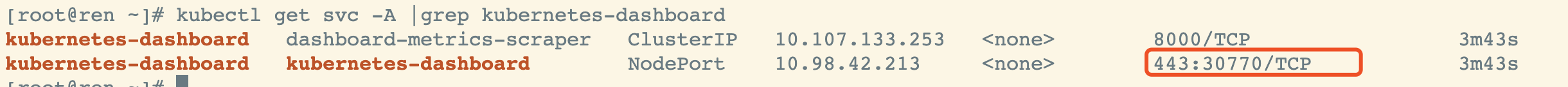

验证,确认端口映射,便于安全组放行

kubectl get svc -A |grep kubernetes-dashboard

30770

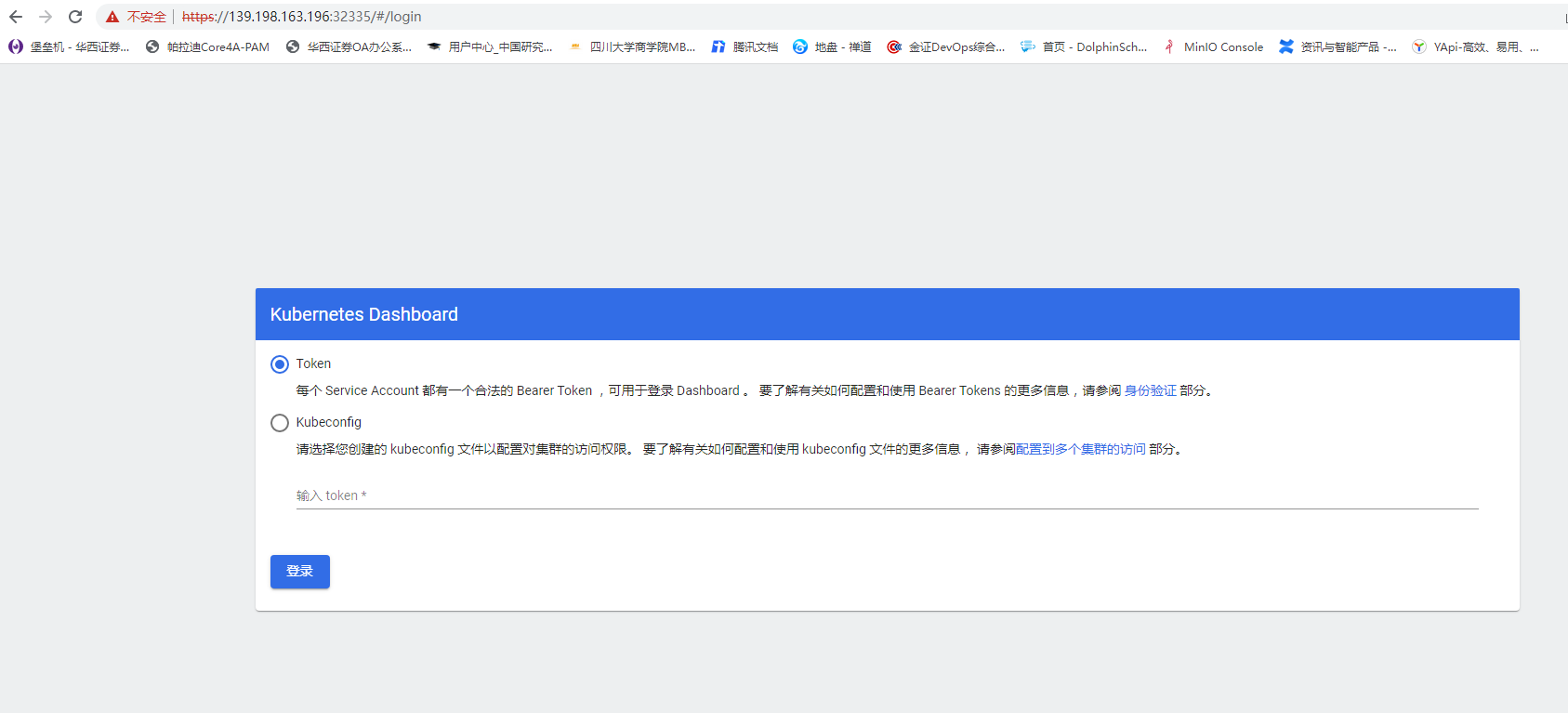

Open any node in a browser:

https://yan:30770
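You can also do the interactive kubectl edit step non-interactively with kubectl patch, then read back the assigned NodePort with a JSONPath query. `expose_dashboard` is a hypothetical wrapper. The service and namespace names come from the dashboard manifest above.

```bash
#!/usr/bin/env bash
# Sketch: switch the dashboard Service to NodePort and print its URL.
# expose_dashboard is an illustrative helper; $1 is any node's hostname or IP.
expose_dashboard() {
  local host="$1" ns=kubernetes-dashboard svc=kubernetes-dashboard port
  if [[ -z "$host" ]]; then
    echo "usage: expose_dashboard NODE_HOST" >&2
    return 2
  fi
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' > /dev/null || return 1
  port=$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "https://$host:$port"
}
```

For example, `expose_dashboard yan` prints the URL to open. The printed port still has to be allowed in the cloud security group.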

获取 Token

创建访问者账号

完整文件如下

dash.yaml

或手动编写

#创建访问账号,准备一个yaml文件; vi dash.yamlapiVersion: v1kind: ServiceAccountmetadata:name: admin-usernamespace: kubernetes-dashboard---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata:name: admin-userroleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: cluster-adminsubjects:- kind: ServiceAccountname: admin-usernamespace: kubernetes-dashboard

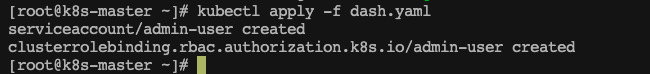

安装

kubectl apply -f dash.yaml

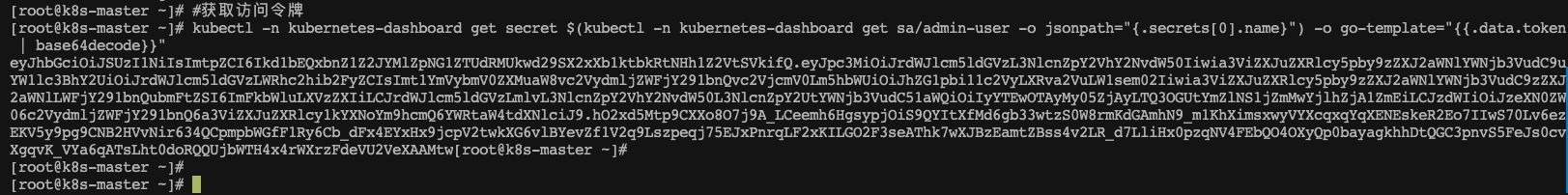

获取令牌

#获取访问令牌kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

令牌内容如下

eyJhbGciOiJSUzI1NiIsImtpZCI6Ik9ma3lPV0NSZGVHeUtsU0N6cU1mdGdsQTJaR0RMREp4Y2g4djV5SEN2WmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW1tbDd3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2MzZjOTkwNS0yNjA5LTQzMDItOGQzYS05ZDM1ZTNmM2UzODEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.drsFRwwSwjYTahaeTN8Kou3bBnbx_RIQLIPnsx7eyBHRn78XTTq3xOhAFtquWBUZv2LJhfpq4zf8z1zlMizD_9Ys7warApuj5SH6pJy-IDrk2pbOfwOX5M89tswCgG85qofXhSVqUmfGn5avkpq81wb4bT6TeQaY-5OHaWZGeHc7sUvrv9NR5wEUo5FSZmx3aStZum-lir5tp64MYvbosVhNkOMlEUc1-j5OhEr6UHcMPkhkFCCyU7Y8JZitpT6oHY32Kl51Yqj2eJMYeA5wtBk2yeXXZ00EvEwMlgdaPPWgzVGx8GMjbfA2ACfYx1bWaNmdEMLrQfAnkpVBVHcG3Q
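The token is a JWT, so you can decode its middle segment to check which service account it belongs to. Its sub claim should read system:serviceaccount:kubernetes-dashboard:admin-user. This sketch uses only coreutils. `jwt_payload` is a hypothetical helper, and it only decodes; it does not verify the signature.

```bash
#!/usr/bin/env bash
# Sketch: print the decoded payload (middle segment) of a JWT.
# jwt_payload is an illustrative helper; it does NOT verify the signature.
jwt_payload() {
  local payload
  # base64url -> base64: '-' becomes '+', '_' becomes '/'
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the '=' padding that base64url omits
  case $(( ${#payload} % 4 )) in
    2) payload+="==" ;;
    3) payload+="=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

Usage: `jwt_payload "$TOKEN"` prints the JSON claims, including iss, the namespace and the service account name.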