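What is frame rate?

FPS (frames per second) is a term from the imaging field: it is the number of frames delivered per second. Put simply, it is how many pictures an animation or video shows each second.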

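Why frame rate matters

To explain this, let's quote the Software Green Alliance App Experience Standard 3.0 (软件绿色联盟应用体验标准3.0), as interpreted by Huawei. The standard concerns the refresh frame rate of an app's UI, especially while scrolling. A low frame rate makes the app feel janky, so keeping the frame rate relatively high gives a smoother experience. In addition, the lower the refresh rate, the more the image flickers and judders, and the faster the eyes tire.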

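So what affects frame rate?

Flagship Android phones now reach a 120 Hz refresh rate, but do we really need a rate that high? The frame rate we need depends on the scenario. Films are watchable at 24 fps, games need at least 30 fps, and a truly smooth feel takes 60 fps or more. That is why Honor of Kings (王者荣耀) has to run at around 60 fps to avoid stutter. (Strictly speaking, Hz describes how often the display refreshes and fps describes how often new frames are produced, but the two are often used interchangeably.) Turning the question around: what actually determines the frame rate?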

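- The GPU: the higher the FPS, the more GPU power is needed

- Resolution: the lower the resolution, the easier it is to reach a high frame rate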

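A simple formula estimates the requirement: GPU processing capacity = resolution × frame rate. For example, a resolution of 1024×768 at 24 frames per second needs:

1024 × 768 × 24 = 18,874,368, i.e. a GPU that can process about 18.87 million pixels per second. Reaching 50 fps would take about 39 million pixels per second (1024 × 768 × 50 = 39,321,600).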
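To make the arithmetic concrete, here is the same simplified model as a tiny Java helper. `FrameMath` and `pixelsPerSecond` are hypothetical names used only for illustration. The model just multiplies resolution by frame rate and ignores overdraw, color depth and shading cost, so treat its output as a rough estimate rather than a GPU specification.

```java
// Hypothetical helper illustrating the simplified model from the text:
// required pixel throughput = width × height × frames per second.
// It ignores overdraw, color depth and shading cost, so it is only a rough estimate.
final class FrameMath {
    private FrameMath() {}

    static long pixelsPerSecond(int width, int height, int fps) {
        // Widen to long first so large resolutions at high frame rates cannot overflow int.
        return (long) width * height * fps;
    }
}
```

`FrameMath.pixelsPerSecond(1024, 768, 24)` gives 18,874,368 and `FrameMath.pixelsPerSecond(1024, 768, 50)` gives 39,321,600, matching the figures above.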

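What frame rate counts as normal?

The performance section of the Software Green Alliance App Experience Standard 3.0 specifies:

- Regular apps: frame rate ≥ 55 fps

- Games, maps and video apps: frame rate ≥ 25 fps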

Don't games need 60 fps to feel smooth? The Green Alliance's bar for games is surprisingly low, ha.

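How do you monitor an Android phone's frame rate?

A high frame rate matters a great deal for user experience, so how can we monitor a phone's frame rate in real time? The simplest way is in the phone's Developer options. Turn on Profile HWUI rendering and choose "On screen as bars", or send the output to adb shell with "In adb shell dumpsys gfxinfo". Details are here:

Analyze with Profile GPU Rendering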

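That is not really our focus this time, though. We want to approach it from the code side, so how do we do that? The answer is Choreographer, a class Google added in Android API 16. Before each frame is drawn, it calls doFrame through the FrameCallback interface and passes in the time at which the current frame started rendering, in nanoseconds. Here is the interface: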

```java
/**
 * Implement this interface to receive a callback when a new display frame is
 * being rendered. The callback is invoked on the {@link Looper} thread to
 * which the {@link Choreographer} is attached.
 */
public interface FrameCallback {
    /**
     * Called when a new display frame is being rendered.
     * <p>
     * This method provides the time in nanoseconds when the frame started being rendered.
     * The frame time provides a stable time base for synchronizing animations
     * and drawing. It should be used instead of {@link SystemClock#uptimeMillis()}
     * or {@link System#nanoTime()} for animations and drawing in the UI. Using the frame
     * time helps to reduce inter-frame jitter because the frame time is fixed at the time
     * the frame was scheduled to start, regardless of when the animations or drawing
     * callback actually runs. All callbacks that run as part of rendering a frame will
     * observe the same frame time so using the frame time also helps to synchronize effects
     * that are performed by different callbacks.
     * </p><p>
     * Please note that the framework already takes care to process animations and
     * drawing using the frame time as a stable time base. Most applications should
     * not need to use the frame time information directly.
     * </p>
     *
     * @param frameTimeNanos The time in nanoseconds when the frame started being rendered,
     * in the {@link System#nanoTime()} timebase. Divide this value by {@code 1000000}
     * to convert it to the {@link SystemClock#uptimeMillis()} time base.
     */
    public void doFrame(long frameTimeNanos);
}
```

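The comments confirm what we described above. Let's dig deeper: what actually drives the doFrame callback? After that, we'll work out how to compute the frame rate.

What drives Choreographer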

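Answering that requires understanding how Android renders, a pipeline Google has refined over many releases. The whole process is complex and relies on many frameworks. Among the low-level libraries you may know are Skia and OpenGL. Skia is a 2D graphics library, also used by Flutter, that can render on the CPU or, through its GPU backends, on the GPU. OpenGL (OpenGL ES on Android) is a GPU API that can also render 3D. What finally presents the graphics is a Surface: every element is drawn and rendered onto this "canvas". Each Window is associated with a Surface, and WindowManager manages these windows and passes their data to SurfaceFlinger. SurfaceFlinger accepts the buffers, composites them, and sends the result to the screen.

WindowManager provides SurfaceFlinger with buffers and window metadata, and SurfaceFlinger composites them through the Hardware Composer (HWC) and outputs the result to the display. A Surface is backed by more than one buffer, and these are the buffers mentioned above. Before Android 4.1 a double-buffering scheme was used; since Android 4.1, triple buffering is used.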

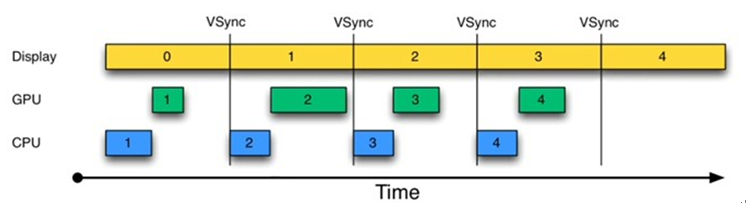

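And that is not all. At Google I/O 2012, Google announced Project Butter and switched it on in Android 4.1, introducing the VSYNC signal. What is that? As the figure shows, drawing one frame goes through CPU computation, then the GPU, and finally the display. VSYNC works a bit like a queue (a producer-consumer model): signals arrive one after another over time, and each one is consumed right away. The final buffer data reaches the display through SurfaceFlinger, and VSYNC's job along the way is to pace the rendering pipeline in an orderly way and reduce latency. On a 60 Hz display, VSYNC fires roughly every 16 ms. If a frame takes longer than that, the screen keeps showing the previous frame, which the user perceives as a dropped frame.

Why 16 ms? It comes from a simple calculation:

1000 ms / 60 fps ≈ 16.7 ms per frame. Under normal conditions on a 60 Hz display, Choreographer's doFrame is called about once every 16 ms, and the VSYNC signal is what drives it.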
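The frame budget at other rates follows from the same division. The sketch below mirrors the formula that Choreographer's constructor (quoted later in this article) uses, `mFrameIntervalNanos = (long)(1000000000 / getRefreshRate())`. `FrameBudget` itself is a hypothetical helper, and the refresh rate is passed in rather than read from a real display.

```java
// Hypothetical helper: the time budget for one frame at a given refresh rate.
// frameIntervalNanos mirrors the expression in Choreographer's constructor:
//   mFrameIntervalNanos = (long) (1000000000 / getRefreshRate());
final class FrameBudget {
    private FrameBudget() {}

    static long frameIntervalNanos(float refreshRateHz) {
        // int / float is evaluated in float, then truncated to long, as in the framework code.
        return (long) (1_000_000_000 / refreshRateHz);
    }

    static double frameIntervalMillis(float refreshRateHz) {
        return 1000.0 / refreshRateHz;
    }
}
```

For example, 24 fps leaves about 41.7 ms per frame, 60 Hz about 16.7 ms, and 120 Hz about 8.3 ms. The "16 ms" rule of thumb therefore only holds for 60 Hz screens.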

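Computing frame rate with Choreographer

Since doFrame is normally called once every 16 ms or so, we can build a calculation on that. A posted FrameCallback runs only once, so it has to re-post itself from doFrame to keep receiving frames. Here is some code that walks through the idea: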

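```java
import android.util.Log;
import android.view.Choreographer;

// FpsMonitor is only a hypothetical wrapper name for this snippet; call start() on the main thread.
public class FpsMonitor {
    // Start time of the current measurement window, in nanoseconds (0 = not started yet)
    private long mLastFrameTime;
    // Number of frames counted in the current window
    private int mFrameCount;

    public void start() {
        Choreographer.getInstance().postFrameCallback(new Choreographer.FrameCallback() {
            @Override
            public void doFrame(long frameTimeNanos) {
                // At the start of each window, record this frame's time as the window start
                if (mLastFrameTime == 0) {
                    mLastFrameTime = frameTimeNanos;
                }
                // Time since the window start, converted from nanoseconds to milliseconds
                float diff = (frameTimeNanos - mLastFrameTime) / 1000000.0f;
                // Output the frame rate once every 500 ms
                if (diff > 500) {
                    double fps = (((double) (mFrameCount * 1000L)) / diff);
                    mFrameCount = 0;
                    mLastFrameTime = 0;
                    Log.d("doFrame", "doFrame: " + fps);
                } else {
                    ++mFrameCount;
                }
                // A FrameCallback fires only once, so register again for the next vsync
                Choreographer.getInstance().postFrameCallback(this);
            }
        });
    }
}
```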
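The callback above only runs on a device, so here is the same windowed-counting idea restructured to run on synthetic timestamps in plain Java. `WindowedFpsCounter` is a hypothetical class, not an Android API. On a device you would call `onFrame(frameTimeNanos)` from `doFrame` and re-post the callback as shown above. Each window divides the number of frame intervals that elapsed by the elapsed time.

```java
// Hypothetical, Android-free version of the windowed FPS counter.
// Timestamps are passed in directly so the logic can be tested off-device.
final class WindowedFpsCounter {
    private final long windowNanos;
    private boolean started;       // false until the first frame opens a window
    private long windowStartNanos; // timestamp of the frame that opened the current window
    private int framesInWindow;    // frame intervals completed since windowStartNanos
    private double lastFps = Double.NaN;

    WindowedFpsCounter(long windowMillis) {
        this.windowNanos = windowMillis * 1_000_000L;
    }

    /** Feeds one frame timestamp; returns true when a window closes and lastFps() is updated. */
    boolean onFrame(long frameTimeNanos) {
        if (!started) {
            started = true;
            windowStartNanos = frameTimeNanos; // the first frame only opens the window
            return false;
        }
        framesInWindow++;
        long elapsedNanos = frameTimeNanos - windowStartNanos;
        if (elapsedNanos < windowNanos) {
            return false;
        }
        // frames per second = frame intervals * 1e9 / elapsed nanoseconds
        lastFps = framesInWindow * 1_000_000_000.0 / elapsedNanos;
        windowStartNanos = frameTimeNanos; // the closing frame also opens the next window
        framesInWindow = 0;
        return true;
    }

    double lastFps() {
        return lastFps;
    }
}
```

With a 500 ms window, timestamps 16,666,667 ns apart report roughly 60 fps once the first window closes. Timestamps twice as far apart report roughly 30 fps.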

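Why measure over 500 ms? You could just as well use one second; it's up to you. Either way, if doFrame is called about 60 times per second on a 60 Hz display, things are basically fine. Now that we know how to measure frame rate in code, let's analyze Matrix's frame rate detection code and see what it does.

Matrix frame rate detection code analysis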

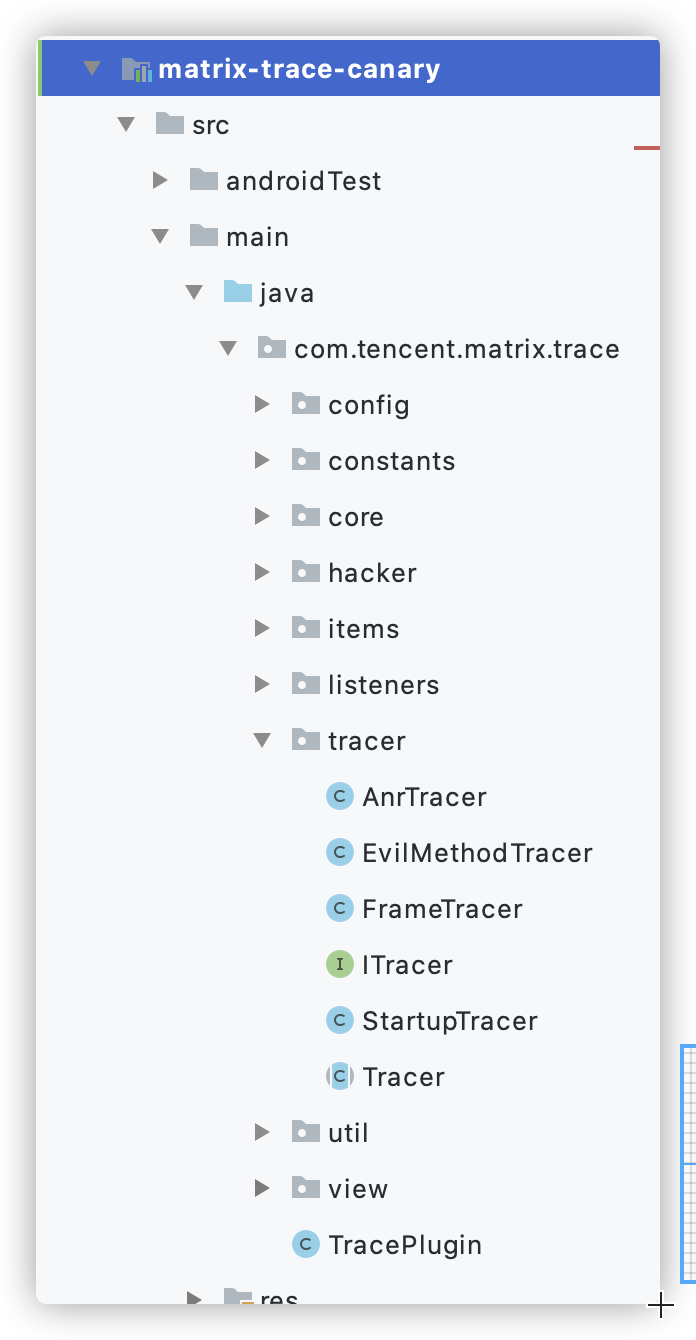

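The frame rate detection code must live in trace canary. Looking at the overall directory layout first, we find the abstract class ITracer.

It has four implementations: AnrTracer, EvilMethodTracer, FrameTracer and StartupTracer. The names alone tell us that FrameTracer is the one related to frame rate, so we open FrameTracer, search for "fps", and find the following code: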

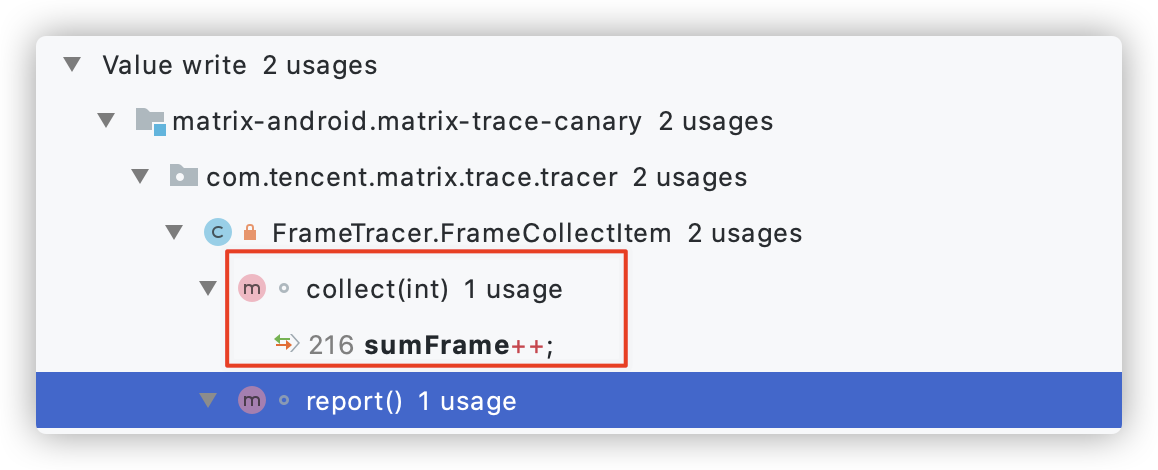

The private class FrameCollectItem inside FrameTracer:

```java
private class FrameCollectItem {
    long sumFrameCost;
    int sumFrame = 0;

    void report() {
        // The frame rate is computed here. Compare 1000.f * sumFrame / sumFrameCost with our earlier
        // double fps = (((double) (mFrameCount * 1000L)) / diff): it is essentially the same formula.
        // sumFrameCost is the accumulated time span (in milliseconds, judging by the 1000.f factor);
        // it could cover 500 ms or 1 s.
        float fps = Math.min(60.f, 1000.f * sumFrame / sumFrameCost);
        MatrixLog.i(TAG, "[report] FPS:%s %s", fps, toString());
        try {
            // Everything below builds the JSON report, so we'll skip it.
            TracePlugin plugin = Matrix.with().getPluginByClass(TracePlugin.class);
            if (null == plugin) {
                return;
            }
            JSONObject dropLevelObject = new JSONObject();
            dropLevelObject.put(DropStatus.DROPPED_FROZEN.name(), dropLevel[DropStatus.DROPPED_FROZEN.index]);
            dropLevelObject.put(DropStatus.DROPPED_HIGH.name(), dropLevel[DropStatus.DROPPED_HIGH.index]);
            dropLevelObject.put(DropStatus.DROPPED_MIDDLE.name(), dropLevel[DropStatus.DROPPED_MIDDLE.index]);
            dropLevelObject.put(DropStatus.DROPPED_NORMAL.name(), dropLevel[DropStatus.DROPPED_NORMAL.index]);
            dropLevelObject.put(DropStatus.DROPPED_BEST.name(), dropLevel[DropStatus.DROPPED_BEST.index]);

            JSONObject dropSumObject = new JSONObject();
            dropSumObject.put(DropStatus.DROPPED_FROZEN.name(), dropSum[DropStatus.DROPPED_FROZEN.index]);
            dropSumObject.put(DropStatus.DROPPED_HIGH.name(), dropSum[DropStatus.DROPPED_HIGH.index]);
            dropSumObject.put(DropStatus.DROPPED_MIDDLE.name(), dropSum[DropStatus.DROPPED_MIDDLE.index]);
            dropSumObject.put(DropStatus.DROPPED_NORMAL.name(), dropSum[DropStatus.DROPPED_NORMAL.index]);
            dropSumObject.put(DropStatus.DROPPED_BEST.name(), dropSum[DropStatus.DROPPED_BEST.index]);

            JSONObject resultObject = new JSONObject();
            resultObject = DeviceUtil.getDeviceInfo(resultObject, plugin.getApplication());
            resultObject.put(SharePluginInfo.ISSUE_SCENE, visibleScene);
            resultObject.put(SharePluginInfo.ISSUE_DROP_LEVEL, dropLevelObject);
            resultObject.put(SharePluginInfo.ISSUE_DROP_SUM, dropSumObject);
            resultObject.put(SharePluginInfo.ISSUE_FPS, fps);

            Issue issue = new Issue();
            issue.setTag(SharePluginInfo.TAG_PLUGIN_FPS);
            issue.setContent(resultObject);
            plugin.onDetectIssue(issue);
        } catch (JSONException e) {
            MatrixLog.e(TAG, "json error", e);
        } finally {
            sumFrame = 0;
            sumDroppedFrames = 0;
            sumFrameCost = 0;
        }
    }
}
```
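Stripped of the JSON plumbing, the report formula behaves as shown below. `FpsReport.fps` is a hypothetical wrapper around the expression copied from FrameCollectItem.report(). It assumes sumFrameCost is in milliseconds, which the `1000.f` factor implies. One consequence is worth noticing: because of `Math.min(60.f, ...)`, the reported value can never exceed 60, even on a 90 Hz or 120 Hz screen.

```java
// The fps expression from FrameCollectItem.report(), isolated for illustration.
// sumFrameCostMillis is assumed to be the accumulated time for sumFrame frames, in milliseconds.
final class FpsReport {
    private FpsReport() {}

    static float fps(int sumFrame, long sumFrameCostMillis) {
        if (sumFrameCostMillis <= 0) {
            return 0f; // guard added for this sketch to avoid dividing by zero
        }
        // Same expression as the quoted code, including the hard 60 fps cap.
        return Math.min(60.f, 1000.f * sumFrame / sumFrameCostMillis);
    }
}
```

30 frames in 500 ms reports 60, 20 frames in 500 ms reports 40, and 45 frames in 500 ms (90 fps) is still reported as 60.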

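Let me follow FrameCollectItem and find where sumFrame is updated, to see what happens. You can skim the next part, since it shows no detailed code. We already know that frame callbacks can be registered through Choreographer.FrameCallback. To quickly check how Matrix Trace implements frame rate measurement, we skip the details, go straight to the core logic, and only then paste code.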

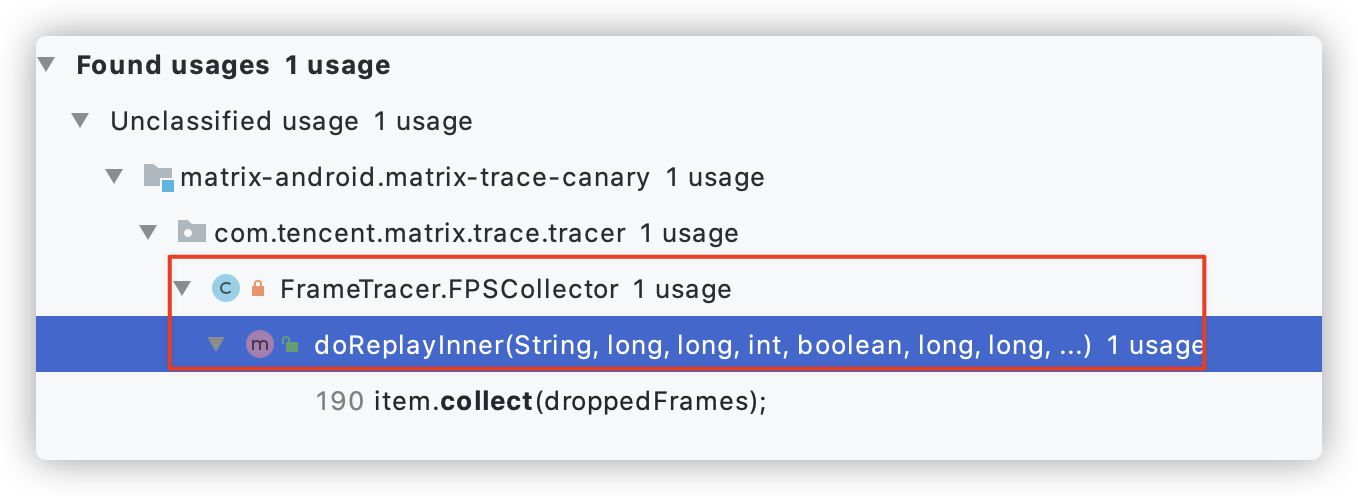

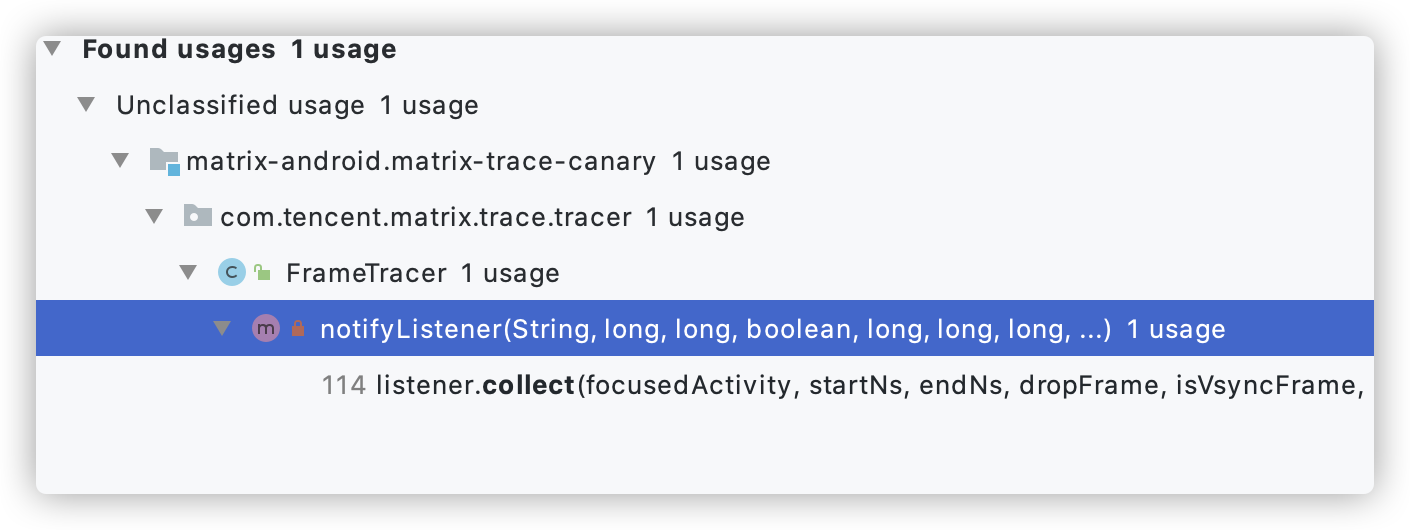

We find a function, collect, that performs the ++. Going further up, we see that FrameTracer has another inner class, FPSCollector.

Further up, the doReplay method calls doReplayInner.

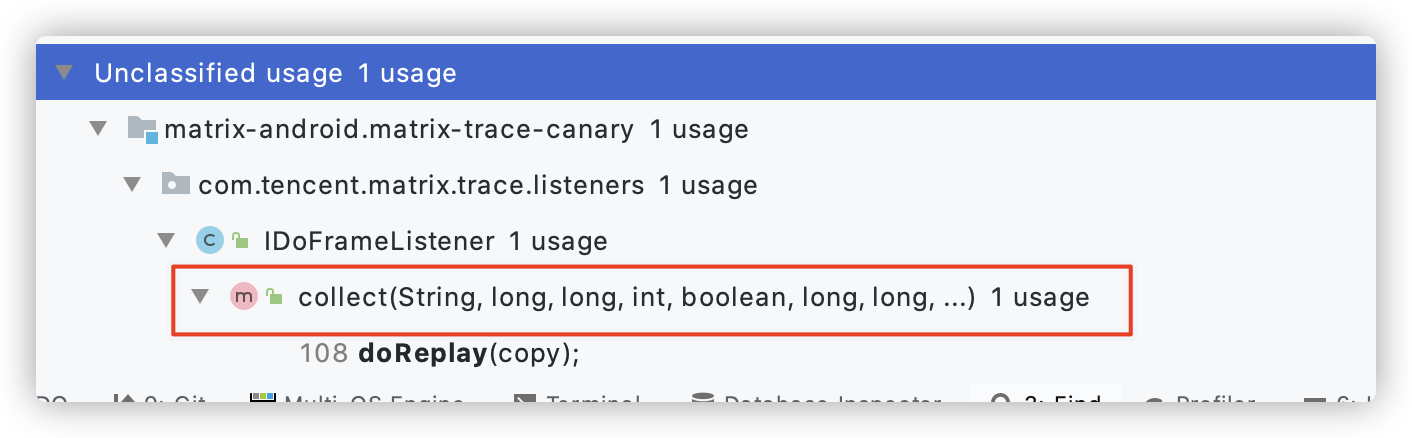

Continuing, we find that IDoFrameListener is what calls doReplay.

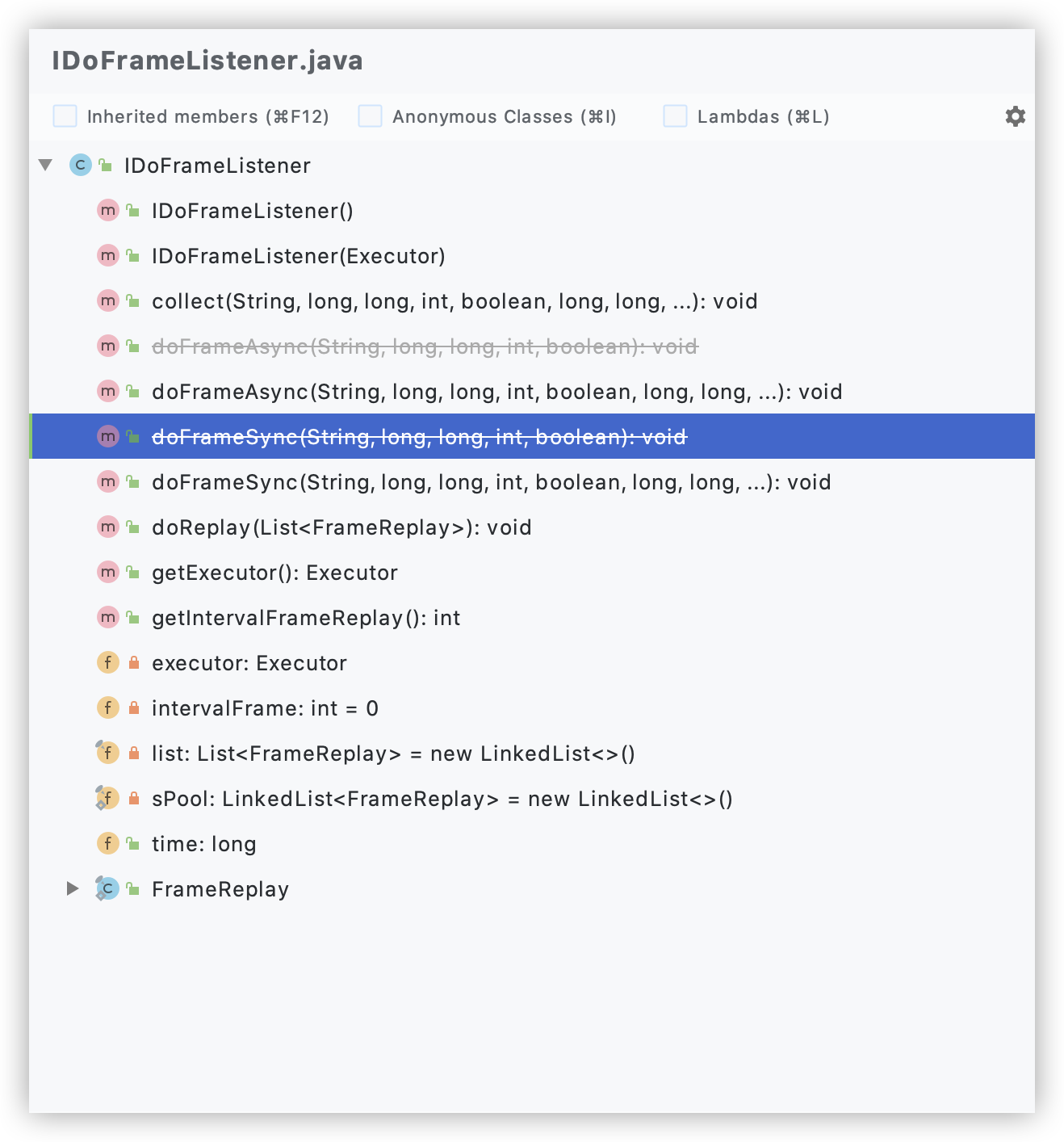

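FPSCollector extends IDoFrameListener, so let's look at IDoFrameListener next.

This differs from our earlier analysis. There is no sign of Choreographer.FrameCallback, although the calculation is similar. I'm not convinced, so I'll keep looking further up.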

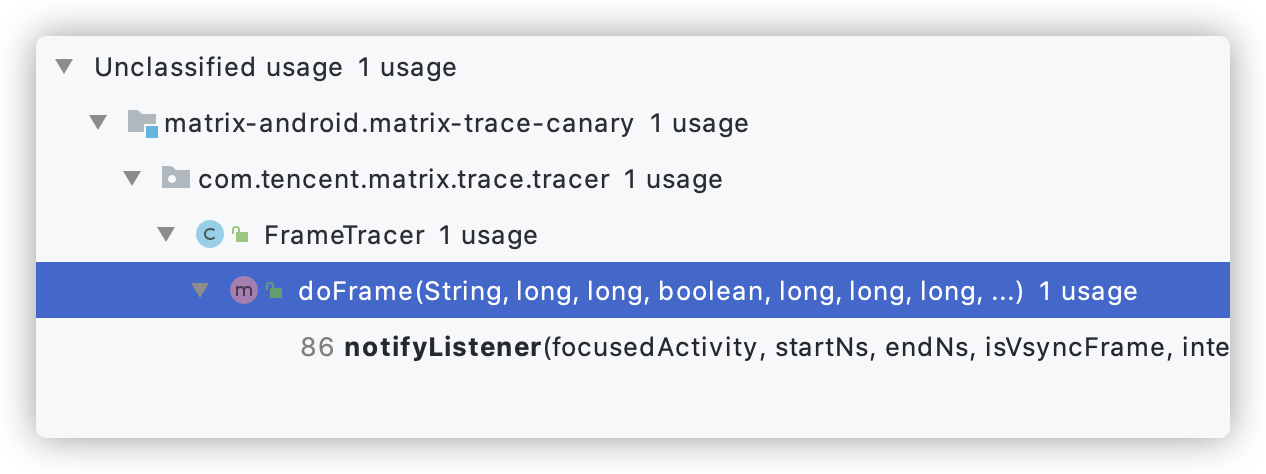

Here we find a doFrame function. It looks like FrameCallback, yet it isn't. Let's keep going.

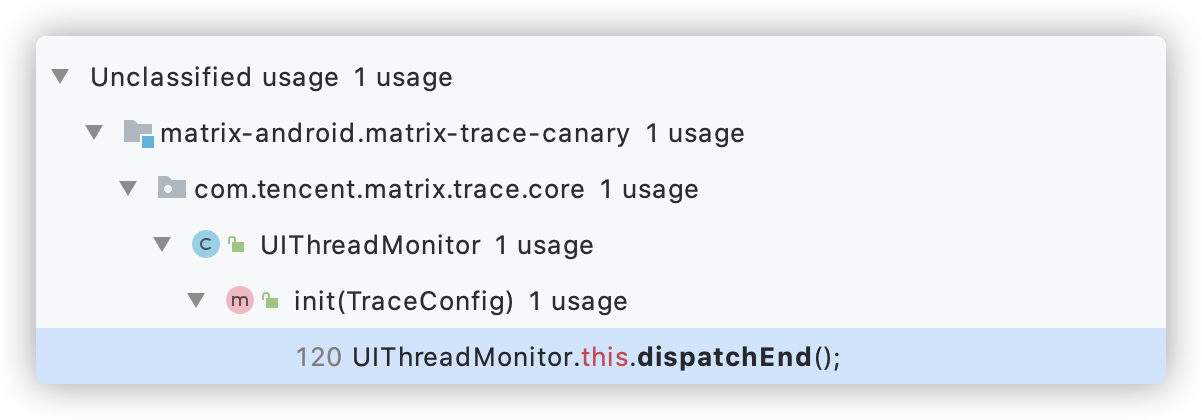

We find the UIThreadMonitor class. Keep going up.

It turns out to be called from the init function. Time to look at the code:

```java
LooperMonitor.register(new LooperMonitor.LooperDispatchListener() {
    @Override
    public boolean isValid() {
        return isAlive;
    }

    @Override
    public void dispatchStart() {
        super.dispatchStart();
        UIThreadMonitor.this.dispatchBegin();
    }

    @Override
    public void dispatchEnd() {
        super.dispatchEnd();
        UIThreadMonitor.this.dispatchEnd();
    }
});
```

What on earth is LooperMonitor, and how can it sense the frame rate? Let's see what it is:

```java
class LooperMonitor implements MessageQueue.IdleHandler
```

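Looking up MessageQueue.IdleHandler shows that it lets you specify an operation to run when the thread is idle: whenever the thread becomes idle, that operation executes. That is different from our earlier approach, isn't it? We never considered whether the thread was idle and computed the frame rate all the time. At this point I concluded that Matrix doesn't use FrameCallback at all and computes the frame rate some other way. Before saying what that is, let's trace LooperDispatchListener.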
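To make the IdleHandler contract concrete without a device, here is a toy single-threaded queue. It is NOT Android's MessageQueue. The names `ToyMessageQueue` and `runUntilIdle` are hypothetical, and the real queue also counts "the next message is only due in the future" as idle. What the toy does model is the documented contract of `MessageQueue.IdleHandler.queueIdle()`. The handler runs when there is no pending work. Returning false removes the handler, while returning true keeps it registered. On a device you would register a real handler with `Looper.myQueue().addIdleHandler(...)`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Toy model of the IdleHandler contract; this is NOT Android's MessageQueue implementation.
final class ToyMessageQueue {
    // Same shape as MessageQueue.IdleHandler: return true to stay registered, false to be removed.
    interface IdleHandler {
        boolean queueIdle();
    }

    private final ArrayDeque<Runnable> messages = new ArrayDeque<>();
    private final List<IdleHandler> idleHandlers = new ArrayList<>();

    void post(Runnable r) {
        messages.add(r);
    }

    void addIdleHandler(IdleHandler h) {
        idleHandlers.add(h);
    }

    /** Runs every pending message, then gives each idle handler one chance to run. */
    void runUntilIdle() {
        while (!messages.isEmpty()) {
            messages.poll().run();
        }
        // The queue is empty now: this is the "idle" moment.
        // Iterate over a copy so handlers can be removed safely.
        for (IdleHandler h : new ArrayList<>(idleHandlers)) {
            if (!h.queueIdle()) {
                idleHandlers.remove(h);
            }
        }
    }

    int idleHandlerCount() {
        return idleHandlers.size();
    }
}
```

A handler returning false runs once and is dropped. One returning true runs again every time the queue drains, which suits periodic, low-priority bookkeeping.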

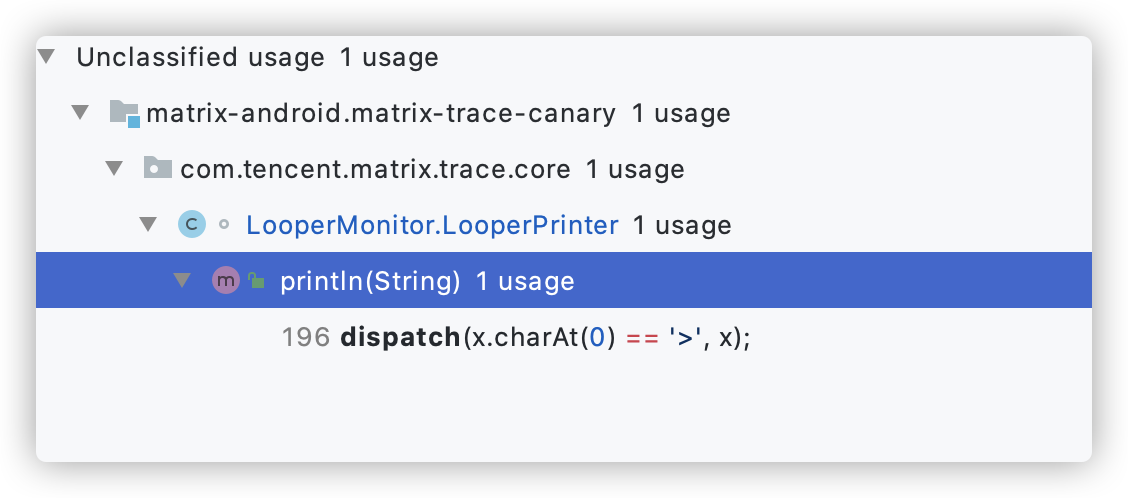

The dispatching turns out to be done by a LooperPrinter. Let's look at LooperPrinter:

```java
class LooperPrinter implements Printer

// Printer: something that prints?
public interface Printer {
    /**
     * Write a line of text to the output. There is no need to terminate
     * the given string with a newline.
     */
    void println(String x);
}
```

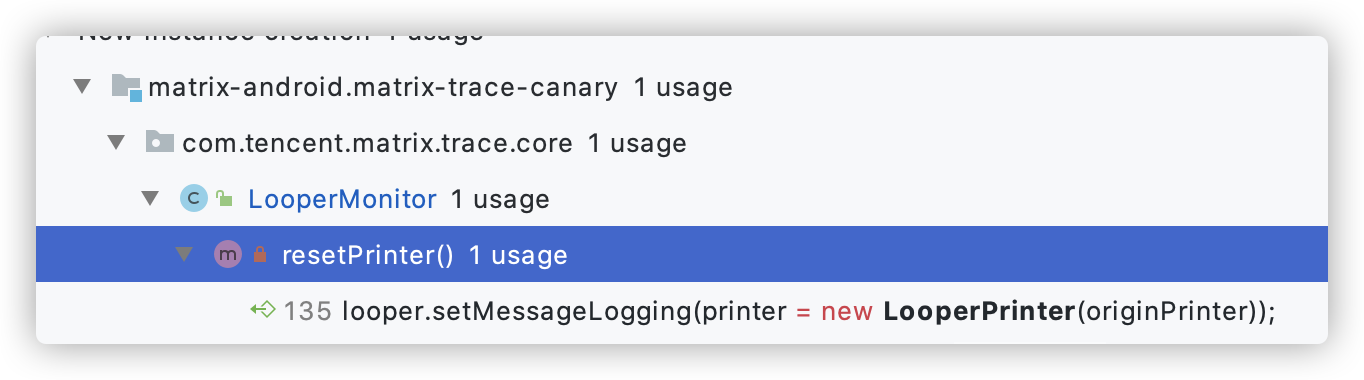

Let's see how the LooperPrinter object is created. We find the following reference.

Here is the code in detail:

```java
private synchronized void resetPrinter() {
    Printer originPrinter = null;
    try {
        if (!isReflectLoggingError) {
            originPrinter = ReflectUtils.get(looper.getClass(), "mLogging", looper);
            if (originPrinter == printer && null != printer) {
                return;
            }
        }
    } catch (Exception e) {
        isReflectLoggingError = true;
        Log.e(TAG, "[resetPrinter] %s", e);
    }

    if (null != printer) {
        MatrixLog.w(TAG, "maybe thread:%s printer[%s] was replace other[%s]!",
                looper.getThread().getName(), printer, originPrinter);
    }
    // setMessageLogging controls the log output of Looper.loop(). Installing a printer here
    // means the printing work is handed over to LooperPrinter.
    looper.setMessageLogging(printer = new LooperPrinter(originPrinter));
    if (null != originPrinter) {
        MatrixLog.i(TAG, "reset printer, originPrinter[%s] in %s", originPrinter, looper.getThread().getName());
    }
}
```
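The mechanism is easy to reproduce. `Looper.loop()` prints a line starting with `>>>>> Dispatching to` before it handles each message and a line starting with `<<<<< Finished to` afterwards, so a Printer only needs the first character to tell a start from an end. The sketch below is a hypothetical plain-Java illustration of that idea, not Matrix's LooperPrinter source. `Printer` here is a local stand-in with the same single `println` method as `android.util.Printer`, and the clock is injected so the timing can be tested off-device.

```java
import java.util.function.LongSupplier;

// Local stand-in with the same single method as android.util.Printer.
interface Printer {
    void println(String x);
}

// Hypothetical sketch: times each Looper message from the ">>>>>" / "<<<<<" log lines.
final class DispatchTimingPrinter implements Printer {
    interface Listener {
        void onDispatchEnd(long durationNanos);
    }

    private final Printer origin;     // printer installed before us, may be null
    private final LongSupplier clock; // e.g. System::nanoTime on a real device
    private final Listener listener;
    private long startNanos = -1;     // -1 means "no message in progress"

    DispatchTimingPrinter(Printer origin, LongSupplier clock, Listener listener) {
        this.origin = origin;
        this.clock = clock;
        this.listener = listener;
    }

    @Override
    public void println(String x) {
        if (origin != null) {
            origin.println(x); // keep whatever logging was installed before
        }
        if (x == null || x.isEmpty()) {
            return;
        }
        char c = x.charAt(0);
        if (c == '>') {
            startNanos = clock.getAsLong(); // ">>>>> Dispatching to ...": message starts
        } else if (c == '<' && startNanos >= 0) {
            listener.onDispatchEnd(clock.getAsLong() - startNanos); // "<<<<< Finished to ..."
            startNanos = -1;
        }
    }
}
```

On a device the class would implement `android.util.Printer` instead and be installed with `Looper.getMainLooper().setMessageLogging(...)`. The previous printer would be passed as `origin`, just as resetPrinter passes `originPrinter` to LooperPrinter. A message that takes longer than one frame interval (about 16.7 ms at 60 Hz) is a candidate for a dropped frame.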

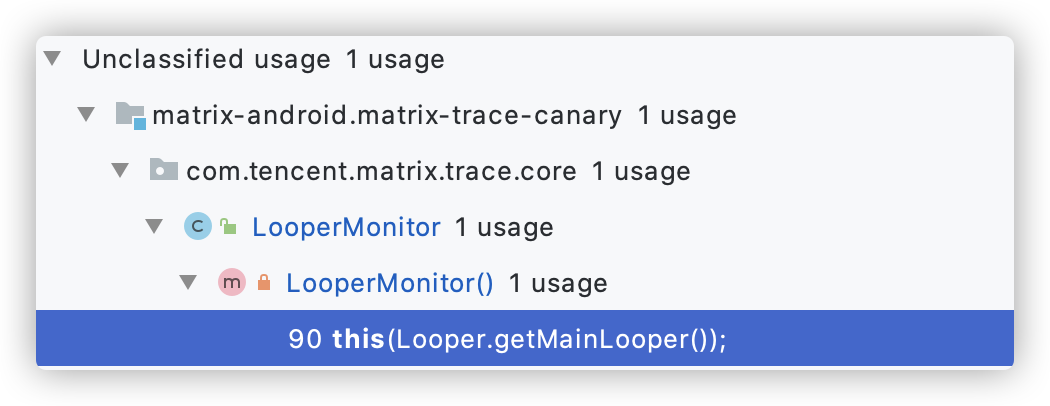

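Following the `looper` field used in resetPrinter, I found this:

Right, it's the main thread's Looper. As we all know, the main thread is responsible for refreshing the UI. So that's the trick. Matrix uses the logging mechanism that Looper provides, and it defers processing until the thread is idle, to monitor the frame rate and other metrics. It's a nice design and worth learning from. I also noticed a detail: Matrix does still use Choreographer to compute the frame rate, reading its fields through reflection, for example: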

```java
// Frame interval
frameIntervalNanos = ReflectUtils.reflectObject(choreographer, "mFrameIntervalNanos", Constants.DEFAULT_FRAME_DURATION);
// Receiver of the vsync signal
vsyncReceiver = ReflectUtils.reflectObject(choreographer, "mDisplayEventReceiver", null);
```

The frameTimeNanos passed to the doFrame callback above actually comes from vsyncReceiver.

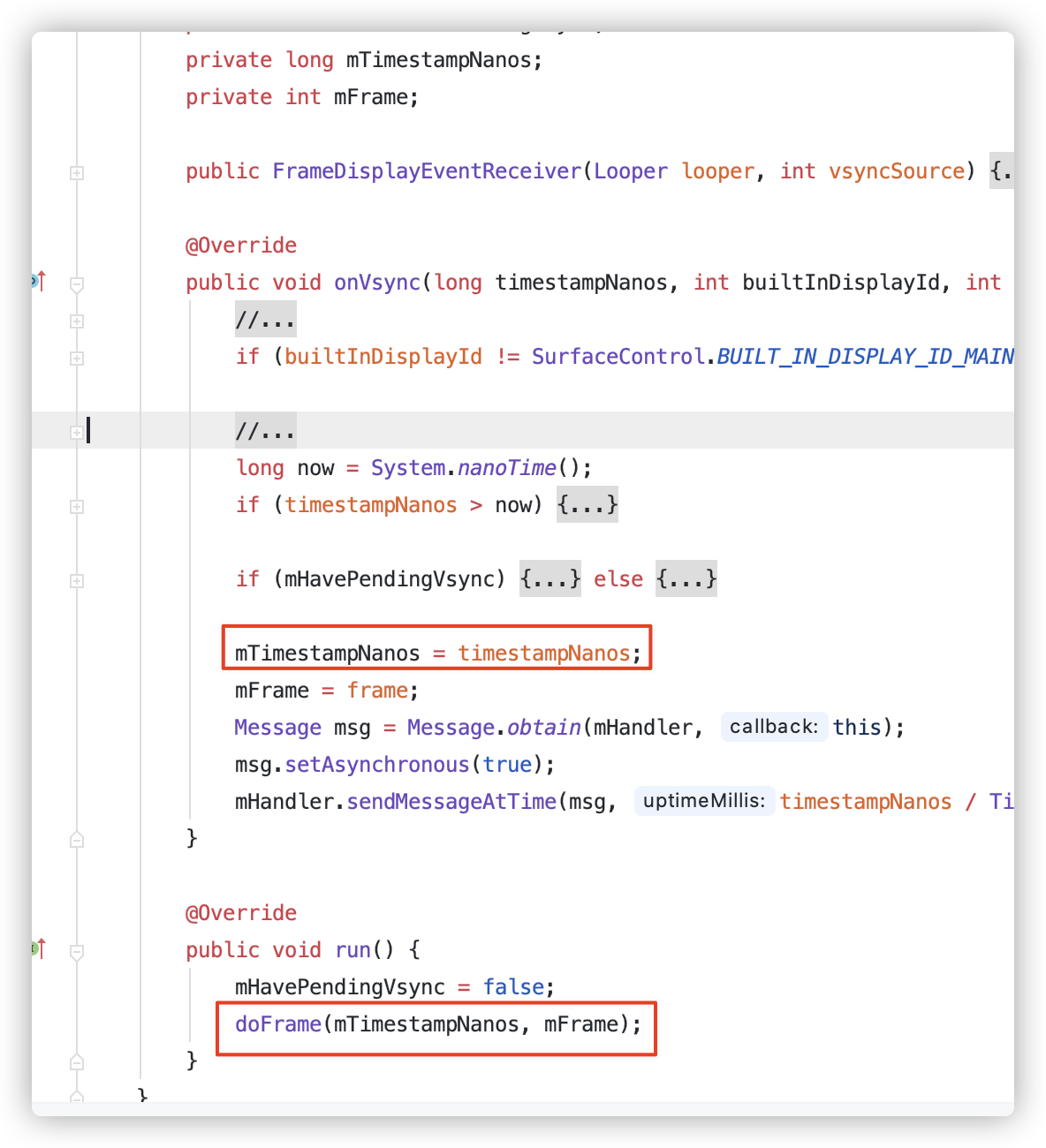

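A screenshot of the source: computing the frame rate clearly cannot do without Choreographer.

Which raises another question.

Why can Looper's logging mechanism be used to compute frame rate?

If you're wondering the same thing I was, a look at Choreographer's source will make it clear: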

```java
private static final ThreadLocal<Choreographer> sThreadInstance =
        new ThreadLocal<Choreographer>() {
    @Override
    protected Choreographer initialValue() {
        Looper looper = Looper.myLooper();
        if (looper == null) {
            throw new IllegalStateException("The current thread must have a looper!");
        }
        Choreographer choreographer = new Choreographer(looper, VSYNC_SOURCE_APP);
        if (looper == Looper.getMainLooper()) {
            mMainInstance = choreographer;
        }
        return choreographer;
    }
};
```
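The ThreadLocal pattern can be seen in isolation. `FakeChoreographer` below is a hypothetical stand-in that keeps only that pattern: one lazily created instance per thread. The real class also requires the calling thread to have a Looper and records the main-thread instance. `ThreadLocal.withInitial` is a Java 8 shorthand for overriding `initialValue()` as the Choreographer source does.

```java
// Hypothetical stand-in showing only the ThreadLocal "one instance per thread" pattern.
final class FakeChoreographer {
    // initialValue() runs lazily, on whichever thread first calls get().
    private static final ThreadLocal<FakeChoreographer> INSTANCE =
            ThreadLocal.withInitial(FakeChoreographer::new);

    // Records the thread on which this instance was created.
    final String ownerThread = Thread.currentThread().getName();

    private FakeChoreographer() {}

    static FakeChoreographer getInstance() {
        return INSTANCE.get();
    }
}
```

Calling getInstance() twice on the same thread returns the same object, while another thread gets its own instance.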

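From the sThreadInstance code above we conclude:

Choreographer is thread-local. A value held in a ThreadLocal is only accessible from the thread that created it, so each thread has its own Choreographer, and the main thread's Choreographer is mMainInstance. Let's look at another piece of code: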

```java
private Choreographer(Looper looper, int vsyncSource) {
    mLooper = looper;
    mHandler = new FrameHandler(looper);
    mDisplayEventReceiver = USE_VSYNC
            ? new FrameDisplayEventReceiver(looper, vsyncSource)
            : null;
    mLastFrameTimeNanos = Long.MIN_VALUE;

    mFrameIntervalNanos = (long)(1000000000 / getRefreshRate());

    mCallbackQueues = new CallbackQueue[CALLBACK_LAST + 1];
    for (int i = 0; i <= CALLBACK_LAST; i++) {
        mCallbackQueues[i] = new CallbackQueue();
    }
    // b/68769804: For low FPS experiments.
    setFPSDivisor(SystemProperties.getInt(ThreadedRenderer.DEBUG_FPS_DIVISOR, 1));
}
```

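This is Choreographer's constructor. It shows that a Choreographer is created from a Looper and that the two are one-to-one: a thread has one Looper and one Choreographer. Two members here matter most, FrameHandler and FrameDisplayEventReceiver. We don't yet know what they're for, so let's read on: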

```java
private final class FrameHandler extends Handler {
    public FrameHandler(Looper looper) {
        super(looper);
    }

    @Override
    public void handleMessage(Message msg) {
        switch (msg.what) {
            case MSG_DO_FRAME:
                doFrame(System.nanoTime(), 0);
                break;
            case MSG_DO_SCHEDULE_VSYNC:
                doScheduleVsync();
                break;
            case MSG_DO_SCHEDULE_CALLBACK:
                doScheduleCallback(msg.arg1);
                break;
        }
    }
}

void doFrame(long frameTimeNanos, int frame) {
    final long startNanos;
    synchronized (mLock) {
        if (!mFrameScheduled) {
            return; // no work to do
        }

        if (DEBUG_JANK && mDebugPrintNextFrameTimeDelta) {
            mDebugPrintNextFrameTimeDelta = false;
            Log.d(TAG, "Frame time delta: "
                    + ((frameTimeNanos - mLastFrameTimeNanos) * 0.000001f) + " ms");
        }

        long intendedFrameTimeNanos = frameTimeNanos;
        startNanos = System.nanoTime();
        final long jitterNanos = startNanos - frameTimeNanos;
        if (jitterNanos >= mFrameIntervalNanos) {
            final long skippedFrames = jitterNanos / mFrameIntervalNanos;
            if (skippedFrames >= SKIPPED_FRAME_WARNING_LIMIT) {
                Log.i(TAG, "Skipped " + skippedFrames + " frames! "
                        + "The application may be doing too much work on its main thread.");
            }
            final long lastFrameOffset = jitterNanos % mFrameIntervalNanos;
            if (DEBUG_JANK) {
                Log.d(TAG, "Missed vsync by " + (jitterNanos * 0.000001f) + " ms "
                        + "which is more than the frame interval of "
                        + (mFrameIntervalNanos * 0.000001f) + " ms! "
                        + "Skipping " + skippedFrames + " frames and setting frame "
                        + "time to " + (lastFrameOffset * 0.000001f) + " ms in the past.");
            }
            frameTimeNanos = startNanos - lastFrameOffset;
        }

        if (frameTimeNanos < mLastFrameTimeNanos) {
            if (DEBUG_JANK) {
                Log.d(TAG, "Frame time appears to be going backwards. May be due to a "
                        + "previously skipped frame. Waiting for next vsync.");
            }
            scheduleVsyncLocked();
            return;
        }

        if (mFPSDivisor > 1) {
            long timeSinceVsync = frameTimeNanos - mLastFrameTimeNanos;
            if (timeSinceVsync < (mFrameIntervalNanos * mFPSDivisor) && timeSinceVsync > 0) {
                scheduleVsyncLocked();
                return;
            }
        }

        mFrameInfo.setVsync(intendedFrameTimeNanos, frameTimeNanos);
        mFrameScheduled = false;
        mLastFrameTimeNanos = frameTimeNanos;
    }

    try {
        Trace.traceBegin(Trace.TRACE_TAG_VIEW, "Choreographer#doFrame");
        AnimationUtils.lockAnimationClock(frameTimeNanos / TimeUtils.NANOS_PER_MS);

        mFrameInfo.markInputHandlingStart();
        doCallbacks(Choreographer.CALLBACK_INPUT, frameTimeNanos);

        mFrameInfo.markAnimationsStart();
        doCallbacks(Choreographer.CALLBACK_ANIMATION, frameTimeNanos);

        mFrameInfo.markPerformTraversalsStart();
        doCallbacks(Choreographer.CALLBACK_TRAVERSAL, frameTimeNanos);

        doCallbacks(Choreographer.CALLBACK_COMMIT, frameTimeNanos);
    } finally {
        AnimationUtils.unlockAnimationClock();
        Trace.traceEnd(Trace.TRACE_TAG_VIEW);
    }

    if (DEBUG_FRAMES) {
        final long endNanos = System.nanoTime();
        Log.d(TAG, "Frame " + frame + ": Finished, took "
                + (endNanos - startNanos) * 0.000001f + " ms, latency "
                + (startNanos - frameTimeNanos) * 0.000001f + " ms.");
    }
}
```
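The jitter handling at the top of `doFrame` is plain arithmetic, so it can be checked in isolation. `JitterMath` is a hypothetical helper that copies two formulas from the source: `skippedFrames = jitterNanos / mFrameIntervalNanos` and `frameTimeNanos = startNanos - jitterNanos % mFrameIntervalNanos`. Note that the framework only logs the "Skipped N frames!" warning once the count reaches `SKIPPED_FRAME_WARNING_LIMIT`, so small skips pass silently.

```java
// Hypothetical helper reproducing the jitter arithmetic from Choreographer.doFrame().
final class JitterMath {
    private JitterMath() {}

    /** Frames treated as skipped when doFrame starts jitterNanos after the vsync time. */
    static long skippedFrames(long jitterNanos, long frameIntervalNanos) {
        return jitterNanos >= frameIntervalNanos ? jitterNanos / frameIntervalNanos : 0;
    }

    /** The frame time doFrame ends up using: snapped back to the most recent vsync boundary. */
    static long adjustedFrameTime(long frameTimeNanos, long startNanos, long frameIntervalNanos) {
        long jitterNanos = startNanos - frameTimeNanos;
        if (jitterNanos < frameIntervalNanos) {
            return frameTimeNanos; // started within one interval: keep the vsync timestamp
        }
        long lastFrameOffset = jitterNanos % frameIntervalNanos;
        return startNanos - lastFrameOffset;
    }
}
```

With a 16,666,667 ns interval and doFrame starting 60 ms late, 3 frames count as skipped. The frame time moves to the most recent vsync boundary, exactly 3 intervals after the original vsync.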

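The FrameHandler and doFrame source above shows that FrameHandler receives messages and handles them by calling Choreographer's doFrame. This is not the doFrame of the FrameCallback passed to postFrameCallback. However, searching the code shows that FrameCallback's doFrame is triggered from here, inside the doCallbacks function. I'll skip that detail. Instead, let's find out who sends FrameHandler its messages by looking at FrameDisplayEventReceiver: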

```java
private final class FrameDisplayEventReceiver extends DisplayEventReceiver
        implements Runnable {
    private boolean mHavePendingVsync;
    private long mTimestampNanos;
    private int mFrame;

    public FrameDisplayEventReceiver(Looper looper, int vsyncSource) {
        super(looper, vsyncSource);
    }

    @Override
    public void onVsync(long timestampNanos, int builtInDisplayId, int frame) {
        // Ignore vsync from secondary display.
        // This can be problematic because the call to scheduleVsync() is a one-shot.
        // We need to ensure that we will still receive the vsync from the primary
        // display which is the one we really care about. Ideally we should schedule
        // vsync for a particular display.
        // At this time Surface Flinger won't send us vsyncs for secondary displays
        // but that could change in the future so let's log a message to help us remember
        // that we need to fix this.
        if (builtInDisplayId != SurfaceControl.BUILT_IN_DISPLAY_ID_MAIN) {
            Log.d(TAG, "Received vsync from secondary display, but we don't support "
                    + "this case yet. Choreographer needs a way to explicitly request "
                    + "vsync for a specific display to ensure it doesn't lose track "
                    + "of its scheduled vsync.");
            scheduleVsync();
            return;
        }

        // Post the vsync event to the Handler.
        // The idea is to prevent incoming vsync events from completely starving
        // the message queue. If there are no messages in the queue with timestamps
        // earlier than the frame time, then the vsync event will be processed immediately.
        // Otherwise, messages that predate the vsync event will be handled first.
        long now = System.nanoTime();
        if (timestampNanos > now) {
            Log.w(TAG, "Frame time is " + ((timestampNanos - now) * 0.000001f)
                    + " ms in the future! Check that graphics HAL is generating vsync "
                    + "timestamps using the correct timebase.");
            timestampNanos = now;
        }

        if (mHavePendingVsync) {
            Log.w(TAG, "Already have a pending vsync event. There should only be "
                    + "one at a time.");
        } else {
            mHavePendingVsync = true;
        }

        mTimestampNanos = timestampNanos;
        mFrame = frame;
        Message msg = Message.obtain(mHandler, this);
        msg.setAsynchronous(true);
        mHandler.sendMessageAtTime(msg, timestampNanos / TimeUtils.NANOS_PER_MS);
    }

    @Override
    public void run() {
        mHavePendingVsync = false;
        doFrame(mTimestampNanos, mFrame);
    }
}
```

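Searching the source for onVsync shows that it is triggered by DisplayEventReceiver in the android.view package. DisplayEventReceiver is actually initialized in the C++ layer and listens for the VSYNC signal, which SurfaceFlinger delivers. So FrameDisplayEventReceiver receives the onVsync signal and then sends a message through mHandler, the FrameHandler above. You may suspect something is off here. Only the `case MSG_DO_FRAME` branch above calls doFrame, and no such message is created here; `mHandler.obtainMessage(MSG_DO_FRAME)` would do that, right? Look closely at `Message.obtain(mHandler, this)`, though. Here `this` is the FrameDisplayEventReceiver, which implements Runnable. A Message carrying a Runnable callback is dispatched by running that Runnable instead of calling handleMessage. So when FrameHandler receives the message, it executes FrameDisplayEventReceiver's run method, and run calls doFrame. Now everything fits together.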
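The step that makes this work is Handler's dispatch logic: a Message that carries a Runnable callback is dispatched by running that Runnable, and handleMessage() is not called. The toy classes below, `ToyMessage` and `ToyHandler` (both hypothetical), reproduce just that branch of `android.os.Handler.dispatchMessage()`. The real method also consults an optional `Handler.Callback` before `handleMessage()`, which is left out here.

```java
// Toy model of the relevant branch of android.os.Handler.dispatchMessage().
final class ToyMessage {
    int what;
    Runnable callback;

    // Like Message.obtain(handler, runnable): the message carries a Runnable callback.
    static ToyMessage obtain(Runnable callback) {
        ToyMessage m = new ToyMessage();
        m.callback = callback;
        return m;
    }
}

abstract class ToyHandler {
    final void dispatchMessage(ToyMessage msg) {
        if (msg.callback != null) {
            // e.g. FrameDisplayEventReceiver.run(), which calls doFrame(...)
            msg.callback.run();
        } else {
            // e.g. the switch on msg.what in FrameHandler.handleMessage()
            handleMessage(msg);
        }
    }

    abstract void handleMessage(ToyMessage msg);
}
```

A message built with a Runnable never reaches handleMessage(). That is why the vsync path needs no MSG_DO_FRAME message.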

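Now we can sum up why this works:

Choreographer's onVsync messages are consumed by the Looper of the thread the Choreographer belongs to. So by monitoring the messages handled by the main thread's Looper, we can monitor the frame rate too. That's the whole idea.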

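Summary

- Install a Printer on the main Looper to dispatch message start and end events

- Compute the frame rate from those dispatched events

- Use MessageQueue.IdleHandler to stay out of the thread's busy periods and process data when it is idle

That's about it. If you discover something new, or if I got something wrong, comments and corrections are welcome.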