Changing the mirror source

http://www.xiaochenboss.cn/article_detail/1587183252459

    channels:
      - https://mirrors.ustc.edu.cn/anaconda/pkgs/free/
      - defaults
    show_channel_urls: true
    channel_alias: https://mirrors.tuna.tsinghua.edu.cn/anaconda
    default_channels:
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/r
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/pro
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/msys2
    custom_channels:
      conda-forge: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      msys2: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      bioconda: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      menpo: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      pytorch: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      simpleitk: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud

Installing third-party modules through a mirror

    pip install tensorflow==1.15.0 -i https://pypi.douban.com/simple
    pip install numpy -i https://mirrors.aliyun.com/pypi/simple/

Installing a third-party module

    pip install pandas

Uninstalling a third-party module

    pip uninstall pandas

Checking an object's address with id()

    a = {1: 'one', 2: 'two', 3: 'three', 4: 'four'}
    b = a          # assignment
    c = a.copy()   # copy
    print('address of a:', id(a))
    print('address of b:', id(b))
    print('address of c:', id(c))

    # Output
    address of a: 6317872
    address of b: 6317872
    address of c: 6317952
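The difference between assignment and copy() can also be checked with `is` and by changing the dictionary afterwards. A minimal sketch reusing the same dictionary; the key 5 is added here only for illustration:

```python
a = {1: 'one', 2: 'two', 3: 'three', 4: 'four'}
b = a          # assignment: b is another name for the same dict
c = a.copy()   # shallow copy: c is a new dict holding the same items

same_object = b is a      # True, so id(a) == id(b)
copied_object = c is a    # False, c has its own id

a[5] = 'five'             # change the dict through the name a
seen_in_b = 5 in b        # True, b refers to the same dict
seen_in_c = 5 in c        # False, the copy was made before the change

print(same_object, copied_object, seen_in_b, seen_in_c)
```

Note that copy() is shallow: nested mutable values would still be shared between a and c.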

Listing built-in methods with dir()

Run this in a Python IDE to see the result.

    dir(dict)

    ['__class__', '__contains__', '__delattr__', '__delitem__', '__dir__', '__doc__', '__eq__', '__format__',
     '__ge__', '__getattribute__', '__getitem__', '__gt__', '__hash__', '__init__', '__init_subclass__',
     '__iter__', '__le__', '__len__', '__lt__', '__ne__', '__new__', '__reduce__', '__reduce_ex__',
     '__repr__', '__reversed__', '__setattr__', '__setitem__', '__sizeof__', '__str__', '__subclasshook__',
     'clear', 'copy', 'fromkeys', 'get', 'items', 'keys', 'pop', 'popitem', 'setdefault', 'update', 'values']
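Most of the names returned by dir() are double-underscore methods. One possible way to see only the everyday methods is to filter on the leading underscore; the variable name `public_methods` is chosen here for illustration:

```python
# Keep only names that do not start with an underscore
public_methods = [name for name in dir(dict) if not name.startswith('_')]
print(public_methods)
```

The same filter can be applied to any object, module, or class passed to dir().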

Inspecting the attributes of a class or instance with `__dict__`

    class CC:
        def setXY(self, x, y):
            self.x = x
            self.y = y

        def printXY(self):
            print(self.x, self.y)

    dd = CC()
    dd.setXY(12, 15)
    print(dd.__dict__)
    print(CC.__dict__)
    -------------------------
    {'x': 12, 'y': 15}
    {'__module__': '__main__', 'setXY': <function CC.setXY at 0x00A58658>, 'printXY': <function CC.printXY at 0x00A58610>, '__dict__': <attribute '__dict__' of 'CC' objects>, '__weakref__': <attribute '__weakref__' of 'CC' objects>, '__doc__': None}
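The output above shows that instance data and methods live in different dictionaries. A short sketch of the same idea using the built-in vars(), which returns an object's `__dict__`; the class is a trimmed copy of CC:

```python
class CC:
    def setXY(self, x, y):
        self.x = x
        self.y = y

dd = CC()
dd.setXY(12, 15)

instance_attrs = vars(dd)       # the same mapping as dd.__dict__
print(instance_attrs)           # {'x': 12, 'y': 15}
print('setXY' in vars(dd))      # False: methods are not stored on the instance
print('setXY' in vars(CC))      # True: they are stored on the class
```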

Reading the documentation of built-in methods with help()

Run this in a Python IDE to see the result.

    help(dict)

    class dict(object)
     |  dict() -> new empty dictionary
     |  dict(mapping) -> new dictionary initialized from a mapping object's
     |      (key, value) pairs
     |  dict(iterable) -> new dictionary initialized as if via:
     |      d = {}
     |      for k, v in iterable:
     |          d[k] = v
     |  dict(**kwargs) -> new dictionary initialized with the name=value pairs
     |      in the keyword argument list.  For example:  dict(one=1, two=2)
     |
     |  Methods defined here:
     |
     |  __contains__(self, key, /)
     |      True if the dictionary has the specified key, else False.
     |
     |  __delitem__(self, key, /)
     |      Delete self[key].
     |
     |  __eq__(self, value, /)
     |      Return self==value.
     |
     |  __ge__(self, value, /)
     |      Return self>=value.
     |
     |  __getattribute__(self, name, /)
     |      Return getattr(self, name).
     |
     |  __getitem__(...)
     |      x.__getitem__(y) <==> x[y]
     |
     |  __gt__(self, value, /)
     |      Return self>value.
     |
     |  __init__(self, /, *args, **kwargs)
     |      Initialize self.  See help(type(self)) for accurate signature.
     |
     |  __iter__(self, /)
     |      Implement iter(self).
     |
     |  __le__(self, value, /)
     |      Return self<=value.
     |
     |  __len__(self, /)
     |      Return len(self).
     |
     |  __lt__(self, value, /)
     |      Return self<value.
     |
     |  __ne__(self, value, /)
     |      Return self!=value.
     |
     |  __repr__(self, /)
     |      Return repr(self).
     |
     |  __reversed__(self, /)
     |      Return a reverse iterator over the dict keys.
     |
     |  __setitem__(self, key, value, /)
     |      Set self[key] to value.
     |
     |  __sizeof__(...)
     |      D.__sizeof__() -> size of D in memory, in bytes
     |
     |  clear(...)
     |      D.clear() -> None.  Remove all items from D.
     |
     |  copy(...)
     |      D.copy() -> a shallow copy of D
     |
     |  get(self, key, default=None, /)
     |      Return the value for key if key is in the dictionary, else default.
     |
     |  items(...)
     |      D.items() -> a set-like object providing a view on D's items
     |
     |  keys(...)
     |      D.keys() -> a set-like object providing a view on D's keys
     |
     |  pop(...)
     |      D.pop(k[,d]) -> v, remove specified key and return the corresponding value.
     |      If key is not found, d is returned if given, otherwise KeyError is raised
     |
     |  popitem(self, /)
     |      Remove and return a (key, value) pair as a 2-tuple.
     |
     |      Pairs are returned in LIFO (last-in, first-out) order.
     |      Raises KeyError if the dict is empty.
     |
     |  setdefault(self, key, default=None, /)
     |      Insert key with a value of default if key is not in the dictionary.
     |
     |      Return the value for key if key is in the dictionary, else default.
     |
     |  update(...)
     |      D.update([E, ]**F) -> None.  Update D from dict/iterable E and F.
     |      If E is present and has a .keys() method, then does:  for k in E: D[k] = E[k]
     |      If E is present and lacks a .keys() method, then does:  for k, v in E: D[k] = v
     |      In either case, this is followed by: for k in F:  D[k] = F[k]
     |
     |  values(...)
     |      D.values() -> an object providing a view on D's values
     |
     |  ----------------------------------------------------------------------
     |  Class methods defined here:
     |
     |  fromkeys(iterable, value=None, /) from builtins.type
     |      Create a new dictionary with keys from iterable and values set to value.
     |
     |  ----------------------------------------------------------------------
     |  Static methods defined here:
     |
     |  __new__(*args, **kwargs) from builtins.type
     |      Create and return a new object.  See help(type) for accurate signature.
     |
     |  ----------------------------------------------------------------------
     |  Data and other attributes defined here:
     |
     |  __hash__ = None
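help() prints to the console (or a pager), which is inconvenient when the text is needed inside a script. One possible approach, assuming the standard-library pydoc module is acceptable, is to render the same documentation to a string:

```python
import pydoc

# Render the help text for dict as plain text instead of printing it
text = pydoc.render_doc(dict, renderer=pydoc.plaintext)

# Show only the first few lines; the full text is as long as the help() output
for line in text.splitlines()[:5]:
    print(line)
```

The string can then be searched or written to a file like any other text.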

Exporting the environment's dependencies

    pip freeze > requirements.txt
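For a rough look at what pip freeze would list, the installed distributions can also be read from Python. This is only a sketch built on the standard-library importlib.metadata (Python 3.8+); it is not a replacement for pip freeze, which handles editable installs and other cases differently. The function name `freeze_lines` is made up for this example:

```python
from importlib.metadata import distributions

def freeze_lines():
    """Return 'name==version' strings for the distributions this interpreter can see."""
    lines = set()
    for dist in distributions():
        name = dist.metadata.get('Name')
        if name:  # skip entries whose metadata is missing or broken
            lines.add(f'{name}=={dist.version}')
    return sorted(lines, key=str.lower)

lines = freeze_lines()
print('\n'.join(lines))
```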

Installing an environment from the dependency list

    pip install -r requirements.txt
    pip install -r requirements.txt -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com

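Running a script in the background

Change the script's file extension to **.pyw**.

A script that has no window can be stopped from Task Manager.

Double-click the main.pyw file to start it.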

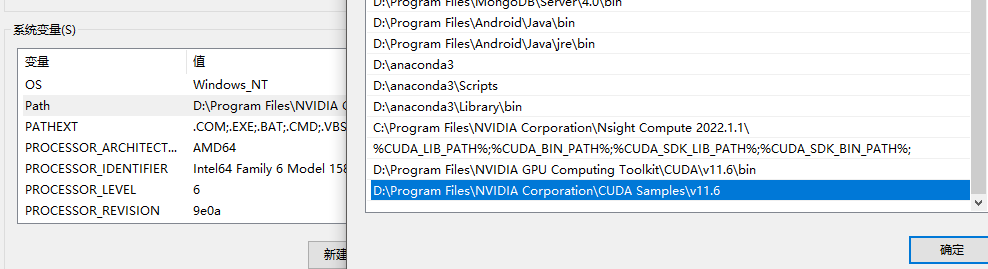
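A .pyw script has no console window, so print() output is not visible anywhere. A minimal sketch of what main.pyw could contain, assuming the work is a simple loop and that writing to a log file is acceptable; the function `run`, the logger name, and the file name main.log are all made up for this example:

```python
import logging
import time

def run(log_path='main.log', iterations=3, delay=1.0):
    """Do some periodic work and record progress in a log file."""
    logger = logging.getLogger('background_job')
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(log_path, encoding='utf-8')
    handler.setFormatter(logging.Formatter('%(asctime)s %(message)s'))
    logger.addHandler(handler)
    try:
        for i in range(iterations):
            logger.info('tick %d', i)   # stands in for the real work
            time.sleep(delay)
    finally:
        # Release the file so it can be read or deleted afterwards
        logger.removeHandler(handler)
        handler.close()
```

In main.pyw the file would end with a call to run(); the log file then shows whether the background script is still making progress.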

How to check whether CUDA and the GPU are installed

After installation, CUDA has to be added to the system environment variables, under Path:

%CUDA_LIB_PATH%;%CUDA_BIN_PATH%;%CUDA_SDK_LIB_PATH%;%CUDA_SDK_BIN_PATH%;

D:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\bin
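Whether the PATH entries took effect can be checked from Python as well. A small sketch using only the standard library; the helper name `cuda_dirs_on_path` is made up, and looking for nvcc assumes the CUDA toolkit's compiler was installed along with the runtime:

```python
import os
import shutil

def cuda_dirs_on_path(path_value=None):
    """Return the PATH entries that mention CUDA (case-insensitive)."""
    if path_value is None:
        path_value = os.environ.get('PATH', '')
    return [p for p in path_value.split(os.pathsep) if 'cuda' in p.lower()]

print(cuda_dirs_on_path())      # an empty list means no CUDA folder is on PATH
print(shutil.which('nvcc'))     # None means nvcc cannot be found through PATH
```

A terminal opened before the environment variables were edited keeps the old PATH, so the check should be run from a new terminal.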


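- cd '.\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite\'

In a terminal, change to this folder and run .\bandwidthTest.exe (the single-quoted path above is PowerShell syntax; cmd needs double quotes).

Check the last line of the output: Result = PASS means the configuration succeeded.

- cd '.\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite\'

In a terminal, change to this folder and run .\deviceQuery.exe

Check the last line of the output: Result = PASS means the configuration succeeded.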

    D:\> cd '.\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite\'
    D:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite> .\bandwidthTest.exe
    [CUDA Bandwidth Test] - Starting...
    Running on...

     Device 0: NVIDIA GeForce GTX 1050
     Quick Mode

     Host to Device Bandwidth, 1 Device(s)
     PINNED Memory Transfers
       Transfer Size (Bytes)        Bandwidth(MB/s)
       33554432                     12520.5

     Device to Host Bandwidth, 1 Device(s)
     PINNED Memory Transfers
       Transfer Size (Bytes)        Bandwidth(MB/s)
       33554432                     12764.8

     Device to Device Bandwidth, 1 Device(s)
     PINNED Memory Transfers
       Transfer Size (Bytes)        Bandwidth(MB/s)
       33554432                     71500.4

    Result = PASS

    D:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite> .\deviceQuery.exe
    D:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6\extras\demo_suite\deviceQuery.exe Starting...

     CUDA Device Query (Runtime API) version (CUDART static linking)

    Detected 1 CUDA Capable device(s)

    Device 0: "NVIDIA GeForce GTX 1050"
      CUDA Driver Version / Runtime Version          11.6 / 11.6
      CUDA Capability Major/Minor version number:    6.1
      Total amount of global memory:                 3072 MBytes (3221028864 bytes)
      ( 6) Multiprocessors, (128) CUDA Cores/MP:     768 CUDA Cores
      GPU Max Clock rate:                            1442 MHz (1.44 GHz)
      Memory Clock rate:                             3504 Mhz
      Memory Bus Width:                              96-bit
      L2 Cache Size:                                 786432 bytes
      Maximum Texture Dimension Size (x,y,z)         1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)
      Maximum Layered 1D Texture Size, (num) layers  1D=(32768), 2048 layers
      Maximum Layered 2D Texture Size, (num) layers  2D=(32768, 32768), 2048 layers
      Total amount of constant memory:               zu bytes
      Total amount of shared memory per block:       zu bytes
      Total number of registers available per block: 65536
      Warp size:                                     32
      Maximum number of threads per multiprocessor:  2048
      Maximum number of threads per block:           1024
      Max dimension size of a thread block (x,y,z): (1024, 1024, 64)
      Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)
      Maximum memory pitch:                          zu bytes
      Texture alignment:                             zu bytes
      Concurrent copy and kernel execution:          Yes with 5 copy engine(s)
      Run time limit on kernels:                     Yes
      Integrated GPU sharing Host Memory:            No
      Support host page-locked memory mapping:       Yes
      Alignment requirement for Surfaces:            Yes
      Device has ECC support:                        Disabled
      CUDA Device Driver Mode (TCC or WDDM):         WDDM (Windows Display Driver Model)
      Device supports Unified Addressing (UVA):      Yes
      Device supports Compute Preemption:            Yes
      Supports Cooperative Kernel Launch:            Yes
      Supports MultiDevice Co-op Kernel Launch:      No
      Device PCI Domain ID / Bus ID / location ID:   0 / 1 / 0
      Compute Mode:
         < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

    deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 11.6, CUDA Runtime Version = 11.6, NumDevs = 1, Device0 = NVIDIA GeForce GTX 1050
    Result = PASS
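The "last line is Result = PASS" check can be automated. A sketch under the assumption that the demo executables write their report to standard output as shown above; the helper names `demo_passed` and `run_demo` are made up, and the path passed to `run_demo` has to be adjusted to the local CUDA install:

```python
import subprocess

def demo_passed(output):
    """Return True if the last non-empty line of the output is 'Result = PASS'."""
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    return bool(lines) and lines[-1] == 'Result = PASS'

def run_demo(exe_path):
    """Run one demo_suite executable and report whether it passed."""
    completed = subprocess.run([exe_path], capture_output=True, text=True)
    return demo_passed(completed.stdout)

# Example with captured text instead of a real run
sample = 'Device 0: NVIDIA GeForce GTX 1050\nResult = PASS\n'
print(demo_passed(sample))   # True
```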

VS Code vs. PyCharm

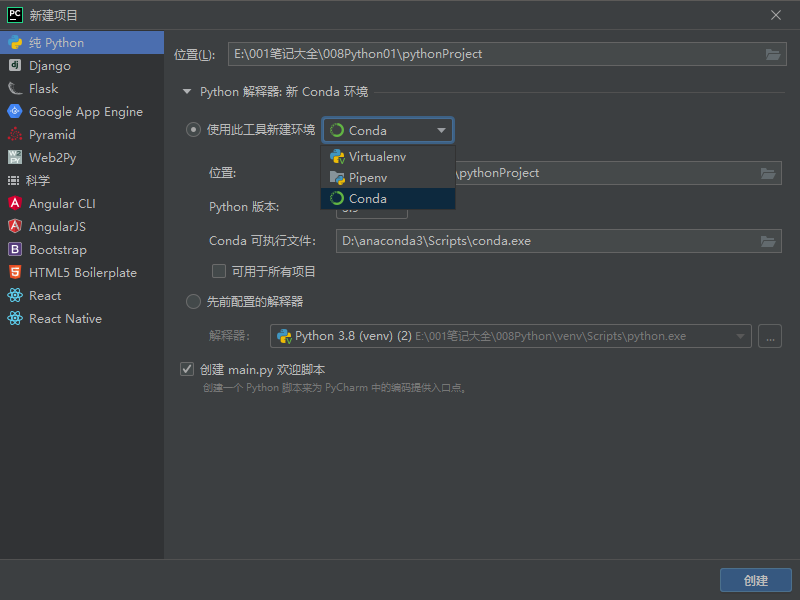

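PyCharm editor

Simple to configure, with no need to manage the environment yourself: the IDE has built-in project environment management and creates a fully configured project in one click.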