PyTorch 中通道在最后的内存格式(beta)

原文:https://pytorch.org/tutorials/intermediate/memory_format_tutorial.html

作者: Vitaly Fedyunin

什么是通道在最后

通道在最后的内存格式是在保留内存尺寸的顺序中对 NCHW 张量进行排序的另一种方法。 通道最后一个张量的排序方式使通道成为最密集的维度(又称为每像素存储图像)。

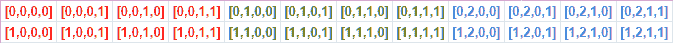

例如,NCHW 张量的经典(连续)存储(在我们的示例中是具有 3 个颜色通道的两个2x2图像)如下所示:

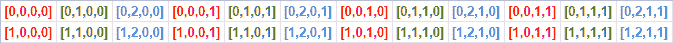

通道最后的存储格式对数据的排序方式不同:

Pytorch 通过使用现有的跨步结构支持内存格式(并提供与现有模型(包括 eager,JIT 和 TorchScript)的向后兼容性)。 例如,通道在最后的格式中的10x3x16x16批量的步幅等于(768, 1, 48, 3)。

通道最后一个存储格式仅适用于 4D NCWH 张量。

import torchN, C, H, W = 10, 3, 32, 32

内存格式 API

这是在连续和通道最后存储格式之间转换张量的方法。

经典 PyTorch 连续张量

x = torch.empty(N, C, H, W)print(x.stride()) # Ouputs: (3072, 1024, 32, 1)

出:

(3072, 1024, 32, 1)

转换运算符

x = x.contiguous(memory_format=torch.channels_last)print(x.shape) # Outputs: (10, 3, 32, 32) as dimensions order preservedprint(x.stride()) # Outputs: (3072, 1, 96, 3)

出:

torch.Size([10, 3, 32, 32])(3072, 1, 96, 3)

返回连续

x = x.contiguous(memory_format=torch.contiguous_format)print(x.stride()) # Outputs: (3072, 1024, 32, 1)

出:

(3072, 1024, 32, 1)

替代选择

x = x.to(memory_format=torch.channels_last)print(x.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

格式检查

print(x.is_contiguous(memory_format=torch.channels_last)) # Ouputs: True

出:

True

最后创建为渠道

x = torch.empty(N, C, H, W, memory_format=torch.channels_last)print(x.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

clone保留内存格式

y = x.clone()print(y.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

to,cuda,float…保留内存格式

if torch.cuda.is_available():y = x.cuda()print(y.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

empty_like和*_like运算符保留内存格式

y = torch.empty_like(x)print(y.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

点向运算符保留内存格式

z = x + yprint(z.stride()) # Ouputs: (3072, 1, 96, 3)

出:

(3072, 1, 96, 3)

转换,Batchnorm模块支持通道在最后(仅适用于CudNN >= 7.6)

if torch.backends.cudnn.version() >= 7603:input = torch.randint(1, 10, (2, 8, 4, 4), dtype=torch.float32, device="cuda", requires_grad=True)model = torch.nn.Conv2d(8, 4, 3).cuda().float()input = input.contiguous(memory_format=torch.channels_last)model = model.to(memory_format=torch.channels_last) # Module parameters need to be Channels Lastout = model(input)print(out.is_contiguous(memory_format=torch.channels_last)) # Ouputs: True

出:

True

性能提升

在具有张量核心支持的 Nvidia 硬件上观察到了最大的性能提升。 在运行 Nvidia 提供的 AMP(自动混合精度)训练脚本时,我们可以将性能提高 22% 以上。

python main_amp.py -a resnet50 --b 200 --workers 16 --opt-level O2 ./data

# opt_level = O2# keep_batchnorm_fp32 = None <class 'NoneType'># loss_scale = None <class 'NoneType'># CUDNN VERSION: 7603# => creating model 'resnet50'# Selected optimization level O2: FP16 training with FP32 batchnorm and FP32 master weights.# Defaults for this optimization level are:# enabled : True# opt_level : O2# cast_model_type : torch.float16# patch_torch_functions : False# keep_batchnorm_fp32 : True# master_weights : True# loss_scale : dynamic# Processing user overrides (additional kwargs that are not None)...# After processing overrides, optimization options are:# enabled : True# opt_level : O2# cast_model_type : torch.float16# patch_torch_functions : False# keep_batchnorm_fp32 : True# master_weights : True# loss_scale : dynamic# Epoch: [0][10/125] Time 0.866 (0.866) Speed 230.949 (230.949) Loss 0.6735125184 (0.6735) Prec@1 61.000 (61.000) Prec@5 100.000 (100.000)# Epoch: [0][20/125] Time 0.259 (0.562) Speed 773.481 (355.693) Loss 0.6968704462 (0.6852) Prec@1 55.000 (58.000) Prec@5 100.000 (100.000)# Epoch: [0][30/125] Time 0.258 (0.461) Speed 775.089 (433.965) Loss 0.7877287269 (0.7194) Prec@1 51.500 (55.833) Prec@5 100.000 (100.000)# Epoch: [0][40/125] Time 0.259 (0.410) Speed 771.710 (487.281) Loss 0.8285319805 (0.7467) Prec@1 48.500 (54.000) Prec@5 100.000 (100.000)# Epoch: [0][50/125] Time 0.260 (0.380) Speed 770.090 (525.908) Loss 0.7370464802 (0.7447) Prec@1 56.500 (54.500) Prec@5 100.000 (100.000)# Epoch: [0][60/125] Time 0.258 (0.360) Speed 775.623 (555.728) Loss 0.7592862844 (0.7472) Prec@1 51.000 (53.917) Prec@5 100.000 (100.000)# Epoch: [0][70/125] Time 0.258 (0.345) Speed 774.746 (579.115) Loss 1.9698858261 (0.9218) Prec@1 49.500 (53.286) Prec@5 100.000 (100.000)# Epoch: [0][80/125] Time 0.260 (0.335) Speed 770.324 (597.659) Loss 2.2505953312 (1.0879) Prec@1 50.500 (52.938) Prec@5 100.000 (100.000)

传递--channels-last true允许以通道在最后的格式运行模型,观察到 22% 的表现增益。

python main_amp.py -a resnet50 --b 200 --workers 16 --opt-level O2 --channels-last true ./data

# opt_level = O2# keep_batchnorm_fp32 = None <class 'NoneType'># loss_scale = None <class 'NoneType'>## CUDNN VERSION: 7603## => creating model 'resnet50'# Selected optimization level O2: FP16 training with FP32 batchnorm and FP32 master weights.## Defaults for this optimization level are:# enabled : True# opt_level : O2# cast_model_type : torch.float16# patch_torch_functions : False# keep_batchnorm_fp32 : True# master_weights : True# loss_scale : dynamic# Processing user overrides (additional kwargs that are not None)...# After processing overrides, optimization options are:# enabled : True# opt_level : O2# cast_model_type : torch.float16# patch_torch_functions : False# keep_batchnorm_fp32 : True# master_weights : True# loss_scale : dynamic## Epoch: [0][10/125] Time 0.767 (0.767) Speed 260.785 (260.785) Loss 0.7579724789 (0.7580) Prec@1 53.500 (53.500) Prec@5 100.000 (100.000)# Epoch: [0][20/125] Time 0.198 (0.482) Speed 1012.135 (414.716) Loss 0.7007197738 (0.7293) Prec@1 49.000 (51.250) Prec@5 100.000 (100.000)# Epoch: [0][30/125] Time 0.198 (0.387) Speed 1010.977 (516.198) Loss 0.7113101482 (0.7233) Prec@1 55.500 (52.667) Prec@5 100.000 (100.000)# Epoch: [0][40/125] Time 0.197 (0.340) Speed 1013.023 (588.333) Loss 0.8943189979 (0.7661) Prec@1 54.000 (53.000) Prec@5 100.000 (100.000)# Epoch: [0][50/125] Time 0.198 (0.312) Speed 1010.541 (641.977) Loss 1.7113249302 (0.9551) Prec@1 51.000 (52.600) Prec@5 100.000 (100.000)# Epoch: [0][60/125] Time 0.198 (0.293) Speed 1011.163 (683.574) Loss 5.8537774086 (1.7716) Prec@1 50.500 (52.250) Prec@5 100.000 (100.000)# Epoch: [0][70/125] Time 0.198 (0.279) Speed 1011.453 (716.767) Loss 5.7595844269 (2.3413) Prec@1 46.500 (51.429) Prec@5 100.000 (100.000)# Epoch: [0][80/125] Time 0.198 (0.269) Speed 1011.827 (743.883) Loss 2.8196096420 (2.4011) Prec@1 47.500 (50.938) Prec@5 100.000 (100.000)

以下模型列表完全支持通道在最后,并在 Volta 设备上显示了 8%-35% 的表现增益:alexnet,mnasnet0_5,mnasnet0_75,mnasnet1_0,mnasnet1_3,mobilenet_v2,resnet101,resnet152,resnet18,resnet34,resnet50,resnext50_32x4d,shufflenet_v2_x0_5,shufflenet_v2_x1_0,shufflenet_v2_x1_5,shufflenet_v2_x2_0,squeezenet1_0,squeezenet1_1,vgg11 ,vgg11_bn,vgg13,vgg13_bn,vgg16,vgg16_bn,vgg19,vgg19_bn,wide_resnet101_2,wide_resnet50_2

转换现有模型

通道在最后支持不受现有模型的限制,因为只要输入格式正确,任何模型都可以转换为通道在最后,并通过图传播格式。

# Need to be done once, after model initialization (or load)model = model.to(memory_format=torch.channels_last) # Replace with your model# Need to be done for every inputinput = input.to(memory_format=torch.channels_last) # Replace with your inputoutput = model(input)

但是,并非所有运算符都完全转换为支持通道在最后(通常返回连续输出)。 这意味着您需要根据支持的运算符列表来验证已使用运算符的列表,或将内存格式检查引入急切的执行模式并运行模型。

运行以下代码后,如果运算符的输出与输入的存储格式不匹配,运算符将引发异常。

def contains_cl(args):for t in args:if isinstance(t, torch.Tensor):if t.is_contiguous(memory_format=torch.channels_last) and not t.is_contiguous():return Trueelif isinstance(t, list) or isinstance(t, tuple):if contains_cl(list(t)):return Truereturn Falsedef print_inputs(args, indent=''):for t in args:if isinstance(t, torch.Tensor):print(indent, t.stride(), t.shape, t.device, t.dtype)elif isinstance(t, list) or isinstance(t, tuple):print(indent, type(t))print_inputs(list(t), indent=indent + ' ')else:print(indent, t)def check_wrapper(fn):name = fn.__name__def check_cl(*args, **kwargs):was_cl = contains_cl(args)try:result = fn(*args, **kwargs)except Exception as e:print("`{}` inputs are:".format(name))print_inputs(args)print('-------------------')raise efailed = Falseif was_cl:if isinstance(result, torch.Tensor):if result.dim() == 4 and not result.is_contiguous(memory_format=torch.channels_last):print("`{}` got channels_last input, but output is not channels_last:".format(name),result.shape, result.stride(), result.device, result.dtype)failed = Trueif failed and True:print("`{}` inputs are:".format(name))print_inputs(args)raise Exception('Operator `{}` lost channels_last property'.format(name))return resultreturn check_clold_attrs = dict()def attribute(m):old_attrs[m] = dict()for i in dir(m):e = getattr(m, i)exclude_functions = ['is_cuda', 'has_names', 'numel','stride', 'Tensor', 'is_contiguous', '__class__']if i not in exclude_functions and not i.startswith('_') and '__call__' in dir(e):try:old_attrs[m][i] = esetattr(m, i, check_wrapper(e))except Exception as e:print(i)print(e)attribute(torch.Tensor)attribute(torch.nn.functional)attribute(torch)

出:

Optional'_Optional' object has no attribute '__name__'

如果您发现不支持通道在最后的张量的运算符并且想要贡献力量,请随时使用以下开发人员指南。

下面的代码是恢复火炬的属性。

for (m, attrs) in old_attrs.items():for (k,v) in attrs.items():setattr(m, k, v)

要做的工作

仍有许多事情要做,例如:

- 解决 N1HW 和 NC11 张量的歧义;

- 测试分布式训练支持;

- 提高运算符覆盖率。

如果您有反馈和/或改进建议,请通过创建 ISSUE 来通知我们。

脚本的总运行时间:(0 分钟 2.300 秒)

下载 Python 源码:memory_format_tutorial.py

下载 Jupyter 笔记本:memory_format_tutorial.ipynb

由 Sphinx 画廊生成的画廊