References:

- https://blog.csdn.net/wuchenlhy/article/details/103310954

- https://blog.csdn.net/weixin_41867777/article/details/80401640?utm_medium=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param

- Use the following `docker-compose.yml` as a reference and run it:

Environment inside the image (PySpark comes preinstalled):

The `singularities/spark:2.2` image ships with Hadoop 2.8.2, Spark 2.2.1, Scala 2.11.8 and Java 1.8.0_151.

    version: "2"
    services:
      master:
        image: singularities/spark
        command: start-spark master
        hostname: master
        ports:
          - "6066:6066"
          - "7070:7070"
          - "8080:8080"
          - "8888:8888"
          - "50070:50070"
        stdin_open: true
        tty: true
        volumes:
          - /home/pqchen/code/docker/pyspark:/home/myspark
        working_dir: /home/myspark
        security_opt:
          - seccomp=unconfined
        cap_add:
          - SYS_PTRACE
      worker:
        image: singularities/spark
        command: start-spark worker master
        environment:
          SPARK_WORKER_CORES: 1
          SPARK_WORKER_MEMORY: 2g
        links:
          - master
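Once the stack is up (for example with `docker-compose up -d`), a quick way to confirm from the host that the published ports answer is a small TCP probe. This is a minimal sketch that is not part of the original setup; it assumes `localhost` is the Docker host and that the ports carry their usual defaults (6066 Spark REST submission server, 8080 Spark master web UI, 8888 Jupyter, 50070 HDFS NameNode web UI in Hadoop 2.x). The names `port_open`, `check_ports` and `EXPECTED_PORTS` are hypothetical.

```python
import socket

# Ports published by the `master` service above, labelled with their
# assumed (default) roles; adjust if your image uses different ones.
EXPECTED_PORTS = {
    6066: "Spark REST submission server",
    8080: "Spark master web UI",
    8888: "Jupyter Notebook",
    50070: "HDFS NameNode web UI",
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def check_ports(host: str = "localhost") -> dict:
    """Map each expected port to whether it currently accepts connections."""
    return {port: port_open(host, port) for port in EXPECTED_PORTS}


if __name__ == "__main__":
    for port, ok in check_ports().items():
        status = "open" if ok else "closed"
        print(f"{port:>5}  {EXPECTED_PORTS[port]:<30} {status}")
```

Port 8888 will only report open after `pyspark` has started the notebook server, and only if Jupyter listens on all interfaces inside the container (its default is `localhost`), which is presumably part of the Jupyter configuration step that is skipped below.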

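- Enter the container and install pip and Jupyter:

  ```bash
  # Download the pip installation script
  curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

  # Check which Python versions are available
  whereis python

  # pip is bound to whichever Python runs the installer; for Python 3.5 run:
  python3.5 get-pip.py

  # Install Jupyter with that interpreter's pip
  python3.5 -m pip install jupyter
  ```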

- Configure Jupyter itself (omitted here).
- Set the environment variables by adding the following to `.bashrc`:

  ```bash
  export PYSPARK_PYTHON=python3.5  # use Python 3
  export PYSPARK_DRIVER_PYTHON=jupyter
  export PYSPARK_DRIVER_PYTHON_OPTS="notebook --no-browser --allow-root"
  ```

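- Then simply run `pyspark`; with the variables above it starts a Jupyter Notebook server instead of the interactive PySpark shell.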

- Result:

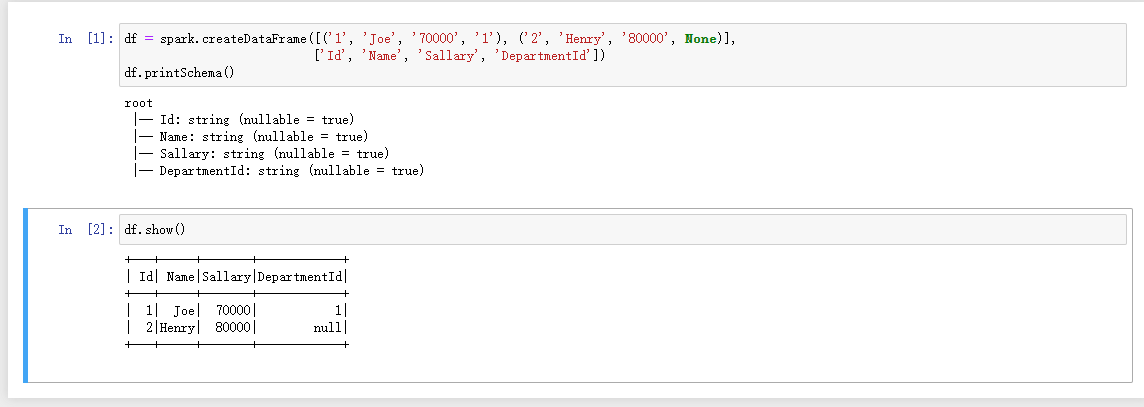

```python
# `spark` is the SparkSession that the pyspark launcher provides
df = spark.createDataFrame(
    [('1', 'Joe', '70000', '1'), ('2', 'Henry', '80000', None)],
    ['Id', 'Name', 'Sallary', 'DepartmentId'],
)

df.printSchema()

df.show()
```
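The `.bashrc` variables are what turn `pyspark` into a notebook launcher. Roughly, in Spark 2.x the driver is started with `PYSPARK_DRIVER_PYTHON` (falling back to `PYSPARK_PYTHON`, then `python`) followed by the shell-split `PYSPARK_DRIVER_PYTHON_OPTS`, while executors use `PYSPARK_PYTHON`. The sketch below models that resolution in plain Python so it can be checked without Spark; `resolve_pyspark_python` is a hypothetical helper, and the fallback order is an approximation of the Spark 2.x launcher, not a copy of its code.

```python
import shlex
from typing import Dict, List, Mapping


def resolve_pyspark_python(env: Mapping[str, str]) -> Dict[str, List[str]]:
    """Approximate the commands PySpark uses for the driver and the executors.

    Hypothetical model of Spark 2.x behaviour (an assumption, not Spark's code):
      executor = PYSPARK_PYTHON, else "python"
      driver   = PYSPARK_DRIVER_PYTHON, else the executor Python,
                 followed by the shell-split PYSPARK_DRIVER_PYTHON_OPTS
    """
    worker = env.get("PYSPARK_PYTHON") or "python"
    driver_exec = env.get("PYSPARK_DRIVER_PYTHON") or worker
    driver_opts = shlex.split(env.get("PYSPARK_DRIVER_PYTHON_OPTS", ""))
    return {"driver": [driver_exec] + driver_opts, "executor": [worker]}


if __name__ == "__main__":
    # The values added to .bashrc above
    bashrc_env = {
        "PYSPARK_PYTHON": "python3.5",
        "PYSPARK_DRIVER_PYTHON": "jupyter",
        "PYSPARK_DRIVER_PYTHON_OPTS": "notebook --no-browser --allow-root",
    }
    print(resolve_pyspark_python(bashrc_env))
```

With the `.bashrc` values the driver resolves to `jupyter notebook --no-browser --allow-root` while the executors run `python3.5`, which matches the observation that `pyspark` opens a notebook server rather than the plain shell.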