一、什么是kubernetes

以下内容来自于官方文档

Kubernetes 是一个可移植的、可扩展的开源平台,用于管理容器化的工作负载和服务,可促进声明式配置和自动化。 Kubernetes 拥有一个庞大且快速增长的生态系统。Kubernetes 的服务、支持和工具广泛可用。

Kubernetes 这个名字源于希腊语,意为“舵手”或“飞行员”。k8s 这个缩写是因为 k 和 s 之间有八个字符的关系。 Google 在 2014 年开源了 Kubernetes 项目。Kubernetes 建立在 Google 在大规模运行生产工作负载方面拥有十几年的经验 的基础上,结合了社区中最好的想法和实践。

时光回溯

让我们回顾一下为什么 Kubernetes 如此有用。

传统部署时代:

早期,各个组织机构在物理服务器上运行应用程序。无法为物理服务器中的应用程序定义资源边界,这会导致资源分配问题。 例如,如果在物理服务器上运行多个应用程序,则可能会出现一个应用程序占用大部分资源的情况, 结果可能导致其他应用程序的性能下降。 一种解决方案是在不同的物理服务器上运行每个应用程序,但是由于资源利用不足而无法扩展, 并且维护许多物理服务器的成本很高。

虚拟化部署时代:

作为解决方案,引入了虚拟化。虚拟化技术允许你在单个物理服务器的 CPU 上运行多个虚拟机(VM)。 虚拟化允许应用程序在 VM 之间隔离,并提供一定程度的安全,因为一个应用程序的信息 不能被另一应用程序随意访问。

虚拟化技术能够更好地利用物理服务器上的资源,并且因为可轻松地添加或更新应用程序 而可以实现更好的可伸缩性,降低硬件成本等等。

每个 VM 是一台完整的计算机,在虚拟化硬件之上运行所有组件,包括其自己的操作系统。

容器部署时代:

容器类似于 VM,但是它们具有被放宽的隔离属性,可以在应用程序之间共享操作系统(OS)。 因此,容器被认为是轻量级的。容器与 VM 类似,具有自己的文件系统、CPU、内存、进程空间等。 由于它们与基础架构分离,因此可以跨云和 OS 发行版本进行移植。

容器因具有许多优势而变得流行起来。下面列出的是容器的一些好处:

- 敏捷应用程序的创建和部署:与使用 VM 镜像相比,提高了容器镜像创建的简便性和效率。

- 持续开发、集成和部署:通过快速简单的回滚(由于镜像不可变性),支持可靠且频繁的 容器镜像构建和部署。

- 关注开发与运维的分离:在构建/发布时而不是在部署时创建应用程序容器镜像, 从而将应用程序与基础架构分离。

- 可观察性:不仅可以显示操作系统级别的信息和指标,还可以显示应用程序的运行状况和其他指标信号。

- 跨开发、测试和生产的环境一致性:在便携式计算机上与在云中相同地运行。

- 跨云和操作系统发行版本的可移植性:可在 Ubuntu、RHEL、CoreOS、本地、 Google Kubernetes Engine 和其他任何地方运行。

- 以应用程序为中心的管理:提高抽象级别,从在虚拟硬件上运行 OS 到使用逻辑资源在 OS 上运行应用程序。

- 松散耦合、分布式、弹性、解放的微服务:应用程序被分解成较小的独立部分, 并且可以动态部署和管理 - 而不是在一台大型单机上整体运行。

- 资源隔离:可预测的应用程序性能。

- 资源利用:高效率和高密度。

为什么需要 Kubernetes,它能做什么?

容器是打包和运行应用程序的好方式。在生产环境中,你需要管理运行应用程序的容器,并确保不会停机。 例如,如果一个容器发生故障,则需要启动另一个容器。如果系统处理此行为,会不会更容易?

这就是 Kubernetes 来解决这些问题的方法! Kubernetes 为你提供了一个可弹性运行分布式系统的框架。 Kubernetes 会满足你的扩展要求、故障转移、部署模式等。 例如,Kubernetes 可以轻松管理系统的 Canary 部署。

Kubernetes 为你提供:

- 服务发现和负载均衡

Kubernetes 可以使用 DNS 名称或自己的 IP 地址公开容器,如果进入容器的流量很大, Kubernetes 可以负载均衡并分配网络流量,从而使部署稳定。 - 存储编排

Kubernetes 允许你自动挂载你选择的存储系统,例如本地存储、公共云提供商等。 - 自动部署和回滚

你可以使用 Kubernetes 描述已部署容器的所需状态,它可以以受控的速率将实际状态 更改为期望状态。例如,你可以自动化 Kubernetes 来为你的部署创建新容器, 删除现有容器并将它们的所有资源用于新容器。 - 自动完成装箱计算

Kubernetes 允许你指定每个容器所需 CPU 和内存(RAM)。 当容器指定了资源请求时,Kubernetes 可以做出更好的决策来管理容器的资源。 - 自我修复

Kubernetes 重新启动失败的容器、替换容器、杀死不响应用户定义的 运行状况检查的容器,并且在准备好服务之前不将其通告给客户端。 - 密钥与配置管理

Kubernetes 允许你存储和管理敏感信息,例如密码、OAuth 令牌和 ssh 密钥。 你可以在不重建容器镜像的情况下部署和更新密钥和应用程序配置,也无需在堆栈配置中暴露密钥。

Kubernetes 不是什么

Kubernetes 不是传统的、包罗万象的 PaaS(平台即服务)系统。 由于 Kubernetes 在容器级别而不是在硬件级别运行,它提供了 PaaS 产品共有的一些普遍适用的功能, 例如部署、扩展、负载均衡、日志记录和监视。 但是,Kubernetes 不是单体系统,默认解决方案都是可选和可插拔的。 Kubernetes 提供了构建开发人员平台的基础,但是在重要的地方保留了用户的选择和灵活性。

Kubernetes:

- 不限制支持的应用程序类型。 Kubernetes 旨在支持极其多种多样的工作负载,包括无状态、有状态和数据处理工作负载。 如果应用程序可以在容器中运行,那么它应该可以在 Kubernetes 上很好地运行。

- 不部署源代码,也不构建你的应用程序。 持续集成(CI)、交付和部署(CI/CD)工作流取决于组织的文化和偏好以及技术要求。

- 不提供应用程序级别的服务作为内置服务,例如中间件(例如,消息中间件)、 数据处理框架(例如,Spark)、数据库(例如,mysql)、缓存、集群存储系统 (例如,Ceph)。这样的组件可以在 Kubernetes 上运行,并且/或者可以由运行在 Kubernetes 上的应用程序通过可移植机制(例如, 开放服务代理)来访问。

- 不要求日志记录、监视或警报解决方案。 它提供了一些集成作为概念证明,并提供了收集和导出指标的机制。

- 不提供或不要求配置语言/系统(例如 jsonnet),它提供了声明性 API, 该声明性 API 可以由任意形式的声明性规范所构成。

- 不提供也不采用任何全面的机器配置、维护、管理或自我修复系统。

- 此外,Kubernetes 不仅仅是一个编排系统,实际上它消除了编排的需要。 编排的技术定义是执行已定义的工作流程:首先执行 A,然后执行 B,再执行 C。 相比之下,Kubernetes 包含一组独立的、可组合的控制过程, 这些过程连续地将当前状态驱动到所提供的所需状态。 如何从 A 到 C 的方式无关紧要,也不需要集中控制,这使得系统更易于使用 且功能更强大、系统更健壮、更为弹性和可扩展。

二、部署一个高可用的kubernetes集群

2.1、环境准备

2.1.1、服务器配置

| 节点 | IP | 配置 | 系统 | 内核 |

|---|---|---|---|---|

| Master01 | 172.168.1.51 | 4c8G | Centos7 | 5.4 |

| Master01 | 172.168.1.52 | 4c8G | Centos7 | 5.4 |

| Master01 | 172.168.1.53 | 4c8G | Centos7 | 5.4 |

| Node01 | 172.168.1.61 | 4c8G | Centos7 | 5.4 |

| Node02 | 172.168.1.62 | 4c8G | Centos7 | 5.4 |

- 以上配置为测试环境配置,仅用于熟悉k8s使用,如果需要部署到生产环境,建议配置越高越好

- 服务器CPU和内存资源建议根据应用需求配置,避免浪费

2.1.2、应用版本配置

| kubernetes | 1.22.2 |

|---|---|

| etcd | 3.5.0 |

| docker | 19.03.9 |

| kube-vip | 0.4.2 |

| Calico | 3.22.1 |

2.2、环境准备

以下步骤需要在所有节点执行

升级内核

# 安装 elrepo 的GPG KEYrpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org# 安装elrepo 的yum源rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm# 查看可用的内核yum --disablerepo="*" --enablerepo="elrepo-kernel" list available# 卸载旧版本yum -y remove kernel-tools-libs-3.10.0-1160.49.1.el7.x86_64 kernel-tools-3.10.0-1160.49.1.el7.x86_64# 安装新版本内核(lt为LTS,ml为主线,主线过于激进,这里采用lt版本)yum -y --enablerepo=elrepo-kernel install kernel-lt.x86_64 kernel-lt-devel.x86_64 kernel-lt-doc.noarch kernel-lt-headers.x86_64 kernel-lt-tools.x86_64 kernel-lt-tools-libs.x86_64 kernel-lt-tools-libs-devel.x86_64# 在boot中配置内核启动顺序,默认为新版内核grub2-mkconfig -o /boot/grub2/grub.cfg# 重启服务器reboot# 查看内核版本uname -r

配置/etc/hosts

cat >>/etc/hosts<<EOF

172.168.1.41 api.k8s.local # vip

172.168.1.51 k8s01

172.168.1.52 k8s02

172.168.1.53 k8s03

172.168.1.61 k8s04

172.168.1.62 k8s05

EOF

禁用防火墙、selinux

systemctl stop firewalld

systemctl disable firewalld

iptables -F

iptables -X

iptables -t nat -F

iptables -t nat -X

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/conf

禁用swap分区

swapoff -a

sed -i '/swap/ s/^/#/g' /etc/fstab

修改内核参数

cat >>/etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl -f /etc/sysctl.d/kubernetes.conf

开启ipvs

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

modprobe -- br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

安装依赖

yum -y install epel-release

yum -y install ipset

yum -y install ipvsadm

yum -y install chrony

yum -y install tcpdump

yum -y install jq

yum -y install curl

yum -y install sysstat

yum -y install wget

yum -y install net-tools

yum install -y yum-utils

配置时间服务器

systemctl enable chronyd

systemctl start chronyd

chronyc sources

2.3、安装docker

移除旧版本docker

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

安装阿里docker源

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

安装docker

yum makecache

yum -y install docker-ce-19.03.9 docker-ce-cli-19.03.9 containerd.io

创建docker配置文件

mkdir /etc/docker

cat >>/etc/docker/daemon.json<<EOF

{

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn","https://hub-mirror.c.163.com"],

"max-concurrent-downloads": 20,

"live-restore": true,

"max-concurrent-uploads": 10,

"debug": true,

"data-root": "/dockerHome/data",

"exec-root": "/dockerHome/exec",

"log-opts": {

"max-size": "100m",

"max-file": "5"

}

}

EOF

启动docker

systemctl daemon-reload

systemctl enable docker

systemctl start docker

2.4、在k8s01节点生成kube-vip资源

配置环境变量

export VIP=172.168.1.41

export INTERFACE=ens32

KVVERSION=$(curl -sL https://api.github.com/repos/kube-vip/kube-vip/releases | jq -r ".[0].name")

export KVVERSION=v0.4.2

创建静态pod资源文件

mkdir -p /etc/kubernetes/manifests

alias kube-vip="docker run --network host --rm ghcr.io/kube-vip/kube-vip:$KVVERSION"

kube-vip manifest pod \

--interface $INTERFACE \

--address $VIP \

--controlplane \

--services \

--arp \

--leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

这里是静态文件内容

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

name: kube-vip

namespace: kube-system

spec:

containers:

- args:

- manager

env:

- name: vip_arp

value: "true"

- name: port

value: "6443"

- name: vip_interface

value: ens192

- name: vip_cidr

value: "32"

- name: cp_enable

value: "true"

- name: cp_namespace

value: kube-system

- name: vip_ddns

value: "false"

- name: svc_enable

value: "true"

- name: vip_leaderelection

value: "true"

- name: vip_leaseduration

value: "5"

- name: vip_renewdeadline

value: "3"

- name: vip_retryperiod

value: "1"

- name: address

value: 192.168.0.40

image: ghcr.io/kube-vip/kube-vip:v0.4.2

imagePullPolicy: Always

name: kube-vip

resources: {}

securityContext:

capabilities:

add:

- NET_ADMIN

- NET_RAW

- SYS_TIME

volumeMounts:

- mountPath: /etc/kubernetes/admin.conf

name: kubeconfig

hostAliases:

- hostnames:

- kubernetes

ip: 127.0.0.1

hostNetwork: true

volumes:

- hostPath:

path: /etc/kubernetes/admin.conf

name: kubeconfig

status: {}

2.5、部署kubernetes 控制平面

安装阿里kubernetes源

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

安装kubeadm、kubelet、kubectl

yum makecache fast

yum install -y kubelet-1.22.2 kubeadm-1.22.2 kubectl-1.22.2 --disableexcludes=kubernetes

设置kubelet 开机自启

systemctl enable --now kubelet

下面操作仅在k8s01节点操作

生成kubeadm 配置文件

kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadm.yaml

下面是修改后的kubeadm.yaml

# kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 172.168.1.51 # 指定当前节点内网IP

bindPort: 6443

nodeRegistration:

criSocket: /run/run/dockershim.sock # 使用 containerd的Unix socket 地址

imagePullPolicy: IfNotPresent

name: k8s01

taints: # 给master添加污点,master节点不能调度应用

- effect: "NoSchedule"

key: "node-role.kubernetes.io/master"

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # kube-proxy 模式

---

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/k8sxio

kind: ClusterConfiguration

kubernetesVersion: 1.22.2

controlPlaneEndpoint: api.k8s.local:6443 # 设置控制平面Endpoint地址

apiServer:

extraArgs:

authorization-mode: Node,RBAC

timeoutForControlPlane: 4m0s

certSANs: # 添加其他master节点的相关信息

- api.k8s.local

- k8s01

- k8s02

- k8s03

- 172.168.1.51

- 172.168.1.52

- 172.168.1.53

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 192.168.0.0/16 # 指定 pod 子网,参考calico官网

scheduler: {}

---

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

cgroupDriver: systemd # 配置 cgroup driver

logging: {}

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

拉取镜像

kubeadm config images pull --config kubeadm.yaml

# coreDNS 因为配置问题拉取不到,需要单独操作一下

docker pull docker.io/coredns/coredns:1.8.4

docker tag docker.io/coredns/coredns:1.8.4 registry.aliyuncs.com/k8sxio/coredns:v1.8.4

初始化集群

kubeadm init --upload-certs --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [api.k8s.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master k8s01 k8s02 k8s03] and IPs [10.96.0.1 172.168.1.51 172.168.1.52 172.168.1.53]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [172.168.1.51 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [172.168.1.51 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 11.012184 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

9e1a67d17aae95c8286d957b69c6e5e98428c807eeb62560e670510bd7d0637e

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join api.k8s.local:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:1a2abb0e866b46194cc19f706459f68e275b2359f923107b9c453f2b8f7011b6 \ --control-plane --certificate-key 9e1a67d17aae95c8286d957b69c6e5e98428c807eeb62560e670510bd7d0637e

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join api.k8s.local:6443 --token uh6138.il5pzddd71a6bqpn \

--discovery-token-ca-cert-hash sha256:1a2abb0e866b46194cc19f706459f68e275b2359f923107b9c453f2b8f7011b6

拷贝kubeconfig

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

添加k8s02和k8s03节点到控制平面

# 在k8s02 和 k8s03节点执行如下命令

kubeadm join api.k8s.local:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:1a2abb0e866b46194cc19f706459f68e275b2359f923107b9c453f2b8f7011b6 \ --control-plane --certificate-key 9e1a67d17aae95c8286d957b69c6e5e98428c807eeb62560e670510bd7d0637e

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

安装calico插件

curl https://projectcalico.docs.tigera.io/manifests/calico.yaml -O

kubecctl apply -f calico.yaml

为k8s02和k8s03节点创建kube-vip

export VIP=172.168.1.41

export INTERFACE=ens32

KVVERSION=$(curl -sL https://api.github.com/repos/kube-vip/kube-vip/releases | jq -r ".[0].name")

export KVVERSION=v0.4.2

alias kube-vip="docker run --network host --rm ghcr.io/kube-vip/kube-vip:$KVVERSION"

kube-vip manifest pod \

--interface $INTERFACE \

--address $VIP \

--controlplane \

--services \

--arp \

--leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

查看控制平面

查看控制平面pod

[root@k8s01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6fd7b9848d-z29dm 1/1 Running 0 3d15h

calico-node-7rbgd 1/1 Running 0 3d15h

calico-node-9mjgb 1/1 Running 0 3d15h

calico-node-gwpsm 1/1 Running 0 3d15h

calico-node-lbb47 1/1 Running 0 3d15h

calico-node-zq66h 1/1 Running 0 3d15h

coredns-7568f67dbd-5klwx 1/1 Running 0 3d15h

coredns-7568f67dbd-9l9l8 1/1 Running 0 3d15h

etcd-k8s01 1/1 Running 2 (3d15h ago) 3d15h

etcd-k8s02 1/1 Running 2 (3d15h ago) 3d15h

etcd-k8s03 1/1 Running 1 (3d15h ago) 3d15h

kube-apiserver-k8s01 1/1 Running 3 (3d15h ago) 3d15h

kube-apiserver-k8s02 1/1 Running 2 (3d15h ago) 3d15h

kube-apiserver-k8s03 1/1 Running 3 (3d15h ago) 3d15h

kube-controller-manager-k8s01 1/1 Running 8 (3d15h ago) 3d15h

kube-controller-manager-k8s02 1/1 Running 4 (3d15h ago) 3d15h

kube-controller-manager-k8s03 1/1 Running 5 (3d15h ago) 3d15h

kube-proxy-2lkq8 1/1 Running 0 3d15h

kube-proxy-57b9q 1/1 Running 0 3d15h

kube-proxy-k9scd 1/1 Running 0 3d15h

kube-proxy-qw4dv 1/1 Running 0 3d15h

kube-proxy-zgs67 1/1 Running 0 3d15h

kube-scheduler-k8s01 1/1 Running 8 (3d15h ago) 3d15h

kube-scheduler-k8s02 1/1 Running 2 (3d15h ago) 3d15h

kube-scheduler-k8s03 1/1 Running 3 (3d15h ago) 3d15h

kube-vip-k8s01 1/1 Running 14 (3d9h ago) 3d15h

kube-vip-k8s02 1/1 Running 5 (3d15h ago) 3d15h

kube-vip-k8s03 1/1 Running 6 (3d15h ago) 3d15h

查看控制平面node

[root@k8s01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s01 Ready control-plane,master 4d14h v1.22.2

k8s02 Ready control-plane,master 4d14h v1.22.2

k8s03 Ready control-plane,master 4d14h v1.22.2

2.6、部署worker节点

在worker节点执行如下命令即可

kubeadm join api.k8s.local:6443 --token uh6138.il5pzddd71a6bqpn \

--discovery-token-ca-cert-hash sha256:1a2abb0e866b46194cc19f706459f68e275b2359f923107b9c453f2b8f7011b6

查看整个集群

[root@k8s01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s01 Ready control-plane,master 4d14h v1.22.2

k8s02 Ready control-plane,master 4d14h v1.22.2

k8s03 Ready control-plane,master 4d14h v1.22.2

k8s04 Ready <none> 3d15h v1.22.2

k8s05 Ready <none> 3d15h v1.22.2

至此 k8s集群部署完毕。

三、监控kubernetes

3.1、部署prometheus集群

创建namespace

kubectl create ns mube-mon

创建prometheus的configmap

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: kube-mon

data:

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

- job_name: 'coredns'

static_configs:

- targets: ['192.168.236.129:9153', '192.168.235.130:9153']

- job_name: "nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: "(.*):10250"

replacement: "${1}:9100"

target_label: __address__

action: replace

- job_name: "kubernetes-nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: "(.*):10250"

replacement: "${1}:9100"

target_label: __address__

action: replace

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: "kubelet"

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: "cadvisor"

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

replacement: $1

- replacement: /metrics/cadvisor # <nodeip>/metrics -> <nodeip>/metrics/cadvisor

target_label: __metrics_path__

- job_name: "endpoints"

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

# 保留 Service 的注解为 prometheus.io/scrape: true 的 Endpoints

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

action: keep

regex: true

# 指标接口协议通过 prometheus.io/scheme 这个注解获取 http 或 https

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

# 指标接口端点路径通过 prometheus.io/path 这个注解获取

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

# 直接接口地址端口通过 prometheus.io/port 注解获取

- source_labels:

[__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+) # RE2 正则规则,+是一次或多次,?是0次或1次,其中?:表示非匹配组(意思就是不获取匹配结果)

replacement: $1:$2

# 映射 Service 的 Label 标签

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

# 将 namespace 映射成标签

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

# 将 Service 名称映射成标签

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

# 将 Pod 名称映射成标签

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- job_name: "apiservers"

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels:

[

__meta_kubernetes_namespace,

__meta_kubernetes_service_name,

__meta_kubernetes_endpoint_port_name,

]

action: keep

regex: default;kubernetes;https

创建prometheus的编排文件

# prometheus-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus

namespace: kube-mon

labels:

app: prometheus

spec:

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

serviceAccountName: prometheus

containers:

- image: prom/prometheus:v2.31.1

name: prometheus

args:

- "--config.file=/etc/prometheus/prometheus.yml"

- "--storage.tsdb.path=/prometheus" # 指定tsdb数据路径

- "--storage.tsdb.retention.time=24h"

- "--web.enable-admin-api" # 控制对admin HTTP API的访问,其中包括删除时间序列等功能

- "--web.enable-lifecycle" # 支持热更新,直接执行localhost:9090/-/reload立即生效

ports:

- containerPort: 9090

name: http

volumeMounts:

- mountPath: "/etc/prometheus"

name: config-volume

- mountPath: "/prometheus"

name: data

resources:

requests:

cpu: 200m

memory: 1024Mi

limits:

cpu: 200m

memory: 1024Mi

volumes:

- name: data

persistentVolumeClaim:

claimName: prometheus-data

- configMap:

name: prometheus-config

name: config-volume

创建prometheus pv编排文件

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: local-storage

provisioner: kubernetes.io/no-provisioner

volumeBindingMode: WaitForFirstConsumer

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: prometheus-local

labels:

app: prometheus

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 20Gi

storageClassName: local-storage

local:

path: /data/k8s/prometheus

persistentVolumeReclaimPolicy: Retain

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s04

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: prometheus-data

namespace: kube-mon

spec:

selector:

matchLabels:

app: prometheus

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 20Gi

storageClassName: local-storage

创建prometheus rbac认证

# prometheus-rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

namespace: kube-mon

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups:

- ""

resources:

- nodes

- services

- endpoints

- pods

- nodes/proxy

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- configmaps

- nodes/metrics

verbs:

- get

- nonResourceURLs:

- /metrics

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: kube-mon

创建svc提供访问

apiVersion: v1

kind: Service

metadata:

name: prometheus

namespace: kube-mon

labels:

app: prometheus

spec:

selector:

app: prometheus

type: NodePort

ports:

- name: web

port: 9090

targetPort: http

部署node exporter 提供监控指标

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: kube-mon

labels:

app: node-exporter

spec:

selector:

matchLabels:

app: node-exporter

template:

metadata:

labels:

app: node-exporter

spec:

hostPID: true

hostIPC: true

hostNetwork: true

nodeSelector:

kubernetes.io/os: linux

containers:

- name: node-exporter

image: prom/node-exporter:v1.3.1

args:

- --web.listen-address=$(HOSTIP):9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host/root

- --no-collector.hwmon # 禁用不需要的一些采集器

- --no-collector.nfs

- --no-collector.nfsd

- --no-collector.nvme

- --no-collector.dmi

- --no-collector.arp

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/containerd/.+|/var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

ports:

- containerPort: 9100

env:

- name: HOSTIP

valueFrom:

fieldRef:

fieldPath: status.hostIP

resources:

requests:

cpu: 150m

memory: 180Mi

limits:

cpu: 150m

memory: 180Mi

securityContext:

runAsNonRoot: true

runAsUser: 65534

volumeMounts:

- name: proc

mountPath: /host/proc

- name: sys

mountPath: /host/sys

- name: root

mountPath: /host/root

mountPropagation: HostToContainer

readOnly: true

tolerations: # 添加容忍

- operator: "Exists"

volumes:

- name: proc

hostPath:

path: /proc

- name: dev

hostPath:

path: /dev

- name: sys

hostPath:

path: /sys

- name: root

hostPath:

path: /

部署kube-state-metrics

git clone https://github.com/kubernetes/kube-state-metrics.git

cd kube-state-metrics/examples/standard

# 需要梯子

kubectl apply -f deployment.yaml

至此,prometheus部署完毕,并可以实现数据采集

3.2、部署grafana提供面板

创建grafana编排文件,部署grafana

# grafana.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: grafana

namespace: kube-mon

spec:

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

volumes:

- name: storage

persistentVolumeClaim:

claimName: grafana-data

initContainers:

- name: fix-permissions

image: busybox

command: [chown, -R, "472:472", "/var/lib/grafana"]

volumeMounts:

- mountPath: /var/lib/grafana

name: storage

containers:

- name: grafana

image: grafana/grafana:8.3.3

imagePullPolicy: IfNotPresent

ports:

- containerPort: 3000

name: grafana

env:

- name: GF_SECURITY_ADMIN_USER

value: admin

- name: GF_SECURITY_ADMIN_PASSWORD

value: admin321

readinessProbe:

failureThreshold: 10

httpGet:

path: /api/health

port: 3000

scheme: HTTP

initialDelaySeconds: 60

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 30

livenessProbe:

failureThreshold: 3

httpGet:

path: /api/health

port: 3000

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

limits:

cpu: 150m

memory: 512Mi

requests:

cpu: 150m

memory: 512Mi

volumeMounts:

- mountPath: /var/lib/grafana

name: storage

---

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: kube-mon

spec:

type: NodePort

ports:

- port: 3000

selector:

app: grafana

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: grafana-local

labels:

app: grafana

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 1Gi

storageClassName: local-storage

local:

path: /data/k8s/grafana

persistentVolumeReclaimPolicy: Retain

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s04

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: grafana-data

namespace: kube-mon

spec:

selector:

matchLabels:

app: grafana

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

storageClassName: local-storage

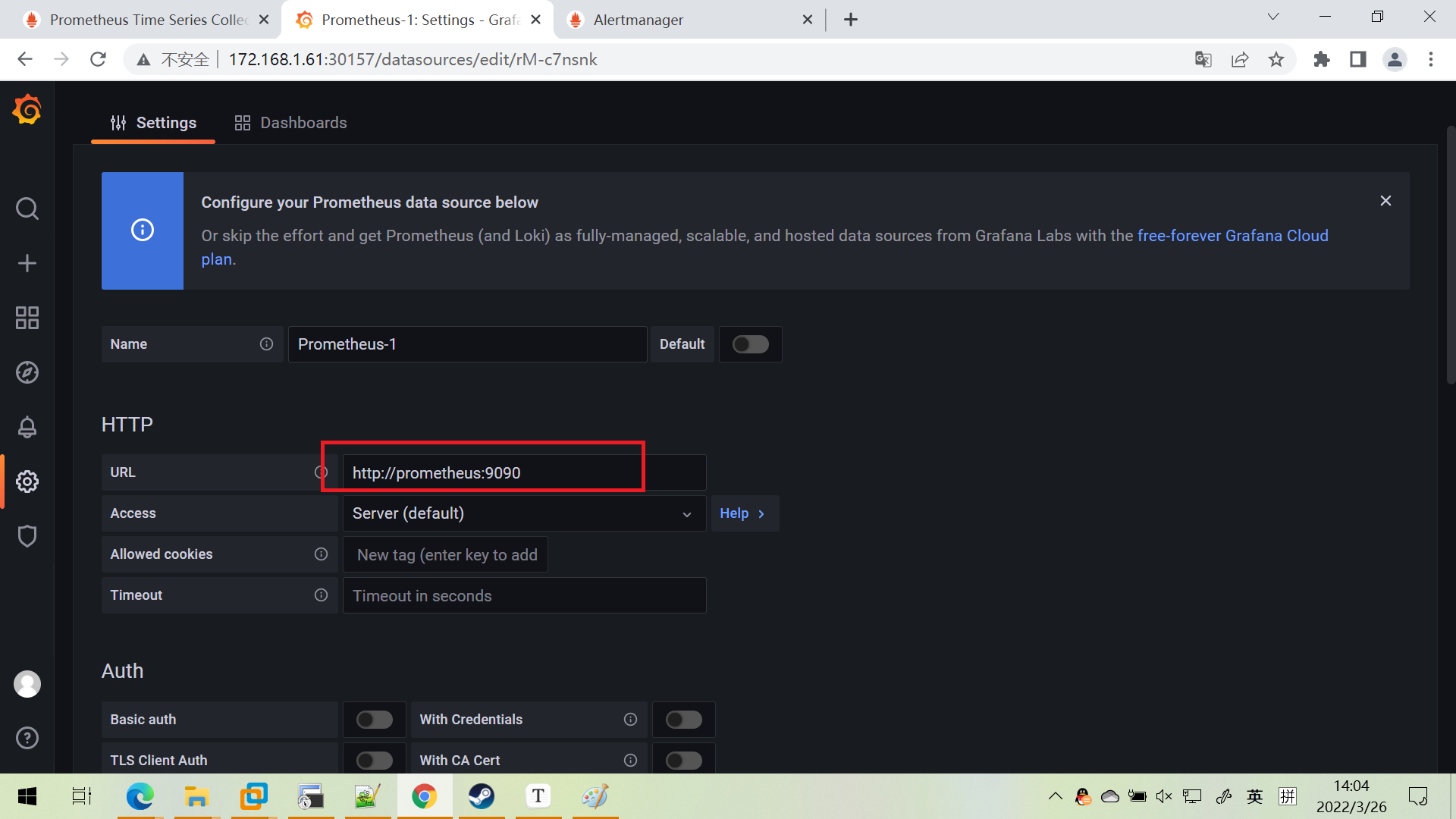

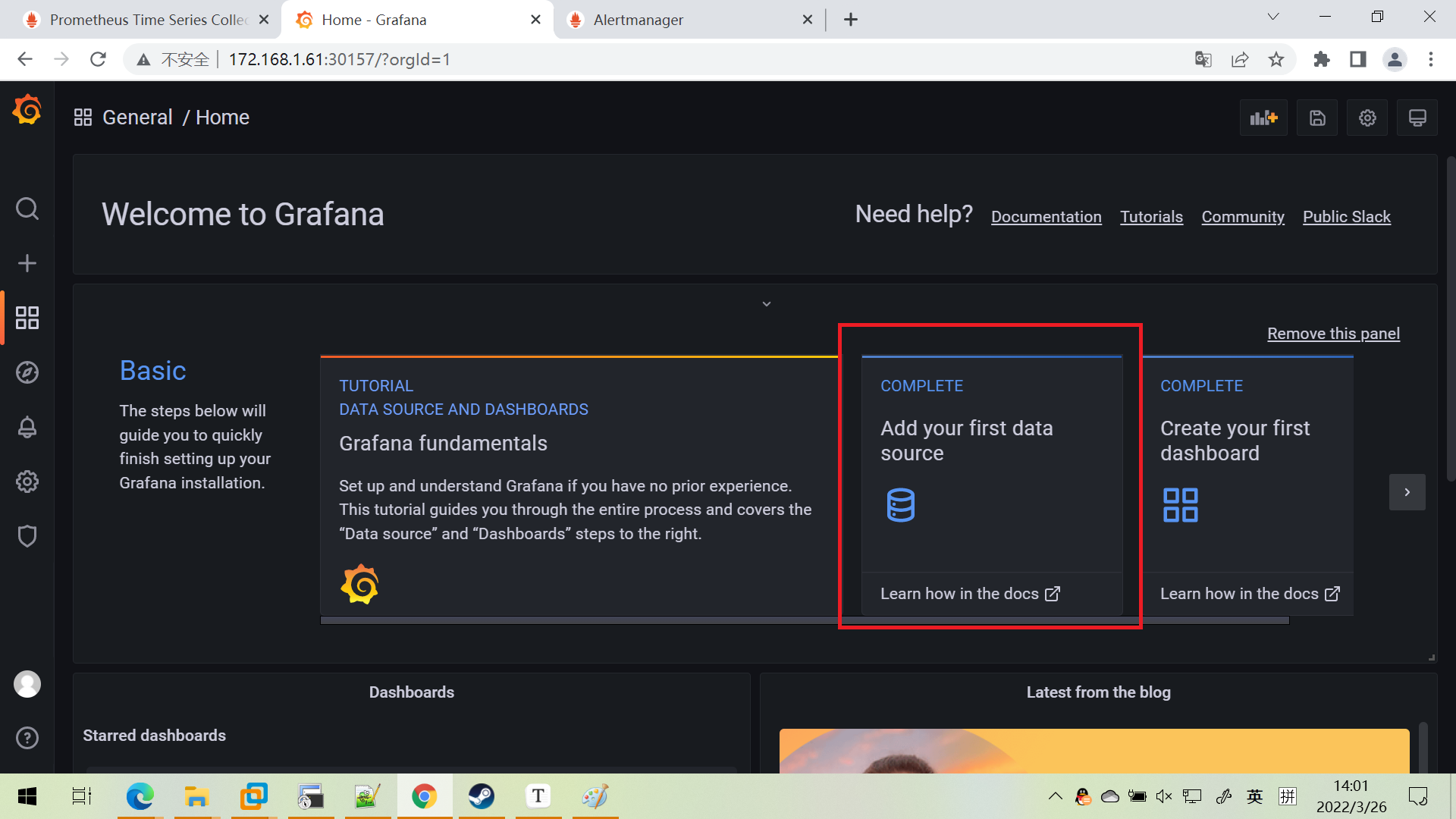

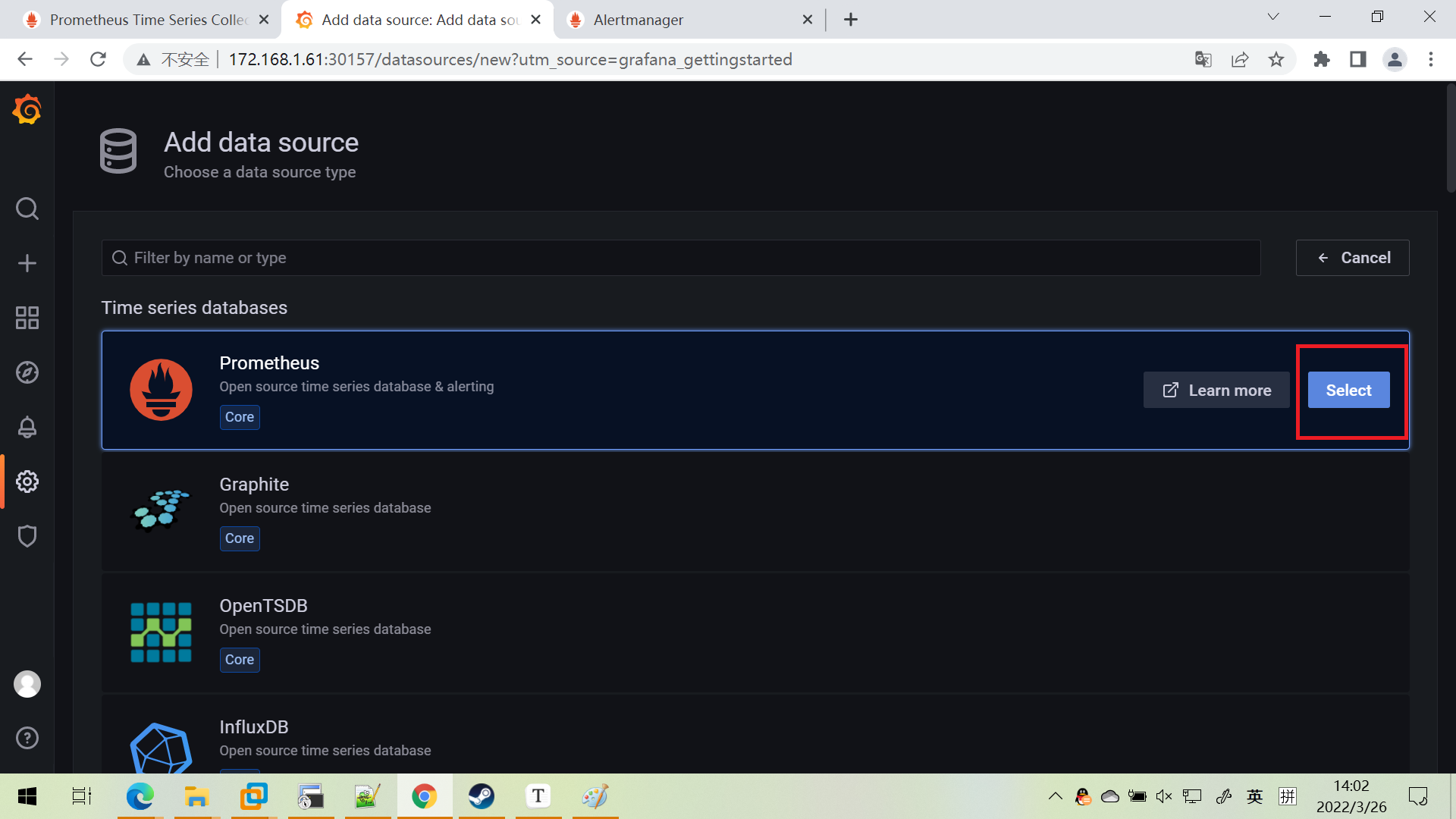

配置prometheus数据源

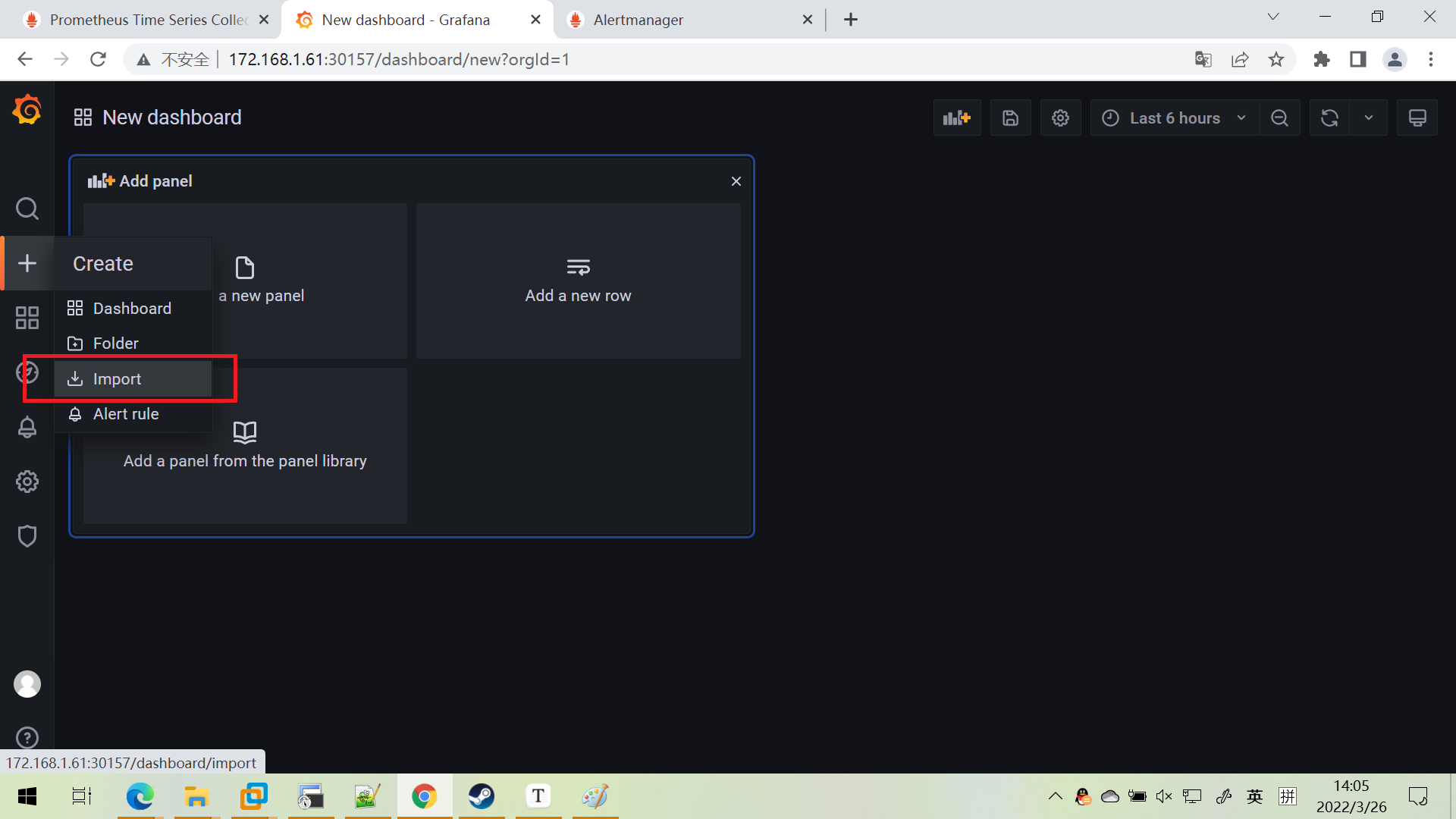

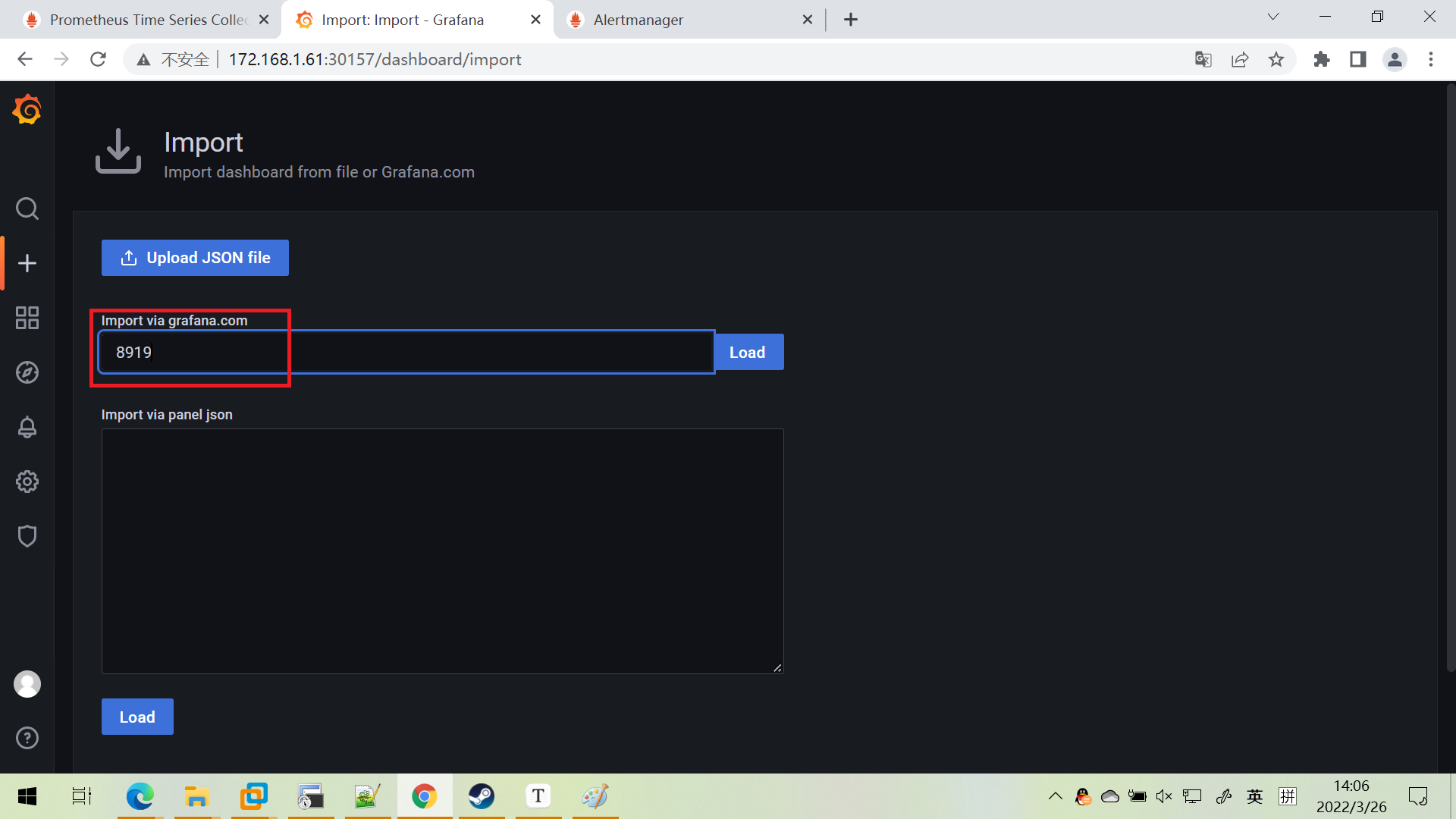

安装仪表盘模板

展示成果

3.3、部署altermanager

3.4、prometheus高可用

参考文献: