1. Deploying Docker

1.1 Deploying Docker on CentOS

# Remove old Docker versions

[root@harbor ~]# sudo yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

# Add the Aliyun yum mirror

[root@harbor ~]# sudo yum install -y yum-utils

[root@harbor ~]# sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# List the installable Docker versions

[root@harbor ~]# yum list docker-ce --showduplicates | sort -r

* updates: mirrors.cn99.com

Loading mirror speeds from cached hostfile

Loaded plugins: fastestmirror

Installed Packages

* extras: mirrors.ustc.edu.cn

* epel: hk.mirrors.thegigabit.com

* elrepo: mirrors.tuna.tsinghua.edu.cn

docker-ce.x86_64 3:20.10.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.13-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.13-3.el7 @docker-ce-stable

docker-ce.x86_64 3:20.10.12-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.11-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.10-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.15-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.14-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.13-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.12-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.11-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.10-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.0-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.3.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.2.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

* base: mirrors.cn99.com

Available Packages

# Install the latest Docker

# If you are installing for Kubernetes, check kubernetes.io for the Docker version that matches your Kubernetes release

[root@harbor ~]# sudo yum -y install docker-ce docker-ce-cli containerd.io

# Start Docker

[root@harbor ~]# sudo systemctl enable docker

[root@harbor ~]# sudo systemctl start docker

1.2 Deploying Docker on Ubuntu

# Remove old Docker versions

test@ubuntu:~$ sudo apt-get remove docker docker-engine docker.io containerd runc

# Install dependencies

test@ubuntu:~$ sudo apt-get update

test@ubuntu:~$ sudo apt-get install apt-transport-https ca-certificates curl gnupg lsb-release

# Add Docker's official GPG key

test@ubuntu:~$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Add the Aliyun mirror's GPG key (before adding the repository, so apt can verify it)

test@ubuntu:~$ curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Add the Docker repository (Aliyun mirror)

test@ubuntu:~$ sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Install Docker

test@ubuntu:~$ sudo apt-cache policy docker-ce

test@ubuntu:~$ sudo apt-get install -y docker-ce

test@ubuntu:~$ sudo systemctl enable docker

test@ubuntu:~$ sudo systemctl start docker

2. Configuring Docker

2.1 How to configure Docker

Configuring Docker is straightforward: either add startup options in /usr/lib/systemd/system/docker.service, or edit /etc/docker/daemon.json. The available options can be looked up with man dockerd or dockerd --help.

# List the dockerd configuration options

[root@harbor ~]# dockerd --help

Usage: dockerd [OPTIONS]

A self-sufficient runtime for containers.

Options:

--add-runtime runtime Register an additional OCI compatible runtime (default [])

--allow-nondistributable-artifacts list Allow push of nondistributable artifacts to registry

--api-cors-header string Set CORS headers in the Engine API

--authorization-plugin list Authorization plugins to load

--bip string Specify network bridge IP

-b, --bridge string Attach containers to a network bridge

--cgroup-parent string Set parent cgroup for all containers

--config-file string Daemon configuration file (default "/etc/docker/daemon.json")

--containerd string containerd grpc address

--containerd-namespace string Containerd namespace to use (default "moby")

--containerd-plugins-namespace string Containerd namespace to use for plugins (default "plugins.moby")

--cpu-rt-period int Limit the CPU real-time period in microseconds for the parent cgroup for all containers

--cpu-rt-runtime int Limit the CPU real-time runtime in microseconds for the parent cgroup for all containers

--cri-containerd start containerd with cri

--data-root string Root directory of persistent Docker state (default "/var/lib/docker")

-D, --debug Enable debug mode

--default-address-pool pool-options Default address pools for node specific local networks

--default-cgroupns-mode string Default mode for containers cgroup namespace ("host" | "private") (default "host")

--default-gateway ip Container default gateway IPv4 address

--default-gateway-v6 ip Container default gateway IPv6 address

--default-ipc-mode string Default mode for containers ipc ("shareable" | "private") (default "private")

--default-runtime string Default OCI runtime for containers (default "runc")

--default-shm-size bytes Default shm size for containers (default 64MiB)

--default-ulimit ulimit Default ulimits for containers (default [])

--dns list DNS server to use

--dns-opt list DNS options to use

--dns-search list DNS search domains to use

--exec-opt list Runtime execution options

--exec-root string Root directory for execution state files (default "/var/run/docker")

--experimental Enable experimental features

--fixed-cidr string IPv4 subnet for fixed IPs

--fixed-cidr-v6 string IPv6 subnet for fixed IPs

-G, --group string Group for the unix socket (default "docker")

--help Print usage

-H, --host list Daemon socket(s) to connect to

--host-gateway-ip ip IP address that the special 'host-gateway' string in --add-host resolves to. Defaults to the IP address of the default bridge

--icc Enable inter-container communication (default true)

--init Run an init in the container to forward signals and reap processes

--init-path string Path to the docker-init binary

--insecure-registry list Enable insecure registry communication

--ip ip Default IP when binding container ports (default 0.0.0.0)

--ip-forward Enable net.ipv4.ip_forward (default true)

--ip-masq Enable IP masquerading (default true)

--ip6tables Enable addition of ip6tables rules

--iptables Enable addition of iptables rules (default true)

--ipv6 Enable IPv6 networking

--label list Set key=value labels to the daemon

--live-restore Enable live restore of docker when containers are still running

--log-driver string Default driver for container logs (default "json-file")

-l, --log-level string Set the logging level ("debug"|"info"|"warn"|"error"|"fatal") (default "info")

--log-opt map Default log driver options for containers (default map[])

--max-concurrent-downloads int Set the max concurrent downloads for each pull (default 3)

--max-concurrent-uploads int Set the max concurrent uploads for each push (default 5)

--max-download-attempts int Set the max download attempts for each pull (default 5)

--metrics-addr string Set default address and port to serve the metrics api on

--mtu int Set the containers network MTU

--network-control-plane-mtu int Network Control plane MTU (default 1500)

--no-new-privileges Set no-new-privileges by default for new containers

--node-generic-resource list Advertise user-defined resource

--oom-score-adjust int Set the oom_score_adj for the daemon

-p, --pidfile string Path to use for daemon PID file (default "/var/run/docker.pid")

--raw-logs Full timestamps without ANSI coloring

--registry-mirror list Preferred Docker registry mirror

--rootless Enable rootless mode; typically used with RootlessKit

--seccomp-profile string Path to seccomp profile

--selinux-enabled Enable selinux support

--shutdown-timeout int Set the default shutdown timeout (default 15)

-s, --storage-driver string Storage driver to use

--storage-opt list Storage driver options

--swarm-default-advertise-addr string Set default address or interface for swarm advertised address

--tls Use TLS; implied by --tlsverify

--tlscacert string Trust certs signed only by this CA (default "/root/.docker/ca.pem")

--tlscert string Path to TLS certificate file (default "/root/.docker/cert.pem")

--tlskey string Path to TLS key file (default "/root/.docker/key.pem")

--tlsverify Use TLS and verify the remote

--userland-proxy Use userland proxy for loopback traffic (default true)

--userland-proxy-path string Path to the userland proxy binary

--userns-remap string User/Group setting for user namespaces

-v, --version Print version information and quit
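As a minimal sketch of the daemon.json route described at the start of this section, the script below writes a small configuration file. The helper name write_daemon_json, the scratch path, and the option values are assumptions made for illustration; only the option names come from the dockerd --help listing above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write a small daemon.json to the given path.
# Option names mirror dockerd flags; the values are illustrative only.
write_daemon_json() {
  local target="$1"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
{
  "live-restore": true,
  "max-concurrent-downloads": 10,
  "log-opts": {
    "max-size": "100m",
    "max-file": "5"
  }
}
EOF
}

# Write to a scratch location by default so the sketch is safe to run.
# Pass /etc/docker/daemon.json (as root) to change the real daemon config.
target="${1:-${TMPDIR:-/tmp}/daemon.json.example}"
write_daemon_json "$target"
echo "wrote $target"

# After editing the real file, restart the daemon to apply it:
#   sudo systemctl restart docker
```

Writing to a scratch path first makes it easy to review the file before copying it into /etc/docker and restarting the daemon.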

2.2 Common configuration options explained

Here is a sample daemon.json for reference:

{

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn","https://hub-mirror.c.163.com"],

"insecure-registries": ["docker02:35000"],

"max-concurrent-downloads": 20,

"live-restore": true,

"max-concurrent-uploads": 10,

"debug": true,

"data-root": "/dockerHome/data",

"exec-root": "/dockerHome/exec",

"log-opts": {

"max-size": "100m",

"max-file": "5"

}

}

registry-mirrors

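Docker registry mirrors. Commonly used ones include:

- Docker China: https://registry.docker-cn.com

- NetEase: http://hub-mirror.c.163.com

- USTC mirror: https://docker.mirrors.ustc.edu.cn

- Tencent Cloud mirror: https://mirror.ccs.tencentyun.com

- Alibaba Cloud: requires setting up its image accelerator, which gives each account its own dedicated address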

insecure-registries

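Insecure registries. Docker expects registries to be served over HTTPS, so a registry that is only reachable over plain HTTP must be listed under insecure-registries before the daemon will trust it.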

max-concurrent-downloads

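The maximum number of concurrent downloads, used to tune parallelism when pulling images. Docker images are built on union filesystem (UnionFS) layers, so an image is pulled layer by layer: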

[root@harbor ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

ae13dd578326: Pull complete

6c0ee9353e13: Pull complete

dca7733b187e: Pull complete

352e5a6cac26: Pull complete

9eaf108767c7: Pull complete

be0c016df0be: Pull complete

Digest: sha256:e1211ac17b29b585ed1aee166a17fad63d344bc973bc63849d74c6452d549b3e

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

max-concurrent-downloads defaults to 3. Raising it can speed up image pulls, but too much concurrency can also saturate the network interface, so tune it for your environment.

max-concurrent-uploads

The maximum number of concurrent uploads when pushing an image.

live-restore

Keeps containers running while the Docker daemon is restarted.

debug

Enables debug logging. On CentOS, dockerd logs are written to /var/log/messages.

data-root

Specifies the Docker data directory, /var/lib/docker by default. It stores cluster state, images, local volumes, and so on.

exec-root

The directory that holds container execution state files.

log-opts

max-size: the maximum size of a log file before it is rotated

max-file: the maximum number of log files to keep
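Because the log driver keeps at most max-file files of at most max-size each, the two options together put a rough ceiling on the log space one container can use. The helper below is a hypothetical sketch of that arithmetic, using the values from the sample daemon.json above (100m and 5).

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: rough upper bound on per-container log usage, in MB.
# $1 = max-size in megabytes (the number before "m"), $2 = max-file.
log_cap_mb() {
  local max_size_mb="$1" max_file="$2"
  echo $(( max_size_mb * max_file ))
}

log_cap_mb 100 5   # values from the sample daemon.json; prints 500
```

Multiplying this figure by the number of containers on the host gives a first estimate of how much disk to reserve for logs under data-root.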

The full daemon.json is shown below, taken from the official documentation:

{

"allow-nondistributable-artifacts": [],

"api-cors-header": "",

"authorization-plugins": [],

"bip": "",

"bridge": "",

"cgroup-parent": "",

"cluster-advertise": "",

"cluster-store": "",

"cluster-store-opts": {},

"containerd": "/run/containerd/containerd.sock",

"containerd-namespace": "docker",

"containerd-plugin-namespace": "docker-plugins",

"data-root": "",

"debug": true,

"default-address-pools": [

{

"base": "172.30.0.0/16",

"size": 24

},

{

"base": "172.31.0.0/16",

"size": 24

}

],

"default-cgroupns-mode": "private",

"default-gateway": "",

"default-gateway-v6": "",

"default-runtime": "runc",

"default-shm-size": "64M",

"default-ulimits": {

"nofile": {

"Hard": 64000,

"Name": "nofile",

"Soft": 64000

}

},

"dns": [],

"dns-opts": [],

"dns-search": [],

"exec-opts": [],

"exec-root": "",

"experimental": false,

"features": {},

"fixed-cidr": "",

"fixed-cidr-v6": "",

"group": "",

"hosts": [],

"icc": false,

"init": false,

"init-path": "/usr/libexec/docker-init",

"insecure-registries": [],

"ip": "0.0.0.0",

"ip-forward": false,

"ip-masq": false,

"iptables": false,

"ip6tables": false,

"ipv6": false,

"labels": [],

"live-restore": true,

"log-driver": "json-file",

"log-level": "",

"log-opts": {

"cache-disabled": "false",

"cache-max-file": "5",

"cache-max-size": "20m",

"cache-compress": "true",

"env": "os,customer",

"labels": "somelabel",

"max-file": "5",

"max-size": "10m"

},

"max-concurrent-downloads": 3,

"max-concurrent-uploads": 5,

"max-download-attempts": 5,

"mtu": 0,

"no-new-privileges": false,

"node-generic-resources": [

"NVIDIA-GPU=UUID1",

"NVIDIA-GPU=UUID2"

],

"oom-score-adjust": -500,

"pidfile": "",

"raw-logs": false,

"registry-mirrors": [],

"runtimes": {

"cc-runtime": {

"path": "/usr/bin/cc-runtime"

},

"custom": {

"path": "/usr/local/bin/my-runc-replacement",

"runtimeArgs": [

"--debug"

]

}

},

"seccomp-profile": "",

"selinux-enabled": false,

"shutdown-timeout": 15,

"storage-driver": "",

"storage-opts": [],

"swarm-default-advertise-addr": "",

"tls": true,

"tlscacert": "",

"tlscert": "",

"tlskey": "",

"tlsverify": true,

"userland-proxy": false,

"userland-proxy-path": "/usr/libexec/docker-proxy",

"userns-remap": ""

}

3. Understanding Docker in depth

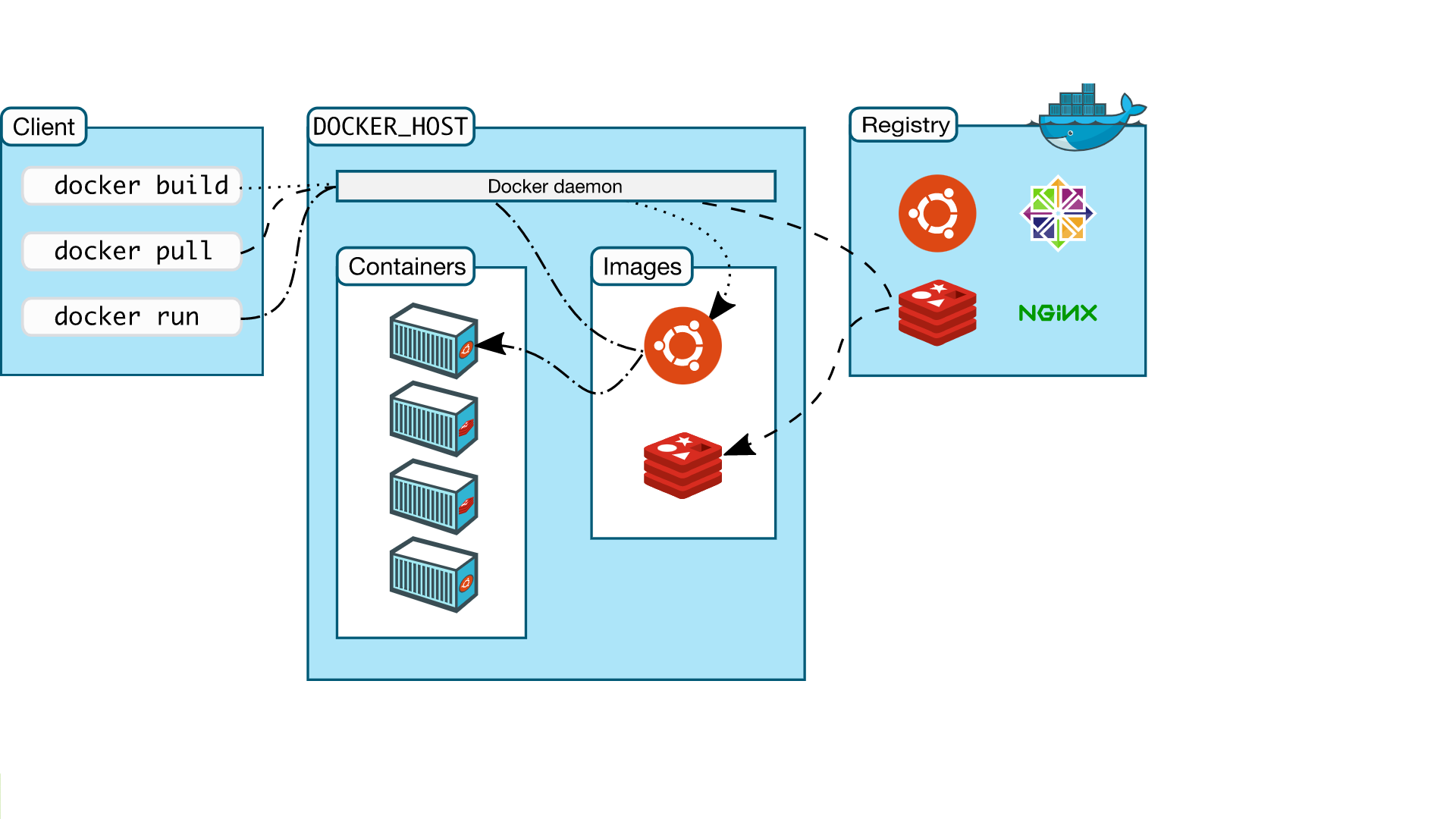

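3.1 Docker architecture

Docker uses a standard client-server (C/S) architecture, as shown in the architecture diagram.

The docker command is the client and the Docker daemon is the server. Users interact with the daemon through the docker command.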

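3.2 Docker internals: namespaces

Namespaces are the isolation mechanism in Linux, including the following 7 types:

| Namespace | Clone flag | What it isolates |
|---|---|---|
| UTS | CLONE_NEWUTS | Hostname and domain name |
| IPC | CLONE_NEWIPC | Semaphores, message queues, shared memory |
| PID | CLONE_NEWPID | Process IDs |
| NETWORK | CLONE_NEWNET | Network devices, network stack, ports |
| MOUNT | CLONE_NEWNS | Mount points, filesystems |
| USER | CLONE_NEWUSER | Users and groups |
| CGROUP | CLONE_NEWCGROUP | cgroup root directory |

A container run by Docker can be thought of as a virtual machine without a systemd process, with process-level isolation provided by namespaces.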

3.2.1 PID namespace

Running the following command starts a container:

[root@harbor ~]# docker run -itd --name=nginx nginx

081599f8f8cf1fc55e988b63002db7291983fca073ea98f28d370ccbdcb1a1d4

Running ps on the host now shows the nginx processes:

[root@harbor ~]# ps -ef | grep nginx

root 15007 14989 0 20:52 pts/0 00:00:00 nginx: master process nginx -g daemon off;

101 15059 15007 0 20:52 pts/0 00:00:00 nginx: worker process

101 15060 15007 0 20:52 pts/0 00:00:00 nginx: worker process

root 15063 14843 0 20:53 pts/0 00:00:00 grep --color=auto nginx

The nginx master process has PID 15007, and the nginx worker processes have PIDs 15059 and 15060.

What do we see inside the container?

[root@harbor ~]# docker exec -it nginx bash

# The nginx container has no ps command, so read nginx.pid to find the nginx process

root@081599f8f8cf:/# cat /var/run/nginx.pid

1

Inside the container the nginx process has PID 1. This is exactly the PID isolation that the PID namespace provides.

A clearer example illustrates the PID namespace further. First, build an image:

[root@harbor dockerfile]# cat dockerfile

FROM centos:7

RUN yum -y clean all && \

yum makecache && \

yum -y install epel-release && \

yum -y install redis && \

rm -rf /var/cache/yum/*

CMD redis-server

[root@harbor dockerfile]# docker build -t redis:v1 .

[root@harbor dockerfile]# docker run -itd --name=redis redis:v1

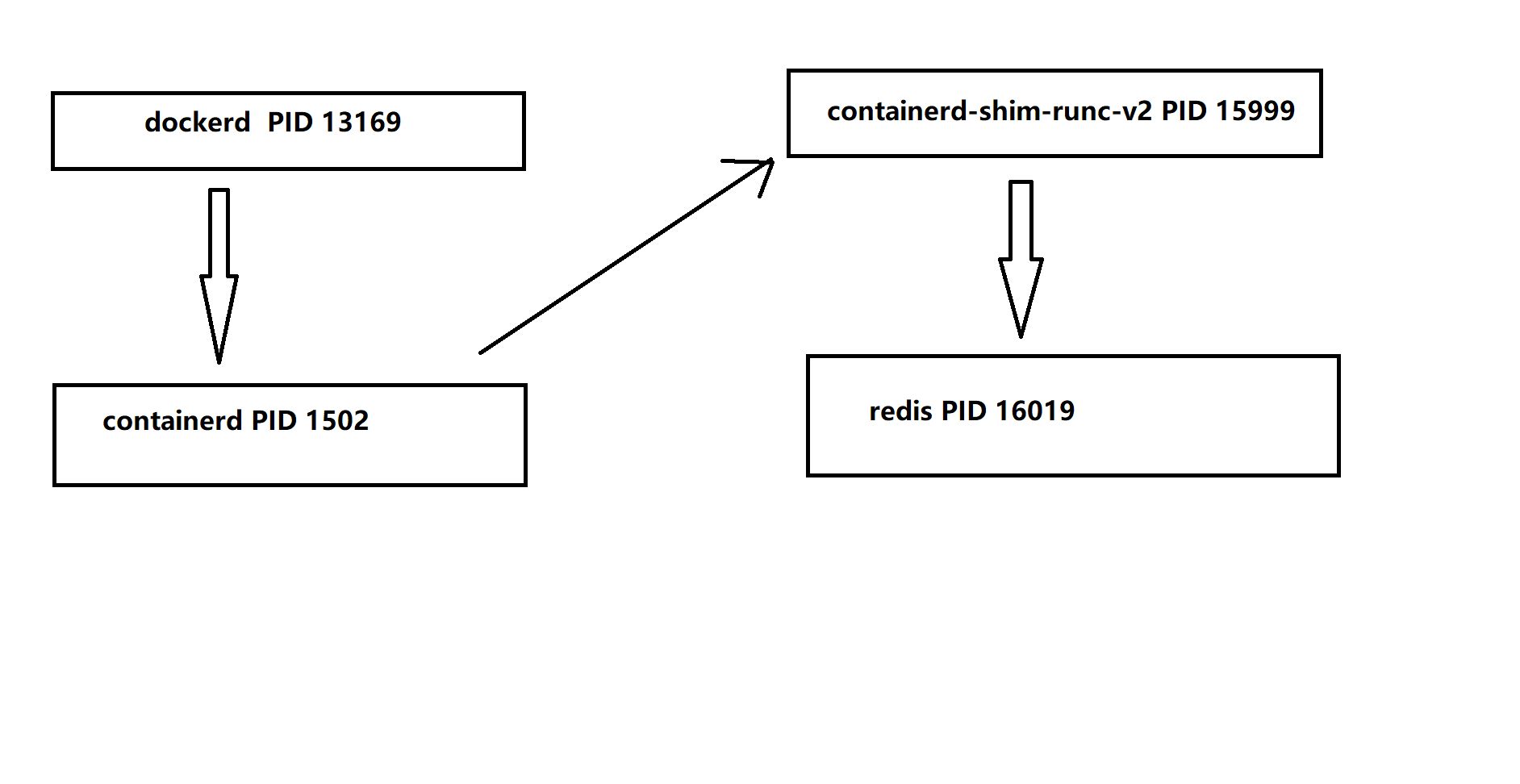

Now run the following commands on the host and observe:

# Get the dockerd PID

[root@harbor dockerfile]# ps -ef | grep dockerd | grep -v grep

root 13169 1 0 04:25 ? 00:00:21 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

# Get the containerd PID

[root@harbor dockerfile]# ps -ef | grep containerd | grep -v grep

root 1502 1 0 02:54 ? 00:04:10 /usr/bin/containerd

root 13169 1 0 04:25 ? 00:00:21 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 15999 1 0 21:23 ? 00:00:00 /usr/bin/ -namespace moby -id 0129ea131ef7d28a1d31996834f85fcffc075ae7d481b8dbaaf8d77c6cb81937 -address /run/containerd/containerd.sock

# Get the redis PID

[root@harbor dockerfile]# ps -ef | grep redis | grep -v grep

root 16019 15999 0 21:23 pts/0 00:00:00 redis-server *:6379

# Get the redis PID inside the container

[root@harbor dockerfile]# docker exec -it redis ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 13:23 pts/0 00:00:00 redis-server *:6379

root 45 0 0 13:36 pts/1 00:00:00 ps -ef

The relationship between these PIDs is shown in the accompanying figure.
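One way to confirm from the host that a containerized process really lives in a different PID namespace is to compare the namespace links under /proc. The sketch below assumes a Linux host; pid_ns_of is a hypothetical helper name, and 16019 is the host-side redis-server PID from the ps output above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the PID-namespace identifier of a process, e.g. "pid:[4026531836]".
# Inspecting another user's process may require root.
pid_ns_of() {
  readlink "/proc/$1/ns/pid"
}

# Namespace of the current shell. On the Docker host, compare it with
#   pid_ns_of 16019      # host PID of redis-server from the ps output
# Different identifiers mean the processes are in different PID namespaces.
pid_ns_of "$$"
```

Two processes that print the same identifier share a PID namespace, which is what you would expect for the redis-server process and the ps process started through docker exec in the same container.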

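3.2.2 Network namespace

A network namespace is a Linux kernel facility for network isolation. Passing the CLONE_NEWNET flag isolates network devices, the network stack, ports, and related resources. Network namespaces let a single operating system host multiple network spaces, each with its own independent protocol stack, and administrators can manage them with the ip tool: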

[root@harbor ~]# ip net help

Usage: ip netns list

ip netns add NAME

ip netns set NAME NETNSID

ip [-all] netns delete [NAME]

ip netns identify [PID]

ip netns pids NAME

ip [-all] netns exec [NAME] cmd ...

ip netns monitor

ip netns list-id

First, create a namespace named example_net1 with ip netns add example_net1:

[root@harbor ~]# ip netns add example_net1

[root@harbor ~]# ip netns list

example_net1

[root@harbor ~]# ls /var/run/netns/

example_net1

The new namespace has its own network interfaces, ARP table, routing table, and iptables rules, which we can inspect with ip netns exec:

[root@harbor ~]# ip netns exec example_net1 ip link list

1: lo: <loopback> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[root@harbor ~]# ip netns exec example_net1 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

[root@harbor ~]# ip netns exec example_net1 iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

[root@harbor ~]# ip netns exec example_net1 arp -a

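This experiment shows that the namespace's ARP table, routing table, and iptables rules are isolated. How can we verify the same for network interfaces? Create a veth pair and move one end into the namespace: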

[root@harbor ~]# ip link add type veth

[root@harbor ~]# ip link list

1: lo: <loopback,up,lower_up> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <broadcast,multicast,up,lower_up> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:fc:3f:5f brd ff:ff:ff:ff:ff:ff

3: docker0: <no-carrier,broadcast,multicast,up> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:ad:84:cb:87 brd ff:ff:ff:ff:ff:ff

4: veth0@veth1: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 86:62:51:08:e8:1b brd ff:ff:ff:ff:ff:ff

5: veth1@veth0: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 3a:21:26:35:f8:82 brd ff:ff:ff:ff:ff:ff

[root@harbor ~]# ip link set veth0 netns example_net1

[root@harbor ~]# ip link list

1: lo: <loopback,up,lower_up> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <broadcast,multicast,up,lower_up> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:fc:3f:5f brd ff:ff:ff:ff:ff:ff

3: docker0: <no-carrier,broadcast,multicast,up> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:ad:84:cb:87 brd ff:ff:ff:ff:ff:ff

5: veth1@if4: <broadcast,multicast> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 3a:21:26:35:f8:82 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@harbor ~]# ip netns exec example_net1 ip link list

1: lo: <loopback> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: veth0@if5: <broadcast,multicast> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 86:62:51:08:e8:1b brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@harbor ~]#

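After the veth pair is created, the host shows 5 interfaces. Once veth0 is moved into the example_net1 namespace, only 4 remain on the host and veth0 appears inside example_net1, so network interfaces are isolated as well.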
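In the output above, a name such as veth1@if4 means that the peer of veth1 is interface index 4 in another namespace. As a small sketch, the hypothetical helper veth_peers below extracts these pairs from ip link list output so the two ends can be matched up; the sample input lines are copied from the listing above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Read `ip link list` output on stdin and print "<interface> <peer ifindex>"
# for every interface whose peer lives in another namespace (name@ifN).
veth_peers() {
  awk -F': ' '{
    n = split($2, part, "@if")
    if (n == 2) print part[1], part[2]
  }'
}

# Sample lines taken from the host-side output after moving veth0.
veth_peers <<'EOF'
3: docker0: <no-carrier,broadcast,multicast,up> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
5: veth1@if4: <broadcast,multicast> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether 3a:21:26:35:f8:82 brd ff:ff:ff:ff:ff:ff link-netnsid 0
EOF
```

On a live host the same helper can be fed with ip link list | veth_peers; the reported index (4 here) is the index veth0 carries inside example_net1.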

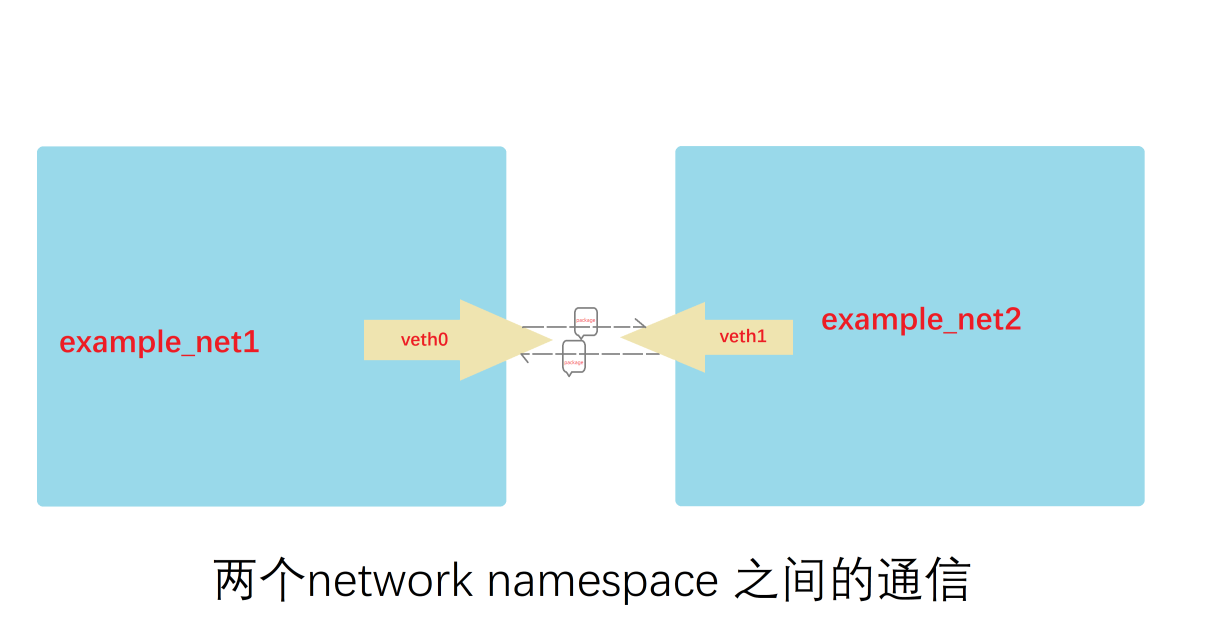

How do namespaces communicate with each other? To take the simulation further, create a second namespace and move veth1 into it:

[root@harbor ~]# ip netns add example_net2

[root@harbor ~]# ip link set veth1 netns example_net2

Next, configure the interfaces:

[root@harbor ~]# ip netns exec example_net1 ip link set veth0 up

[root@harbor ~]# ip netns exec example_net2 ip link set veth1 up

[root@harbor ~]# ip netns exec example_net1 ip addr add 172.19.0.1/24 dev veth0

[root@harbor ~]# ip netns exec example_net2 ip addr add 172.19.0.2/24 dev veth1

[root@harbor ~]# ip netns exec example_net1 ip a

1: lo: <loopback> mtu 65536 qdisc noop state DOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: veth0@if5: <broadcast,multicast,up,lower_up> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 86:62:51:08:e8:1b brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 172.19.0.1/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::8462:51ff:fe08:e81b/64 scope link

valid_lft forever preferred_lft forever

[root@harbor ~]# ip netns exec example_net2 ip a

1: lo: <loopback> mtu 65536 qdisc noop state DOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

5: veth1@if4: <broadcast,multicast,up,lower_up> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3a:21:26:35:f8:82 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.19.0.2/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::3821:26ff:fe35:f882/64 scope link

valid_lft forever preferred_lft forever

The two namespaces can now communicate. The network model is shown in the figure.

[root@harbor ~]# ip netns exec example_net1 ping 172.19.0.2

PING 172.19.0.2 (172.19.0.2) 56(84) bytes of data.

64 bytes from 172.19.0.2: icmp_seq=1 ttl=64 time=0.095 ms

64 bytes from 172.19.0.2: icmp_seq=2 ttl=64 time=0.042 ms

64 bytes from 172.19.0.2: icmp_seq=3 ttl=64 time=0.057 ms

^C

--- 172.19.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2015ms

rtt min/avg/max/mdev = 0.042/0.064/0.095/0.024 ms

[root@harbor ~]#
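The steps of this experiment can be collected into one script, sketched below under a few assumptions: the namespace and interface names (demo_a, demo_b, veth_a, veth_b) are hypothetical, the veth pair is created with explicit names instead of the kernel defaults, and a cleanup step is added that the walkthrough does not include. By default the script only prints the commands; set DRY_RUN=0 and run it as root to execute them.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print each command by default; set DRY_RUN=0 (as root) to execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical names, chosen only for this sketch.
NS_A=demo_a
NS_B=demo_b

setup() {
  run ip netns add "$NS_A"
  run ip netns add "$NS_B"
  run ip link add veth_a type veth peer name veth_b
  run ip link set veth_a netns "$NS_A"
  run ip link set veth_b netns "$NS_B"
  run ip netns exec "$NS_A" ip addr add 172.19.0.1/24 dev veth_a
  run ip netns exec "$NS_B" ip addr add 172.19.0.2/24 dev veth_b
  run ip netns exec "$NS_A" ip link set veth_a up
  run ip netns exec "$NS_B" ip link set veth_b up
  run ip netns exec "$NS_A" ping -c 3 172.19.0.2
}

# Deleting a namespace also destroys the veth end that lives inside it.
cleanup() {
  run ip netns delete "$NS_A"
  run ip netns delete "$NS_B"
}

setup
cleanup
```

The dry-run default is a deliberate choice: the commands change host networking, so it is safer to read the printed plan first and only then rerun with DRY_RUN=0.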

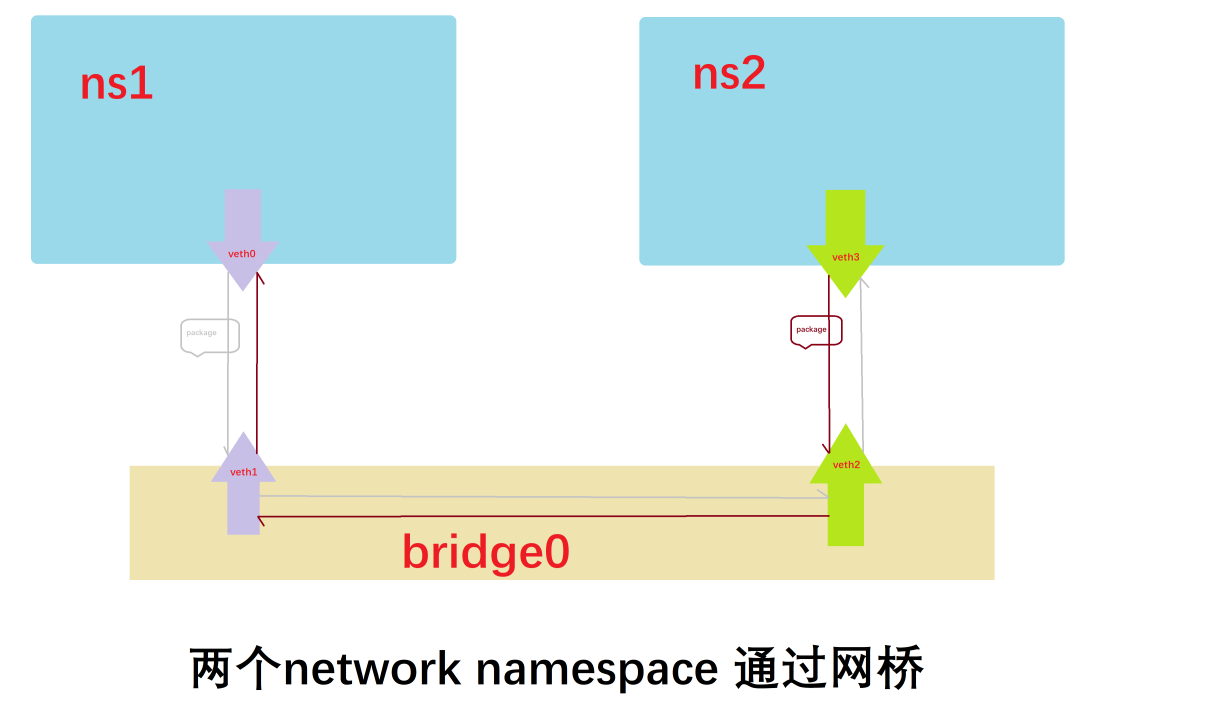

This is already very close to container networking. So how does docker0, the bridge Docker uses to connect containers, work? Let's simulate it on another machine.

[root@harbor ~]# ip netns add ns1

[root@harbor ~]# ip netns add ns2

[root@harbor ~]# brctl addbr bridge0

[root@harbor ~]# ip link set dev bridge0 up

[root@harbor ~]# ip link add type veth

[root@harbor ~]# ip link add type veth

[root@harbor ~]# ip link list

1: lo: <loopback,up,lower_up> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <broadcast,multicast,up,lower_up> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:1b:1e:63 brd ff:ff:ff:ff:ff:ff

3: docker0: <no-carrier,broadcast,multicast,up> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:85:70:1a:f0 brd ff:ff:ff:ff:ff:ff

24: bridge0: <broadcast,multicast,up,lower_up> mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 8e:34:ad:1e:80:18 brd ff:ff:ff:ff:ff:ff

25: veth0@veth1: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether a2:2f:0e:64:d0:7b brd ff:ff:ff:ff:ff:ff

26: veth1@veth0: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether d6:13:14:1a:d4:42 brd ff:ff:ff:ff:ff:ff

27: veth2@veth3: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2e:f1:f1:ae:77:1e brd ff:ff:ff:ff:ff:ff

28: veth3@veth2: <broadcast,multicast,m-down> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether da:08:09:21:36:98 brd ff:ff:ff:ff:ff:ff

[root@harbor ~]# ip link set dev veth0 netns ns1

[root@harbor ~]# ip netns exec ns1 ip addr add 192.21.0.1/24 dev veth0

[root@harbor ~]# ip netns exec ns1 ip link set veth0 up

[root@harbor ~]# ip link set dev veth3 netns ns2

[root@harbor ~]# ip netns exec ns2 ip addr add 192.21.0.2/24 dev veth3

[root@harbor ~]# ip netns exec ns2 ip link set veth3 up

[root@harbor ~]# ip link set dev veth1 master bridge0

[root@harbor ~]# ip link set dev veth2 master bridge0

[root@harbor ~]# ip link set dev veth1 up

[root@harbor ~]# ip link set dev veth2 up

[root@harbor ~]# ip netns exec ns1 ping 192.21.0.2

PING 192.21.0.2 (192.21.0.2) 56(84) bytes of data.

64 bytes from 192.21.0.2: icmp_seq=1 ttl=64 time=0.075 ms

64 bytes from 192.21.0.2: icmp_seq=2 ttl=64 time=0.064 ms

64 bytes from 192.21.0.2: icmp_seq=3 ttl=64 time=0.077 ms

^C

--- 192.21.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2005ms

rtt min/avg/max/mdev = 0.064/0.072/0.077/0.005 ms

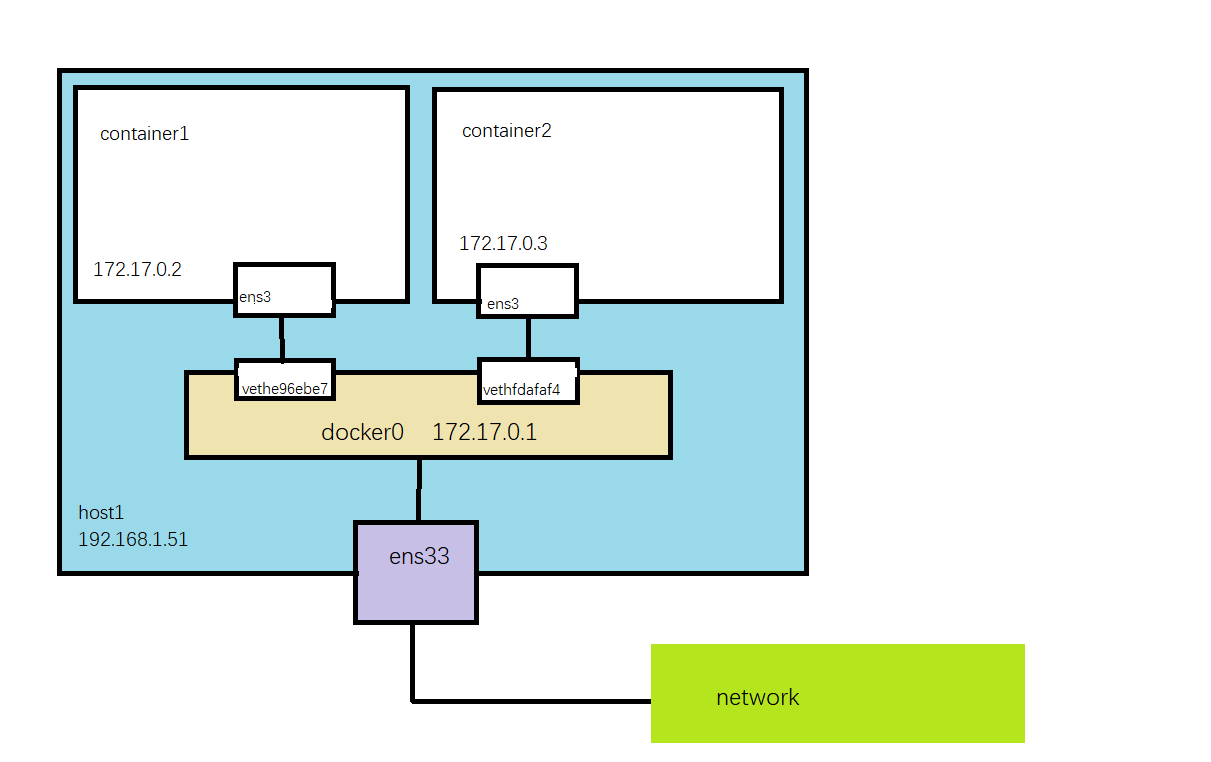

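This simulates how containers communicate through the docker0 bridge. The network model is shown in the figure.

Building on network namespaces, Docker implements 4 network modes:

- bridge mode

- host mode

- container mode

- none mode

3.2.2.1 bridge mode

Once Docker is installed, it automatically creates a default bridge, docker0. bridge is also Docker's default network mode: when --network is not specified, each container gets its own network namespace and its own IP. This is easy to observe on the host: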

[root@harbor ~]# ifconfig

docker0: flags=4099<up,broadcast,multicast> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:f7:b9:1b:10 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 192.168.1.51 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::20c:29ff:fefc:3f5f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:fc:3f:5f txqueuelen 1000 (Ethernet)

RX packets 145458 bytes 212051596 (202.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9782 bytes 817967 (798.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<up,loopback,running> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 8 bytes 684 (684.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 684 (684.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Docker has created the bridge docker0 on the host with IP 172.17.0.1, and traffic leaving the bridge goes out through the host interface ens32. Next, create two containers:

[root@harbor ~]# docker run -itd centos:7.2.1511

22db06123b51ad671e2545e5204dec5c40853f60cbe1784519d614fd54fee838

[root@harbor ~]# docker run -itd centos:7.2.1511

f35b9d78e08f9c708c421913496cd1b0f37e7afd05a6619b2cd0997513d98885

[root@harbor ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f35b9d78e08f centos:7.2.1511 "/bin/bash" 3 seconds ago Up 2 seconds tender_nightingale

22db06123b51 centos:7.2.1511 "/bin/bash" 4 seconds ago Up 3 seconds elastic_darwin

Check the interfaces again. What has changed?

[root@harbor ~]# ifconfig

docker0: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:f7ff:feb9:1b10 prefixlen 64 scopeid 0x20<link>

ether 02:42:f7:b9:1b:10 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 648 (648.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 192.168.1.51 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::20c:29ff:fefc:3f5f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:fc:3f:5f txqueuelen 1000 (Ethernet)

RX packets 145565 bytes 212062634 (202.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9839 bytes 828247 (808.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<up,loopback,running> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 8 bytes 684 (684.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 684 (684.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethe96ebe7: flags=4163<up,broadcast,running,multicast> mtu 1500

inet6 fe80::fcf9:fbff:fefb:db3b prefixlen 64 scopeid 0x20<link>

ether fe:f9:fb:fb:db:3b txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 1038 (1.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethfdafaf4: flags=4163<up,broadcast,running,multicast> mtu 1500

inet6 fe80::1426:8eff:fe86:1dfb prefixlen 64 scopeid 0x20<link>

ether 16:26:8e:86:1d:fb txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 16 bytes 1296 (1.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

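Two new interfaces have appeared: vethe96ebe7 and vethfdafaf4. Each container also gets an interface of its own (eth0), and each host-side veth together with its container-side interface forms the veth pair used by bridge mode. A veth pair acts as a data channel, while the docker0 bridge serves as the gateway, as the figure illustrates.

Containers on the same host reach each other at layer 2 through the bridge; traffic to other hosts goes through the docker0 gateway.

3.2.2.2 host mode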

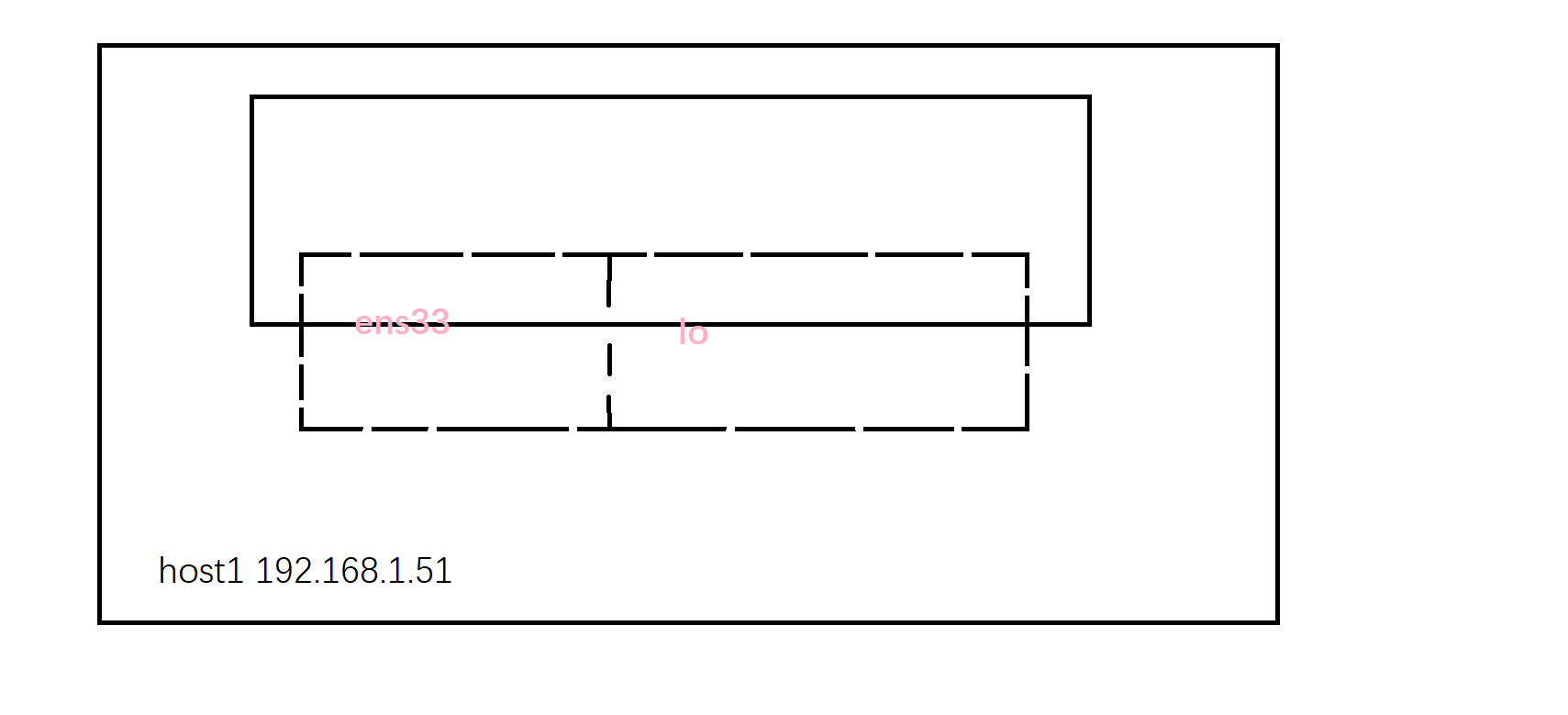

host mode shares the host's network, so the container's network configuration is identical to the host's. Start a container in host mode:

[root@harbor ~]# docker run -itd --network=host centos:7.2.1511

841b66477f9c1fc1cdbcd4377cc691db919f7e02565e03aa8367eb5b0357abeb

[root@harbor ~]# ifconfig

docker0: flags=4099<up,broadcast,multicast> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:65ff:fead:8ecf prefixlen 64 scopeid 0x20<link>

ether 02:42:65:ad:8e:cf txqueuelen 0 (Ethernet)

RX packets 18 bytes 19764 (19.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 16 bytes 2845 (2.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 192.168.1.51 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::20c:29ff:fefc:3f5f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:fc:3f:5f txqueuelen 1000 (Ethernet)

RX packets 147550 bytes 212300608 (202.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 11002 bytes 1116344 (1.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<up,loopback,running> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 8 bytes 684 (684.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 684 (684.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

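No virtual interface was created in host mode. Now look inside the container:

[root@harbor /]# hostname -i

fe80::20c:29ff:fefc:3f5f%ens32 fe80::42:65ff:fead:8ecf%docker0 192.168.1.51 172.17.0.1

The container's IP is the host's IP, and the docker0 gateway address is visible as well, as the figure shows.

3.2.2.3 container mode

In this mode, a newly created container is placed into the network stack of an existing container and shares its IP and other network resources; the two communicate over the lo loopback interface. Start a container to observe this: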

[root@harbor ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b7b201d3139f centos:7.2.1511 "/bin/bash" 28 minutes ago Up 27 minutes jovial_kepler

[root@harbor ~]# docker run -itd --network=container:b7b201d3139f centos:7.2.1511

c4a6d59a971a7f5d92bfcc22de85853bfd36580bf08272de58cb70bc4898a5f0

[root@harbor ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c4a6d59a971a centos:7.2.1511 "/bin/bash" 2 seconds ago Up 2 seconds confident_shockley

b7b201d3139f centos:7.2.1511 "/bin/bash" 28 minutes ago Up 28 minutes jovial_kepler

[root@harbor ~]# ifconfig

docker0: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

inet6 fe80::42:8ff:feed:83e7 prefixlen 64 scopeid 0x20<link>

ether 02:42:08:ed:83:e7 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 648 (648.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<up,broadcast,running,multicast> mtu 1500

inet 192.168.1.52 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::20c:29ff:fe1b:1e63 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:1b:1e:63 txqueuelen 1000 (Ethernet)

RX packets 146713 bytes 212204228 (202.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10150 bytes 947975 (925.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<up,loopback,running> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 4 bytes 340 (340.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4 bytes 340 (340.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethb360066: flags=4163<up,broadcast,running,multicast> mtu 1500

inet6 fe80::ca6:62ff:fe45:70e8 prefixlen 64 scopeid 0x20<link>

ether 0e:a6:62:45:70:e8 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 1038 (1.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Here we can see two containers but only one virtual NIC (the veth device). Now let's go into the container.

[root@harbor ~]# docker exec -it c4a6d59a971a bash

[root@b7b201d3139f /]# hostname -i

172.18.0.3

It is clear that the two containers share the same set of network resources.

3.2.2.4、none

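Passing --network=none creates an isolated network namespace but leaves it completely unconfigured. Using this mode requires the administrator to have a solid understanding of networking and to configure the network stack as needed.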

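3.3、The key to docker's fame: images

One reason docker, the most popular container technology, captured the market so quickly is the docker image. So what is a docker image? Put simply, it is an environment and an application bundled together: a packaging format similar to Java's jar or CentOS's rpm. This format lets an application packaged with docker be distributed quickly and run the same way in any environment. In that sense, the image specification is docker's greatest contribution. So what does this specification look like?

Let's first pull an image from Docker Hub.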

[root@harbor dockerfile]# docker pull tomcat

Using default tag: latest

latest: Pulling from library/tomcat

e4d61adff207: Pull complete

4ff1945c672b: Pull complete

ff5b10aec998: Pull complete

12de8c754e45: Pull complete

4848edf44506: Pull complete

e80286f947f6: Pull complete

fe10bb9a5d5c: Pull complete

e5e1929c6f14: Pull complete

d6131e5ef883: Pull complete

d1bc376657d0: Pull complete

Digest: sha256:2e6f26bc723256c7dfdc6e5d9bf34c190ae73fa3a73b8582095cc08b45eb09d9

Status: Downloaded newer image for tomcat:latest

docker.io/library/tomcat:latest

This is the tomcat image pulled from Docker Hub. During the pull, the image was split into 10 layers. Next, let's look at the image details.

[root@harbor dockerfile]# docker inspect 5cae83f90298

[

{

"Id": "sha256:5cae83f90298ae977da8a556b78a96bc3e390a8b782c8624ed0c06d69793a935",

"RepoTags": [

"tomcat:latest"

],

"RepoDigests": [

"tomcat@sha256:2e6f26bc723256c7dfdc6e5d9bf34c190ae73fa3a73b8582095cc08b45eb09d9"

],

"Parent": "",

"Comment": "",

"Created": "2022-03-16T01:42:37.143861055Z",

"Container": "c062a90b82df6e208c9d0d37f57665d5479708af7041b41475cd3e7614993edb",

"ContainerConfig": {

"Hostname": "c062a90b82df",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/local/openjdk-11",

"LANG=C.UTF-8",

"JAVA_VERSION=11.0.14.1",

"CATALINA_HOME=/usr/local/tomcat",

"TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib",

"LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib",

"GPG_KEYS=A9C5DF4D22E99998D9875A5110C01C5A2F6059E7",

"TOMCAT_MAJOR=10",

"TOMCAT_VERSION=10.0.18",

"TOMCAT_SHA512=a9e3c516676369bd9d52e768071898b0e07659a9ff03b9dc491e53f084b9981a929bf2c74a694f06ad26dae0644fb9617cc6e364f0e1dcd953c857978a95a644"

],

"Cmd": [

"/bin/sh",

"-c",

"#(nop) ",

"CMD [\"catalina.sh\" \"run\"]"

],

"Image": "sha256:79f590c9fc025f4c8f0f9da36ead3cdd90f934aad0edd8631e771776c50829a3",

"Volumes": null,

"WorkingDir": "/usr/local/tomcat",

"Entrypoint": null,

"OnBuild": null,

"Labels": {}

},

"DockerVersion": "20.10.12",

"Author": "",

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/local/openjdk-11",

"LANG=C.UTF-8",

"JAVA_VERSION=11.0.14.1",

"CATALINA_HOME=/usr/local/tomcat",

"TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib",

"LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib",

"GPG_KEYS=A9C5DF4D22E99998D9875A5110C01C5A2F6059E7",

"TOMCAT_MAJOR=10",

"TOMCAT_VERSION=10.0.18",

"TOMCAT_SHA512=a9e3c516676369bd9d52e768071898b0e07659a9ff03b9dc491e53f084b9981a929bf2c74a694f06ad26dae0644fb9617cc6e364f0e1dcd953c857978a95a644"

],

"Cmd": [

"catalina.sh",

"run"

],

"Image": "sha256:79f590c9fc025f4c8f0f9da36ead3cdd90f934aad0edd8631e771776c50829a3",

"Volumes": null,

"WorkingDir": "/usr/local/tomcat",

"Entrypoint": null,

"OnBuild": null,

"Labels": null

},

"Architecture": "amd64",

"Os": "linux",

"Size": 684979820,

"VirtualSize": 684979820,

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/fb710714eb8710ee3a2ab7c8fef97db7c8d5ff0cfc7d4b722cf8057dda0dcb50/diff:/var/lib/docker/overlay2/dc0cab0975eee25cd2e07212af372b71faff49c244b64774e01a5655bb7c9ad5/diff:/var/lib/docker/overlay2/85ef0bdc5666b4cf86943b91a618ef72fb3c37014dbb1efcb74cee2125d756aa/diff:/var/lib/docker/overlay2/02349334e5edde3d623e28b55d957a97d7c84a5536cfdec1d1c03b5f123ef0b7/diff:/var/lib/docker/overlay2/11e565986463adade917c7ab9b613b9c91ff22f7ce8c6007bd874fa003e4d140/diff:/var/lib/docker/overlay2/8e6bd3b86dd7bf8b961d85ccad1a894049fd4b779d6f600f6f477d5ae9185eda/diff:/var/lib/docker/overlay2/b8acff43f3b717bbdd6b9676fa1f5d8bdefb82eb842a7d4784e1786b5ad010fa/diff:/var/lib/docker/overlay2/322d3808c7ad35c38c6c97e4d5872f2952147071e769826741c529d0be913062/diff:/var/lib/docker/overlay2/675e14319f5d989334c6374061cefe85203db3c25f9f9d5291a9fe3b8d6d506d/diff",

"MergedDir": "/var/lib/docker/overlay2/3261f99abf26c763cbc185f247868fcf2a78e45e47e8b51c712823b563741b37/merged",

"UpperDir": "/var/lib/docker/overlay2/3261f99abf26c763cbc185f247868fcf2a78e45e47e8b51c712823b563741b37/diff",

"WorkDir": "/var/lib/docker/overlay2/3261f99abf26c763cbc185f247868fcf2a78e45e47e8b51c712823b563741b37/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:89fda00479fc0fe3bf2c411d92432001870e9dad42ddd0c53715ab77ac4f2a97",

"sha256:26d5108b2cba762ee9b91c30670091458a0c32b02132620b7f844085af596e22",

"sha256:48144a6f44ae89c578bd705dba2ebdb2a086b36215affa5659b854308fa22e4b",

"sha256:e3f84a8cee1f3e6a38a463251eb05b87a444dec565a7331217c145e9ef4dd192",

"sha256:d1609e012401924c7b64459163fd47033dbec7df2eacddbf190d42d934737598",

"sha256:804bc49f369a8842a9d438142eafc5dcc8fa8e5489596920e1ae6882a9fc9a26",

"sha256:da814db69f74ac380d1443168b969a07ac8f16c7c4a3175c86a48482a5c7b25f",

"sha256:e72ea1ea48e9f8399d034213c46456d86efe52e7106d99310143ba705ad8d4ee",

"sha256:8ab35e31d8f9f46a63509d3e68dee718905b86ebf15e0fedc75a547ccbc4b5c0",

"sha256:97fa55a28116271700a98a5e4b48a55bc831e0d1d91cd806636ac371cd4db770"

]

},

"Metadata": {

"LastTagTime": "0001-01-01T00:00:00Z"

}

}

]

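The image data lives in these directories (the LowerDir, UpperDir, and MergedDir paths under GraphDriver), and this is the layered image specification built on UnionFS. Apart from the bottom layer, every layer holds a reference to the layer beneath it, so its parent layer can always be found. This means parent layers can be reused: sharing parent layers reduces storage usage and speeds up builds.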

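3.3.1、Building images

There are two ways to build an image. The first is to build from a container with docker commit. This approach is not recommended, for the following reasons: 1) An image is layered, and a container adds a writable layer on top of the image, so an image built this way easily becomes oversized. 2) Nobody can tell exactly what the writable layer contains, which is a security concern and makes the image hard to maintain. The second way is to build with a dockerfile, which controls the whole build; this is also the officially recommended approach.

3.3.1.1、Basic build approach

Below is a standard dockerfile for tomcat.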

FROM centos:7

ENV VERSION=8.5.43

RUN yum install java-1.8.0-openjdk wget curl unzip iproute net-tools -y && \

yum clean all && \

rm -rf /var/cache/yum/*

COPY apache-tomcat-${VERSION}.tar.gz /

RUN tar zxf apache-tomcat-${VERSION}.tar.gz && \

mv apache-tomcat-${VERSION} /usr/local/tomcat && \

rm -rf apache-tomcat-${VERSION}.tar.gz /usr/local/tomcat/webapps/* && \

mkdir /usr/local/tomcat/webapps/test && \

echo "ok" > /usr/local/tomcat/webapps/test/status.html && \

sed -i '1a JAVA_OPTS="-Djava.security.egd=file:/dev/./urandom"' /usr/local/tomcat/bin/catalina.sh && \

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ENV PATH $PATH:/usr/local/tomcat/bin

WORKDIR /usr/local/tomcat

EXPOSE 8080

CMD ["catalina.sh", "run"]

This tomcat dockerfile uses the dockerfile's own instructions, including FROM, RUN, ENV, WORKDIR, and so on. What does each of them do?

3.3.1.1、FROM

FROM <img>:<tag>

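Images are layered. FROM references a base image and is mandatory. The image is built on top of it, and each instruction executed adds another layer on that base (RUN, COPY, and ADD add filesystem layers; the other instructions only add metadata). The base image should therefore be as lean as possible while still being secure.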

3.3.1.2、WORKDIR

WORKDIR /path/to/workdir

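This instruction sets the working directory. Once it is set, a shell opened in the container with exec starts in WORKDIR. Note that when commands use relative paths, WORKDIR must be set correctly to avoid path-related failures.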

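3.3.1.3、COPY and ADD

COPY <source> <destination>

ADD <source> <destination>

Both instructions copy files into the image, but there are some differences:

a、ADD can download a file from a URL and place it in the image.

b、ADD automatically extracts local tar archives, including those compressed with gzip, bzip2, and similar formats.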

3.3.1.4、RUN

RUN command1 ... commandN

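RUN introduces a command that is executed at build time. Every RUN produces one image layer, so it is advisable to chain commands with && to reduce the number of layers. For example, when building a base image, install java and tomcat in a single RUN so they land in the same layer; a business image then only needs to add one layer on top of the base image, which keeps the image lean.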

3.3.1.5、ENV

ENV key=value

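ENV injects environment variables into the image.

3.3.1.6、EXPOSE

EXPOSE <port>

This instruction declares the port the container listens on. It is a declaration only: the port is not published to the host unless -p or -P is passed to docker run.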

3.3.1.7、USER

USER app

USER app:app

USER 500

USER 500:500

USER app:500

USER 500:app

USER sets the user the container runs as. It can be used to run the application in the container with reduced privileges, which improves security. Before using this instruction with a user name, create the user with useradd.

3.3.1.8、ENTRYPOINT

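ENTRYPOINT ["command","param"]

ENTRYPOINT command param

ENTRYPOINT sets the command that runs when the container starts; it should be a foreground process that does not exit. The command set by ENTRYPOINT is not replaced by the arguments passed to docker run; it can only be changed explicitly with --entrypoint.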

3.3.1.9、CMD

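CMD ["command","param"]

CMD command param

CMD ["param1","param2"]

CMD is used the same way as ENTRYPOINT, but it can be overridden by the arguments passed to docker run. CMD can also be combined with ENTRYPOINT, in which case it supplies the default arguments to ENTRYPOINT.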
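
The interaction between ENTRYPOINT, CMD, and the arguments of docker run can be sketched as a small Python model. This is only an illustration of the exec-form rules described above, not docker's own code; the function name `effective_command` and its parameters are hypothetical.

```python
from typing import List, Optional


def effective_command(
    entrypoint: Optional[List[str]],
    cmd: Optional[List[str]],
    run_args: List[str],
) -> List[str]:
    """Model how exec-form ENTRYPOINT and CMD combine into the final argv.

    run_args stands for whatever follows the image name in `docker run`.
    """
    # Arguments given to `docker run` replace CMD entirely.
    tail = list(run_args) if run_args else list(cmd or [])
    # ENTRYPOINT, when set, is kept, and CMD (or the run arguments) is appended.
    return list(entrypoint or []) + tail


if __name__ == "__main__":
    # CMD only: the image default runs as-is.
    print(effective_command(None, ["catalina.sh", "run"], []))
    # CMD only, with run arguments: CMD is replaced.
    print(effective_command(None, ["catalina.sh", "run"], ["bash"]))
    # ENTRYPOINT plus CMD: run arguments replace only the CMD part.
    print(effective_command(["catalina.sh"], ["run"], ["version"]))
```

With only CMD set, `docker run <image> bash` replaces the whole command; with ENTRYPOINT set, the same arguments merely become parameters of the entrypoint.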

Now let's build a tomcat image ourselves.

FROM centos:7

ENV VERSION=8.5.77

RUN yum install java-1.8.0-openjdk wget curl unzip iproute net-tools -y && \

yum clean all && \

rm -rf /var/cache/yum/*

COPY apache-tomcat-${VERSION}.tar.gz /

RUN tar zxf apache-tomcat-${VERSION}.tar.gz && \

mv apache-tomcat-${VERSION} /usr/local/tomcat && \

rm -rf apache-tomcat-${VERSION}.tar.gz /usr/local/tomcat/webapps/* && \

mkdir /usr/local/tomcat/webapps/test && \

echo "ok" > /usr/local/tomcat/webapps/test/status.html && \

sed -i '1a JAVA_OPTS="-Djava.security.egd=file:/dev/./urandom"' /usr/local/tomcat/bin/catalina.sh && \

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ENV PATH $PATH:/usr/local/tomcat/bin

WORKDIR /usr/local/tomcat

EXPOSE 8080

CMD ["catalina.sh", "run"]

Start the build.

[root@harbor dockerfile]# docker build -t tomcat8:base .

Sending build context to Docker daemon 10.56MB

Step 1/9 : FROM centos:7

---> eeb6ee3f44bd

Step 2/9 : ENV VERSION=8.5.77

---> Running in c3df3fcc63cc

Removing intermediate container c3df3fcc63cc

---> ac866324e50d

Step 3/9 : RUN yum install java-1.8.0-openjdk wget curl unzip iproute net-tools -y && yum clean all && rm -rf /var/cache/yum/*

---> Running in 2dc60477854d

Loaded plugins: fastestmirror, ovl

......

......

Dependency Updated:

libcurl.x86_64 0:7.29.0-59.el7_9.1

Complete!

Loaded plugins: fastestmirror, ovl

Cleaning repos: base extras updates

Cleaning up list of fastest mirrors

Removing intermediate container 2dc60477854d

---> ba9e5716041f

Step 4/9 : COPY apache-tomcat-${VERSION}.tar.gz /

---> 59f9b402515f

Step 5/9 : RUN tar zxf apache-tomcat-${VERSION}.tar.gz && mv apache-tomcat-${VERSION} /usr/local/tomcat && rm -rf apache-tomcat-${VERSION}.tar.gz /usr/local/tomcat/webapps/* && mkdir /usr/local/tomcat/webapps/test && echo "ok" > /usr/local/tomcat/webapps/test/status.html && sed -i '1a JAVA_OPTS="-Djava.security.egd=file:/dev/./urandom"' /usr/local/tomcat/bin/catalina.sh && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

---> Running in 6bc6f93dedcf

Removing intermediate container 6bc6f93dedcf

---> b219db3618d4

Step 6/9 : ENV PATH $PATH:/usr/local/tomcat/bin

---> Running in 79dc2da9c5d7

Removing intermediate container 79dc2da9c5d7

---> 888f74d91c5e

Step 7/9 : WORKDIR /usr/local/tomcat

---> Running in ce9f128b62cd

Removing intermediate container ce9f128b62cd

---> 5b7e1f4509f4

Step 8/9 : EXPOSE 8080

---> Running in 110c4362db20

Removing intermediate container 110c4362db20

---> 8c754855adb1

Step 9/9 : CMD ["catalina.sh", "run"]

---> Running in a09c81f963a0

Removing intermediate container a09c81f963a0

---> 333033fa3b65

Successfully built 333033fa3b65

Successfully tagged tomcat8:base

Here is something interesting: while the build is in progress, docker ps shows a container that is being used for the build.

[root@harbor ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c5f2fe101512 f073544f2d8a "/bin/sh -c 'yum ins…" 4 seconds ago Up 3 seconds pensive_burnell

0129ea131ef7 redis:v1 "/bin/sh -c redis-se…" 47 minutes ago Up 47 minutes redis

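At this point, a centos7 base image with java8 and tomcat8 has been built.

3.3.1.2、Multi-stage builds

Multi-stage builds are a feature docker introduced in version 17.05. An early stage compiles the application, and the final stage copies only the build output, so the build tools stay out of the final image.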

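# Build stage: fetch the source and package it (the stage image must provide git and mvn)

FROM openjdk:8 AS builder

RUN git clone ssh://xxxxxx.git /home/xxxxxx && \

cd /home/xxxxxx && \

mvn clean package

# Final stage: copy only the build output from the build stage

FROM alpine

COPY --from=builder /home/xxxxxx/target /opt/xxx

ENTRYPOINT ["/opt/xxx/start.sh"]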

3.3.1.3、buildkit

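3.3.2、Image management

3.3.2.1、Cleaning up dangling images

A common situation in day-to-day work: a deployment package is modified several times and a new image is built after each change, but the repository name and tag stay the same. After each build, the name and tag point to the newest image, and the previous image shows up as <none>:<none>. That is a dangling image.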

[root@k8s03 hgfs]# docker images -f "dangling=true"

REPOSITORY TAG IMAGE ID CREATED SIZE

<none> <none> d4aa089b72f7 16 minutes ago 734MB

<none> <none> e0d2d9814c3f 17 minutes ago 357MB

Such images need to be cleaned up regularly to save space.
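
How a rebuild under the same name and tag leaves a dangling image behind can be sketched with a tiny in-memory model. This is an illustration only: the `ImageStore` class, the tag `app:v1`, and the ID `aaaaaaaaaaaa` are hypothetical, while the two dangling IDs are the ones from the listing above.

```python
from typing import Dict, List


class ImageStore:
    """Toy model of a local image store: image IDs plus a tag -> ID index."""

    def __init__(self) -> None:
        self.images: List[str] = []      # every image ID built so far, oldest first
        self.tags: Dict[str, str] = {}   # "repository:tag" -> image ID

    def build(self, image_id: str, tag: str) -> None:
        # A new build stores a new image and re-points the tag to it.
        self.images.append(image_id)
        self.tags[tag] = image_id

    def dangling(self) -> List[str]:
        # An image is dangling when no tag points to it any more.
        tagged = set(self.tags.values())
        return [image_id for image_id in self.images if image_id not in tagged]


if __name__ == "__main__":
    store = ImageStore()
    for image_id in ["e0d2d9814c3f", "d4aa089b72f7", "aaaaaaaaaaaa"]:
        store.build(image_id, "app:v1")  # same tag reused for every build
    print(store.dangling())              # the two older builds lost their tag
```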

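3.3.2.2、Cleaning up business images

Business images should in principle be cleaned up with caution. Expired images can be removed on a schedule, but for the sake of version control, the images in the artifact repository should be archived rather than deleted outright.

3.3.3、Personal experience with images

3.3.3.1、Don't chase small images at all costs

Many people pursue lean images, for example by using alpine or busybox as the base image. This does shrink the image, but alpine and busybox images lack many dynamic libraries and often cause strange problems that make troubleshooting harder. Moreover, images are built on UnionFS: with well-organized images, business images share the base image and the content that actually changes is small. On that basis there is no need to worry about slow distribution or slow scale-out caused by large images.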

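3.3.3.2、One image runs only one process

docker is praised for its failure recovery, but what docker monitors is the process with PID 1. If a container runs several applications and a process with a PID greater than 1 fails, docker cannot detect the failure or restart that process, which creates serious maintenance problems.

3.3.3.3、Do not use a process that just hangs, such as tail -f, as PID 1

3.3.3.4、Drop privileges

Containers share the host kernel, so docker only provides process-level isolation. Running processes as root brings security risks such as penetration of the host, so unless it is truly necessary, do not run containers as root.

3.3.3.5、No ssh

3.3.3.6、Never build images with docker commit

A dockerfile keeps the image build process visible and traceable. An image built with docker commit cannot be diffed, so its build process becomes a black box that cannot be traced, which makes maintenance harder.

3.3.3.7、Clean up useless build output in the same step

docker images use unionFS. When a dockerfile is executed, each RUN, COPY, or similar instruction forms a new layer. If useless output is not cleaned up within the same RUN instruction, the image grows abnormally large.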

FROM centos:7

RUN yum -y install nginx && \

yum -y clean all && \

rm -rf /var/cache/yum/*
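
Why the cleanup has to happen inside the same RUN can be sketched with a toy layer model: a later layer can hide a file, but the bytes stay in the earlier layer. The file names and sizes below are hypothetical numbers chosen for illustration, not measurements.

```python
from typing import Dict, List, Optional

# One layer: path -> size in MB added by that layer, or None for a deletion marker.
Layer = Dict[str, Optional[int]]


def stored_size(layers: List[Layer]) -> int:
    """Size kept on disk: every layer keeps the bytes it added."""
    return sum(size for layer in layers for size in layer.values() if size is not None)


def visible_size(layers: List[Layer]) -> int:
    """Size of what the container actually sees after merging the layers."""
    merged: Dict[str, int] = {}
    for layer in layers:
        for path, size in layer.items():
            if size is None:
                merged.pop(path, None)   # a later layer hides the file
            else:
                merged[path] = size
    return sum(merged.values())


# Install in one RUN, clean in a second RUN: the cache stays in the first layer.
SPLIT_RUNS: List[Layer] = [
    {"/usr/sbin/nginx": 50, "/var/cache/yum": 200},
    {"/var/cache/yum": None},
]
# Install and clean in a single RUN: the cache never reaches any layer.
SINGLE_RUN: List[Layer] = [{"/usr/sbin/nginx": 50}]

if __name__ == "__main__":
    print(stored_size(SPLIT_RUNS), visible_size(SPLIT_RUNS))
    print(stored_size(SINGLE_RUN), visible_size(SINGLE_RUN))
```

Both variants look identical from inside the container, but only the single-RUN variant avoids storing the cache.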

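3.3.3.8、Make good use of the --no-cache option

After the first build, docker keeps a cache on the build host, so later builds are fast. But if you want an earlier layer to be rebuilt (for example, yum installs the latest version by default and that version has changed), you must discard the cache with --no-cache, at the cost of a slower build.

3.3.4、A brief look at UnionFS

docker images are implemented on top of Linux's unionFS (union File System).

unionFS was developed in 2004 at Stony Brook University (State University of New York). It mounts multiple branches together under a single directory while keeping the branches physically separate. An important concept in unionFS is copy-on-write. The idea is that an identical resource can be shared: a reusable resource is published as-is, and when someone needs to modify it, a writable layer is mounted on top of it and the changes go there. Sharing resources this way significantly reduces the cost of copying unmodified data, at the price of a small overhead when a resource is modified.

When docker builds an image, it is building a stack of shareable resources. When a container is started from that image, the mounted branches include a read-only layer, an init layer, and a read-write layer.

- The read-only layer is the image.

- The init layer is added by docker to hold information that should not be shared, such as /etc/hosts and /etc/resolv.conf.

- The read-write layer starts empty and is available for reads and writes. When it modifies content from the read-only layer, it only masks that content; the underlying layer is not actually changed. To keep the contents of the read-write layer, save them with docker commit if needed.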
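
The layer behaviour in the list above can be sketched as a small copy-on-write model. This is a simplified illustration, not how overlay2 is implemented; the `OverlayView` class and the file contents are hypothetical.

```python
from typing import Dict, List, Optional, Set


class OverlayView:
    """Toy union mount: shared read-only layers plus one private writable layer."""

    def __init__(self, lower_layers: List[Dict[str, str]]) -> None:
        self.lower = lower_layers          # read-only image layers, bottom first
        self.upper: Dict[str, str] = {}    # this container's read-write layer
        self.whiteouts: Set[str] = set()   # paths hidden by a delete

    def read(self, path: str) -> Optional[str]:
        if path in self.whiteouts:
            return None
        if path in self.upper:
            return self.upper[path]
        for layer in reversed(self.lower):  # the topmost image layer wins
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: str) -> None:
        # The change lands in the writable layer; image layers stay untouched.
        self.whiteouts.discard(path)
        self.upper[path] = data

    def delete(self, path: str) -> None:
        # Deleting only masks the file; the image layer still contains it.
        self.upper.pop(path, None)
        self.whiteouts.add(path)


if __name__ == "__main__":
    image = [{"/etc/os-release": "centos7"}, {"/conf/server.xml": "port=8080"}]
    a, b = OverlayView(image), OverlayView(image)
    a.write("/conf/server.xml", "port=9090")
    print(a.read("/conf/server.xml"), b.read("/conf/server.xml"))
```

Two containers started from the same image share the lower layers, so a write in one is invisible to the other and to the image itself.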

3.4、Some handy advanced commands

3.4.1、docker system df

In the previous part we got to know images and the UnionFS file system. This raises a question: docker images does not show how much storage the images really use.

[root@harbor ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx test b2ead77fc9af 16 seconds ago 141MB

nginx latest 4bb46517cac3 3 weeks ago 133MB

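The nginx:test image was created from the nginx:latest image. Adding up the sizes shown by docker images gives 141 + 133 = 274 MB, but is that really the case? Let's check the space used by the overlay2 directory, which can be treated as roughly the space the images actually occupy.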

[root@harbor docker]# du -sh overlay2/

149M overlay2/

Only 149M. docker provides the docker system df command to show docker's disk usage.

[root@harbor docker]# docker system df

TYPE TOTAL ACTIVE SIZE RECLAIMABLE

Images 2 1 141MB 141MB (100%)

Containers 1 0 1.114kB 1.114kB (100%)

Local Volumes 0 0 0B 0B

Build Cache 0 0 0B 0B

This clearly shows that the space used is 141 MB, which also gives us a yardstick for cleanup.
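
The gap between 274 MB and 141 MB comes from counting shared layers twice. A minimal sketch, assuming nginx:test consists of the nginx:latest layers plus one extra layer of 141 - 133 = 8 MB; the layer names `base` and `test-change` are hypothetical.

```python
from typing import Dict, List


def naive_total(images: Dict[str, List[str]], layer_size: Dict[str, int]) -> int:
    """Add up the per-image sizes, as one would from the `docker images` SIZE column."""
    return sum(layer_size[layer] for layers in images.values() for layer in layers)


def shared_total(images: Dict[str, List[str]], layer_size: Dict[str, int]) -> int:
    """Count every distinct layer once, which is closer to the real disk usage."""
    unique = {layer for layers in images.values() for layer in layers}
    return sum(layer_size[layer] for layer in unique)


# Sizes in MB; the split into two layers is an assumption for illustration.
LAYER_SIZE = {"base": 133, "test-change": 8}
IMAGES = {
    "nginx:latest": ["base"],
    "nginx:test": ["base", "test-change"],
}

if __name__ == "__main__":
    print(naive_total(IMAGES, LAYER_SIZE))   # per-image sizes added together
    print(shared_total(IMAGES, LAYER_SIZE))  # distinct layers only
```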

3.4.2、docker system events

docker system can also stream docker's real-time events. Open two terminals, run commands in one, and watch the events in the other.

[root@harbor docker]# docker run -d nginx

7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9

[root@harbor docker]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7042ffd3c291 nginx "/docker-entrypoint.…" 37 seconds ago Up 36 seconds 80/tcp serene_villani

14476a7ee535 nginx "/docker-entrypoint.…" 49 minutes ago Exited (0) 31 minutes ago relaxed_bohr

[root@harbor docker]# docker rm 14476a7ee535

14476a7ee535

[root@harbor docker]# docker stop 7042ffd3c291

7042ffd3c291

[root@harbor docker]# docker rm 7042ffd3c291

7042ffd3c291

[root@harbor ~]# docker system events

2020-09-09T22:03:33.971697001+08:00 container create 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani)

2020-09-09T22:03:34.000975395+08:00 network connect 1ac84996ba0384fba699dda0592047fcb66c1c2ca595fce3c21bc770e6bb50ef (container=7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9, name=bridge, type=bridge)

2020-09-09T22:03:34.201250017+08:00 container start 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani)

2020-09-09T22:04:28.694443162+08:00 container destroy 14476a7ee53502db003352d92acad1ba6646d2d8bbadd0651d8bc32e4421f687 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=relaxed_bohr)

2020-09-09T22:06:03.281628948+08:00 container kill 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani, signal=15)

2020-09-09T22:06:03.333752851+08:00 container die 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (exitCode=0, image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani)

2020-09-09T22:06:03.364630265+08:00 network disconnect 1ac84996ba0384fba699dda0592047fcb66c1c2ca595fce3c21bc770e6bb50ef (container=7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9, name=bridge, type=bridge)

2020-09-09T22:06:03.404858530+08:00 container stop 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani)

2020-09-09T22:06:09.933835238+08:00 container destroy 7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 (image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, name=serene_villani)

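Reading the events in order: the first creates container 7042ffd3c291150a, the second connects the network to container 7042ffd3c291150a, the third starts container 7042ffd3c291150a, the fourth destroys container 14476a7ee53502db00, the fifth sends a kill signal (signal=15) to container 7042ffd3c291150a, the sixth reports that the container died (docker uses the word die), the seventh disconnects the network of 7042ffd3c291150a, the eighth marks container 7042ffd3c291150a as stopped, and the ninth destroys container 7042ffd3c291150a. This command captures every event, which helps a great deal both in learning how containers work and in troubleshooting.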
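
For troubleshooting it can help to turn event lines like the ones above into structured records. The following is a minimal sketch that assumes the default text layout shown in the transcript (timestamp, type, action, ID, then attributes in parentheses); it will not match every event docker can emit. The names `Event` and `parse_event` are hypothetical. For real tooling, the JSON output of docker events (`--format '{{json .}}'`) is likely a more robust input.

```python
import re
from typing import Dict, NamedTuple


class Event(NamedTuple):
    timestamp: str
    kind: str        # e.g. "container" or "network"
    action: str      # e.g. "create", "kill", "die"
    object_id: str
    attrs: Dict[str, str]


# timestamp, type, action, hex ID, then "(key=value, key=value, ...)"
_EVENT_RE = re.compile(r"^(\S+) (\w+) (\w+) ([0-9a-f]+) \((.*)\)$")

# One line copied from the transcript above.
SAMPLE = (
    "2020-09-09T22:06:03.281628948+08:00 container kill "
    "7042ffd3c291150a0180e3879d9632ee82145d50662d581891ae21e2fcc67bf9 "
    "(image=nginx, maintainer=NGINX Docker Maintainers <docker-maint@nginx.com>, "
    "name=serene_villani, signal=15)"
)


def parse_event(line: str) -> Event:
    match = _EVENT_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized event line: {line!r}")
    timestamp, kind, action, object_id, raw_attrs = match.groups()
    # Assumes attribute values contain no ", " separator themselves.
    attrs = dict(item.split("=", 1) for item in raw_attrs.split(", "))
    return Event(timestamp, kind, action, object_id, attrs)


if __name__ == "__main__":
    event = parse_event(SAMPLE)
    print(event.kind, event.action, event.attrs["name"], event.attrs["signal"])
```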

3.4.3、docker system prune

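In the previous part we listed dangling images with docker images -f "dangling=true"; unused images can be cleaned up with docker image prune -a. docker also provides the docker system prune command. Let's see what it does.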

[root@harbor docker]# docker system prune

WARNING! This will remove:

- all stopped containers

- all networks not used by at least one container

- all dangling images

- all dangling build cache

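The cleanup is very thorough: stopped containers, unused networks, dangling images, and the dangling build cache. That also makes the command dangerous, especially for containers. Use it with care so that you don't delete containers that were only stopped by mistake.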

3.4.4 、docker system info

This is the same as docker info and shows docker's configuration.

3.5、docker security

3.5.1、Do not use privileged

[root@harbor docker]# docker run --help | grep privileged

--privileged Give extended privileges to this container

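On Linux, users can be roughly divided into privileged and unprivileged users, that is, root and non-root. For the privileged user, Linux splits the privileges into finer-grained units, each of which is a capability; see Linux capabilities.

By default docker grants 15 capabilities; see the runC documentation.

So when running a docker container, capabilities should be granted on the principle of least privilege.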

docker run --name xxx --cap-add NET_ADMIN -itd xxx

3.5.2、Do you really need root?

Running a container as root does not grant all capabilities, but 15 are still granted by default.

| Capability | Enabled |

|---|---|

| CAP_NET_RAW | 1 |

| CAP_NET_BIND_SERVICE | 1 |

| CAP_AUDIT_READ | 1 |

| CAP_AUDIT_WRITE | 1 |

| CAP_DAC_OVERRIDE | 1 |

| CAP_SETFCAP | 1 |

| CAP_SETPCAP | 1 |

| CAP_SETGID | 1 |

| CAP_SETUID | 1 |

| CAP_MKNOD | 1 |

| CAP_CHOWN | 1 |

| CAP_FOWNER | 1 |

| CAP_FSETID | 1 |

| CAP_KILL | 1 |

| CAP_SYS_CHROOT | 1 |

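So if a directory mapping is used and /etc/ is mapped into the container, files on the host can still be modified. Quite a few of docker's published CVEs are issues of this kind. So unless it is necessary, do not run containers as root.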
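
To check which capabilities a process really holds, the hexadecimal CapEff value in /proc/<pid>/status can be compared against a capability list. The sketch below encodes the 15 capabilities from the table above into such a bitmask and decodes a mask back into names. The bit numbers follow the Linux capability numbering; the helper names `encode` and `decode` are hypothetical, and `decode` only knows the capabilities listed here.

```python
from typing import Iterable, List

# Bit positions of the capabilities from the table, per the Linux capability numbering.
CAP_BITS = {
    "CAP_CHOWN": 0,
    "CAP_DAC_OVERRIDE": 1,
    "CAP_FOWNER": 3,
    "CAP_FSETID": 4,
    "CAP_KILL": 5,
    "CAP_SETGID": 6,
    "CAP_SETUID": 7,
    "CAP_SETPCAP": 8,
    "CAP_NET_BIND_SERVICE": 10,
    "CAP_NET_RAW": 13,
    "CAP_SYS_CHROOT": 18,
    "CAP_MKNOD": 27,
    "CAP_AUDIT_WRITE": 29,
    "CAP_SETFCAP": 31,
    "CAP_AUDIT_READ": 37,
}


def encode(names: Iterable[str]) -> int:
    """Build the bitmask that has exactly the given capabilities set."""
    mask = 0
    for name in names:
        mask |= 1 << CAP_BITS[name]
    return mask


def decode(mask: int) -> List[str]:
    """Return the known capability names set in the mask (unknown bits are ignored)."""
    return sorted(name for name, bit in CAP_BITS.items() if mask & (1 << bit))


if __name__ == "__main__":
    table_mask = encode(CAP_BITS)
    print(f"{table_mask:016x}")
    # A mask read from the CapEff line can be decoded the same way:
    print(decode(int("00000000a80425fb", 16)))
```

Any bit set in a container's CapEff that is missing from this list, for example CAP_NET_ADMIN or CAP_SYS_ADMIN, points to a capability that was added beyond the table.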

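All in all, docker is an excellent container runtime service. Similar services include podman, containerd, rkt (discontinued), and cri-o (rarely used). However, because of its own missteps, docker has gradually been leaving the game, so I won't go any deeper here. If you are interested, study the official site. When I have time, I may introduce containerd.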