Normal node deletion

1. Check the node status; if a node is Pinned, it must be unpinned

    su - grid
    [grid@rac1 ~]$ olsnodes -n -s -t
    rac1    1    Active    Unpinned
    rac2    2    Active    Unpinned
    rac3    3    Active    Unpinned
    # only needed if rac3 shows Pinned; run as root
    crsctl unpin css -n rac3
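To find pinned nodes without reading the table by eye, you can filter the olsnodes output. This is a minimal sketch that assumes the four-column layout shown above: node name, node number, status, and Pinned/Unpinned. The function name `unpin_cmds_for_pinned` is hypothetical. The function only prints the `crsctl unpin css` commands, so you can review them before running them as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `olsnodes -n -s -t` output on stdin and prints
# one `crsctl unpin css -n <node>` command for every node whose 4th column is "Pinned".
# Nothing is executed; the printed commands are meant to be reviewed and run as root.
unpin_cmds_for_pinned() {
  awk '$4 == "Pinned" { print "crsctl unpin css -n " $1 }'
}

# Usage (as grid):
#   olsnodes -n -s -t | unpin_cmds_for_pinned
```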

2. Check the database status

    srvctl config database -d racdb
    srvctl status database -db racdb
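To confirm which instance runs on the node being removed (racdb3 on rac3 here), you can filter the srvctl status output by node. This sketch assumes the usual line format `Instance racdb3 is running on node rac3`, with the instance name as the second word and the node name as the last word. `instance_on_node` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `srvctl status database` output on stdin and prints
# the instance name(s) whose line ends with the given node name.
# Assumes lines like "Instance racdb3 is running on node rac3"; the
# "is not running on node" variant also ends with the node name, so it matches too.
instance_on_node() {
  local node="$1"
  awk -v n="$node" '$1 == "Instance" && $NF == n { print $2 }'
}

# Usage (as oracle):
#   srvctl status database -db racdb | instance_on_node rac3
```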

3. Back up the OCR

    su - root
    cd /opt/app/12.2.0.1/grid/bin/
    ./ocrconfig -showbackup
    ./ocrconfig -manualbackup

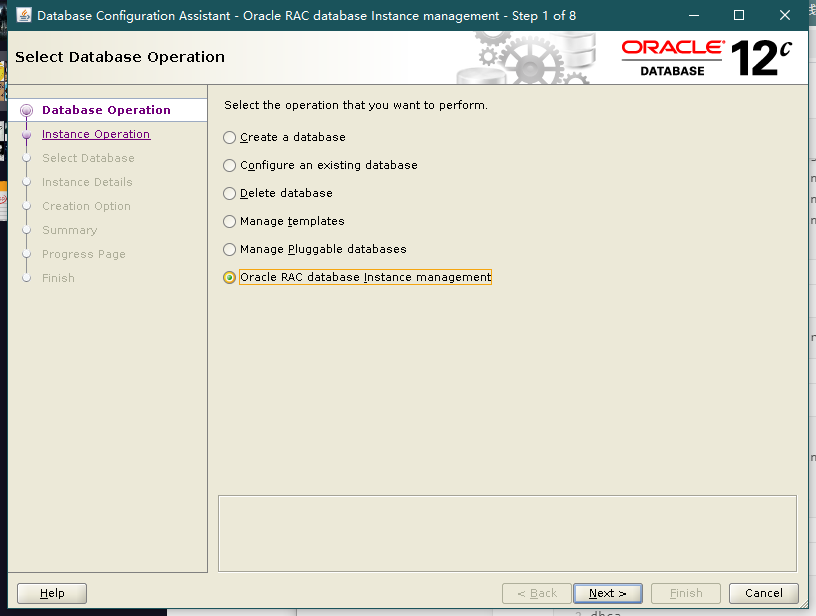

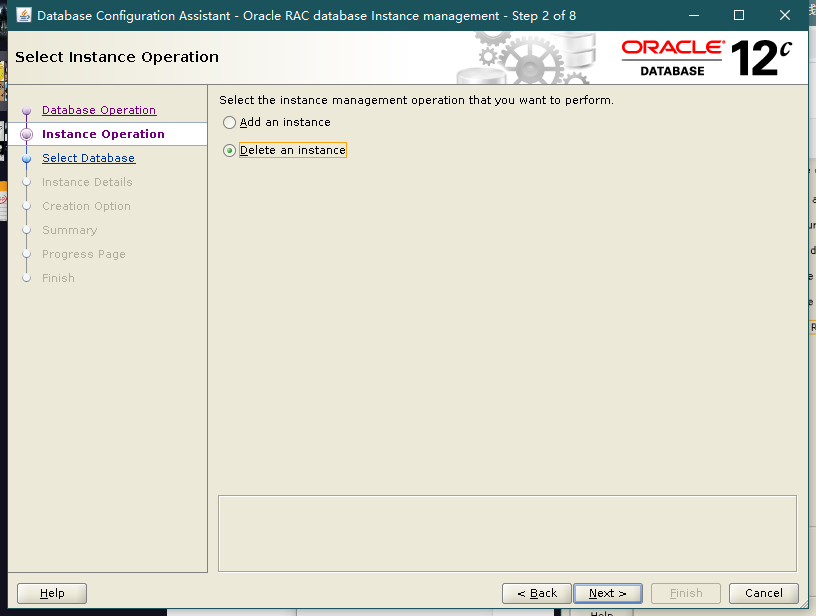

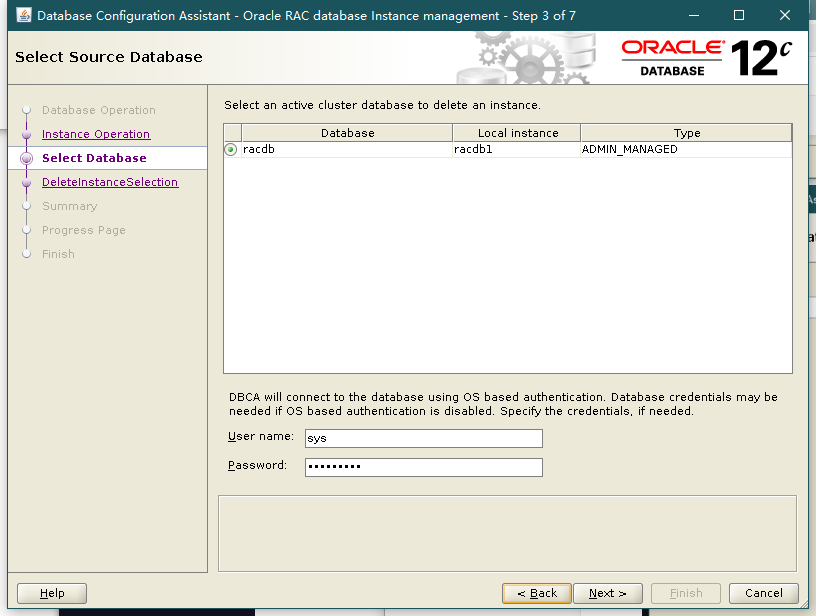

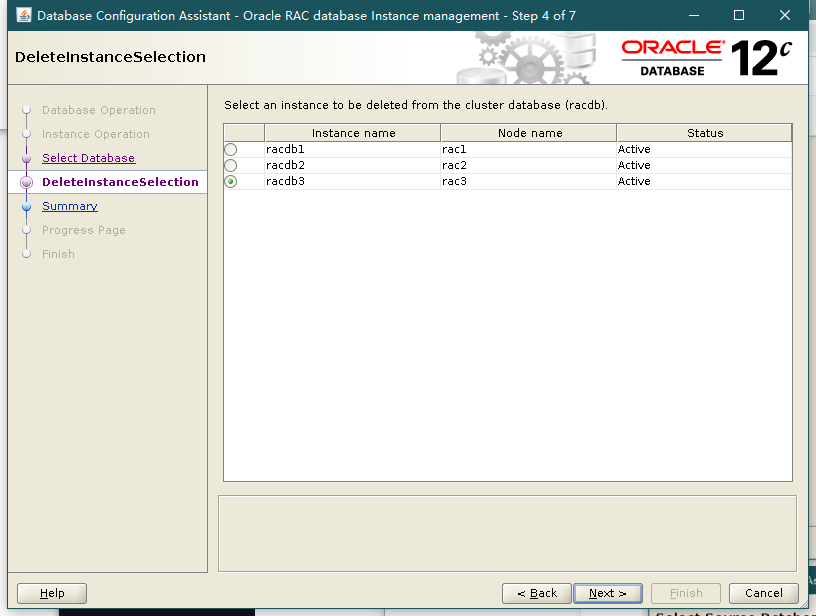

4. Delete the instance (from any node)

Use either the command line or the DBCA GUI.

    # command line
    su - root
    ./srvctl remove instance -db racdb -instance racdb3

    # or the DBCA GUI
    su - oracle
    dbca

5. Check that the instance has been deleted

    crsctl stat res -t
    lsnrctl status listener_scan1
    select * from gv$instance;

6. Stop and disable the listener

    srvctl stop listener -l listener -n rac3
    srvctl disable listener -l listener -n rac3
    srvctl status listener -l listener -n rac3

7. On the node being deleted, update the NodeList (local update)

    su - oracle
    cd /opt/app/oracle/product/12.2.0.1/dbhome_1/oui/bin
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/oracle/product/12.2.0.1/dbhome_1 "cluster_nodes=rac3" -local

8. Remove the Oracle database software

    cd /opt/app/oracle/product/12.2.0.1/dbhome_1/deinstall
    ./deinstall -local

9. On every other running node, update the NodeList

    su - oracle
    cd /opt/app/oracle/product/12.2.0.1/dbhome_1/oui/bin
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/oracle/product/12.2.0.1/dbhome_1 "cluster_nodes=rac1,rac2" -local
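The `cluster_nodes` value on the remaining nodes is every cluster node except the one being removed. rac3 has not yet been deleted from the cluster at this point, so olsnodes still lists it and it must be filtered out. This is a minimal sketch. `remaining_nodes` is a hypothetical helper that reads olsnodes output on stdin and takes the node name from the first column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads olsnodes output (node name in column 1) on stdin,
# drops the node given as $1, and prints the rest as a comma-separated list
# suitable for "cluster_nodes=...". Blank lines are ignored.
remaining_nodes() {
  local drop="$1"
  awk -v d="$drop" 'NF > 0 && $1 != d { printf "%s%s", sep, $1; sep = "," } END { print "" }'
}

# Usage (as oracle, on each remaining node):
#   nodes=$(/opt/app/12.2.0.1/grid/bin/olsnodes | remaining_nodes rac3)
#   ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/oracle/product/12.2.0.1/dbhome_1 "cluster_nodes=$nodes" -local
```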

10. Update the inventory (on the node being deleted)

    su - grid
    cd /opt/app/12.2.0.1/grid/oui/bin/
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/12.2.0.1/grid "cluster_nodes=rac3" CRS=TRUE -silent -local

11. Deconfigure the cluster resources (on the node being deleted)

    su - root
    cd /opt/app/12.2.0.1/grid/crs/install
    ./rootcrs.pl -deconfig -force

On Red Hat / CentOS 7 this fails with:

    Can't locate Env.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 . . ./../../perl/lib) at crsinstall.pm line 286.

Copy Env.pm from the Grid home's bundled Perl into the system Perl path, then run the command again:

    cp -p /opt/app/12.2.0.1/grid/perl/lib/5.22.0/Env.pm /usr/lib64/perl5/vendor_perl
    ./rootcrs.pl -deconfig -force
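You can avoid hardcoding the 5.22.0 directory in the Env.pm workaround. Instead, locate the file under the Grid home's bundled Perl, and copy it only when the system perl cannot already load the Env module. This is a sketch under those assumptions. `find_grid_env_pm` and `fix_env_pm` are hypothetical helper names. The destination defaults to the /usr/lib64/perl5/vendor_perl directory used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the first Env.pm found under <grid_home>/perl/lib/<version>/.
# Returns non-zero if none exists.
find_grid_env_pm() {
  local grid_home="$1" f
  for f in "$grid_home"/perl/lib/*/Env.pm; do
    if [ -f "$f" ]; then
      printf '%s\n' "$f"
      return 0
    fi
  done
  return 1
}

# Hypothetical helper (run as root): copies Env.pm only if `perl -MEnv -e 1` fails,
# i.e. the system perl cannot load the Env module.
fix_env_pm() {
  local grid_home="$1" dest="${2:-/usr/lib64/perl5/vendor_perl}" src
  if perl -MEnv -e 1 2>/dev/null; then
    echo "Env.pm is already available to the system perl"
    return 0
  fi
  src=$(find_grid_env_pm "$grid_home") || {
    echo "Env.pm not found under $grid_home/perl/lib" >&2
    return 1
  }
  cp -p "$src" "$dest"
}

# Usage (as root):
#   fix_env_pm /opt/app/12.2.0.1/grid && ./rootcrs.pl -deconfig -force
```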

12. Check again that the resources have been stopped

Run on a node that is still running:

    su - root
    crsctl stat res -t

13. Update the Grid NodeList on all running nodes

    su - grid
    cd /opt/app/12.2.0.1/grid/oui/bin
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/12.2.0.1/grid "cluster_nodes=rac1,rac2" CRS=TRUE -silent -local

14. Remove the Grid software

Note: without -local, deinstall removes the Grid software from every node in the cluster.

    su - grid
    cd /opt/app/12.2.0.1/grid/deinstall/
    ./deinstall -local

Remove the leftover files and directories (as root):

    rm -rf /etc/oraInst.loc
    rm -rf /opt/ORCLfmap
    rm -rf /etc/oratab
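You can make the leftover cleanup idempotent by deleting only the paths that exist and reporting each removal. This is a minimal sketch. The optional root-prefix argument of the hypothetical `remove_leftovers` function exists only so you can try the logic in a scratch directory; with no argument it acts on `/`. Run it as root, and only on the node that was removed, because the remaining nodes still need their own oratab and oraInst.loc.

```shell
#!/usr/bin/env bash
# Hypothetical helper: removes the leftover Oracle files listed above.
# $1 is an optional root prefix (default "/"), used only for dry runs in a scratch dir.
# Only paths that actually exist are removed, so it is safe to run more than once.
remove_leftovers() {
  local root="${1:-/}" p
  root="${root%/}"   # "/" becomes "" so paths come out as /etc/..., not //etc/...
  for p in etc/oraInst.loc opt/ORCLfmap etc/oratab; do
    if [ -e "$root/$p" ]; then
      rm -rf -- "$root/$p"
      echo "removed $root/$p"
    fi
  done
}

# Usage (as root, on the removed node only):
#   remove_leftovers
```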

15. Delete rac3 from a running node

    su - root
    cd /opt/app/12.2.0.1/grid/bin
    ./crsctl delete node -n rac3
    su - grid
    [grid@rac1 ~]$ olsnodes -s -t
    rac1    Active    Unpinned
    rac2    Active    Unpinned
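You can script the final olsnodes check so that it succeeds only when the deleted node is gone. This sketch assumes the node name is in the first column, as in the output above. `node_removed` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads olsnodes output on stdin and returns 0 (success)
# when no line has the given node name in column 1, non-zero otherwise.
node_removed() {
  local node="$1"
  # awk exits 0 if the node is found; "!" inverts that, so "found" means failure.
  ! awk -v n="$node" '$1 == n { found = 1 } END { exit !found }'
}

# Usage (as grid, on a remaining node):
#   olsnodes -s -t | node_removed rac3 && echo "rac3 is no longer in the cluster"
```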

Deleting a failed node

1. Delete the instance

Delete it with DBCA or with the command below.

If the failed node is down and the DBCA GUI cannot see it, run the following command as the oracle user on a healthy node:

    dbca -silent -deleteInstance -nodeList rac3 -gdbName racdb -instanceName racdb3 -sysDBAUserName sys -sysDBAPassword "Oracle123"

2. Check that the instance has been deleted

    crsctl stat res -t
    lsnrctl status listener_scan1
    select * from gv$instance;
    srvctl config database -d racdb

3. Update the Oracle home NodeList on all healthy nodes

    su - oracle
    cd /opt/app/oracle/product/12.2.0.1/dbhome_1/oui/bin
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/oracle/product/12.2.0.1/dbhome_1 "cluster_nodes=rac1,rac2" -local

4. Update the Grid home NodeList on all healthy nodes

    su - grid
    cd /opt/app/12.2.0.1/grid/oui/bin/
    ./runInstaller -updateNodeList ORACLE_HOME=/opt/app/12.2.0.1/grid "cluster_nodes=rac1,rac2" CRS=TRUE -silent -local

5. Stop the VIP and delete the node

    su - grid
    crsctl stat res -t
    su - root
    cd /opt/app/12.2.0.1/grid/bin/
    ./srvctl stop vip -i rac3        # stop the VIP
    ./srvctl stop vip -i rac3 -f     # force the stop if the command above fails
    ./crsctl delete node -n rac3     # delete the node
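The two VIP stop commands are alternatives: `-f` is needed only if the normal stop fails. The sketch below shows that fallback. `stop_vip_with_fallback` and the `SRVCTL` override variable are hypothetical. The override exists only so you can exercise the logic with a stub instead of a real cluster; by default it points at the grid bin directory used above.

```shell
#!/usr/bin/env bash
# Hypothetical override: path to srvctl, defaulting to the Grid home used in this article.
SRVCTL="${SRVCTL:-/opt/app/12.2.0.1/grid/bin/srvctl}"

# Hypothetical helper (run as root): stop the VIP normally, and only if that fails,
# retry with -f (forced stop). Returns the status of the last srvctl call.
stop_vip_with_fallback() {
  local node="$1"
  if "$SRVCTL" stop vip -i "$node"; then
    return 0
  fi
  echo "normal stop of the VIP on $node failed, retrying with -f" >&2
  "$SRVCTL" stop vip -i "$node" -f
}

# Usage (as root):
#   stop_vip_with_fallback rac3 && ./crsctl delete node -n rac3
```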

6. From the remaining nodes, verify that the node has been removed

    su - grid
    cluvfy stage -post nodedel -n rac3 -verbose

Troubleshooting

Node deletion fails

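If crsctl delete node fails with the following error:

    ./crsctl delete node -n host2node2
    CRS-4662: Error while trying to delete node host2node2.
    CRS-4000: Command Delete failed, or completed with errors.

shut down all nodes, start the primary node, delete the failed node, and then start the other nodes.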