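04-5-Continuous Deployment

1. Concepts

1.1. A closed loop built on the Kubernetes ecosystem

The goal of the Kubernetes cluster is to build a PaaS platform:

- Code commit: developers push code to a Git repository

- Continuous integration: a pipeline clones the committed code, compiles it, builds an image, and pushes it to the docker image registry

- Continuous deployment: a pipeline configures the Pod controllers, Services, and Ingresses in Kubernetes and deploys the docker image to the test environment

- Production release: a pipeline configures the Pod controllers, Services, and Ingresses in Kubernetes and deploys the docker image that passed testing to the production environment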

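Components involved:

- Continuous integration is implemented with Jenkins

- Continuous deployment is implemented with Spinnaker

- The service configuration center is implemented with Apollo

- Monitoring is implemented with Prometheus + Grafana

- Log collection is implemented with ELK

- Data persistence is provided by externally mounted storage; a StorageClass combined with PVs and PVCs can even allocate and mount volumes automatically

- Databases are stateful services and are generally not placed inside the Kubernetes cluster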

1.2. Spinnaker

Spinnaker (https://www.spinnaker.io) is an open-source, multi-cloud continuous delivery platform. It provides two main features:

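1.2.1. Application management

Spinnaker's application management feature lets you view and manage your cloud resources. Commonly used providers include Azure, AWS, and Kubernetes. At the time of writing, domestic Chinese clouds such as Alibaba Cloud and Tencent Cloud were not supported.

Applications, clusters, and server groups are the key concepts Spinnaker uses to describe your services. Load balancers and firewalls describe how your services are exposed to users.

1.2.2. Application deployment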

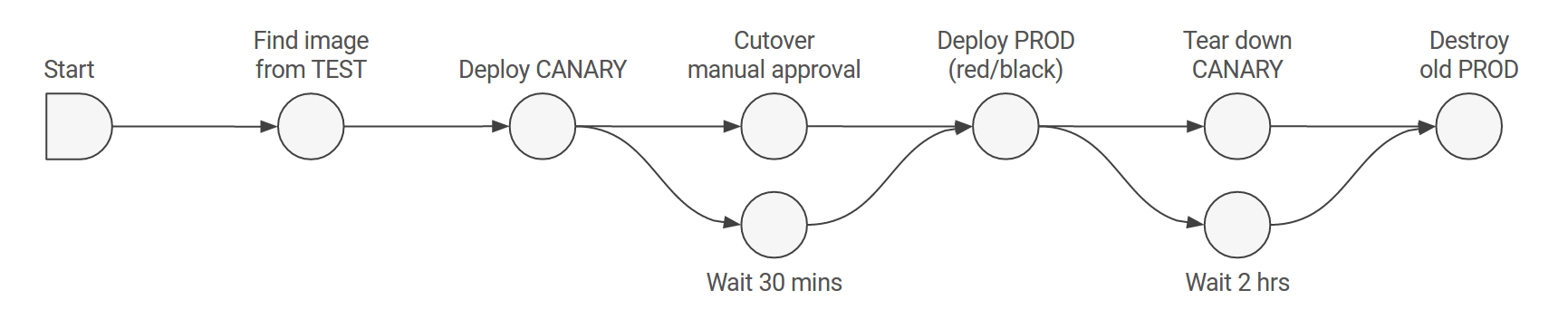

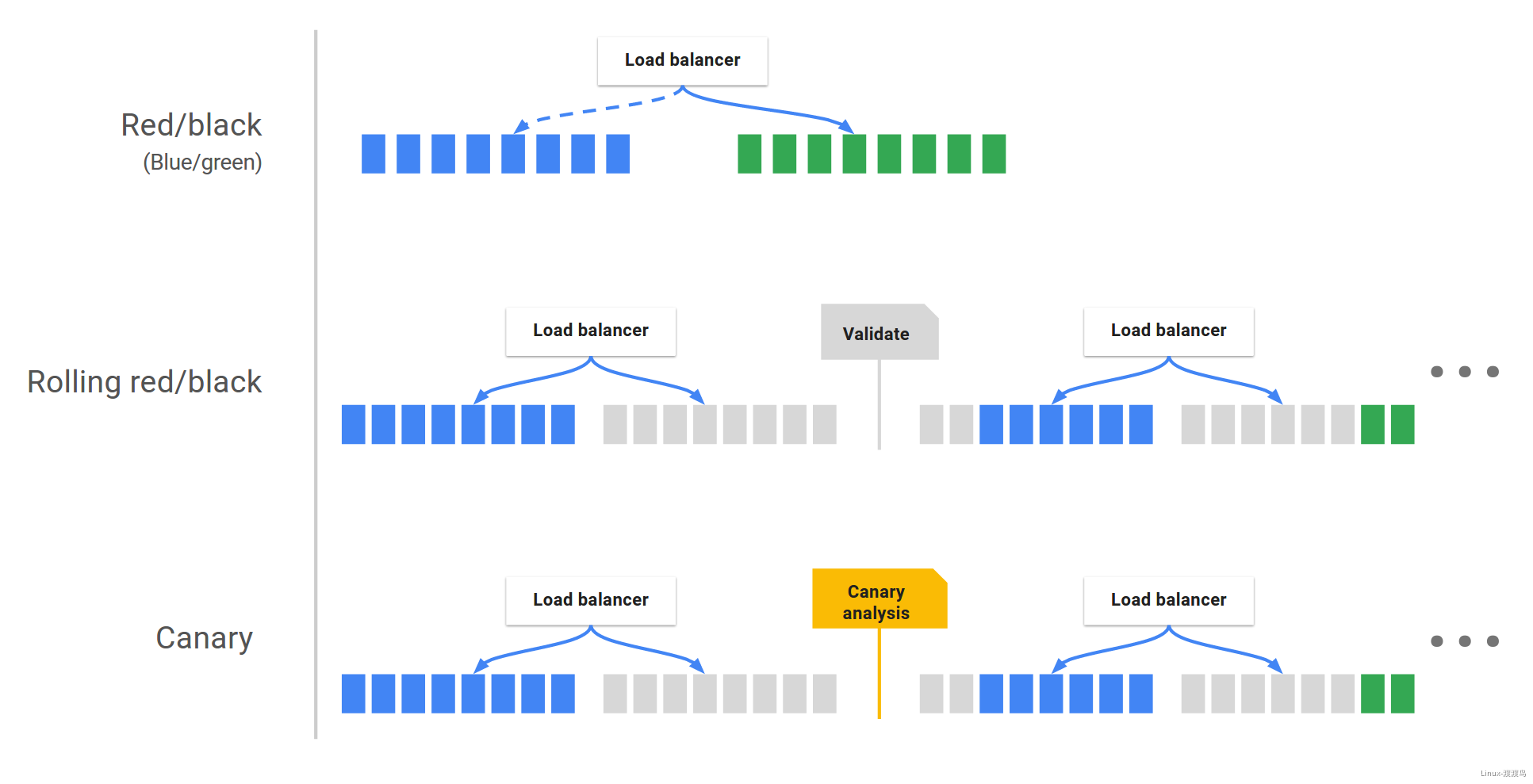

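Application deployment has two core features: pipelines and deployment strategies. A pipeline chains the CI and CD stages together. Each project gets its own pipeline, which passes in the parameters that vary (service name, version number, image tag, and so on), calls the continuous integration pipeline in Jenkins to run the build, and then publishes the built image to the target environment through predefined resources (for example a Kubernetes Deployment, Service, and Ingress). A deployment strategy defines how production is upgraded once a release has passed testing in the test environment; common choices are blue/green, canary, and rolling releases.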

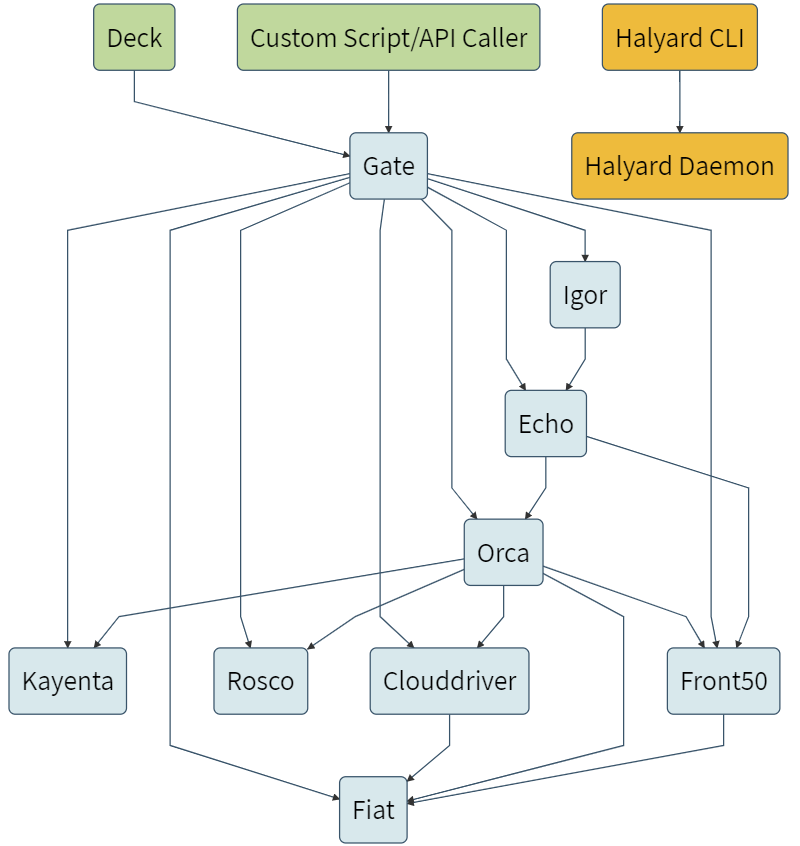

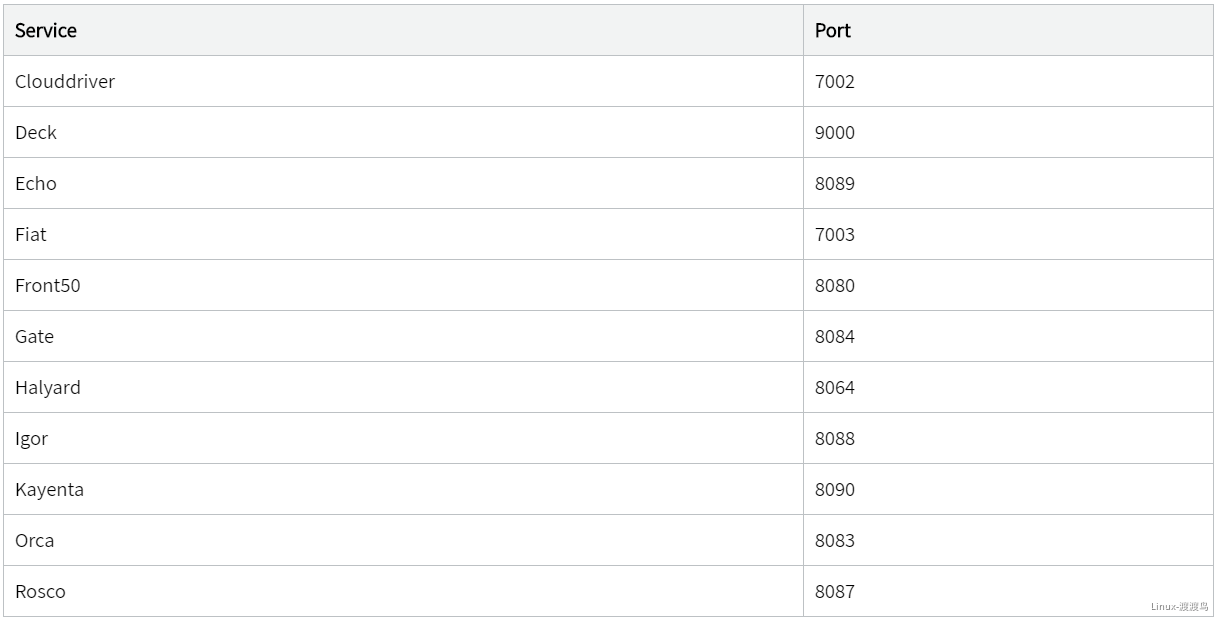

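1.3. Common Spinnaker components

1.3.1. Components

- Deck: the browser-based UI

- Gate: the API gateway; the UI and all API callers communicate with Spinnaker through Gate

- Orca: the orchestration engine

- Clouddriver: the driver that manipulates resources in the cloud environments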

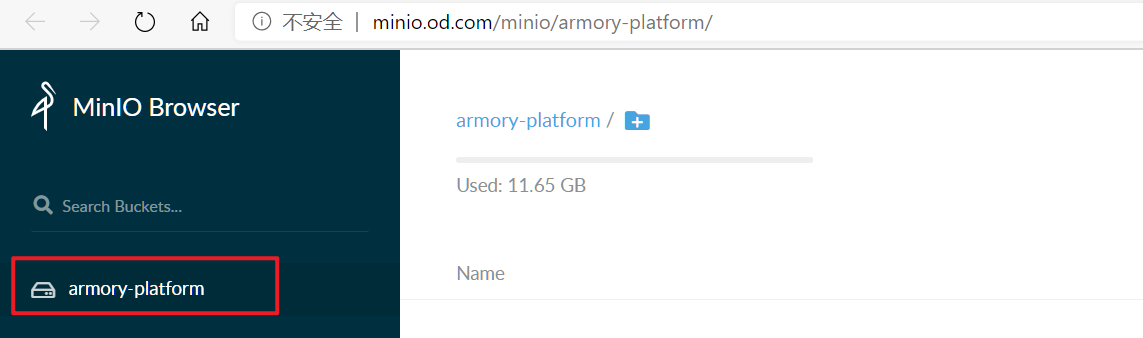

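- Front50: persists the metadata of applications, pipelines, projects, and notifications in a bucket. This lab uses Minio (S3-compatible) as the storage backend

- Rosco: bakes VM images or image templates for cloud providers; not involved when deploying to a Kubernetes cluster

- Igor: integrates with CI systems such as Jenkins so that pipelines can trigger and follow builds

- Echo: sends notifications (for example Slack, email, SMS) and handles incoming webhooks from services such as GitHub

- Fiat: Spinnaker's authorization (access control) service; not used in this lab, but worth considering later

- Kayenta: provides automated canary analysis; not used in this lab

- Halyard: the tool for deploying, upgrading, and configuring a Spinnaker installation; not used in this lab

1.3.2. Architecture diagram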

2. Deploying Spinnaker

2.1. Deploying Minio

2.1.1. Prepare the image

[root@hdss7-200 ~]# docker pull minio/minio:latest

[root@hdss7-200 ~]# docker image tag minio/minio:latest harbor.od.com/public/minio:latest

[root@hdss7-200 ~]# docker image push harbor.od.com/public/minio:latest
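The same pull, tag, and push sequence is repeated for every image in this walkthrough. As a minimal sketch, the three commands can be generated by one helper. The function name `mirror_cmds` and its argument layout are hypothetical and not part of the original procedure; only the registry path `harbor.od.com/public` comes from the text.

```shell
#!/usr/bin/env bash
# Print the docker commands needed to mirror an upstream image into Harbor.
# Usage: mirror_cmds <source-image> <target-name:tag> [registry/project]
# (hypothetical helper; it only prints commands and does not run docker itself)
mirror_cmds() {
  local src="$1" target="$2" registry="${3:-harbor.od.com/public}"
  local dst="${registry}/${target}"
  printf 'docker pull %s\n' "$src"
  printf 'docker image tag %s %s\n' "$src" "$dst"
  printf 'docker image push %s\n' "$dst"
}
```

Reviewing the output first and then piping it to a shell, for example `mirror_cmds minio/minio:latest minio:latest | bash`, keeps the step auditable.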

2.1.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

name: minio

name: minio

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

name: minio

template:

metadata:

labels:

app: minio

name: minio

spec:

containers:

- name: minio

image: harbor.od.com/public/minio:latest

ports:

- containerPort: 9000

protocol: TCP

args:

- server

- /data

env:

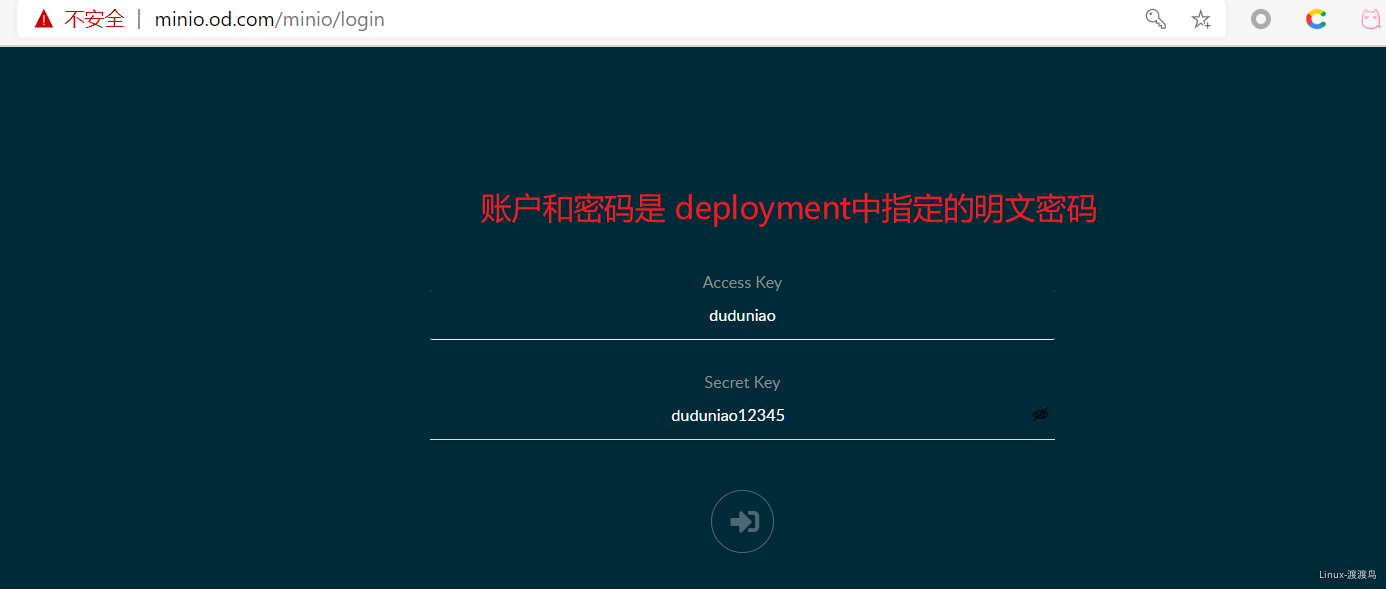

- name: MINIO_ACCESS_KEY

value: duduniao

- name: MINIO_SECRET_KEY

value: duduniao12345

readinessProbe:

failureThreshold: 3

httpGet:

path: /minio/health/ready

port: 9000

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

volumeMounts:

- mountPath: /data

name: data

volumes:

- nfs:

server: hdss7-200

path: /data/nfs-volume/minio

name: data

apiVersion: v1

kind: Service

metadata:

name: minio

namespace: armory

spec:

ports:

- port: 80

protocol: TCP

targetPort: 9000

selector:

app: minio

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: minio

namespace: armory

spec:

rules:

- host: minio.od.com

http:

paths:

- path: /

backend:

serviceName: minio

servicePort: 80

2.1.3. Deliver Minio to k8s

[root@hdss7-200 ~]# mkdir /data/nfs-volume/minio # create the directory to be shared over NFS

[root@hdss7-21 ~]# kubectl create namespace armory

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/minio/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/minio/service.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/minio/ingress.yaml

[root@hdss7-11 ~]# vim /var/named/od.com.zone

......

minio A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# host minio.od.com

minio.od.com has address 10.4.7.10

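2.2. Deploying redis

In this setup redis only serves as a cache for Spinnaker. It is not critical: a crash and restart causes little trouble, and concurrency is low. Given the limited resources available, redis is deployed as a single replica without persistence. If persistence is needed, set command and args when starting the container, for example: /usr/local/bin/redis-server /etc/myredis.conf

2.2.1. Prepare the image

# The 4.x series is recommended; whether newer versions work with this Spinnaker release has not been verified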

[root@hdss7-200 ~]# docker image pull redis:4.0.14

[root@hdss7-200 ~]# docker image tag redis:4.0.14 harbor.od.com/public/redis:v4.0.14

[root@hdss7-200 ~]# docker image push harbor.od.com/public/redis:v4.0.14

2.2.2. Prepare the resource manifests

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: redis
  name: redis
  namespace: armory
spec:
  replicas: 1
  selector:
    matchLabels:
      name: redis
  template:
    metadata:
      labels:
        app: redis
        name: redis
    spec:
      containers:
      - name: redis
        image: harbor.od.com/public/redis:v4.0.14
        ports:
        - containerPort: 6379
          protocol: TCP

# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: armory
spec:
  ports:
  - port: 6379
    protocol: TCP
    targetPort: 6379
  selector:
    app: redis

2.2.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/redis/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/redis/service.yaml

[root@hdss7-21 ~]# kubectl get pod -n armory -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

minio-6cb7db494b-hjqvh 1/1 Running 0 33m 172.7.22.5 hdss7-22.host.com <none> <none>

redis-5886797648-mxjbm 1/1 Running 0 7m57s 172.7.22.6 hdss7-22.host.com <none> <none>

[root@hdss7-21 ~]# telnet 172.7.22.6 6379

Trying 172.7.22.6...

Connected to 172.7.22.6.

Escape character is '^]'.

^]

telnet> quit

Connection closed.

[root@hdss7-21 ~]# kubectl get svc -n armory -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

minio ClusterIP 192.168.139.95 <none> 80/TCP 33m app=minio

redis ClusterIP 192.168.70.230 <none> 6379/TCP 8m16s app=redis

[root@hdss7-21 ~]# telnet 192.168.70.230 6379

Trying 192.168.70.230...

Connected to 192.168.70.230.

Escape character is '^]'.

^]

telnet> quit

2.3. Deploying Spinnaker Clouddriver

2.3.1. Prepare the image

# This image version is fairly old; a newer release may be worth trying

[root@hdss7-200 ~]# docker pull armory/spinnaker-clouddriver-slim:release-1.8.x-14c9664

[root@hdss7-200 ~]# docker image tag armory/spinnaker-clouddriver-slim:release-1.8.x-14c9664 harbor.od.com/public/spinnaker-clouddriver-slim:v1.8.x-14c9664

[root@hdss7-200 ~]# docker image push harbor.od.com/public/spinnaker-clouddriver-slim:v1.8.x-14c9664

2.3.2. Create the secret

[root@hdss7-21 ~]# cat minio-login.secret # Minio access key and secret key; mounted into the Spinnaker containers later

[default]

aws_access_key_id=duduniao

aws_secret_access_key=duduniao12345

[root@hdss7-21 ~]# kubectl create secret generic credentials --from-file=credentials=minio-login.secret -n armory
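The credentials file uses the AWS shared-credentials layout, and its two values must match the MINIO_ACCESS_KEY and MINIO_SECRET_KEY set in the Minio Deployment. A minimal sketch of generating the file from variables instead of typing it by hand follows; the function name `render_credentials` is hypothetical.

```shell
#!/usr/bin/env bash
# Render an AWS-style shared credentials file for the Minio account.
# Usage: render_credentials <access-key> <secret-key>
# (hypothetical helper; output goes to stdout so it can be redirected to a file)
render_credentials() {
  local access_key="$1" secret_key="$2"
  printf '[default]\n'
  printf 'aws_access_key_id=%s\n' "$access_key"
  printf 'aws_secret_access_key=%s\n' "$secret_key"
}
```

For example, `render_credentials duduniao duduniao12345 > minio-login.secret` produces the file shown above, which can then be passed to `kubectl create secret generic` as in the original command.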

2.3.3. Create the kubeconfig file

# Issue the certificate; the CN must match the user name

[root@hdss7-200 certs]# cat spinnake-csr.json
{
    "CN": "spinnake",
    "hosts": [
        "10.4.7.10"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client spinnake-csr.json | cfssl-json -bare spinnake

[root@hdss7-200 certs]# ls spinnake* -l

-rw-r--r-- 1 root root 1037 Feb 15 13:58 spinnake.csr

-rw-r--r-- 1 root root 296 Feb 15 13:58 spinnake-csr.json

-rw------- 1 root root 1675 Feb 15 13:58 spinnake-key.pem

-rw-r--r-- 1 root root 1391 Feb 15 13:58 spinnake.pem

[root@hdss7-200 certs]# scp spinnake* ca.pem hdss7-21:~/spinnaker/

# The kubeconfig file is used to create resources in the k8s cluster through the apiserver; it is usually bound to the cluster-admin role

[root@hdss7-21 spinnaker]# kubectl config set-cluster myk8s --certificate-authority=ca.pem --embed-certs=true --server=https://10.4.7.10:7443 --kubeconfig=config

[root@hdss7-21 spinnaker]# kubectl config set-credentials spinnake --client-certificate=spinnake.pem --client-key=spinnake-key.pem --embed-certs=true --kubeconfig=config

[root@hdss7-21 spinnaker]# kubectl config set-context myk8s-context --cluster=myk8s --user=spinnake --kubeconfig=config

[root@hdss7-21 spinnaker]# kubectl config use-context myk8s-context --kubeconfig=config

[root@hdss7-21 spinnaker]# kubectl create clusterrolebinding spinnake --clusterrole=cluster-admin --user=spinnake

# Test that the kubeconfig works. Note: the dashboard cannot log in with this certificate-based kubeconfig; it needs a service account

[root@hdss7-200 ~]# scp hdss7-21:~/spinnaker/config /tmp/

[root@hdss7-200 ~]# kubectl get pod -n armory --kubeconfig=/tmp/config

NAME READY STATUS RESTARTS AGE

minio-6cb7db494b-hjqvh 1/1 Running 0 3h23m

redis-5886797648-mxjbm 1/1 Running 0 178m

[root@hdss7-200 ~]# rm -f /tmp/config

# Create a ConfigMap from the kubeconfig file; Spinnaker mounts it into the container

[root@hdss7-21 spinnaker]# kubectl create configmap default-kubeconfig --from-file=default-kubeconfig=config -n armory

[root@hdss7-21 ~]# rm -fr spinnaker

2.3.4. Resource manifests

Spinnaker's configuration is fairly involved. The default-config.yaml ConfigMap in particular is very complex and normally does not need to be modified:

# init-env.yaml
# Includes the redis address, the externally visible API domain, and so on
apiVersion: v1
kind: ConfigMap
metadata:
  name: init-env
  namespace: armory
data:
  API_HOST: http://spinnaker.od.com/api
  ARMORY_ID: c02f0781-92f5-4e80-86db-0ba8fe7b8544
  ARMORYSPINNAKER_CONF_STORE_BUCKET: armory-platform
  ARMORYSPINNAKER_CONF_STORE_PREFIX: front50
  ARMORYSPINNAKER_GCS_ENABLED: "false"
  ARMORYSPINNAKER_S3_ENABLED: "true"
  AUTH_ENABLED: "false"
  AWS_REGION: us-east-1
  BASE_IP: 127.0.0.1
  CLOUDDRIVER_OPTS: -Dspring.profiles.active=armory,configurator,local
  CONFIGURATOR_ENABLED: "false"
  DECK_HOST: http://spinnaker.od.com
  ECHO_OPTS: -Dspring.profiles.active=armory,configurator,local
  GATE_OPTS: -Dspring.profiles.active=armory,configurator,local
  IGOR_OPTS: -Dspring.profiles.active=armory,configurator,local
  PLATFORM_ARCHITECTURE: k8s
  REDIS_HOST: redis://redis:6379
  SERVER_ADDRESS: 0.0.0.0
  SPINNAKER_AWS_DEFAULT_REGION: us-east-1
  SPINNAKER_AWS_ENABLED: "false"
  SPINNAKER_CONFIG_DIR: /home/spinnaker/config
  SPINNAKER_GOOGLE_PROJECT_CREDENTIALS_PATH: ""
  SPINNAKER_HOME: /home/spinnaker
  SPRING_PROFILES_ACTIVE: armory,configurator,local

# custom-config.yaml
# This file defines how Spinnaker reaches k8s, harbor, minio, and Jenkins
# Short service names can be used for endpoints that live inside k8s, depending on whether they share the same namespace
apiVersion: v1
kind: ConfigMap
metadata:
  name: custom-config
  namespace: armory
data:
  clouddriver-local.yml: |
    kubernetes:
      enabled: true
      accounts:
        - name: spinnake
          serviceAccount: false
          dockerRegistries:
            - accountName: harbor
              namespace: []
          namespaces:
            - dev
            - fat
            - pro
          kubeconfigFile: /opt/spinnaker/credentials/custom/default-kubeconfig
      primaryAccount: spinnake
    dockerRegistry:
      enabled: true
      accounts:
        - name: harbor
          requiredGroupMembership: []
          providerVersion: V1
          insecureRegistry: true
          address: http://harbor.od.com
          username: admin
          password: Harbor12345
      primaryAccount: harbor
    artifacts:
      s3:
        enabled: true
        accounts:
        - name: armory-config-s3-account
          apiEndpoint: http://minio
          apiRegion: us-east-1
      gcs:
        enabled: false
        accounts:
        - name: armory-config-gcs-account
  custom-config.json: ""
  echo-configurator.yml: |
    diagnostics:
      enabled: true
  front50-local.yml: |
    spinnaker:
      s3:
        endpoint: http://minio
  igor-local.yml: |
    jenkins:
      enabled: true
      masters:
        - name: jenkins-admin
          address: http://jenkins.infra
          username: admin
          password: admin123
      primaryAccount: jenkins-admin
  nginx.conf: |
    gzip on;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/vnd.ms-fontobject application/x-font-ttf font/opentype image/svg+xml image/x-icon;
    server {
        listen 80;
        location / {
            proxy_pass http://armory-deck/;
        }
        location /api/ {
            proxy_pass http://armory-gate:8084/;
        }
        rewrite ^/login(.*)$ /api/login$1 last;
        rewrite ^/auth(.*)$ /api/auth$1 last;
    }
  spinnaker-local.yml: |
    services:
      igor:
        enabled: true

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-clouddriver

name: armory-clouddriver

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-clouddriver

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-clouddriver"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"clouddriver"'

labels:

app: armory-clouddriver

spec:

containers:

- name: armory-clouddriver

image: harbor.od.com/public/spinnaker-clouddriver-slim:v1.8.x-14c9664

command:

- bash

- -c

args:

# the fetch.sh script is provided by default-config.yaml

- bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config

&& /opt/clouddriver/bin/clouddriver

ports:

- containerPort: 7002

protocol: TCP

env:

- name: JAVA_OPTS

# in production, raise this to 2048-4096M

value: -Xmx1024M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 5

httpGet:

path: /health

port: 7002

scheme: HTTP

initialDelaySeconds: 600

periodSeconds: 3

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 5

httpGet:

path: /health

port: 7002

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 3

successThreshold: 5

timeoutSeconds: 1

securityContext:

runAsUser: 0

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /home/spinnaker/.aws

name: credentials

- mountPath: /opt/spinnaker/credentials/custom

name: default-kubeconfig

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

volumes:

- configMap:

defaultMode: 420

name: default-kubeconfig

name: default-kubeconfig

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- name: credentials

secret:

defaultMode: 420

secretName: credentials

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-clouddriver

namespace: armory

spec:

ports:

- port: 7002

protocol: TCP

targetPort: 7002

selector:

app: armory-clouddriver
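The readinessProbe values in the Deployment above explain why the pod stays NotReady for several minutes. Probing starts after initialDelaySeconds, and a readiness probe needs successThreshold consecutive successes, one per periodSeconds, before the pod is marked Ready. A rough lower bound can be computed as below; this is an approximation that ignores kubelet scheduling jitter, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Approximate the earliest time (in seconds after container start) at which a
# readiness probe can report Ready, assuming every probe succeeds.
# Usage: earliest_ready_seconds <initialDelaySeconds> <periodSeconds> <successThreshold>
earliest_ready_seconds() {
  local initial_delay="$1" period="$2" success_threshold="$3"
  echo $(( initial_delay + (success_threshold - 1) * period ))
}
```

With the clouddriver settings (180, 3, 5), `earliest_ready_seconds 180 3 5` gives 192 seconds, so the pod cannot receive Service traffic before roughly three minutes have passed.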

2.3.5. Apply the resource manifests

[root@hdss7-21 spinnaker]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/spinnake/init-env.yaml

[root@hdss7-21 spinnaker]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/spinnake/custom-config.yaml

[root@hdss7-21 spinnaker]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/spinnake/default-config.yaml

[root@hdss7-21 spinnaker]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/spinnake/deployment.yaml

[root@hdss7-21 spinnaker]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/spinnake/service.yaml

[root@hdss7-21 spinnaker]# kubectl get svc -n armory | grep armory-clouddriver

armory-clouddriver ClusterIP 192.168.109.209 <none> 7002/TCP 11m

[root@hdss7-21 spinnaker]# kubectl get pod -n armory -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5f8b5f4cbb-2qfgf 1/1 Running 0 8m1s 172.7.21.5 hdss7-21.host.com <none> <none>

# Testing with curl shows something odd: /health is reachable through the pod IP or the Service DNS name, but not through the Service IP

[root@hdss7-21 spinnaker]# curl 172.7.21.5:7002/health

{"status":"UP","kubernetes":{"status":"UP"},"redisHealth":{"status":"UP","maxIdle":100,"minIdle":25,"numActive":0,"numIdle":4,"numWaiters":0},"dockerRegistry":{"status":"UP"},"diskSpace":{"status":"UP","total":53659832320,"free":43917455360,"threshold":10485760}}

[root@hdss7-21 spinnaker]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s armory-clouddriver:7002/health

{"status":"UP","kubernetes":{"status":"UP"},"redisHealth":{"status":"UP","maxIdle":100,"minIdle":25,"numActive":0,"numIdle":4,"numWaiters":0},"dockerRegistry":{"status":"UP"},"diskSpace":{"status":"UP","total":53659832320,"free":43917455360,"threshold":10485760}}
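Reading the health JSON by eye gets tedious once several services are running. The following is a minimal sketch of a check that can be used in scripts. It assumes the response has the shape shown above, with the overall "status" as the first key, and it does plain string matching rather than real JSON parsing. The function name `is_up` is hypothetical.

```shell
#!/usr/bin/env bash
# Succeed only if the health JSON read from stdin reports an overall status of
# UP and no sub-component reports DOWN.
# Assumption: the top-level "status" field is the first key, as in the output above.
is_up() {
  local body
  body="$(cat)"
  [[ "$body" == '{"status":"UP"'* && "$body" != *'"status":"DOWN"'* ]]
}
```

It can be combined with the curl call from above, for example `curl -s 172.7.21.5:7002/health | is_up && echo healthy`.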

2.4. Deploying front50

2.4.1. Prepare the image

[root@hdss7-200 ~]# docker pull armory/spinnaker-front50-slim:release-1.8.x-93febf2

[root@hdss7-200 ~]# docker image tag armory/spinnaker-front50-slim:release-1.8.x-93febf2 harbor.od.com/public/spinnaker-front50-slim:v1.8.x-93febf2

[root@hdss7-200 ~]# docker image push harbor.od.com/public/spinnaker-front50-slim:v1.8.x-93febf2

2.4.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-front50

name: armory-front50

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-front50

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-front50"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"front50"'

labels:

app: armory-front50

spec:

containers:

- name: armory-front50

image: harbor.od.com/public/spinnaker-front50-slim:v1.8.x-93febf2

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config

&& /opt/front50/bin/front50

ports:

- containerPort: 8080

protocol: TCP

env:

- name: JAVA_OPTS

# allocate more in production; this lab started with 512M and the service crashed shortly after startup

value: -javaagent:/opt/front50/lib/jamm-0.2.5.jar -Xmx1024M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 600

periodSeconds: 3

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 5

successThreshold: 8

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /home/spinnaker/.aws

name: credentials

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- name: credentials

secret:

defaultMode: 420

secretName: credentials

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-front50

namespace: armory

spec:

ports:

- port: 8080

protocol: TCP

targetPort: 8080

selector:

app: armory-front50

2.4.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/front50/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/front50/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s 'http://armory-front50:8080/health'

{"status":"UP"}
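Every component is applied from the same manifest server with the same URL layout, so the apply step can be scripted. The sketch below builds the URLs from a component name and a list of files. The helper names `manifest_urls` and `apply_component` are hypothetical, the base URL is the one used throughout this walkthrough, and `apply_component` assumes kubectl is available on the host.

```shell
#!/usr/bin/env bash
# Base URL of the manifest server used in this walkthrough.
MANIFEST_BASE="http://k8s-yaml.od.com/devops/armory"

# Print one manifest URL per line for a component directory.
# Usage: manifest_urls <component> <file>...
manifest_urls() {
  local component="$1"; shift
  local file
  for file in "$@"; do
    printf '%s/%s/%s\n' "$MANIFEST_BASE" "$component" "$file"
  done
}

# Apply the manifests of one component in the given order, stopping at the
# first failure (requires kubectl; URLs contain no spaces, so word splitting is safe).
apply_component() {
  local url
  for url in $(manifest_urls "$@"); do
    kubectl apply -f "$url" || return 1
  done
}
```

For front50 this would be called as `apply_component front50 deployment.yaml service.yaml`.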

2.5. Deploying Orca

2.5.1. Prepare the image

[root@hdss7-200 ~]# docker pull armory/spinnaker-orca-slim:release-1.8.x-de4ab55

[root@hdss7-200 ~]# docker image tag armory/spinnaker-orca-slim:release-1.8.x-de4ab55 harbor.od.com/public/spinnaker-orca-slim:v1.8.x-de4ab55

[root@hdss7-200 ~]# docker image push harbor.od.com/public/spinnaker-orca-slim:v1.8.x-de4ab55

2.5.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-orca

name: armory-orca

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-orca

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-orca"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"orca"'

labels:

app: armory-orca

spec:

containers:

- name: armory-orca

image: harbor.od.com/public/spinnaker-orca-slim:v1.8.x-de4ab55

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config

&& /opt/orca/bin/orca

ports:

- containerPort: 8083

protocol: TCP

env:

- name: JAVA_OPTS

value: -Xmx512M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 5

httpGet:

path: /health

port: 8083

scheme: HTTP

initialDelaySeconds: 600

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8083

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 3

successThreshold: 5

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-orca

namespace: armory

spec:

ports:

- port: 8083

protocol: TCP

targetPort: 8083

selector:

app: armory-orca

2.5.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/orca/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/orca/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s 'http://armory-orca:8083/health'

{"status":"UP"}
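Because the readiness probes wait 180 seconds before the first check, the health request usually fails for the first few minutes after `kubectl apply`. A minimal sketch of a generic retry wrapper is shown below, so the check can simply be left running. The function name `retry` and its argument order are hypothetical.

```shell
#!/usr/bin/env bash
# Run a command until it succeeds or the attempts are used up.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  local attempts="$1" delay="$2"; shift 2
  local i
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    # Sleep between attempts, but not after the last one.
    (( i < attempts )) && sleep "$delay"
  done
  return 1
}
```

For example, `retry 40 15 kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -sf http://armory-orca:8083/health` polls for up to about ten minutes; the `-f` flag makes curl fail on HTTP error responses so that the retry continues.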

2.6. Deploying Echo

2.6.1. Prepare the image

[root@hdss7-200 ~]# docker pull armory/echo-armory:c36d576-release-1.8.x-617c567

[root@hdss7-200 ~]# docker image tag armory/echo-armory:c36d576-release-1.8.x-617c567 harbor.od.com/public/echo-armory:v1.8.x-617c567

[root@hdss7-200 ~]# docker image push harbor.od.com/public/echo-armory:v1.8.x-617c567

2.6.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-echo

name: armory-echo

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-echo

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-echo"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"echo"'

labels:

app: armory-echo

spec:

containers:

- name: armory-echo

image: harbor.od.com/public/echo-armory:v1.8.x-617c567

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config

&& /opt/echo/bin/echo

ports:

- containerPort: 8089

protocol: TCP

env:

- name: JAVA_OPTS

value: -javaagent:/opt/echo/lib/jamm-0.2.5.jar -Xmx512M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8089

scheme: HTTP

initialDelaySeconds: 600

periodSeconds: 3

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8089

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 3

successThreshold: 5

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

imagePullSecrets:

- name: harbor

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-echo

namespace: armory

spec:

ports:

- port: 8089

protocol: TCP

targetPort: 8089

selector:

app: armory-echo

2.6.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/echo/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/echo/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s 'http://armory-echo:8089/health'

{"status":"UP"}

2.7. Deploying igor

2.7.1. Prepare the image

[root@hdss7-200 ~]# docker image pull armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

[root@hdss7-200 ~]# docker image tag armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329 harbor.od.com/public/igor:v1.8-x-ae2b329

[root@hdss7-200 ~]# docker image push harbor.od.com/public/igor:v1.8-x-ae2b329

2.7.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-igor

name: armory-igor

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-igor

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-igor"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"igor"'

labels:

app: armory-igor

spec:

containers:

- name: armory-igor

image: harbor.od.com/public/igor:v1.8-x-ae2b329

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config

&& /opt/igor/bin/igor

ports:

- containerPort: 8088

protocol: TCP

env:

- name: IGOR_PORT_MAPPING

value: -8088:8088

- name: JAVA_OPTS

value: -Xmx512M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8088

scheme: HTTP

initialDelaySeconds: 600

periodSeconds: 3

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 8088

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 5

successThreshold: 5

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

securityContext:

runAsUser: 0

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-igor

namespace: armory

spec:

ports:

- port: 8088

protocol: TCP

targetPort: 8088

selector:

app: armory-igor

2.7.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/igor/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/igor/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s 'http://armory-igor:8088/health'

{"status":"UP"}

2.8. Deploying gate

2.8.1. Prepare the image

[root@hdss7-200 ~]# docker pull armory/gate-armory:dfafe73-release-1.8.x-5d505ca

[root@hdss7-200 ~]# docker image tag armory/gate-armory:dfafe73-release-1.8.x-5d505ca harbor.od.com/public/gate-armory:v1.8.x-5d505ca

[root@hdss7-200 ~]# docker image push harbor.od.com/public/gate-armory:v1.8.x-5d505ca

2.8.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-gate

name: armory-gate

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-gate

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-gate"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"gate"'

labels:

app: armory-gate

spec:

containers:

- name: armory-gate

image: harbor.od.com/public/gate-armory:v1.8.x-5d505ca

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh gate && cd /home/spinnaker/config

&& /opt/gate/bin/gate

ports:

- containerPort: 8084

name: gate-port

protocol: TCP

- containerPort: 8085

name: gate-api-port

protocol: TCP

env:

- name: GATE_PORT_MAPPING

value: -8084:8084

- name: GATE_API_PORT_MAPPING

value: -8085:8085

- name: JAVA_OPTS

value: -Xmx512M

envFrom:

- configMapRef:

name: init-env

livenessProbe:

exec:

command:

- /bin/bash

- -c

- wget -O - http://localhost:8084/health || wget -O - https://localhost:8084/health

failureThreshold: 5

initialDelaySeconds: 600

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

exec:

command:

- /bin/bash

- -c

- wget -O - 'http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true'

|| wget -O - 'https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true'

failureThreshold: 3

initialDelaySeconds: 180

periodSeconds: 5

successThreshold: 10

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-gate

namespace: armory

spec:

ports:

- name: gate-port

port: 8084

protocol: TCP

targetPort: 8084

- name: gate-api-port

port: 8085

protocol: TCP

targetPort: 8085

selector:

app: armory-gate

2.8.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/gate/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/gate/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -s 'http://armory-gate:8084/health?checkDownstreamServices=true&downstreamServices=true'

{"status":"UP"}

2.9. Deploying deck

2.9.1. Prepare the image

[root@hdss7-200 ~]# docker image pull armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

[root@hdss7-200 ~]# docker image tag armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94 harbor.od.com/public/deck-armory:v1.8.x-0a33f94

[root@hdss7-200 ~]# docker image push harbor.od.com/public/deck-armory:v1.8.x-0a33f94

2.9.2. Prepare the resource manifests

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: armory-deck

name: armory-deck

namespace: armory

spec:

replicas: 1

selector:

matchLabels:

app: armory-deck

template:

metadata:

annotations:

artifact.spinnaker.io/location: '"armory"'

artifact.spinnaker.io/name: '"armory-deck"'

artifact.spinnaker.io/type: '"kubernetes/deployment"'

moniker.spinnaker.io/application: '"armory"'

moniker.spinnaker.io/cluster: '"deck"'

labels:

app: armory-deck

spec:

containers:

- name: armory-deck

image: harbor.od.com/public/deck-armory:v1.8.x-0a33f94

command:

- bash

- -c

args:

- bash /opt/spinnaker/config/default/fetch.sh && /entrypoint.sh

ports:

- containerPort: 9000

protocol: TCP

envFrom:

- configMapRef:

name: init-env

livenessProbe:

failureThreshold: 3

httpGet:

path: /

port: 9000

scheme: HTTP

initialDelaySeconds: 180

periodSeconds: 3

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 5

httpGet:

path: /

port: 9000

scheme: HTTP

initialDelaySeconds: 30

periodSeconds: 3

successThreshold: 5

timeoutSeconds: 1

volumeMounts:

- mountPath: /etc/podinfo

name: podinfo

- mountPath: /opt/spinnaker/config/default

name: default-config

- mountPath: /opt/spinnaker/config/custom

name: custom-config

volumes:

- configMap:

defaultMode: 420

name: custom-config

name: custom-config

- configMap:

defaultMode: 420

name: default-config

name: default-config

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: podinfo

apiVersion: v1

kind: Service

metadata:

name: armory-deck

namespace: armory

spec:

ports:

- port: 80

protocol: TCP

targetPort: 9000

selector:

app: armory-deck

2.9.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/deck/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/deck/service.yaml

[root@hdss7-21 ~]# kubectl exec minio-6cb7db494b-hjqvh -n armory -- curl -Is 'http://armory-deck'

HTTP/1.1 200 OK

Server: nginx/1.4.6 (Ubuntu)

Date: Sat, 15 Feb 2020 10:07:24 GMT

Content-Type: text/html

Content-Length: 22031

Last-Modified: Tue, 17 Jul 2018 17:42:20 GMT

Connection: keep-alive

ETag: "5b4e2a7c-560f"

Accept-Ranges: bytes
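The curl check above depends on the name of one particular MinIO pod. As a complementary check, the following is a minimal sketch that waits for a Deployment to finish rolling out and then lists its pods. The function name `wait_for_rollout` and the 300s default timeout are assumptions made for illustration; the `armory` namespace and the `app=<name>` label are taken from the manifests above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original notes).
# Waits until the named Deployment has rolled out, then lists its pods.
#   $1 = Deployment name (also used as the value of the "app" label)
#   $2 = namespace (defaults to armory)
#   $3 = timeout passed to kubectl (defaults to 300s, an assumed value)
wait_for_rollout() {
  local name="$1" ns="${2:-armory}" timeout="${3:-300s}"
  # Returns non-zero if the rollout does not complete within the timeout.
  kubectl rollout status "deployment/${name}" -n "${ns}" --timeout="${timeout}" || return 1
  # Show where the pods landed and whether they are Ready.
  kubectl get pods -n "${ns}" -l "app=${name}" -o wide
}
```

Calling `wait_for_rollout armory-deck` on a node with kubectl access would block until the readiness probe defined in the manifest has passed.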

2.10. Deploy Nginx

2.10.1. Prepare the image

[root@hdss7-200 ~]# docker image pull nginx:1.12.2

[root@hdss7-200 ~]# docker image tag nginx:1.12.2 harbor.od.com/public/nginx:v1.12.2

[root@hdss7-200 ~]# docker image push harbor.od.com/public/nginx:v1.12.2

2.10.2. Prepare the resource manifests

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-nginx
  name: armory-nginx
  namespace: armory
spec:
  replicas: 1
  selector:
    matchLabels:
      app: armory-nginx
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-nginx"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"nginx"'
      labels:
        app: armory-nginx
    spec:
      containers:
      - name: armory-nginx
        image: harbor.od.com/public/nginx:v1.12.2
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh nginx && nginx -g 'daemon off;'
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8085
          name: api
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /etc/nginx/conf.d
          name: custom-config
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config

# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: armory-nginx
  namespace: armory
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  - name: api
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-nginx

# ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    app: spinnaker
    web: spinnaker.od.com
  name: armory-nginx
  namespace: armory
spec:
  rules:
  - host: spinnaker.od.com
    http:
      paths:
      - backend:
          serviceName: armory-nginx
          servicePort: 80

2.10.3. Apply the resource manifests

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/nginx/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/nginx/service.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/armory/nginx/ingress.yaml
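The Ingress manifest above uses `extensions/v1beta1`, an API version that Kubernetes stopped serving for Ingress in v1.22, so on a newer cluster it may be worth checking which Ingress API versions are available before applying it. The sketch below does that and then confirms that the Ingress exists and that the host answers. The function names are hypothetical, and the HTTP check assumes that a DNS record for spinnaker.od.com already points at the cluster's ingress entry point.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original notes).

# List the API group/versions on this cluster that could serve Ingress.
list_ingress_apis() {
  kubectl api-versions | grep -E '^(extensions|networking\.k8s\.io)/'
}

# Confirm the Ingress object exists, then print the HTTP status line
# returned for the host.
#   $1 = host name (defaults to spinnaker.od.com, from the manifest)
#   $2 = namespace (defaults to armory)
check_spinnaker_ingress() {
  local host="${1:-spinnaker.od.com}" ns="${2:-armory}"
  kubectl get ingress armory-nginx -n "${ns}" || return 1
  curl -sI "http://${host}/" | head -n 1
}
```

If `list_ingress_apis` prints only `networking.k8s.io/v1`, the manifest would need to be ported to that API version before `kubectl apply` accepts it.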

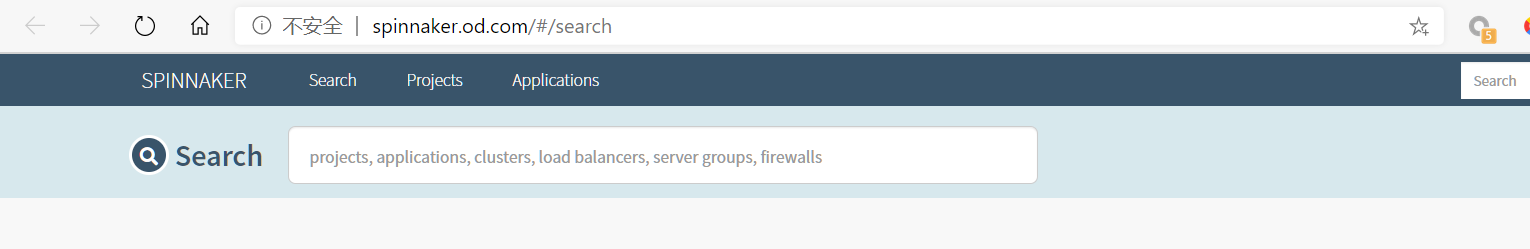

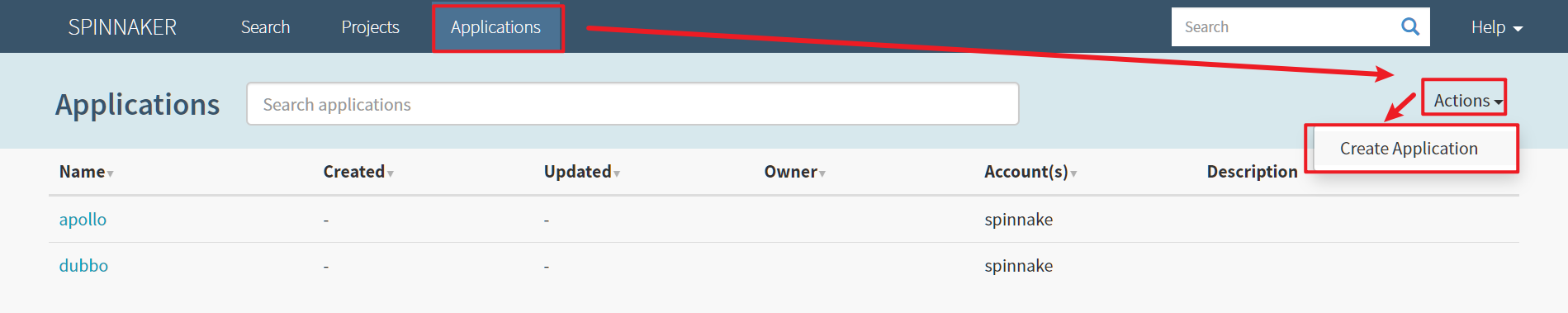

3. Using Spinnaker

3.1. Creating an application in Spinnaker

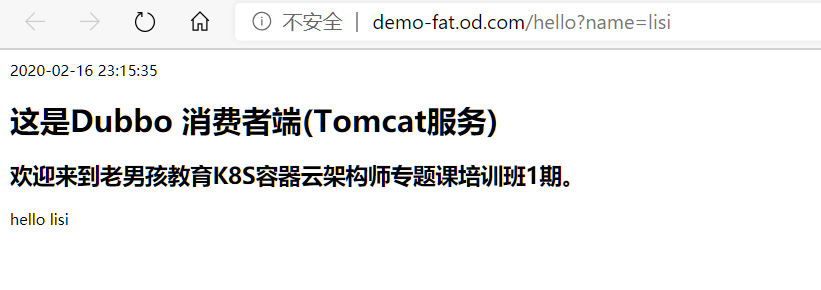

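For convenience, start Apollo in the FAT environment and delete the resources that were created there from the dubbo-demo provider and consumer manifests. Then use Spinnaker to run the complete flow from build to release.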

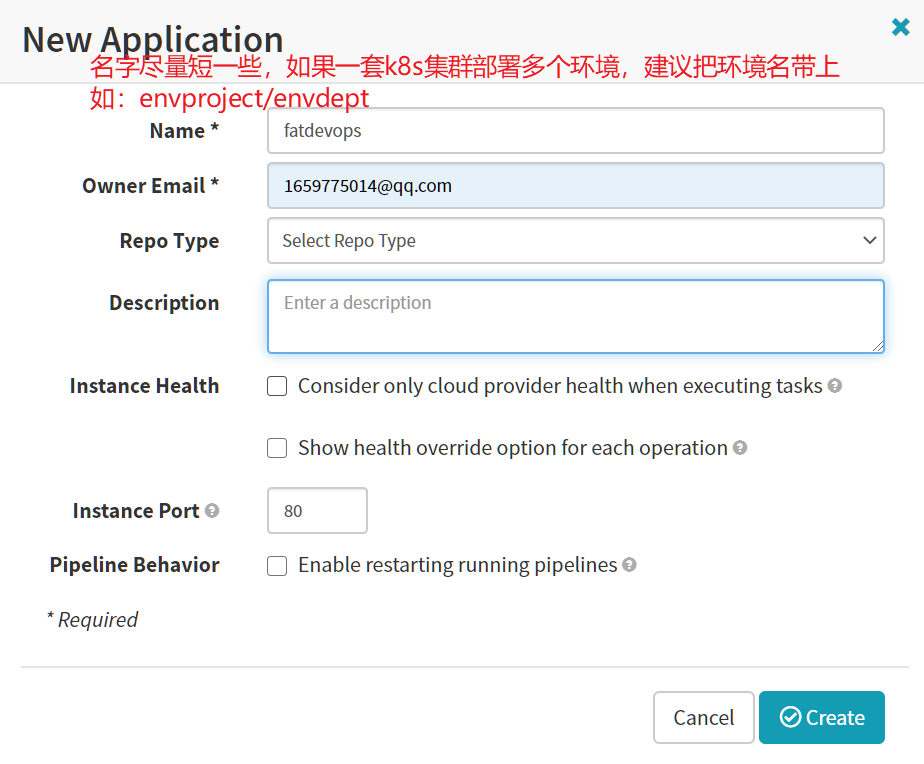

3.1.1. Create the application

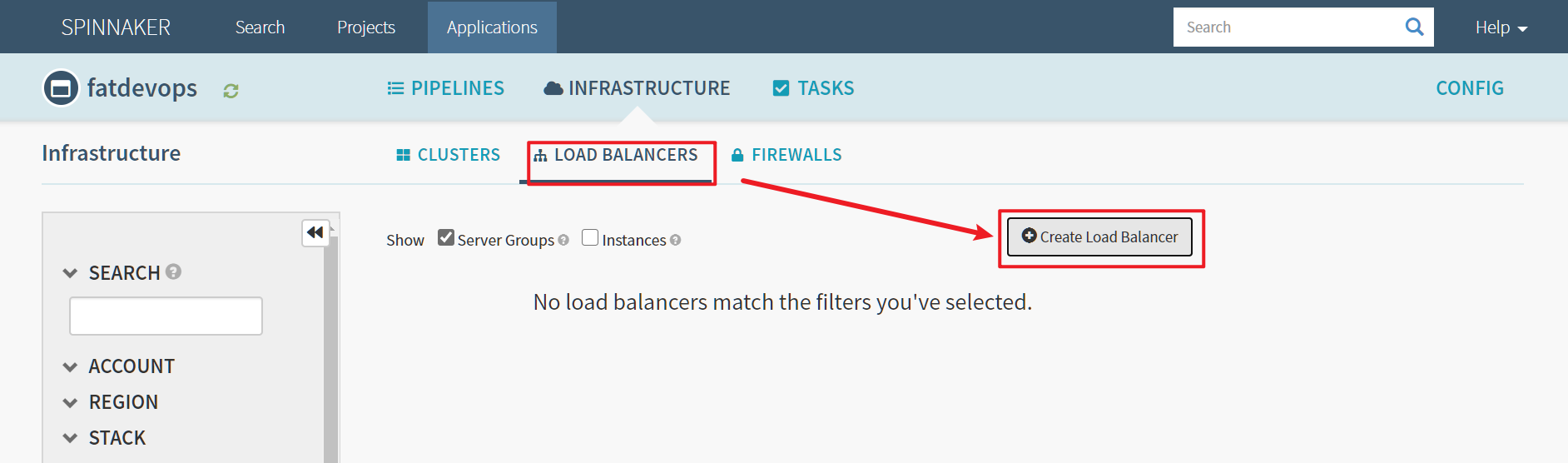

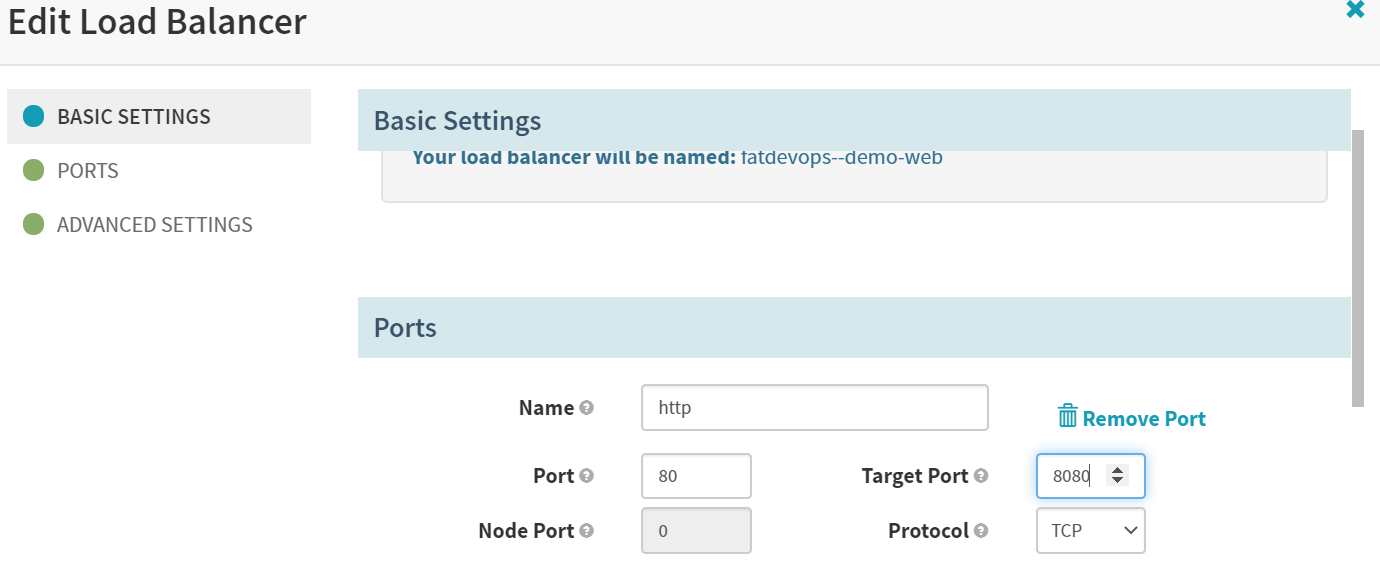

3.1.2. Create the service

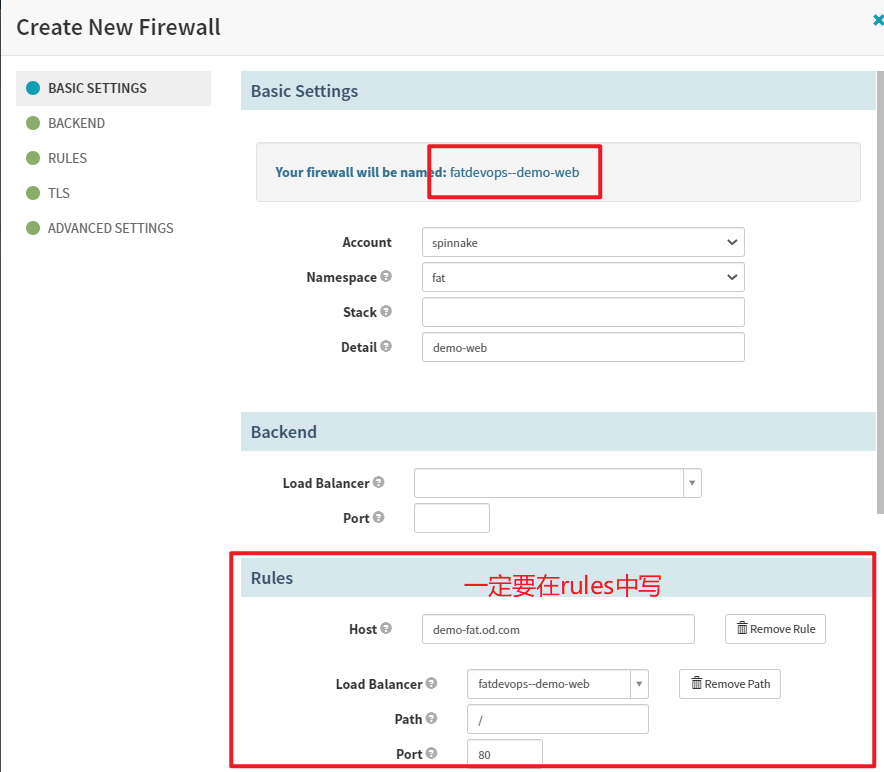

3.1.3. Create the ingress

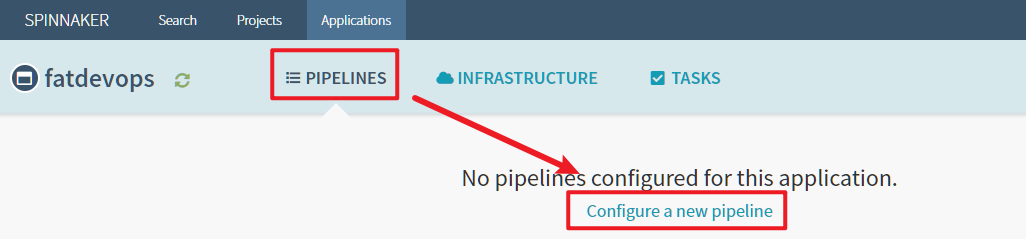

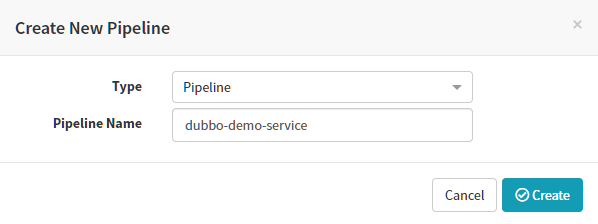

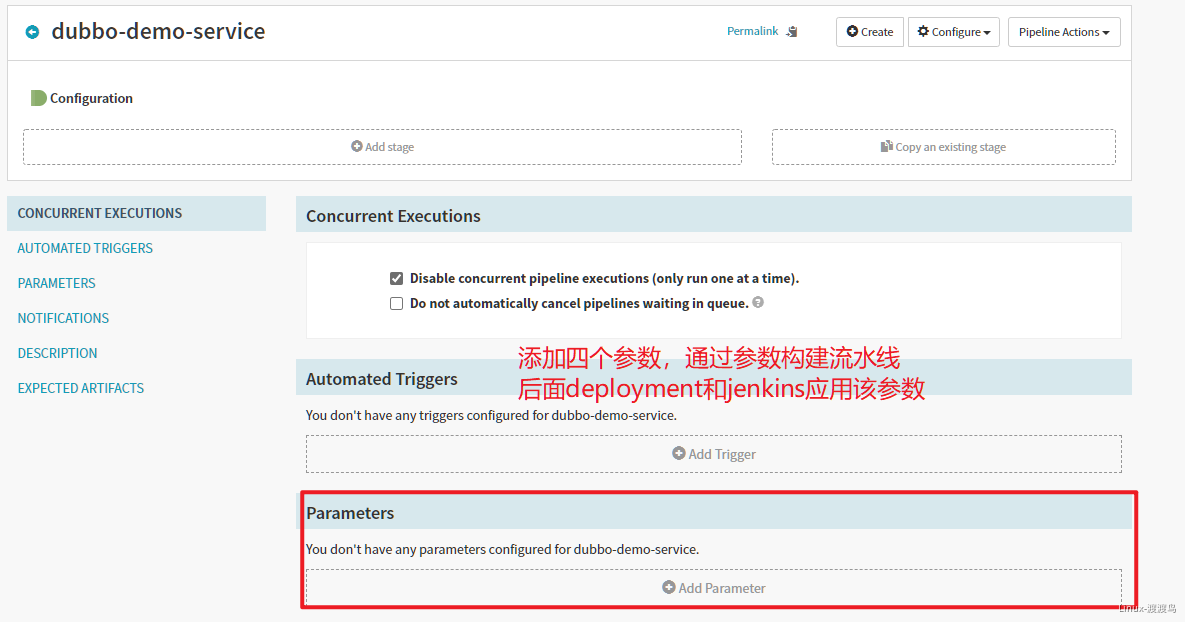

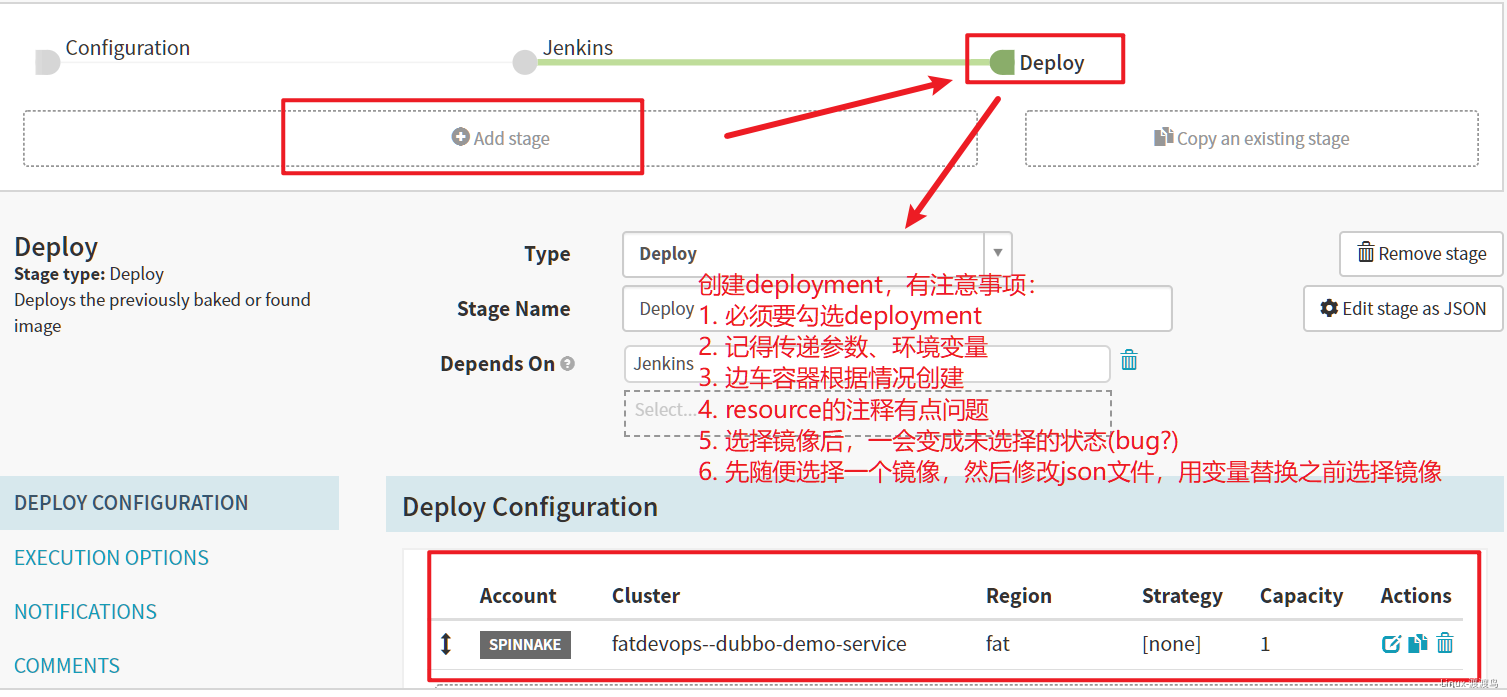

3.1.4. Create the pipeline

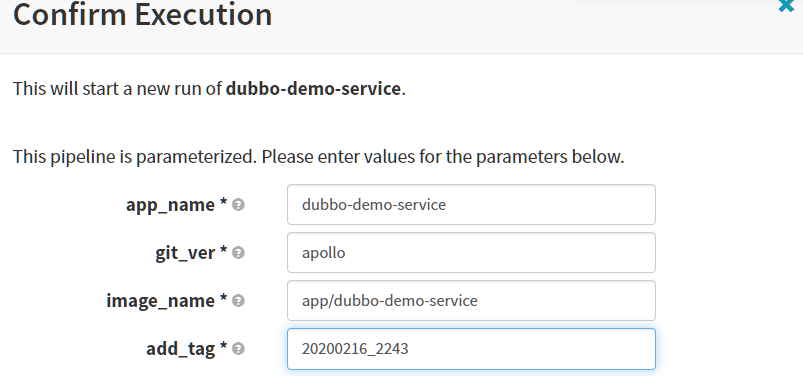

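# Add the following four parameters. This run builds and releases dubbo-demo-service, so the default project name and image name are already known.

1. name: app_name
   required: true
   default: dubbo-demo-service
   description: name of the project in the Git repository

2. name: git_ver
   required: true
   description: version, commit ID, or branch of the project

3. name: image_name
   required: true
   default: app/dubbo-demo-service
   description: image name, in the form repository/image

4. name: add_tag
   required: true
   description: part of the image tag, appended after git_ver, in the format YYYYmmdd_HHMM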

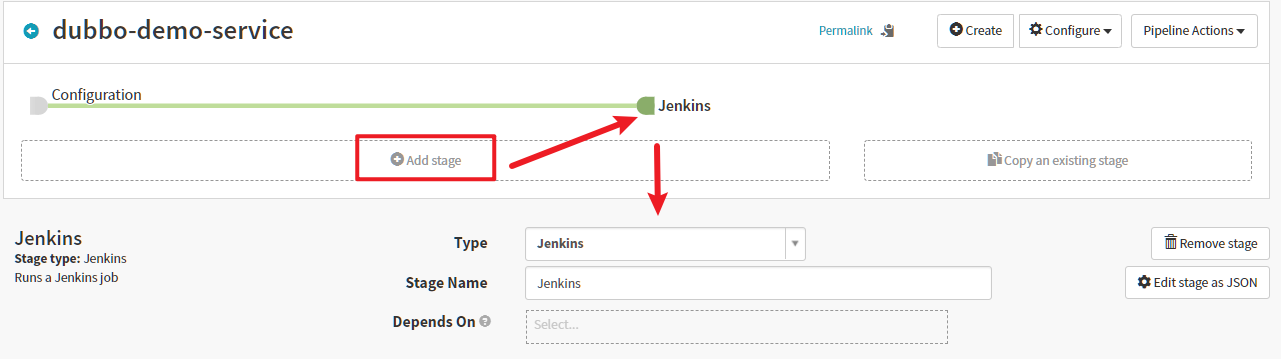

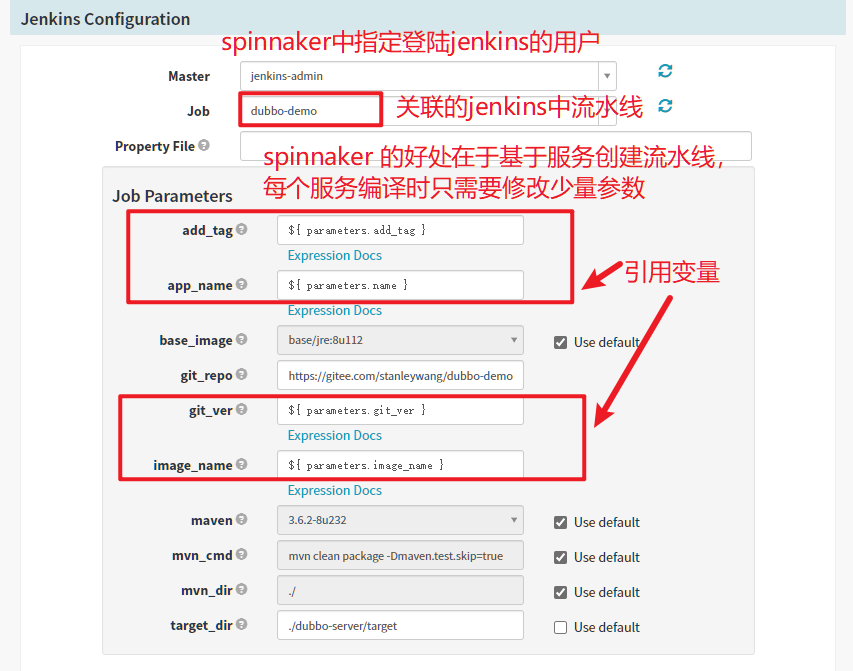

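3.1.5. Create the Jenkins build stage

In a test environment, the Jenkins build is normally one stage of the pipeline. In a production environment, the Jenkins stage is usually skipped.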

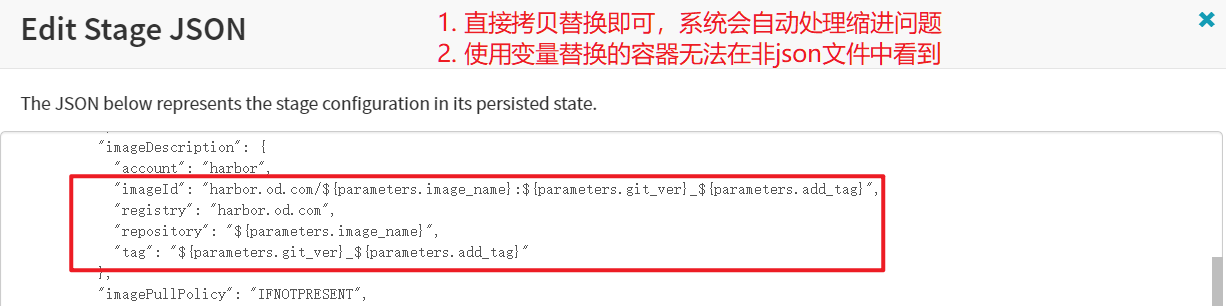

# In the JSON, change the following fields to variable expressions

"imageId": "harbor.od.com/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}",

"registry": "harbor.od.com",

"repository": "${parameters.image_name}",

"tag": "${parameters.git_ver}_${parameters.add_tag}"

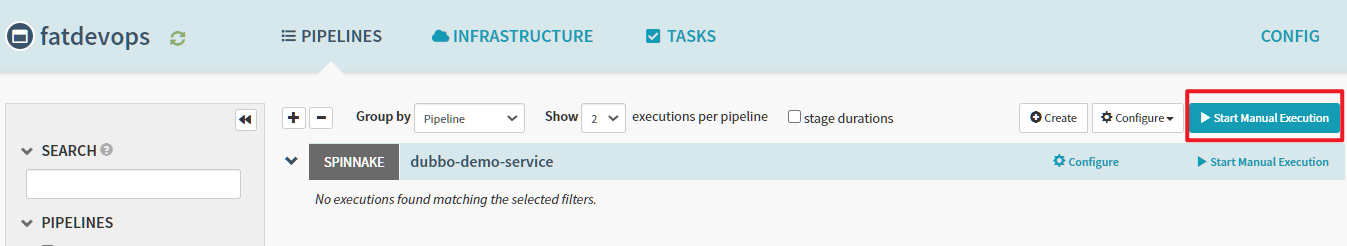

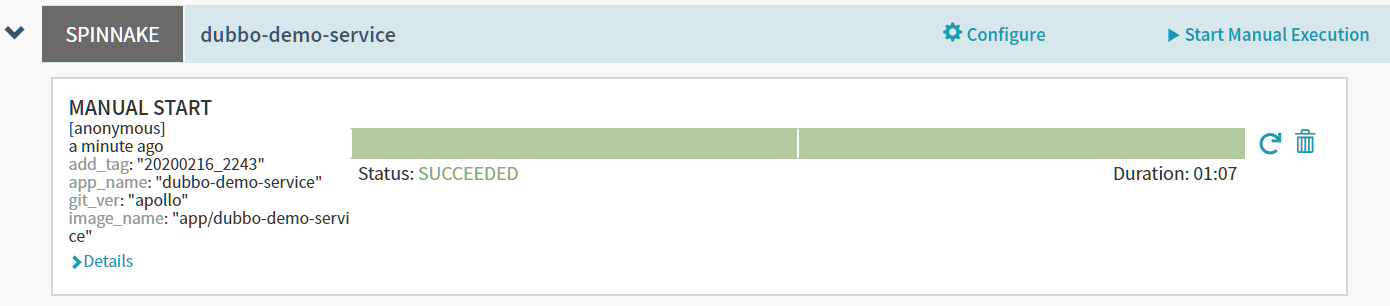
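The image reference in the JSON above is assembled from the pipeline parameters as `registry/image_name:git_ver_add_tag`. The sketch below reproduces that composition in shell so the resulting tag can be checked by hand before a run. The function names `compose_image_id` and `make_add_tag` are hypothetical; the registry harbor.od.com, the parameter names, and the YYYYmmdd_HHMM format come from the text above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original notes).

# Build the full image reference the same way the pipeline expression does:
#   <registry>/<image_name>:<git_ver>_<add_tag>
compose_image_id() {
  local registry="$1" image_name="$2" git_ver="$3" add_tag="$4"
  printf '%s/%s:%s_%s\n' "${registry}" "${image_name}" "${git_ver}" "${add_tag}"
}

# Produce an add_tag value in the YYYYmmdd_HHMM format from the current time.
make_add_tag() {
  date +%Y%m%d_%H%M
}
```

For example, `compose_image_id harbor.od.com app/dubbo-demo-service master "$(make_add_tag)"` prints the reference that the deploy stage would pull for a build of the `master` branch made in the current minute.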

3.1.6. Run the pipeline

3.1.7. Resulting resource names

[root@hdss7-22 ~]# kubectl get all -n fat | grep devops|grep -v '^$'
pod/fatdevops--dubbo-demo-service-v001-v97fh         1/1   Running   0   14m
pod/fatdevops--dubbo-demo-web-v000-rz758             1/1   Running   0   4m31s
service/fatdevops--demo-web                          ClusterIP   192.168.148.45   <none>   80/TCP   75m
deployment.apps/fatdevops--dubbo-demo-service        1/1   1   1   28m
deployment.apps/fatdevops--dubbo-demo-web            1/1   1   1   4m31s
replicaset.apps/fatdevops--dubbo-demo-service-v000   0   0   0   28m
replicaset.apps/fatdevops--dubbo-demo-service-v001   1   1   1   14m
replicaset.apps/fatdevops--dubbo-demo-web-v000       1   1   1   4m32

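3.2. Open questions

- How should Spinnaker's account authentication be implemented?

- How can gray releases, canary releases, and blue-green releases be implemented with the current setup?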