04-4-日志收集

1. 日志收集方式

Kubernetes的业务Pod日志有两种输出方式:一种是直接打到标准输出或者标准错误,第二种是将日志写到特定目录下的文件种。针对这两种不同场景,提供了不同的容器日志收集思路。

1.1. Kubernetes日志收集思路

1.1.1. 使用节点代理收集日志

在各个Node节点上以Deamonset方式部署log-agent-pod,将宿主机上的日志目录挂载到log-agent-pod里面,由log-agent-pod将日志发送出去。常用的log-agent是通过Fluentd实现的。

1.1.2. 使用边车模式收集日志

在每个业务Pod中,启动一个辅助容器,将主容器的日志目录挂载到辅助容器中,将主容器刷盘的日志推送出去。这种模式下,辅助容器必须要在主容器之前启动,避免主容器丢日志。常用的辅助容器是filebeat实现的。

1.1.3. 日志输出方式的转换

- 标准输出—>写文件:command >/logs/stdout.log 2>/logs/stderr.log

- 写文件—>标准输出:tail -f xxx.log

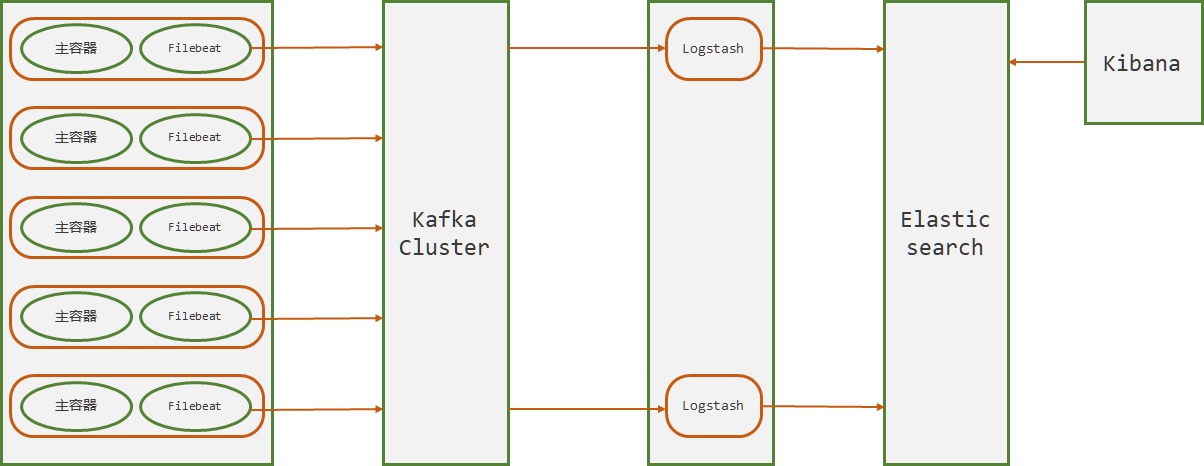

1.2. 拓扑图

本实验采用辅助容器(边车模式)收集日志到ELK集群系统,本实验先将日志写到Kafka集群,然后再通过logstash消费Kafka中的数据,并写入Elasticsearch,这种方式是异步的,虽然消息的及时性不是特别高,但是性能比直接写Elasticsearch高很高。

2. 准备业务APP

2.1. 准备Tomcat基础镜像

2.1.1. 准备Tomcat

[root@hdss7-200 src]# wget http://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-8/v8.5.50/bin/apache-tomcat-8.5.50.tar.gz[root@hdss7-200 src]# tar -xf apache-tomcat-8.5.50.tar.gz -C docker_files/tomcat/[root@hdss7-200 src]# cd docker_files/tomcat/[root@hdss7-200 tomcat]# rm -fr apache-tomcat-8.5.50/webapps/*[root@hdss7-200 tomcat]# vim apache-tomcat-8.5.50/conf/server.xml # 关闭AJP<!-- <Connector port="8009" protocol="AJP/1.3" redirectPort="8443" /> -->[root@hdss7-200 tomcat]# vim apache-tomcat-8.5.50/conf/logging.properties# 删除3manager和4host-manager日志# 修改日志级别为 INFOhandlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler......1catalina.org.apache.juli.AsyncFileHandler.level = INFO1catalina.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs1catalina.org.apache.juli.AsyncFileHandler.prefix = catalina.1catalina.org.apache.juli.AsyncFileHandler.encoding = UTF-82localhost.org.apache.juli.AsyncFileHandler.level = INFO2localhost.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs2localhost.org.apache.juli.AsyncFileHandler.prefix = localhost.2localhost.org.apache.juli.AsyncFileHandler.encoding = UTF-8# 3manager.org.apache.juli.AsyncFileHandler.level = FINE# 3manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs# 3manager.org.apache.juli.AsyncFileHandler.prefix = manager.# 3manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8## 4host-manager.org.apache.juli.AsyncFileHandler.level = FINE# 4host-manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs# 4host-manager.org.apache.juli.AsyncFileHandler.prefix = host-manager.# 4host-manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8java.util.logging.ConsoleHandler.level = INFOjava.util.logging.ConsoleHandler.formatter = org.apache.juli.OneLineFormatterjava.util.logging.ConsoleHandler.encoding = UTF-8......

2.1.2. 制作Tomcat底包

From harbor.od.com/public/jre:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

ENV CATALINA_HOME /opt/tomcat

ENV LANG zh_CN.UTF-8

ADD apache-tomcat-8.5.50/ /opt/tomcat

# 配合Pormetheus使用,且在K8S集群外的情况下使用

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/jmx_javaagent-0.3.1.jar

WORKDIR /opt/tomcat

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

[root@hdss7-200 tomcat]# cat entrypoint.sh

#!/bin/bash

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS} # 连接Apollo信息

MIN_HEAP=${MIN_HEAP:-"128m"}

MAX_HEAP=${MAX_HEAP:-"128m"}

JAVA_OPTS=${JAVA_OPTS:-"-Xmn384m -Xss256k -Duser.timezone=GMT+08 -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:CMSFullGCsBeforeCompaction=0 -XX:+CMSClassUnloadingEnabled -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+PrintClassHistogram -Dfile.encoding=UTF8 -Dsun.jnu.encoding=UTF8"}

CATALINA_OPTS=${CATALINA_OPTS}

JAVA_OPTS="${M_OPTS} ${C_OPTS} -Xms${MIN_HEAP} -Xmx${MAX_HEAP} ${JAVA_OPTS}"

sed -i -e "1a\JAVA_OPTS=\"$JAVA_OPTS\"" -e "1a\CATALINA_OPTS=\"$CATALINA_OPTS\"" /opt/tomcat/bin/catalina.sh

# 让tomcat在前台启动

cd /opt/tomcat && /opt/tomcat/bin/catalina.sh run 2>&1 >> /opt/tomcat/logs/stdout.log

[root@hdss7-200 tomcat]# vim config.yml

rules:

- pattern: '.*'

[root@hdss7-200 tomcat]# chmod +x entrypoint.sh

[root@hdss7-200 tomcat]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

[root@hdss7-200 tomcat]# docker image build . -t harbor.od.com/public/tomcat:v8.5.50

[root@hdss7-200 tomcat]# docker image push harbor.od.com/public/tomcat:v8.5.50

2.2. 配置Jenkins流水线

采用参数化构建,参考: https://www.yuque.com/duduniao/ww8pmw/gp8n04#VNfSK

1. name: git_repo

type: string

description: 项目在git版本仓库的地址,如 https://gitee.com/xxx/dubbo-demo-service.git

2. name: app_name

type: string

description: 项目名称,如 dubbo-demo-service

3. name: git_ver

type: string

description: 项目在git仓库中对应的分支或者版本号

4. name: maven

type: choice

description: 编译时使用的maven目录中的版本号部分

5. name: mvn_cmd

type: string

default: mvn clean package -Dmaven.test.skip=true

description: 执行编译所用的指令

6. name: mvn_dir

type: string

default: ./

description: 在哪个目录执行编译,由开发同事提供

7. name: target_dir

type: string

default: ./target

description: 编译的jar/war文件存放目录,由开发同事提供

8. name: root_dir

type: string

default: ROOT

description: 项目放到webapps下面的哪个目录中

9. name: base_image

type: choice

default:

description: 项目使用的jre底包

10. name: image_name

type: string

description: docker镜像名称,如 app/dubbo-demo-service

11. name: add_tag

type: string

default:

description: 日期-时间,和git_ver拼在一起组成镜像的tag,如: 202002011001

pipeline {

agent any

stages {

stage('pull') { //get project code from repo

steps {

sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"

}

}

stage('build') { //exec mvn cmd

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"

}

}

stage('unzip') { //unzip target/*.war -c target/project_dir

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && unzip *.war -d ./project_dir"

}

}

stage('image') { //build image and push to registry

steps {

writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}

ADD ${params.target_dir}/project_dir /opt/tomcat/webapps/${params.root_dir}"""

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"

}

}

}

}

2.3. 构建dubbo-demo-web

2.4. 部署微服务

当前微服务启动时,需要依赖于 Apollo、dubbo-demo-service部件。

参考:https://www.yuque.com/duduniao/ww8pmw/eaw7s4

[root@hdss7-200 ~]# cat /data/k8s-yaml/dev/dubbo-demo-consumer/deployment.yaml # 只改镜像

......

image: harbor.od.com/app/dubbo-demo-consumer:tomcat_20200209_1149

......

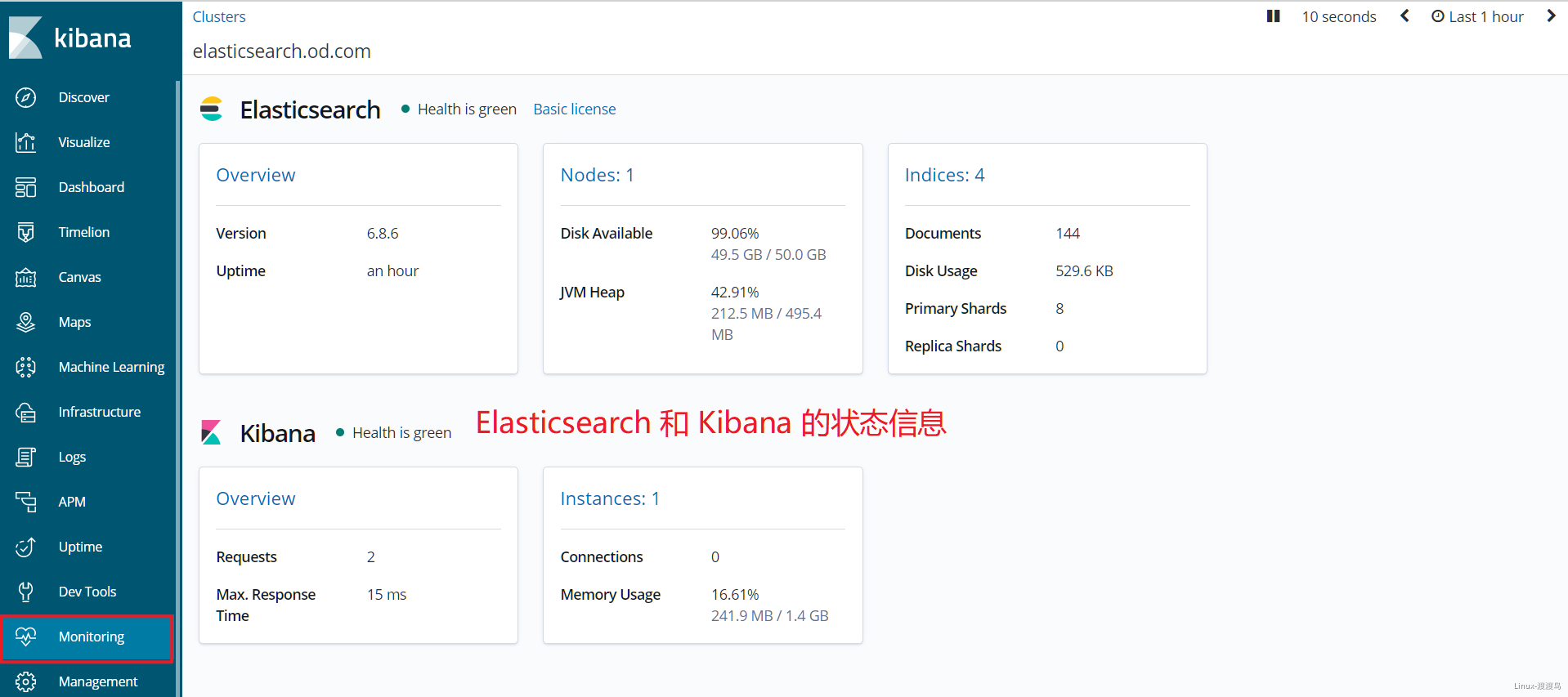

2. 部署Elasticsearch

Elasticsearch 是一个有状态的服务,不建议部署在Kubernetes集群中,本次实验采用单节点部署Elasticsearch,部署节点为 hdss7-12.host.com (10.4.7.12)。

[root@hdss7-12 src]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.6.tar.gz

[root@hdss7-12 src]# tar -xf elasticsearch-6.8.6.tar.gz -C /opt/release/

[root@hdss7-12 src]# ln -s /opt/release/elasticsearch-6.8.6 /opt/apps/elasticsearch

[root@hdss7-12 ~]# grep -Ev "^$|^#" /opt/apps/elasticsearch/config/elasticsearch.yml # 调整以下配置

cluster.name: elasticsearch.od.com

node.name: hdss7-12.host.com

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

network.host: 10.4.7.12

http.port: 9200

[root@hdss7-12 ~]# vim /opt/apps/elasticsearch/config/jvm.options # 默认1g

......

# 生产中一般不超过32G

-Xms512m

-Xmx512m

[root@hdss7-12 ~]# useradd -M es

[root@hdss7-12 ~]# mkdir -p /data/elasticsearch/{logs,data}

[root@hdss7-12 ~]# chown -R es.es /data/elasticsearch /opt/release/elasticsearch-6.8.6

[root@hdss7-12 ~]# vim /etc/security/limits.conf

......

es hard nofile 65536

es soft fsize unlimited

es hard memlock unlimited

es soft memlock unlimited

[root@hdss7-12 ~]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf ; sysctl -p

[root@hdss7-12 ~]# su es -c "/opt/apps/elasticsearch/bin/elasticsearch -d" # 启动

[root@hdss7-12 ~]# su es -c "/opt/apps/elasticsearch/bin/elasticsearch -d -p /data/elasticsearch/logs/pid" # 指定pid记录的文件

[root@hdss7-12 ~]# netstat -lntp | grep 9.00

tcp6 0 0 10.4.7.12:9200 :::* LISTEN 69352/java

tcp6 0 0 10.4.7.12:9300 :::* LISTEN 69352/java

[root@hdss7-12 ~]# su es -c "ps aux|grep -v grep|grep java|grep elasticsearch|awk '{print \$2}'|xargs kill" # kill Pid

[root@hdss7-12 ~]# pkill -F /data/elasticsearch/logs/pid

# 添加k8s日志索引模板

[root@hdss7-12 ~]# curl -H "Content-Type:application/json" -XPUT http://10.4.7.12:9200/_template/k8s -d '{

"template" : "k8s*",

"index_patterns": ["k8s*"],

"settings": {

"number_of_shards": 5,

"number_of_replicas": 0

}

}'

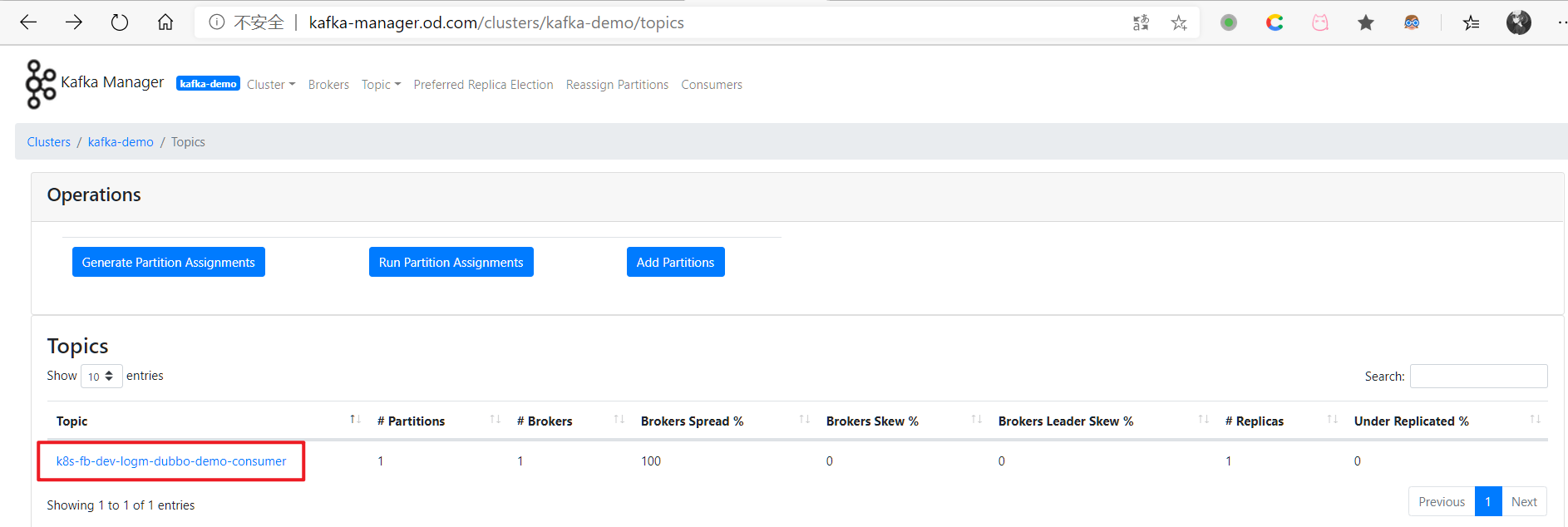

3. 部署Kafka

3.1. 部署Kafka

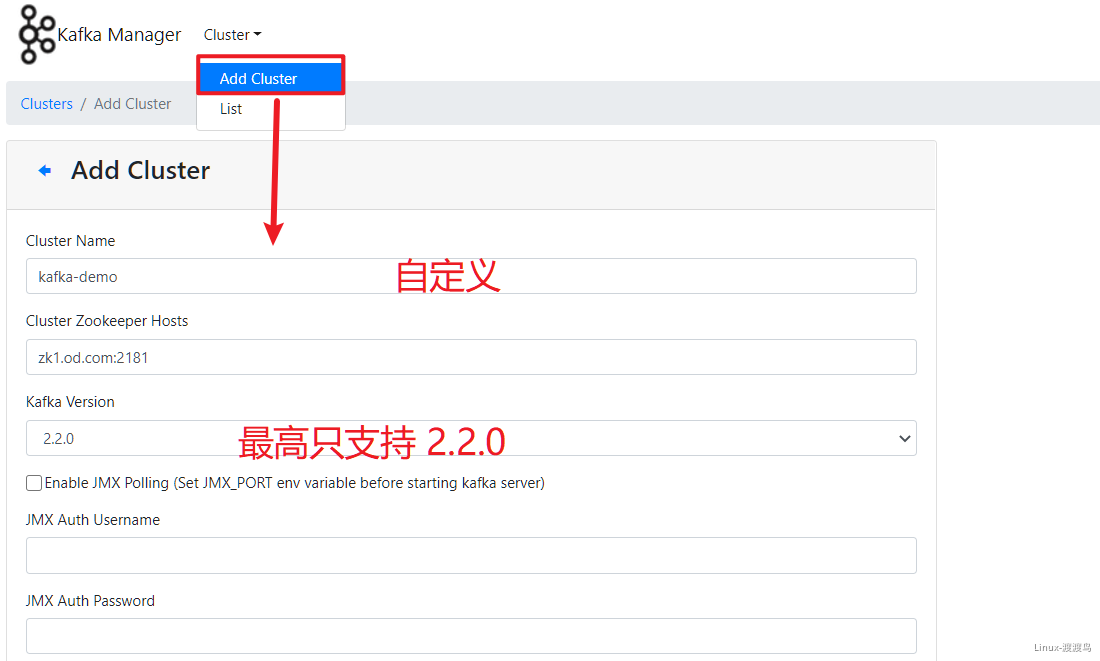

Kafka需要是有状态的服务,一般部署在Kubernetes之外,本次部署在 hdss7-11.host.com(10.4.7.11)。由于后面需要部署的Kafka-manager只支持到 2.2.0 版本,因此这次部署采用kafka_2.12-2.2.0版本,其中2.12为Scala版本号。

[root@hdss7-11 src]# wget https://archive.apache.org/dist/kafka/2.2.0/kafka_2.12-2.2.0.tgz

[root@hdss7-11 src]# tar -xf kafka_2.12-2.2.0.tgz -C /opt/release/

[root@hdss7-11 src]# ln -s /opt/release/kafka_2.12-2.2.0 /opt/apps/kafka

[root@hdss7-11 ~]# vim /opt/apps/kafka/config/server.properties

......

log.dirs=/data/kafka/logs

# 超过10000条日志强制刷盘,超过1000ms刷盘

log.flush.interval.messages=10000

log.flush.interval.ms=1000

# 填写需要连接的 zookeeper 集群地址,当前连接本地的 zk 集群。

zookeeper.connect=localhost:2181

# 新增以下两项

delete.topic.enable=true

host.name=hdss7-11.host.com

[root@hdss7-11 ~]# mkdir -p /data/kafka/logs

[root@hdss7-11 ~]# /opt/apps/kafka/bin/kafka-server-start.sh -daemon /opt/apps/kafka/config/server.properties

[root@hdss7-11 ~]# netstat -lntp|grep 121952

tcp6 0 0 10.4.7.11:9092 :::* LISTEN 121952/java

tcp6 0 0 :::41211 :::* LISTEN 121952/java

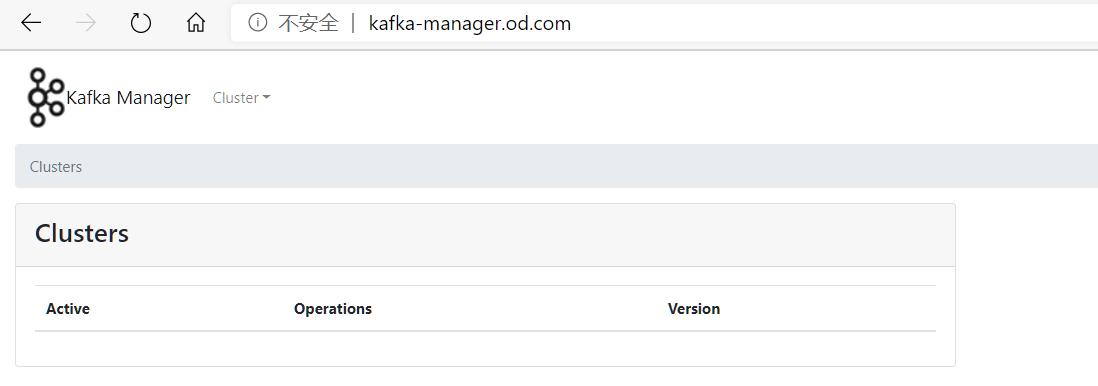

3.2. 部署Kafka-manager

Kafka-manager是一款管理Kafka集群的软件,建议安装,github地址: https://github.com/yahoo/CMAK。如果采用交付到k8s集群,会稍微麻烦一些。

3.2.1. 镜像制作

# 这里存在几个问题:

# 1. kafka-manager 改名为 CMAK,压缩包名称和内部目录名发生了变化

# 2. sbt 编译需要下载很多依赖,因为不可描述的原因,速度非常慢,个人非VPN网络大概率失败

# 3. 因本人不具备VPN条件,编译失败。又因为第一条,这个dockerfile大概率需要修改

# 4. 生产环境中一定要自己重新做一份!

FROM hseeberger/scala-sbt

ENV ZK_HOSTS=localhost:2181 \

KM_VERSION=2.0.0.2

RUN mkdir -p /tmp && \

cd /tmp && \

wget https://github.com/yahoo/kafka-manager/archive/${KM_VERSION}.tar.gz && \

tar xf ${KM_VERSION}.tar.gz && \

cd /tmp/kafka-manager-${KM_VERSION} && \

sbt clean dist && \

unzip -d / ./target/universal/kafka-manager-${KM_VERSION}.zip && \

rm -fr /tmp/${KM_VERSION} /tmp/kafka-manager-${KM_VERSION}

WORKDIR /kafka-manager-${KM_VERSION}

EXPOSE 9000

ENTRYPOINT ["./bin/kafka-manager","-Dconfig.file=conf/application.conf"]

[root@hdss7-200 ~]# docker image pull linuxduduniao/kafka-manager:v2.0.0.2

[root@hdss7-200 ~]# docker image tag linuxduduniao/kafka-manager:v2.0.0.2 harbor.od.com/public/kafka-manager:v2.0.0.2

[root@hdss7-200 ~]# docker image push harbor.od.com/public/kafka-manager:v2.0.0.2

3.2.2. 资源配置清单

apiVersion: apps/v1

kind: Deployment

metadata:

name: kafka-manager

namespace: infra

labels:

name: kafka-manager

spec:

replicas: 1

selector:

matchLabels:

app: kafka-manager

template:

metadata:

labels:

app: kafka-manager

spec:

containers:

- name: kafka-manager

image: harbor.od.com/public/kafka-manager:v2.0.0.2

ports:

- containerPort: 9000

protocol: TCP

env:

- name: ZK_HOSTS

value: zk1.od.com:2181

- name: APPLICATION_SECRET

value: letmein

apiVersion: v1

kind: Service

metadata:

name: kafka-manager

namespace: infra

spec:

ports:

- protocol: TCP

port: 9000

targetPort: 9000

selector:

app: kafka-manager

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: kafka-manager

namespace: infra

spec:

rules:

- host: kafka-manager.od.com

http:

paths:

- path: /

backend:

serviceName: kafka-manager

servicePort: 9000

3.2.3. 应用资源配置清单

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kafka-manager/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kafka-manager/service.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kafka-manager/ingress.yaml

[root@hdss7-11 ~]# cat /var/named/od.com.zone

......

kafka-manager A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# host kafka-manager.od.com

kafka-manager.od.com has address 10.4.7.10

4. 使用filebeat

4.1. 制作filebeat镜像

# 当前的Dockerfile 是从老男孩教育的资料中拷贝的

# 官网有现成的docker镜像可以考虑使用: https://www.elastic.co/guide/en/beats/filebeat/current/running-on-docker.html

FROM debian:jessie

ENV FILEBEAT_VERSION=7.4.0 \

FILEBEAT_SHA1=c63bb1e16f7f85f71568041c78f11b57de58d497ba733e398fa4b2d071270a86dbab19d5cb35da5d3579f35cb5b5f3c46e6e08cdf840afb7c347777aae5c4e11

RUN set -x && \

apt-get update && \

apt-get install -y wget && \

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-${FILEBEAT_VERSION}-linux-x86_64.tar.gz -O /opt/filebeat.tar.gz && \

cd /opt && \

echo "${FILEBEAT_SHA1} filebeat.tar.gz" | sha512sum -c - && \

tar xzvf filebeat.tar.gz && \

cd filebeat-* && \

cp filebeat /bin && \

cd /opt && \

rm -rf filebeat* && \

apt-get purge -y wget && \

apt-get autoremove -y && \

apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

COPY docker-entrypoint.sh /

ENTRYPOINT ["/docker-entrypoint.sh"]

# 因为通过wget下载filebeat速度太慢,采用windows环境中下载好的压缩包直接ADD到镜像中

FROM debian:jessie

ADD filebeat-7.4.0-linux-x86_64.tar.gz /opt/

RUN set -x && cp /opt/filebeat-*/filebeat /bin && rm -fr /opt/filebeat*

COPY entrypoint.sh /

ENTRYPOINT ["/entrypoint.sh"]

[root@hdss7-200 filebeat]# cat entrypoint.sh

#!/bin/bash

ENV=${ENV:-"dev"} # 运行环境

PROJ_NAME=${PROJ_NAME:-"no-define"} # project 名称,关系到topic

MULTILINE=${MULTILINE:-"^\d{2}"} # 多行匹配,根据日志格式来定

KAFKA_ADDR=${KAFKA_ADDR:-'"10.4.7.11:9092"'}

cat > /etc/filebeat.yaml << EOF

filebeat.inputs:

- type: log

fields_under_root: true

fields:

topic: logm-${PROJ_NAME}

paths:

- /logm/*.log

- /logm/*/*.log

- /logm/*/*/*.log

- /logm/*/*/*/*.log

- /logm/*/*/*/*/*.log

scan_frequency: 120s

max_bytes: 10485760

multiline.pattern: '$MULTILINE'

multiline.negate: true

multiline.match: after

multiline.max_lines: 100

- type: log

fields_under_root: true

fields:

topic: logu-${PROJ_NAME}

paths:

- /logu/*.log

- /logu/*/*.log

- /logu/*/*/*.log

- /logu/*/*/*/*.log

- /logu/*/*/*/*/*.log

- /logu/*/*/*/*/*/*.log

output.kafka:

hosts: [${KAFKA_ADDR}]

topic: k8s-fb-$ENV-%{[topic]}

version: 2.0.0

required_acks: 0

max_message_bytes: 10485760

EOF

set -xe

if [[ "$1" == "" ]]; then

exec filebeat -c /etc/filebeat.yaml

else

exec "$@"

fi

[root@hdss7-200 filebeat]# chmod +x entrypoint.sh

[root@hdss7-200 filebeat]# docker image build . -t harbor.od.com/public/filebeat:v7.4.0

[root@hdss7-200 filebeat]# docker image push harbor.od.com/public/filebeat:v7.4.0

4.2. 运行dubbo消费者

# 本deployment修改的是 apollo 章节中 dev 环境下 dubbo-demo-consumer 服务

# 需要apollo、生产者启动

# reference: https://www.yuque.com/duduniao/ww8pmw/pvwdlq#CPLkT

apiVersion: apps/v1

kind: Deployment

metadata:

name: dubbo-demo-consumer

namespace: dev

labels:

name: dubbo-demo-consumer

spec:

replicas: 1

selector:

matchLabels:

name: dubbo-demo-consumer

template:

metadata:

labels:

app: dubbo-demo-consumer

name: dubbo-demo-consumer

spec:

containers:

- name: dubbo-demo-consumer

image: harbor.od.com/app/dubbo-demo-consumer:tomcat_20200209_1149

ports:

- containerPort: 8080

protocol: TCP

env:

- name: C_OPTS

value: -Denv=dev -Dapollo.meta=http://config-dev.od.com

volumeMounts:

- mountPath: /opt/tomcat/logs

name: logm

- name: filebeat

image: harbor.od.com/public/filebeat:v7.4.0

env:

- name: ENV

value: dev

- name: PROJ_NAME

value: dubbo-demo-consumer

- name: KAFKA_ADDR

value: '"10.4.7.11:9092"'

volumeMounts:

- mountPath: /logm

name: logm

volumes:

- emptyDir: {}

name: logm

imagePullSecrets:

- name: harbor

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dev/dubbo-demo-consumer/deployment.yaml

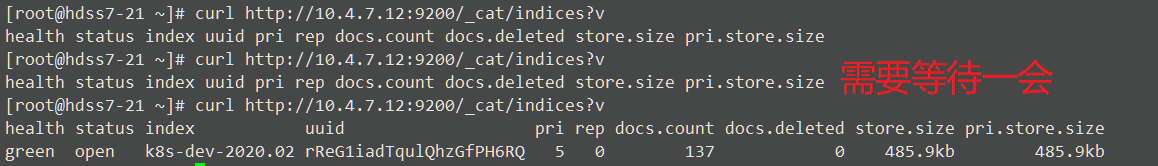

5. 部署logstash

5.1. 制作logstash镜像

[root@hdss7-200 ~]# docker image pull logstash:6.8.3

[root@hdss7-200 ~]# docker image tag logstash:6.8.3 harbor.od.com/public/logstash:v6.8.3

[root@hdss7-200 ~]# docker image push harbor.od.com/public/logstash:v6.8.3

5.2. 启动logstash

# 启动logstash,可以交付到k8s中,相关配置采用configmap方式挂载

# k8s在不同项目的名称空间中,可以创建不同的logstash,制定不同的索引

[root@hdss7-200 ~]# cat /etc/logstash/logstash-dev.conf

input {

kafka {

bootstrap_servers => "10.4.7.11:9092"

client_id => "10.4.7.200"

consumer_threads => 4

group_id => "k8s_dev"

topics_pattern => "k8s-fb-dev-.*"

}

}

filter {

json {

source => "message"

}

}

output {

elasticsearch {

hosts => ["10.4.7.12:9200"]

index => "k8s-dev-%{+YYYY.MM}"

}

}

[root@hdss7-200 ~]# docker run -d --name logstash-dev -v /etc/logstash:/etc/logstash harbor.od.com/public/logstash:v6.8.3 -f /etc/logstash/logstash-dev.conf

024fa2bda8157710212a860a68ca869ffc09fef706907ca63e8b920db394cade

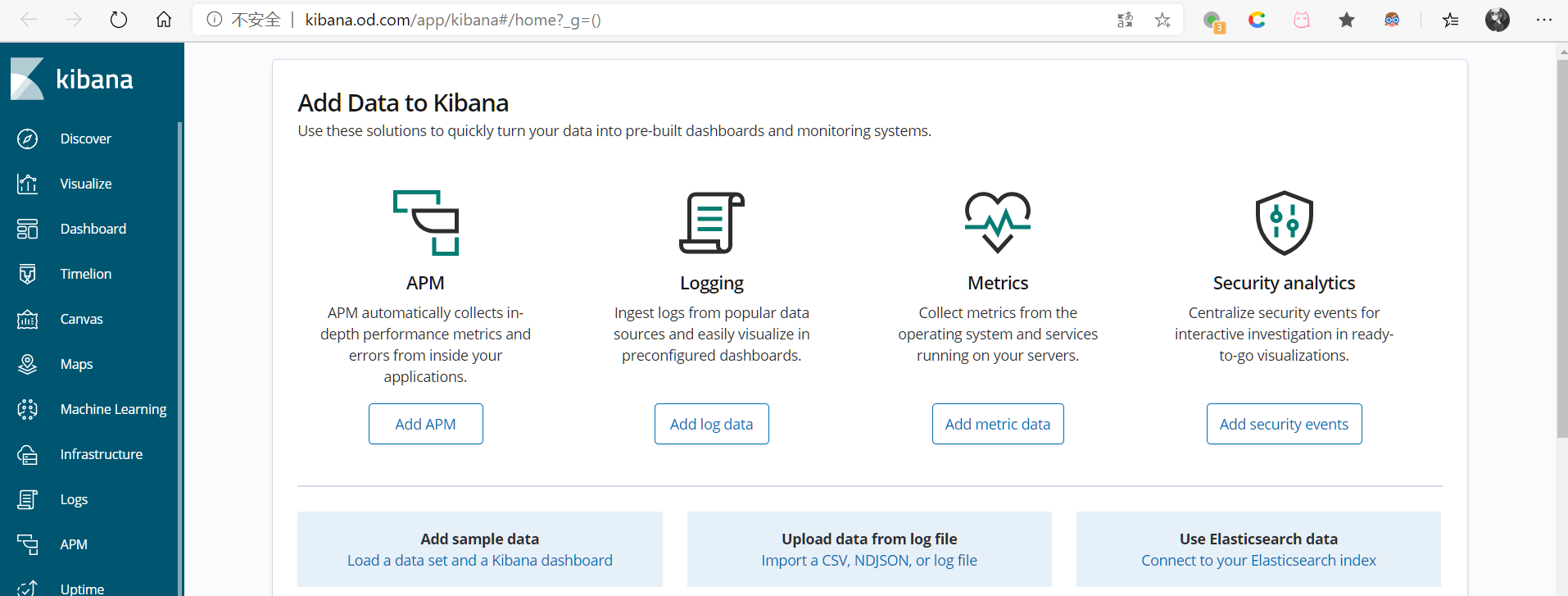

6. Kibana部署

6.1. 准备镜像

[root@hdss7-200 ~]# docker pull kibana:6.8.3

[root@hdss7-200 ~]# docker image tag kibana:6.8.3 harbor.od.com/public/kibana:v6.8.3

[root@hdss7-200 ~]# docker image push harbor.od.com/public/kibana:v6.8.3

6.2. 准备资源配置清单

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana

namespace: infra

labels:

name: kibana

spec:

replicas: 1

selector:

matchLabels:

name: kibana

template:

metadata:

labels:

app: kibana

name: kibana

spec:

containers:

- name: kibana

image: harbor.od.com/public/kibana:v6.8.3

ports:

- containerPort: 5601

protocol: TCP

env:

- name: ELASTICSEARCH_URL

value: http://10.4.7.12:9200

apiVersion: v1

kind: Service

metadata:

name: kibana

namespace: infra

spec:

ports:

- protocol: TCP

port: 80

targetPort: 5601

selector:

app: kibana

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: kibana

namespace: infra

spec:

rules:

- host: kibana.od.com

http:

paths:

- path: /

backend:

serviceName: kibana

servicePort: 80

6.3. 应用资源配置清单

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kibana/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kibana/ingress.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/devops/ELK/kibana/service.yaml

[root@hdss7-11 ~]# vim /var/named/od.com.zone

......

kibana A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

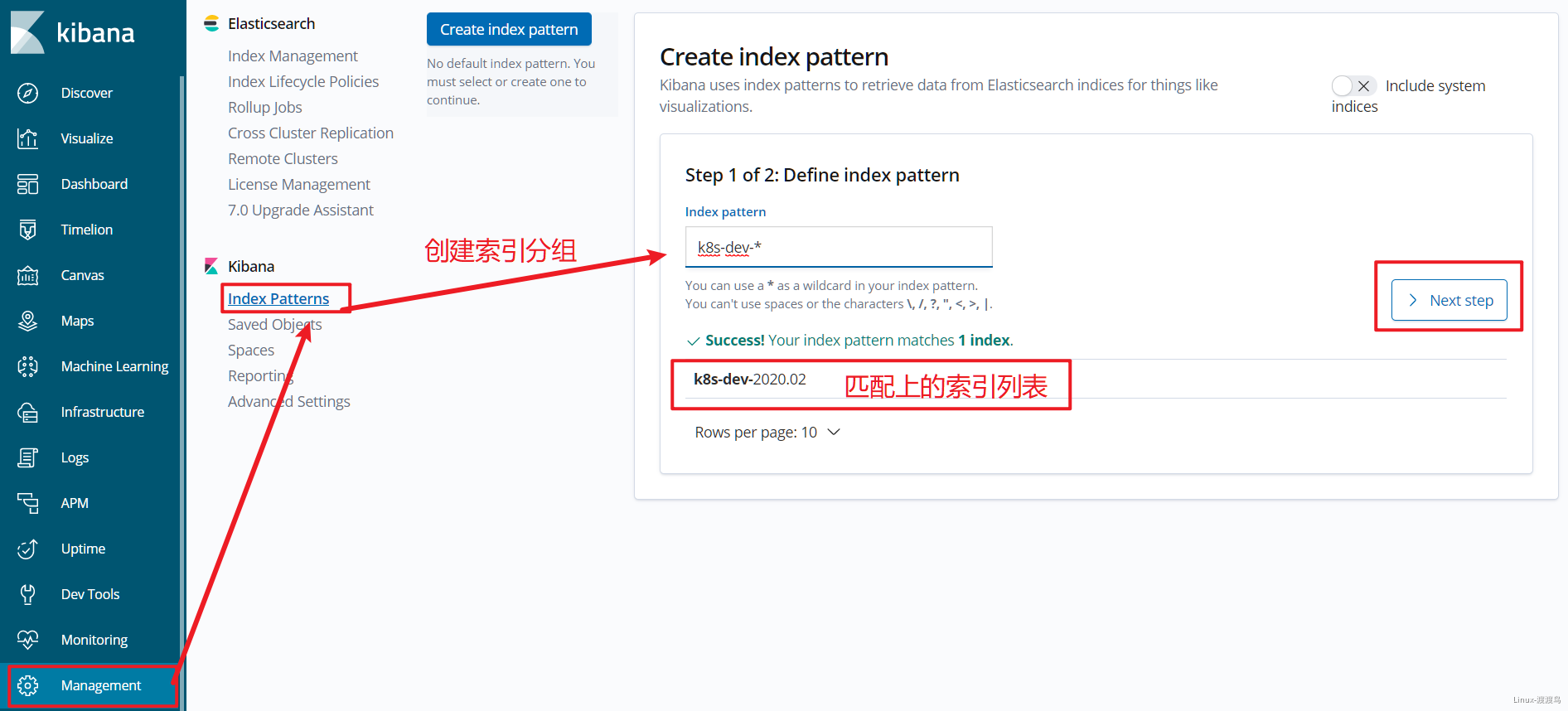

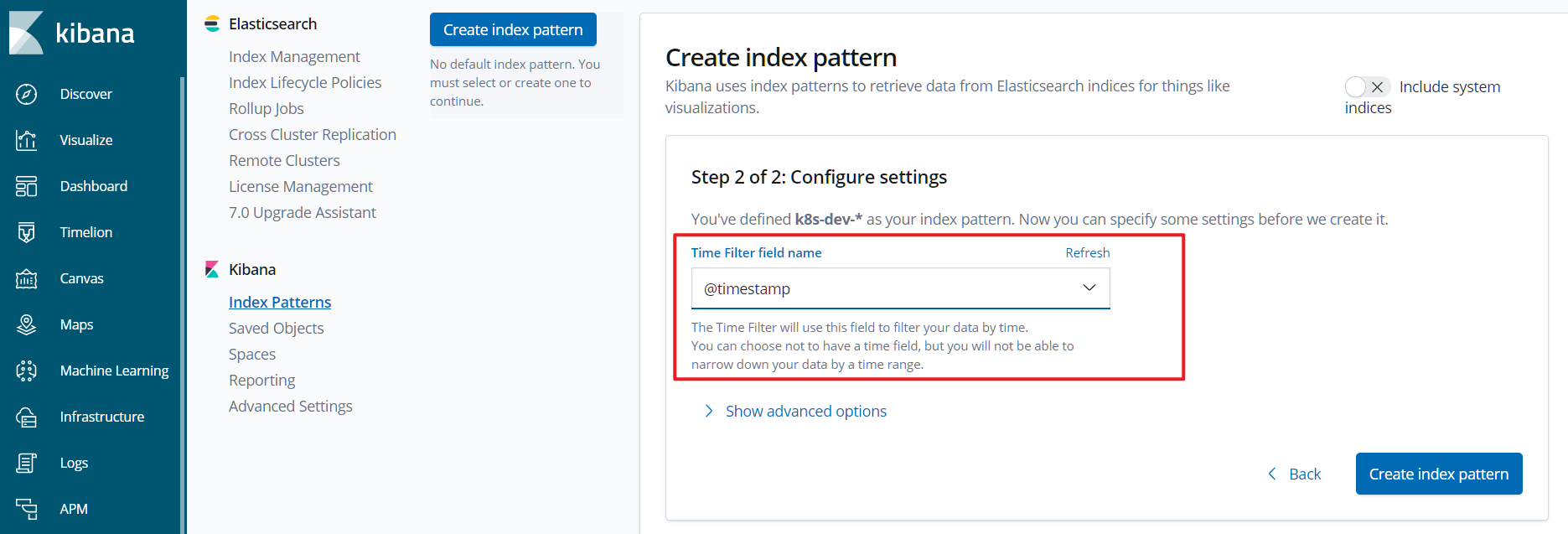

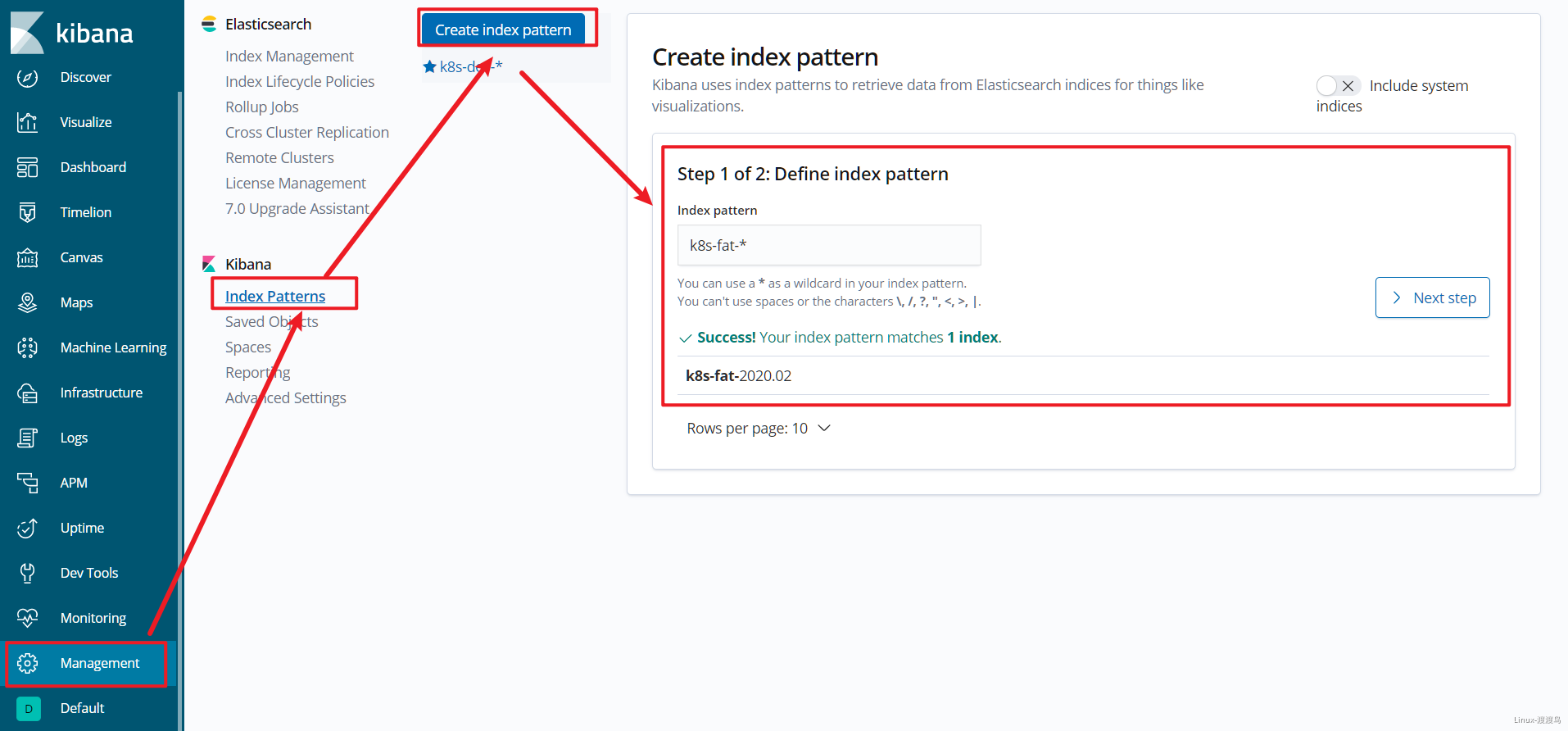

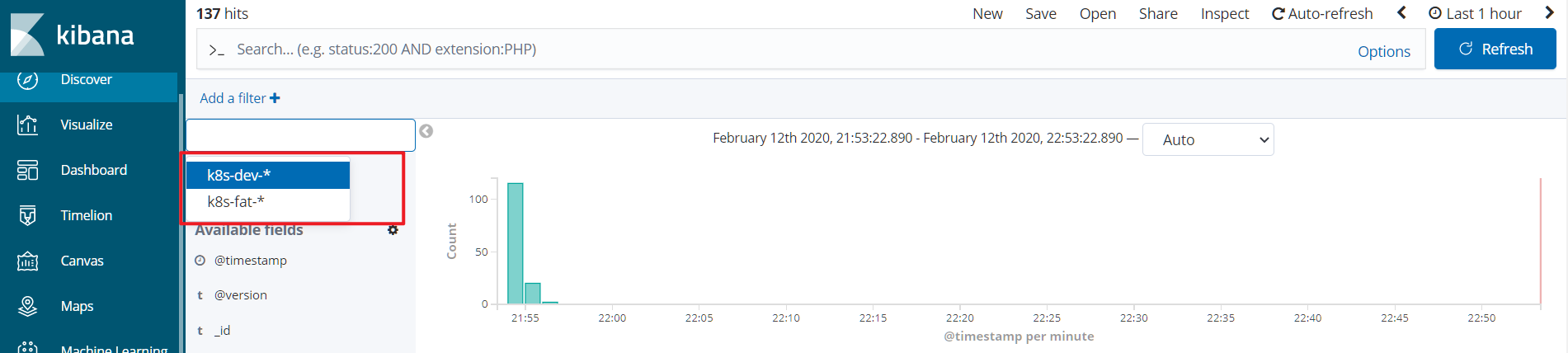

6.4. 配置Kibana索引分组

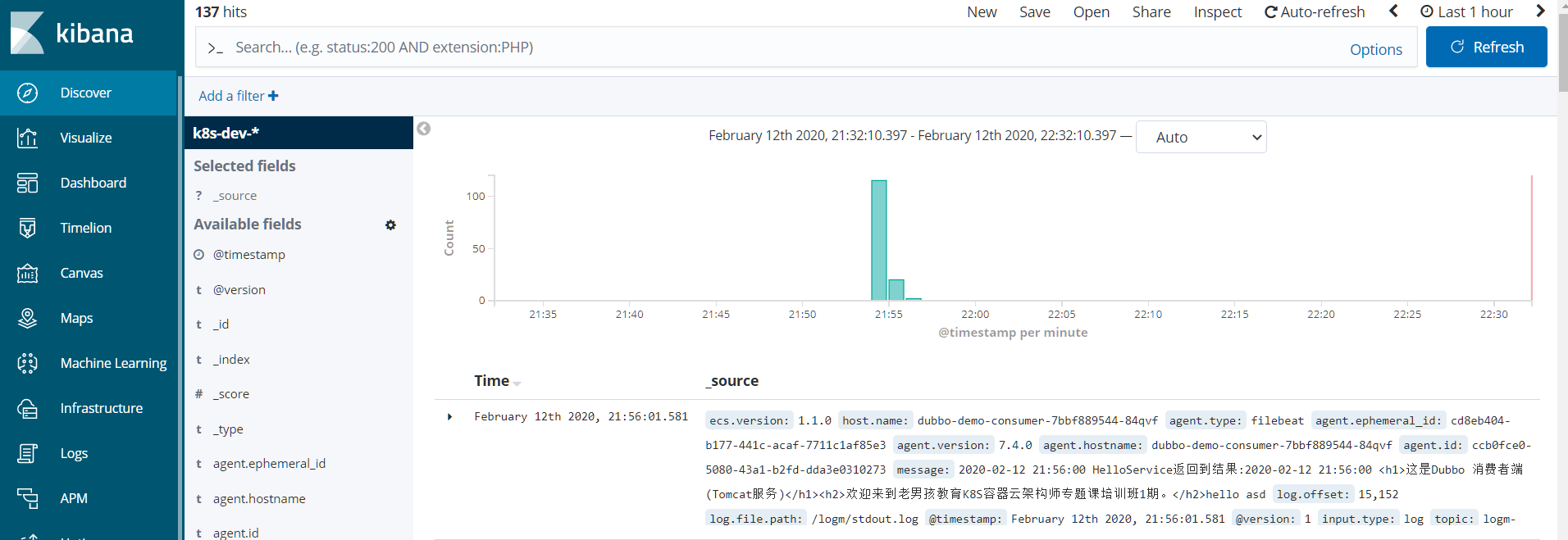

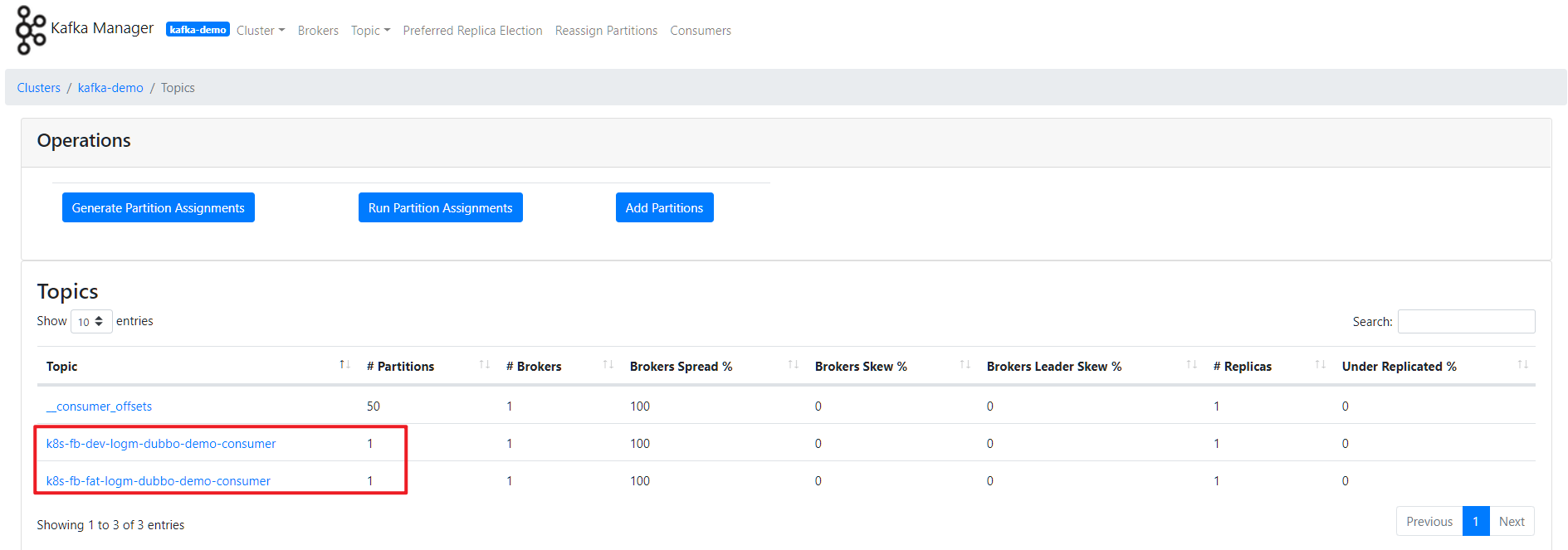

6.5. 多环境收日志

# 环境准备分为两步

# 1. 启动测试环境(fat)中dubbo-demo-consumer(tomcat)版本pod,参考4.2

# 2. 启动收集fat日志的logstash

[root@hdss7-200 ~]# docker run -d --name logstash-fat -v /etc/logstash:/etc/logstash harbor.od.com/public/logstash:v6.8.3 -f /etc/logstash/logstash-fat.conf

[root@hdss7-21 ~]# curl http://10.4.7.12:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open k8s-dev-2020.02 rReG1iadTqulQhzGfPH6RQ 5 0 137 0 488.3kb 488.3kb

green open k8s-fat-2020.02 8TaKkfvCQzeDgSOba2-R4Q 5 0 8 0 63kb 63kb

......

7. 说明

当前实验中,仅仅是演示一种在Kubernetes环境下日志采集方案,实际生产环境下,需要对日志字段进行更加详细的补充和拆分,否则无法高效的检索和排错,一般在两个方面去实现,在filebeat输入侧添加更多的字段信息,在logstash侧进行日志字段拆分和清洗。另外还需要在ELK进行安全加固。这部分内容可以参考我的另一篇ELK博客,实现举一反三。

https://www.yuque.com/docs/share/36e6efbc-4eea-4082-973f-631eca4cdde5?# 《01-1-ELK集群安装》