03-3-Pod Controllers

1. Pod

1.1. About Pods

1.1.1. Pod Overview

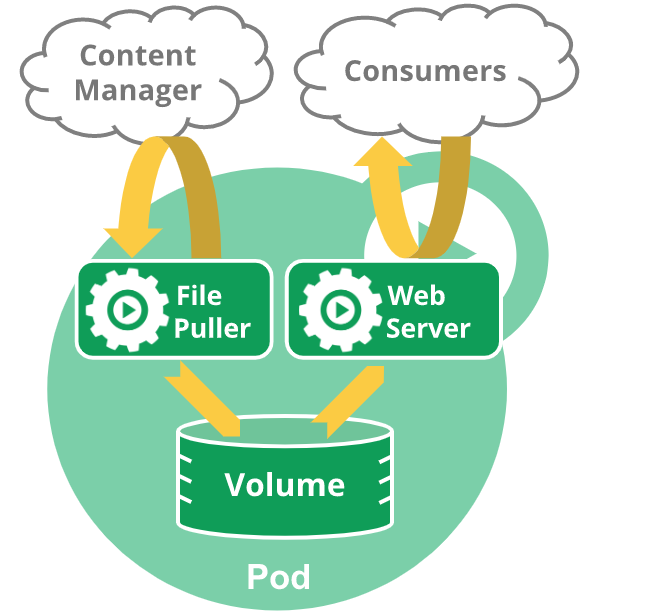

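A Pod is the basic building block of Kubernetes: the smallest and simplest unit in the Kubernetes object model that you create or deploy. A Pod represents processes running on the cluster. It encapsulates an application container (or, in some cases, multiple containers), storage resources, a unique network IP, and options that govern how the containers should run. A Pod is a unit of deployment: a single instance of an application in Kubernetes, which may consist of a single container or a small number of tightly coupled containers that share resources.

A Pod usually runs a single container, but it can run several. When there are several, one is the main container and the others act as auxiliary containers; this is known as the sidecar pattern. All containers in the same Pod share one network namespace and can mount the same storage volumes.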
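A minimal sketch of the sidecar pattern described above. It assumes a busybox image and uses hypothetical names (sidecar-demo, app, log-tailer, shared-logs). The main container appends to a log file on a shared emptyDir volume, and the sidecar reads the same file through its own mount point.

```yaml
# Hypothetical Pod illustrating a main container plus a sidecar sharing a volume
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-demo
spec:
  volumes:
  - name: shared-logs
    emptyDir: {}                  # scratch volume that lives as long as the Pod
  containers:
  - name: app                     # main container: writes a line every 5 seconds
    image: busybox:latest
    command: ["/bin/sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
    volumeMounts:
    - name: shared-logs
      mountPath: /var/log/app
  - name: log-tailer              # sidecar: follows the file written by the main container
    image: busybox:latest
    command: ["/bin/sh", "-c", "touch /logs/app.log; tail -f /logs/app.log"]
    volumeMounts:
    - name: shared-logs
      mountPath: /logs
```

The two containers also share the Pod's network namespace, so a sidecar could instead reach the main container over localhost.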

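1.1.2. Pod Lifecycle

A Pod goes through several stages during its lifecycle. Before the main container starts, init containers can perform initialization tasks. When initialization is complete, the init containers exit and the main container starts.

After the main container starts, specific commands can be run; this is the post-start hook (PostStart). Before the main container exits, special commands can also be run to clean up; this is the pre-stop hook (PreStop).

A main container is not necessarily able to serve requests the moment it is created, because it may first need to load configuration. A specific check can therefore determine whether the container is ready; this is the readiness probe (ReadinessProbe). The container becomes Ready only after the readiness probe succeeds.

After the main container starts serving, something unexpected may leave it broken: the container is still running, but it can no longer serve requests. A liveness probe (LivenessProbe) detects this situation.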

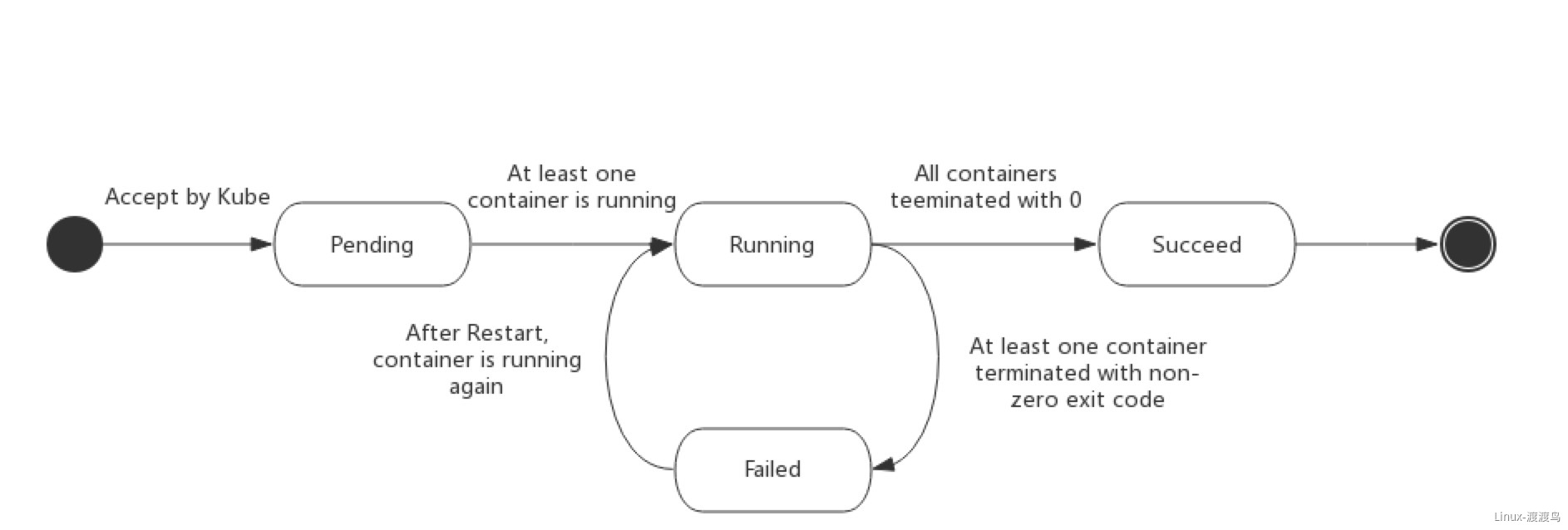
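The lifecycle stages above map onto Pod fields roughly as in the sketch below. The Pod name, the nginx:1.25 image tag and the file paths are assumptions for illustration. The init container prepares a page in a shared emptyDir, the two hooks run around the main container, and the two probes control readiness and restarts.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo            # hypothetical name
spec:
  initContainers:
  - name: init-page               # runs to completion before the main container starts
    image: busybox:latest
    command: ["/bin/sh", "-c", "echo 'prepared by init container' > /work/index.html"]
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  - name: web                     # main container (assumed image tag)
    image: nginx:1.25
    volumeMounts:
    - name: workdir
      mountPath: /usr/share/nginx/html
    lifecycle:
      postStart:                  # runs right after the container is created
        exec:
          command: ["/bin/sh", "-c", "echo ok > /usr/share/nginx/html/health.html"]
      preStop:                    # runs before the container is sent SIGTERM
        exec:
          command: ["/bin/sh", "-c", "sleep 5"]   # e.g. give in-flight requests time to drain
    readinessProbe:               # the Pod becomes Ready only after this succeeds
      httpGet:
        path: /health.html
        port: 80
      initialDelaySeconds: 5
    livenessProbe:                # repeated failures make the kubelet restart the container
      httpGet:
        path: /health.html
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 5
  volumes:
  - name: workdir
    emptyDir: {}
```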

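1.1.3. Pod Status

- Pending: The Pod has been accepted by the Kubernetes system, but one or more of its containers have not yet been created.

- Running: The Pod has been bound to a node and all of its containers have been created. At least one container is running, or is in the process of starting or restarting.

- Succeeded: All containers in the Pod have terminated successfully and will not be restarted.

- Failed: All containers in the Pod have terminated, and at least one of them terminated in failure.

- Unknown: The state of the Pod could not be obtained for some reason, typically because communication with the host where the Pod runs failed.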

1.2. Pod Template

1.2.1. apiVersion/kind

apiVersion: v1
kind: Pod

1.2.2. metadata

metadata
  name <string> # must be unique within a namespace
  namespace <string> # namespace; defaults to default
  labels <map[string]string> # labels
  annotations <map[string]string> # annotations; cannot be used for selection

1.2.3. spec

spec

containers <[]Object> -required- # 必选参数

name <string> -required- # 指定容器名称,不可更新

image <string> -required- # 指定镜像

imagePullPolicy <string> # 指定镜像拉取方式

# Always: 始终从registory拉取镜像。如果镜像标签为latest,则默认值为Always

# Never: 仅使用本地镜像

# IfNotPresent: 本地不存在镜像时才去registory拉取。默认值

env <[]Object> # 指定环境变量

name <string> -required- # 变量名称

value <string> # 变量值

valueFrom <Object> # 从文件中读取,不常用

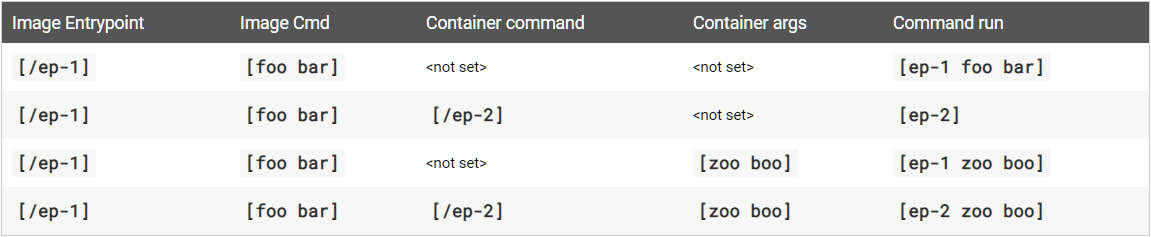

command <[]string> # 以数组方式指定容器运行指令,替代docker的ENTRYPOINT指令

args <[]string> # 以数组方式指定容器运行参数,替代docker的CMD指令

workingDir <string> # 指定工作目录,不指定则使用镜像默认值

ports <[]Object> # 指定容器暴露的端口

containerPort <integer> -required- # 容器的监听端口

name <string> # 为端口取名,该名称可以在service种被引用

protocol <string> # 指定协议:UDP, TCP, SCTP,默认TCP

hostIP <string> # 绑定到宿主机的某个IP

hostPort <integer> # 绑定到宿主机的端口

resources <Object> # 资源设置

limits <map[string]string> # 消耗的最大资源限制,通常设置cpu和memory

requests <map[string]string> # 最低资源要求,在scheduler中被用到,通常设置cpu和memory

volumeMounts <[]Object> # 指定存储卷挂载

name <string> -required- # 存储卷名称

mountPath <string> -required- # 容器内挂载路径

subPath <string> # 存储卷的子目录

readOnly <boolean> # 是否为只读方式挂载

volumeDevices <[]Object> # 配置块设备的挂载

devicePath <string> -required- # 容器内挂载路径

name <string> -required- # pvc名称

readinessProbe <Object> # 就绪性探测,确认就绪后提供服务

initialDelaySeconds <integer> # 容器启动后到开始就绪性探测中间的等待秒数

periodSeconds <integer> # 两次探测的间隔多少秒,默认值为10

successThreshold <integer> # 连续多少次检测成功认为容器正常,默认值为1。不支持修改

failureThreshold <integer> # 连续多少次检测失败认为容器异常,默认值为3

timeoutSeconds <integer> # 探测请求超时时间

exec <Object> # 通过执行特定命令来探测容器健康状态

command <[]string> # 执行命令,返回值为0表示健康,不自持shell模式

tcpSocket <Object> # 检测TCP套接字

host <string> # 指定检测地址,默认pod的IP

port <string> -required- # 指定检测端口

httpGet <Object> # 以HTTP请求方式检测

host <string> # 指定检测地址,默认pod的IP

httpHeaders <[]Object> # 设置请求头,很少会需要填写

path <string> # 设置请求的location

port <string> -required- # 指定检测端口

scheme <string> # 指定协议,默认HTTP

livenessProbe <Object> # 存活性探测,确认pod是否具备对外服务的能力,该对象中字段和readinessProbe一致

lifecycle <Object> # 生命周期

postStart <Object> # pod启动后钩子,执行指令或者检测失败则退出容器或者重启容器

exec <Object> # 执行指令,参考readinessProbe.exec

httpGet <Object> # 执行HTTP,参考readinessProbe.httpGet

tcpSocket <Object> # 检测TCP套接字,参考readinessProbe.tcpSocket

preStop <Object> # pod停止前钩子,停止前执行清理工作,该对象中字段和postStart一致

startupProbe <Object> # 容器启动完毕的配置,该配置与readinessProbe一致,在lifecycle和Probe之前运行,失败则重启

securityContext <Object> # 与容器安全相关的配置,如运行用户、特权模式等

initContainers <[]Object> # 初始化容器,执行完毕会退出,用户数据迁移、文件拷贝等

volumes <[]Object> # 存储卷配置,https://www.yuque.com/duduniao/k8s/vgms23#Ptdfs

restartPolicy <string> # Pod重启策略,Always, OnFailure,Never,默认Always

nodeName <string> # 调度到指定的node节点, 强制要求满足

nodeSelector <map[string]string> # 指定预选的node节点, 强制要求满足

affinity <Object> # 调度亲和性配置

nodeAffinity <Object> # node亲和性配置

preferredDuringSchedulingIgnoredDuringExecution <[]Object> # 首选配置

preference <Object> -required- # 亲和偏好

matchExpressions <[]Object> # 表达式匹配

key <string> -required- # label的key

values <[]string> # label的value,当operator为Exists和DoesNotExist时为空

operator <string> -required- # key和value的连接符,In,NotIn,Exists,DoesNotExist,Gt,Lt

matchFields <[]Object> # 字段匹配,与matchExpressions一致

weight <integer> -required- # 权重

requiredDuringSchedulingIgnoredDuringExecution <Object> # 强制要求的配置

nodeSelectorTerms <[]Object> -required- # nodeselect配置,与preferredDuringSchedulingIgnoredDuringExecution.preference一致

podAffinity <Object> # pod亲和性配置

preferredDuringSchedulingIgnoredDuringExecution <[]Object> # 首选配置

podAffinityTerm <Object> -required- # 选择器

labelSelector <Object> # pod标签选择器

matchExpressions<[]Object> # 表达式匹配

matchLabels <map[string]string> # 标签匹配

namespaces <[]string> # 对方Pod的namespace,为空时表示与当前Pod同一名称空间

topologyKey <string> -required- # 与对方Pod亲和的Node上具备的label名称

weight <integer> -required- # 权重

requiredDuringSchedulingIgnoredDuringExecution <[]Object> # 强制配置,与requiredDuringSchedulingIgnoredDuringExecution.podAffinityTerm一致

podAntiAffinity <Object> # Pod反亲和性配置,与podAffinity一致

tolerations <[]Object> # 污点容忍配置

key <string> # 污点的Key,为空表示所有污点的Key

operator <string> # key和value之间的操作符,Exists,Equal。Exists时value为空,默认值 Equal

value <string> # 污点的值

effect <string> # 污点的影响行为,空表示容忍所有的行为

tolerationSeconds <integer> # 当Pod被节点驱逐时,延迟多少秒

hostname <string> # 指定pod主机名

hostIPC <boolean> # 使用宿主机的IPC名称空间,默认false

hostNetwork <boolean> # 使用宿主机的网络名称空间,默认false

hostPID <boolean> # 使用宿主机的PID名称空间,默认false

serviceAccountName <string> # Pod运行时的使用的serviceAccount

imagePullSecrets <[]Object> # 当拉取私密仓库镜像时,需要指定的密码密钥信息

name <string> # secrets 对象名
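The following hypothetical Pod is a sketch that combines the scheduling fields listed above. The node labels disktype and zone and the taint key dedicated are assumptions and will not exist on every cluster. kubernetes.io/os, kubernetes.io/hostname and node.kubernetes.io/unreachable are well-known Kubernetes labels and taints.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo           # hypothetical name
  labels:
    app: web
spec:
  nodeSelector:
    disktype: ssd                 # assumed node label; must match (together with required affinity)
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values: ["linux"]
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 50                # soft preference; higher weight wins
        preference:
          matchExpressions:
          - key: zone             # assumed node label
            operator: In
            values: ["zone-a"]
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100               # try to avoid nodes already running an app=web Pod
        podAffinityTerm:
          labelSelector:
            matchLabels:
              app: web
          topologyKey: kubernetes.io/hostname
  tolerations:
  - key: dedicated                # assumed taint key
    operator: Equal
    value: web
    effect: NoSchedule
  - key: node.kubernetes.io/unreachable
    operator: Exists
    effect: NoExecute
    tolerationSeconds: 60         # stay bound for 60s after the node becomes unreachable
  containers:
  - name: web
    image: nginx:1.25             # assumed image tag
```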

1.2.4. Commands in Kubernetes vs. in the Image
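The Kubernetes documentation describes how command and args combine with the image's own ENTRYPOINT and CMD. If neither is set, the image defaults are used. If only command is set, both ENTRYPOINT and CMD are ignored. If only args is set, ENTRYPOINT is kept and CMD is replaced. If both are set, both image defaults are ignored. The Pod below is a hypothetical sketch of these cases. It assumes busybox, whose image defines CMD ["sh"] and no ENTRYPOINT.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: command-demo              # hypothetical name
spec:
  restartPolicy: Never
  containers:
  # command only: the image's ENTRYPOINT and CMD are both ignored
  - name: command-only
    image: busybox:latest
    command: ["echo", "set by command"]
  # args only: ENTRYPOINT is kept (busybox has none) and CMD is replaced,
  # so the args alone become the command line
  - name: args-only
    image: busybox:latest
    args: ["echo", "set by args"]
  # command + args: both image defaults are ignored; args are appended to command
  - name: command-and-args
    image: busybox:latest
    command: ["/bin/sh", "-c"]
    args: ["echo set by command and args"]
```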

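1.2.5. Readiness and Liveness Probes

- A failed readiness probe does not restart anything. It only marks the Pod as not Ready, so the Pod stops receiving traffic from Services. A failed liveness probe makes the kubelet restart the container, subject to the Pod's restartPolicy.

- Both probes keep running periodically for the Pod's whole lifetime, until it terminates.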
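Here is a sketch of the probe behaviour described above. It assumes an nginx:1.25 image and a hypothetical Pod name. The startup probe gives the container up to about 60 seconds to open port 80. After that, readiness failures only take the Pod out of rotation, while liveness failures make the kubelet restart the container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo                # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.25             # assumed image tag
    ports:
    - containerPort: 80
    startupProbe:                 # liveness/readiness are held off until this succeeds
      tcpSocket:
        port: 80
      periodSeconds: 5
      failureThreshold: 12        # up to 12 x 5s = 60s allowed for startup
    readinessProbe:               # failure: Pod marked NotReady, no restart
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
      failureThreshold: 3
    livenessProbe:                # failure: kubelet restarts the container
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
      timeoutSeconds: 2
      failureThreshold: 3         # about 30s of consecutive failures before a restart
```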

1.3. Examples

In practice, Pods are rarely created on their own; they are usually created through a controller.

1.3.1. Creating a Simple Pod

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: app
  labels:
    app: centos7
    release: stable
    environment: dev
spec:
  containers:
  - name: centos
    image: harbor.od.com/public/centos:7
    command:
    - /bin/bash
    - -c
    - "sleep 3600"

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/base_resource/pods/myapp.yaml

[root@hdss7-21 ~]# kubectl get pod -o wide -n app
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
pod-demo 1/1 Running 0 16s 172.7.22.2 hdss7-22.host.com <none> <none>

[root@hdss7-21 ~]# kubectl exec pod-demo -n app -- ps uax
USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
root 1 0.0 0.0 4364 352 ? Ss 04:41 0:00 sleep 3600
root 11 0.0 0.0 51752 1696 ? Rs 04:42 0:00 ps uax

[root@hdss7-21 ~]# kubectl describe pod pod-demo -n app | tail
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Scheduled 3m46s default-scheduler Successfully assigned app/pod-demo to hdss7-22.host.com
Normal Pulling 3m45s kubelet, hdss7-22.host.com Pulling image "harbor.od.com/public/centos:7"
Normal Pulled 3m45s kubelet, hdss7-22.host.com Successfully pulled image "harbor.od.com/public/centos:7"
Normal Created 3m45s kubelet, hdss7-22.host.com Created container centos
Normal Started 3m45s kubelet, hdss7-22.host.com Started container centos

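1.3.2. A Pod with a Health Check

apiVersion: v1
kind: Pod
metadata:
  name: pod-01
  namespace: app
  labels:
    app: centos7
    release: stable
    version: t1
spec:
  containers:
  - name: centos
    image: harbor.od.com/public/centos:7
    command:
    - /bin/bash
    - -c
    # create the health file, delete it after 60 seconds, then keep running
    - "echo 'abc' > /tmp/health;sleep 60;rm -f /tmp/health;sleep 600"
    livenessProbe:
      exec:
        command:
        - /bin/bash
        - -c
        # healthy only while /tmp/health exists; once it is removed the probe fails and the container is restarted
        - "[ -f /tmp/health ]"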

2. Deployment

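2.1. Introduction

2.1.1. Overview

There are many kinds of Pod controllers. The earliest was the ReplicationController, which controls the number of Pod replicas. Later versions introduced the ReplicaSet. Apart from the name, it is not fundamentally different from the ReplicationController, except that it supports set-based selectors. A ReplicaSet manages three core things: the desired number of replicas, a label selector, and a Pod template.

A ReplicaSet is rarely used directly; a Deployment is used instead. The Deployment manages ReplicaSets, and each ReplicaSet manages Pods. A Deployment provides a declarative way to define ReplicaSets. It replaces the older ReplicationController as a convenient way to manage applications, is more powerful than a ReplicaSet, and includes all of its functionality. A Deployment supports:

- Defining a Deployment to create Pods and ReplicaSets

- Rolling updates and rollbacks

- Scaling out and scaling in

- Pausing and resuming a rollout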

2.1.2. 部署方式

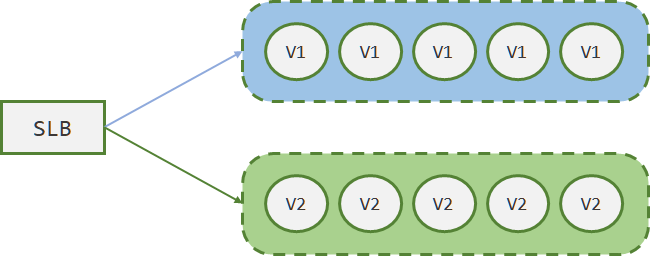

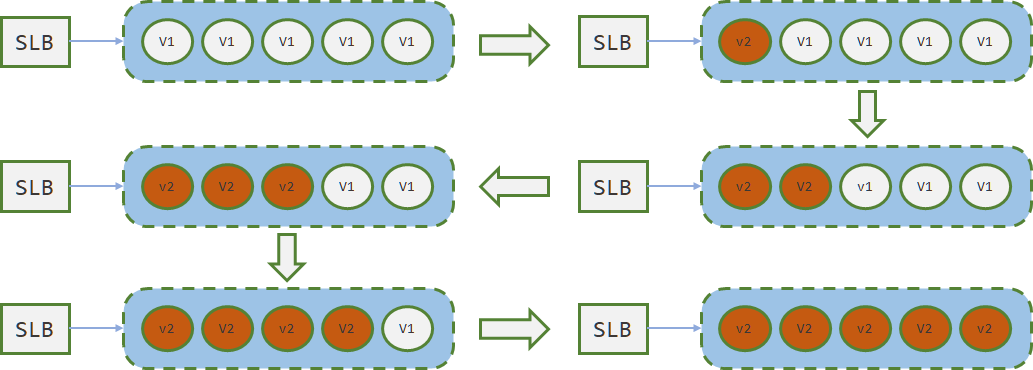

- 蓝绿发布

如图,假设副本数是5,目标是从v1升级到v2。先部署5个v2版本的业务机器,再将SLB的流量全部切换到v2上。如果出现异常,可以快速切换到v1版本。但是实际上用的不多,因为需要消耗大量的额外机器资源。

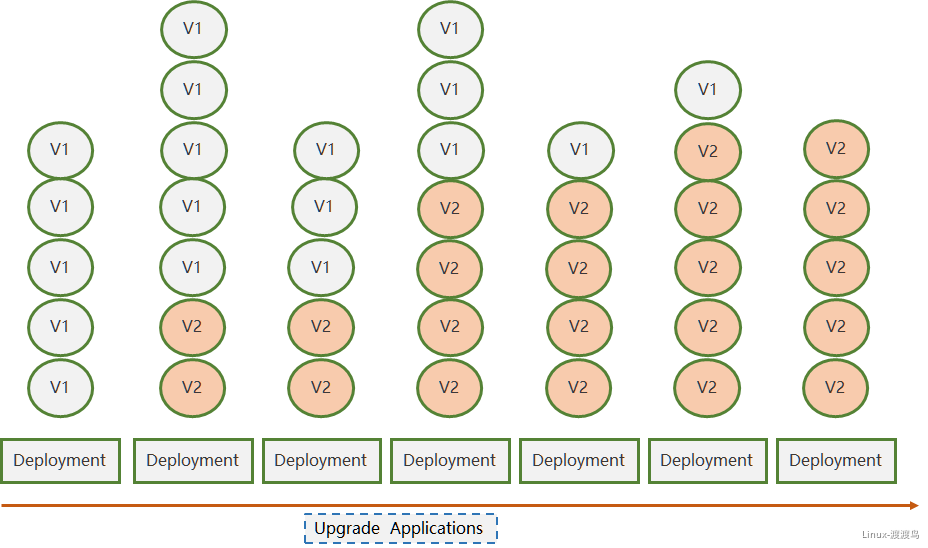

- 滚动发布

滚动发布是逐台(批次)升级,需要占用的额外资源少。比如先升级一台,再升级一台,直到全部升级完毕。也可以每次升级10%数量的机器,逐批次升级。

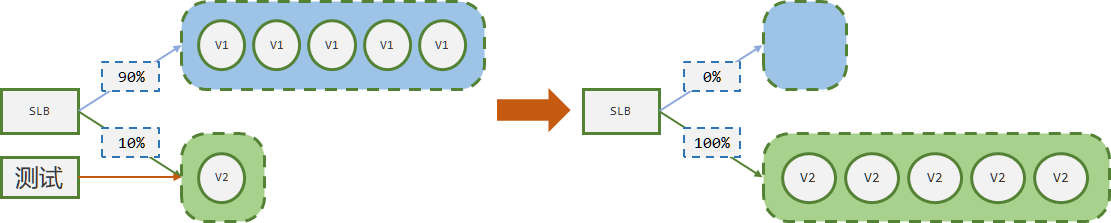

- 灰度发布(金丝雀发布)

灰度发布也叫金丝雀发布,起源是,矿井工人发现,金丝雀对瓦斯气体很敏感,矿工会在下井之前,先放一只金丝雀到井中,如果金丝雀不叫了,就代表瓦斯浓度高。

灰度发布会先升级一台灰度机器,将版本升级为v2,此时先经过测试验证,确认没有问题后。从LB引入少量流量进入灰度机器,运行一段时间后,再将其它机器升级为v2版本,引入全部流量。

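2.1.3. Deployment Update Strategy

By default, a Deployment uses a rolling update. It supports pausing the update, setting the maximum number of Pods above the desired count, and setting the maximum number of Pods below the desired count. With these, all three release strategies above can be implemented. Take 5 desired Pods upgraded from v1 to v2 as an example:

- Blue-green release: create 5 new v2 Pods, wait until all 5 are ready, then remove the 5 v1 Pods.

- Gray release: treat the first newly created Pod as the gray Pod and pause the update. Once the gray release is validated, resume and upgrade the remaining v1 Pods.

- Rolling release: control the pace of the rollout through the maximum number of Pods above and below the desired count.

For example, with 5 desired Pods, a rolling update with a maximum surge of 2 and a maximum of 2 unavailable Pods never runs more than 7 Pods at once and keeps at least 3 available.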
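For the 5-Pod example with a maximum surge and a maximum unavailability of 2, the corresponding strategy part of a Deployment spec would look like this. It is a fragment, not a complete manifest. When percentages are used, Kubernetes rounds maxSurge up and maxUnavailable down. With the default 25%/25% on 5 replicas, that gives a surge of 2 and 1 unavailable Pod.

```yaml
# Fragment of a Deployment spec
spec:
  replicas: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 2                 # at most 5 + 2 = 7 Pods exist during the update
      maxUnavailable: 2           # at least 5 - 2 = 3 Pods stay available
      # with the defaults (25% / 25%): surge = ceil(1.25) = 2, unavailable = floor(1.25) = 1
```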

2.2. Template

apiVersion: apps/v1
kind: Deployment
metadata
  name <string> # must be unique within a namespace
  namespace <string> # namespace; defaults to default
  labels <map[string]string> # labels
  annotations <map[string]string> # annotations

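spec
  replicas <integer> # desired number of replicas; default 1
  selector <Object> # label selector
    matchExpressions <[]Object> # set-based selector; multiple conditions are ANDed
      key <string> -required- # label key
      operator <string> -required- # operator: In, NotIn, Exists, DoesNotExist
      values <[]string> # array of values; must not be empty when operator is In or NotIn
    matchLabels <map[string]string> # select by key/value pairs
  strategy <Object> # Pod update strategy, i.e. how existing Pods are replaced
    type <string> # update type: Recreate or RollingUpdate; default RollingUpdate
    rollingUpdate <Object> # rolling update parameters; only used when type is RollingUpdate
      maxSurge <string> # maximum surge: how many Pods above the desired count may exist during the update; number or percentage (default 25%)
      maxUnavailable <string> # maximum unavailable: how many Pods below the desired count are allowed during the update; number or percentage (default 25%)
  revisionHistoryLimit <integer> # number of old ReplicaSets kept for rollback; default 10 in apps/v1
  template <Object> -required- # Pod template; almost the same format as a Pod manifest
    metadata <Object> # Pod metadata
    spec <Object> # Pod spec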
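A hypothetical Deployment that exercises the template fields above. Its selector combines matchLabels with a set-based matchExpressions term, it lowers revisionHistoryLimit, and it uses the Recreate strategy, which deletes all old Pods before creating new ones, so rollingUpdate must not be set. The names and the image tag are assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: selector-demo             # hypothetical name
  namespace: app
spec:
  replicas: 3
  revisionHistoryLimit: 5         # keep only 5 old ReplicaSets for rollback
  selector:
    matchLabels:
      app: demo
    matchExpressions:             # ANDed with matchLabels
    - key: tier
      operator: In
      values: ["web", "api"]
  strategy:
    type: Recreate                # no rollingUpdate block allowed with Recreate
  template:
    metadata:
      labels:                     # must satisfy the selector above
        app: demo
        tier: web
    spec:
      containers:
      - name: demo
        image: nginx:1.25         # assumed image tag
```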

2.3. Examples

2.3.1. Creating a Deployment

[root@hdss7-200 deployment]# vim /data/k8s-yaml/base_resource/deployment/nginx-v1.12.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
      release: stable
      tier: slb
      partition: website
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  template:
    metadata:
      labels:
        app: nginx
        release: stable
        tier: slb
        partition: website
        version: v1.12
    spec:
      containers:
      - name: nginx-pod
        image: harbor.od.com/public/nginx:v1.12
        lifecycle:
          postStart:
            exec:
              command:
              - /bin/bash
              - -c
              - "echo 'health check ok!' > /usr/share/nginx/html/health.html"
        readinessProbe:
          initialDelaySeconds: 5
          httpGet:
            port: 80
            path: /health.html
        livenessProbe:
          initialDelaySeconds: 10
          periodSeconds: 5
          httpGet:
            port: 80
            path: /health.html

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record

[root@hdss7-21 ~]# kubectl get pods -n app -l partition=website # list the Pods
NAME READY STATUS RESTARTS AGE
nginx-deploy-5597c8b45-425ms 1/1 Running 0 5m12s
nginx-deploy-5597c8b45-5p2rz 1/1 Running 0 9m34s
nginx-deploy-5597c8b45-dw7hd 1/1 Running 0 9m34s
nginx-deploy-5597c8b45-fg82k 1/1 Running 0 5m12s
nginx-deploy-5597c8b45-sfxmg 1/1 Running 0 9m34s

[root@hdss7-21 ~]# kubectl get rs -n app -l partition=website -o wide
NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR
nginx-deploy-5597c8b45 8 8 8 10m nginx-pod harbor.od.com/public/nginx:v1.12 app=nginx,partition=website,pod-template-hash=5597c8b45,release=stable,tier=slb

[root@hdss7-21 ~]# kubectl get deployment -n app -o wide
NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
nginx-deploy 8/8 8 8 11m nginx-pod harbor.od.com/public/nginx:v1.12 app=nginx,partition=website,release=stable,tier=slb

2.3.2. Simulating a Blue-Green Release

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
      release: stable
      tier: slb
      partition: website
  strategy:
    rollingUpdate:
      # maximum surge of 5
      maxSurge: 5
      maxUnavailable: 0
  template:
    metadata:
      labels:
        app: nginx
        release: stable
        tier: slb
        partition: website
        # updated version label, used to tell which version is running
        version: v1.13
    spec:
      containers:
      - name: nginx-pod
        # updated image
        image: harbor.od.com/public/nginx:v1.13
        lifecycle:
          postStart:
            exec:
              command:
              - /bin/bash
              - -c
              - "echo 'health check ok!' > /usr/share/nginx/html/health.html"
        readinessProbe:
          initialDelaySeconds: 5
          httpGet:
            port: 80
            path: /health.html
        livenessProbe:
          initialDelaySeconds: 10
          periodSeconds: 5
          httpGet:
            port: 80
            path: /health.html

[root@hdss7-21 ~]# kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true

[root@hdss7-21 ~]# kubectl rollout history deployment nginx-deploy -n app
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true

[root@hdss7-21 ~]# kubectl get rs -n app -l tier=slb -L version # one ReplicaSet per version
NAME DESIRED CURRENT READY AGE VERSION
nginx-deploy-5597c8b45 0 0 0 10m v1.12
nginx-deploy-6bd88df699 5 5 5 9m31s v1.13

# State changes during the rollout:
[root@hdss7-21 ~]# kubectl rollout status deployment nginx-deploy -n app
Waiting for deployment "nginx-deploy" rollout to finish: 5 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 5 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 5 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 4 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 4 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 4 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 3 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 3 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 3 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 2 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 2 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 2 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...
deployment "nginx-deploy" successfully rolled out

[root@hdss7-21 ~]# kubectl get pod -n app -l partition=website -L version -w
NAME READY STATUS RESTARTS AGE VERSION
nginx-deploy-5597c8b45-t5plt 1/1 Running 0 19s v1.12
nginx-deploy-5597c8b45-tcq69 1/1 Running 0 19s v1.12
nginx-deploy-5597c8b45-vdjxg 1/1 Running 0 19s v1.12
nginx-deploy-5597c8b45-vqn9x 1/1 Running 0 19s v1.12
nginx-deploy-5597c8b45-zl6qr 1/1 Running 0 19s v1.12
---- 5 new-version Pods are created immediately and are Pending while being scheduled
nginx-deploy-6bd88df699-242fr 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-242fr 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-8pmdg 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-4kj8z 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-n7x6n 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-8pmdg 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-4kj8z 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-8j85n 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-n7x6n 0/1 Pending 0 0s v1.13
nginx-deploy-6bd88df699-8j85n 0/1 Pending 0 0s v1.13
---- Pods being created
nginx-deploy-6bd88df699-242fr 0/1 ContainerCreating 0 0s v1.13
nginx-deploy-6bd88df699-8pmdg 0/1 ContainerCreating 0 0s v1.13
nginx-deploy-6bd88df699-4kj8z 0/1 ContainerCreating 0 0s v1.13
nginx-deploy-6bd88df699-n7x6n 0/1 ContainerCreating 0 0s v1.13
nginx-deploy-6bd88df699-8j85n 0/1 ContainerCreating 0 0s v1.13
---- Pods starting
nginx-deploy-6bd88df699-242fr 0/1 Running 0 1s v1.13
nginx-deploy-6bd88df699-8j85n 0/1 Running 0 1s v1.13
nginx-deploy-6bd88df699-4kj8z 0/1 Running 0 1s v1.13
nginx-deploy-6bd88df699-n7x6n 0/1 Running 0 1s v1.13
nginx-deploy-6bd88df699-8pmdg 0/1 Running 0 1s v1.13
---- Pods become ready one by one and replace the old-version Pods
nginx-deploy-6bd88df699-242fr 1/1 Running 0 6s v1.13
nginx-deploy-5597c8b45-t5plt 1/1 Terminating 0 50s v1.12
nginx-deploy-6bd88df699-8j85n 1/1 Running 0 7s v1.13
nginx-deploy-5597c8b45-vdjxg 1/1 Terminating 0 51s v1.12
nginx-deploy-5597c8b45-t5plt 0/1 Terminating 0 51s v1.12
nginx-deploy-5597c8b45-t5plt 0/1 Terminating 0 51s v1.12
nginx-deploy-6bd88df699-4kj8z 1/1 Running 0 7s v1.13
nginx-deploy-5597c8b45-zl6qr 1/1 Terminating 0 51s v1.12
nginx-deploy-5597c8b45-vdjxg 0/1 Terminating 0 52s v1.12
nginx-deploy-5597c8b45-vdjxg 0/1 Terminating 0 52s v1.12
nginx-deploy-5597c8b45-zl6qr 0/1 Terminating 0 53s v1.12
nginx-deploy-5597c8b45-t5plt 0/1 Terminating 0 54s v1.12
nginx-deploy-5597c8b45-t5plt 0/1 Terminating 0 54s v1.12
nginx-deploy-5597c8b45-zl6qr 0/1 Terminating 0 56s v1.12
nginx-deploy-5597c8b45-zl6qr 0/1 Terminating 0 56s v1.12
nginx-deploy-6bd88df699-n7x6n 1/1 Running 0 13s v1.13
nginx-deploy-5597c8b45-tcq69 1/1 Terminating 0 57s v1.12
nginx-deploy-5597c8b45-tcq69 0/1 Terminating 0 58s v1.12
nginx-deploy-5597c8b45-tcq69 0/1 Terminating 0 59s v1.12
nginx-deploy-6bd88df699-8pmdg 1/1 Running 0 15s v1.13
nginx-deploy-5597c8b45-vqn9x 1/1 Terminating 0 59s v1.12
nginx-deploy-5597c8b45-vqn9x 0/1 Terminating 0 60s v1.12
nginx-deploy-5597c8b45-vqn9x 0/1 Terminating 0 61s v1.12
nginx-deploy-5597c8b45-vqn9x 0/1 Terminating 0 61s v1.12
nginx-deploy-5597c8b45-vdjxg 0/1 Terminating 0 64s v1.12
nginx-deploy-5597c8b45-vdjxg 0/1 Terminating 0 64s v1.12
nginx-deploy-5597c8b45-tcq69 0/1 Terminating 0 64s v1.12
nginx-deploy-5597c8b45-tcq69 0/1 Terminating 0 64s v1.12

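2.3.3. Rolling Release

The pace of a rolling update is controlled by maxSurge and maxUnavailable. During a rolling update, kubectl rollout pause can pause it, and kubectl rollout resume continues it.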

[root@hdss7-200 deployment]# vim /data/k8s-yaml/base_resource/deployment/nginx-v1.14.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
      release: stable
      tier: slb
      partition: website
  strategy:
    rollingUpdate:
      # these two settings control the pace of the upgrade
      maxSurge: 1
      maxUnavailable: 0
  template:
    metadata:
      labels:
        app: nginx
        release: stable
        tier: slb
        partition: website
        # updated version label
        version: v1.14
    spec:
      containers:
      - name: nginx-pod
        # updated image version
        image: harbor.od.com/public/nginx:v1.14
        lifecycle:
          postStart:
            exec:
              command:
              - /bin/bash
              - -c
              - "echo 'health check ok!' > /usr/share/nginx/html/health.html"
        readinessProbe:
          initialDelaySeconds: 5
          httpGet:
            port: 80
            path: /health.html
        livenessProbe:
          initialDelaySeconds: 10
          periodSeconds: 5
          httpGet:
            port: 80
            path: /health.html

[root@hdss7-21 ~]# kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.14.yaml --record=true

[root@hdss7-21 ~]# kubectl get rs -n app -l tier=slb -L version # the number of ReplicaSets grows
NAME DESIRED CURRENT READY AGE VERSION
nginx-deploy-5597c8b45 0 0 0 155m v1.12
nginx-deploy-6bd88df699 0 0 0 154m v1.13
nginx-deploy-7c5976dcd9 5 5 5 83s v1.14

[root@hdss7-21 ~]# kubectl rollout history deployment nginx-deploy -n app # upgrade history
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true
3 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.14.yaml --record=true

[root@hdss7-21 ~]# kubectl get pod -n app -l partition=website -L version -w # Pods are upgraded one at a time
NAME READY STATUS RESTARTS AGE VERSION
nginx-deploy-6bd88df699-242fr 1/1 Running 0 152m v1.13
nginx-deploy-6bd88df699-4kj8z 1/1 Running 0 152m v1.13
nginx-deploy-6bd88df699-8j85n 1/1 Running 0 152m v1.13
nginx-deploy-6bd88df699-8pmdg 1/1 Running 0 152m v1.13
nginx-deploy-6bd88df699-n7x6n 1/1 Running 0 152m v1.13
nginx-deploy-7c5976dcd9-ttlqx 0/1 Pending 0 0s v1.14
nginx-deploy-7c5976dcd9-ttlqx 0/1 Pending 0 0s v1.14
nginx-deploy-7c5976dcd9-ttlqx 0/1 ContainerCreating 0 0s v1.14
nginx-deploy-7c5976dcd9-ttlqx 0/1 Running 0 1s v1.14
nginx-deploy-7c5976dcd9-ttlqx 1/1 Running 0 9s v1.14
nginx-deploy-6bd88df699-8pmdg 1/1 Terminating 0 153m v1.13
......

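2.3.4. Simulating a Gray (Canary) Release

How a gray release is implemented depends on the scenario. If the gray machines are open only to testers, you can define a separate Deployment and pair it with a Service. If a portion of random real-user traffic must reach the new version, use one of the production machines as the gray machine, and upgrade the other machines once the gray release passes.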
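For the tester-only case, one possible sketch is a second Deployment whose Pods carry an extra track: canary label, plus a Service that selects only that label. All names here (nginx-canary, nginx-canary-svc, the track label) are hypothetical. The canary Pods deliberately omit the release and tier labels, so the selector of the existing nginx-deploy does not adopt them.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-canary-svc          # hypothetical Service used only by testers
  namespace: app
spec:
  selector:
    app: nginx
    partition: website
    track: canary                 # only the canary Pods carry this label
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-canary              # hypothetical canary Deployment
  namespace: app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      partition: website
      track: canary
  template:
    metadata:
      labels:
        app: nginx
        partition: website
        track: canary
        version: v1.15
    spec:
      containers:
      - name: nginx-pod
        image: harbor.od.com/public/nginx:v1.15
```

If track: canary were dropped from the Service selector, the canary Pod would join the same pool as the stable Pods. It would then get roughly its share of traffic by Pod count.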

# nginx-v1.15.yaml is identical to nginx-v1.14.yaml; only the image version differs
[root@hdss7-21 ~]# kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.15.yaml --record=true && kubectl rollout pause deployment nginx-deploy -n app

[root@hdss7-21 ~]# kubectl rollout history deployment nginx-deploy -n app
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true
3 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.14.yaml --record=true
4 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.15.yaml --record=true

[root@hdss7-21 ~]# kubectl get rs -n app -l tier=slb -L version # two ReplicaSets are serving traffic
NAME DESIRED CURRENT READY AGE VERSION
nginx-deploy-5597c8b45 0 0 0 177m v1.12
nginx-deploy-6695fd9655 1 1 1 2m22s v1.15
nginx-deploy-6bd88df699 0 0 0 176m v1.13
nginx-deploy-7c5976dcd9 5 5 5 23m v1.14

[root@hdss7-21 ~]# kubectl get pod -n app -l partition=website -L version -w # old and new versions coexist
NAME READY STATUS RESTARTS AGE VERSION
nginx-deploy-6695fd9655-tcm76 1/1 Running 0 17s v1.15
nginx-deploy-7c5976dcd9-4tnv4 1/1 Running 0 21m v1.14
nginx-deploy-7c5976dcd9-bpjc2 1/1 Running 0 20m v1.14
nginx-deploy-7c5976dcd9-gv8qm 1/1 Running 0 20m v1.14
nginx-deploy-7c5976dcd9-ttlqx 1/1 Running 0 21m v1.14
nginx-deploy-7c5976dcd9-xq2qs 1/1 Running 0 21m v1.14

# Manual step: resume and immediately pause again, so the rollout advances only a little further
[root@hdss7-21 ~]# kubectl rollout resume deployment nginx-deploy -n app && kubectl rollout pause deployment nginx-deploy -n app

[root@hdss7-21 ~]# kubectl get pod -n app -l partition=website -L version -w
NAME READY STATUS RESTARTS AGE VERSION
nginx-deploy-6695fd9655-jmb94 1/1 Running 0 19s v1.15
nginx-deploy-6695fd9655-tcm76 1/1 Running 0 6m19s v1.15
nginx-deploy-7c5976dcd9-4tnv4 1/1 Running 0 27m v1.14
nginx-deploy-7c5976dcd9-gv8qm 1/1 Running 0 26m v1.14
nginx-deploy-7c5976dcd9-ttlqx 1/1 Running 0 27m v1.14
nginx-deploy-7c5976dcd9-xq2qs 1/1 Running 0 27m v1.14

# Upgrade all remaining machines
[root@hdss7-21 ~]# kubectl rollout resume deployment nginx-deploy -n app

2.3.5. Rollback

If an upgrade goes wrong, simply roll back.

[root@hdss7-21 ~]# kubectl rollout history deployment nginx-deploy -n app # view the revision history
deployment.extensions/nginx-deploy
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true
3 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.14.yaml --record=true
4 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.15.yaml --record=true

[root@hdss7-21 ~]# kubectl rollout undo deployment nginx-deploy -n app

[root@hdss7-21 ~]# kubectl rollout history deployment nginx-deploy -n app # revision 3 has been re-recorded as revision 5
deployment.extensions/nginx-deploy
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.13.yaml --record=true
4 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.15.yaml --record=true
5 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.14.yaml --record=true

[root@hdss7-21 ~]# kubectl get pod -n app -l partition=website -L version
NAME READY STATUS RESTARTS AGE VERSION
nginx-deploy-7c5976dcd9-2kps8 1/1 Running 0 2m20s v1.14
nginx-deploy-7c5976dcd9-bqs28 1/1 Running 0 2m6s v1.14
nginx-deploy-7c5976dcd9-jdvps 1/1 Running 0 2m13s v1.14
nginx-deploy-7c5976dcd9-vs8l4 1/1 Running 0 116s v1.14
nginx-deploy-7c5976dcd9-z99mb 1/1 Running 0 101s v1.14

[root@hdss7-21 ~]# kubectl get rs -n app -l tier=slb -L version
NAME DESIRED CURRENT READY AGE VERSION
nginx-deploy-5597c8b45 0 0 0 3h7m v1.12
nginx-deploy-6695fd9655 0 0 0 12m v1.15
nginx-deploy-6bd88df699 0 0 0 3h7m v1.13
nginx-deploy-7c5976dcd9 5 5 5 34m v1.14

2.3.6. Common Commands

kubectl rollout status deployment nginx-deploy -n app # watch the progress of a rollout
kubectl rollout history deployment nginx-deploy -n app # view the rollout history
kubectl apply -f http://k8s-yaml.od.com/base_resource/deployment/nginx-v1.15.yaml --record=true # upgrade and record the command (--record is deprecated in newer kubectl releases)
kubectl rollout undo deployment nginx-deploy -n app # roll back to the previous revision
kubectl rollout undo deployment nginx-deploy --to-revision=3 -n app # roll back to revision 3
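Because --record is deprecated in newer kubectl releases, a commonly used alternative is to set the kubernetes.io/change-cause annotation yourself. kubectl rollout history shows this annotation in the CHANGE-CAUSE column, and it is the same annotation that --record used to fill in. Below is a sketch of the relevant metadata in nginx-v1.15.yaml; the message text is arbitrary.

```yaml
# Fragment: metadata of the Deployment manifest
metadata:
  name: nginx-deploy
  namespace: app
  annotations:
    kubernetes.io/change-cause: "upgrade nginx to v1.15"   # shown as CHANGE-CAUSE in rollout history
```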

3. DaemonSet

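3.1. About DaemonSets

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. When a Node joins the cluster, a Pod is added on it. When a Node is removed from the cluster, its Pod is garbage-collected. Deleting a DaemonSet deletes all the Pods it created. Typical uses of a DaemonSet:

- Running a cluster storage daemon on every Node, such as glusterd or ceph.

- Running a log collection daemon on every Node, such as fluentd or logstash.

- Running a monitoring daemon on every Node, such as Prometheus Node Exporter.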

3.2. Template

apiVersion: apps/v1
kind: DaemonSet
metadata
  name <string> # must be unique within a namespace
  namespace <string> # namespace; defaults to default
  labels <map[string]string> # labels
  annotations <map[string]string> # annotations
spec
  selector <Object> # label selector
    matchExpressions <[]Object> # set-based selector; multiple conditions are ANDed
      key <string> -required- # label key
      operator <string> -required- # operator: In, NotIn, Exists, DoesNotExist
      values <[]string> # array of values; must not be empty when operator is In or NotIn
    matchLabels <map[string]string> # select by key/value pairs
  updateStrategy <Object> # update strategy
    type <string> # update type: OnDelete or RollingUpdate; default RollingUpdate
    rollingUpdate <Object> # rolling update parameters; only used when type is RollingUpdate
      maxUnavailable <string> # how many Pods below the desired count are allowed during the update; number or percentage (default 1)
  template <Object> -required- # Pod template; almost the same format as a Pod manifest
    metadata <Object> # Pod metadata
    spec <Object> # Pod spec
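The introduction notes that a DaemonSet may run on only some Nodes. The following is a hypothetical sketch of that case. A nodeSelector restricts the Pods to nodes labelled role=edge (an assumed label). A toleration also allows control-plane nodes that carry that label. OnDelete means an updated template only takes effect when a Pod is deleted by hand.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent                # hypothetical name
  namespace: app
spec:
  selector:
    matchLabels:
      app: node-agent
  updateStrategy:
    type: OnDelete                # new template applies only to Pods recreated after manual deletion
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      nodeSelector:
        role: edge                # assumed node label; only matching nodes get a Pod
      tolerations:
      - key: node-role.kubernetes.io/control-plane   # older clusters use node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: agent
        image: busybox:latest
        command: ["/bin/sh", "-c", "while true; do sleep 3600; done"]
```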

3.3. Examples

3.3.1. Creating a DaemonSet

[root@hdss7-200 base_resource]# cat /data/k8s-yaml/base_resource/daemonset/proxy-v1.12.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: proxy-daemonset
  namespace: app
  labels:
    app: nginx
    release: stable
    partition: CRM
spec:
  selector:
    matchLabels:
      app: nginx
      release: stable
      tier: proxy
      partition: CRM
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: nginx
        release: stable
        tier: proxy
        partition: CRM
        version: v1.12
    spec:
      containers:
      - name: nginx-proxy
        image: harbor.od.com/public/nginx:v1.12
        ports:
        - name: http
          containerPort: 80
          hostPort: 10080
        lifecycle:
          postStart:
            exec:
              command:
              - /bin/bash
              - -c
              - "echo 'health check ok!' > /usr/share/nginx/html/health.html"
        readinessProbe:
          initialDelaySeconds: 5
          httpGet:
            port: 80
            path: /health.html
        livenessProbe:
          initialDelaySeconds: 10
          periodSeconds: 5
          httpGet:
            port: 80
            path: /health.html

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/base_resource/daemonset/proxy-v1.12.yaml --record

[root@hdss7-21 ~]# kubectl get daemonset -n app
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
proxy-daemonset 2 2 2 2 2 <none> 56s

[root@hdss7-21 ~]# kubectl get pod -n app -l tier=proxy -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
proxy-daemonset-7stgs 1/1 Running 0 8m31s 172.7.22.9 hdss7-22.host.com <none> <none>
proxy-daemonset-dxgdp 1/1 Running 0 8m31s 172.7.21.10 hdss7-21.host.com <none> <none>

[root@hdss7-21 ~]# curl -s 10.4.7.22:10080/info # access through the host port
2020-01-22T13:15:58+00:00|172.7.22.9|nginx:v1.12

[root@hdss7-21 ~]# curl -s 10.4.7.21:10080/info
2020-01-22T13:16:05+00:00|172.7.21.10|nginx:v1.12

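3.3.2. Upgrading a DaemonSet

A DaemonSet is upgraded the same way as a Deployment: apply the new manifest, then use kubectl rollout history, status and undo as needed. Unlike a Deployment, however, a DaemonSet rollout cannot be paused with kubectl rollout pause.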

[root@hdss7-21 ~]# kubectl rollout history daemonset proxy-daemonset -n app
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/daemonset/proxy-v1.12.yaml --record=true

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/base_resource/daemonset/proxy-v1.13.yaml --record

[root@hdss7-21 ~]# kubectl rollout history daemonset proxy-daemonset -n app
daemonset.extensions/proxy-daemonset
REVISION CHANGE-CAUSE
1 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/daemonset/proxy-v1.12.yaml --record=true
2 kubectl apply --filename=http://k8s-yaml.od.com/base_resource/daemonset/proxy-v1.13.yaml --record=true

[root@hdss7-21 ~]# kubectl get pod -n app -l tier=proxy -L version
NAME READY STATUS RESTARTS AGE VERSION
proxy-daemonset-7wr4f 1/1 Running 0 119s v1.13
proxy-daemonset-clhqk 1/1 Running 0 2m11s v1.13

4. Job

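4.1. About Jobs

A Job is another commonly used controller, well suited to running automation scripts. Examples include a Job that runs an Ansible playbook to create a new Kubernetes cluster, a Job that creates MySQL accounts and passwords, or a Job that runs Helm scripts.

4.2. Template

A Job spec normally does not set a selector. Kubernetes generates a unique one automatically (see the controller-uid=... value in the SELECTOR column of the example output below), and setting it by hand requires manualSelector: true.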

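apiVersion: batch/v1
kind: Job
metadata
  name <string> # must be unique within a namespace
  namespace <string> # namespace; defaults to default
  labels <map[string]string> # labels
  annotations <map[string]string> # annotations
spec
  backoffLimit <integer> # number of retries before the Job is marked as failed; default 6
  completions <integer> # number of Pods that must complete successfully for the Job to finish
  parallelism <integer> # maximum number of Pods running in parallel
  template <Object> -required- # Pod template; almost the same format as a Pod manifest
    metadata <Object> # Pod metadata
    spec <Object> # Pod spec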
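Here is a sketch of a parallel Job using the fields above; the name and image are assumptions. Six Pods must succeed in total, at most two run at once, and the Job is marked as failed after three retries. A Job's Pod template must use restartPolicy Never or OnFailure.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: parallel-job              # hypothetical name
spec:
  completions: 6                  # the Job is complete after 6 Pods succeed
  parallelism: 2                  # at most 2 Pods run at the same time
  backoffLimit: 3                 # mark the Job failed after 3 retries
  template:
    spec:
      restartPolicy: Never        # Jobs accept only Never or OnFailure
      containers:
      - name: worker
        image: busybox:latest
        command: ["/bin/sh", "-c", "echo working on $HOSTNAME; sleep 10"]
```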

4.3. Example

[root@centos-7-51 ~]# cat /tmp/job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: test-job
  namespace: default
spec:
  template:
    metadata:
      labels:
        jobType: sleep
    spec:
      restartPolicy: Never
      containers:
      - name: job-sleep
        image: busybox:latest
        command:
        - sleep
        - "60"

[root@centos-7-51 ~]# kubectl apply -f /tmp/job.yaml

[root@centos-7-51 ~]# kubectl get pod -o wide| grep job
test-job-2fccp 0/1 Completed 0 3m21s 172.16.4.43 centos-7-55 <none> <none>

[root@centos-7-51 ~]# kubectl get job -o wide
NAME COMPLETIONS DURATION AGE CONTAINERS IMAGES SELECTOR
test-job 1/1 68s 3m26s job-sleep busybox:latest controller-uid=3ba88fdd-ad1c-46c7-86b4-dc6e641ebdb4

5. CronJob

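5.1. About CronJobs

A CronJob is similar to a scheduled task (crontab) in Linux. It runs periodic tasks such as data backups, or periodically collecting information and sending it by email.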

https://kubernetes.io/zh/docs/tasks/job/automated-tasks-with-cron-jobs/

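5.2. Template

apiVersion: batch/v1beta1 # batch/v1 on Kubernetes 1.21+; batch/v1beta1 was removed in 1.25
kind: CronJob
metadata <Object>
  name <string> # must be unique within a namespace
  namespace <string> # namespace; defaults to default
  labels <map[string]string> # labels
  annotations <map[string]string> # annotations
spec <Object>
  concurrencyPolicy <string> # what to do if the previous run has not finished when a new run is due. Allow (default): run both concurrently; Forbid: skip the new run; Replace: stop the old run and start the new one
  failedJobsHistoryLimit <integer> # number of failed Jobs to keep; default 1
  successfulJobsHistoryLimit <integer> # number of successful Jobs to keep; default 3
  schedule <string> -required- # cron schedule, same format as Linux cron
  startingDeadlineSeconds <integer> # a run that cannot start within this many seconds of its scheduled time counts as missed; if more than 100 schedules are missed, the Job is not started and an error is logged. See https://kubernetes.io/zh/docs/tasks/job/automated-tasks-with-cron-jobs/#starting-deadline
  suspend <boolean> # if true, subsequent runs are suspended (runs already started are not affected), see https://kubernetes.io/zh/docs/tasks/job/automated-tasks-with-cron-jobs/#%E6%8C%82%E8%B5%B7
  jobTemplate <Object> -required- # Job template
    metadata <Object>
    spec <Object>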
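Here is a sketch of the same fields in the batch/v1 API (Kubernetes 1.21 and later), for a hypothetical nightly job; the name, schedule and command are assumptions. By default, the schedule is interpreted in the time zone of kube-controller-manager.

```yaml
apiVersion: batch/v1              # use batch/v1beta1 only on clusters older than 1.21
kind: CronJob
metadata:
  name: nightly-backup            # hypothetical name
spec:
  schedule: "30 2 * * *"          # 02:30 every day
  concurrencyPolicy: Forbid       # skip a run if the previous one is still active
  startingDeadlineSeconds: 300    # a run that cannot start within 5 minutes counts as missed
  suspend: false
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 1
  jobTemplate:
    spec:
      backoffLimit: 2
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: backup
            image: busybox:latest
            command: ["/bin/sh", "-c", "date; echo running backup"]
```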

5.3. Example

apiVersion: batch/v1beta1 # use batch/v1 on Kubernetes 1.21+ (batch/v1beta1 was removed in 1.25)
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"
  concurrencyPolicy: Forbid
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 10
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            imagePullPolicy: IfNotPresent
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure

[root@centos-7-52 ~]# kubectl apply -f /tmp/cronjob.yaml

[root@centos-7-52 ~]# kubectl get cronjob -o wide #查看cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE CONTAINERS IMAGES SELECTOR

hello */1 * * * * False 0 52s 6m36s hello busybox <none>

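[root@centos-7-52 ~]# kubectl get job -o wide | grep hello # list the Jobs created so far; the number in each name is the scheduled time as a Unix timestamp in seconds (newer CronJob controllers, v1.21+, use minutes)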

hello-1607757060 1/1 1s 2m48s hello busybox controller-uid=194350a4-21ca-4d01-b264-34a963e78ce7

hello-1607757120 1/1 1s 108s hello busybox controller-uid=e30a318c-6c0f-4d1d-b200-3dfaffe083c5

hello-1607757180 1/1 1s 48s hello busybox controller-uid=a00d02fc-8d7e-40b3-8e2a-25620ecab427

[root@centos-7-52 ~]# date -d @1607757180

Sat Dec 12 15:13:00 CST 2020

6. StatefulSet

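6.1. Introduction

A Deployment is used to release and manage stateless services: all of its Pods are identical, they mount the same shared storage, their name suffixes are randomly generated, and any of them can be restarted at any time when something goes wrong. The Pods of a stateful application, by contrast, differ from one another, must start in a specific order, and usually need to mount separate storage volumes. Because stateful applications are inherently complex, and recovering from a failure may require manual intervention, running complex stateful systems such as a MySQL cluster in Kubernetes is not recommended.

In Kubernetes, StatefulSet is the workload API object used to manage stateful applications. It manages the deployment and scaling of a set of Pods and provides persistent storage and persistent identifiers for those Pods. Like a Deployment, a StatefulSet manages Pods that are based on an identical container spec. Unlike a Deployment, a StatefulSet maintains a sticky identity for each of its Pods: the Pods are created from the same spec but are not interchangeable, and each keeps a persistent identifier no matter how it is rescheduled. If you want to use storage volumes to provide persistence for a workload, a StatefulSet can be part of the solution. Although individual Pods in a StatefulSet can still fail, the persistent Pod identifiers make it easier to match existing volumes to the new Pods that replace the failed ones.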

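6.1.1. StatefulSet use cases

- Stable, unique network identifiers: each Pod has its own DNS name, which does not change no matter how many times it restarts

- Stable, persistent storage: each Pod has its own dedicated PVC

- Ordered, graceful deployment and scaling: Pods are started in ascending ordinal order and stopped in descending ordinal order

- Ordered, automated rolling updates: Pods are restarted and upgraded in descending ordinal order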

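6.1.2. StatefulSet caveats

- A PVC template must be defined so that each Pod uses a different PVC. The PVs can be provisioned dynamically through a StorageClass or created in advance

- A headless Service is required for service discovery

- To delete a StatefulSet, it is recommended to scale its replicas to 0 first and then delete it

- Deleting a StatefulSet does not delete the PVCs and PVs it requested

- If something goes wrong during an upgrade, manual intervention may be required

6.2. Template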

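```
apiVersion: apps/v1
kind: StatefulSet
metadata <Object>
spec:
  replicas <integer>
  serviceName <string> -required-         # name of the headless Service that governs the Pods
  selector <Object> -required-            # Pod selector
  template <Object> -required-            # Pod template
  volumeClaimTemplates <[]Object>
    metadata <Object>
    spec <Object>
      accessModes <[]string>              # RWO: ReadWriteOnce, read-write by a single node; ROX: ReadOnlyMany, read-only by many nodes; RWX: ReadWriteMany, read-write by many nodes
      resources <Object>                  # minimum resources the PVC requires
        limits <map[string]string>        # upper limit, usually not set
        requests <map[string]string>      # requested amount, typically storage: xGi
      storageClassName <string>           # StorageClass to use, for dynamic provisioning
      volumeMode <string>                 # use the volume as a filesystem or as a raw block device; usually not set
      selector <Object>                   # label selector on PV labels; by default all PVs are candidates
      volumeName <string>                 # bind directly to the named PV
  revisionHistoryLimit <integer>          # number of revisions to keep, default 10
  podManagementPolicy <string>            # Pod start/stop ordering. Default OrderedReady: start in ascending order, stop in descending order. Parallel: start and stop in parallel
  updateStrategy <Object>                 # update strategy
    rollingUpdate <Object>                # rolling update parameters
      partition <integer>                 # with value N, only Pods whose ordinal is >= N are updated
    type <string>                         # RollingUpdate (default) or OnDelete
```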
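As a sketch of how the ordering, update and claim-template fields above fit together, the fragment below shows only the relevant part of a StatefulSet spec. The claim name data, the size and the partition value are illustrative assumptions; managed-nfs-storage is the StorageClass used in the example in 6.3.1.

```yaml
# Fragment of a StatefulSet spec (other required fields omitted)
spec:
  podManagementPolicy: Parallel     # start/stop Pods in parallel instead of OrderedReady
  revisionHistoryLimit: 10
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 2                  # only Pods with ordinal >= 2 receive the new template
  volumeClaimTemplates:
  - metadata:
      name: data                    # PVCs are named data-<statefulset-name>-<ordinal>
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 1Gi
```

Note that podManagementPolicy only affects scaling; rolling updates still replace Pods one at a time in descending ordinal order.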

6.3. Example

6.3.1. Creating the StatefulSet

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: my-svc
  namespace: apps
spec:
  selector:
    app: nginx-web
    role: web
    state: "true"
  clusterIP: None
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-web
  namespace: apps
  labels:
    app: nginx
    role: web
spec:
  replicas: 2
  serviceName: my-svc
  selector:
    matchLabels:
      app: nginx-web
      role: web
      state: "true"
  template:
    metadata:
      labels:
        app: nginx-web
        role: web
        state: "true"
    spec:
      containers:
      - name: nginx-web
        image: linuxduduniao/nginx:v1.0.0
        ports:
        - name: http
          containerPort: 80
        readinessProbe:
          httpGet:
            port: 80
            path: /health
        volumeMounts:
        - name: nginx-web
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: nginx-web
      namespace: apps
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 2Gi
      storageClassName: managed-nfs-storage # NFS StorageClass, see https://www.yuque.com/duduniao/k8s/vgms23#3W9oz
```

[root@duduniao local-k8s-yaml]# kubectl -n apps get sts -o wide # current StatefulSet status

NAME READY AGE CONTAINERS IMAGES

nginx-web 2/2 19s nginx-web linuxduduniao/nginx:v1.0.0

[root@duduniao local-k8s-yaml]# kubectl -n apps get pod -o wide # note the Pod names: <statefulset-name>-<ordinal>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-web-0 1/1 Running 0 86s 172.16.4.158 centos-7-55 <none> <none>

nginx-web-1 1/1 Running 0 77s 172.16.3.98 centos-7-54 <none> <none>

[root@duduniao local-k8s-yaml]# kubectl -n apps get pvc -o wide # note the PVC names: <claim-template-name>-<pod-name>

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE VOLUMEMODE

nginx-web-nginx-web-0 Bound pvc-b36b90f1-a268-4815-b55b-6f7fda587193 2Gi RWX managed-nfs-storage 93s Filesystem

nginx-web-nginx-web-1 Bound pvc-1461c537-e784-41fd-9e78-b1e6b212912c 2Gi RWX managed-nfs-storage 84s Filesystem

[root@duduniao local-k8s-yaml]# kubectl -n apps get pv -o wide # note the PV names

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE VOLUMEMODE

pvc-1461c537-e784-41fd-9e78-b1e6b212912c 2Gi RWX Delete Bound apps/nginx-web-nginx-web-1 managed-nfs-storage 88s Filesystem

pvc-b36b90f1-a268-4815-b55b-6f7fda587193 2Gi RWX Delete Bound apps/nginx-web-nginx-web-0 managed-nfs-storage 97s Filesystem

[root@duduniao local-k8s-yaml]# kubectl -n apps describe svc my-svc

Name: my-svc

Namespace: apps

Labels: <none>

Annotations:

Selector: app=nginx-web,role=web,state=true

Type: ClusterIP

IP: None

Port: http 80/TCP

TargetPort: 80/TCP

Endpoints: 172.16.3.98:80,172.16.4.158:80

Session Affinity: None

Events: <none>

[root@centos-7-51 ~]# dig -t A my-svc.apps.svc.cluster.local @10.96.0.10 +short # headless Service: the Service name resolves directly to the Pod IPs, used for service discovery

172.16.4.216

172.16.3.105

[root@centos-7-51 ~]# dig -t A nginx-web-0.my-svc.apps.svc.cluster.local @10.96.0.10 +short # StatefulSet feature: each Pod name resolves to that Pod's address

172.16.4.216

[root@centos-7-51 ~]# dig -t A nginx-web-1.my-svc.apps.svc.cluster.local @10.96.0.10 +short

172.16.3.105
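Because these per-Pod names are stable, other workloads can address a specific replica directly. The sketch below is a hypothetical one-off client Pod (the name dns-client is an assumption) that requests the /health path of nginx-web-0, the same path the readiness probe above uses.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-client                 # hypothetical name
  namespace: apps
spec:
  restartPolicy: Never
  containers:
  - name: client
    image: busybox
    # <pod-name>.<headless-service>.<namespace>.svc.cluster.local always points at this replica
    command: ["wget", "-qO-", "http://nginx-web-0.my-svc.apps.svc.cluster.local/health"]
```

The response could then be read with kubectl -n apps logs dns-client.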

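6.3.2. Scaling

With the default policy (OrderedReady), a StatefulSet starts and stops Pods strictly in order: new Pods are created in ascending ordinal order and removed in descending ordinal order, and the next Pod is created only after the previous one is Running and Ready. A readiness probe should therefore normally be configured on the Pods so that this ordering is strictly enforced.

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"replicas":5}}' # increase the replica count

[root@duduniao ~]# kubectl get pod -n apps -w # watch the start order: the next Pod starts only after the previous one is Ready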

NAME READY STATUS RESTARTS AGE

nginx-web-0 1/1 Running 2 10h

nginx-web-1 1/1 Running 2 10h

nginx-web-2 0/1 Pending 0 0s

nginx-web-2 0/1 Pending 0 0s

nginx-web-2 0/1 Pending 0 1s

nginx-web-2 0/1 ContainerCreating 0 2s

nginx-web-2 0/1 Running 0 3s

nginx-web-2 1/1 Running 0 5s

nginx-web-3 0/1 Pending 0 0s

nginx-web-3 0/1 Pending 0 0s

nginx-web-3 0/1 Pending 0 1s

nginx-web-3 0/1 ContainerCreating 0 2s

nginx-web-3 0/1 Running 0 3s

nginx-web-3 1/1 Running 0 9s

nginx-web-4 0/1 Pending 0 0s

nginx-web-4 0/1 Pending 0 0s

nginx-web-4 0/1 Pending 0 1s

nginx-web-4 0/1 ContainerCreating 0 2s

nginx-web-4 0/1 Running 0 3s

nginx-web-4 1/1 Running 0 7s

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"replicas":2}}' # decrease the replica count

[root@duduniao ~]# kubectl get pod -n apps -w # watch the stop order: Pods stop in descending ordinal order

NAME READY STATUS RESTARTS AGE

nginx-web-0 1/1 Running 2 10h

nginx-web-1 1/1 Running 2 10h

nginx-web-2 1/1 Running 0 117s

nginx-web-3 1/1 Running 0 112s

nginx-web-4 1/1 Running 0 103s

nginx-web-4 1/1 Terminating 0 109s

nginx-web-4 0/1 Terminating 0 110s

nginx-web-4 0/1 Terminating 0 111s

nginx-web-4 0/1 Terminating 0 111s

nginx-web-4 0/1 Terminating 0 111s

nginx-web-3 1/1 Terminating 0 2m

nginx-web-3 0/1 Terminating 0 2m

nginx-web-3 0/1 Terminating 0 2m1s

nginx-web-3 0/1 Terminating 0 2m1s

nginx-web-2 1/1 Terminating 0 2m7s

nginx-web-2 0/1 Terminating 0 2m7s

nginx-web-2 0/1 Terminating 0 2m13s

nginx-web-2 0/1 Terminating 0 2m13s

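6.3.3. Rolling updates

A rolling update proceeds in descending ordinal order. A partition can be specified: with partition N, only Pods whose ordinal is greater than or equal to N are updated. The default partition is 0, which means all Pods are updated.

[root@duduniao local-k8s-yaml]# kubectl -n apps set image sts nginx-web nginx-web=linuxduduniao/nginx:v1.0.1

[root@duduniao ~]# kubectl get pod -n apps -w # note that all Pods are updated in descending order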

NAME READY STATUS RESTARTS AGE

nginx-web-0 1/1 Running 2 10h

nginx-web-1 1/1 Running 2 10h

nginx-web-1 1/1 Terminating 2 10h

nginx-web-1 0/1 Terminating 2 10h

nginx-web-1 0/1 Terminating 2 10h

nginx-web-1 0/1 Terminating 2 10h

nginx-web-1 0/1 Pending 0 0s

nginx-web-1 0/1 Pending 0 0s

nginx-web-1 0/1 ContainerCreating 0 0s

nginx-web-1 0/1 Running 0 22s

nginx-web-1 1/1 Running 0 27s

nginx-web-0 1/1 Terminating 2 10h

nginx-web-0 0/1 Terminating 2 10h

nginx-web-0 0/1 Terminating 2 10h

nginx-web-0 0/1 Terminating 2 10h

nginx-web-0 0/1 Pending 0 0s

nginx-web-0 0/1 Pending 0 0s

nginx-web-0 0/1 ContainerCreating 0 0s

nginx-web-0 0/1 ErrImagePull 0 29s

nginx-web-0 0/1 ImagePullBackOff 0 44s

nginx-web-0 0/1 Running 0 62s

nginx-web-0 1/1 Running 0 70s

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"replicas":5}}' # scale to 5 replicas for the demo

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":3}}}}' # set the update partition to 3

[root@duduniao local-k8s-yaml]# kubectl -n apps set image sts nginx-web nginx-web=linuxduduniao/nginx:v1.0.2 # start the update

[root@duduniao ~]# kubectl get pod -n apps -w # only the Pods with ordinals 4 and 3 are updated

NAME READY STATUS RESTARTS AGE

nginx-web-0 1/1 Running 0 6m31s

nginx-web-1 1/1 Running 0 7m12s

nginx-web-2 1/1 Running 0 3m38s

nginx-web-3 1/1 Running 0 3m33s

nginx-web-4 1/1 Running 0 3m23s

nginx-web-4 1/1 Terminating 0 3m25s

nginx-web-4 0/1 Terminating 0 3m26s

nginx-web-4 0/1 Terminating 0 3m32s

nginx-web-4 0/1 Terminating 0 3m32s

nginx-web-4 0/1 Pending 0 0s

nginx-web-4 0/1 Pending 0 0s

nginx-web-4 0/1 ContainerCreating 0 0s

nginx-web-4 0/1 Running 0 10s

nginx-web-4 1/1 Running 0 17s

nginx-web-3 1/1 Terminating 0 3m59s

nginx-web-3 0/1 Terminating 0 4m

nginx-web-3 0/1 Terminating 0 4m1s

nginx-web-3 0/1 Terminating 0 4m1s

nginx-web-3 0/1 Pending 0 0s

nginx-web-3 0/1 Pending 0 0s

nginx-web-3 0/1 ContainerCreating 0 0s

nginx-web-3 0/1 Running 0 22s

nginx-web-3 1/1 Running 0 25s

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":0}}}}' # change the partition to 0

[root@duduniao ~]# kubectl get pod -n apps -w # the remaining three Pods are updated

NAME READY STATUS RESTARTS AGE

nginx-web-0 1/1 Running 0 9m10s

nginx-web-1 1/1 Running 0 9m51s

nginx-web-2 1/1 Running 0 6m17s

nginx-web-3 1/1 Running 0 2m11s

nginx-web-4 1/1 Running 0 2m30s

nginx-web-2 1/1 Terminating 0 6m24s

nginx-web-2 0/1 Terminating 0 6m24s

nginx-web-2 0/1 Terminating 0 6m25s

nginx-web-2 0/1 Terminating 0 6m25s

nginx-web-2 0/1 Pending 0 0s

nginx-web-2 0/1 Pending 0 0s

nginx-web-2 0/1 ContainerCreating 0 0s

nginx-web-2 0/1 Running 0 1s

nginx-web-2 1/1 Running 0 9s

nginx-web-1 1/1 Terminating 0 10m

nginx-web-1 0/1 Terminating 0 10m

nginx-web-1 0/1 Terminating 0 10m

nginx-web-1 0/1 Terminating 0 10m

nginx-web-1 0/1 Pending 0 0s

nginx-web-1 0/1 Pending 0 0s

nginx-web-1 0/1 ContainerCreating 0 0s

nginx-web-1 0/1 Running 0 1s

nginx-web-1 1/1 Running 0 8s

nginx-web-0 1/1 Terminating 0 9m47s

nginx-web-0 0/1 Terminating 0 9m48s

nginx-web-0 0/1 Terminating 0 10m

nginx-web-0 0/1 Terminating 0 10m

nginx-web-0 0/1 Pending 0 0s

nginx-web-0 0/1 Pending 0 0s

nginx-web-0 0/1 ContainerCreating 0 0s

nginx-web-0 0/1 Running 0 1s

nginx-web-0 1/1 Running 0 9s

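6.3.4. Deleting a StatefulSet

A StatefulSet involves three kinds of resources: the StatefulSet itself, the PVs/PVCs, and the Service. The Service and the PVs/PVCs must be deleted manually.

When deleting a StatefulSet, it is usually recommended to first scale its replicas to 0 so that the Pods stop in order, then delete the StatefulSet, and finally decide whether the Service and the storage volumes should be deleted as well!

kubectl delete also accepts --cascade=false, which deletes the StatefulSet but leaves its Pods running; this is rarely used. Since kubectl v1.20 the equivalent spelling is --cascade=orphan, and false is deprecated.

[root@duduniao local-k8s-yaml]# kubectl -n apps patch sts nginx-web -p '{"spec":{"replicas":0}}' # stop the Pods in order

[root@duduniao local-k8s-yaml]# kubectl delete -n apps sts nginx-web # delete the StatefulSet

[root@duduniao local-k8s-yaml]# kubectl delete svc -n apps my-svc # delete the Service

[root@duduniao local-k8s-yaml]# kubectl get pv -n apps # PVs are cluster-scoped, so -n has no effect here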

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-1461c537-e784-41fd-9e78-b1e6b212912c 2Gi RWX Delete Bound apps/nginx-web-nginx-web-1 managed-nfs-storage 11h

pvc-277cff8f-c5e6-43fc-9498-ddc9ba788cb9 2Gi RWO Delete Bound apps/nginx-web-nginx-web-4 managed-nfs-storage 30m

pvc-7d1a1169-6e34-4331-9549-f2f4d2bf6f94 2Gi RWO Delete Bound apps/nginx-web-nginx-web-3 managed-nfs-storage 30m

pvc-b36b90f1-a268-4815-b55b-6f7fda587193 2Gi RWX Delete Bound apps/nginx-web-nginx-web-0 managed-nfs-storage 11h

pvc-d804a299-95e1-486c-a9cd-73c925ed21d6 2Gi RWO Delete Bound apps/nginx-web-nginx-web-2 managed-nfs-storage 31m

[root@duduniao local-k8s-yaml]# kubectl get pvc -n apps # even after the Pods are deleted, the PVs and PVCs remain Bound and must be released manually

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-web-nginx-web-0 Bound pvc-b36b90f1-a268-4815-b55b-6f7fda587193 2Gi RWX managed-nfs-storage 11h

nginx-web-nginx-web-1 Bound pvc-1461c537-e784-41fd-9e78-b1e6b212912c 2Gi RWX managed-nfs-storage 11h

nginx-web-nginx-web-2 Bound pvc-d804a299-95e1-486c-a9cd-73c925ed21d6 2Gi RWO managed-nfs-storage 31m

nginx-web-nginx-web-3 Bound pvc-7d1a1169-6e34-4331-9549-f2f4d2bf6f94 2Gi RWO managed-nfs-storage 31m

nginx-web-nginx-web-4 Bound pvc-277cff8f-c5e6-43fc-9498-ddc9ba788cb9 2Gi RWO managed-nfs-storage 30m

[root@duduniao local-k8s-yaml]# kubectl get pvc -n apps | awk '/nginx-web-nginx-web/{print "kubectl -n apps delete pvc", $1}'|bash
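On newer clusters this manual PVC cleanup can be automated. The sketch below assumes Kubernetes v1.27 or later, where the persistentVolumeClaimRetentionPolicy field is enabled by default; it does not exist on the v1.18 cluster used here. Only the relevant part of the StatefulSet spec is shown.

```yaml
# Fragment of a StatefulSet spec; assumes Kubernetes v1.27+
spec:
  persistentVolumeClaimRetentionPolicy:
    whenDeleted: Delete   # delete the PVCs when the StatefulSet is deleted
    whenScaled: Retain    # keep the PVCs of Pods removed by scaling down
```

Whether the PVs are removed afterwards depends on their reclaim policy, which is Delete for the volumes listed above.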

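6.3.5. General approach to deploying stateful applications

Stateful applications such as MySQL usually form a cluster, and they often need initialization work when they first start, such as copying or importing data, which is typically done with init containers. How to fail over smoothly when something goes wrong must also be considered, and in practice this carries considerable risk (compared with deploying on virtual machines). The general advice is to find the Kubernetes deployment method on the project's official site, usually a Helm chart, and deploy it by configuring a suitable values.yaml. Even then, proceed with caution!

The official documentation provides two examples: deploying a Cassandra cluster and deploying a MySQL cluster. The Helm chart project charts is also recommended for study.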

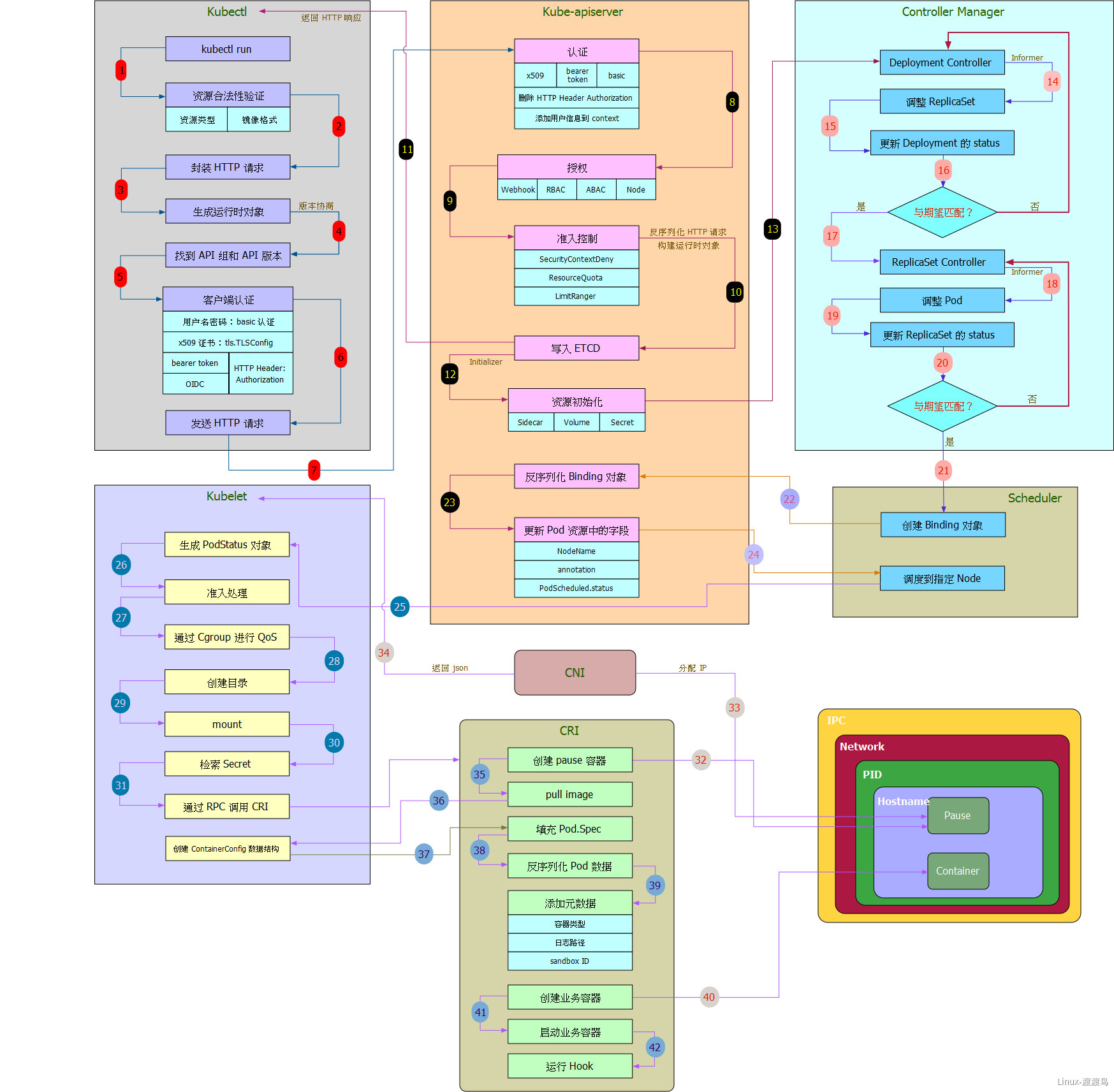

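7. How it works

7.1. How a Deployment is created

Taking kubectl apply -f deployment.yaml as an example, the steps below show how a Deployment is created.

kubectl builds the API request

- kubectl validates the fields in deployment.yaml to make sure the manifest is valid

- kubectl reads the API fields (apiVersion, kind) in deployment.yaml, adds the authentication information from kubeconfig, and builds the Deployment request body

- The assembled request is sent to the API server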

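The API server authenticates, authorizes and stores the request

- The API server verifies the user's identity from the credentials (authentication)

- RBAC authorization confirms that the user is allowed to perform the operation on the resource

- The HTTP request body is deserialized into the resource object

- Admission control runs, which some advanced Kubernetes features rely on (see the documentation). Mutating admission plugins and webhooks may modify the object at this point, for example to inject sidecar containers or certificates; validating admission then checks the result

- The object is persisted to etcd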

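The controller manager creates the resource objects

- The Deployment controller, watching through the API server, discovers a Deployment that needs to be created

- The Deployment controller queries the API server for a matching ReplicaSet; if none matches, it creates a new ReplicaSet and sets its revision number

- The ReplicaSet controller discovers the new ReplicaSet through the API server and checks whether enough matching Pods exist; if not, it creates the Pod objects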

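The scheduler assigns a node

- The scheduler watches the API server for newly created Pods that have no node assigned, and selects a node for each one with its scheduling algorithm

- First, a set of predicate (filtering) policies evaluates the nodes and keeps those the Pod can be scheduled to

- Then priority (scoring) policies score the eligible nodes, and the node with the highest score is chosen to run the Pod; if several nodes tie for the highest score, one is picked at random

- The scheduler creates a Binding object and sends it to the API server; the object contains the Pod's identifying information and the chosen node name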
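For illustration, the Binding in the last step is roughly equivalent to the following object, which the scheduler POSTs to the Pod's binding subresource (pods/<name>/binding). The Pod and node names are placeholders only.

```yaml
# Sketch of the Binding the scheduler submits (names are placeholders)
apiVersion: v1
kind: Binding
metadata:
  name: nginx-deploy-5648cd896-64ktx   # the Pod being bound
  namespace: default
target:
  apiVersion: v1
  kind: Node
  name: centos-7-56                    # the node chosen by the scheduler
```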

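Kubelet initializes the Pod

All of the operations so far only modified data in etcd; the Pod is actually created only at the kubelet step

- Kubelet fetches the list of Pods assigned to its node from the API server and compares it with its local cache; a new Pod enters the creation flow

- Kubelet generates a PodStatus object and fills in the Pod phase (Pending, Running, Succeeded, Failed, Unknown)

- Kubelet checks whether the Pod passes its admission checks; if not, the Pod stays Pending

- If the Pod has resource limits, they are enforced through cgroups

- Kubelet creates the Pod's directories and mounts the volume directories

- Kubelet retrieves the imagePullSecrets used to pull images

- Kubelet calls the container runtime through the CRI to create the containers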

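The CRI runtime creates the containers

- Create the pause container, which holds the network namespace shared by the Pod's other containers (and the PID namespace, when shareProcessNamespace is enabled). This is what enables network sharing and, with a shared PID namespace, reaping of zombie processes

- The Docker runtime calls the CNI plugin to allocate an IP address from the IP pool to the pause container

- Pull the application images; if imagePullSecrets are set, the specified credentials are used to pull them

- Mount ConfigMaps into the containers

- Fill in the Pod metadata

- Start the containers, watch container events, and run the corresponding hooks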

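8. Scheduling

8.1. The scheduler

In Kubernetes, scheduling means assigning a suitable worker node to a Pod. This is done by the scheduler component; the result is written to etcd through the API server, and kubelet then starts the Pod. Scheduling happens in two phases:

- Predicates (filtering): find the feasible nodes. Based on the Pod spec, select every node in the cluster that satisfies the Pod's requirements; these are called feasible nodes.

- Priorities (scoring): score the feasible nodes and pick the one with the highest score; if several nodes tie for the highest score, one is chosen at random.

The scheduling policies can be changed through kube-scheduler's configuration, but this is rarely done.

kube-scheduler has a setting percentageOfNodesToScore whose value ranges from 0 to 100: 0 means use the default, and values above 100 are treated as 100. With a value of N and a cluster of M nodes, once N*M*0.01 feasible nodes have been found the scheduler stops running the filtering functions and moves straight to scoring, which avoids checking too many nodes and hurting performance. By default the scheduler computes the value itself: 50% in a 100-node cluster and 10% in a 5000-node cluster, with a lower bound of 5%. In addition, there is a hard-coded minimum of 50 feasible nodes that cannot be adjusted. According to the official documentation, tuning percentageOfNodesToScore has no noticeable effect in clusters with a few hundred nodes or fewer.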
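A minimal sketch of setting this value explicitly, assuming a cluster recent enough (v1.25+) for the kubescheduler.config.k8s.io/v1 API; the v1.18 cluster in this document would use v1alpha2 instead. The kubeconfig path is the kubeadm default and is an assumption. The file would be passed to the scheduler with kube-scheduler --config=<file>.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: /etc/kubernetes/scheduler.conf   # kubeadm default path (assumption)
# stop filtering once feasible nodes reach 30% of the cluster (subject to the built-in minimum)
percentageOfNodesToScore: 30
```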

8.2. Node selectors

# for this demo the cluster was expanded to three worker nodes, which makes the effect more obvious

[root@centos-7-51 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

centos-7-51 Ready master 10d v1.18.12

centos-7-52 Ready master 10d v1.18.12

centos-7-53 Ready master 10d v1.18.12

centos-7-54 Ready worker 10d v1.18.12

centos-7-55 Ready worker 10d v1.18.12

centos-7-56 Ready worker 7m58s v1.18.12

[root@centos-7-51 ~]# kubectl label node centos-7-54 ssd=true # label the nodes so they can be told apart

[root@centos-7-51 ~]# kubectl label node centos-7-55 ssd=true

[root@centos-7-51 ~]# kubectl label node centos-7-54 cpu=high

[root@centos-7-51 ~]# kubectl label node centos-7-56 cpu=high

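There are two ways to select a node: set nodeName directly, or use nodeSelector to select nodes by label. nodeName binds the Pod to the named node directly, bypassing the scheduler:

- Specifying nodeName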

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      nodeName: centos-7-56
```

[root@centos-7-51 ~]# kubectl get pod -o wide # all Pods are placed on centos-7-56

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5648cd896-64ktx 1/1 Running 0 5m54s 172.16.5.11 centos-7-56 <none> <none>

nginx-deploy-5648cd896-fgx75 1/1 Running 0 5m54s 172.16.5.13 centos-7-56 <none> <none>

nginx-deploy-5648cd896-fvrlq 1/1 Running 0 5m54s 172.16.5.12 centos-7-56 <none> <none>

nginx-deploy-5648cd896-hzljl 1/1 Running 0 5m54s 172.16.5.15 centos-7-56 <none> <none>

nginx-deploy-5648cd896-qwrb5 1/1 Running 0 5m54s 172.16.5.14 centos-7-56 <none> <none>

- Using nodeSelector

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      nodeSelector:
        ssd: "true"
        cpu: high
```

[root@centos-7-51 ~]# kubectl get pod -o wide # multiple nodeSelector entries are ANDed

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-6d5b594bf5-b7s68 1/1 Running 0 14s 172.16.3.134 centos-7-54 <none> <none>

nginx-deploy-6d5b594bf5-kv5kn 1/1 Running 0 14s 172.16.3.132 centos-7-54 <none> <none>

nginx-deploy-6d5b594bf5-sxsgv 1/1 Running 0 11s 172.16.3.135 centos-7-54 <none> <none>

nginx-deploy-6d5b594bf5-t2p8n 1/1 Running 0 11s 172.16.3.136 centos-7-54 <none> <none>

nginx-deploy-6d5b594bf5-xrrhp 1/1 Running 0 14s 172.16.3.133 centos-7-54 <none> <none>

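8.3. Node affinity

Node affinity comes in two forms, required (hard affinity) and preferred (soft affinity). In both, IgnoredDuringExecution means that a Pod already running is not evicted if the node's labels change later:

Required: requiredDuringSchedulingIgnoredDuringExecution

- If a node matches, the Pod is scheduled to it (example 1)

- If no node matches, the Pod stays Pending (example 2)

Preferred: preferredDuringSchedulingIgnoredDuringExecution

- If no node matches, the Pod is scheduled to other nodes (example 3)

- If a node matches, it is preferred: it receives a higher score, but the Pod is not guaranteed to be placed there (example 4)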
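One detail the examples below do not show: entries in nodeSelectorTerms are ORed, while the expressions inside a single matchExpressions list are ANDed. The fragment below is a sketch that reuses the ssd and cpu labels from section 8.2. It accepts a node that has ssd=true, or one that has cpu=high and no ssd label, and among those it prefers nodes with cpu=high.

```yaml
# Fragment of a Pod template spec
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:          # terms are ORed
      - matchExpressions:         # expressions within one term are ANDed
        - key: ssd
          operator: In
          values: ["true"]
      - matchExpressions:
        - key: cpu
          operator: In
          values: ["high"]
        - key: ssd
          operator: DoesNotExist
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 50                  # 1-100, added to the score of matching nodes
      preference:
        matchExpressions:
        - key: cpu
          operator: In
          values: ["high"]
```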

- Example 1

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: ssd
                operator: DoesNotExist
              - key: cpu
                operator: In
                values: ["high"]
```

[root@duduniao ~]# kubectl get pod -o wide # nodes with no ssd label and with cpu=high

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-6f8b6d748c-4pt2x 1/1 Running 0 8s 172.16.5.20 centos-7-56 <none> <none>

nginx-deploy-6f8b6d748c-m9kb4 1/1 Running 0 8s 172.16.5.19 centos-7-56 <none> <none>

nginx-deploy-6f8b6d748c-st8mw 1/1 Running 0 5s 172.16.5.22 centos-7-56 <none> <none>

nginx-deploy-6f8b6d748c-w4mc9 1/1 Running 0 5s 172.16.5.21 centos-7-56 <none> <none>

nginx-deploy-6f8b6d748c-wjvxx 1/1 Running 0 8s 172.16.5.18 centos-7-56 <none> <none>

- Example 2

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: ssd
                operator: DoesNotExist
              # changed here: cpu must not be high
              - key: cpu
                operator: NotIn
                values: ["high"]
```

[root@duduniao ~]# kubectl describe pod nginx-deploy-746f88c86-96dbp # note the Message in Events

Name: nginx-deploy-746f88c86-96dbp

Namespace: default

Priority: 0

Node: <none>

......

Status: Pending

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 30s (x3 over 32s) default-scheduler 0/6 nodes are available: 3 node(s) didn't match node selector, 3 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.

- Example 3

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              # no schedulable worker node matches these expressions
              - key: ssd
                operator: DoesNotExist
              - key: cpu
                operator: NotIn
                values: ["high"]
            weight: 5
```

[root@duduniao ~]# kubectl get pod -o wide # no node matches, so the Pods are spread across the worker nodes

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5b9b5b66bc-5zrps 1/1 Running 0 31s 172.16.3.153 centos-7-54 <none> <none>

nginx-deploy-5b9b5b66bc-brwgb 1/1 Running 0 31s 172.16.4.249 centos-7-55 <none> <none>

nginx-deploy-5b9b5b66bc-f49j9 1/1 Running 0 31s 172.16.5.23 centos-7-56 <none> <none>

nginx-deploy-5b9b5b66bc-jnh45 1/1 Running 0 30s 172.16.4.250 centos-7-55 <none> <none>

nginx-deploy-5b9b5b66bc-njft8 1/1 Running 0 29s 172.16.3.154 centos-7-54 <none> <none>

- Example 4

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          # only centos-7-56 matches. Not every Pod is placed there, but the node
          # gets a higher score; raising weight strengthens the preference
          - preference:
              matchExpressions:
              - key: ssd
                operator: DoesNotExist
              - key: cpu
                operator: In
                values:
                - high
            weight: 10
```

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5996df99f8-4cmfv 1/1 Running 0 7s 172.16.4.4 centos-7-55 <none> <none>

nginx-deploy-5996df99f8-77k5l 1/1 Running 0 7s 172.16.5.38 centos-7-56 <none> <none>

nginx-deploy-5996df99f8-8kxvc 1/1 Running 0 7s 172.16.5.37 centos-7-56 <none> <none>

nginx-deploy-5996df99f8-t55hj 1/1 Running 0 7s 172.16.3.161 centos-7-54 <none> <none>

nginx-deploy-5996df99f8-zbpf2 1/1 Running 0 7s 172.16.5.39 centos-7-56 <none> <none>

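8.4. Pod affinity

Pod affinity is similar to node affinity and is likewise divided into required (hard) and preferred (soft) affinity. Instead of node labels, it matches the labels of Pods that are already running: the new Pod is placed in the same topology domain (the set of nodes sharing the same value of the topologyKey label) as the Pods selected by labelSelector.

- Example 1 (required affinity)

[root@duduniao local-k8s-yaml]# kubectl label node centos-7-55 cpu=slow # now all three worker nodes have a cpu label: centos-7-55 has cpu=slow, the others cpu=high

[root@duduniao local-k8s-yaml]# kubectl get pod -l app=nginx -o wide # to make the effect clear, nginx-deploy was scaled down to 1 replica; it currently runs on centos-7-56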

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-cfbbb7cbd-jb4xz 1/1 Running 0 13m 172.16.5.40 centos-7-56 <none> <none>

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slb-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: slb
  template:
    metadata:
      labels:
        app: slb
    spec:
      containers:
      - name: slb-demo
        image: linuxduduniao/nginx:v1.0.1
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          # both centos-7-54 and centos-7-56 qualify
          - labelSelector:
              matchLabels:
                app: nginx
            namespaces: [default]
            topologyKey: cpu
```

[root@duduniao local-k8s-yaml]# kubectl get pod -l app=slb -o wide # centos-7-55 does not have cpu=high, so no Pod is scheduled there

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

slb-deploy-7d9b6c47d-drjls 1/1 Running 0 4m19s 172.16.3.165 centos-7-54 <none> <none>

slb-deploy-7d9b6c47d-dsw7t 1/1 Running 0 4m19s 172.16.5.43 centos-7-56 <none> <none>

slb-deploy-7d9b6c47d-fn6k2 1/1 Running 0 4m19s 172.16.5.44 centos-7-56 <none> <none>

slb-deploy-7d9b6c47d-kw9vh 1/1 Running 0 4m19s 172.16.3.166 centos-7-54 <none> <none>

slb-deploy-7d9b6c47d-pl6lz 1/1 Running 0 4m19s 172.16.5.45 centos-7-56 <none> <none>

- Example 2 (preferred affinity)

[root@duduniao local-k8s-yaml]# kubectl label node centos-7-54 cpu=slow --overwrite # leaves centos-7-56 as the only node with cpu=high

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slb-deploy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: slb
  template:
    metadata:
      labels:
        app: slb
    spec:
      containers:
      - name: slb-demo
        image: linuxduduniao/nginx:v1.0.1
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              labelSelector:
                matchLabels:
                  app: nginx
              namespaces: [default]
              topologyKey: cpu
            weight: 10
```

[root@duduniao local-k8s-yaml]# kubectl get pod -l app=slb -o wide # most Pods go to centos-7-56, but not all of them

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

slb-deploy-6b5fc7bd96-78cj4 1/1 Running 0 16s 172.16.5.48 centos-7-56 <none> <none>

slb-deploy-6b5fc7bd96-clchc 1/1 Running 0 18s 172.16.3.167 centos-7-54 <none> <none>

slb-deploy-6b5fc7bd96-fxwjf 1/1 Running 0 16s 172.16.5.47 centos-7-56 <none> <none>

slb-deploy-6b5fc7bd96-ls8zr 1/1 Running 0 18s 172.16.5.46 centos-7-56 <none> <none>

slb-deploy-6b5fc7bd96-zn9t8 1/1 Running 0 18s 172.16.4.5 centos-7-55 <none> <none>
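The inverse, podAntiAffinity, keeps Pods apart. A common use, sketched below under the assumption that each replica should run on a different node, sets topologyKey to the built-in kubernetes.io/hostname label so that every node is its own topology domain. With the required form, replicas beyond the number of eligible nodes would stay Pending (with 5 replicas and the three worker nodes here, two would remain Pending).

```yaml
# Fragment of the slb-deploy Pod template spec
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchLabels:
          app: slb                 # avoid nodes already running an slb Pod
      topologyKey: kubernetes.io/hostname
```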

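8.5. Taints and tolerations

- Managing taints on nodes

Usage:

Add a taint: kubectl taint node <node_name> key=value:effect

Remove a taint: kubectl taint node <node_name> key=value:effect-

View taints: kubectl describe node <node_name>

effect:

PreferNoSchedule: avoid scheduling Pods onto the node if possible, but allow it when no other node fits

NoSchedule: no new Pods are scheduled onto the node; Pods already running there are not evicted

NoExecute: no new Pods are scheduled onto the node, and Pods already running there that do not tolerate the taint are evicted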
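For NoExecute, a toleration can also limit how long an already running Pod may stay after the taint is added. The fragment below is a sketch that reuses the monitor=true taint from this section; the 3600-second value is an arbitrary assumption.

```yaml
# Fragment of a Pod spec
tolerations:
- key: monitor
  operator: Equal
  value: "true"
  effect: NoExecute
  tolerationSeconds: 3600   # the Pod is evicted 1 hour after the taint is added
```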

# view the taints on a master node

[root@duduniao local-k8s-yaml]# kubectl describe node centos-7-51

Name: centos-7-51

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=centos-7-51

kubernetes.io/os=linux

node-role.kubernetes.io/master=

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"52:8a:0e:48:b4:92"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 10.4.7.51

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Fri, 04 Dec 2020 21:49:43 +0800

Taints: node-role.kubernetes.io/master:NoSchedule # new Pods are not scheduled here unless they tolerate this taint

......

# sample Deployment used in this section

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-demo
        image: linuxduduniao/nginx:v1.0.0
```

# after applying the Deployment above with kubectl apply, the Pods are spread across three different nodes

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-8697d45cb8-4x564 1/1 Running 0 4m9s 172.16.4.9 centos-7-55 <none> <none>

nginx-deploy-8697d45cb8-bxms4 1/1 Running 0 4m9s 172.16.5.55 centos-7-56 <none> <none>

nginx-deploy-8697d45cb8-c4rbf 1/1 Running 0 4m9s 172.16.3.171 centos-7-54 <none> <none>

nginx-deploy-8697d45cb8-hvs92 1/1 Running 0 4m9s 172.16.3.172 centos-7-54 <none> <none>

nginx-deploy-8697d45cb8-sbfvj 1/1 Running 0 4m9s 172.16.5.54 centos-7-56 <none> <none>

nginx-deploy-8697d45cb8-sw5m4 1/1 Running 0 4m9s 172.16.4.10 centos-7-55 <none> <none>

# a NoSchedule taint does not cause existing Pods to be rescheduled

[root@duduniao local-k8s-yaml]# kubectl taint node centos-7-54 monitor=true:NoSchedule

node/centos-7-54 tainted

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-8697d45cb8-4x564 1/1 Running 0 5m18s 172.16.4.9 centos-7-55 <none> <none>

nginx-deploy-8697d45cb8-bxms4 1/1 Running 0 5m18s 172.16.5.55 centos-7-56 <none> <none>

nginx-deploy-8697d45cb8-c4rbf 1/1 Running 0 5m18s 172.16.3.171 centos-7-54 <none> <none>

nginx-deploy-8697d45cb8-hvs92 1/1 Running 0 5m18s 172.16.3.172 centos-7-54 <none> <none>

nginx-deploy-8697d45cb8-sbfvj 1/1 Running 0 5m18s 172.16.5.54 centos-7-56 <none> <none>

nginx-deploy-8697d45cb8-sw5m4 1/1 Running 0 5m18s 172.16.4.10 centos-7-55 <none> <none>

# after the Deployment is updated, new Pods are no longer scheduled onto the NoSchedule node

[root@duduniao local-k8s-yaml]# kubectl set image deployment nginx-deploy nginx-demo=linuxduduniao/nginx:v1.0.1

deployment.apps/nginx-deploy image updated

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-8494c5b6c5-5ddd9 1/1 Running 0 8s 172.16.4.13 centos-7-55 <none> <none>

nginx-deploy-8494c5b6c5-5zdjg 1/1 Running 0 10s 172.16.5.56 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-bqpgn 1/1 Running 0 10s 172.16.5.57 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-dq44w 1/1 Running 0 9s 172.16.4.12 centos-7-55 <none> <none>

nginx-deploy-8494c5b6c5-pnvw6 1/1 Running 0 8s 172.16.5.58 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-xnf77 1/1 Running 0 10s 172.16.4.11 centos-7-55 <none> <none>

# after the NoExecute taint is set, the existing Pods are evicted

[root@duduniao local-k8s-yaml]# kubectl taint node centos-7-55 monitor=true:NoExecute

node/centos-7-55 tainted

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-8494c5b6c5-5zdjg 1/1 Running 0 3m8s 172.16.5.56 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-bqpgn 1/1 Running 0 3m8s 172.16.5.57 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-bzc2c 1/1 Running 0 14s 172.16.5.60 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-f7k2b 1/1 Running 0 14s 172.16.5.62 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-pnvw6 1/1 Running 0 3m6s 172.16.5.58 centos-7-56 <none> <none>

nginx-deploy-8494c5b6c5-s57tv 1/1 Running 0 14s 172.16.5.61 centos-7-56 <none> <none>

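- Pod tolerations

# kube-apiserver tolerates every NoExecute taint, so it is never evicted. It runs on the master despite the NoSchedule taint because it is a static Pod created directly by kubelet rather than placed by the scheduler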

[root@duduniao local-k8s-yaml]# kubectl describe pod -n kube-system kube-apiserver-centos-7-51

......

Tolerations: :NoExecute

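# after removing the taints from all nodes, run the following to simulate deploying Prometheus (Prometheus uses a lot of memory, so it is best deployed on a dedicated node)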

[root@duduniao local-k8s-yaml]# kubectl label node centos-7-56 prometheus=true

node/centos-7-56 labeled

[root@duduniao local-k8s-yaml]# kubectl taint node centos-7-56 monitor=true:NoSchedule

node/centos-7-56 tainted

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus-demo
        image: linuxduduniao/nginx:v1.0.1
      nodeSelector:
        prometheus: "true"
      tolerations:
      - key: monitor
        operator: Exists
        effect: NoSchedule
```

# the taint keeps other Pods off the node and the node selector pins Prometheus to it, so Prometheus gets a dedicated node

[root@duduniao local-k8s-yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

prometheus-76f64854b7-sxdq9 1/1 Running 0 2m21s 172.16.5.71 centos-7-56 <none> <none>

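8.6. Pod eviction

Before upgrading or removing a node, the Pods on it must be evicted, and new Pods must be kept from being scheduled onto it. This is usually done with kubectl drain <node_name> [options], which first cordons the node (marks it unschedulable) and then evicts its Pods.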
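kubectl drain evicts Pods through the Eviction API, which honors PodDisruptionBudgets. As a sketch, the following PDB (the name and the minAvailable value are assumptions) would keep at least 4 of the 6 nginx-deploy replicas from section 8.5 running while a node is drained. policy/v1 requires Kubernetes v1.21+; the v1.18 cluster here would use policy/v1beta1.

```yaml
apiVersion: policy/v1          # policy/v1beta1 before v1.21
kind: PodDisruptionBudget
metadata:
  name: nginx-pdb              # hypothetical name
spec:
  minAvailable: 4
  selector:
    matchLabels:
      app: nginx               # matches the nginx-deploy Pods
```

A typical drain would then be kubectl drain centos-7-54 --ignore-daemonsets, followed by kubectl uncordon centos-7-54 once maintenance is finished.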