Background

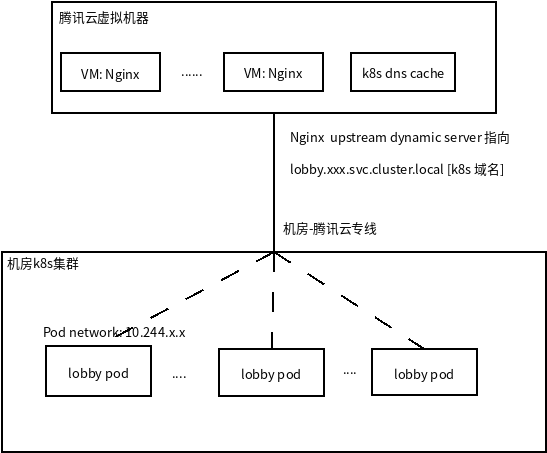

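Recently I have been preparing to release an image recognition service with a throughput of 20,000 ops on k8s. The service's external Nginx entry point is deployed on several Tencent Cloud virtual machines, while the backend compute service runs on k8s instances in our own data center. The Tencent Cloud VMs and the company data center are connected by a dedicated line (see the previous article).

When the k8s instances go through a rolling update, nginx-upstream-dynamic-servers discovers the latest instances through DNS, and whenever the instances change it automatically reloads and `recreates` new workers. Under heavy traffic, however, updating the backend instances through a k8s rolling update deadlocks nginx: CPU usage reaches 100% and nginx is basically unavailable: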

    #0  0x0000000000437e7e in ngx_rwlock_wlock ()
    #1  0x000000000049e6fd in ngx_http_upstream_free_round_robin_peer ()
    #2  0x00000000004eade3 in ngx_http_upstream_free_keepalive_peer ()
    #3  0x0000000000498820 in ngx_http_upstream_finalize_request ()
    #4  0x0000000000497d14 in ngx_http_upstream_process_request ()
    #5  0x0000000000497a52 in ngx_http_upstream_process_upstream ()
    #6  0x0000000000491f1a in ngx_http_upstream_handler ()
    #7  0x000000000045a8ba in ngx_epoll_process_events ()
    #8  0x000000000044a6b5 in ngx_process_events_and_timers ()
    #9  0x00000000004583ff in ngx_worker_process_cycle ()
    #10 0x0000000000454cfb in ngx_spawn_process ()
    #11 0x000000000045723b in ngx_start_worker_processes ()
    #12 0x0000000000456858 in ngx_master_process_cycle ()
    #13 0x000000000041a519 in main ()

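See: [https://github.com/GUI/nginx-upstream-dynamic-servers/issues/18](https://github.com/GUI/nginx-upstream-dynamic-servers/issues/18).

Tengine can update instances without a reload, but it has a drawback: if the refresh frequency is low, nginx keeps sending requests to a k8s instance after it has shut down, which causes many failed requests during the update. With the [LuaJIT](http://luajit.org/download.html) runtime provided by openresty, the upstream can instead be modified dynamically, with no reload at all.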

Nginx lua upstream dynamic

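Modeled on kube-ingress-nginx, this section builds code that configures the upstream dynamically through kube-dns. The architecture is unchanged: the external nginx resolves the domain name of the headless service to the addresses of all its pods, and load balancing is done by that nginx deployed outside the cluster. Because the pod IPs in the upstream change dynamically, we cannot hard-code them in the upstream. We can only use the service's domain name and let nginx resolve it itself.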

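Periodically fetching the latest service instances

The Lua code stores the k8s service domain names in a shared dictionary, which is visible to all `worker` processes. When each `worker` initializes, it registers a timer callback that periodically fetches the latest service instances from `dns`, rebuilds the load balancer for that service, and saves it in `package.loaded`.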

nginx.conf

    user nginx;
    worker_processes auto;
    worker_cpu_affinity auto;

    http {
        # Shared dictionary that stores the DNS names to resolve
        lua_shared_dict _upstreams_dns 64m;

        # Runs in the master process
        init_by_lua_block {
            local ups = ngx.shared._upstreams_dns
            -- One balancer per service name; workers inherit this table
            package.loaded.rr_balancers = {}
            -- Register one or more service names to resolve; only the key is used.
            -- The key must equal the $proxy_upstream_name set in the server block.
            ups:set("image-lobby-production.cloud.svc.cluster.local", true)
        }

        # Runs in every worker process
        init_worker_by_lua_block {
            local resolver = require("resty.dns.resolver")
            local resty_roundrobin = require("resty.roundrobin")

            local handler = function(premature)
                -- The worker is shutting down; skip the refresh
                if premature then
                    return
                end

                local r, err = resolver:new{
                    -- Tencent Cloud DNS cache servers
                    nameservers = { "10.xx.xx.xx", "10.xx.xx.xx" },
                    retrans = 5,     -- 5 retransmissions on receive timeout
                    timeout = 2000,  -- 2 sec
                }
                if not r then
                    ngx.log(ngx.ERR, "failed to instantiate the resolver: ", err)
                    return
                end

                -- Walk every service name whose instances need refreshing
                local keys = ngx.shared._upstreams_dns:get_keys()
                for _, v in ipairs(keys) do
                    ngx.log(ngx.NOTICE, "query:", v)
                    local answers, qerr = r:query(v, nil, {})
                    if not answers then
                        ngx.log(ngx.ERR, "failed to query the DNS server: ", qerr)
                    elseif answers.errcode then
                        ngx.log(ngx.ERR, "server returned error code: ", answers.errcode, ": ", answers.errstr)
                    else
                        local endpoints, count = {}, 0
                        for _, ans in ipairs(answers) do
                            -- Only A records carry an address (CNAME records do not)
                            if ans.address then
                                endpoints[ans.address] = 1
                                count = count + 1
                                ngx.log(ngx.NOTICE, v, ", resolved:", ans.address)
                            end
                        end
                        ngx.log(ngx.NOTICE, v, ", instance count:", count)
                        -- Rebuild the balancer only when at least one instance was resolved
                        if count > 0 then
                            -- package.loaded is per worker, so each worker keeps its own balancer
                            package.loaded.rr_balancers[v] = resty_roundrobin:new(endpoints)
                        end
                    end
                end
            end

            -- Resolve once at startup
            local ok, err = ngx.timer.at(0, handler)
            if not ok then
                ngx.log(ngx.ERR, "failed to create the timer:", err)
            end

            -- Then refresh every 30 seconds
            ok, err = ngx.timer.every(30, handler)
            if not ok then
                ngx.log(ngx.ERR, "failed to create the timer:", err)
            end
        }
    }
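
The step that turns DNS answers into the weight table for the balancer can be tried outside nginx. The sketch below is plain Lua with no OpenResty dependency. `build_endpoints` is a hypothetical helper name, and the sample `answers` table only imitates the shape of what `resty.dns.resolver` returns (an `address` field on A records, a `cname` field on CNAME records); the addresses are made up.

```lua
-- Build the { [ip] = weight } table from a list of DNS answer records.
-- Records without an `address` field (for example CNAME records) are skipped.
local function build_endpoints(answers)
    local endpoints, count = {}, 0
    for _, ans in ipairs(answers) do
        if ans.address and not endpoints[ans.address] then
            endpoints[ans.address] = 1  -- equal weight for every pod
            count = count + 1
        end
    end
    return endpoints, count
end

-- Hand-written sample data (assumption: not taken from a real cluster).
local answers = {
    { name = "svc.example", cname = "pods.example" },
    { name = "pods.example", address = "10.0.0.1" },
    { name = "pods.example", address = "10.0.0.2" },
    { name = "pods.example", address = "10.0.0.1" },  -- duplicate record
}

local endpoints, count = build_endpoints(answers)
print(count)  -- 2
```

Returning the count alongside the table makes it easy to skip the rebuild when a query yields no usable address, so a failed or empty lookup does not wipe out the previous instance list.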

resty.roundrobin requires the lua-resty-balancer library to be installed; see https://github.com/openresty/lua-resty-balancer.git

    $ git clone https://github.com/openresty/lua-resty-balancer.git
    $ cd lua-resty-balancer
    $ make
    $ cp librestychash.so /usr/local/openresty/lualib/
    $ cp -r lib/resty/* /usr/local/openresty/lualib/resty/

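Modifying the upstream dynamically

In the nginx configuration generated by `kube-ingress-nginx`, every service points to the same `upstream`. The code is as follows:

    upstream upstream_balancer {
        server 0.0.0.1; # placeholder

        # Look up the balancer that matches $proxy_upstream_name, then pick an instance from it by round robin
        balancer_by_lua_block {
            balancer.balance()
        }

        # Number of keepalive connections; works with a Lua-driven upstream too
        keepalive 300;
    }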

Problems a dynamic upstream has to solve

- Support upstream load balancing [round robin for now]

- `max_fails` has to be implemented ourselves
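
Because the Lua balancer replaces nginx's own peer selection, `max_fails`-style bookkeeping has to be rebuilt by hand. Below is a minimal sketch in plain Lua of one way to count failures per peer inside a time window. `new_fail_counter`, `record_failure`, `reset`, and the numbers used are all hypothetical names and values chosen for illustration, not part of OpenResty or of the setup described here.

```lua
-- Per-peer failure counter, loosely modelled on nginx's max_fails / fail_timeout.
local function new_fail_counter(max_fails, fail_timeout)
    local peers = {}  -- [addr] = { fails = n, first = time of first failure in the window }
    local counter = {}

    -- Record one failure at time `now` (seconds). Returns true once the
    -- peer has failed max_fails times within fail_timeout seconds.
    function counter.record_failure(addr, now)
        local p = peers[addr]
        if not p or now - p.first > fail_timeout then
            p = { fails = 0, first = now }  -- start a new window
            peers[addr] = p
        end
        p.fails = p.fails + 1
        return p.fails >= max_fails
    end

    -- Forget a peer, for example after a successful request.
    function counter.reset(addr)
        peers[addr] = nil
    end

    return counter
end

local c = new_fail_counter(2, 10)
print(c.record_failure("10.0.0.1", 0))   -- false
print(c.record_failure("10.0.0.1", 5))   -- true
print(c.record_failure("10.0.0.1", 30))  -- false (the window has expired)
```

Like `package.loaded`, a table of this kind would live in each worker separately, so every worker would reach the threshold on its own.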

Supporting load balancing

Taking the simplest round-robin approach as the example, how do we implement a round-robin load balancer in Lua? The Lua balancer block overrides the balancer IP address written in the configuration file, which lets the upstream be configured dynamically. The code is as follows:

```
upstream upstream_balancer {
    server 0.0.0.1; # placeholder

    # Look up the balancer that matches $proxy_upstream_name, then pick an instance from it by round robin
    balancer_by_lua_block {
        local balancer = require("ngx.balancer")
        local host = ngx.var.proxy_upstream_name
        local port = ngx.var.proxy_upstream_port
        ngx.log(ngx.NOTICE, string.format("proxy upstream peer %s", host))

        -- Balancer object for this domain name
        local rr_balancer = package.loaded.rr_balancers[host]
        if not rr_balancer then
            ngx.log(ngx.ERR, "no balancer for ", host)
            return
        end

        -- Pick the next server IP (the worker timer loaded the IPs into the balancer)
        local server = rr_balancer:find()
        -- Allow one more retry
        balancer.set_more_tries(1)
        -- Point the nginx upstream at the chosen server
        local ok, err = balancer.set_current_peer(server, port)
        if not ok then
            ngx.log(ngx.ERR, string.format("error while setting current upstream peer %s: %s", server, err))
        end
    }

    # Number of keepalive connections; works with a Lua-driven upstream too
    keepalive 300;
}
```

The code above is basically enough to switch the balancer dynamically on every request.

<a name="XkxP7"></a>

#### Automatically ejecting faulty instances

In a high-traffic environment, instances that are faulty or already shut down must be ejected immediately; otherwise request processing time grows. During a rolling update the instances are replaced one by one, and there is an interval between an instance shutting down and nginx refreshing its instance list. If the closed instance is not removed, many requests have to be retried during that interval.

While a container shuts down, nginx logs three different errors, each costing a different amount of request time.

The container's HTTP service is shutting down. Cost: at most the business wait time; with graceful shutdown, only the TCP connection setup time.

```
2020/12/24 12:15:01 [error] 27219#27219: *46546 upstream prematurely closed connection while reading response header from upstream
```

The container's HTTP service has already stopped. Cost: the TCP connection setup time.

```
2020/12/23 15:28:32 [error] 11173#11173: *98909 connect() failed (111: Connection refused) while connecting to upstream
```

The container has been destroyed and its IP released, so the connection times out. Cost: `proxy_connect_timeout`.

```
2020/12/23 20:05:30 [error] 27122#27122: *37302 connect() failed (113: No route to host) while connecting to upstream
```

Without automatic ejection and with `proxy_connect_timeout 8s`, a k8s rolling update of the code produced a large number of errors, the number of requests handled dropped, and the average latency reached 4s.

With the third kind of error the container is gone but nginx has not removed it from the upstream, so the average client request time became very long and we received complaints. The fixes:

- Configure `proxy_connect_timeout`
- Configure the k8s rolling update strategy so that maxUnavailable < proxy_next_upstream_tries
- Configure the nginx retry policy
  - proxy_next_upstream error invalid_header http_502 http_500 non_idempotent;
  - proxy_next_upstream_tries 3;
- Eject closed instances in the Lua upstream
- Support graceful shutdown in the application code [reduces unnecessary retries]

The Lua upstream can use `ngx.ctx` to remember the upstream IP that the current request is using. If the request fails, the next retry ejects that failed instance so that other requests stop using it.

```
upstream upstream_balancer {
    server 0.0.0.1; # placeholder

    # Look up the balancer that matches $proxy_upstream_name, then pick an instance from it by round robin
    balancer_by_lua_block {
        local balancer = require("ngx.balancer")
        local host = ngx.var.proxy_upstream_name
        local port = ngx.var.proxy_upstream_port
        ngx.log(ngx.NOTICE, string.format("proxy upstream peer %s", host))

        -- Balancer object for this domain name
        local rr_balancer = package.loaded.rr_balancers[host]
        if not rr_balancer then
            ngx.log(ngx.ERR, "no balancer for ", host)
            return
        end

        -- Is this a retry? On the first attempt last_upstream_addr is nil
        if ngx.ctx.last_upstream_addr then
            -- 502 means the upstream connection failed; 500 means the upstream
            -- failed while handling the request (an application error).
            -- get_last_failure() reports only the previous attempt, whereas
            -- $upstream_status accumulates the status of every attempt.
            local _, status = balancer.get_last_failure()
            if status == 502 then
                -- Eject only upstreams with connection problems
                ngx.log(ngx.ERR, "last upstream peer failed with 502: ", ngx.ctx.last_upstream_addr, ":", port, ", disabling it")
                rr_balancer:set(ngx.ctx.last_upstream_addr, 0)
            end
        end

        -- Pick the next server IP (the worker timer loaded the IPs into the balancer)
        local server = rr_balancer:find()
        -- Allow one more retry
        balancer.set_more_tries(1)
        -- Remember the upstream used by this attempt
        ngx.ctx.last_upstream_addr = server
        -- Point the nginx upstream at the chosen server
        local ok, err = balancer.set_current_peer(server, port)
        if not ok then
            ngx.log(ngx.ERR, string.format("error while setting current upstream peer %s: %s", server, err))
        end
    }

    # Number of keepalive connections; works with a Lua-driven upstream too
    keepalive 300;
}
```

Health checks are of course an option, but with many backend instances they put heavy pressure on nginx. Ejecting on failure like this costs much less, and it covers the usual case where a container shuts down and its HTTP port stops responding.

After this change, clients basically do not notice rolling updates.

Below is the complete api.conf server configuration. The `upstream upstream_balancer` block is exactly the one shown above; the `server` block that goes with it is:

```
server {
    listen 80 reuseport;
    server_name your-service.com;

    # Image API
    location / {
        client_body_buffer_size 1m; # buffer request bodies of up to 1m in memory

        # Internal k8s service name of the application;
        # it must match a name registered in init_by_lua_block
        set $proxy_upstream_name "image-lobby-prod.<you-name-space>.svc.cluster.local";
        # Port of your application
        set $proxy_upstream_port 8080;

        # When the current upstream fails, retry on the next one; the error types are configurable
        proxy_next_upstream error invalid_header http_502 http_500 non_idempotent;
        # Number of tries
        proxy_next_upstream_tries 3;
        # Connect timeout
        proxy_connect_timeout 5s;

        proxy_pass http://upstream_balancer;

        # for keepalive upstream
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        proxy_redirect off;
    }
}
```
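
The ejection flow described in this section can be exercised without nginx. The following is a sketch in plain Lua under stated assumptions: `new_rr` is a simplified stand-in written for this example (it is not the `resty.roundrobin` implementation and only mimics its `find` and `set` calls for equal weights), `pick` is a hypothetical function playing the role of the balancer block, and a plain table stands in for `ngx.ctx`.

```lua
-- Simplified round-robin stand-in: equal weights, weight 0 removes a peer.
local function new_rr(addrs)
    local list, pos = {}, 0
    for _, a in ipairs(addrs) do list[#list + 1] = a end
    local rr = {}

    -- Return the next peer in order, or nil when none is left.
    function rr:find()
        if #list == 0 then return nil end
        pos = pos % #list + 1
        return list[pos]
    end

    -- Only weight 0 is modelled: it drops the peer from the rotation.
    function rr:set(addr, weight)
        if weight ~= 0 then return end
        for i, a in ipairs(list) do
            if a == addr then
                table.remove(list, i)
                -- shift the cursor so the rotation order is preserved
                if i <= pos then pos = pos - 1 end
                return
            end
        end
    end

    return rr
end

-- One balancer invocation: ctx plays the role of ngx.ctx, and last_status
-- is the status code of the previous attempt (nil on the first try).
local function pick(rr, ctx, last_status)
    if ctx.last_upstream_addr and last_status == 502 then
        rr:set(ctx.last_upstream_addr, 0)  -- eject the peer that just failed
    end
    local server = rr:find()
    ctx.last_upstream_addr = server
    return server
end

local rr = new_rr({ "10.0.0.1", "10.0.0.2", "10.0.0.3" })
local ctx = {}
local first = pick(rr, ctx, nil)   -- first attempt of a request
local second = pick(rr, ctx, 502)  -- retry after `first` answered with 502
print(first, second)               -- 10.0.0.1 is tried first, then 10.0.0.2
```

After the retry, later requests that start with a fresh `ctx` rotate only between the two remaining addresses until the next DNS refresh rebuilds the list.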