Loki

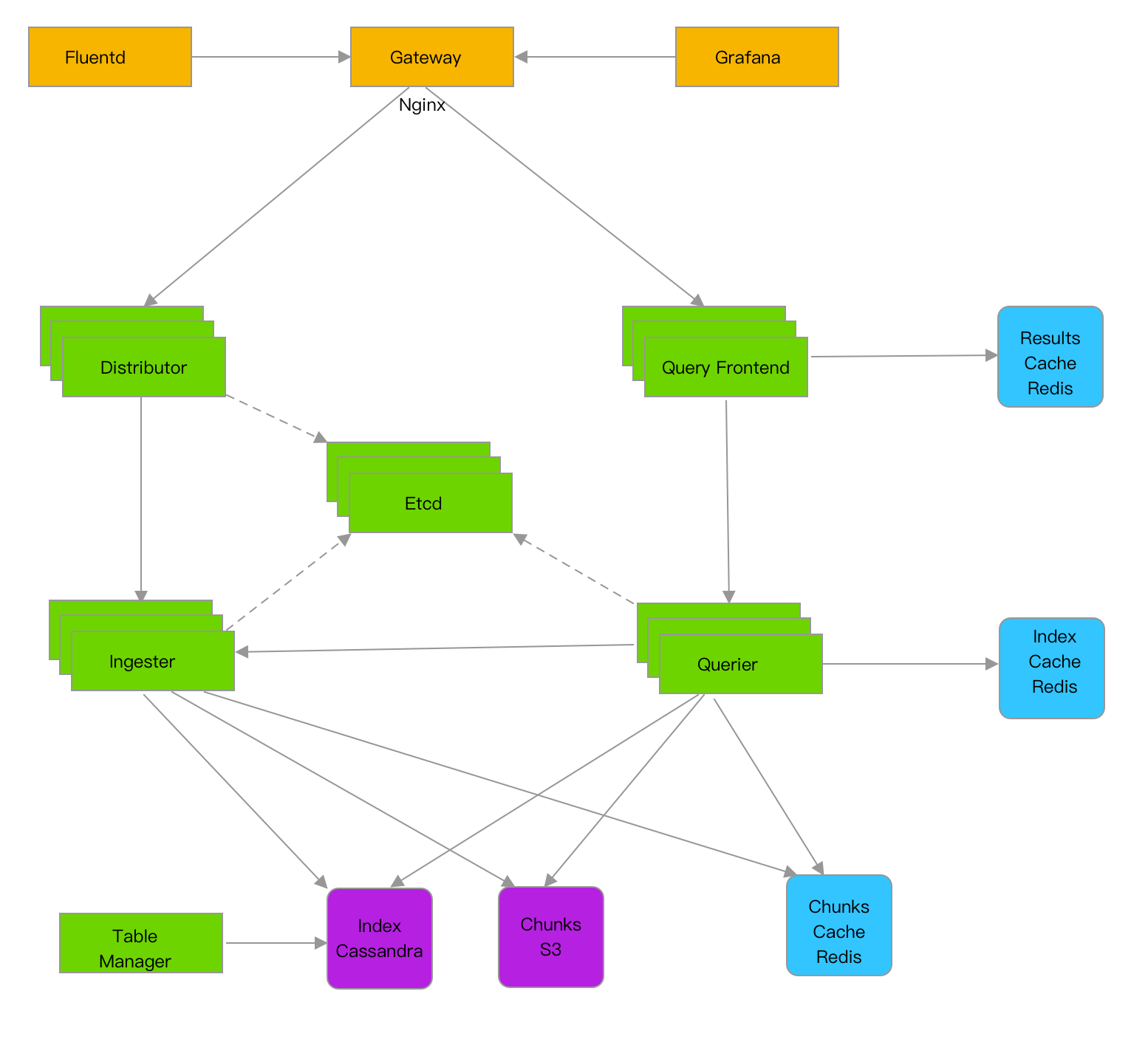

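Standard architecture

| Component | Replicas | Description |
|---|---|---|
| Cassandra | | Loki index store |
| S3 | | Loki S3 storage |
| Consul/Etcd | | Stores Loki component state and the hash ring |
| Redis | | Loki cache |
| Gateway | | Loki gateway |
| Distributor | | The entry point of the Loki write path. It validates the logs it receives, splits them into chunks, and forwards them to the Ingester for storage. |
| Ingester | | Writes the log data it receives to the backend store (DynamoDB, S3, Cassandra, and so on) and also returns log data to the Querier. |
| Querier | | Fetches logs from the Ingesters and the backend store, processes them with the LogQL query language, and returns the result to the client. |
| Query-Frontend | | Provides the query API. It can split one large query into several smaller ones, let the Queriers run them in parallel, and merge the results. It is an optional component, usually deployed to keep a large query from exhausting the memory of a single Querier. |
| Table-Manager | | Creates each periodic table before its time period begins and deletes it once its data falls outside the retention period. |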
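The hand-off from Distributor to Ingester relies on the hash ring kept in Consul/Etcd. The sketch below is a simplified model of that idea, not Loki's actual implementation: the class `HashRing`, the SHA-256 based token function, and the stream key format are all assumptions made for illustration. It registers a number of tokens per ingester and walks the ring clockwise to pick as many distinct ingesters as the replication factor asks for.

```python
import bisect
import hashlib


def _token(key: str) -> int:
    """Map a string to a 32-bit position on the ring (illustrative hash choice)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:4], "big")


class HashRing:
    """Toy consistent-hash ring; a hypothetical stand-in for Loki's ring."""

    def __init__(self, ingesters, num_tokens=512):
        # Every ingester owns num_tokens pseudo-random positions on the ring.
        self._ring = sorted(
            (_token(f"{name}-{i}"), name)
            for name in ingesters
            for i in range(num_tokens)
        )
        self._tokens = [token for token, _ in self._ring]
        self._size = len(set(ingesters))

    def replicas_for(self, stream_key: str, replication_factor: int = 2):
        """Walk clockwise from the stream's hash and collect distinct ingesters."""
        wanted = min(replication_factor, self._size)
        if wanted <= 0:
            return []
        start = bisect.bisect_right(self._tokens, _token(stream_key))
        owners = []
        for offset in range(len(self._ring)):
            _, name = self._ring[(start + offset) % len(self._ring)]
            if name not in owners:
                owners.append(name)
                if len(owners) == wanted:
                    break
        return owners


if __name__ == "__main__":
    ring = HashRing(["loki-ingester", "loki-ingester2", "loki-ingester3"])
    print(ring.replicas_for('{job="app"}', replication_factor=2))
```

The same stream key always maps to the same ingesters as long as ring membership does not change, which is why the ring state has to live in a shared store.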

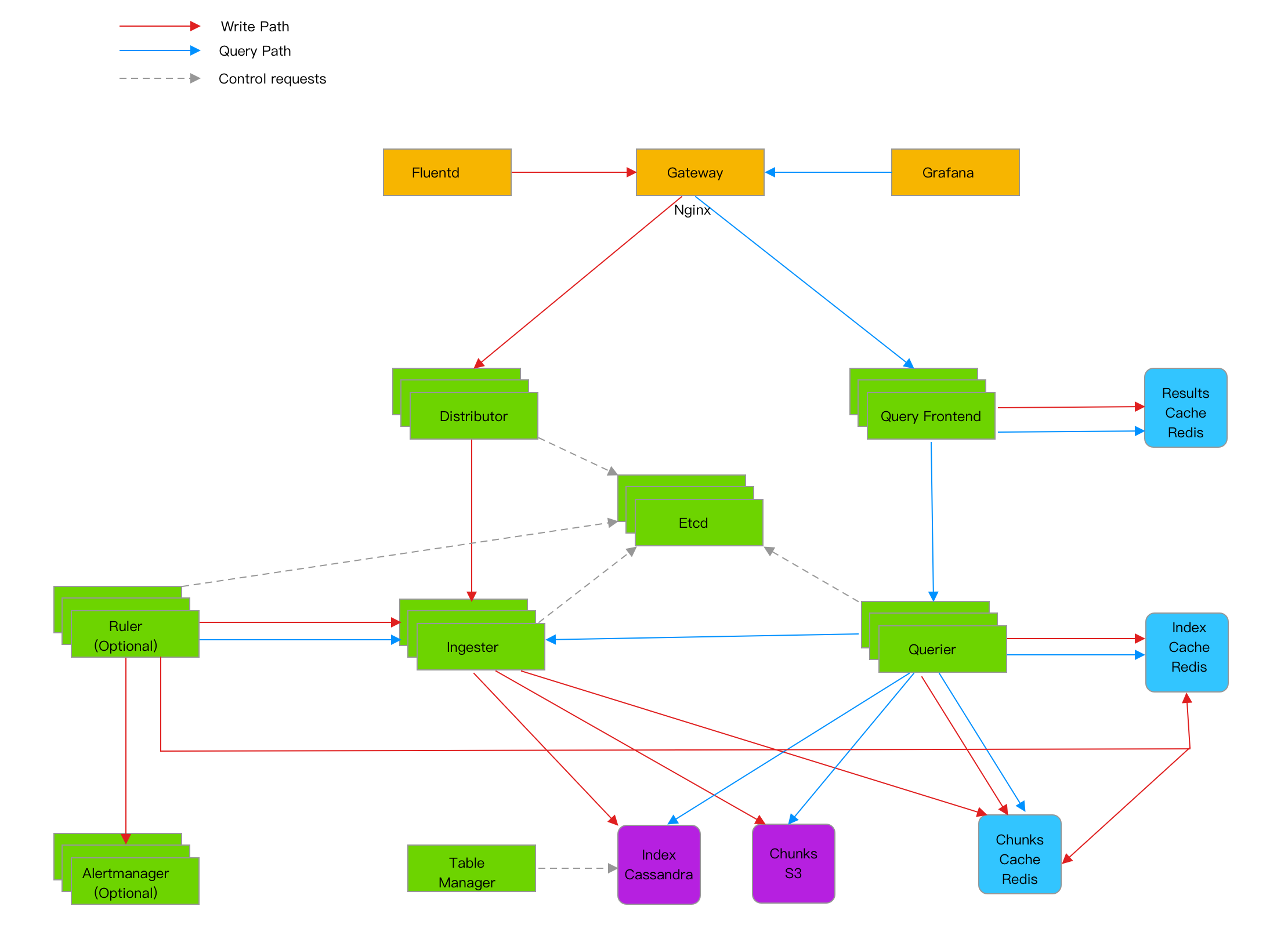

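Ruler architecture

| Component | Replicas | Description |
|---|---|---|
| Cassandra | | Loki index store |
| S3 | | Loki S3 storage |
| Consul/Etcd | | Stores Loki component state and the hash ring |
| Redis | | Loki cache |
| Gateway | | Loki gateway |
| Distributor | | The entry point of the Loki write path. It validates the logs it receives, splits them into chunks, and forwards them to the Ingester for storage. |
| Ingester | | Writes the log data it receives to the backend store (DynamoDB, S3, Cassandra, and so on) and also returns log data to the Querier. |
| Querier | | Fetches logs from the Ingesters and the backend store, processes them with the LogQL query language, and returns the result to the client. |
| Query-Frontend | | Provides the query API. It can split one large query into several smaller ones, let the Queriers run them in parallel, and merge the results. It is an optional component, usually deployed to keep a large query from exhausting the memory of a single Querier. |
| Table-Manager | | Creates each periodic table before its time period begins and deletes it once its data falls outside the retention period. |
| Ruler | | Continuously evaluates the configured rules and pushes events that exceed their threshold to Alertmanager or another webhook service. |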
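To make the Ruler's role concrete, here is a minimal sketch of the "compare against a threshold, then notify" step. It is not the Ruler's real code: the function `build_alerts`, the shape of `samples`, and the rule name are hypothetical. The only external fact it leans on is that Alertmanager's v2 API accepts a JSON list of alerts with `labels`, `annotations`, and `startsAt` fields on `/api/v2/alerts`.

```python
import json
from datetime import datetime, timezone


def build_alerts(rule_name, samples, threshold, now=None):
    """Return Alertmanager v2 alert objects for samples above the threshold.

    samples maps a label set (a tuple of key/value pairs) to a number and
    stands in for the result of a LogQL metric query (an assumption).
    """
    now = now or datetime.now(timezone.utc)
    alerts = []
    for labels, value in samples.items():
        if value > threshold:
            alerts.append({
                "labels": {"alertname": rule_name, **dict(labels)},
                "annotations": {"summary": f"{rule_name}: {value} > {threshold}"},
                "startsAt": now.isoformat(),
            })
    return alerts


if __name__ == "__main__":
    samples = {
        (("job", "app"),): 12.0,      # above the threshold, becomes an alert
        (("job", "journal"),): 1.0,   # below the threshold, ignored
    }
    # The resulting body would be POSTed to <alertmanager_url>/api/v2/alerts.
    print(json.dumps(build_alerts("HighErrorRate", samples, threshold=5), indent=2))
```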

Index store

- Single Store (boltdb-shipper): recommended for Loki 2.0 and newer; an index store that keeps boltdb index files in the object store
- Amazon DynamoDB
- Google Bigtable
- Apache Cassandra
- BoltDB (doesn’t work when clustering Loki)

Chunk store

- Amazon DynamoDB
- Google Bigtable
- Apache Cassandra
- Amazon S3
- Google Cloud Storage
- Filesystem (please read more about the filesystem to understand the pros/cons before using with production data)

Cloud Storage Permissions

- s3:ListBucket
- s3:PutObject
- s3:GetObject
- s3:DeleteObject (if running the Single Store (boltdb-shipper) compactor)
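The permission list above could be turned into an IAM policy document along the following lines. This is a sketch under assumptions: the helper name `loki_s3_policy` is made up, the bucket name `loki` is taken from the S3 URL used later in the configuration, and the split into one bucket-level and one object-level statement is a common convention rather than something the source prescribes.

```python
import json


def loki_s3_policy(bucket: str, with_compactor: bool = False) -> dict:
    """Build an IAM policy granting the S3 actions listed for Loki."""
    object_actions = ["s3:PutObject", "s3:GetObject"]
    if with_compactor:
        # Only needed when the boltdb-shipper compactor is running.
        object_actions.append("s3:DeleteObject")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object actions apply to the keys inside the bucket.
                "Effect": "Allow",
                "Action": object_actions,
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(loki_s3_policy("loki", with_compactor=True), indent=2))
```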

Environment

Loki components:

    [root@LF-MYSQL-136-130 ll]# /root/k8s.sh ht -n loki get pod -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP              NODE
    loki-distributor         1/1     Running   0          6h    11.45.198.244   11.5.80.36
    loki-distributor2        1/1     Running   0          11m   11.45.241.196   11.5.32.39
    loki-distributor3        1/1     Running   0          10m   11.45.199.231   11.5.80.130
    loki-etcd                1/1     Running   0          6h    11.45.241.194   11.5.32.39
    loki-frontend            1/1     Running   0          6h    11.45.198.245   11.5.80.130
    loki-ingester            1/1     Running   0          6h    11.45.198.247   11.5.80.134
    loki-ingester2           1/1     Running   0          9m    11.45.199.232   11.5.80.132
    loki-ingester3           1/1     Running   0          9m    11.45.241.197   11.5.32.42
    loki-minio               1/1     Running   0          6h    11.45.198.251   11.5.80.167
    loki-querier             1/1     Running   0          6h    11.45.198.249   11.5.80.167
    loki-querier-frontend    1/1     Running   0          6h    11.45.198.248   11.5.80.132
    loki-querier-frontend2   1/1     Running   0          9m    11.45.241.198   11.5.31.42
    loki-querier2            1/1     Running   0          8m    11.45.241.199   11.5.31.40
    loki-querier3            1/1     Running   0          8m    11.45.235.43    11.5.98.170
    loki-redis               1/1     Running   0          6h    11.45.198.243   11.5.80.130
    loki-ruler               1/1     Running   0          6h    11.45.198.246   11.5.80.132
    loki-table-manager       1/1     Running   0          6h    11.45.198.250   11.5.80.132

Nginx:

10.194.136.130

    [root@LF-MYSQL-136-130 ll]# ps -ef|grep nginx|grep -v grep
    root     46500     1  0 16:48 ?  00:00:00 nginx: master process /usr/local/nginx/sbin/nginx -c /export/.trash/haha/ll/nginx.conf
    nobody   46501 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46502 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46503 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46504 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46505 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46506 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46507 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46508 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46509 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46510 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46511 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46512 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46513 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46514 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46515 46500  0 16:48 ?  00:00:00 nginx: worker process
    nobody   46516 46500  0 16:48 ?  00:00:00 nginx: worker process

Starting the components

distributor

    loki -target=distributor -config.file=loki.yaml

ingester

    loki -target=ingester -config.file=loki.yaml -log.level=debug

query-frontend

    loki -target=query-frontend -config.file=loki.yaml

querier

    loki -target=querier -config.file=loki.yaml

table-manager

    loki -target=table-manager -config.file=loki.yaml

ruler

    loki -target=ruler -config.file=loki.yaml -log.level=debug
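Since every component is the same `loki` binary with a different `-target`, the command lines can be generated rather than typed by hand. The helper below is a hypothetical convenience, not part of Loki; it uses only the three flags that appear in the commands above (`-target`, `-config.file`, `-log.level`).

```python
import shlex

# Targets used in this deployment.
TARGETS = [
    "distributor",
    "ingester",
    "query-frontend",
    "querier",
    "table-manager",
    "ruler",
]


def loki_command(target, config_file="loki.yaml", log_level=None):
    """Return the argv list for starting one Loki component."""
    if target not in TARGETS:
        raise ValueError(f"unknown target: {target}")
    argv = ["loki", f"-target={target}", f"-config.file={config_file}"]
    if log_level:
        argv.append(f"-log.level={log_level}")
    return argv


if __name__ == "__main__":
    for name in TARGETS:
        print(shlex.join(loki_command(name)))
```

The argv list can be passed directly to `subprocess.Popen` if the components are started from a script.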

Configuration file (without Cassandra)

    auth_enabled: false
    chunk_store_config:
      chunk_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
      max_look_back_period: 0
      write_dedupe_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
    distributor:
      ring:
        kvstore:
          etcd:
            endpoints: ["11.45.241.194:2379"]
            dial_timeout: 60s
          store: etcd
    frontend:
      compress_responses: true
      max_outstanding_per_tenant: 200
      downstream_url: http://11.45.198.249:3100
    frontend_worker:
      frontend_address: 11.45.198.248:9095
      grpc_client_config:
        max_send_msg_size: 20971520
      parallelism: 10
    ingester:
      chunk_block_size: 262144
      chunk_idle_period: 30m
      chunk_target_size: 0
      max_chunk_age: 1h
      lifecycler:
        heartbeat_period: 5s
        interface_names:
        - eth0
        join_after: 30s
        num_tokens: 512
        ring:
          heartbeat_timeout: 1m
          kvstore:
            etcd:
              endpoints: ["11.45.241.194:2379"]
              dial_timeout: 60s
            store: etcd
          replication_factor: 2
      max_transfer_retries: 10
    ingester_client:
      grpc_client_config:
        max_recv_msg_size: 20971520
      remote_timeout: 1s
    limits_config:
      enforce_metric_name: false
      ingestion_burst_size_mb: 100
      ingestion_rate_mb: 10
      ingestion_rate_strategy: global
      max_global_streams_per_user: 10000
      max_entries_limit_per_query: 10000
      max_query_length: 12000h
      max_query_parallelism: 32
      max_streams_per_user: 0
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    query_range:
      align_queries_with_step: true
      cache_results: true
      max_retries: 5
      results_cache:
        cache:
          redis:
            endpoint: 11.45.198.243:6379
            expiration: 1h
        #max_freshness: 10m
      split_queries_by_interval: 6h
    schema_config:
      configs:
      - from: 2020-09-20
        store: boltdb-shipper
        object_store: aws
        schema: v11
        index:
          prefix: index
          period: 24h
        chunks:
          prefix: chunks
          period: 720h
    server:
      graceful_shutdown_timeout: 5s
      grpc_server_max_concurrent_streams: 0
      grpc_server_max_recv_msg_size: 100000000
      grpc_server_max_send_msg_size: 100000000
      http_listen_port: 3100
      http_server_idle_timeout: 120s
      http_server_write_timeout: 1m
    storage_config:
      boltdb_shipper:
        active_index_directory: /loki/boltdb-shipper-active
        cache_location: /loki/boltdb-shipper-cache
        cache_ttl: 24h
        shared_store: s3
      aws:
        s3: s3://key123456:password123456@11.45.198.251:9000/loki
        s3forcepathstyle: true
      index_queries_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
    table_manager:
      chunk_tables_provisioning:
        inactive_read_throughput: 0
        inactive_write_throughput: 0
        provisioned_read_throughput: 0
        provisioned_write_throughput: 0
      index_tables_provisioning:
        inactive_read_throughput: 0
        inactive_write_throughput: 0
        provisioned_read_throughput: 0
        provisioned_write_throughput: 0
      retention_deletes_enabled: false
      retention_period: 0
    ruler:
      storage:
        type: local
        local:
          directory: /data/rules/
      ring:
        kvstore:
          etcd:
            endpoints: ["11.45.241.194:2379"]
          store: etcd
      rule_path: /tmp/scratch
      enable_api: true
      enable_sharding: true
      enable_alertmanager_v2: true
      alertmanager_url: "http://127.0.0.1:9093"
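The `split_queries_by_interval: 6h` setting is what lets the Query-Frontend break one large range query into pieces that Queriers can run in parallel. The following is a simplified sketch of that splitting, assuming sub-ranges are cut at multiples of the interval since the Unix epoch; the function name and the use of plain second timestamps are illustrative choices, not Loki's internal representation.

```python
def split_by_interval(start_s: int, end_s: int, interval_s: int = 6 * 3600):
    """Split [start_s, end_s) into sub-ranges cut at multiples of interval_s."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    ranges = []
    cursor = start_s
    while cursor < end_s:
        # Next interval boundary strictly after the cursor.
        boundary = (cursor // interval_s + 1) * interval_s
        ranges.append((cursor, min(boundary, end_s)))
        cursor = boundary
    return ranges


if __name__ == "__main__":
    # A query from 01:00 to 15:00 (seconds since midnight) with a 6h interval.
    for sub_start, sub_end in split_by_interval(1 * 3600, 15 * 3600):
        print(sub_start // 3600, "->", sub_end // 3600)
```

With the settings above, a 24-hour query would yield four or five sub-queries depending on alignment, well under the `max_query_parallelism: 32` limit.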

Configuration file (with Cassandra)

    auth_enabled: false
    chunk_store_config:
      chunk_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
      max_look_back_period: 0
      write_dedupe_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
    distributor:
      ring:
        kvstore:
          etcd:
            endpoints: ["11.45.241.194:2379"]
            dial_timeout: 60s
          store: etcd
    frontend:
      compress_responses: true
      max_outstanding_per_tenant: 200
      downstream_url: http://11.45.198.249:3100
    frontend_worker:
      frontend_address: 11.45.198.248:9095
      grpc_client_config:
        max_send_msg_size: 20971520
      parallelism: 10
    ingester:
      chunk_block_size: 262144
      chunk_idle_period: 30m
      chunk_target_size: 0
      max_chunk_age: 1h
      lifecycler:
        heartbeat_period: 5s
        interface_names:
        - eth0
        join_after: 30s
        num_tokens: 512
        ring:
          heartbeat_timeout: 1m
          kvstore:
            etcd:
              endpoints: ["11.45.241.194:2379"]
              dial_timeout: 60s
            store: etcd
          replication_factor: 2
      max_transfer_retries: 10
    ingester_client:
      grpc_client_config:
        max_recv_msg_size: 20971520
      remote_timeout: 1s
    limits_config:
      enforce_metric_name: false
      ingestion_burst_size_mb: 100
      ingestion_rate_mb: 10
      ingestion_rate_strategy: global
      max_global_streams_per_user: 10000
      max_entries_limit_per_query: 10000
      max_query_length: 12000h
      max_query_parallelism: 32
      max_streams_per_user: 0
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    query_range:
      align_queries_with_step: true
      cache_results: true
      max_retries: 5
      results_cache:
        cache:
          redis:
            endpoint: 11.45.198.243:6379
            expiration: 1h
        #max_freshness: 10m
      split_queries_by_interval: 6h
    schema_config:
      configs:
      - from: 2020-09-20
        store: cassandra
        object_store: aws
        schema: v11
        index:
          prefix: index
          period: 720h
        chunks:
          prefix: chunks
          period: 720h
    server:
      graceful_shutdown_timeout: 5s
      grpc_server_max_concurrent_streams: 0
      grpc_server_max_recv_msg_size: 100000000
      grpc_server_max_send_msg_size: 100000000
      http_listen_port: 3100
      http_server_idle_timeout: 120s
      http_server_write_timeout: 1m
    storage_config:
      cassandra:
        username: cassandra
        password: cassandra
        addresses: 10.194.136.130
        auth: true
        keyspace: lokiindex
        consistency: LOCAL_ONE
      aws:
        s3: s3://key123456:password123456@11.45.198.251:9000/loki
        s3forcepathstyle: true
      index_queries_cache_config:
        redis:
          endpoint: 11.45.198.243:6379
          expiration: 1h
    table_manager:
      chunk_tables_provisioning:
        inactive_read_throughput: 0
        inactive_write_throughput: 0
        provisioned_read_throughput: 0
        provisioned_write_throughput: 0
      index_tables_provisioning:
        inactive_read_throughput: 0
        inactive_write_throughput: 0
        provisioned_read_throughput: 0
        provisioned_write_throughput: 0
      retention_deletes_enabled: false
      retention_period: 0
    ruler:
      storage:
        type: local
        local:
          directory: /data/rules/
      ring:
        kvstore:
          etcd:
            endpoints: ["11.45.241.194:2379"]
          store: etcd
      rule_path: /tmp/scratch
      enable_api: true
      enable_sharding: true
      enable_alertmanager_v2: true
      alertmanager_url: "http://127.0.0.1:9093"
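The `index.prefix` and `index.period` values in `schema_config` determine which periodic tables the Table Manager creates. The sketch below assumes the naming scheme is the prefix followed by the period number (Unix seconds divided by the period length in seconds); that scheme is stated here as an assumption and should be checked against the tables that actually appear in the `lokiindex` keyspace. The helper names are hypothetical.

```python
from datetime import datetime, timezone


def period_seconds(period: str) -> int:
    """Convert an hour-based duration such as '720h' to seconds."""
    if not period.endswith("h"):
        raise ValueError("only hour-based periods are handled in this sketch")
    return int(period[:-1]) * 3600


def table_name(prefix: str, period: str, when: datetime) -> str:
    """Name of the periodic table that covers the given moment (assumed scheme)."""
    return f"{prefix}{int(when.timestamp()) // period_seconds(period)}"


if __name__ == "__main__":
    start = datetime(2020, 9, 20, tzinfo=timezone.utc)  # the schema's "from" date
    print(table_name("index", "720h", start))
    print(table_name("chunks", "720h", start))
```

Under this assumption, a 720h period produces one new table roughly every 30 days, while the 24h period used with boltdb-shipper produces one per day.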

nginx

    worker_processes 16;
    error_log /dev/stderr;
    pid /tmp/nginx.pid;
    worker_rlimit_nofile 8192;

    events {
      worker_connections 4096;
    }

    http {
      client_max_body_size 1024M;
      default_type application/octet-stream;
      resolver 127.0.0.11;
      access_log /dev/stdout;
      sendfile on;
      tcp_nopush on;

      server {
        listen 3100 default_server;

        location = / {
          proxy_pass http://11.45.198.249:3100/ready;
        }

        location = /api/prom/push {
          proxy_pass http://11.45.198.244:3100$request_uri;
        }

        location = /api/prom/tail {
          proxy_pass http://11.45.198.249:3100$request_uri;
          proxy_set_header Upgrade $http_upgrade;
          proxy_set_header Connection "upgrade";
        }

        location ~ /api/prom/rules.* {
          proxy_pass http://11.45.198.246:3100$request_uri;
        }

        location ~ /api/prom/.* {
          proxy_pass http://11.45.198.248:3100$request_uri;
        }

        location = /loki/api/v1/push {
          proxy_pass http://11.45.198.244:3100$request_uri;
        }

        location = /loki/api/v1/tail {
          proxy_pass http://11.45.198.249:3100$request_uri;
          proxy_set_header Upgrade $http_upgrade;
          proxy_set_header Connection "upgrade";
        }

        location ~ /loki/api/v1/rules.* {
          proxy_pass http://11.45.198.246:3100$request_uri;
        }

        location ~ /loki/api/.* {
          proxy_pass http://11.45.198.248:3100$request_uri;
        }
      }
    }
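The routing that this nginx configuration performs can be summarised as a small lookup: exact (`=`) locations win first, then regex (`~`) locations are tried in the order they appear. The sketch below mirrors that precedence in Python; the component names are derived from the pod IPs in the environment listing, the regular expressions are assumed to end in `.*`, and the `route` function itself is purely illustrative.

```python
import re

# Exact-match locations (nginx "location = ...").
EXACT = {
    "/": "querier",                       # 11.45.198.249, proxied to /ready
    "/api/prom/push": "distributor",      # 11.45.198.244
    "/api/prom/tail": "querier",          # 11.45.198.249
    "/loki/api/v1/push": "distributor",
    "/loki/api/v1/tail": "querier",
}

# Regex locations (nginx "location ~ ..."), tried in file order.
REGEX = [
    (r"/api/prom/rules.*", "ruler"),              # 11.45.198.246
    (r"/api/prom/.*", "query-frontend"),          # 11.45.198.248
    (r"/loki/api/v1/rules.*", "ruler"),
    (r"/loki/api/.*", "query-frontend"),
]


def route(path: str):
    """Return the component that would receive the request, or None."""
    if path in EXACT:
        return EXACT[path]
    for pattern, component in REGEX:
        # nginx regex locations are unanchored, so search rather than match.
        if re.search(pattern, path):
            return component
    return None


if __name__ == "__main__":
    for p in ["/loki/api/v1/push", "/loki/api/v1/query_range", "/loki/api/v1/rules/ns"]:
        print(p, "->", route(p))
```

Writes therefore go to a single Distributor and reads to a single Query-Frontend; the additional replicas in the pod listing are not reachable through this gateway as written.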

promtail configuration

    [root@niffler-server-dpl-2912797334-zgvd4 promtail]# more promtail.yaml
    server:
      http_listen_port: 9080
      grpc_listen_port: 0
    positions:
      filename: /tmp/positions.yaml
    clients:
      - url: http://10.194.136.130:3100/loki/api/v1/push
    scrape_configs:
    - job_name: app
      static_configs:
      - targets:
          - localhost
        labels:
          job: niffler-server-11.45.244.236
          __path__: /export/Niffler/niffler_server.log.*
    - job_name: journal
      journal:
        max_age: 12h
        labels:
          job: journal-server-11.45.244.236
      relabel_configs:
        - source_labels: ['__journal__systemd_unit']
          target_label: 'unit'

pod

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        app: loki
        cluster: loki
        component: loki
      name: loki-alertmanager
      namespace: loki
    spec:
      containers:
      - env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: is.ht1.n.jd.local/vitesss/crdb_base_etcd:v20201204182950
        imagePullPolicy: IfNotPresent
        name: loki
        resources:
          limits:
            cpu: "1"
            memory: 1Gi
          requests:
            cpu: "1"
            memory: 1Gi
        securityContext:
          privileged: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /export
          name: export
      dnsPolicy: ClusterFirst
      nodeSelector:
        jdos.jd.com/disk.type: SSD
        jdos.jd.com/status: online
        jdos.jd.com/zone: CB-SQL
      restartPolicy: Always
      volumes:
      - flexVolume:
          driver: kubernetes.io/lvm
          fsType: ext4
          options:
            size: 5Gi
            volumeID: "1335778729351188491"
            volumegroup: docker
        name: export

Possible issues

https://www.jianshu.com/p/6b24340c2cf1