Lecturer: 曾充

Date: 2022.7.5

Traditional Approach vs Machine Learning

Traditional

- Hand-crafted rules / features

- Explainable

- Less validated against data

- Insufficient capacity for complex patterns

Machine Learning is well suited to:

- Problems that need long lists of rules

- Continually changing environments

- Discovering insights within large collections of data

Why ML in an HPC Course?

ML (especially deep learning, DL) is a special class of applications that HPC systems must run efficiently

ML can be used to guide system optimization

Basics of machine learning

Machine Learning Problems

Categories of learning

- Supervised Learning

- Unsupervised Learning

- Transfer Learning

- Reinforcement Learning

Problem domains

- Classification

- Regression

- Clustering


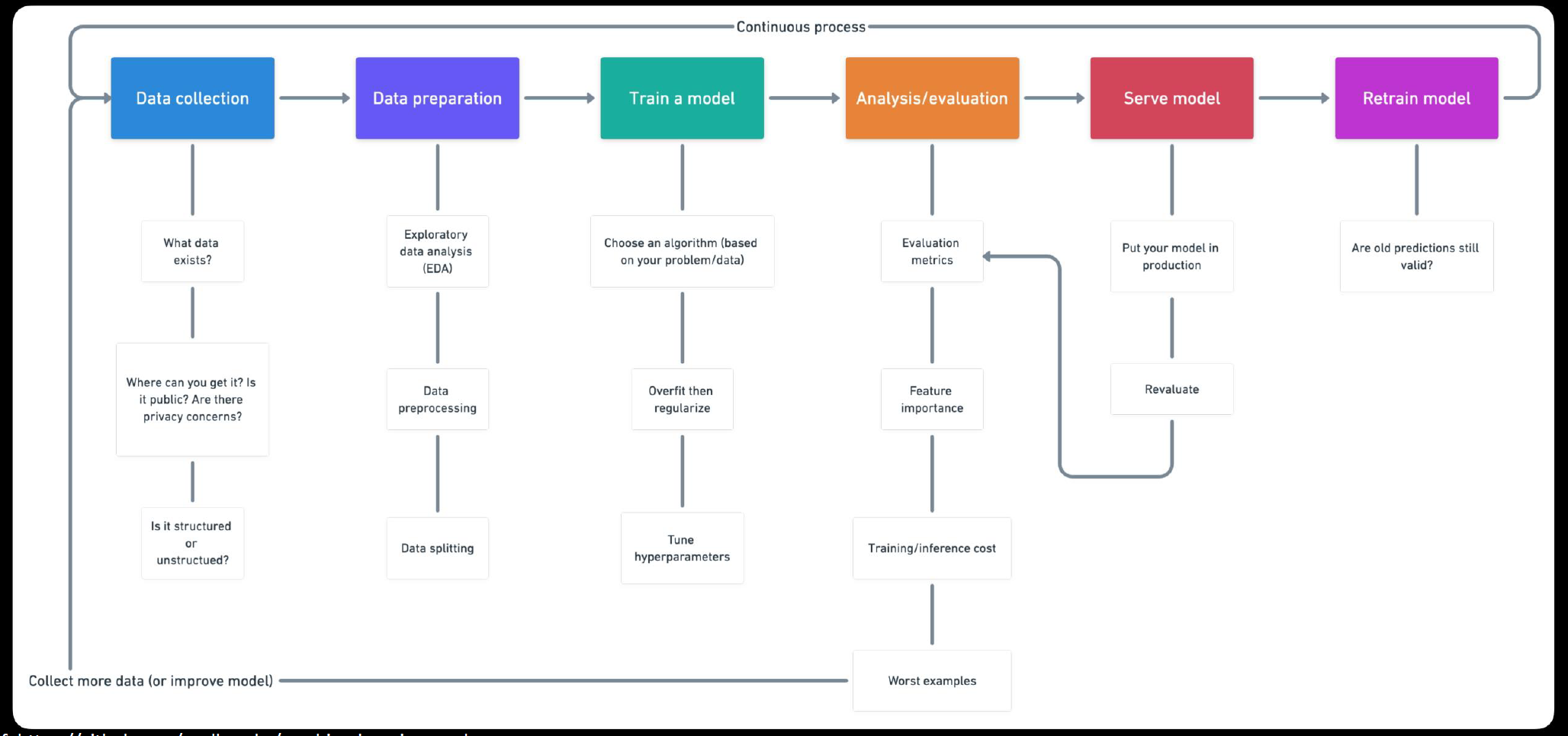

Machine Learning Process

Formulation of machine learning

y = f(x)

- What to use for f?

- How to solve for the parameters of f?

- How to get x from real-world data?

- How to judge the quality of f?

Simplest f: Linear Regression
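A minimal sketch of linear regression as f(x) = w·x + b. The slides do not name a loss here; the mean squared error used below is an assumption (it is the usual choice for regression), and the toy data is invented for illustration.

```python
def predict(w, b, x):
    """Return f(x) = sum_i w[i] * x[i] + b for a single sample x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def mse_loss(w, b, xs, ys):
    """Mean squared error of the predictions over a dataset (assumed loss)."""
    errors = [(predict(w, b, x) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


# Hypothetical toy data generated by y = 2*x1 - x2 + 1.
xs = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
ys = [3.0, 0.0, 2.0]

print(predict([2.0, -1.0], 1.0, [2.0, 3.0]))  # 2.0
print(mse_loss([2.0, -1.0], 1.0, xs, ys))     # 0.0 for the true parameters
```

Judging the quality of f then reduces to comparing this loss across candidate parameters.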

How to solve for the parameters of f: Gradient Descent
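A sketch of (full-batch) gradient descent for one-dimensional linear regression y ≈ w·x + b, assuming the mean squared error loss L = mean((w·x + b − y)²). Each step moves the parameters against the gradient, scaled by the learning rate `lr`; the step count and learning rate are illustrative choices.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # dL/dw = mean(2*(w*x + b - y)*x),  dL/db = mean(2*(w*x + b - y))
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # One step of length lr in the direction of steepest descent.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated by y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```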

Learning rate: the size of one step

- A hyper-parameter (chosen before training, not learned)

- Learning Rate Scheduler: changes the learning rate during training, e.g. Linear Decay, Linear Warmup
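The two schedules named above can be sketched as functions of the step index. The exact formulas below are the common textbook forms and are an assumption; frameworks differ in details such as whether warmup starts at zero.

```python
def linear_decay(step, total_steps, base_lr, end_lr=0.0):
    """Linearly move the learning rate from base_lr to end_lr over total_steps."""
    frac = min(step, total_steps) / total_steps
    return base_lr + (end_lr - base_lr) * frac


def linear_warmup(step, warmup_steps, base_lr):
    """Linearly grow the learning rate from 0 to base_lr, then hold it."""
    return base_lr * min(step, warmup_steps) / warmup_steps


print([linear_warmup(s, 4, 1.0) for s in range(6)])  # [0.0, 0.25, 0.5, 0.75, 1.0, 1.0]
print([linear_decay(s, 4, 1.0) for s in range(6)])   # [1.0, 0.75, 0.5, 0.25, 0.0, 0.0]
```

In practice the two are often chained: warm up for the first few steps, then decay for the rest of training.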

Minibatch Stochastic Gradient Descent
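A sketch of minibatch SGD on the same one-dimensional linear regression (MSE loss assumed). Instead of averaging the gradient over the whole dataset, each update uses a small random batch, and the data is reshuffled every epoch; `batch_size`, `epochs`, and the seed are illustrative.

```python
import random


def minibatch_sgd(xs, ys, lr=0.05, batch_size=2, epochs=500, seed=0):
    """Fit y ≈ w*x + b with minibatch stochastic gradient descent."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new random sample order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            m = len(batch)
            # Gradient estimated from this minibatch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / m
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / m
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


w, b = minibatch_sgd([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

Each update is cheaper and noisier than a full-batch step; with large datasets that trade-off is what makes training feasible.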

- Input layer

- Hidden layer(s)

Computation Graph: Forward Propagation
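A sketch of forward propagation through a tiny computation graph (one neuron: z = w·x + b, a = sigmoid(z), loss = (a − y)²), which keeps the intermediate values so that back propagation can then apply the chain rule node by node. The graph and squared-error loss are assumptions chosen for illustration.

```python
import math


def forward(w, b, x, y):
    """Evaluate the graph node by node, caching intermediates for the backward pass."""
    z = w * x + b                   # linear node
    a = 1.0 / (1.0 + math.exp(-z))  # sigmoid node
    loss = (a - y) ** 2             # squared-error node
    return loss, (x, y, a)


def backward(cache):
    """Chain rule from the loss back to the parameters w and b."""
    x, y, a = cache
    dloss_da = 2 * (a - y)
    da_dz = a * (1 - a)             # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz
    dloss_dw = dloss_dz * x         # dz/dw = x
    dloss_db = dloss_dz             # dz/db = 1
    return dloss_dw, dloss_db


loss, cache = forward(w=0.5, b=-0.2, x=1.5, y=1.0)
grad_w, grad_b = backward(cache)
```

The backward pass reuses `a` from the forward pass instead of recomputing it, which is why frameworks store activations during the forward pass.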

Back propagation does not replace gradient descent: it efficiently computes the gradients that gradient descent then uses

Back Propagation: Chain Rule

Other Components in DL

- Layer

- Activation Function: Sigmoid, tanh, Rectified Linear Unit (ReLU), Softmax

- Normalization

- Regularization: Dropout
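The activation functions listed above can be sketched on plain floats. The sigmoid is written in a numerically stable two-branch form and softmax subtracts the maximum before exponentiating; both are standard tricks that do not change the mathematical result.

```python
import math


def sigmoid(z):
    """1 / (1 + e^-z), computed without overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    return math.tanh(z)


def relu(z):
    """Rectified Linear Unit: max(0, z)."""
    return max(0.0, z)


def softmax(zs):
    """Turn a vector of scores into probabilities that sum to 1."""
    m = max(zs)  # shifting by the max avoids overflow in exp
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0), tanh(0.0), relu(-3.0), relu(2.0))  # 0.5 0.0 0.0 2.0
```

Sigmoid, tanh, and ReLU act element-wise on a layer's outputs, while softmax acts on a whole vector, typically at the output layer for classification.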

Batch Normalization
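A sketch of batch normalization in training mode: each feature is normalized with the mean and (biased) variance computed across the minibatch, then scaled by a learnable `gamma` and shifted by `beta`. The running averages used at inference time are omitted here, which is a simplification.

```python
import math


def batch_norm(x, gamma, beta, eps=1e-5):
    """x: list of samples (rows) of d features; gamma, beta: per-feature lists."""
    n, d = len(x), len(x[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in x]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n  # statistics over the batch
        for i in range(n):
            x_hat = (x[i][j] - mean) / math.sqrt(var + eps)
            out[i][j] = gamma[j] * x_hat + beta[j]
    return out


y = batch_norm([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]], [1.0, 1.0], [0.0, 0.0])
```

After normalization both columns have roughly zero mean and unit variance even though their raw scales differ by a factor of 10.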

Normalization Methods

Dropout
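A sketch of dropout, using the "inverted dropout" variant (an assumption: the slides do not say which variant). During training each activation is zeroed with probability `p` and the survivors are scaled by 1/(1 − p), so the expected activation is unchanged and nothing needs rescaling at inference.

```python
import random


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout: drop each value with probability p, rescale the rest."""
    assert 0.0 <= p < 1.0, "p must be in [0, 1)"
    if not training or p == 0.0:
        return list(activations)  # identity at inference time
    rng = rng if rng is not None else random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


out = dropout([1.0] * 10000, p=0.5, rng=random.Random(0))
```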

Optimizers

- SGD

- SGD + Momentum

- AdaGrad
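The update rules of the three optimizers above, sketched on lists of parameters. The momentum form v ← μv + g, θ ← θ − ηv follows the convention PyTorch's SGD uses; other texts write v ← μv − ηg instead. AdaGrad divides each step by the root of that parameter's accumulated squared gradients. The test objective f(x) = x² and all hyper-parameters are illustrative assumptions.

```python
import math


def sgd_step(params, grads, lr):
    """Plain SGD: theta <- theta - lr * g."""
    return [p - lr * g for p, g in zip(params, grads)]


def momentum_step(params, grads, velocity, lr, mu=0.9):
    """SGD + Momentum: velocity is a decaying sum of past gradients."""
    velocity = [mu * v + g for v, g in zip(velocity, grads)]
    params = [p - lr * v for p, v in zip(params, velocity)]
    return params, velocity


def adagrad_step(params, grads, accum, lr, eps=1e-8):
    """AdaGrad: per-parameter step shrinks as squared gradients accumulate."""
    accum = [s + g * g for s, g in zip(accum, grads)]
    params = [p - lr * g / (math.sqrt(s) + eps) for p, g, s in zip(params, grads, accum)]
    return params, accum


def grad(x):
    """Gradient of the toy objective f(x) = x^2."""
    return [2.0 * x[0]]


x_sgd = [5.0]
for _ in range(100):
    x_sgd = sgd_step(x_sgd, grad(x_sgd), lr=0.1)

x_mom, vel = [5.0], [0.0]
for _ in range(300):
    x_mom, vel = momentum_step(x_mom, grad(x_mom), vel, lr=0.1, mu=0.9)

x_ada, acc = [5.0], [0.0]
for _ in range(500):
    x_ada, acc = adagrad_step(x_ada, grad(x_ada), acc, lr=1.0)
```

All three drive x toward the minimum at 0; they differ in how the step size and direction adapt over time.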

CNN

Convolution
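A sketch of a single-channel 2-D convolution with "valid" padding and a configurable stride. Like most deep-learning frameworks, it is implemented as cross-correlation (the kernel is not flipped); multiple channels, padding, and bias are left out for brevity.

```python
def conv2d(image, kernel, stride=1):
    """Slide kernel over image and sum element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    oh = (len(image) - kh) // stride + 1     # output height
    ow = (len(image[0]) - kw) // stride + 1  # output width
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i * stride + u][j * stride + v] * kernel[u][v]
            out[i][j] = acc
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d(image, kernel))  # [[-4.0, -4.0], [-4.0, -4.0]]
```

The same small kernel weights are reused at every position, which is why a convolutional layer has far fewer parameters than a fully connected layer over the same input.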