Single-node ZooKeeper + Hadoop + HBase

1. Java environment

## Install the JDK
yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel
## Installation directory: /usr/lib/jvm
## Configure the Java environment variables in /etc/profile
export JAVA_HOME=/usr/lib/jvm/jre
export JRE_HOME=/usr/lib/jvm/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export PATH=${JAVA_HOME}/bin:$PATH
## Apply the changes
source /etc/profile
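On CentOS/RHEL, `/usr/lib/jvm/jre` is usually a symlink managed by the `alternatives` system. Setting `JAVA_HOME=/usr/lib/jvm/jre` therefore follows whichever JRE is currently selected. If you would rather pin `JAVA_HOME` to the real install directory, the following minimal sketch resolves the `java` binary and strips the trailing `/bin/java`. It assumes GNU `readlink -f`, and the function name `java_home_from_bin` is hypothetical.

```shell
#!/usr/bin/env bash
# java_home_from_bin [JAVA_BIN]
# Hypothetical helper: prints the directory that contains bin/java after
# resolving every symlink (e.g. /usr/bin/java -> /etc/alternatives/java -> ...).
# Defaults to the first `java` found on PATH.
java_home_from_bin() {
  local bin=${1:-$(command -v java)}
  if [[ -z $bin ]]; then
    echo "java not found" >&2
    return 1
  fi
  local real
  real=$(readlink -f "$bin") || return 1
  # Strip the trailing /bin/java to get the home directory
  echo "${real%/bin/java}"
}

# Example (on the target host):
#   export JAVA_HOME=$(java_home_from_bin)
```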

2. Install ZooKeeper

Download the binary package (the one used below): http://archive.apache.org/dist/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz

Source package (not needed for this setup): http://archive.apache.org/dist/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9.tar.gz
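Apache publishes a `.sha512` file next to each release tarball. The following minimal sketch checks a download against that published hash before you extract it. The helper name `verify_sha512` is hypothetical, and the sketch assumes GNU coreutils `sha512sum`. You paste the hex digest from the `.sha512` file yourself, because the exact layout of that file differs between Apache projects.

```shell
#!/usr/bin/env bash
# verify_sha512 FILE EXPECTED_HASH
# Hypothetical helper: compares the SHA-512 of FILE with EXPECTED_HASH.
# Whitespace inside EXPECTED_HASH is ignored and case does not matter, so a
# digest copied in upper-case groups (as some .sha512 files print it) still works.
verify_sha512() {
  local file=$1 expected actual
  expected=$(printf '%s' "$2" | tr -d '[:space:]' | tr 'A-F' 'a-f')
  actual=$(sha512sum "$file" | awk '{print $1}')
  if [[ $actual == "$expected" ]]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}

# Example:
#   verify_sha512 apache-zookeeper-3.5.9-bin.tar.gz '<digest from the .sha512 file>'
```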

- Extract (every package in this guide is extracted under /var/local/hbase)

tar -xzvf apache-zookeeper-3.5.9-bin.tar.gz

- Configure environment variables

vi /etc/profile
## Append:
export ZOOKEEPER_HOME=/var/local/hbase/apache-zookeeper-3.5.9-bin
export PATH=$ZOOKEEPER_HOME/bin:$PATH
## Apply the changes
source /etc/profile

- Copy the sample configuration file

cp /var/local/hbase/apache-zookeeper-3.5.9-bin/conf/zoo_sample.cfg /var/local/hbase/apache-zookeeper-3.5.9-bin/conf/zoo.cfg

- Create directories

mkdir /var/local/hbase/apache-zookeeper-3.5.9-bin/run
mkdir /var/local/hbase/apache-zookeeper-3.5.9-bin/run/data
mkdir /var/local/hbase/apache-zookeeper-3.5.9-bin/run/log

- Edit the configuration file

vi /var/local/hbase/apache-zookeeper-3.5.9-bin/conf/zoo.cfg
## Change these two settings (add them if they are missing):
dataDir=/var/local/hbase/apache-zookeeper-3.5.9-bin/run/data
dataLogDir=/var/local/hbase/apache-zookeeper-3.5.9-bin/run/log
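You can apply the two settings without opening `vi`. The following minimal sketch assumes GNU `sed -i` and keys without regex metacharacters, and the helper name `set_prop` is hypothetical. It rewrites an existing uncommented `key=value` line or appends one if the key is absent.

```shell
#!/usr/bin/env bash
# set_prop FILE KEY VALUE
# Hypothetical helper: if FILE already has an uncommented "KEY=..." line it is
# replaced; otherwise "KEY=VALUE" is appended. KEY must not contain regex
# metacharacters; VALUE may contain '/' (the sed delimiter is '|') but must not
# contain '|' or '&'.
set_prop() {
  local file=$1 key=$2 value=$3
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example for this guide:
#   cfg=/var/local/hbase/apache-zookeeper-3.5.9-bin/conf/zoo.cfg
#   set_prop "$cfg" dataDir    /var/local/hbase/apache-zookeeper-3.5.9-bin/run/data
#   set_prop "$cfg" dataLogDir /var/local/hbase/apache-zookeeper-3.5.9-bin/run/log
```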

- Start ZooKeeper

/var/local/hbase/apache-zookeeper-3.5.9-bin/bin/zkServer.sh start
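`zkServer.sh start` can return before ZooKeeper is accepting connections. When you script the startup, the following minimal sketch polls the client port (2181 in `zoo_sample.cfg`) using bash's `/dev/tcp` redirection. The helper name `wait_for_port` is hypothetical, and the sketch needs bash rather than plain `sh`.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Hypothetical helper: returns 0 as soon as a TCP connection to HOST:PORT
# succeeds, or 1 after roughly TIMEOUT_SECONDS (default 30) of one-second retries.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30} waited=0
  while (( waited < timeout )); do
    # bash-only: opening /dev/tcp/HOST/PORT performs a TCP connect
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Example:
#   wait_for_port hadoop1 2181 30 && echo "ZooKeeper is accepting connections"
```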

- Using ZooKeeper

## Connect to ZooKeeper
/var/local/hbase/apache-zookeeper-3.5.9-bin/bin/zkCli.sh
## In the client, use ls with a path to list what the current ZooKeeper contains
ls /

3. Install Hadoop

Download: https://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz

- Extract

tar -xzvf hadoop-3.2.2.tar.gz

- Configure environment variables

vi /etc/profile
## Append:
export HADOOP_HOME=/var/local/hbase/hadoop-3.2.2
export PATH=${HADOOP_HOME}/bin:$PATH
## Apply the changes
source /etc/profile

- Edit the Hadoop configuration file hadoop-env.sh

vim /var/local/hbase/hadoop-3.2.2/etc/hadoop/hadoop-env.sh
## Set JAVA_HOME
export JAVA_HOME=/usr/lib/jvm/jre

- Create directories:

mkdir /var/local/hbase/hadoop-3.2.2/run
mkdir /var/local/hbase/hadoop-3.2.2/run/hadoop

- Edit the hosts file

vi /etc/hosts
## Add (replace the IP with this machine's actual address)
192.168.31.131 hadoop1
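If you re-run the setup, `/etc/hosts` can pick up duplicate lines. The following minimal sketch adds the mapping only when no non-comment line already lists the hostname. The helper name `add_host_entry` is hypothetical. Afterwards, `getent hosts hadoop1` is one way to confirm that the name resolves.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP HOSTNAME
# Hypothetical helper: appends "IP HOSTNAME" to FILE (normally /etc/hosts)
# unless a non-comment line already lists HOSTNAME, so running it twice is harmless.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 when HOSTNAME appears as a whole field (after the IP) on any non-comment line
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (as root, with this machine's real IP):
#   add_host_entry /etc/hosts 192.168.31.131 hadoop1
```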

- Edit core-site.xml

vi /var/local/hbase/hadoop-3.2.2/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop1:8020</value>
  </property>
  <property>
    <!-- Directory where Hadoop stores temporary files; by default the HDFS NameNode and DataNode data also live under it -->
    <name>hadoop.tmp.dir</name>
    <value>/var/local/hbase/hadoop-3.2.2/run/hadoop</value>
  </property>
  <property>
    <name>hadoop.native.lib</name>
    <value>false</value>
    <description></description>
  </property>
</configuration>

- Edit hdfs-site.xml

vi /var/local/hbase/hadoop-3.2.2/etc/hadoop/hdfs-site.xml

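<configuration>
  <property>
    <!-- Single node: keep one replica of each block -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <!-- Deprecated name of dfs.namenode.secondary.http-address: the SecondaryNameNode web UI address -->
    <name>dfs.secondary.http.address</name>
    <value>hadoop1:50070</value>
  </property>
</configuration>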

- Edit mapred-site.xml

vi /var/local/hbase/hadoop-3.2.2/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

- Edit yarn-site.xml

vi /var/local/hbase/hadoop-3.2.2/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <!-- Auxiliary service run on the NodeManager. It must be set to mapreduce_shuffle before MapReduce programs can run on YARN. -->
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
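Hand-editing these XML files is error-prone, especially when the tags end up on one line as above. The following minimal sketch generates a Hadoop-style `*-site.xml` from name/value pairs. The helper name `write_site_xml` is hypothetical. It overwrites the whole file, including the license header comment, so back up the original first. Values are written verbatim, so they must not contain XML special characters.

```shell
#!/usr/bin/env bash
# write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Hypothetical helper: (over)writes FILE with one <property> per NAME/VALUE pair.
# Values are not XML-escaped, so they must not contain '<' or '&'.
write_site_xml() {
  local file=$1
  shift
  if (( $# == 0 || $# % 2 != 0 )); then
    echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while (( $# > 0 )); do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Example reproducing the hdfs-site.xml above:
#   conf=/var/local/hbase/hadoop-3.2.2/etc/hadoop
#   write_site_xml "$conf/hdfs-site.xml" dfs.replication 1 dfs.secondary.http.address hadoop1:50070
```

After writing, `hdfs getconf -confKey dfs.replication` shows the value Hadoop actually reads.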

- Set up passwordless SSH (the Hadoop start/stop scripts log in to the host over SSH)

## Run as root (the user that starts Hadoop here); the keys go to ~/.ssh regardless of the current directory
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys

- Format HDFS

/var/local/hbase/hadoop-3.2.2/bin/hdfs namenode -format
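Run `hdfs namenode -format` only once. Formatting again discards the existing NameNode metadata and assigns a new clusterID, so the existing DataNode no longer registers. With the `hadoop.tmp.dir` set in core-site.xml above, the NameNode metadata lives in the default `dfs.namenode.name.dir`, which is `${hadoop.tmp.dir}/dfs/name`, and a formatted directory contains `current/VERSION`. The following minimal guard assumes that default has not been overridden. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# is_namenode_formatted NAME_DIR
# Hypothetical helper: succeeds if NAME_DIR already holds a formatted NameNode
# image, detected by the current/VERSION file that `hdfs namenode -format` creates.
is_namenode_formatted() {
  [[ -f "$1/current/VERSION" ]]
}

# Example for this guide (assumes dfs.namenode.name.dir is left at its default):
#   name_dir=/var/local/hbase/hadoop-3.2.2/run/hadoop/dfs/name
#   if is_namenode_formatted "$name_dir"; then
#     echo "already formatted, skipping"
#   else
#     /var/local/hbase/hadoop-3.2.2/bin/hdfs namenode -format
#   fi
```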

- Edit the HDFS start script:

vi /var/local/hbase/hadoop-3.2.2/sbin/start-dfs.sh
## Add near the top (below the shebang line)
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root

- Edit the HDFS stop script:

vi /var/local/hbase/hadoop-3.2.2/sbin/stop-dfs.sh
## Add near the top (below the shebang line)
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root

- Edit the YARN start script:

vi /var/local/hbase/hadoop-3.2.2/sbin/start-yarn.sh
## Add near the top (below the shebang line)
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root

- Edit the YARN stop script:

vi /var/local/hbase/hadoop-3.2.2/sbin/stop-yarn.sh
## Add near the top (below the shebang line)
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
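Editing the four `sbin` scripts works, but the edits are lost if Hadoop is re-extracted. A commonly used alternative is to export the same `*_USER` variables once in `etc/hadoop/hadoop-env.sh`, which the Hadoop start/stop scripts source. The following sketch appends each variable only if it is not already exported there. The helper name is hypothetical.

The sketch leaves out `HADOOP_SECURE_DN_USER`. Hadoop 3 treats that name as deprecated in favor of `HDFS_DATANODE_SECURE_USER`, and it is only relevant for a secure DataNode.

```shell
#!/usr/bin/env bash
# add_hadoop_run_users HADOOP_ENV_FILE
# Hypothetical helper: appends "export VAR=root" for the HDFS and YARN daemon
# run-as variables to hadoop-env.sh, skipping any that are already exported there.
add_hadoop_run_users() {
  local env_file=$1 var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    if ! grep -q "^export ${var}=" "$env_file"; then
      echo "export ${var}=root" >> "$env_file"
    fi
  done
}

# Example:
#   add_hadoop_run_users /var/local/hbase/hadoop-3.2.2/etc/hadoop/hadoop-env.sh
```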

- Start HDFS

export JAVA_HOME=/usr/lib/jvm/jre
## Start
/var/local/hbase/hadoop-3.2.2/sbin/start-dfs.sh
## Stop
/var/local/hbase/hadoop-3.2.2/sbin/stop-dfs.sh

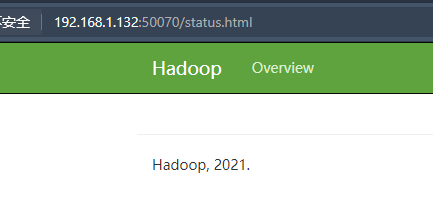

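- Verify in a browser

http://192.168.1.132:50070/ (with the hdfs-site.xml above, port 50070 serves the SecondaryNameNode web UI; the NameNode web UI in Hadoop 3.x listens on port 9870 by default: http://192.168.1.132:9870/)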

- Start YARN

## Start
/var/local/hbase/hadoop-3.2.2/sbin/start-yarn.sh
## Stop
/var/local/hbase/hadoop-3.2.2/sbin/stop-yarn.sh

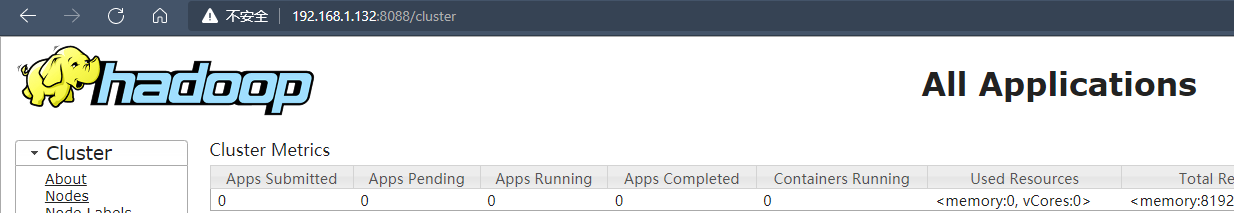

- Verify in a browser

http://192.168.1.132:8088/
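Besides the web UIs, `jps` (part of the JDK, installed here by the `-devel` package) lists running Java processes as `PID MainClass`. The following minimal sketch reports which expected daemons are missing from that output. The helper name is hypothetical, and the default list assumes the single-node HDFS + YARN layout above.

```shell
#!/usr/bin/env bash
# missing_daemons [NAME ...]
# Hypothetical helper: reads `jps` output ("PID MainClass" per line) on stdin and
# prints each expected daemon name that does not appear. Exit status 0 = all found.
missing_daemons() {
  local expected=("$@") out name status=0
  if (( ${#expected[@]} == 0 )); then
    expected=(NameNode DataNode SecondaryNameNode ResourceManager NodeManager)
  fi
  # Keep only the main-class column
  out=$(awk '{print $2}')
  for name in "${expected[@]}"; do
    if ! grep -qx "$name" <<< "$out"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}

# Example:
#   jps | missing_daemons || echo "some Hadoop daemons are not running"
```

The same check works for the other services, e.g. `jps | missing_daemons QuorumPeerMain HMaster HRegionServer` once ZooKeeper and HBase are up.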

4. Install HBase

Download: https://www.apache.org/dyn/closer.lua/hbase/2.3.6/hbase-2.3.6-bin.tar.gz

- Extract

tar -xzvf hbase-2.3.6-bin.tar.gz

- Configure environment variables

vi /etc/profile
## Append:
export HBASE_HOME=/var/local/hbase/hbase-2.3.6
export PATH=$HBASE_HOME/bin:$PATH
## Apply the changes
source /etc/profile

- Edit the HBase configuration file hbase-env.sh

vi /var/local/hbase/hbase-2.3.6/conf/hbase-env.sh
## Uncomment and change these two lines
export JAVA_HOME=/usr/lib/jvm/jre ## around line 28
export HBASE_MANAGES_ZK=false ## around line 126; use the external ZooKeeper installed above

- Edit hbase-site.xml

vi /var/local/hbase/hbase-2.3.6/conf/hbase-site.xml

<configuration>
  <!-- Run HBase in distributed mode -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- Store HBase data in the /hbase directory on HDFS; HBase creates the directory itself, so it need not exist beforehand -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop1:8020/hbase</value>
  </property>
  <!-- ZooKeeper data directory; only used when HBase manages ZooKeeper itself, so it has no effect with HBASE_MANAGES_ZK=false -->
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/var/local/hbase/apache-zookeeper-3.5.9-bin/run/data</value>
  </property>
  <!-- HBase Master web UI port (16010 is the default) -->
  <property>
    <name>hbase.master.info.port</name>
    <value>16010</value>
  </property>
  <!-- RegionServer web UI port (16030 is the default) -->
  <property>
    <name>hbase.regionserver.info.port</name>
    <value>16030</value>
  </property>
  <!-- Use the external ZooKeeper -->
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop1:2181</value>
  </property>
  <!-- Work around the hostname resolving to localhost -->
  <property>
    <name>hbase.master.ipc.address</name>
    <value>0.0.0.0</value>
  </property>
  <!-- Work around the hostname resolving to localhost -->
  <property>
    <name>hbase.regionserver.ipc.address</name>
    <value>0.0.0.0</value>
  </property>
</configuration>

- Edit the regionservers file

vim /var/local/hbase/hbase-2.3.6/conf/regionservers
## Replace localhost with hadoop1

- Start HBase

## Start
/var/local/hbase/hbase-2.3.6/bin/start-hbase.sh
## Stop
/var/local/hbase/hbase-2.3.6/bin/stop-hbase.sh

- Verify in a browser

http://192.168.1.132:16010

- Start all services (in this order: ZooKeeper, HDFS, YARN, HBase)

/var/local/hbase/apache-zookeeper-3.5.9-bin/bin/zkServer.sh start
/var/local/hbase/hadoop-3.2.2/sbin/start-dfs.sh
/var/local/hbase/hadoop-3.2.2/sbin/start-yarn.sh
/var/local/hbase/hbase-2.3.6/bin/start-hbase.sh
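These commands start the services in dependency order. ZooKeeper comes first because HBase uses it as an external quorum, and HDFS comes before HBase because HBase stores its data under `hdfs://hadoop1:8020/hbase`.

The following minimal wrapper sketch assumes the install paths used throughout this guide. It stops at the first failing command and stops services in reverse order. Setting `DRY_RUN=1` only prints the commands. The script name, the `BASE` override and the `DRY_RUN` switch belong to this sketch and are not part of Hadoop or HBase.

```shell
#!/usr/bin/env bash
# start-stack.sh (hypothetical): start or stop ZooKeeper, HDFS, YARN and HBase.
# Usage: start-stack.sh start|stop   (set DRY_RUN=1 to only print the commands)
set -euo pipefail

BASE=${BASE:-/var/local/hbase}
export JAVA_HOME=${JAVA_HOME:-/usr/lib/jvm/jre}

# run CMD...: execute CMD, or only print it when DRY_RUN is non-empty
run() {
  if [[ -n ${DRY_RUN:-} ]]; then
    echo "$*"
  else
    "$@"
  fi
}

start_stack() {
  run "$BASE/apache-zookeeper-3.5.9-bin/bin/zkServer.sh" start
  run "$BASE/hadoop-3.2.2/sbin/start-dfs.sh"
  run "$BASE/hadoop-3.2.2/sbin/start-yarn.sh"
  run "$BASE/hbase-2.3.6/bin/start-hbase.sh"
}

# Stop in the reverse order of startup
stop_stack() {
  run "$BASE/hbase-2.3.6/bin/stop-hbase.sh"
  run "$BASE/hadoop-3.2.2/sbin/stop-yarn.sh"
  run "$BASE/hadoop-3.2.2/sbin/stop-dfs.sh"
  run "$BASE/apache-zookeeper-3.5.9-bin/bin/zkServer.sh" stop
}

# Only dispatch when an action is given, so the file can also be sourced
if (( $# > 0 )); then
  case "$1" in
    start) start_stack ;;
    stop)  stop_stack ;;
    *)     echo "usage: $0 start|stop" >&2; exit 1 ;;
  esac
fi
```

For example, `DRY_RUN=1 ./start-stack.sh start` prints the four start commands without running them.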