Loss Functions

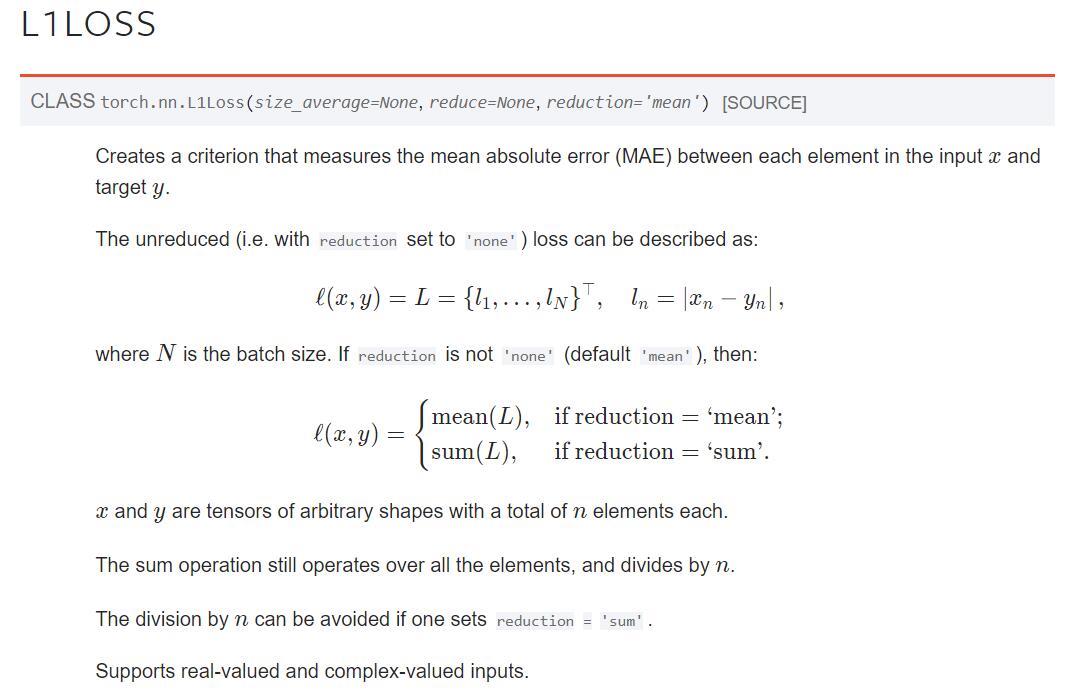

nn.L1Loss()

- The absolute differences can be averaged or summed: `reduction='mean'` (the default) or `reduction='sum'`

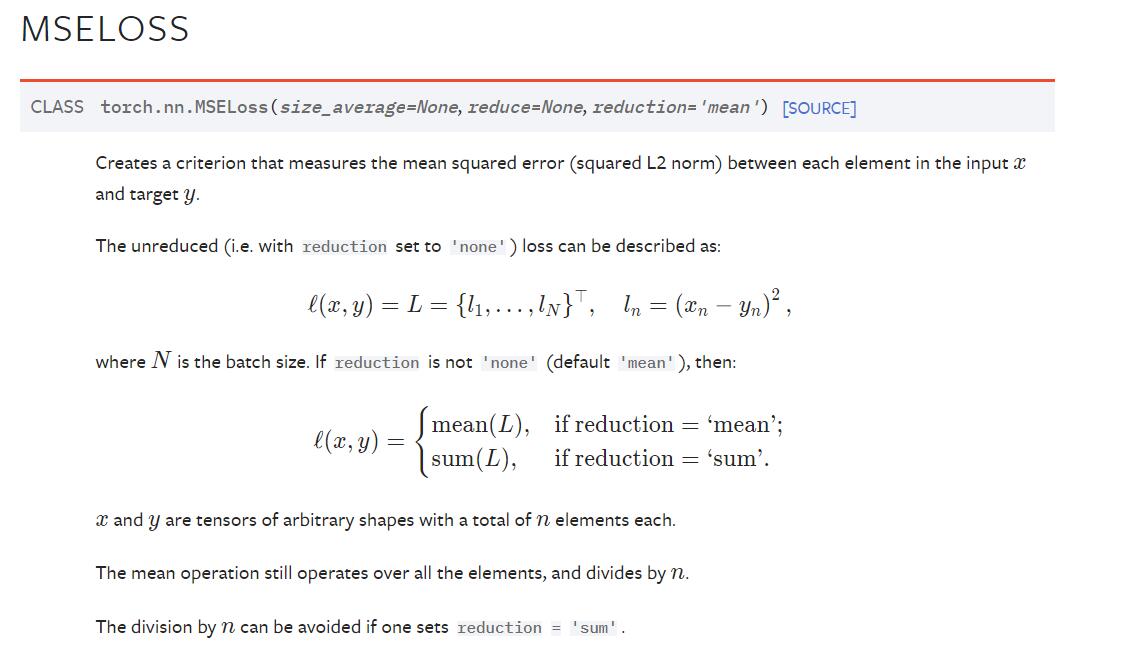

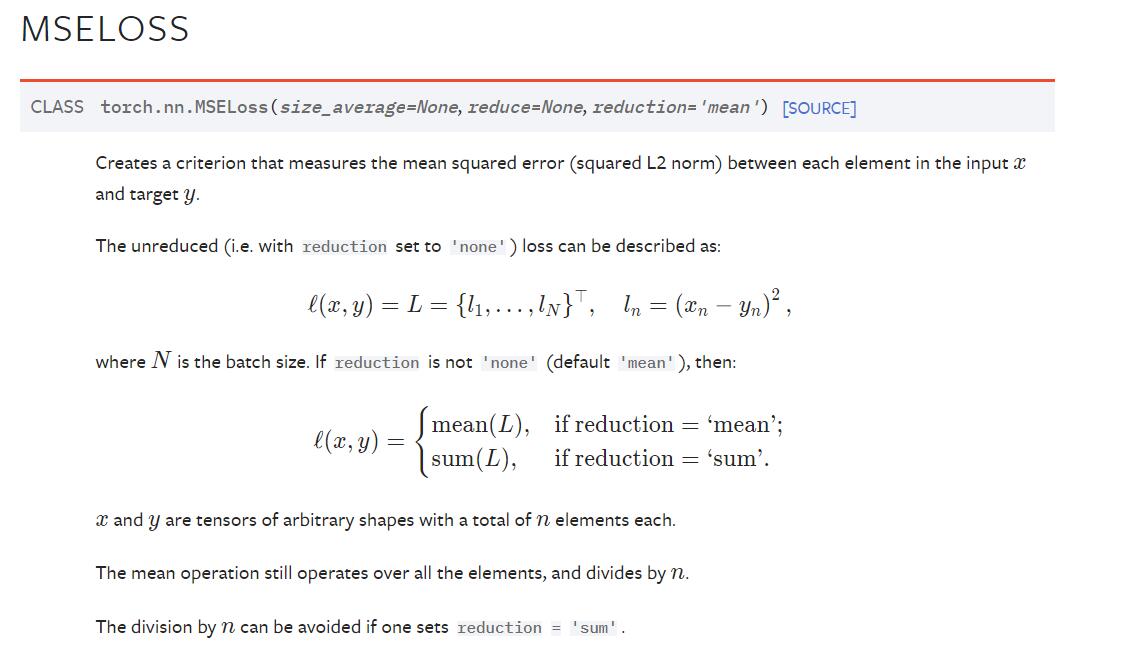
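Below is a minimal sketch of what the two `reduction` modes compute. It reuses the `[1, 2, 5]` vs `[1, 2, 3]` values from the example code further down. The element-wise absolute differences are averaged for `'mean'` and added up for `'sum'`. The helper `manual_l1` is hypothetical and exists only for this comparison.

```python
import torch
from torch import nn

pred = torch.tensor([1.0, 2.0, 5.0])
target = torch.tensor([1.0, 2.0, 3.0])


def manual_l1(pred, target, reduction="mean"):
    # Hypothetical helper: element-wise |pred - target|, then reduce
    diff = (pred - target).abs()
    return diff.mean() if reduction == "mean" else diff.sum()


# Compare against nn.L1Loss for both reduction modes
for reduction in ("mean", "sum"):
    builtin = nn.L1Loss(reduction=reduction)(pred, target)
    manual = manual_l1(pred, target, reduction)
    print(reduction, builtin.item(), manual.item())
```

For these tensors the absolute differences are `[0, 0, 2]`, so `'mean'` gives about 0.6667 and `'sum'` gives 2.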

nn.MSELoss()

- Same as L1Loss(), except that each difference is squared before averaging or summing

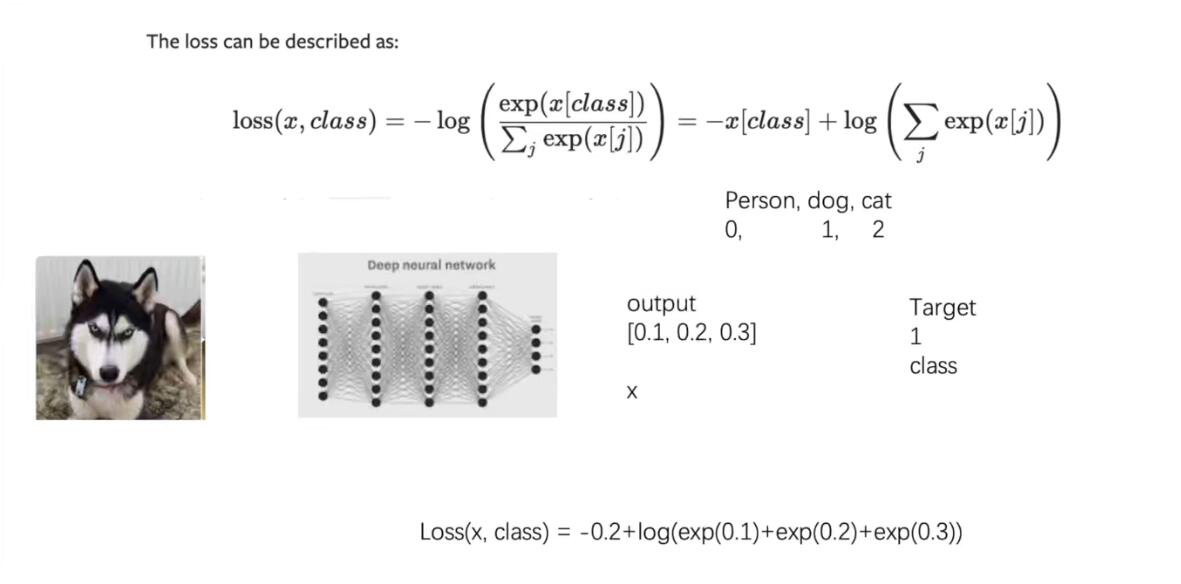

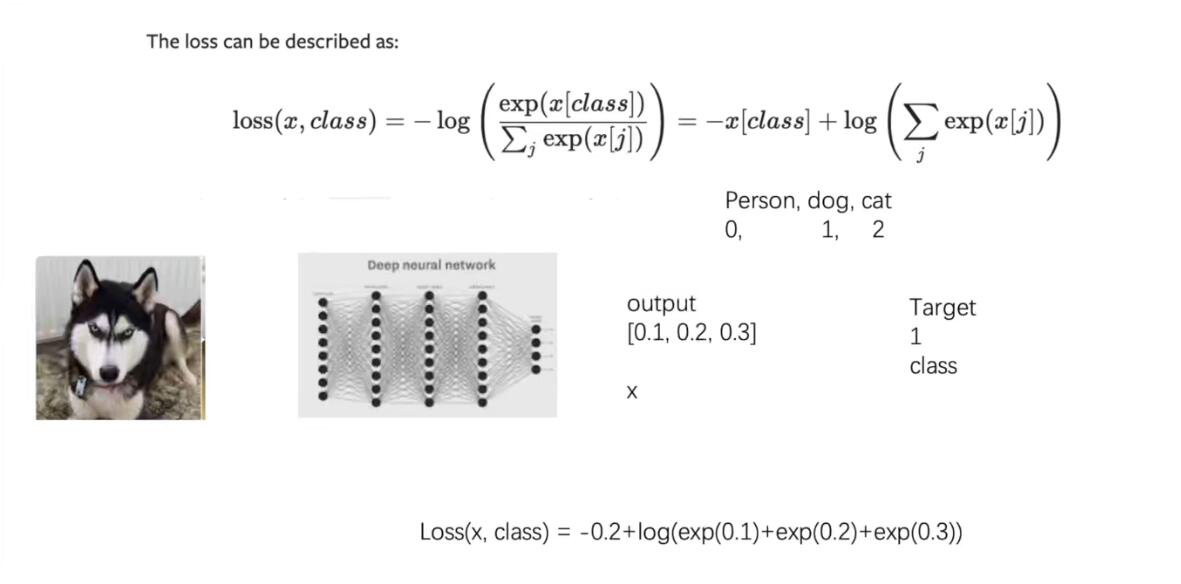
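The next sketch shows the extra squaring step on the same `[1, 2, 5]` vs `[1, 2, 3]` values. It checks `nn.MSELoss` against a hand computation for both reduction modes. The variable names are illustrative only.

```python
import torch
from torch import nn

pred = torch.tensor([1.0, 2.0, 5.0])
target = torch.tensor([1.0, 2.0, 3.0])

# Squared differences: (1-1)^2, (2-2)^2, (5-3)^2 -> [0, 0, 4]
sq_diff = (pred - target) ** 2

mse_mean = nn.MSELoss()(pred, target)                # default reduction='mean'
mse_sum = nn.MSELoss(reduction="sum")(pred, target)

print(mse_mean.item(), sq_diff.mean().item())
print(mse_sum.item(), sq_diff.sum().item())
```

Compared with L1Loss, the single error of 2 becomes 4 after squaring. Larger errors are therefore penalized more heavily.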

nn.CrossEntropyLoss()

- Example code:

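```python
import torch
from torch.nn import L1Loss, MSELoss, CrossEntropyLoss

input = torch.tensor([1, 2, 5], dtype=torch.float32)
target = torch.tensor([1, 2, 3], dtype=torch.float32)

input = torch.reshape(input, (1, 1, 1, 3))
target = torch.reshape(target, (1, 1, 1, 3))

# L1Loss(): sum of absolute differences |0| + |0| + |2|
loss = L1Loss(reduction='sum')
result = loss(input, target)

# MSELoss(): mean of squared differences (0 + 0 + 4) / 3
mse_loss = MSELoss()
result_mse = mse_loss(input, target)

print(result)       # tensor(2.)
print(result_mse)   # tensor(1.3333)

# CrossEntropyLoss(): x holds raw scores for 3 classes, y is the target class index
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
x = torch.reshape(x, (1, 3))  # shape (batch_size=1, num_classes=3)
loss_cross = CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)  # tensor(1.1019)
```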
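`CrossEntropyLoss` takes raw, unnormalized scores (logits) of shape `(N, C)` and class indices of shape `(N,)`. For a single sample it computes `-x[y] + log(sum_j exp(x[j]))`. The minimal sketch below recomputes the `result_cross` value from the example above by hand in two equivalent ways. The first uses `torch.logsumexp` and the second uses `torch.log_softmax`.

```python
import torch
from torch import nn

# Same inputs as the example: one sample, three class scores, target class 1
x = torch.tensor([[0.1, 0.2, 0.3]])   # shape (N=1, C=3)
y = torch.tensor([1])                 # shape (N=1,)

builtin = nn.CrossEntropyLoss()(x, y)

# By hand: minus the target-class score, plus log of the sum of exp over all classes
manual = -x[0, y.item()] + torch.logsumexp(x[0], dim=0)

# Equivalent: negative log of the softmax probability assigned to the target class
via_softmax = -torch.log_softmax(x, dim=1)[0, y.item()]

print(builtin.item(), manual.item(), via_softmax.item())
```

All three values agree, at about 1.1019. This is why no softmax layer is applied before `CrossEntropyLoss`: the loss applies log-softmax internally.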

Backpropagation

```python
import torchvision
from torch import nn
from torch.nn import Conv2d, Sequential, MaxPool2d, Flatten, Linear, CrossEntropyLoss
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./download_data", transform=torchvision.transforms.ToTensor(),
                                       train=False, download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Liucy(nn.Module):
    def __init__(self):
        super(Liucy, self).__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


liu = Liucy()
cross = CrossEntropyLoss()
for data in dataloader:
    img, label = data
    output = liu(img)
    # print(output)  # the output has 10 scores, one per CIFAR-10 class
    result = cross(output, label)
    # Compute gradients of the loss w.r.t. every parameter.
    # They accumulate across iterations because they are never zeroed here.
    result.backward()
    print(result)
```
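Two things the loop above relies on can be checked without downloading CIFAR-10.

The first is why the layer is `Linear(1024, 64)`. Each `Conv2d(..., 5, padding=2)` keeps the 32×32 spatial size, and each `MaxPool2d(2)` halves it (32 → 16 → 8 → 4). As a result, `Flatten()` produces 64 × 4 × 4 = 1024 features.

The second is what `backward()` does. It fills each parameter's `.grad` and does not change any weights by itself.

The minimal sketch below rebuilds the same layers as `Liucy`. It assumes a random tensor stands in for one CIFAR-10 image and uses an arbitrary label `3` for illustration.

```python
import torch
from torch import nn

# Same layers as Liucy above, rebuilt so the sketch is self-contained
model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),   # 32x32 -> 16x16
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),  # 16x16 -> 8x8
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),  # 8x8 -> 4x4
    nn.Flatten(),                                      # 64 * 4 * 4 = 1024
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

img = torch.randn(1, 3, 32, 32)   # stand-in for one CIFAR-10 image (batch_size=1)
label = torch.tensor([3])         # arbitrary class index, for illustration only

# Run only the conv/pool/flatten part to see the size fed into Linear(1024, 64)
features = model[:7](img)
print(features.shape)             # torch.Size([1, 1024])

first_conv = model[0]
print(first_conv.weight.grad)     # None: no backward pass has run yet

loss = nn.CrossEntropyLoss()(model(img), label)
loss.backward()

# backward() has populated .grad with a tensor shaped like the weight itself
print(first_conv.weight.grad.shape)   # torch.Size([32, 3, 5, 5])
```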