GitHub

https://github.com/alibaba/DataX

https://github.com/alibaba/DataX/blob/master/introduction.md

https://github.com/alibaba/DataX/blob/master/userGuid.md

JDK安装

https://www.oracle.com/java/technologies/downloads/archive/

版本说明

Linux x86 RPM Package //适用于32bit的centos、rethat(linux)操作系统

Linux x64 RPM Package //适用于64bit的centos、rethat(linux)操作系统

Linux x86 Compressed Archive //适用于32bit的Linux操作系统

Linux x64 Compressed Archive //适用于64bit的Linux操作系统

简介

DataX 是阿里云 DataWorks数据集成 的开源版本,在阿里巴巴集团内被广泛使用的离线数据同步工具/平台。DataX 实现了包括 MySQL、Oracle、OceanBase、SqlServer、Postgre、HDFS、Hive、ADS、HBase、TableStore(OTS)、MaxCompute(ODPS)、Hologres、DRDS 等各种异构数据源之间高效的数据同步功能。

本示例测试使用的sqlserver将一部分表全量同步到mysql。

背景需求

将某个sql server数据库的某个表的数据以离线作业方式同步到另外一个sql server数据库的表中.

添加驱动

Microsoft JDBC Driver for SQL Server 发行说明

https://github.com/microsoft/mssql-jdbc/tags

查看配置模板

python3 /usr/local/datax/bin/datax.py -r sqlserverreader -w mysqlwriter

{"job": {"content": [{"reader": {"name": "sqlserverreader","parameter": {"connection": [{"jdbcUrl": [],"table": []}],"password": "","username": ""}},"writer": {"name": "mysqlwriter","parameter": {"column": [],"connection": [{"jdbcUrl": "","table": []}],"password": "","preSql": [],"session": [],"username": "","writeMode": ""}}}],"setting": {"speed": {"channel": ""}}}}

:::warning 注意,在sqlserverreader的parameter配置项里,是少了column的配置项的,需要自己手工加上 :::

同步cap_published表配置文件

USE [es_test]GO/****** Object: Table [dbo].[cap_published] Script Date: 2022-10-10 12:11:22 ******/SET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOCREATE TABLE [dbo].[cap_published]([Id] [bigint] NOT NULL,[Version] [nvarchar](20) NOT NULL,[Name] [nvarchar](200) NOT NULL,[Content] [nvarchar](max) NULL,[Retries] [int] NOT NULL,[Added] [datetime2](7) NOT NULL,[ExpiresAt] [datetime2](7) NULL,[StatusName] [nvarchar](50) NOT NULL,CONSTRAINT [PK_cap.Published] PRIMARY KEY CLUSTERED([Id] ASC)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY]) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]GO

{"job": {"content": [{"reader": {"name": "sqlserverreader","parameter": {"column": ["Id","Version","Name","Content","Retries","Added","ExpiresAt","StatusName"],"connection": [{"jdbcUrl": ["jdbc:sqlserver://192.168.3.40:1433;DatabaseName=es_test"],"table": ["cap_published"]}],"password": "longfuchu","username": "sa"}},"writer": {"name": "sqlserverwriter","parameter": {"column": ["Id","Version","Name","Content","Retries","Added","ExpiresAt","StatusName"],"connection": [{"jdbcUrl": "jdbc:sqlserver://192.168.3.40:1433;DatabaseName=es_test2","table": ["cap_published"]}],"password": "longfuchu","postSql": [],"preSql": [],"username": "sa"}}}],"setting": {"speed": {"channel": "5"}}}}

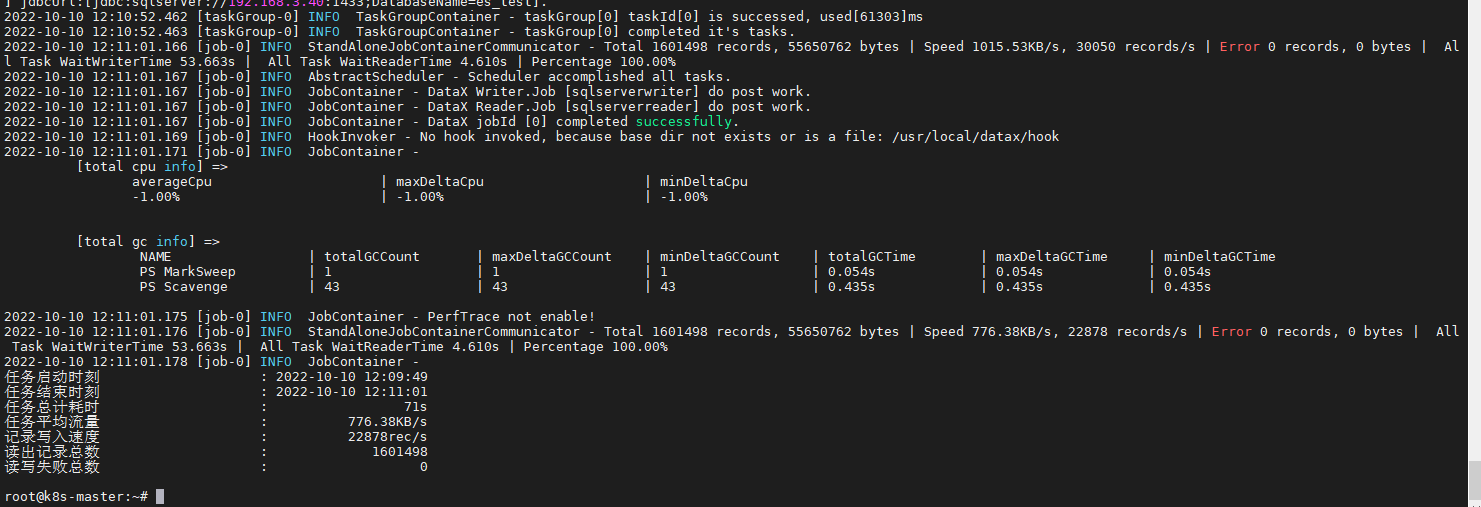

验证

python3 /usr/local/datax/bin/datax.py /usr/local/datax/job/mssqltomssql-published.json

同步V_Blood_BOutItem表配置文件

USE [es_test2]GO/****** Object: Table [dbo].[V_Blood_BOutItem] Script Date: 2022-10-10 12:12:19 ******/SET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOCREATE TABLE [dbo].[V_Blood_BOutItem]([id] [int] NOT NULL,[deptno] [int] NOT NULL,[deptname] [varchar](65) NULL,[bloodno] [varchar](20) NULL,[bloodname] [varchar](65) NULL,[boutcount] [float] NULL,[bloodunitname] [varchar](65) NULL,[bodate] [datetime] NULL,CONSTRAINT [PK_V_Blood_BOutItem] PRIMARY KEY CLUSTERED([id] ASC)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY]) ON [PRIMARY]GOALTER TABLE [dbo].[V_Blood_BOutItem] ADD CONSTRAINT [DF__V_Blood_BOut__id__239E4DCF] DEFAULT (NULL) FOR [id]GOALTER TABLE [dbo].[V_Blood_BOutItem] ADD CONSTRAINT [DF__V_Blood_B__boutc__24927208] DEFAULT (NULL) FOR [boutcount]GO

{"job": {"content": [{"reader": {"name": "sqlserverreader","parameter": {"column": ["id","deptno","deptname","bloodno","bloodname","boutcount","bloodunitname","bodate"],"connection": [{"jdbcUrl": ["jdbc:sqlserver://192.168.3.40:1433;DatabaseName=es_test"],"table": ["V_Blood_BOutItem"]}],"password": "longfuchu","username": "sa"}},"writer": {"name": "sqlserverwriter","parameter": {"column": ["id","deptno","deptname","bloodno","bloodname","boutcount","bloodunitname","bodate"],"connection": [{"jdbcUrl": "jdbc:sqlserver://192.168.3.40:1433;DatabaseName=es_test2","table": ["V_Blood_BOutItem"]}],"password": "longfuchu","postSql": [],"preSql": [],"username": "sa"}}}],"setting": {"speed": {"channel": "5"}}}}

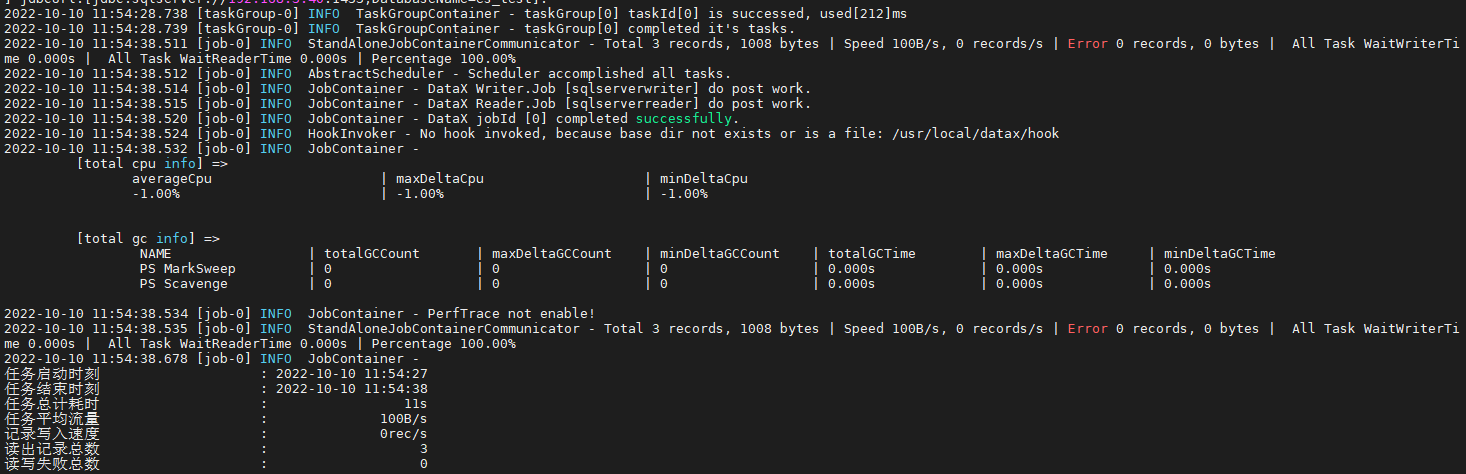

验证

python3 /usr/local/datax/bin/datax.py /usr/local/datax/job/mssqltomssql-outitems.json

执行结果