Service

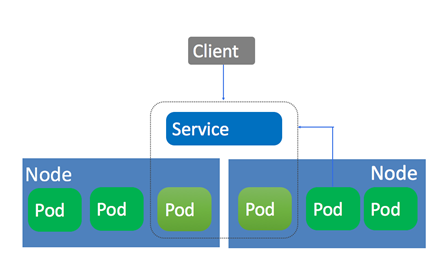

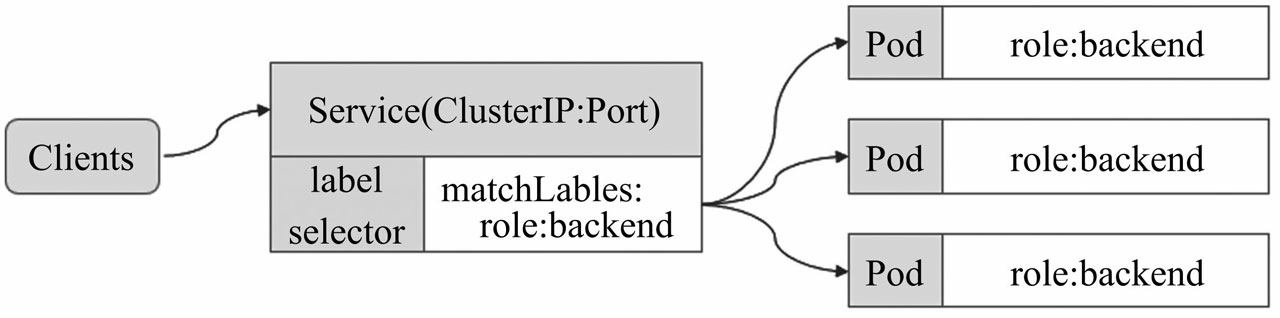

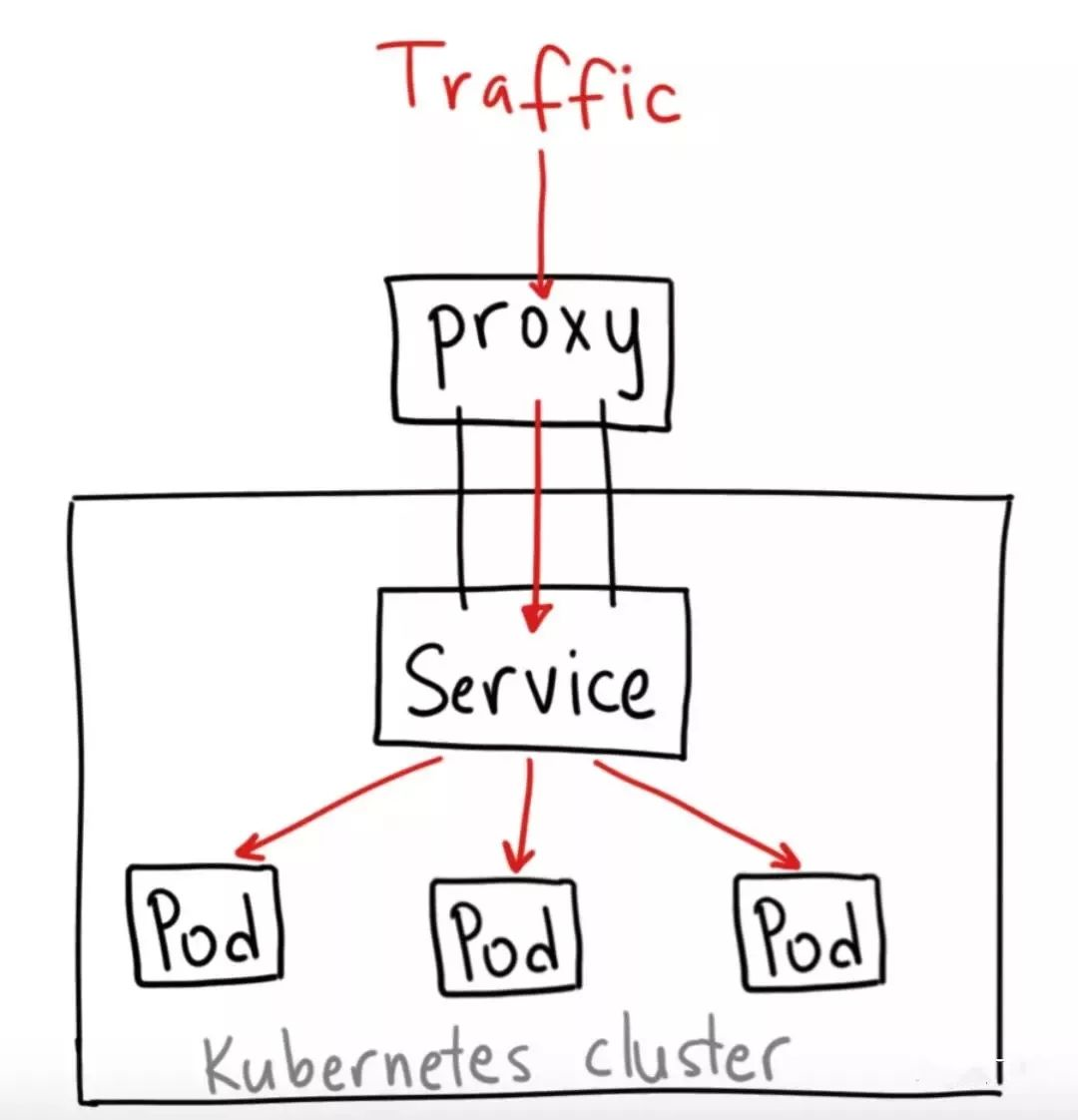

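A Service provides a single entry point for a group of Pods that perform the same function, and gives them load balancing and automatic service discovery. The set of Pods a Service targets is usually determined by a selector.<br /> Why is a Service needed?<br /> Accessing an application directly through a Pod's IP and port is unreliable, because Pods are short-lived: every time a Pod is deleted it is recreated, and its IP address changes.<br />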

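Service types (how a Service forwards traffic to its backends)

ClusterIP: exposes the Service on a cluster-internal IP, so that it is reachable from within the cluster. This is the default ServiceType.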

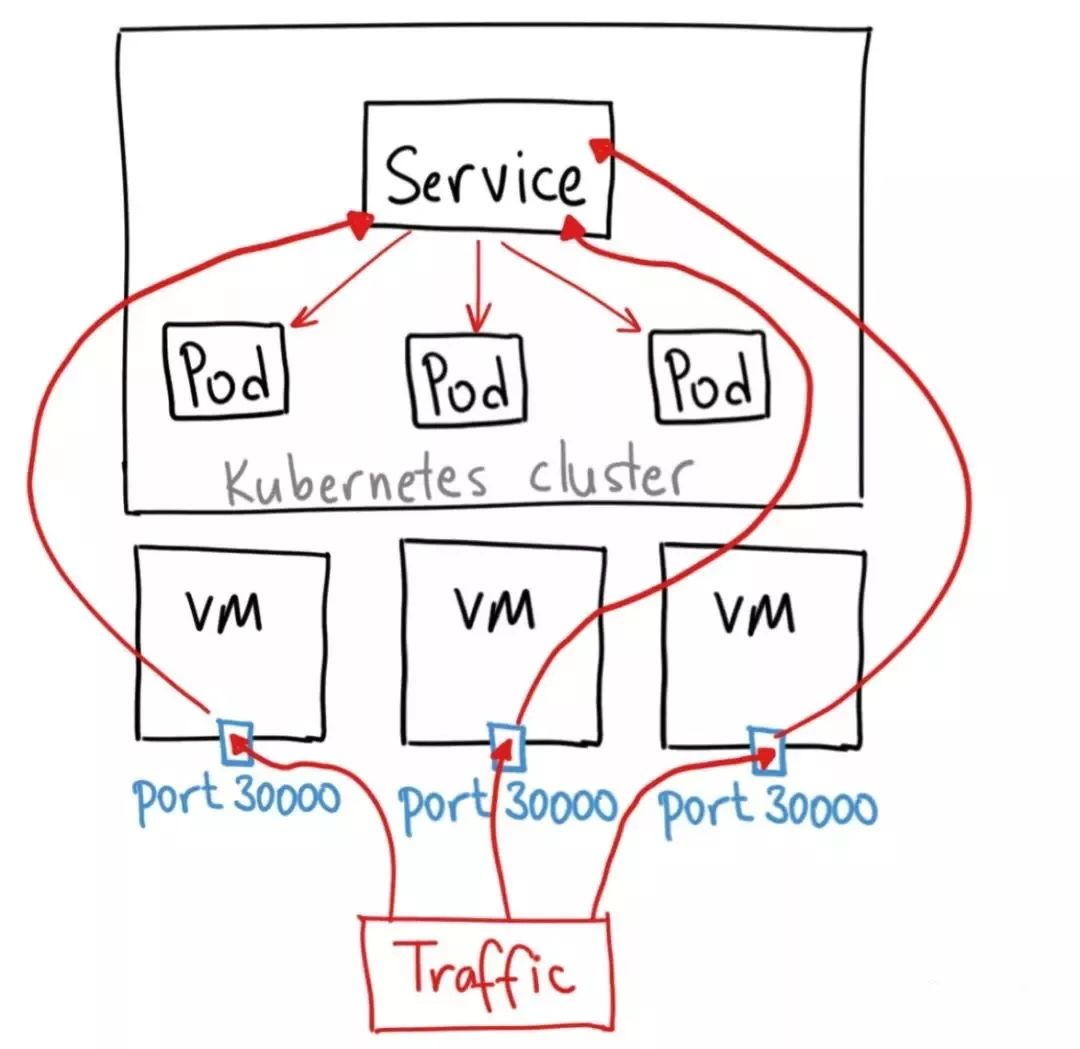

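NodePort: exposes the Service on each Node's IP at a static port (the NodePort). By requesting NodeIP:NodePort, the Service can be reached from outside the cluster.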

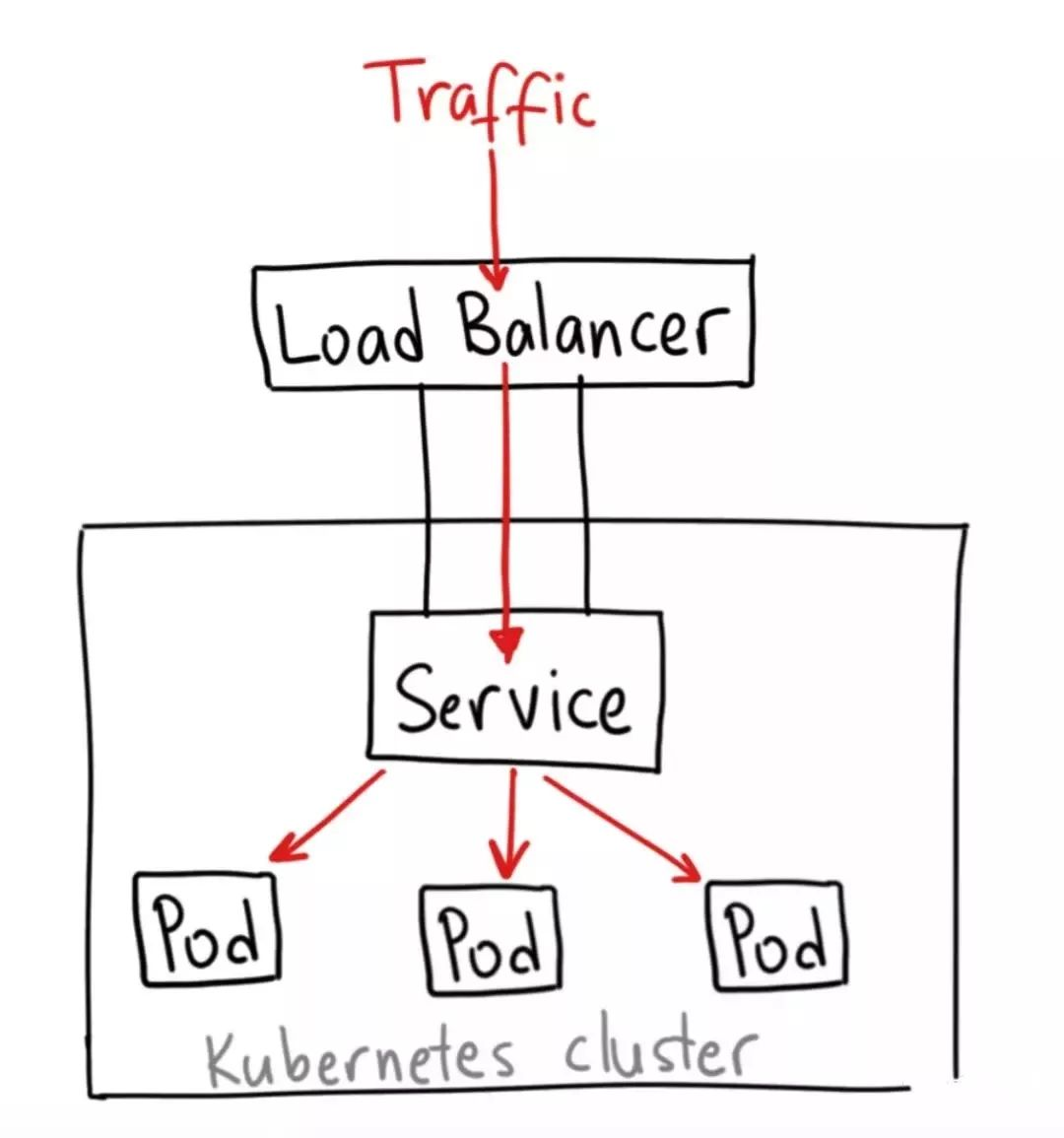

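LoadBalancer: exposes the Service externally through a cloud provider's load balancer. The external load balancer routes traffic to the Service, and from there to the application.

ExternalName: relies on DNS. It maps a service outside the cluster to a name inside the Kubernetes cluster, so that the external service can be reached through the Service's name.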

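The three kinds of Service ports

port: the port the Service exposes inside the cluster

targetPort: the port exposed by the Pod

nodePort: the port the Service exposes outside the cluster, on every node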

Ways to access a Service from outside the cluster

NodePort

LoadBalancer

ExternalIP

Structure of the ClusterIP Service type

Structure of the NodePort Service type

The LoadBalancer Service type

cat << EOF > nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-app
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
EOF

1. Create a ClusterIP Service

Method 1: create it from the command line

kubectl expose deployment DEPLOYMENT_NAME --target-port=80 --port=80 --type=ClusterIP

Method 2: create it from a YAML file

cat << EOF > clusterip.yaml
kind: Service
apiVersion: v1
metadata:
  name: my-service
spec:
  type: ClusterIP
  selector:
    app: nginx-app
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF

[liwm@rmaster01 liwm]$ kubectl get service
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.43.0.1       <none>        443/TCP   18d
my-service   ClusterIP   10.43.160.136   <none>        80/TCP    20h
[liwm@rmaster01 liwm]$ kubectl describe service my-service
Name:              my-service
Namespace:         default
Labels:            <none>
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"my-service","namespace":"default"},"spec":{"ports":[{"port":80,"p...
Selector:          app=nginx
Type:              ClusterIP
IP:                10.43.160.136
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         <none>
Session Affinity:  None
Events:            <none>
[liwm@rmaster01 liwm]$

Resource record format:

SVC_NAME.NS_NAME.DOMAIN.LTD

The default domain suffix of a Service's A record is svc.cluster.local, for example:

my-service.default.svc.cluster.local
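The record format can be illustrated with a small helper. This is only a sketch: the function name `service_fqdn` is hypothetical, and `cluster.local` is assumed to be the cluster domain (a common default, but a cluster may be configured with a different one).

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build a Service DNS name: <service>.<namespace>.svc.<cluster domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# The Service and namespace names below are the ones used in these notes.
print(service_fqdn("my-service"))
print(service_fqdn("my-service", namespace="prod"))
```

The namespace is part of the name, which is why two Services called `my-service` can coexist in `default` and `prod` without clashing.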

2. Create a NodePort Service

Method 1: create it from the command line

kubectl expose deployment DEPLOYMENT_NAME --target-port=80 --port=80 --type=NodePort

Method 2: create it from a YAML file

cat << EOF > nodeport.yaml
kind: Service
apiVersion: v1
metadata:
  name: nodeport-service
spec:
  type: NodePort
  selector:
    app: nginx-app
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF

[liwm@rmaster01 liwm]$ kubectl create -f nodeport.yaml
service/nodeport-service created
[liwm@rmaster01 liwm]$ kubectl get service
NAME               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes         ClusterIP   10.43.0.1       <none>        443/TCP        18d
my-service         ClusterIP   10.43.160.136   <none>        80/TCP         20h
nodeport-service   NodePort    10.43.171.253   <none>        80:31120/TCP   6s
[liwm@rmaster01 liwm]$ kubectl describe service nodeport-service
Name:                     nodeport-service
Namespace:                default
Labels:                   <none>
Annotations:              field.cattle.io/publicEndpoints:
                            [{"addresses":["192.168.31.133"],"port":31120,"protocol":"TCP","serviceName":"default:nodeport-service","allNodes":true}]
Selector:                 app=nginx
Type:                     NodePort
IP:                       10.43.171.253
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  31120/TCP
Endpoints:                <none>
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
[liwm@rmaster01 liwm]$

Check which Pod endpoints back the Service

cat << EOF > nodeport-pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
EOF

[liwm@rmaster01 liwm]$ kubectl describe service nodeport-service
Name:                     nodeport-service
Namespace:                default
Labels:                   <none>
Annotations:              field.cattle.io/publicEndpoints:
                            [{"addresses":["192.168.31.133"],"port":31120,"protocol":"TCP","serviceName":"default:nodeport-service","allNodes":true}]
Selector:                 app=nginx
Type:                     NodePort
IP:                       10.43.171.253
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  31120/TCP
Endpoints:                10.42.2.163:80,10.42.4.253:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
[liwm@rmaster01 liwm]$ kubectl get endpoints
NAME               ENDPOINTS                                                     AGE
kubernetes         192.168.31.130:6443,192.168.31.131:6443,192.168.31.132:6443   18d
my-service         10.42.2.163:80,10.42.4.253:80                                 20h
nodeport-service   10.42.2.163:80,10.42.4.253:80                                 7m50s
[liwm@rmaster01 liwm]$

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-74dc4cb5fb-52wfz 1/1 Running 0 12m

nginx-deployment-74dc4cb5fb-b4gwc 1/1 Running 0 11m

[liwm@rmaster01 liwm]$ kubectl exec -it nginx-deployment-74dc4cb5fb-52wfz bash

root@nginx-deployment-74dc4cb5fb-52wfz:/# cd /usr/share/nginx/html/

root@nginx-deployment-74dc4cb5fb-52wfz:/usr/share/nginx/html# echo 1 > index.html

root@nginx-deployment-74dc4cb5fb-52wfz:/usr/share/nginx/html# exit

exit

[liwm@rmaster01 liwm]$ kubectl exec -it nginx-deployment-74dc4cb5fb-b4gwc bash

root@nginx-deployment-74dc4cb5fb-b4gwc:/# cd /usr/share/nginx/html/

root@nginx-deployment-74dc4cb5fb-b4gwc:/usr/share/nginx/html# echo 2 > index.html

root@nginx-deployment-74dc4cb5fb-b4gwc:/usr/share/nginx/html# exit

exit

[liwm@rmaster01 liwm]$ for a in {1..10}; do curl http://10.43.171.253 && sleep 1s; done

1

2

1

2

1

1

1

2

2

1

[liwm@rmaster01 liwm]$

[liwm@rmaster01 liwm]$ for a in {1..10}; do curl http://192.168.31.130:31120 && sleep 1s; done

1

2

1

1

2

2

2

2

1

2

[liwm@rmaster01 liwm]$

3. Headless Services

cat << EOF > headless.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
EOF

4. ExternalName

cat << EOF > externalname.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
  namespace: prod
spec:
  type: ExternalName
  externalName: my.database.example.com
EOF

5. External IPs

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: nginx
  ports:
  - name: http
    protocol: TCP
    port: 18080
    targetPort: 9376
  externalIPs:
  - 172.31.53.96

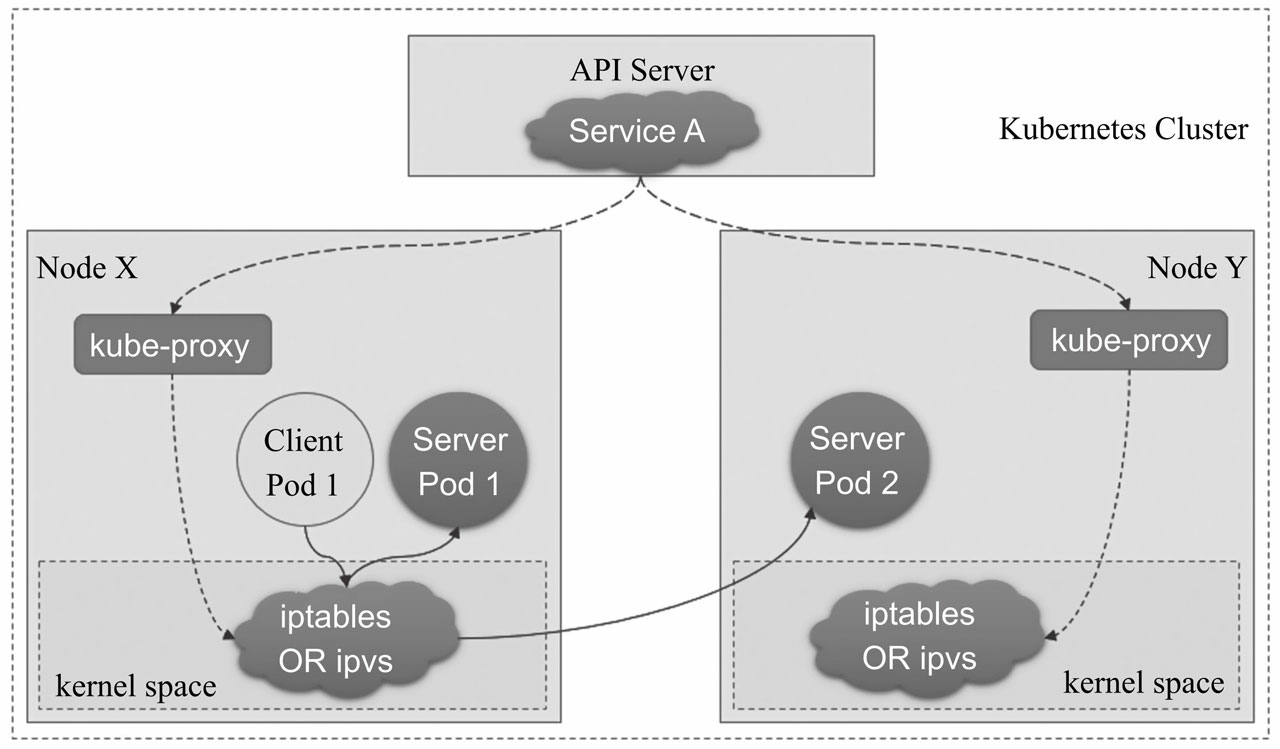

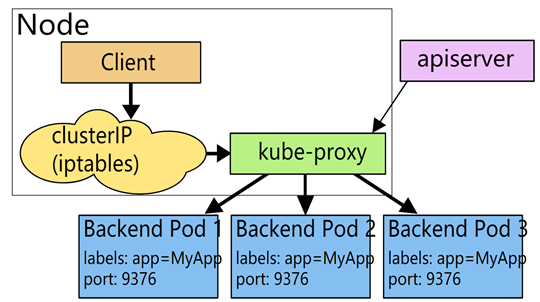

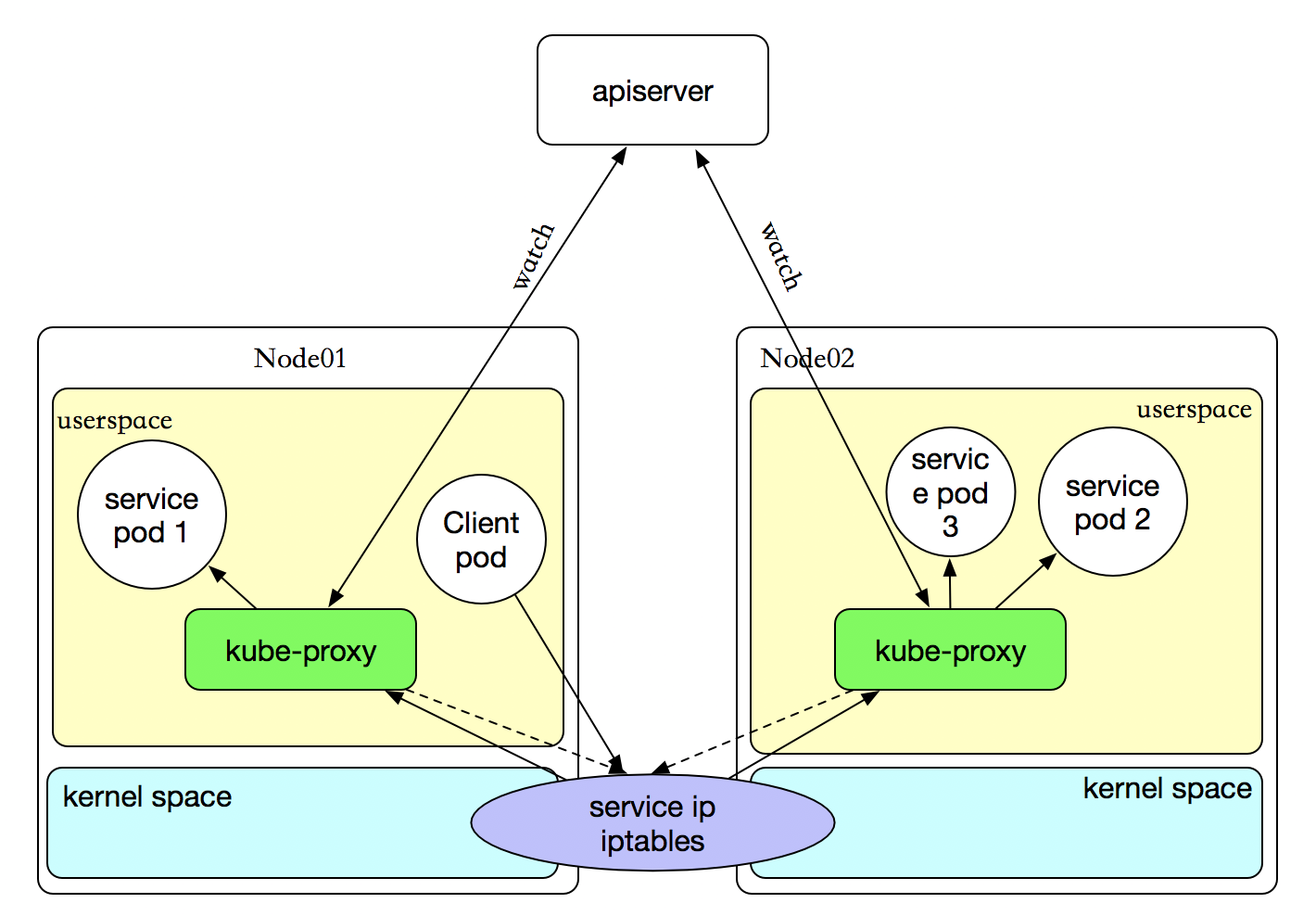

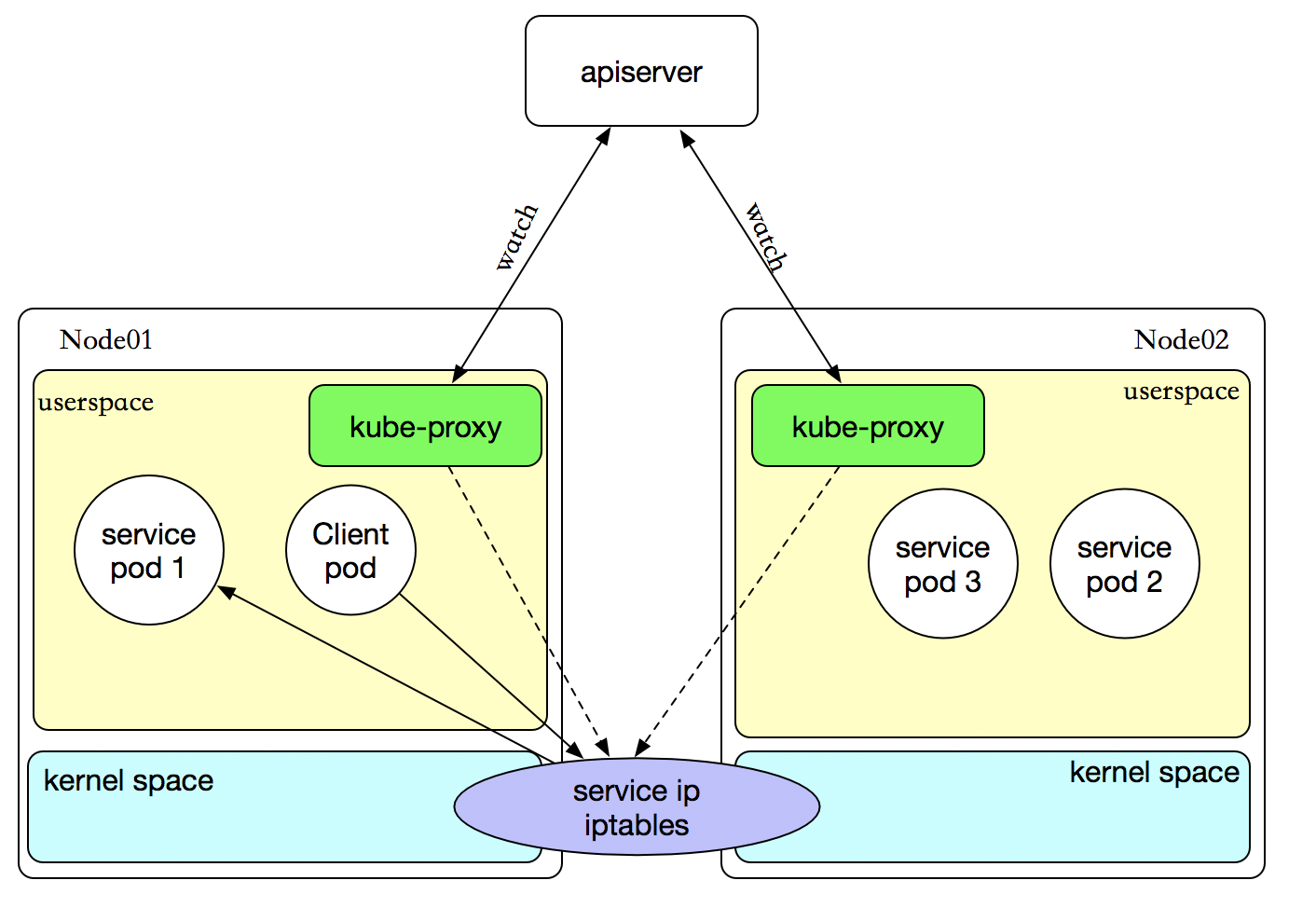

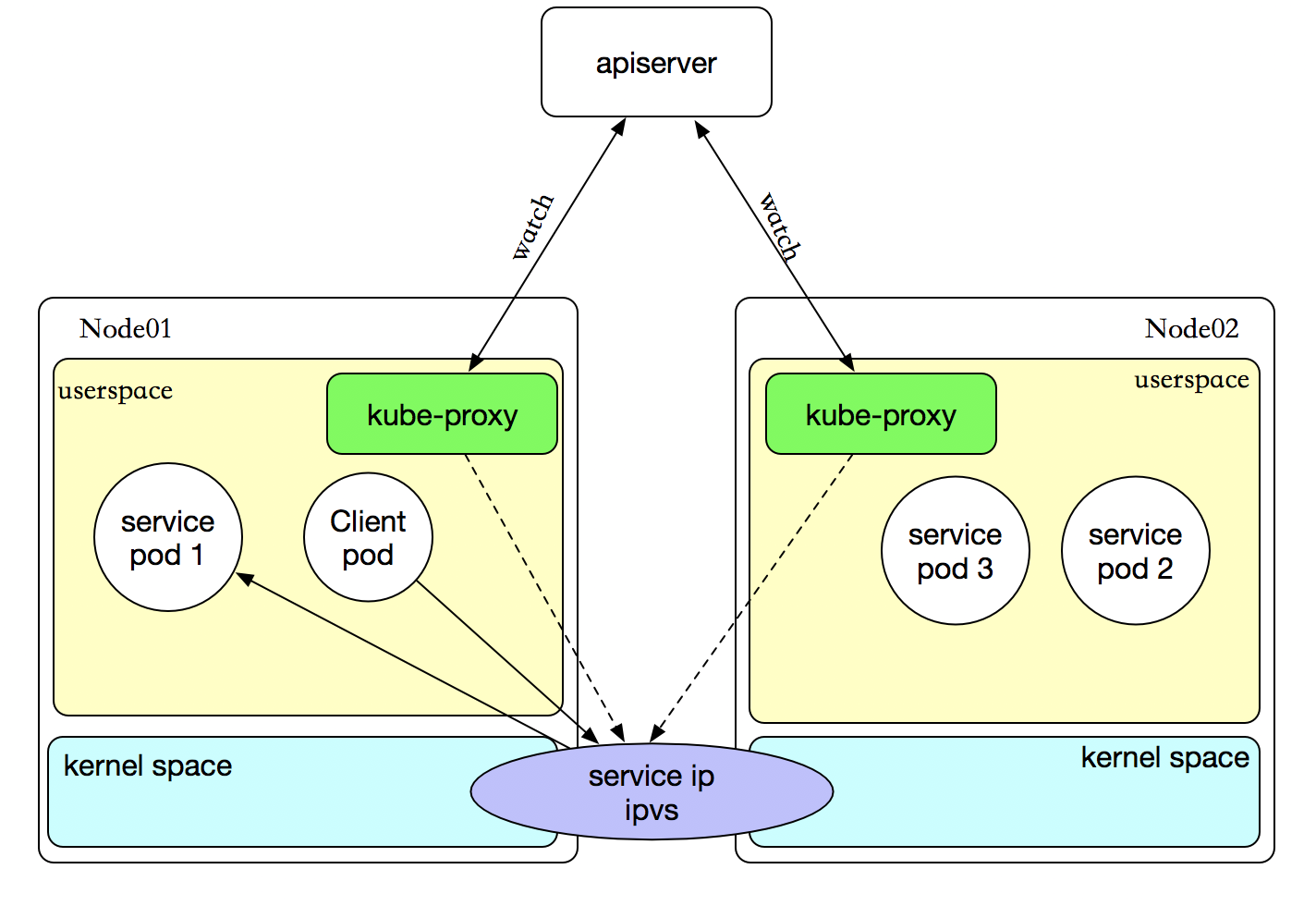

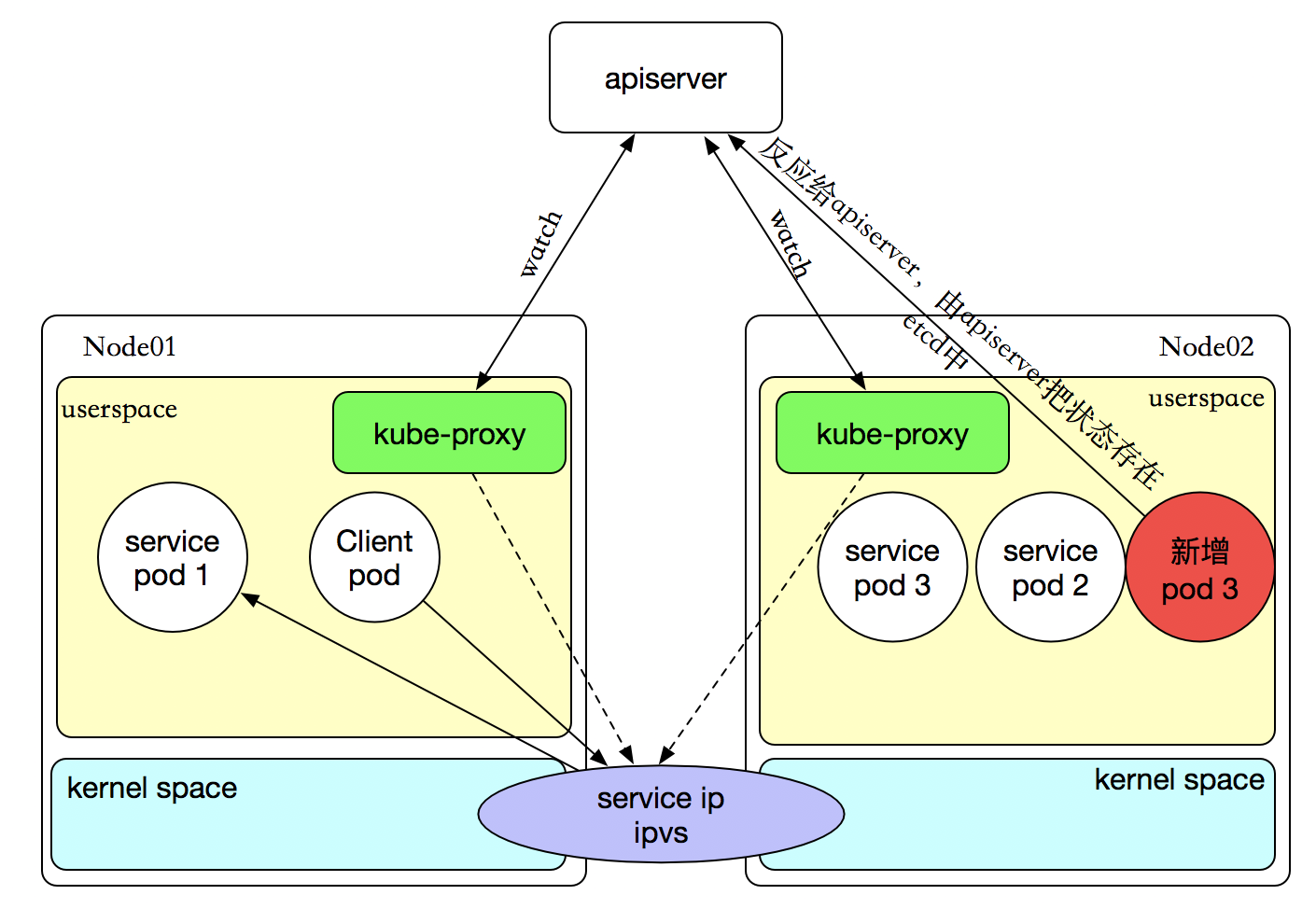

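Userspace mode

kube-proxy opens a randomly chosen port (the proxy port) for each Service and adds an iptables rule.

All packets sent to clusterIP:port are redirected to the proxy port.

When kube-proxy receives a packet on the proxy port, it dispatches it to a backend Pod using round robin (the default) or session affinity (requests from the same client IP always follow the same path to the same Pod).

Advantages:

Simple to implement: kube-proxy itself accepts and forwards the traffic.

Disadvantages:

All traffic passes through kube-proxy, which easily becomes a performance bottleneck.

Traffic that goes through kube-proxy is also forwarded by iptables, so packets switch repeatedly between user space and kernel space, which is inefficient.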
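The round-robin dispatch described for userspace mode can be sketched as follows. This is a toy model, not kube-proxy code: the class name `RoundRobinProxy` is hypothetical, and the backend addresses are simply the two Pod endpoints that appear in the `kubectl get endpoints` output earlier in these notes.

```python
from itertools import cycle


class RoundRobinProxy:
    """Toy model of round-robin backend selection (not kube-proxy code)."""

    def __init__(self, backends):
        # cycle() yields the backends in order and starts over at the end.
        self._backends = cycle(backends)

    def pick(self):
        # Each new connection goes to the next backend in turn.
        return next(self._backends)


proxy = RoundRobinProxy(["10.42.2.163:80", "10.42.4.253:80"])
picks = [proxy.pick() for _ in range(4)]
print(picks)
```

Because every pick happens inside the proxy process, each connection has to pass through user space, which is the bottleneck listed under the disadvantages.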

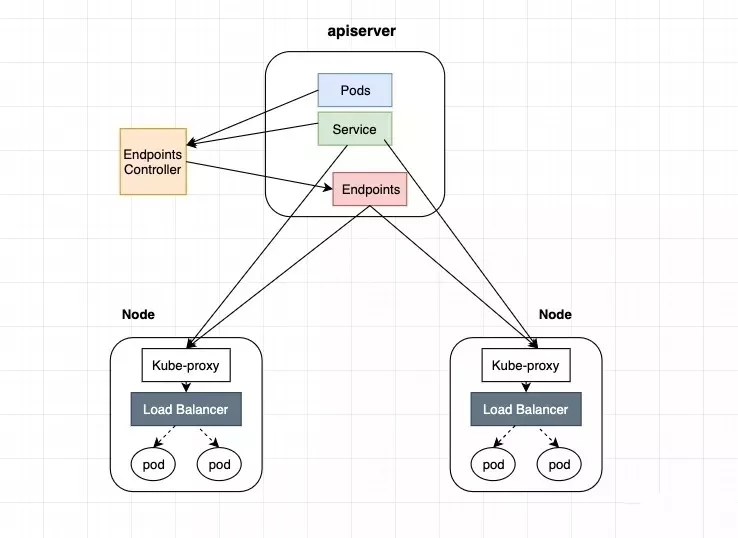

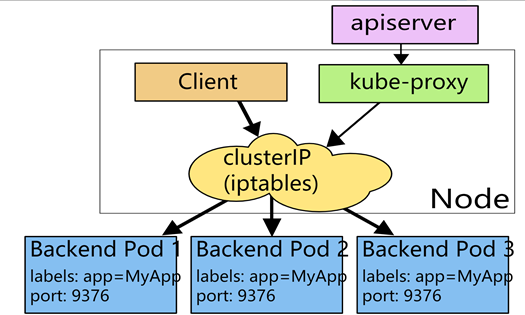

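Iptables mode

kube-proxy watches the Kubernetes control plane for the addition and removal of Service and Endpoints objects. For each Service it installs iptables rules that capture traffic to the Service's clusterIP and port and redirect it to one of the Service's backends. <br />For each Endpoints object it also installs iptables rules that select a backend Pod.<br /> By default, kube-proxy in iptables mode chooses a backend at random.<br />Kubernetes currently offers two load distribution policies: RoundRobin and SessionAffinity.<br />Advantages:<br />Handling traffic with iptables has lower system overhead, because the traffic is processed by Linux netfilter without switching between user space and kernel space. This approach is also more reliable.<br />Combined with Pod readiness probes, it avoids sending traffic through kube-proxy to Pods that are known to have failed.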
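One way to see how a chain of rules evaluated in order can still pick a backend uniformly at random is to work out the per-rule match probabilities. The sketch below assumes the commonly described scheme in which the rule for backend i out of n matches with probability 1/(n - i) and the last rule always matches; the function names are hypothetical and the code only does the arithmetic, it does not touch iptables.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n backends evaluated in order.

    Rule i (0-based) is only reached if the earlier rules did not match,
    so it must match with probability 1 / (n - i) for the overall choice
    to be uniform. The last rule always matches.
    """
    return [Fraction(1, n - i) for i in range(n)]


def overall_probabilities(n):
    """Probability that each backend is the one finally selected."""
    result = []
    not_matched_yet = Fraction(1)
    for p in rule_probabilities(n):
        result.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return result


print(rule_probabilities(3))     # 1/3, 1/2, 1
print(overall_probabilities(3))  # 1/3 for every backend
```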


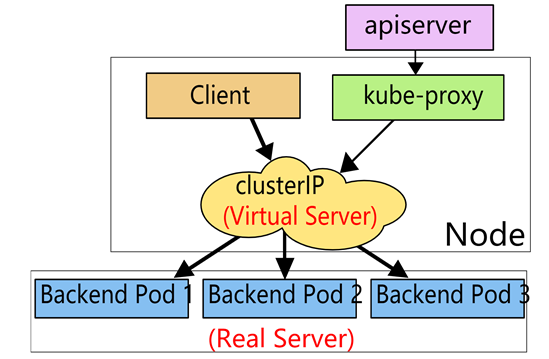

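IPVS mode

In ipvs mode, kube-proxy watches Kubernetes Services and Endpoints, calls the netlink interface to create the corresponding IPVS rules, and periodically synchronizes the IPVS rules with the Kubernetes Services and Endpoints. When a Service is accessed, IPVS directs the traffic to one of the backend Pods.

The IPVS proxy mode is based on netfilter hook functions, similar to iptables mode, but it uses a hash table as the underlying data structure and works in kernel space. As a result, kube-proxy in IPVS mode redirects traffic with lower latency than kube-proxy in iptables mode and performs better when synchronizing proxy rules. Compared with the other proxy modes, IPVS mode also supports higher network throughput.

Note:

To run kube-proxy in IPVS mode, IPVS must be available on the node before kube-proxy starts.

When kube-proxy starts in IPVS proxy mode, it verifies that the IPVS kernel modules are available. If they are not detected, kube-proxy falls back to iptables proxy mode.

IPVS has three main forwarding modes

DR mode: the load balancer (LB) rewrites only the destination MAC address of the packet to that of the real backend server. The server's response does not pass back through the LB but is returned directly to the client. This mode has the best performance.

TUN mode: the LB receives the client's request packet and encapsulates it in an IP tunnel, that is, it adds an IP tunnel header in front of the original header, and then sends it to the real backend server. The real server returns its response directly to the client.

NAT mode: the LB rewrites the destination IP address of the client's request to the IP of the real backend server, and rewrites the source IP of the server's response to the LB's own IP.

Note: kube-proxy's IPVS mode uses NAT mode, because neither DR nor TUN mode supports port mapping.

IPVS offers several scheduling algorithms for balancing traffic across the backend Pods:

rr: round robin

lc: least connection

dh: destination hashing

sh: source hashing

sed: shortest expected delay

nq: never queue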
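Two of the schedulers listed above, lc and sh, are easy to illustrate. The sketch below is a simplified model and not the kernel implementation: the function names are hypothetical, the connection counts are made up, and CRC32 is only a stand-in for whatever hash IPVS actually uses.

```python
import zlib


def least_connection(active):
    """lc: pick the backend with the fewest active connections."""
    return min(active, key=active.get)


def source_hash(client_ip, backends):
    """sh: map a client IP to a backend through a stable hash.

    CRC32 is only a stand-in here; it is not the hash function IPVS uses.
    """
    return backends[zlib.crc32(client_ip.encode()) % len(backends)]


# Hypothetical connection counts for two backend Pods.
active = {"10.42.2.163:80": 7, "10.42.4.253:80": 3}
print(least_connection(active))
print(source_hash("192.168.31.10", list(active)))
```

The point of sh is that the same client address always lands on the same backend as long as the backend list does not change, whereas lc reacts to the current load.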

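Session affinity

In any of these proxy modes, to make sure that connections from a particular client always reach the same Pod, set service.spec.sessionAffinity to "ClientIP" (the default is "None"), which selects the backend based on the client's IP address. The maximum session sticky time can be set through service.spec.sessionAffinityConfig.clientIP.timeoutSeconds (the default is 10800 seconds, that is, 3 hours).

kubectl explain svc.spec.sessionAffinity

Two values are supported, ClientIP and None. None is the default (backends are chosen at random). With ClientIP, requests from the same client are sent to the same Pod.

Create a Service and edit its sessionAffinity

kubectl edit service/test

Change the sessionAffinity field to sessionAffinity: ClientIP, then look for the timeout in the NAT rules:

iptables -t nat -L | grep 10800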
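The effect of `sessionAffinity: ClientIP` with a timeout can be modelled in a few lines. This is a toy sketch, not kube-proxy code: the class name `ClientIPAffinity` and the client address are hypothetical, and refreshing the timer on every request is a modelling assumption. The default of 10800 seconds is the value stated above.

```python
import random


class ClientIPAffinity:
    """Toy model of sessionAffinity: ClientIP with a timeout (not kube-proxy code)."""

    def __init__(self, backends, timeout_seconds=10800):
        self.backends = list(backends)
        self.timeout = timeout_seconds
        self._sessions = {}  # client IP -> (backend, time of last request)

    def pick(self, client_ip, now):
        entry = self._sessions.get(client_ip)
        if entry is not None and now - entry[1] <= self.timeout:
            backend = entry[0]  # session still valid: reuse the same backend
        else:
            backend = random.choice(self.backends)  # new or expired session
        self._sessions[client_ip] = (backend, now)
        return backend


svc = ClientIPAffinity(["10.42.2.169:80", "10.42.4.15:80"])
first = svc.pick("192.168.31.1", now=0)
sticky = all(svc.pick("192.168.31.1", now=t) == first for t in range(1, 11))
print(sticky)
```

Ten requests in a row from one client all reach the backend chosen for the first request, which is the behaviour to expect from repeated `curl` calls against a Service with ClientIP affinity.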

cat << EOF > myapp.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp
  labels:
    app: myapp
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: myapp
  namespace: default
spec:
  selector:
    app: myapp
  sessionAffinity: ClientIP
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30080
EOF

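6. Service discovery

Kubernetes supports a cluster DNS server (for example CoreDNS) that watches the Kubernetes API for newly created Services and creates a set of DNS records for each one. If DNS is enabled throughout the cluster, every Pod should be able to resolve Services automatically by their DNS names.

DNS policies:

"Default": the Pod inherits the name resolution configuration of the node it runs on.

"ClusterFirst": any DNS query that does not match the configured cluster domain suffix (for example "www.kubernetes.io") is forwarded to the upstream DNS server inherited from the node.

"ClusterFirstWithHostNet": use this policy for Pods that run with the host network (hostNetwork: true).

"None": the Pod ignores the DNS settings of the Kubernetes environment and uses only the dnsConfig in its Pod spec.

Note:

"Default" is not the default DNS policy. If dnsPolicy is not specified explicitly, "ClusterFirst" is used.

Applications in the same namespace can reach a Service directly by service_name. To reach a Service in another namespace, use service_name.namespace.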
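Why both the short name and the `service_name.namespace` form resolve can be sketched by expanding a name against the DNS search list. The sketch assumes the usual search list for a ClusterFirst Pod (the Pod's namespace under `svc.cluster.local`, then `svc.cluster.local`, then `cluster.local`) and the default cluster domain; the function name `candidate_names` is hypothetical.

```python
def candidate_names(name, pod_namespace, cluster_domain="cluster.local"):
    """List the fully qualified names a resolver would try for a short name.

    Assumes the usual ClusterFirst search list:
    <namespace>.svc.<domain>, svc.<domain>, <domain>.
    """
    search = [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in search]


# Same namespace: the first candidate is the Service's full name.
same_ns = candidate_names("my-service", "default")[0]
# Other namespace: "service_name.namespace" matches on the second candidate.
other_ns = candidate_names("my-service.prod", "default")[1]
print(same_ns)
print(other_ns)
```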

apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  hostname: busybox-1
  subdomain: default-subdomain
  containers:
  - image: busybox:1.28
    command:
    - sleep
    - "3600"
    name: busybox

apiVersion: v1
kind: Pod
metadata:
  name: hostaliases-pod
spec:
  restartPolicy: Never
  hostAliases:
  - ip: "127.0.0.1"
    hostnames:
    - "foo.local"
    - "bar.local"
  - ip: "10.1.2.3"
    hostnames:
    - "foo.remote"
    - "bar.remote"
  containers:
  - name: cat-hosts
    image: busybox
    command:
    - cat
    args:
    - "/etc/hosts"

[liwm@rmaster01 liwm]$ kubectl create -f myapp.yaml

Error from server (AlreadyExists): error when creating "myapp.yaml": deployments.apps "myapp" already exists

Error from server (Invalid): error when creating "myapp.yaml": Service "myapp" is invalid: spec.ports[0].nodePort: Invalid value: 30080: provided port is already allocated

[liwm@rmaster01 liwm]$

[liwm@rmaster01 liwm]$ kubectl delete deployments.apps --all

deployment.apps "myapp" deleted

[liwm@rmaster01 liwm]$

[liwm@rmaster01 liwm]$ kubectl create -f myapp.yaml

deployment.apps/myapp created

The Service "myapp" is invalid: spec.ports[0].nodePort: Invalid value: 30080: provided port is already allocated

[liwm@rmaster01 liwm]$ kubectl describe service myapp

Name: myapp

Namespace: default

Labels: <none>

Annotations: field.cattle.io/publicEndpoints:

[{"addresses":["192.168.31.133"],"port":30080,"protocol":"TCP","serviceName":"default:myapp","allNodes":true}]

Selector: app=myapp

Type: NodePort

IP: 10.43.76.239

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 30080/TCP

Endpoints: 10.42.2.169:80,10.42.4.15:80

Session Affinity: ClientIP

External Traffic Policy: Cluster

Events: <none>

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

myapp-54d6f6cdb7-npg6q 1/1 Running 0 7m32s

myapp-54d6f6cdb7-ztpfp 1/1 Running 0 7m32s

[liwm@rmaster01 liwm]$ kubectl exec -it myapp-54d6f6cdb7-npg6q bash

root@myapp-54d6f6cdb7-npg6q:/# cd /usr/share/nginx/html/

root@myapp-54d6f6cdb7-npg6q:/usr/share/nginx/html# echo 1 > index.html

root@myapp-54d6f6cdb7-npg6q:/usr/share/nginx/html# exit

exit

[liwm@rmaster01 liwm]$ kubectl exec -it myapp-54d6f6cdb7-ztpfp bash

root@myapp-54d6f6cdb7-ztpfp:/# cd /usr/share/nginx/html/

root@myapp-54d6f6cdb7-ztpfp:/usr/share/nginx/html# echo 2 > index.html

root@myapp-54d6f6cdb7-ztpfp:/usr/share/nginx/html# exit

exit

[liwm@rmaster01 liwm]$ for a in {1..10}; do curl http://192.168.31.130:30080 && sleep 1s; done

2

2

2

2

2

2

2

2

2

2

[liwm@rmaster01 liwm]$ for a in {1..10}; do curl http://192.168.31.130:30080 && sleep 1s; done

2

2

2

2

2

2

2

2

2

2

[liwm@rmaster01 liwm]$ for a in {1..10}; do curl http://192.168.31.130:30080 && sleep 1s; done

2

2

2

2

2

2

2

2

2

2

[liwm@rmaster01 liwm]$

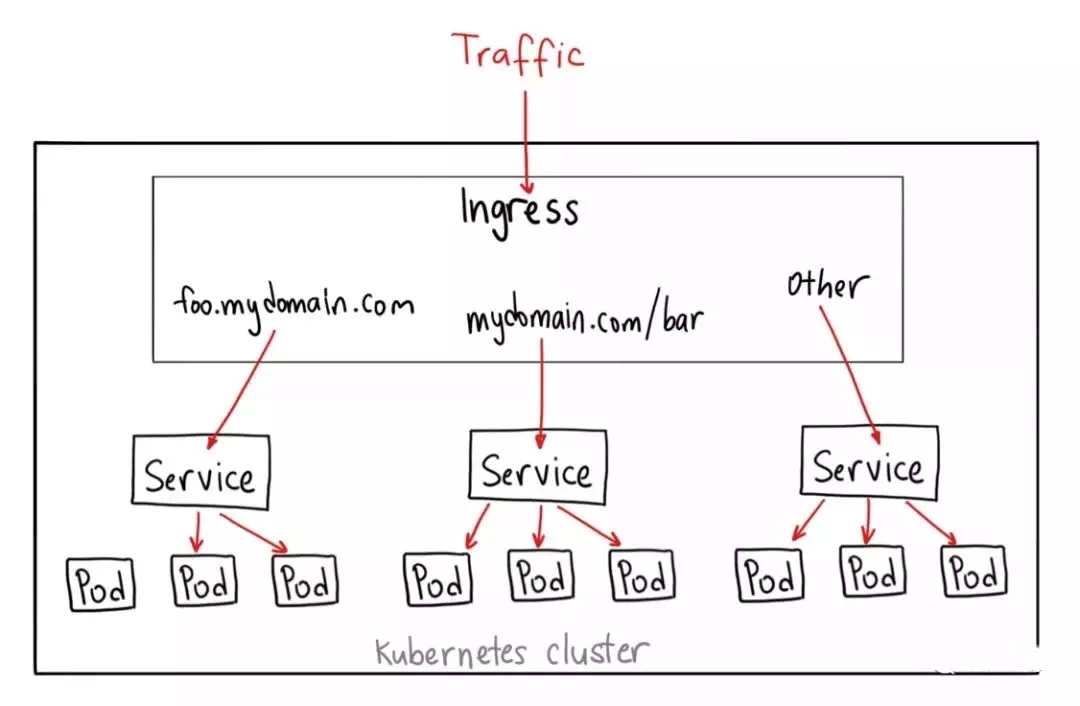

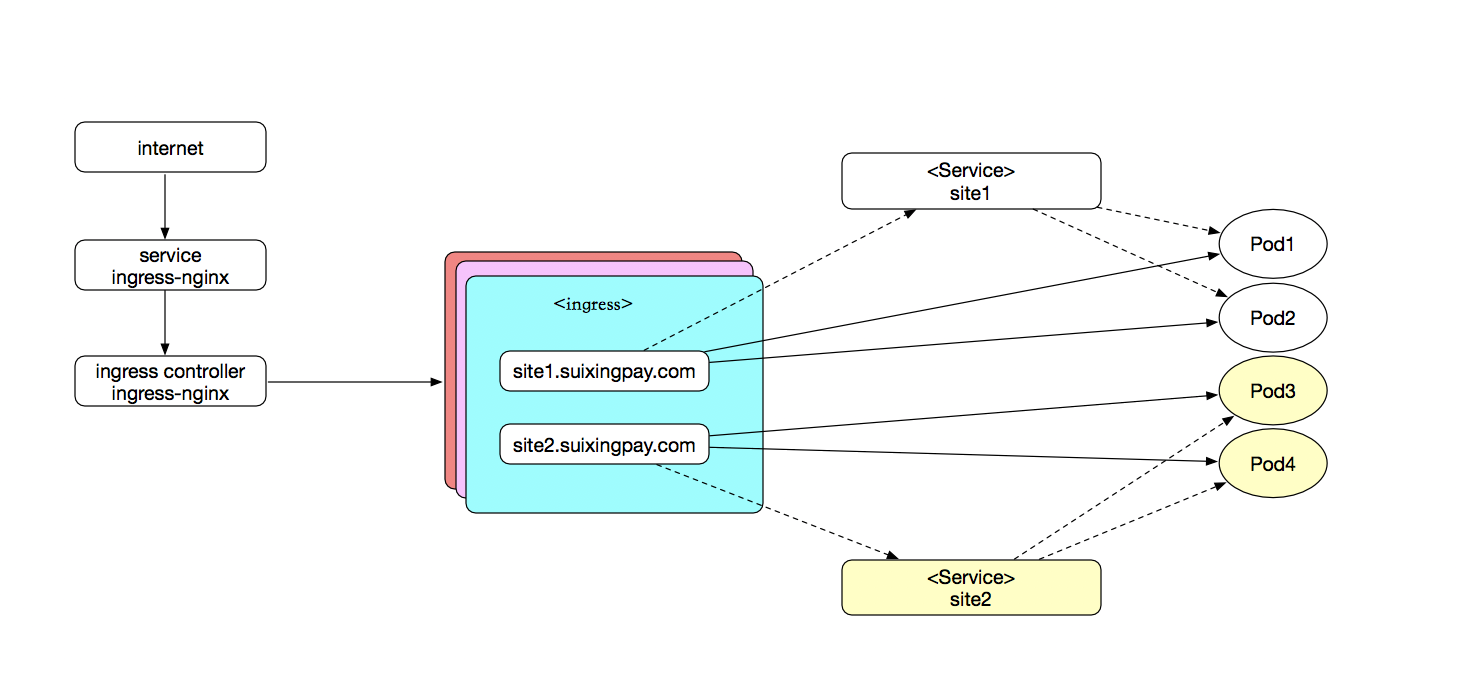

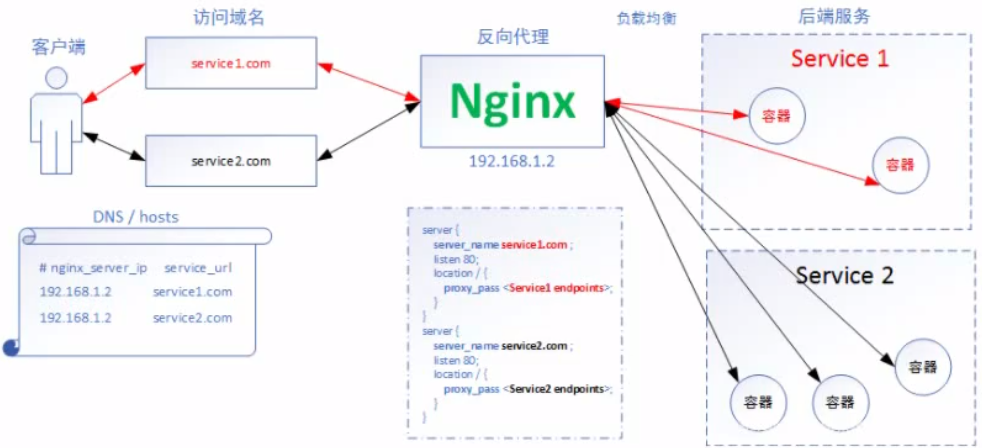

7. Ingress

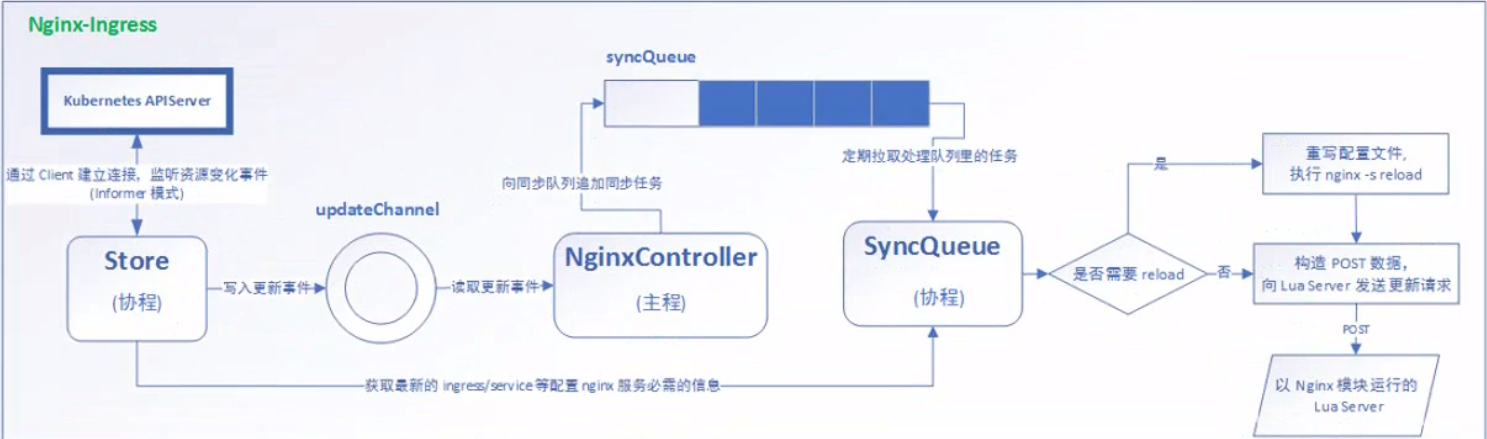

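An Ingress exposes HTTP and HTTPS routes from outside the cluster to Services inside the cluster. Traffic routing is controlled by rules defined on the Ingress resource. By creating an Ingress you can forward requests based on URL, path, and SSL.<br /> What is required?<br />An Ingress controller must be running for an Ingress to take effect. Creating an Ingress resource alone has no effect.

The ingress controller itself runs as a Pod whose container includes reverse proxy software. It watches the Services and Ingress resources that are added and dynamically generates the reverse proxy (load balancer) configuration. Each Ingress you add carries rules that map a host name to a backend Service.

NGINX Ingress Controller is one of the most widely used Ingress controllers. Its features include:

Routing based on HTTP headers

Routing based on path

Per-Ingress timeouts (without affecting the timeout settings of other Ingresses)

Request rate limiting

Rewrite rules

SSL

Ingress structure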


---

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: '1'

kubectl.kubernetes.io/last-applied-configuration: >

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"default-http-backend"},"name":"default-http-backend","namespace":"ingress-nginx"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"default-http-backend"}},"template":{"metadata":{"labels":{"app":"default-http-backend"}},"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"beta.kubernetes.io/os","operator":"NotIn","values":["windows"]},{"key":"node-role.kubernetes.io/worker","operator":"Exists"}]}]}}},"containers":[{"image":"rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1","livenessProbe":{"httpGet":{"path":"/healthz","port":8080,"scheme":"HTTP"},"initialDelaySeconds":30,"timeoutSeconds":5},"name":"default-http-backend","ports":[{"containerPort":8080}],"resources":{"limits":{"cpu":"10m","memory":"20Mi"},"requests":{"cpu":"10m","memory":"20Mi"}}}],"terminationGracePeriodSeconds":60,"tolerations":[{"effect":"NoExecute","operator":"Exists"},{"effect":"NoSchedule","operator":"Exists"}]}}}}

creationTimestamp: '2020-04-27T12:56:52Z'

generation: 1

labels:

app: default-http-backend

name: default-http-backend

namespace: ingress-nginx

resourceVersion: '8840297'

selfLink: /apis/apps/v1/namespaces/ingress-nginx/deployments/default-http-backend

uid: ab861f90-92df-4952-9431-ab21f83d739b

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: default-http-backend

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

labels:

app: default-http-backend

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: NotIn

values:

- windows

- key: node-role.kubernetes.io/worker

operator: Exists

containers:

- image: 'rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1'

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: default-http-backend

ports:

- containerPort: 8080

protocol: TCP

resources:

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

terminationGracePeriodSeconds: 60

tolerations:

- effect: NoExecute

operator: Exists

- effect: NoSchedule

operator: Exists

status:

availableReplicas: 1

conditions:

- lastTransitionTime: '2020-04-27T12:56:52Z'

lastUpdateTime: '2020-04-27T12:57:18Z'

message: >-

ReplicaSet "default-http-backend-67cf578fc4" has successfully

progressed.

reason: NewReplicaSetAvailable

status: 'True'

type: Progressing

- lastTransitionTime: '2020-05-01T03:41:59Z'

lastUpdateTime: '2020-05-01T03:41:59Z'

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: 'True'

type: Available

observedGeneration: 1

readyReplicas: 1

replicas: 1

updatedReplicas: 1

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

annotations:

deprecated.daemonset.template.generation: '1'

field.cattle.io/publicEndpoints: >-

[{"nodeName":"c-vvctx:machine-hvm67","addresses":["192.168.31.133"],"port":80,"protocol":"TCP","podName":"ingress-nginx:nginx-ingress-controller-5w89b","allNodes":false},{"nodeName":"c-vvctx:machine-hvm67","addresses":["192.168.31.133"],"port":443,"protocol":"TCP","podName":"ingress-nginx:nginx-ingress-controller-5w89b","allNodes":false},{"nodeName":"c-vvctx:machine-4hj8w","addresses":["192.168.31.134"],"port":80,"protocol":"TCP","podName":"ingress-nginx:nginx-ingress-controller-vg4sh","allNodes":false},{"nodeName":"c-vvctx:machine-4hj8w","addresses":["192.168.31.134"],"port":443,"protocol":"TCP","podName":"ingress-nginx:nginx-ingress-controller-vg4sh","allNodes":false}]

kubectl.kubernetes.io/last-applied-configuration: >

{"apiVersion":"apps/v1","kind":"DaemonSet","metadata":{"annotations":{},"name":"nginx-ingress-controller","namespace":"ingress-nginx"},"spec":{"selector":{"matchLabels":{"app":"ingress-nginx"}},"template":{"metadata":{"annotations":{"prometheus.io/port":"10254","prometheus.io/scrape":"true"},"labels":{"app":"ingress-nginx"}},"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"beta.kubernetes.io/os","operator":"NotIn","values":["windows"]},{"key":"node-role.kubernetes.io/worker","operator":"Exists"}]}]}}},"containers":[{"args":["/nginx-ingress-controller","--default-backend-service=$(POD_NAMESPACE)/default-http-backend","--configmap=$(POD_NAMESPACE)/nginx-configuration","--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services","--udp-services-configmap=$(POD_NAMESPACE)/udp-services","--annotations-prefix=nginx.ingress.kubernetes.io"],"env":[{"name":"POD_NAME","valueFrom":{"fieldRef":{"fieldPath":"metadata.name"}}},{"name":"POD_NAMESPACE","valueFrom":{"fieldRef":{"fieldPath":"metadata.namespace"}}}],"image":"rancher/nginx-ingress-controller:nginx-0.25.1-rancher1","livenessProbe":{"failureThreshold":3,"httpGet":{"path":"/healthz","port":10254,"scheme":"HTTP"},"initialDelaySeconds":10,"periodSeconds":10,"successThreshold":1,"timeoutSeconds":1},"name":"nginx-ingress-controller","ports":[{"containerPort":80,"name":"http"},{"containerPort":443,"name":"https"}],"readinessProbe":{"failureThreshold":3,"httpGet":{"path":"/healthz","port":10254,"scheme":"HTTP"},"periodSeconds":10,"successThreshold":1,"timeoutSeconds":1},"securityContext":{"capabilities":{"add":["NET_BIND_SERVICE"],"drop":["ALL"]},"runAsUser":33}}],"hostNetwork":true,"serviceAccountName":"nginx-ingress-serviceaccount","tolerations":[{"effect":"NoExecute","operator":"Exists"},{"effect":"NoSchedule","operator":"Exists"}]}}}}

creationTimestamp: '2020-04-27T12:56:52Z'

generation: 1

name: nginx-ingress-controller

namespace: ingress-nginx

resourceVersion: '8850974'

selfLink: /apis/apps/v1/namespaces/ingress-nginx/daemonsets/nginx-ingress-controller

uid: 7cbd5760-edf2-48ae-8584-683a29bf3ac8

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app: ingress-nginx

template:

metadata:

annotations:

prometheus.io/port: '10254'

prometheus.io/scrape: 'true'

labels:

app: ingress-nginx

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: NotIn

values:

- windows

- key: node-role.kubernetes.io/worker

operator: Exists

containers:

- args:

- /nginx-ingress-controller

- '--default-backend-service=$(POD_NAMESPACE)/default-http-backend'

- '--configmap=$(POD_NAMESPACE)/nginx-configuration'

- '--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services'

- '--udp-services-configmap=$(POD_NAMESPACE)/udp-services'

- '--annotations-prefix=nginx.ingress.kubernetes.io'

env:

- name: POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

image: 'rancher/nginx-ingress-controller:nginx-0.25.1-rancher1'

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: nginx-ingress-controller

ports:

- containerPort: 80

hostPort: 80

name: http

protocol: TCP

- containerPort: 443

hostPort: 443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

securityContext:

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 33

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

hostNetwork: true

restartPolicy: Always

schedulerName: default-scheduler

serviceAccount: nginx-ingress-serviceaccount

serviceAccountName: nginx-ingress-serviceaccount

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoExecute

operator: Exists

- effect: NoSchedule

operator: Exists

updateStrategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

status:

currentNumberScheduled: 2

desiredNumberScheduled: 2

numberAvailable: 2

numberMisscheduled: 0

numberReady: 2

observedGeneration: 1

updatedNumberScheduled: 2

Create an Ingress

kubectl run --image=nginx test1

kubectl run --image=nginx test2

kubectl expose deployment test1 --port=8081 --target-port=80

kubectl expose deployment test2 --port=8081 --target-port=80

cat << EOF > ingress.yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-example
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: riyimei.cn
    http:
      paths:
      - path: /dev
        backend:
          serviceName: test1
          servicePort: 8081
      - path: /uat
        backend:
          serviceName: test2
          servicePort: 8081
EOF

Add node01's IP address and the domain name to the hosts file

vim /etc/hosts

192.168.31.131 node01 riyimei.cn

curl riyimei.cn/dev

curl riyimei.cn/uat
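The host and path rules in ingress.yaml amount to a lookup from (host, path) to a backend Service. The sketch below models only that lookup under simplifying assumptions: the names `RULES` and `route` are hypothetical, matching is by path prefix, and the rewrite-target annotation and the controller's default backend are not modelled (a `None` result stands for "no rule matched").

```python
# Rules copied from ingress.yaml: host -> list of (path prefix, (service, port)).
RULES = {
    "riyimei.cn": [("/dev", ("test1", 8081)), ("/uat", ("test2", 8081))],
}


def route(host, path):
    """Return the (service, port) backend for a request, or None if no rule matches."""
    for prefix, backend in RULES.get(host, []):
        # Match the prefix itself or anything below it, but not e.g. /devx.
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    return None


print(route("riyimei.cn", "/dev"))
print(route("riyimei.cn", "/uat/index.html"))
print(route("riyimei.cn", "/prod"))
```

With these rules, `curl riyimei.cn/dev` would be served by the `test1` Service and `curl riyimei.cn/uat` by `test2`, both on Service port 8081.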

Write ingress2.yaml to allow access by IP address

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: test-ingress
spec:
  backend:
    serviceName: test
    servicePort: 18080

curl 172.17.224.180