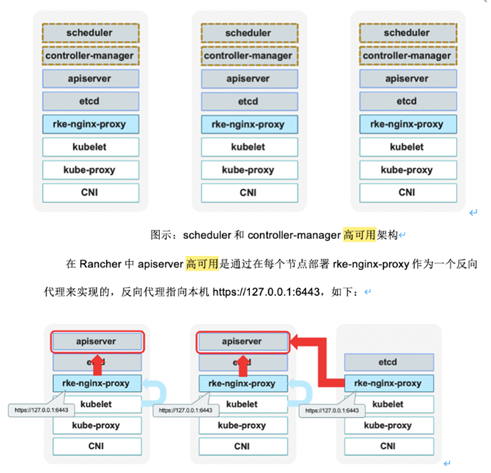

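High-availability architecture

High availability of a Kubernetes cluster mainly concerns two core components: etcd and the api-server. After an RKE deployment, the 3 etcd instances form one cluster, so any member can accept client reads and writes. The api-server is made highly available through rke-nginx-proxy, a reverse proxy deployed on each node. Components on the node connect to 127.0.0.1:6443, where the proxy listens; when an api-server goes down, the proxy forwards those connections to the remaining available api-servers. The proxy rules of rke-nginx-proxy are updated automatically, so no manual intervention is needed.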

With 3 masters, only 1 may be down at a time.
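The one-failure limit follows from etcd's majority quorum: a cluster of n members needs floor(n/2)+1 of them reachable, so it tolerates floor((n-1)/2) failures. A minimal sketch of that arithmetic; the function names are illustrative and not part of RKE or etcd:

```shell
#!/bin/sh
# Majority quorum for an etcd cluster of n members.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while a quorum is still reachable.
etcd_fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Going from 3 to 4 members raises the quorum to 3 without raising the tolerance, which is why odd member counts are the usual choice.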

Check the nginx reverse proxy configuration on a node:

[root@node01 ~]# docker ps|grep nginx-proxy
45d332d69873 rancher/rke-tools:v0.1.56 "nginx-proxy CP_HOST…" 3 months ago Up 54 minutes nginx-proxy
[root@node01 ~]# docker exec -it nginx-proxy bash
bash-4.4#
bash-4.4# more /etc/nginx/nginx.conf
error_log stderr notice;
worker_processes auto;
events {
    multi_accept on;
    use epoll;
    worker_connections 1024;
}
stream {
    upstream kube_apiserver {
        server 192.168.11.100:6443;
        server 192.168.11.101:6443;
        server 192.168.11.102:6443;
    }
    server {
        listen 6443;
        proxy_pass kube_apiserver;
        proxy_timeout 30;
        proxy_connect_timeout 2s;
    }
}
bash-4.4#

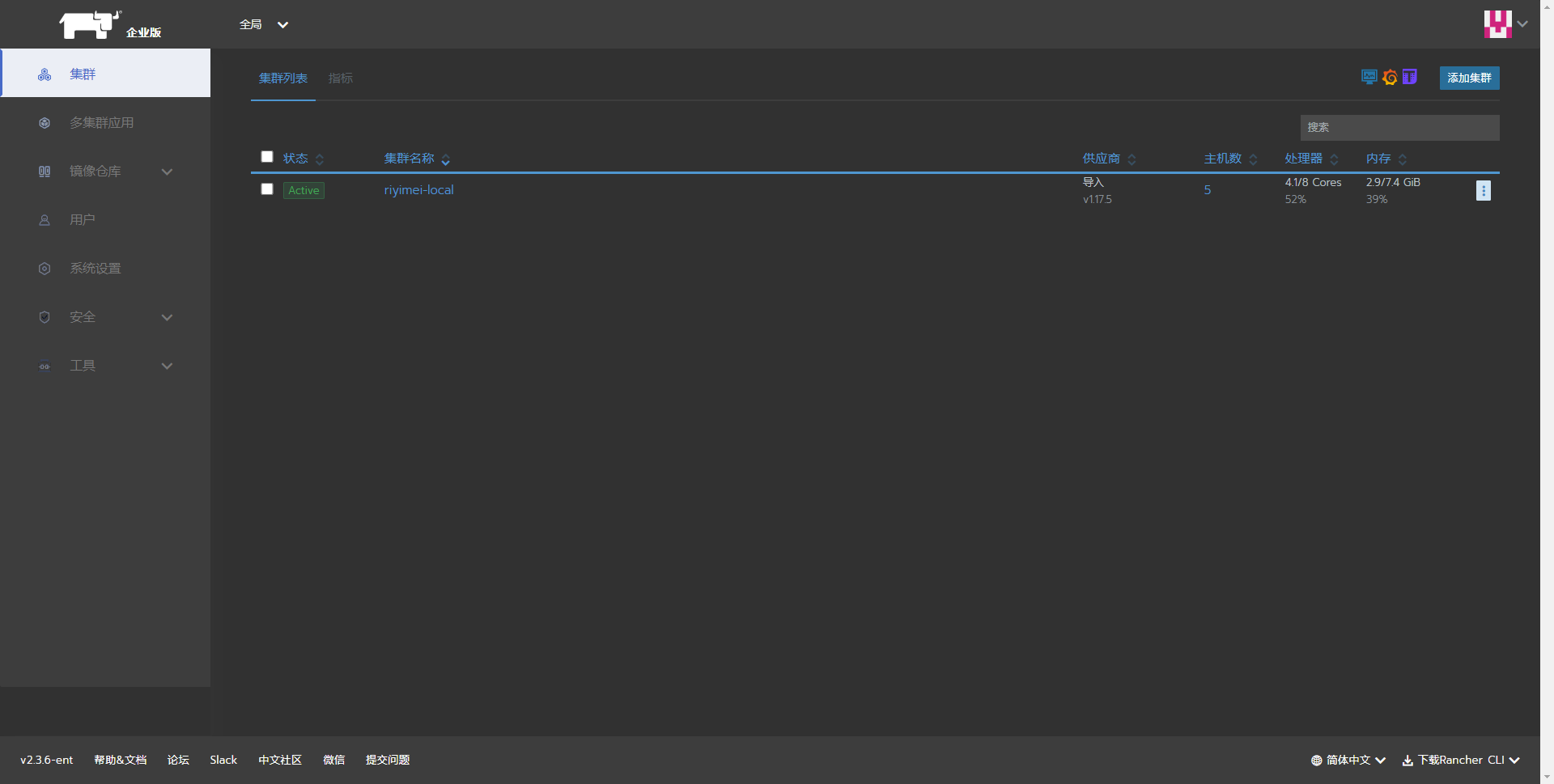

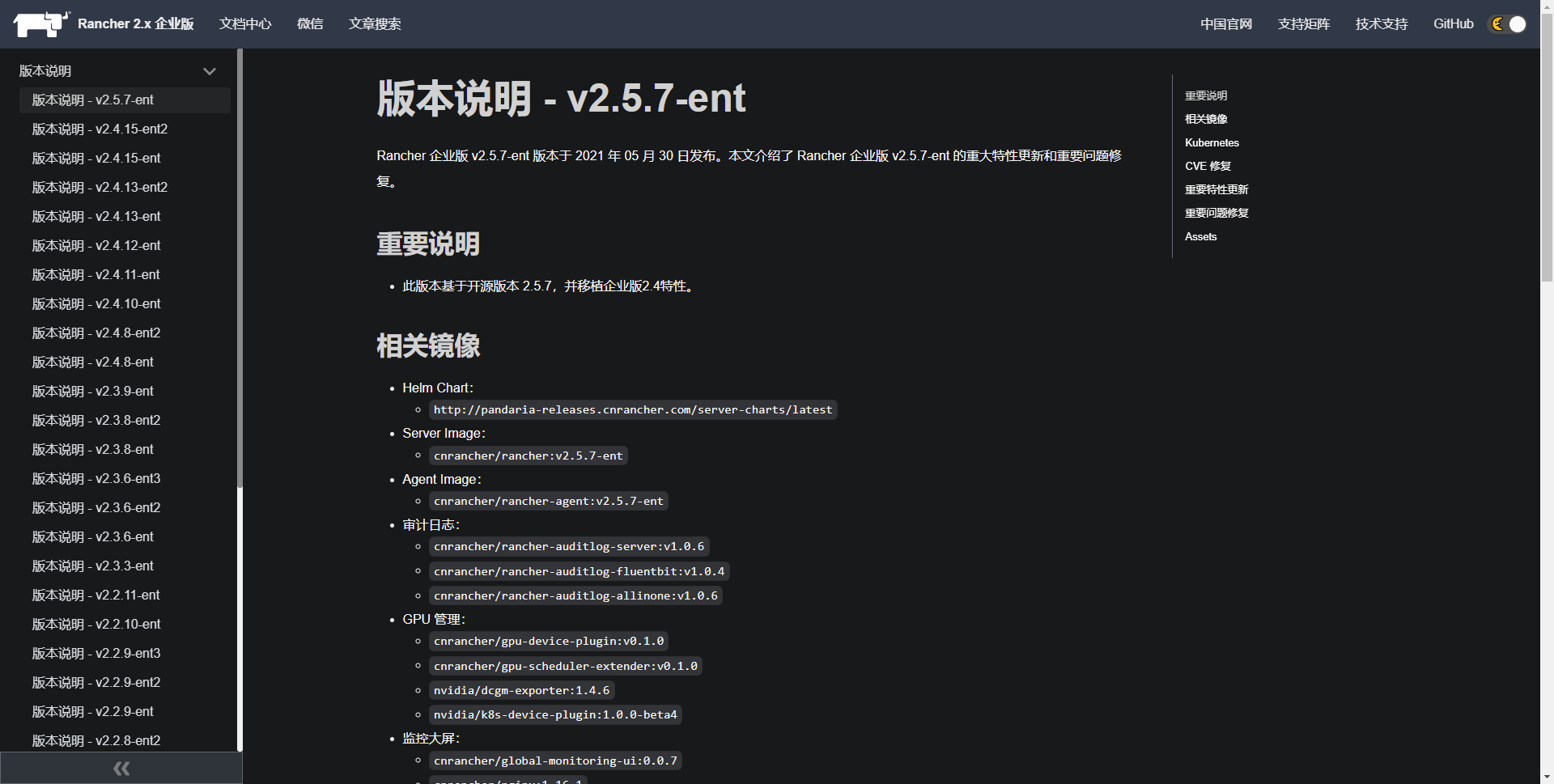

Enterprise edition quick install

Deploy the Rancher server

docker run -itd -p 443:443 cnrancher/rancher:v2.3.6-ent

Cleanup

df -h|grep kubelet |awk -F % '{print $2}'|xargs umount

sudo rm /var/lib/kubelet/ -rf

sudo rm /etc/kubernetes/ -rf

sudo rm /etc/cni/ -rf

sudo rm /var/lib/rancher/ -rf

sudo rm /var/lib/etcd/ -rf

sudo rm /var/lib/cni/ -rf

sudo rm /opt/cni/* -rf

sudo ip link del flannel.1

ip link del cni0

iptables -F && iptables -t nat -F

docker ps -a|grep -v gitlab|awk '{print $1}'|xargs docker rm -f

docker volume ls -q|xargs docker volume rm

systemctl restart docker
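Because these commands are destructive, it can help to preview them before running anything. The following is a sketch only, covering the directory and network-link steps from the list above; the `run` helper and the `DRY_RUN` variable are assumptions introduced here, and the mount, iptables and docker steps are left out:

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Remove the Kubernetes, CNI, Rancher and etcd state directories.
cleanup_node_dirs() {
    for dir in /var/lib/kubelet /etc/kubernetes /etc/cni \
               /var/lib/rancher /var/lib/etcd /var/lib/cni; do
        run rm -rf "$dir"
    done
}

# Delete the virtual network links left behind by the network plugin.
cleanup_links() {
    for link in flannel.1 cni0; do
        run ip link del "$link"
    done
}

cleanup_node_dirs
cleanup_links
```

With the default setting the script only lists what it would do; running it as root with `DRY_RUN=0` performs the removals.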

High-availability architecture

LB: 1 host

master: 3 hosts

node: 2 hosts

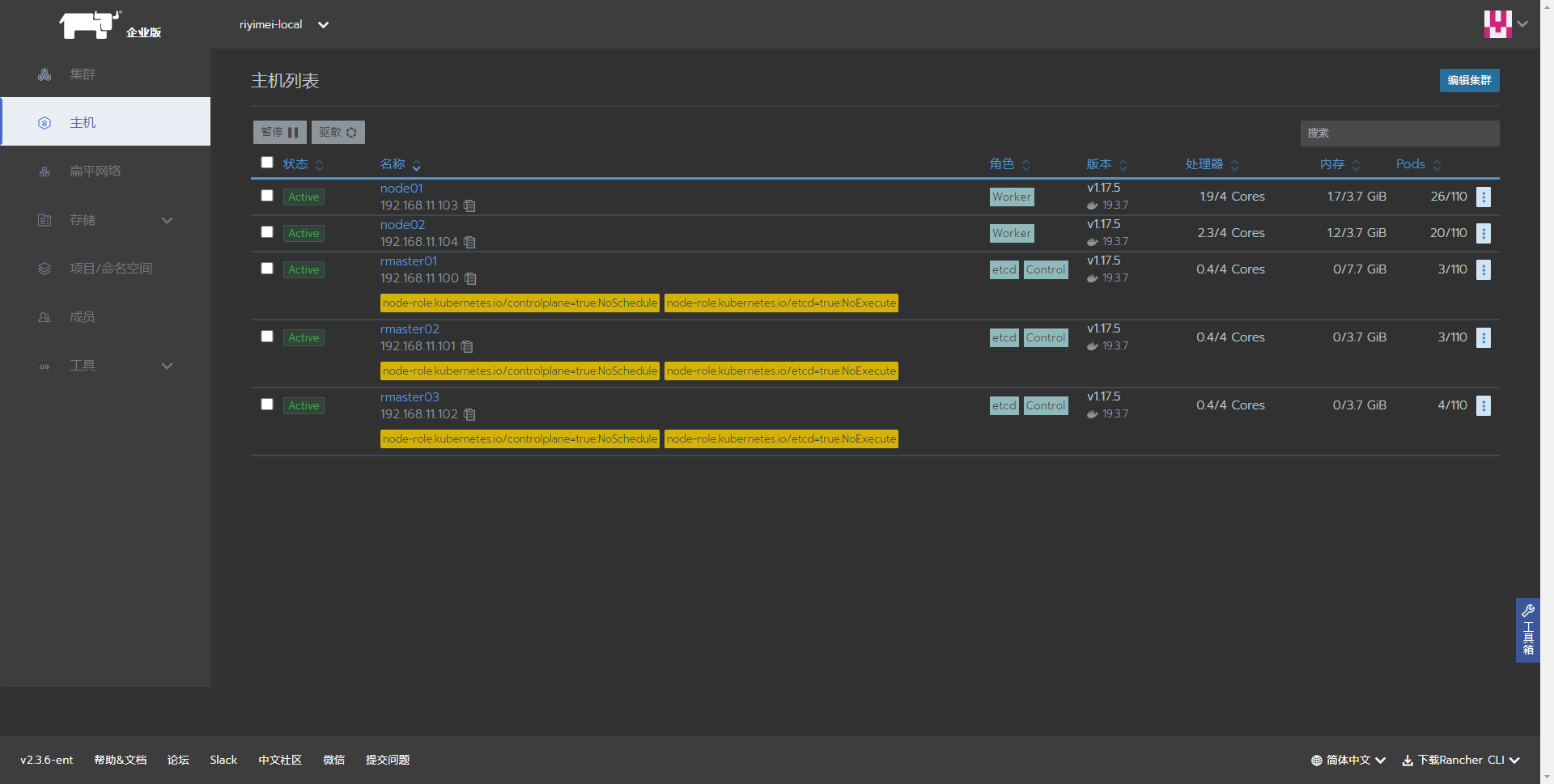

nodes:
  - address: 192.168.11.100
    hostname_override: rmaster01
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 192.168.11.101
    hostname_override: rmaster02
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 192.168.11.102
    hostname_override: rmaster03
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 192.168.11.103
    hostname_override: node01
    internal_address:
    user: rancher
    role: [worker]
  - address: 192.168.11.104
    hostname_override: node02
    internal_address:
    user: rancher
    role: [worker]
# Kubernetes version
kubernetes_version: v1.17.5-rancher1-1
# To pull images from a private registry, set the following to define the default private registry.
#private_registries:
#  - url: registry.com
#    user: Username
#    password: password
#    is_default: true
services:
  etcd:
    # Extra arguments
    extra_args:
      # Compact history automatically after 240 hours. After auto-compaction-retention
      # compacts historical data, the backend database may contain internal fragmentation:
      # free space that the backend can reuse but that still consumes storage.
      # Defragmentation releases this space back to the file system.
      auto-compaction-retention: 240 # (hours)
      # Set the space quota to 6442450944 bytes; default 2G, maximum 8G
      quota-backend-bytes: '6442450944'
    # Automatic backup
    snapshot: true
    creation: 5m0s
    retention: 24h
  kubelet:
    extra_args:
      # Enable static Pods. Create a manifest directory under /etc/kubernetes/ on the host
      # and place Pod YAML files in /etc/kubernetes/manifest/
      pod-manifest-path: "/etc/kubernetes/manifest/"
# Available network plugins: flannel, canal, calico. Rancher 2 defaults to canal.
network:
  plugin: canal
  options:
    flannel_backend_type: "vxlan"
# Set provider: none to disable the ingress controller
ingress:
  provider: nginx
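The quota-backend-bytes value in the configuration above is 6 GiB expressed in bytes. A small sketch for deriving such values; the helper name is hypothetical:

```shell
#!/bin/sh
# Convert a size in GiB to bytes, the unit etcd's quota-backend-bytes expects.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# 6 GiB is the quota used in the cluster configuration above.
gib_to_bytes 6
```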

[rancher@rmaster01 ~]$ kubectl get pod -n kube-system
NAME                                      READY   STATUS      RESTARTS   AGE
canal-7ncd7                               2/2     Running     0          3m4s
canal-bmrlq                               2/2     Running     0          3m4s
canal-j6h76                               2/2     Running     0          3m4s
canal-m9vpk                               2/2     Running     0          3m4s
canal-xb4wd                               2/2     Running     0          3m4s
coredns-7c5566588d-jnq98                  1/1     Running     0          101s
coredns-7c5566588d-lgcf5                  1/1     Running     0          68s
coredns-autoscaler-65bfc8d47d-tl9fv       1/1     Running     0          100s
metrics-server-6b55c64f86-z4cb8           1/1     Running     0          96s
rke-coredns-addon-deploy-job-s9g8m        0/1     Completed   0          102s
rke-ingress-controller-deploy-job-9t5z5   0/1     Completed   0          92s
rke-metrics-addon-deploy-job-js269        0/1     Completed   0          97s
rke-network-plugin-deploy-job-hgmsk       0/1     Completed   0          3m47s
[rancher@rmaster01 ~]$

Deployment

helm-v3.2.2

rancher-2.3.6

[rancher@rmaster01 home]$ sudo tar xf helm-v3.2.2-linux-amd64.tar.gz

[rancher@rmaster01 home]$ sudo tar xf rancher-2.3.6-ent.tgz

[rancher@rmaster01 home]$ sudo chmod a+x /usr/bin/helm

[rancher@rmaster01 home]$ kubectl create namespace cattle-system

#!/bin/bash -e

# * marks values that must be changed
# * Server FQDN or issuer name (replace with your own domain)
CN='rancher'

# Additional trusted IPs or domain names
## An SSL certificate normally trusts only requests made by domain name. To reach the server by IP,
## add the IPs to the certificate as extensions, separated by commas. List the node IPs and the LB IP.
SSL_IP='192.168.11.98,192.168.11.99,192.168.11.100,192.168.11.101,192.168.11.102,192.168.11.103,192.168.11.104,'
SSL_DNS=''

# Country name (2-letter code)
C=CN

# Key size in bits
SSL_SIZE=2048

# Certificate validity in days
DATE=${DATE:-3650}

# Configuration file
SSL_CONFIG='openssl.cnf'

if [[ -z $SILENT ]]; then
    echo "----------------------------"
    echo "| SSL Cert Generator |"
    echo "----------------------------"
    echo
fi

export CA_KEY=${CA_KEY-"cakey.pem"}
export CA_CERT=${CA_CERT-"cacerts.pem"}
export CA_SUBJECT=ca-$CN
export CA_EXPIRE=${DATE}

export SSL_CONFIG=${SSL_CONFIG}
export SSL_KEY=$CN.key
export SSL_CSR=$CN.csr
export SSL_CERT=$CN.crt
export SSL_EXPIRE=${DATE}

export SSL_SUBJECT=${CN}
export SSL_DNS=${SSL_DNS}
export SSL_IP=${SSL_IP}

export K8S_SECRET_COMBINE_CA=${K8S_SECRET_COMBINE_CA:-'true'}

[[ -z $SILENT ]] && echo "--> Certificate Authority"

if [[ -e ./${CA_KEY} ]]; then
    [[ -z $SILENT ]] && echo "====> Using existing CA Key ${CA_KEY}"
else
    [[ -z $SILENT ]] && echo "====> Generating new CA key ${CA_KEY}"
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE} > /dev/null
fi

if [[ -e ./${CA_CERT} ]]; then
    [[ -z $SILENT ]] && echo "====> Using existing CA Certificate ${CA_CERT}"
else
    [[ -z $SILENT ]] && echo "====> Generating new CA Certificate ${CA_CERT}"
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_EXPIRE} -out ${CA_CERT} -subj "/CN=${CA_SUBJECT}" > /dev/null || exit 1
fi

echo "====> Generating new config file ${SSL_CONFIG}"
cat > ${SSL_CONFIG} <<EOM
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth, serverAuth
EOM

if [[ -n ${SSL_DNS} || -n ${SSL_IP} ]]; then
    cat >> ${SSL_CONFIG} <<EOM
subjectAltName = @alt_names
[alt_names]
EOM
    IFS=","
    dns=(${SSL_DNS})
    dns+=(${SSL_SUBJECT})
    for i in "${!dns[@]}"; do
        echo DNS.$((i+1)) = ${dns[$i]} >> ${SSL_CONFIG}
    done

    if [[ -n ${SSL_IP} ]]; then
        ip=(${SSL_IP})
        for i in "${!ip[@]}"; do
            echo IP.$((i+1)) = ${ip[$i]} >> ${SSL_CONFIG}
        done
    fi
fi

[[ -z $SILENT ]] && echo "====> Generating new SSL KEY ${SSL_KEY}"
openssl genrsa -out ${SSL_KEY} ${SSL_SIZE} > /dev/null || exit 1

[[ -z $SILENT ]] && echo "====> Generating new SSL CSR ${SSL_CSR}"
openssl req -sha256 -new -key ${SSL_KEY} -out ${SSL_CSR} -subj "/CN=${SSL_SUBJECT}" -config ${SSL_CONFIG} > /dev/null || exit 1

[[ -z $SILENT ]] && echo "====> Generating new SSL CERT ${SSL_CERT}"
openssl x509 -sha256 -req -in ${SSL_CSR} -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -out ${SSL_CERT} \
    -days ${SSL_EXPIRE} -extensions v3_req -extfile ${SSL_CONFIG} > /dev/null || exit 1

if [[ -z $SILENT ]]; then
    echo "====> Complete"
    echo "keys can be found in volume mapped to $(pwd)"
    echo
    echo "====> Output results as YAML"
    echo "---"
    echo "ca_key: |"
    cat $CA_KEY | sed 's/^/ /'
    echo
    echo "ca_cert: |"
    cat $CA_CERT | sed 's/^/ /'
    echo
    echo "ssl_key: |"
    cat $SSL_KEY | sed 's/^/ /'
    echo
    echo "ssl_csr: |"
    cat $SSL_CSR | sed 's/^/ /'
    echo
    echo "ssl_cert: |"
    cat $SSL_CERT | sed 's/^/ /'
    echo
fi

if [[ -n $K8S_SECRET_NAME ]]; then

    if [[ -n $K8S_SECRET_COMBINE_CA ]]; then
        [[ -z $SILENT ]] && echo "====> Adding CA to Cert file"
        cat ${CA_CERT} >> ${SSL_CERT}
    fi

    [[ -z $SILENT ]] && echo "====> Creating Kubernetes secret: $K8S_SECRET_NAME"
    kubectl delete secret $K8S_SECRET_NAME --ignore-not-found

    if [[ -n $K8S_SECRET_SEPARATE_CA ]]; then
        kubectl create secret generic \
            $K8S_SECRET_NAME \
            --from-file="tls.crt=${SSL_CERT}" \
            --from-file="tls.key=${SSL_KEY}" \
            --from-file="ca.crt=${CA_CERT}"
    else
        kubectl create secret tls \
            $K8S_SECRET_NAME \
            --cert=${SSL_CERT} \
            --key=${SSL_KEY}
    fi

    if [[ -n $K8S_SECRET_LABELS ]]; then
        [[ -z $SILENT ]] && echo "====> Labeling Kubernetes secret"
        IFS=$' \n\t' # We have to reset IFS or label secret will misbehave on some systems
        kubectl label secret \
            $K8S_SECRET_NAME \
            $K8S_SECRET_LABELS
    fi
fi

echo "4. Rename the server certificate"
mv ${CN}.key tls.key
mv ${CN}.crt tls.crt

# Import the generated certificates into K8S as secrets
## * Path to the K8S kubeconfig file
kubeconfig=/home/rancher/.kube/config

# The namespace may already exist from the earlier kubectl step; without "|| true"
# the -e flag would abort the script here before the secrets are created.
kubectl --kubeconfig=$kubeconfig create namespace cattle-system || true
kubectl --kubeconfig=$kubeconfig -n cattle-system create secret tls tls-rancher-ingress --cert=./tls.crt --key=./tls.key
kubectl --kubeconfig=$kubeconfig -n cattle-system create secret generic tls-ca --from-file=cacerts.pem
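The script builds the certificate's subjectAltName entries by splitting SSL_DNS and SSL_IP on commas. The sketch below isolates that step in plain POSIX shell so the resulting [alt_names] section can be inspected before a certificate is issued; the `alt_names` function is a hypothetical helper, not part of the script, and the sample addresses are the first two from SSL_IP:

```shell
#!/bin/sh
# Print an openssl [alt_names] section from comma-separated DNS names and IPs.
alt_names() {
    dns_list=$1
    ip_list=$2
    echo "[alt_names]"
    old_ifs=$IFS
    IFS=,            # split the unquoted lists on commas
    i=1
    for name in $dns_list; do
        echo "DNS.$i = $name"
        i=$((i + 1))
    done
    i=1
    for addr in $ip_list; do
        echo "IP.$i = $addr"
        i=$((i + 1))
    done
    IFS=$old_ifs     # restore normal word splitting
}

alt_names "rancher" "192.168.11.98,192.168.11.99,"
```

A trailing comma, as in the SSL_IP value used by the script, does not produce an empty entry, because shell field splitting drops a single trailing delimiter.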

Install Rancher with Helm

[rancher@rmaster01 home]$ ll

total 12636

-rw-r--r-- 1 rancher rancher 12925659 Jun 7 01:42 helm-v3.2.2-linux-amd64.tar.gz

drwxr-xr-x 2 3434 3434 50 Jun 5 05:25 linux-amd64

drwx------. 2 login01 login01 62 Jan 11 13:41 login01

drwx------ 6 rancher rancher 4096 Jun 7 02:00 rancher

-rw-r--r-- 1 rancher rancher 5688 May 10 17:18 rancher-2.3.6-ent.tgz

[rancher@rmaster01 home]$ helm install rancher rancher/ --namespace cattle-system --set rancherImage=cnrancher/rancher --set service.type=NodePort --set service.ports.nodePort=30001 --set tls=internal --set privateCA=true

NAME: rancher

LAST DEPLOYED: Sun Jun 7 02:01:06 2020

NAMESPACE: cattle-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Rancher Server has been installed.

NOTE: Rancher may take several minutes to fully initialize. Please standby while Certificates are being issued and Ingress comes up.

Check out our docs at https://rancher.com/docs/rancher/v2.x/en/

Browse to https://

Happy Containering!

[rancher@rmaster01 home]$

Configure load balancer forwarding

mkdir /etc/nginx

vim /etc/nginx/nginx.conf

worker_processes 4;
worker_rlimit_nofile 40000;

events {
    worker_connections 8192;
}

stream {
    upstream rancher_servers_https {
        least_conn;
        server <IP_NODE_1>:30001 max_fails=3 fail_timeout=5s;
        server <IP_NODE_2>:30001 max_fails=3 fail_timeout=5s;
        server <IP_NODE_3>:30001 max_fails=3 fail_timeout=5s;
    }

    server {
        listen 443;
        proxy_pass rancher_servers_https;
    }
}

docker run -d --restart=unless-stopped -p 80:80 -p 443:443 -v /etc/nginx/nginx.conf:/etc/nginx/nginx.conf nginx:stable
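Filling in the <IP_NODE_n> placeholders by hand is error-prone when nodes change. As a sketch, the same configuration can be rendered from a list of addresses; `render_lb_conf` is a hypothetical helper, and using the three master addresses as NodePort targets is an assumption made for this example:

```shell
#!/bin/sh
# Print an nginx stream configuration that balances TCP 443 across the
# given node IPs on the Rancher NodePort (first argument).
render_lb_conf() {
    node_port=$1
    shift
    echo "worker_processes 4;"
    echo "worker_rlimit_nofile 40000;"
    echo "events {"
    echo "    worker_connections 8192;"
    echo "}"
    echo "stream {"
    echo "    upstream rancher_servers_https {"
    echo "        least_conn;"
    for ip in "$@"; do
        echo "        server ${ip}:${node_port} max_fails=3 fail_timeout=5s;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen 443;"
    echo "        proxy_pass rancher_servers_https;"
    echo "    }"
    echo "}"
}

# Example values only: NodePort 30001 from the helm install, assumed node addresses.
render_lb_conf 30001 192.168.11.100 192.168.11.101 192.168.11.102
```

Redirecting the output to /etc/nginx/nginx.conf on the LB host gives the file that the nginx container mounts.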

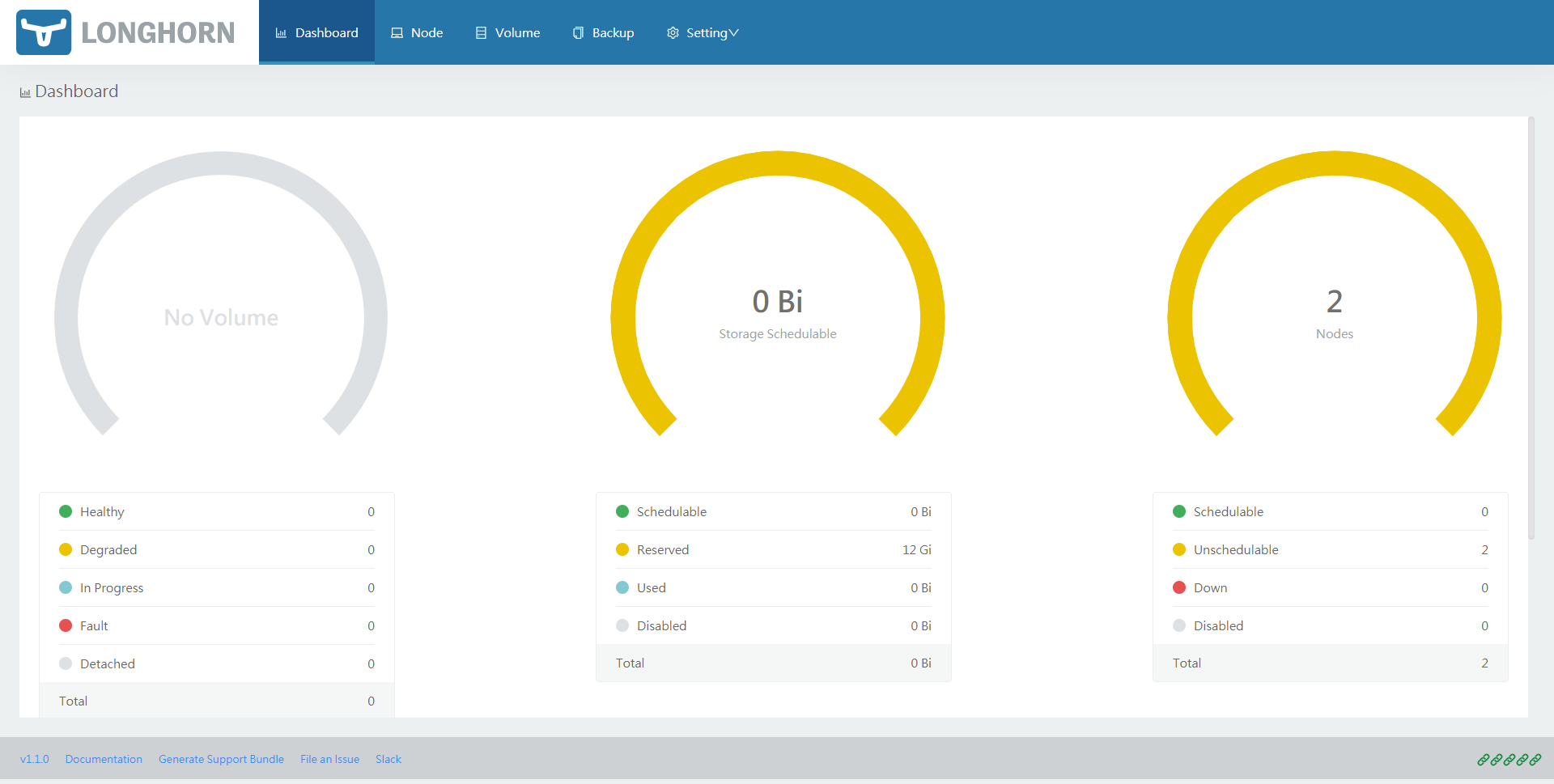

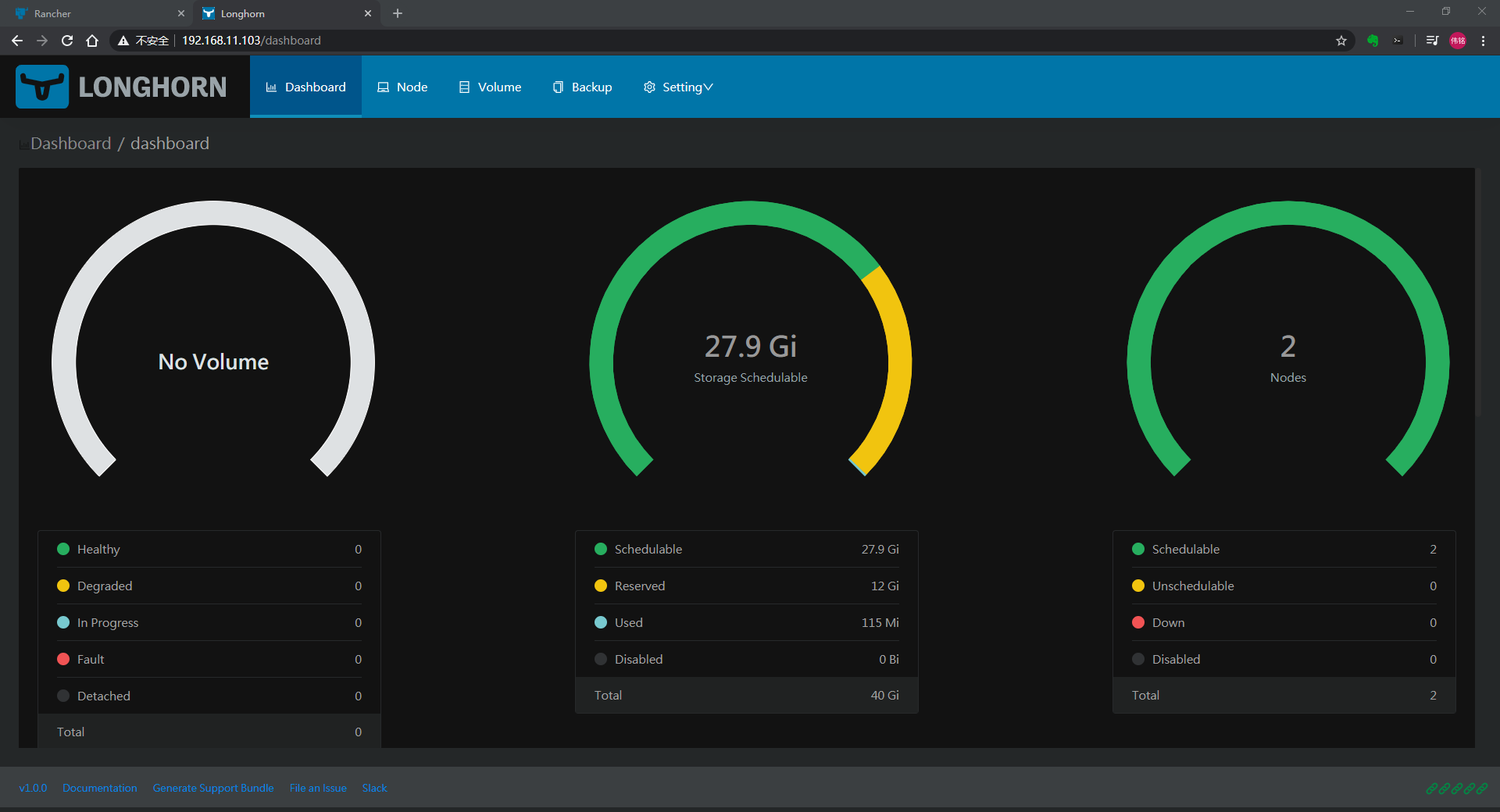

Configure storage

https://github.com/longhorn/longhorn

Install iSCSI on the worker nodes

yum install iscsi-initiator-utils -y

Initialize the disk

Format the disk to be added to Longhorn and mount it at /var/lib/longhorn (create the directory first if it does not exist)

mkfs.xfs /dev/sdb

mount /dev/sdb /var/lib/longhorn

[rancher@rmaster01 home]$ sudo git clone https://github.com/longhorn/longhorn

Cloning into 'longhorn'...

remote: Enumerating objects: 57, done.

remote: Counting objects: 100% (57/57), done.

remote: Compressing objects: 100% (39/39), done.

remote: Total 1752 (delta 28), reused 27 (delta 15), pack-reused 1695

Receiving objects: 100% (1752/1752), 742.57 KiB | 345.00 KiB/s, done.

Resolving deltas: 100% (1036/1036), done.

[rancher@rmaster01 longhorn]$

[rancher@rmaster01 longhorn]$ ll

total 432

drwxr-xr-x 3 root root 138 Jun 7 07:22 chart

-rw-r--r-- 1 root root 179 Jun 7 07:22 CODE_OF_CONDUCT.md

-rw-r--r-- 1 root root 3283 Jun 7 07:22 CONTRIBUTING.md

drwxr-xr-x 3 root root 47 Jun 7 07:22 deploy

drwxr-xr-x 3 root root 21 Jun 7 07:22 dev

drwxr-xr-x 2 root root 147 Jun 7 07:22 enhancements

drwxr-xr-x 3 root root 222 Jun 7 07:22 examples

-rw-r--r-- 1 root root 11357 Jun 7 07:22 LICENSE

-rw-r--r-- 1 root root 409356 Jun 7 07:22 longhorn-ui.png

-rw-r--r-- 1 root root 118 Jun 7 07:22 MAINTAINERS

-rw-r--r-- 1 root root 5309 Jun 7 07:22 README.md

drwxr-xr-x 2 root root 104 Jun 7 07:22 scripts

drwxr-xr-x 2 root root 28 Jun 7 07:22 uninstall

[rancher@rmaster01 longhorn]$ kubectl create namespace longhorn-system

namespace/longhorn-system created

Deploy with Helm

[rancher@rmaster01 home]$ helm install longhorn ./longhorn/chart/ --namespace longhorn-system

NAME: longhorn

LAST DEPLOYED: Sun Jun 7 07:34:58 2020

NAMESPACE: longhorn-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

kubectl get po -n $release_namespace

[rancher@rmaster01 home]$

[rancher@rmaster01 longhorn]$

Deploy with kubectl (alternative to the Helm install)

[rancher@rmaster01 deploy]$ pwd

/home/longhorn/deploy

[rancher@rmaster01 deploy]$ kubectl create -f longhorn.yaml

namespace/longhorn-system created

serviceaccount/longhorn-service-account created

clusterrole.rbac.authorization.k8s.io/longhorn-role created

clusterrolebinding.rbac.authorization.k8s.io/longhorn-bind created

customresourcedefinition.apiextensions.k8s.io/engines.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/replicas.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/settings.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/volumes.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/engineimages.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/nodes.longhorn.io created

customresourcedefinition.apiextensions.k8s.io/instancemanagers.longhorn.io created

configmap/longhorn-default-setting created

daemonset.apps/longhorn-manager created

service/longhorn-backend created

deployment.apps/longhorn-ui created

service/longhorn-frontend created

deployment.apps/longhorn-driver-deployer created

storageclass.storage.k8s.io/longhorn created

[rancher@rmaster01 deploy]$ kubectl -n longhorn-system get pod

NAME READY STATUS RESTARTS AGE

csi-attacher-78bf9b9898-2psjx 1/1 Running 0 3m21s

csi-attacher-78bf9b9898-9776q 1/1 Running 0 3m21s

csi-attacher-78bf9b9898-cflms 1/1 Running 0 3m21s

csi-provisioner-8599d5bf97-65x9b 1/1 Running 0 3m21s

csi-provisioner-8599d5bf97-dg6p9 1/1 Running 0 3m21s

csi-provisioner-8599d5bf97-nlbc5 1/1 Running 0 3m21s

csi-resizer-586665f745-pt2r7 1/1 Running 0 3m20s

csi-resizer-586665f745-tkj2b 1/1 Running 0 3m20s

csi-resizer-586665f745-xktkx 1/1 Running 0 3m20s

engine-image-ei-eee5f438-pqfqs 1/1 Running 0 4m23s

engine-image-ei-eee5f438-tw68r 1/1 Running 0 4m23s

instance-manager-e-19643db2 1/1 Running 0 4m4s

instance-manager-e-66366e8b 1/1 Running 0 4m22s

instance-manager-r-8a9c4425 1/1 Running 0 4m3s

instance-manager-r-f733bfb5 1/1 Running 0 4m21s

longhorn-csi-plugin-86x72 2/2 Running 0 3m20s

longhorn-csi-plugin-cjx2m 2/2 Running 0 3m20s

longhorn-driver-deployer-8848f7c7d-w6q8p 1/1 Running 0 4m34s

longhorn-manager-2sd8d 1/1 Running 0 4m34s

longhorn-manager-9hx92 1/1 Running 0 4m34s

longhorn-ui-5fb67b7dbb-tmhpw 1/1 Running 0 4m34s

[rancher@rmaster01 deploy]$

Create the basic-auth password file auth

USER=rancher; PASSWORD=rancher; echo "${USER}:$(openssl passwd -stdin -apr1 <<< ${PASSWORD})" >> auth

kubectl -n longhorn-system create secret generic basic-auth --from-file=auth

Steps

[rancher@rmaster01 longhorn]$ su - root

Password:

Last login: Sun Jun 7 08:25:24 CST 2020 on pts/0

[root@rmaster01 ~]# cd /home/longhorn/

[root@rmaster01 longhorn]# USER=rancher; PASSWORD=rancher; echo "${USER}:$(openssl passwd -stdin -apr1 <<< ${PASSWORD})" >> auth

[root@rmaster01 longhorn]# su - rancher

Last login: Sun Jun 7 08:33:19 CST 2020 on pts/0

[rancher@rmaster01 ~]$ cd /home/longhorn/

[rancher@rmaster01 longhorn]$ ll auth

-rw-r--r-- 1 root root 181 Jun 7 08:33 auth

[rancher@rmaster01 longhorn]$ kubectl -n longhorn-system create secret generic basic-auth --from-file=auth

secret/basic-auth created

[rancher@rmaster01 longhorn]$

[rancher@rmaster01 longhorn]$ cat longhorn-ingress.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: longhorn-ingress
  namespace: longhorn-system
  annotations:
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # prevent the controller from redirecting (308) to HTTPS
    nginx.ingress.kubernetes.io/ssl-redirect: 'false'
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required '
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: longhorn-frontend
          servicePort: 80

kubectl -n longhorn-system apply -f longhorn-ingress.yml

[rancher@rmaster01 longhorn]$ kubectl -n longhorn-system get ing

NAME HOSTS ADDRESS PORTS AGE

longhorn-ingress * 192.168.11.103,192.168.11.104 80 4m36s

[rancher@rmaster01 longhorn]$

[rancher@rmaster01 longhorn]$ curl -v http://192.168.11.103

* About to connect() to 192.168.11.103 port 80 (#0)

* Trying 192.168.11.103...

* Connected to 192.168.11.103 (192.168.11.103) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: 192.168.11.103

> Accept: */*

>

< HTTP/1.1 401 Unauthorized

< Server: openresty/1.15.8.1

< Date: Sun, 07 Jun 2020 00:36:48 GMT

< Content-Type: text/html

< Content-Length: 185

< Connection: keep-alive

< WWW-Authenticate: Basic realm="Authentication Required"

<

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>openresty/1.15.8.1</center>

</body>

</html>

* Connection #0 to host 192.168.11.103 left intact

[rancher@rmaster01 longhorn]$
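The 401 above is the expected response when no credentials are sent. HTTP basic authentication carries the base64 encoding of user:password in the Authorization header. A sketch that builds this header for the credentials written to the auth file earlier; `basic_auth_header` is a hypothetical helper:

```shell
#!/bin/sh
# Build the HTTP Basic Authorization header for a user and password.
basic_auth_header() {
    printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64)"
}

basic_auth_header rancher rancher
```

In practice `curl -u rancher:rancher http://192.168.11.103` sends the same header without building it by hand.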

https://192.168.11.103/dashboard
