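Taints and tolerations work together to keep pods from being scheduled onto unsuitable nodes.

One or more taints can be applied to a node; this marks the node as one that should not accept any pod that does not tolerate those taints.

Tolerations allow (but do not require) pods to be scheduled onto nodes with matching taints.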
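As a minimal sketch, assume a hypothetical taint `example-key=example-value:NoSchedule` has been placed on node01 (the key, value, and pod name below are illustrative, not from this document). A pod tolerates it by listing a matching entry under `spec.tolerations`:

```yaml
# Hypothetical taint, applied with:
#   kubectl taint node node01 example-key=example-value:NoSchedule
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo        # hypothetical name
spec:
  tolerations:
  - key: "example-key"         # must match the taint's key
    operator: "Equal"
    value: "example-value"     # must match the taint's value
    effect: "NoSchedule"       # must match the taint's effect
  containers:
  - name: demo
    image: nginx
```

The pod may now be placed on node01, but nothing forces it there; the scheduler can still choose any other node it fits on.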


Taints

Set a taint (format `key=value:effect`; the value may be empty, as here):

kubectl taint node node01 node-role.kubernetes.io/node=:NoSchedule

Remove a taint (append `-` to `key:effect`):

kubectl taint node node01 node-role.kubernetes.io/node:NoSchedule-

Check a node's taints (see the `Taints:` field in the output):

kubectl describe nodes node01
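Taints are stored in the Node object's `spec.taints` list. As a sketch, the taint set at the top of this section should appear roughly as below in `kubectl get node node01 -o yaml` (other fields omitted; the empty value is typically left out). `kubectl get node node01 -o jsonpath='{.spec.taints}'` prints just this list.

```yaml
# Excerpt of the Node object (other fields omitted)
apiVersion: v1
kind: Node
metadata:
  name: node01
spec:
  taints:
  - key: node-role.kubernetes.io/node   # set with "node-role.kubernetes.io/node=:NoSchedule"
    effect: NoSchedule                  # the empty value is not shown
```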

Taints

The effect can be one of:

NoSchedule

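New pods that do not tolerate the taint are not scheduled onto the node; pods already running there are not affected.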

PreferNoSchedule

The scheduler tries to avoid placing non-tolerating pods on the node, but this is not guaranteed.

NoExecute

New pods are not scheduled onto the node, and running pods that do not tolerate the taint are evicted.
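The effect also matters on the toleration side. As a sketch, assuming a taint with key `app` and value `test`: if a toleration leaves `effect` empty, it matches that key and value for every effect (NoSchedule, PreferNoSchedule, and NoExecute).

```yaml
# Pod spec fragment: tolerate the taint app=test regardless of its effect
tolerations:
- key: "app"
  operator: "Equal"
  value: "test"
  # effect omitted: matches NoSchedule, PreferNoSchedule and NoExecute
```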

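NoSchedule example: start 4 replicas, taint node01 with `app=test:NoSchedule`, then start 4 more replicas under a different name.

kubectl run --image=nginx --image-pull-policy=Never test --replicas=4

(Newer kubectl releases no longer accept `--replicas` on `kubectl run`; there, `kubectl create deployment test --image=nginx --replicas=4` is the closest equivalent.)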

    [liwm@rmaster01 liwm]$ kubectl run --image=nginx --image-pull-policy=Never test --replicas=4
    kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
    deployment.apps/test created
    [liwm@rmaster01 liwm]$ kubectl get pod -o wide
    NAME                   READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
    test-695b98cb4-5nf86   1/1     Running   0          7s    10.42.4.201   node01   <none>           <none>
    test-695b98cb4-fnwtc   1/1     Running   0          7s    10.42.2.253   node02   <none>           <none>
    test-695b98cb4-gm4zf   1/1     Running   0          7s    10.42.4.200   node01   <none>           <none>
    test-695b98cb4-svghx   1/1     Running   0          7s    10.42.2.252   node02   <none>           <none>
    [liwm@rmaster01 liwm]$ kubectl taint node node01 app=test:NoSchedule
    node/node01 tainted
    [liwm@rmaster01 liwm]$ kubectl run --image=nginx --image-pull-policy=Never test1 --replicas=4
    kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
    deployment.apps/test1 created
    [liwm@rmaster01 liwm]$ kubectl get pod -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
    test-695b98cb4-5nf86     1/1     Running   0          67s   10.42.4.201   node01   <none>           <none>
    test-695b98cb4-fnwtc     1/1     Running   0          67s   10.42.2.253   node02   <none>           <none>
    test-695b98cb4-gm4zf     1/1     Running   0          67s   10.42.4.200   node01   <none>           <none>
    test-695b98cb4-svghx     1/1     Running   0          67s   10.42.2.252   node02   <none>           <none>
    test1-7b5b76c466-2hfdk   1/1     Running   0          10s   10.42.2.3     node02   <none>           <none>
    test1-7b5b76c466-2pnfk   1/1     Running   0          10s   10.42.2.2     node02   <none>           <none>
    test1-7b5b76c466-cmxgd   1/1     Running   0          10s   10.42.2.4     node02   <none>           <none>
    test1-7b5b76c466-q44dp   1/1     Running   0          10s   10.42.2.254   node02   <none>           <none>
    [liwm@rmaster01 liwm]$
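In the run above, node01 carries `app=test:NoSchedule`, so all four `test1` replicas landed on node02 while the `test` pods already on node01 kept running. As a sketch, if `test1` were written as a Deployment manifest with a toleration for that taint (the `run: test1` label is a hypothetical choice), its replicas would become eligible for node01 again, although the scheduler would still be free to place them on node02.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test1
spec:
  replicas: 4
  selector:
    matchLabels:
      run: test1               # hypothetical label
  template:
    metadata:
      labels:
        run: test1
    spec:
      tolerations:
      - key: "app"             # matches the taint app=test:NoSchedule on node01
        operator: "Equal"
        value: "test"
        effect: "NoSchedule"
      containers:
      - name: test1
        image: nginx
        imagePullPolicy: Never # same pull policy as the kubectl run command above
```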

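NoExecute: evicting pods. Tainting node01 with `app=test:NoExecute` evicts the pods already running there, and the Deployment recreates them on node02.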

    [liwm@rmaster01 liwm]$ kubectl run --image=nginx --image-pull-policy=Never test --replicas=4
    kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
    deployment.apps/test created
    [liwm@rmaster01 liwm]$ kubectl get pod -o wide
    NAME                   READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
    test-695b98cb4-6f7g8   1/1     Running   0          5s    10.42.4.202   node01   <none>           <none>
    test-695b98cb4-bv8d8   1/1     Running   0          5s    10.42.2.6     node02   <none>           <none>
    test-695b98cb4-q5kkh   1/1     Running   0          5s    10.42.2.5     node02   <none>           <none>
    test-695b98cb4-s2qr2   1/1     Running   0          5s    10.42.4.203   node01   <none>           <none>
    [liwm@rmaster01 liwm]$ kubectl taint node node01 app=test:NoExecute
    node/node01 tainted
    [liwm@rmaster01 liwm]$ kubectl get pod -o wide
    NAME                   READY   STATUS        RESTARTS   AGE   IP          NODE     NOMINATED NODE   READINESS GATES
    test-695b98cb4-bv8d8   1/1     Running       0          74s   10.42.2.6   node02   <none>           <none>
    test-695b98cb4-h552m   1/1     Running       0          7s    10.42.2.7   node02   <none>           <none>
    test-695b98cb4-k7gp5   1/1     Running       0          6s    10.42.2.8   node02   <none>           <none>
    test-695b98cb4-q5kkh   1/1     Running       0          74s   10.42.2.5   node02   <none>           <none>
    test-695b98cb4-s2qr2   0/1     Terminating   0          74s   <none>      node01   <none>           <none>
    [liwm@rmaster01 liwm]$ kubectl get pod -o wide
    NAME                   READY   STATUS    RESTARTS   AGE   IP          NODE     NOMINATED NODE   READINESS GATES
    test-695b98cb4-bv8d8   1/1     Running   0          79s   10.42.2.6   node02   <none>           <none>
    test-695b98cb4-h552m   1/1     Running   0          12s   10.42.2.7   node02   <none>           <none>
    test-695b98cb4-k7gp5   1/1     Running   0          11s   10.42.2.8   node02   <none>           <none>
    test-695b98cb4-q5kkh   1/1     Running   0          79s   10.42.2.5   node02   <none>           <none>
    [liwm@rmaster01 liwm]$
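After `app=test:NoExecute` was added, both pods on node01 were evicted and replaced on node02. A pod can delay that eviction with `tolerationSeconds`, which only applies to NoExecute. A sketch, assuming a 60-second grace period chosen purely for illustration:

```yaml
# Pod spec fragment: stay on node01 for up to 60 s after app=test:NoExecute is added
tolerations:
- key: "app"
  operator: "Equal"
  value: "test"
  effect: "NoExecute"
  tolerationSeconds: 60   # illustrative value; omit it to tolerate the taint indefinitely
```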

Tolerations

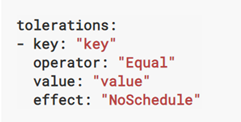

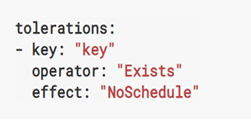

The operator can be one of:

Equal

The key must match and the taint's value must equal the toleration's `value`.

Exists

Only the key has to match; the taint's value is ignored, so no `value` is specified with Exists.
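Two forms of `Exists` are worth contrasting. As a sketch (two alternative fragments, not meant to be combined): with a key, it matches any value of that key; with the key also left empty, it matches every taint on every node.

```yaml
# Variant 1: match any taint with key "ssd" (any value) and effect NoSchedule
tolerations:
- key: "ssd"
  operator: "Exists"
  effect: "NoSchedule"
---
# Variant 2: empty key + Exists tolerates every taint (all keys, values and effects)
tolerations:
- operator: "Exists"
```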

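Running application replicas on master nodes. Master nodes are normally tainted so that ordinary pods are not scheduled there; a DaemonSet that tolerates the master taint also runs a pod on each master node.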

    cat << EOF > taint.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      labels:
        k8s-app: fluentd-logging
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          tolerations:        # tolerate the master taint
          - key: node-role.kubernetes.io/master
            operator: "Exists"
            effect: NoSchedule
          containers:
          - name: fluentd-elasticsearch
            image: nginx
            imagePullPolicy: IfNotPresent
    EOF

A variant that tolerates the `node`, `etcd`, and `controlplane` role taints instead (the `etcd` taint uses the NoExecute effect):

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      labels:
        k8s-app: fluentd-logging
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          tolerations:        # tolerate the node, etcd and controlplane role taints
          - key: node-role.kubernetes.io/node
            operator: "Exists"
            effect: NoSchedule
          - key: node-role.kubernetes.io/etcd
            operator: "Exists"
            effect: NoExecute
          - key: node-role.kubernetes.io/controlplane
            operator: "Exists"
            effect: NoSchedule
          containers:
          - name: fluentd-elasticsearch
            image: nginx
            imagePullPolicy: IfNotPresent
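The `node-role.kubernetes.io/master` key in the first manifest is the older control-plane taint. Newer kubeadm-based clusters taint control-plane nodes with `node-role.kubernetes.io/control-plane:NoSchedule` instead, so a DaemonSet meant for both old and new clusters may list both keys. A sketch of the `tolerations` section under that assumption; `kubectl describe nodes` shows which keys a given cluster actually uses.

```yaml
# DaemonSet pod template fragment (spec.template.spec)
tolerations:
- key: node-role.kubernetes.io/master          # older clusters
  operator: "Exists"
  effect: NoSchedule
- key: node-role.kubernetes.io/control-plane   # newer kubeadm clusters
  operator: "Exists"
  effect: NoSchedule
```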

Taint the nodes

kubectl taint node node01 ssd=:NoSchedule

kubectl taint node node02 app=nginx-1.9.0:NoSchedule

kubectl taint node node02 test=test:NoSchedule

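Create pods with tolerations. `test1` uses `Exists` to tolerate the `ssd` taint on node01; `test2` uses `Equal` to tolerate both taints on node02, since a pod must tolerate every NoSchedule taint on a node to be scheduled there.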

    cat << EOF > tolerations-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: test1
    spec:
      tolerations:
      - key: "ssd"
        operator: "Exists"    # the key only has to exist
        effect: "NoSchedule"
      containers:
      - name: demo
        image: polinux/stress
        imagePullPolicy: IfNotPresent
        command: ["stress"]
        args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: test2
    spec:
      tolerations:
      - key: "app"
        operator: "Equal"     # the value must be equal
        value: "nginx-1.9.0"
        effect: "NoSchedule"
      - key: "test"
        operator: "Equal"
        value: "test"
        effect: "NoSchedule"
      containers:
      - name: demo
        image: polinux/stress
        imagePullPolicy: IfNotPresent
        command: ["stress"]
        args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
    EOF
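Because a toleration only permits scheduling, a pod like `test1` can in general still land on any other node it tolerates rather than on node01. To pin a pod to a tainted node, the toleration can be combined with a node selector. A sketch, assuming node01 carries the standard `kubernetes.io/hostname=node01` label (the pod name `test3` is hypothetical):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test3                          # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: node01     # assumes the default hostname label
  tolerations:
  - key: "ssd"                         # tolerates ssd=:NoSchedule on node01
    operator: "Exists"
    effect: "NoSchedule"
  containers:
  - name: demo
    image: polinux/stress
    imagePullPolicy: IfNotPresent
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
```

The toleration lets the pod past the taint, and the selector restricts it to node01; neither alone guarantees that placement.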