https://kubernetes.io/zh/docs/tasks/

1: Prepare the environment

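htop and iftop are provided by the EPEL repository, so enable it first:

yum install epel-release -y
yum install vim wget bash-completion lrzsz nmap nc tree htop iftop net-tools ipvsadm -y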

2: Set the hostname and disable the firewall

hostnamectl set-hostname master

systemctl disable firewalld.service
systemctl stop firewalld.service
setenforce 0

3: Disable swap

swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab
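The `sed` expression above comments out every line that contains "swap", including lines that are already commented, so running it twice produces `##`. A minimal idempotent sketch, assuming a standard whitespace-separated fstab whose third field is the filesystem type; the function name `comment_out_swap` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-format file.
# Only lines that are not already commented and whose 3rd field (fs type) is
# "swap" are changed, so running it repeatedly is safe.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  awk '
    /^[[:space:]]*#/ { print; next }          # keep existing comments as-is
    $3 == "swap"     { print "#" $0; next }   # disable active swap entries
                     { print }                # everything else unchanged
  ' "$fstab" > "$fstab.tmp" && cat "$fstab.tmp" > "$fstab" && rm -f "$fstab.tmp"
}
```

Usage: `swapoff -a && comment_out_swap /etc/fstab`. Writing back with `cat >` keeps the original file's inode and permissions.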

4: Disable SELinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config
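The four `sed` calls can be collapsed into one expression that matches either mode. On CentOS 7, `/etc/sysconfig/selinux` is normally a symlink to `/etc/selinux/config`, and plain `sed -i` replaces a symlink with a regular file, so this sketch uses GNU sed's `--follow-symlinks` to edit the link target instead. The function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file, whether
# it currently says enforcing or permissive. Takes effect after a reboot;
# `setenforce 0` (run earlier) switches the running system to permissive now.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  # --follow-symlinks (GNU sed): edit the link target instead of replacing the link
  sed --follow-symlinks -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$conf"
}
```

Usage: `disable_selinux_config /etc/selinux/config`.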

Supplementary notes

Docker version requirements:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md

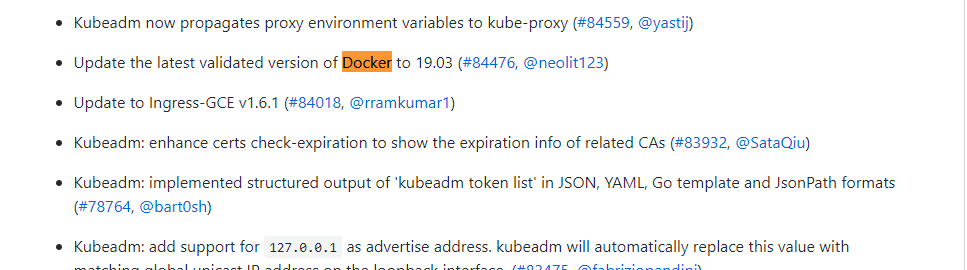

Docker requirement for v1.17:

Docker versions docker-ce-19.03

Requirement for v1.16:

Drop the support for docker 1.9.x. Docker versions 1.10.3, 1.11.2, 1.12.6 have been validated.

5: Install dependencies

yum install yum-utils device-mapper-persistent-data lvm2 -y

6: Add the Docker repository

yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo

7: Refresh the repository and install a specific version

yum install docker-ce-18.06.3.ce -y

To list all Docker versions available in the repository:

yum list docker-ce --showduplicates|sort -r

8: Configure Docker for Kubernetes (systemd cgroup driver) and a registry mirror

sudo mkdir -p /etc/docker

cat << EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://0bb06s1q.mirror.aliyuncs.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

systemctl daemon-reload && systemctl restart docker && systemctl enable docker.service

Alternatively, configure the DaoCloud registry mirror:

https://www.daocloud.io/mirror#accelerator-doc

curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io
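Whichever mirror option is used, the kubelet and Docker must agree on the cgroup driver, which is why `daemon.json` sets `native.cgroupdriver=systemd`. A quick check before restarting Docker can catch a missing or mistyped option. This sketch is a plain-text `grep`, not a JSON parser, and the function name is hypothetical. After the restart, `docker info` should report `Cgroup Driver: systemd`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if the Docker daemon config requests the
# systemd cgroup driver, 1 otherwise (including when the file is missing).
# Plain-text match only; it does not validate the JSON syntax.
uses_systemd_cgroup_driver() {
  local conf="${1:-/etc/docker/daemon.json}"
  [ -r "$conf" ] || return 1
  grep -q '"native\.cgroupdriver=systemd"' "$conf"
}
```

Usage: `uses_systemd_cgroup_driver && systemctl restart docker`.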

9: Install and configure containerd

Newer Docker packages install containerd.io automatically, so this step can be skipped.

yum install containerd.io -y
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
systemctl restart containerd

10: Add the Kubernetes yum repository (Aliyun mirror)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

11: Install the latest version or a specific version

Latest version:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
systemctl enable --now kubelet

Specific version:

yum install -y kubeadm-1.18.0-0 kubelet-1.18.0-0 kubectl-1.18.0-0 --disableexcludes=kubernetes
systemctl enable --now kubelet

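12: Enable kernel forwarding and bridge netfilter

The net.bridge.* keys only exist once the br_netfilter module is loaded, and `sysctl -p` without an argument reads only /etc/sysctl.conf, so load the module first and apply the file with `sysctl --system`:

modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
# Raise the watchdog threshold so sustained high CPU load does not trigger kernel soft-lockup reports
kernel.watchdog_thresh = 30
# Tune the ARP (neighbor) cache
net.ipv4.neigh.default.gc_thresh1 = 4096
net.ipv4.neigh.default.gc_thresh2 = 6144
net.ipv4.neigh.default.gc_thresh3 = 8192
EOF
sysctl --system
systemctl restart docker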
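To confirm that the values in `/etc/sysctl.d/k8s.conf` are actually live, each key can be compared with its file under `/proc/sys` (dots in the key map to slashes in the path). A minimal sketch, assuming simple single-value `key = value` lines; the function name and the optional proc-root argument (there only to make it testable) are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare every "key = value" line of a sysctl.d file with
# the running kernel value under <proc_root>/sys. Prints each mismatch and
# returns 1 if any were found. Assumes single-value keys.
verify_sysctl_conf() {
  local conf="$1" proc_root="${2:-/proc}" rc=0 key value path actual
  while IFS='=' read -r key value; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    case "$key" in
      ''|'#'*|';'*) continue ;;              # skip blank lines and comments
    esac
    value=$(printf '%s' "$value" | xargs)    # trim surrounding whitespace
    path="$proc_root/sys/$(printf '%s' "$key" | tr . /)"
    if ! actual=$(cat "$path" 2>/dev/null); then
      actual="<missing>"                     # e.g. br_netfilter not loaded
    fi
    if [ "$actual" != "$value" ]; then
      echo "MISMATCH $key: want '$value', got '$actual'"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}
```

Usage: `verify_sysctl_conf /etc/sysctl.d/k8s.conf`. A `<missing>` result for the `net.bridge.*` keys usually means `br_netfilter` is not loaded.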

13: Check and load the IPVS kernel module

Check:

lsmod | grep ip_vs

Load:

modprobe ip_vs
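`modprobe ip_vs` loads only the core module and does not persist across reboots, while kube-proxy in IPVS mode also uses the IPVS scheduler modules and connection tracking. A minimal sketch that writes a systemd `modules-load.d` file so the modules are loaded at every boot. The module list is an assumption for the CentOS 7 (3.10) kernel, where conntrack is `nf_conntrack_ipv4` (on kernels 4.19 and later it is `nf_conntrack`); the function names are hypothetical:

```bash
#!/usr/bin/env bash
# Assumed module list for a CentOS 7 (3.10) kernel; use nf_conntrack on >= 4.19.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Hypothetical helper: write one module per line so systemd-modules-load
# loads them on every boot.
write_ipvs_modules_conf() {
  local dest="${1:-/etc/modules-load.d/ipvs.conf}"
  printf '%s\n' $IPVS_MODULES > "$dest"   # unquoted on purpose: one word per line
}

# Hypothetical helper: load the same modules immediately.
load_ipvs_modules_now() {
  local m
  for m in $IPVS_MODULES; do
    modprobe "$m" || echo "failed to load $m" >&2
  done
}
```

Usage: `write_ipvs_modules_conf && load_ipvs_modules_now && lsmod | grep -e ip_vs -e nf_conntrack`.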

Common kubeadm commands

[root@master ~]# kubeadm

KUBEADM
Easily bootstrap a secure Kubernetes cluster

Please give us feedback at:
https://github.com/kubernetes/kubeadm/issues

Example usage:

  Create a two-machine cluster with one control-plane node
  (which controls the cluster), and one worker node
  (where your workloads, like Pods and Deployments run).

  On the first machine:
  control-plane# kubeadm init

  On the second machine:
  worker# kubeadm join <arguments-returned-from-init>

  You can then repeat the second step on as many other machines as you like.

Usage:
  kubeadm [command]

Available Commands:
  alpha        Kubeadm experimental sub-commands
  completion   Output shell completion code for the specified shell (bash or zsh)
  config       Manage configuration for a kubeadm cluster persisted in a ConfigMap in the cluster
  help         Help about any command
  init         Run this command in order to set up the Kubernetes control plane
  join         Run this on any machine you wish to join an existing cluster
  reset        Performs a best effort revert of changes made to this host by 'kubeadm init' or 'kubeadm join'
  token        Manage bootstrap tokens
  upgrade      Upgrade your cluster smoothly to a newer version with this command
  version      Print the version of kubeadm

Flags:
  --add-dir-header           If true, adds the file directory to the header
  -h, --help                 help for kubeadm
  --log-file string          If non-empty, use this log file
  --log-file-max-size uint   Defines the maximum size a log file can grow to. Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)
  --rootfs string            [EXPERIMENTAL] The path to the 'real' host root filesystem.
  --skip-headers             If true, avoid header prefixes in the log messages
  --skip-log-headers         If true, avoid headers when opening log files
  -v, --v Level              number for the log level verbosity

Use "kubeadm [command] --help" for more information about a command.

[root@master ~]# kubeadm init --help
Run this command in order to set up the Kubernetes control plane

The "init" command executes the following phases:

preflight                      Run pre-flight checks
kubelet-start                  Write kubelet settings and (re)start the kubelet
certs                          Certificate generation
  /ca                          Generate the self-signed Kubernetes CA to provision identities for other Kubernetes components
  /apiserver                   Generate the certificate for serving the Kubernetes API
  /apiserver-kubelet-client    Generate the certificate for the API server to connect to kubelet
  /front-proxy-ca              Generate the self-signed CA to provision identities for front proxy
  /front-proxy-client          Generate the certificate for the front proxy client
  /etcd-ca                     Generate the self-signed CA to provision identities for etcd
  /etcd-server                 Generate the certificate for serving etcd
  /etcd-peer                   Generate the certificate for etcd nodes to communicate with each other
  /etcd-healthcheck-client     Generate the certificate for liveness probes to healthcheck etcd
  /apiserver-etcd-client       Generate the certificate the apiserver uses to access etcd
  /sa                          Generate a private key for signing service account tokens along with its public key
kubeconfig                     Generate all kubeconfig files necessary to establish the control plane and the admin kubeconfig file
  /admin                       Generate a kubeconfig file for the admin to use and for kubeadm itself
  /kubelet                     Generate a kubeconfig file for the kubelet to use only for cluster bootstrapping purposes
  /controller-manager          Generate a kubeconfig file for the controller manager to use
  /scheduler                   Generate a kubeconfig file for the scheduler to use
control-plane                  Generate all static Pod manifest files necessary to establish the control plane
  /apiserver                   Generates the kube-apiserver static Pod manifest
  /controller-manager          Generates the kube-controller-manager static Pod manifest
  /scheduler                   Generates the kube-scheduler static Pod manifest
etcd                           Generate static Pod manifest file for local etcd
  /local                       Generate the static Pod manifest file for a local, single-node local etcd instance
upload-config                  Upload the kubeadm and kubelet configuration to a ConfigMap
  /kubeadm                     Upload the kubeadm ClusterConfiguration to a ConfigMap
  /kubelet                     Upload the kubelet component config to a ConfigMap
upload-certs                   Upload certificates to kubeadm-certs
mark-control-plane             Mark a node as a control-plane
bootstrap-token                Generates bootstrap tokens used to join a node to a cluster
kubelet-finalize               Updates settings relevant to the kubelet after TLS bootstrap
  /experimental-cert-rotation  Enable kubelet client certificate rotation
addon                          Install required addons for passing Conformance tests
  /coredns                     Install the CoreDNS addon to a Kubernetes cluster
  /kube-proxy                  Install the kube-proxy addon to a Kubernetes cluster

Usage:
  kubeadm init [flags]
  kubeadm init [command]

Available Commands:
  phase       Use this command to invoke single phase of the init workflow

Flags:
  --apiserver-advertise-address string   The IP address the API Server will advertise it's listening on. If not set the default network interface will be used.
  --apiserver-bind-port int32            Port for the API Server to bind to. (default 6443)
  --apiserver-cert-extra-sans strings    Optional extra Subject Alternative Names (SANs) to use for the API Server serving certificate. Can be both IP addresses and DNS names.
  --cert-dir string                      The path where to save and store the certificates. (default "/etc/kubernetes/pki")
  --certificate-key string               Key used to encrypt the control-plane certificates in the kubeadm-certs Secret.
  --config string                        Path to a kubeadm configuration file.
  --control-plane-endpoint string        Specify a stable IP address or DNS name for the control plane.
  --cri-socket string                    Path to the CRI socket to connect. If empty kubeadm will try to auto-detect this value; use this option only if you have more than one CRI installed or if you have non-standard CRI socket.
  --dry-run                              Don't apply any changes; just output what would be done.
  -k, --experimental-kustomize string    The path where kustomize patches for static pod manifests are stored.
  --feature-gates string                 A set of key=value pairs that describe feature gates for various features. Options are:
                                         IPv6DualStack=true|false (ALPHA - default=false)
                                         PublicKeysECDSA=true|false (ALPHA - default=false)
  -h, --help                             help for init
  --ignore-preflight-errors strings      A list of checks whose errors will be shown as warnings. Example: 'IsPrivilegedUser,Swap'. Value 'all' ignores errors from all checks.
  --image-repository string              Choose a container registry to pull control plane images from (default "k8s.gcr.io")
  --kubernetes-version string            Choose a specific Kubernetes version for the control plane. (default "stable-1")
  --node-name string                     Specify the node name.
  --pod-network-cidr string              Specify range of IP addresses for the pod network. If set, the control plane will automatically allocate CIDRs for every node.
  --service-cidr string                  Use alternative range of IP address for service VIPs. (default "10.96.0.0/12")
  --service-dns-domain string            Use alternative domain for services, e.g. "myorg.internal". (default "cluster.local")
  --skip-certificate-key-print           Don't print the key used to encrypt the control-plane certificates.
  --skip-phases strings                  List of phases to be skipped
  --skip-token-print                     Skip printing of the default bootstrap token generated by 'kubeadm init'.
  --token string                         The token to use for establishing bidirectional trust between nodes and control-plane nodes. The format is [a-z0-9]{6}\.[a-z0-9]{16} - e.g. abcdef.0123456789abcdef
  --token-ttl duration                   The duration before the token is automatically deleted (e.g. 1s, 2m, 3h). If set to '0', the token will never expire (default 24h0m0s)
  --upload-certs                         Upload control-plane certificates to the kubeadm-certs Secret.

Global Flags:
  --add-dir-header           If true, adds the file directory to the header
  --log-file string          If non-empty, use this log file
  --log-file-max-size uint   Defines the maximum size a log file can grow to. Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)
  --rootfs string            [EXPERIMENTAL] The path to the 'real' host root filesystem.
  --skip-headers             If true, avoid header prefixes in the log messages
  --skip-log-headers         If true, avoid headers when opening log files
  -v, --v Level              number for the log level verbosity

Use "kubeadm init [command] --help" for more information about a command.

Custom configuration file

Deploying the master node:

14: Export the default kubeadm configuration for customization

Export the configuration file:

kubeadm config print init-defaults > init.default.yaml

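15: Edit the configuration file

A: Set the master node IP in advertiseAddress.

B: Point imageRepository at the Aliyun mirror (reachable from mainland China).

C: Set a custom Pod CIDR: podSubnet: "192.168.0.0/16"

D: Enable IPVS mode for kube-proxy (the KubeProxyConfiguration document after the last ---).

E: When deploying on Alibaba Cloud, use the instance's private (intranet) IP address.

F: Set the master node IP and the Kubernetes version (kubernetesVersion) to deploy.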

cat <<EOF > init.default.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # master node IP
  advertiseAddress: 192.168.31.147
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# pull the control-plane images from a registry mirror in China
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  # custom Pod CIDR
  podSubnet: "192.168.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
# enable IPVS mode
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
EOF

[root@master ~]# cat init.default.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.6.111
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
[root@master ~]#

Point the kubelet at the pause (Pod sandbox) image from the same mirror:

cat > /etc/sysconfig/kubelet <<EOF
KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.2
EOF

16: List the images that will be pulled

kubeadm config images list --config init.default.yaml

[root@riyimei ~]# kubeadm config images list
k8s.gcr.io/kube-apiserver:v1.21.1
k8s.gcr.io/kube-controller-manager:v1.21.1
k8s.gcr.io/kube-scheduler:v1.21.1
k8s.gcr.io/kube-proxy:v1.21.1
k8s.gcr.io/pause:3.4.1
k8s.gcr.io/etcd:3.4.13-0
k8s.gcr.io/coredns/coredns:v1.8.0
[root@riyimei ~]#
[root@riyimei ~]# kubeadm config images list --config init.default.yaml
registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0
registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0
registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0
registry.aliyuncs.com/google_containers/pause:3.4.1
registry.aliyuncs.com/google_containers/etcd:3.4.13-0
registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
[root@riyimei ~]#

17: Pull the Kubernetes images from the Aliyun mirror

kubeadm config images pull --config init.default.yaml

[root@master ~]# kubeadm config images list --config init.default.yaml
W0204 00:37:10.879146   30608 validation.go:28] Cannot validate kubelet config - no validator is available
W0204 00:37:10.879175   30608 validation.go:28] Cannot validate kube-proxy config - no validator is available
registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.2
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2
registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.2
registry.aliyuncs.com/google_containers/kube-proxy:v1.17.2
registry.aliyuncs.com/google_containers/pause:3.1
registry.aliyuncs.com/google_containers/etcd:3.4.3-0
registry.aliyuncs.com/google_containers/coredns:1.6.5
[root@master ~]# kubeadm config images pull --config init.default.yaml
W0204 00:37:25.590147   30636 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0204 00:37:25.590179   30636 validation.go:28] Cannot validate kubelet config - no validator is available
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.17.2
[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1
[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0
[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5
[root@master ~]#

18: Deploy the Kubernetes cluster

Initialize the cluster with kubeadm init --config=init.default.yaml:

[root@master ~]# kubeadm init --config=init.default.yaml | tee kubeadm-init.log
W0204 00:39:48.825538   30989 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0204 00:39:48.825592   30989 validation.go:28] Cannot validate kubelet config - no validator is available
[init] Using Kubernetes version: v1.17.2
[preflight] Running pre-flight checks
[WARNING Hostname]: hostname "master" could not be reached
[WARNING Hostname]: hostname "master": lookup master on 114.114.114.114:53: no such host
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.31.90]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.31.90 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.31.90 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
W0204 00:39:51.810550   30989 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W0204 00:39:51.811109   30989 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 35.006692 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.31.90:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:09959f846dba6a855fbbd090e99b4ba1df4e643ec1a1578c28eaf9a9d3ea6a03
[root@master ~]#
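Because the init output was saved with `tee kubeadm-init.log`, the worker join command can be recovered from that log later instead of being copied by hand. A small sketch, assuming a single-control-plane log in which the `kubeadm join ... \` line is followed by the `--discovery-token-ca-cert-hash` continuation line, as in the output above; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the worker "kubeadm join" command from a saved
# `kubeadm init` log as one line (the backslash continuation is joined).
# Assumes the log contains exactly one join block.
extract_join_command() {
  local log="${1:-kubeadm-init.log}"
  sed -n '/^[[:space:]]*kubeadm join/,/--discovery-token-ca-cert-hash/p' "$log" \
    | tr -d '\\\n' \
    | tr -s ' ' \
    | sed 's/^ *//; s/ *$//'
  echo
}
```

Usage: `extract_join_command kubeadm-init.log`. The printed command is only valid while its bootstrap token has not expired (24h by default).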

19: Set up kubectl credentials (kubeconfig) for the user

[root@master ~]#mkdir -p $HOME/.kube

[root@master ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config

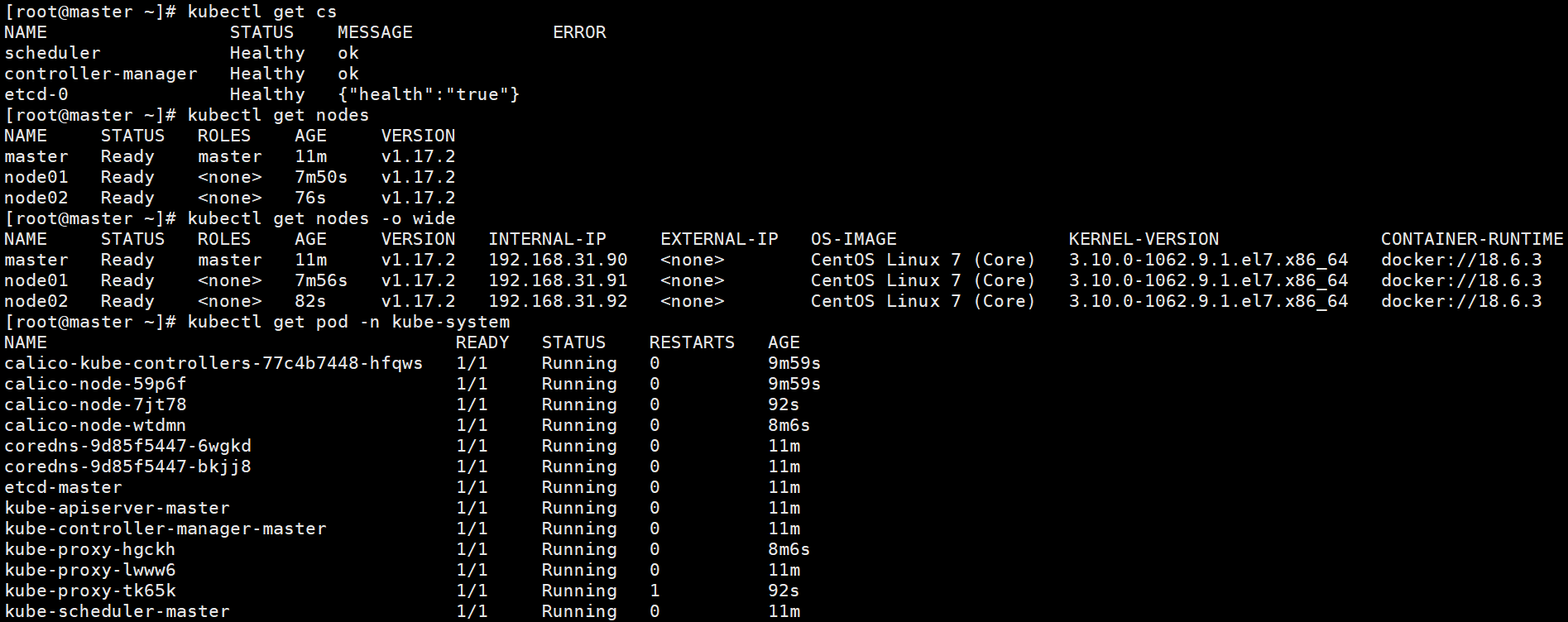

20: Check the cluster status

[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE     VERSION
master   NotReady   master   2m34s   v1.17.2
[root@master ~]#
[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}

21: kubectl shell auto-completion

source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

22: Deploy the Kubernetes network plugin

Calico

https://www.projectcalico.org/

https://docs.projectcalico.org/getting-started/kubernetes/

Deploy Calico:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

[root@master ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
[root@master ~]#

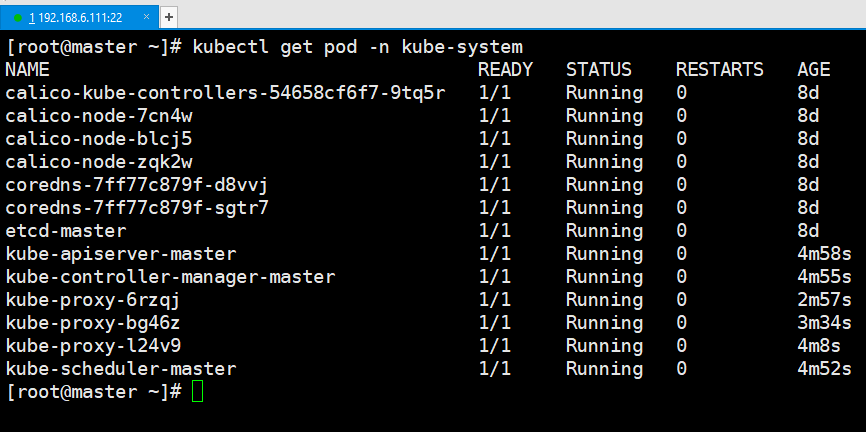

23: Check the network plugin status

[root@master ~]# kubectl get pod -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-77c4b7448-hfqws   1/1     Running   0          32s
calico-node-59p6f                         1/1     Running   0          32s
coredns-9d85f5447-6wgkd                   1/1     Running   0          2m5s
coredns-9d85f5447-bkjj8                   1/1     Running   0          2m5s
etcd-master                               1/1     Running   0          2m2s
kube-apiserver-master                     1/1     Running   0          2m2s
kube-controller-manager-master            1/1     Running   0          2m2s
kube-proxy-lwww6                          1/1     Running   0          2m5s
kube-scheduler-master                     1/1     Running   0          2m2s

24: Add worker nodes

[root@node02 ~]# kubeadm join 192.168.31.90:6443 --token abcdef.0123456789abcdef \
> --discovery-token-ca-cert-hash sha256:09959f846dba6a855fbbd090e99b4ba1df4e643ec1a1578c28eaf9a9d3ea6a03
W0204 00:46:53.878006   30928 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
[preflight] Running pre-flight checks
[WARNING Hostname]: hostname "node02" could not be reached
[WARNING Hostname]: hostname "node02": lookup node02 on 114.114.114.114:53: no such host
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# If the token has expired, create a new one first:
kubeadm token create
# 1. List the token
kubeadm token list | awk -F" " '{print $1}' | tail -n 1
# 2. Compute the hash of the CA public key (the sed strips the "(stdin)= " prefix)
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
# 3. Join the worker node to the cluster
kubeadm join 192.168.40.8:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^ .* //'
(stdin)= 5be219290c207c460901a557a1727cbda0cf5638eba06a87ffe99962f6438966
[root@master ~]#
[root@master ~]# kubeadm token list | awk -F" " '{print $1}' |tail -n 1
abcdef.0123456789abcdef
[root@master ~]#
[root@master ~]# kubeadm join 192.168.6.111:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:5be219290c207c460901a557a1727cbda0cf5638eba06a87ffe99962f6438966

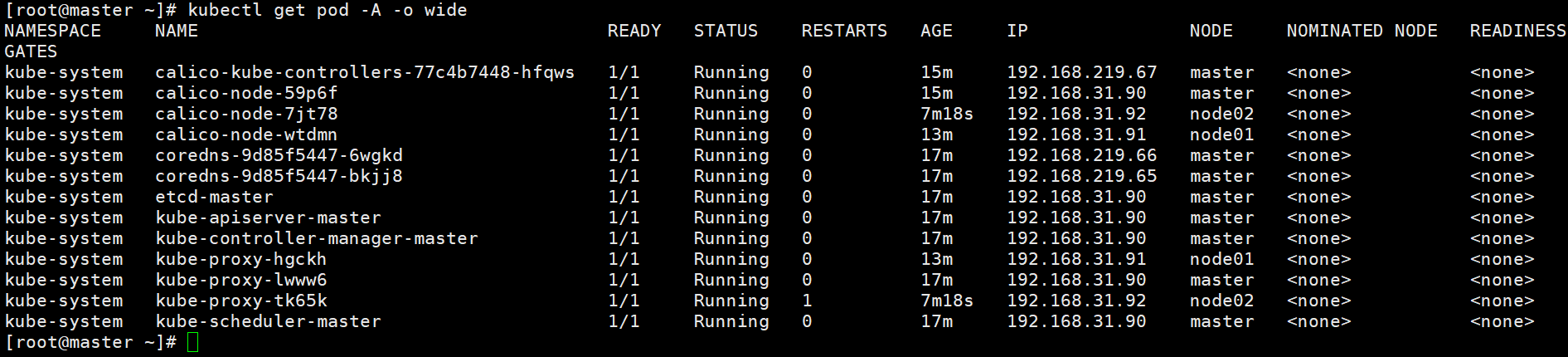
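Assembling the join command by hand makes it easy to paste a malformed token or hash (for example with the `(stdin)= ` prefix still attached). A sketch that validates both before printing the command; the token pattern `[a-z0-9]{6}\.[a-z0-9]{16}` is taken from the `kubeadm init --help` output above, and a SHA-256 digest is 64 hex digits. The function name is hypothetical. kubeadm can also print a complete command itself with `kubeadm token create --print-join-command`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate a bootstrap token and CA public-key hash, then
# print the matching `kubeadm join` command. Returns 1 on invalid input.
build_join_command() {
  local endpoint="$1" token="$2" hash="${3#sha256:}"   # accept hash with or without prefix
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid token: $token" >&2
    return 1
  fi
  if ! printf '%s\n' "$hash" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "invalid CA cert hash: $hash" >&2
    return 1
  fi
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$hash"
}
```

Usage: pass the outputs of the token-list and hash commands from steps 1 and 2, e.g. `build_join_command 192.168.6.111:6443 "$TOKEN" "$HASH"`.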

25: Verify the cluster

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   19m   v1.17.2
node01   Ready    <none>   15m   v1.17.2
node02   Ready    <none>   13m   v1.17.2
[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
[root@master ~]# kubectl get pod -A
NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-77c4b7448-zd6dt   0/1     Error     1          119s
kube-system   calico-node-2fcbs                         1/1     Running   0          119s
kube-system   calico-node-56f95                         1/1     Running   0          119s
kube-system   calico-node-svlg9                         1/1     Running   0          119s
kube-system   coredns-9d85f5447-4f4hq                   0/1     Running   0          19m
kube-system   coredns-9d85f5447-n68wd                   0/1     Running   0          19m
kube-system   etcd-master                               1/1     Running   0          19m
kube-system   kube-apiserver-master                     1/1     Running   0          19m
kube-system   kube-controller-manager-master            1/1     Running   0          19m
kube-system   kube-proxy-ch4vl                          1/1     Running   1          15m
kube-system   kube-proxy-fjl5c                          1/1     Running   1          19m
kube-system   kube-proxy-hhsqc                          1/1     Running   1          13m
kube-system   kube-scheduler-master                     1/1     Running   0          19m
[root@master ~]#

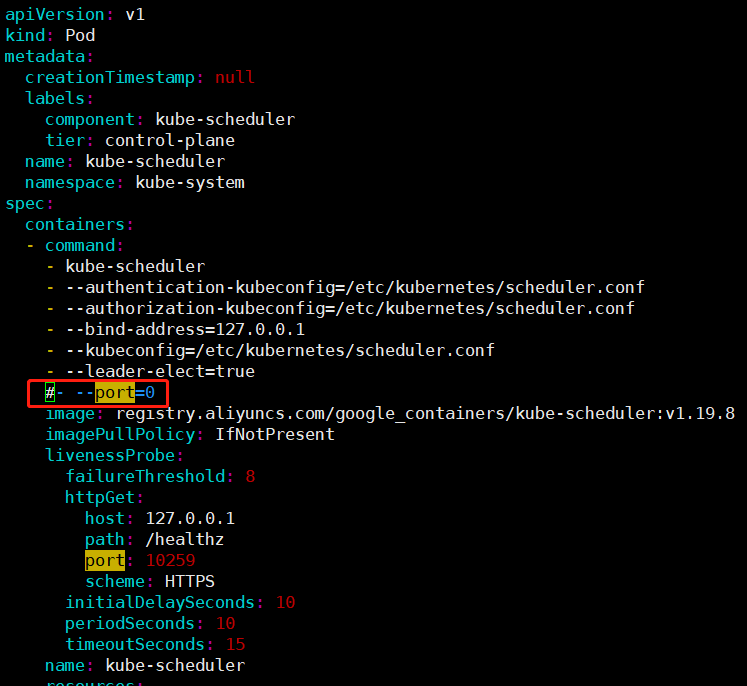

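If `kubectl get cs` does not work (kube-scheduler and kube-controller-manager show as Unhealthy), comment out the `--port=0` flag in the kube-controller-manager and kube-scheduler static Pod manifests generated by kubeadm: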

[root@master ~]# ll /etc/kubernetes/manifests/
total 16
-rw------- 1 root root 2126 Mar 13 14:37 etcd.yaml
-rw------- 1 root root 3186 Mar 13 14:37 kube-apiserver.yaml
-rw------- 1 root root 2860 Mar 13 14:40 kube-controller-manager.yaml
-rw------- 1 root root 1414 Mar 13 14:40 kube-scheduler.yaml
[root@master ~]#

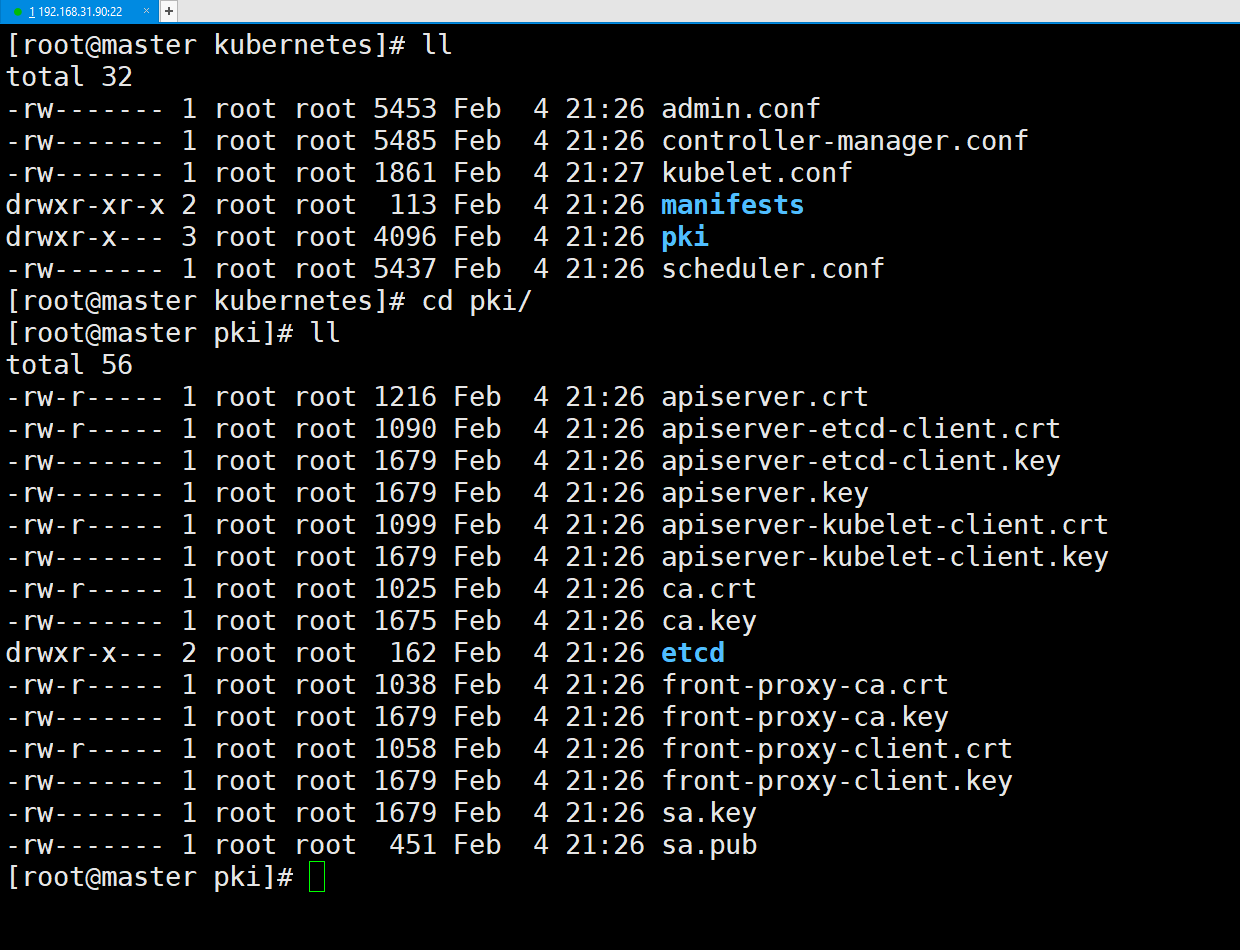
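The manifest edit can be scripted. A minimal sketch, assuming the flag appears as a `- --port=0` list item, as in the kubeadm-generated manifests shown above; the function name and default backup directory are hypothetical. The kubelet watches `/etc/kubernetes/manifests` and recreates a static Pod when its manifest changes, so backups are kept outside that directory, where they cannot be mistaken for extra manifests.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out the "- --port=0" argument in a static Pod
# manifest, keeping a backup copy in a directory OUTSIDE the manifests folder.
comment_out_port_zero() {
  local manifest="$1" backup_dir="${2:-/root/manifests-backup}"
  mkdir -p "$backup_dir"
  cp -p "$manifest" "$backup_dir/"
  # Prefix only uncommented "- --port=0" lines with '#'; safe to run twice
  sed -i -E 's/^([[:space:]]*- --port=0[[:space:]]*)$/#\1/' "$manifest"
}
```

Usage: `for f in kube-controller-manager kube-scheduler; do comment_out_port_zero /etc/kubernetes/manifests/$f.yaml; done`.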

26: Inspect the certificates

[root@master ~]# cd /etc/kubernetes/
[root@master kubernetes]# ll
total 32
-rw------- 1 root root 5453 Feb  4 21:26 admin.conf
-rw------- 1 root root 5485 Feb  4 21:26 controller-manager.conf
-rw------- 1 root root 1861 Feb  4 21:27 kubelet.conf
drwxr-xr-x 2 root root  113 Feb  4 21:26 manifests
drwxr-x--- 3 root root 4096 Feb  4 21:26 pki
-rw------- 1 root root 5437 Feb  4 21:26 scheduler.conf
[root@master kubernetes]# tree
.
├── admin.conf
├── controller-manager.conf
├── kubelet.conf
├── manifests
│   ├── etcd.yaml
│   ├── kube-apiserver.yaml
│   ├── kube-controller-manager.yaml
│   └── kube-scheduler.yaml
├── pki
│   ├── apiserver.crt
│   ├── apiserver-etcd-client.crt
│   ├── apiserver-etcd-client.key
│   ├── apiserver.key
│   ├── apiserver-kubelet-client.crt
│   ├── apiserver-kubelet-client.key
│   ├── ca.crt
│   ├── ca.key
│   ├── etcd
│   │   ├── ca.crt
│   │   ├── ca.key
│   │   ├── healthcheck-client.crt
│   │   ├── healthcheck-client.key
│   │   ├── peer.crt
│   │   ├── peer.key
│   │   ├── server.crt
│   │   └── server.key
│   ├── front-proxy-ca.crt
│   ├── front-proxy-ca.key
│   ├── front-proxy-client.crt
│   ├── front-proxy-client.key
│   ├── sa.key
│   └── sa.pub
└── scheduler.conf

3 directories, 30 files
[root@master kubernetes]#

27: Check certificate validity periods

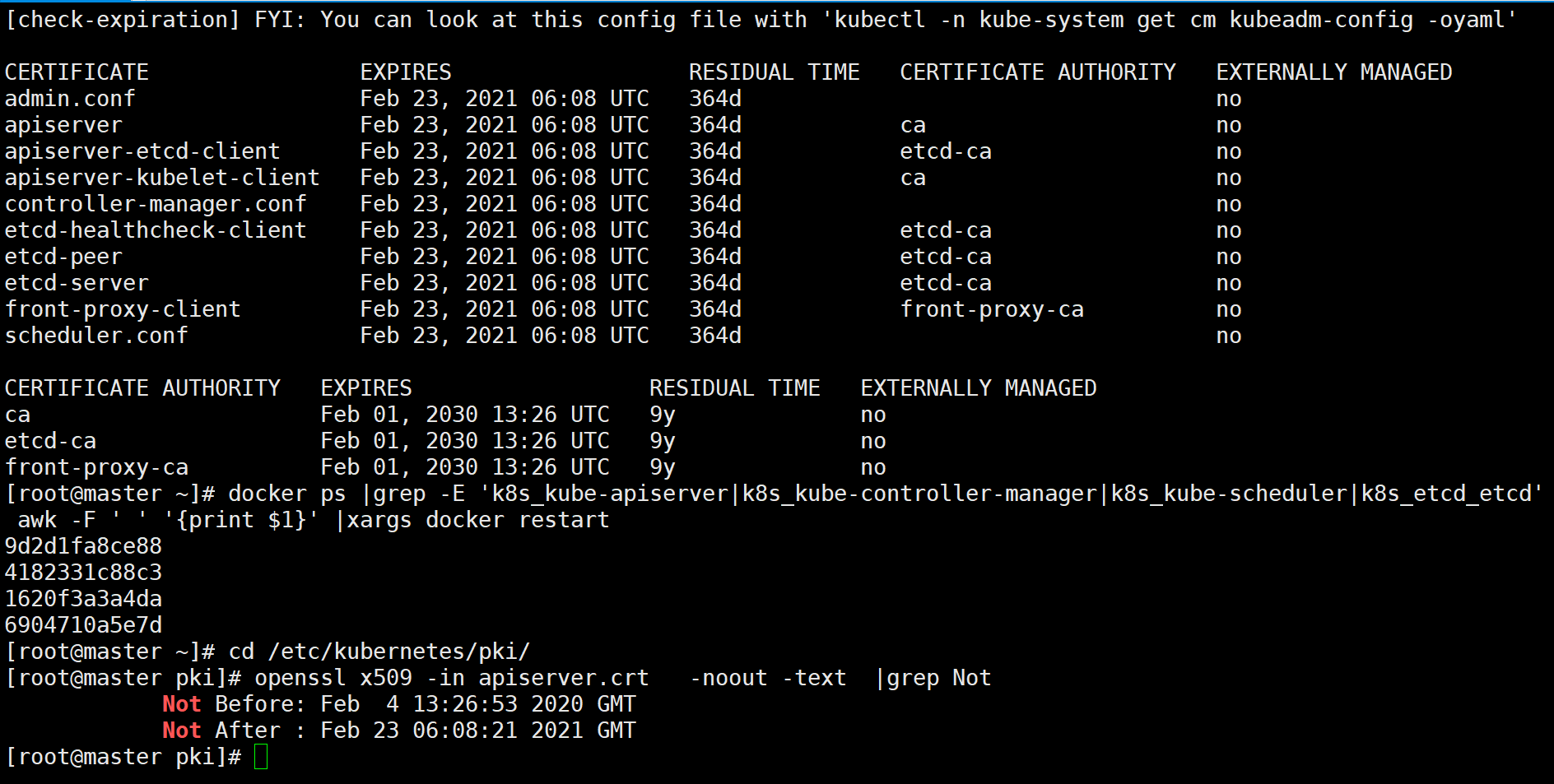

[root@master ~]# kubeadm alpha certs check-expiration
[check-expiration] Reading configuration from the cluster...
[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
admin.conf                 Mar 03, 2022 13:47 UTC   364d                                    no
apiserver                  Mar 03, 2022 13:47 UTC   364d            ca                      no
apiserver-etcd-client      Mar 03, 2022 13:47 UTC   364d            etcd-ca                 no
apiserver-kubelet-client   Mar 03, 2022 13:47 UTC   364d            ca                      no
controller-manager.conf    Mar 03, 2022 13:47 UTC   364d                                    no
etcd-healthcheck-client    Mar 03, 2022 13:47 UTC   364d            etcd-ca                 no
etcd-peer                  Mar 03, 2022 13:47 UTC   364d            etcd-ca                 no
etcd-server                Mar 03, 2022 13:47 UTC   364d            etcd-ca                 no
front-proxy-client         Mar 03, 2022 13:47 UTC   364d            front-proxy-ca          no
scheduler.conf             Mar 03, 2022 13:47 UTC   364d                                    no

CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
ca                      Mar 01, 2031 13:47 UTC   9y              no
etcd-ca                 Mar 01, 2031 13:47 UTC   9y              no
front-proxy-ca          Mar 01, 2031 13:47 UTC   9y              no
[root@master ~]#

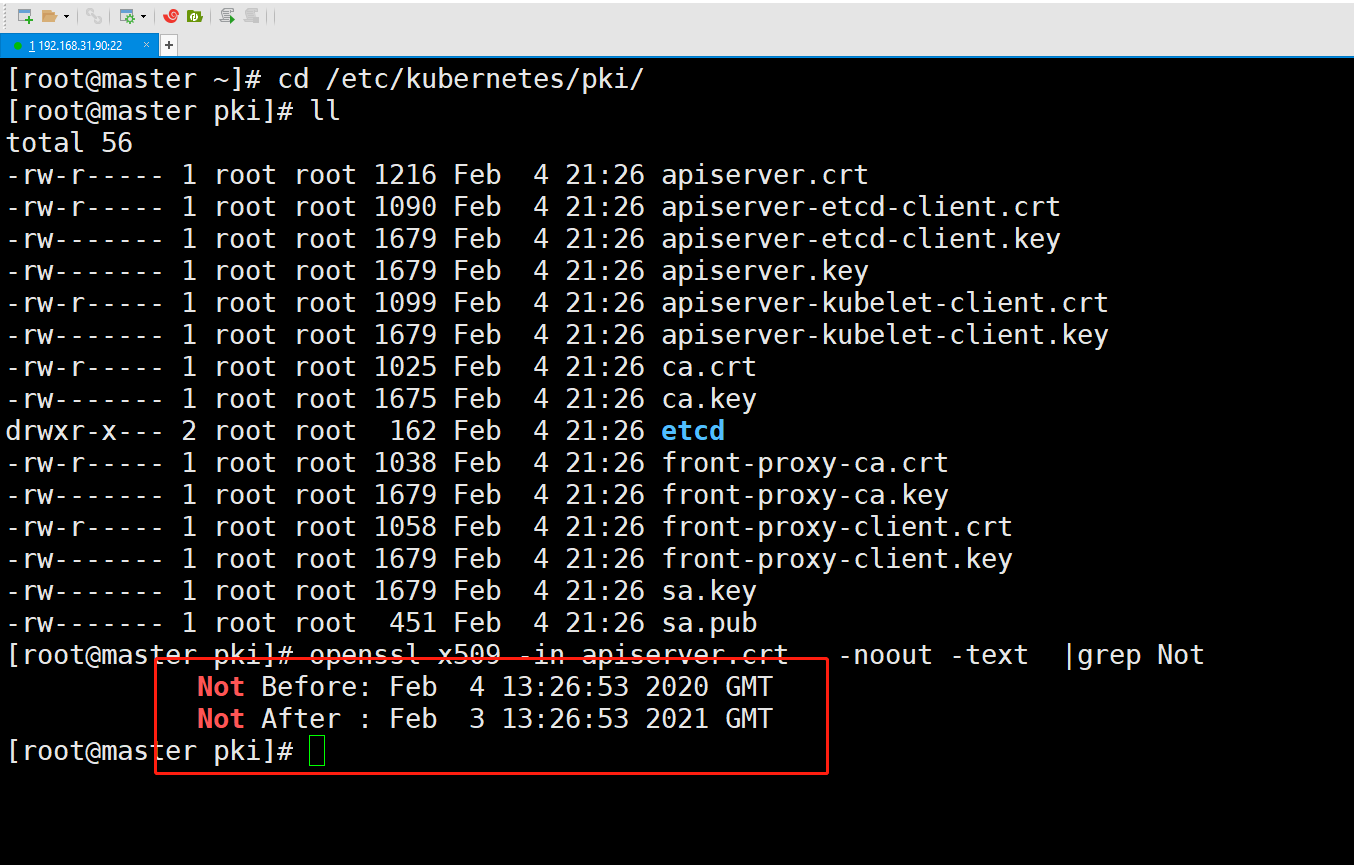

[root@master ~]# cd /etc/kubernetes/pki/
[root@master pki]# ll
total 56
-rw-r----- 1 root root 1216 Feb  4 21:26 apiserver.crt
-rw-r----- 1 root root 1090 Feb  4 21:26 apiserver-etcd-client.crt
-rw------- 1 root root 1679 Feb  4 21:26 apiserver-etcd-client.key
-rw------- 1 root root 1679 Feb  4 21:26 apiserver.key
-rw-r----- 1 root root 1099 Feb  4 21:26 apiserver-kubelet-client.crt
-rw------- 1 root root 1679 Feb  4 21:26 apiserver-kubelet-client.key
-rw-r----- 1 root root 1025 Feb  4 21:26 ca.crt
-rw------- 1 root root 1675 Feb  4 21:26 ca.key
drwxr-x--- 2 root root  162 Feb  4 21:26 etcd
-rw-r----- 1 root root 1038 Feb  4 21:26 front-proxy-ca.crt
-rw------- 1 root root 1679 Feb  4 21:26 front-proxy-ca.key
-rw-r----- 1 root root 1058 Feb  4 21:26 front-proxy-client.crt
-rw------- 1 root root 1679 Feb  4 21:26 front-proxy-client.key
-rw------- 1 root root 1679 Feb  4 21:26 sa.key
-rw------- 1 root root  451 Feb  4 21:26 sa.pub
[root@master pki]# openssl x509 -in apiserver.crt -noout -text |grep Not
Not Before: Feb  4 13:26:53 2020 GMT
Not After : Feb  3 13:26:53 2021 GMT
[root@master pki]#
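For a scripted check (for example from cron), `openssl x509 -enddate -noout` prints only the `notAfter=` line, and GNU `date -d` can turn that date into a day count. A minimal sketch, assuming GNU coreutils `date`; the function names and the optional "now" argument (a Unix timestamp, there only for testing) are hypothetical. For a plain pass/fail test, `openssl x509 -checkend <seconds>` exits non-zero if the certificate expires within that many seconds.

```bash
#!/usr/bin/env bash
# Hypothetical helper: whole days remaining until a notAfter date.
# $1: notAfter string as printed by openssl, e.g. "Feb  3 13:26:53 2021 GMT"
# $2: optional "now" as a Unix timestamp (defaults to the current time)
days_until_expiry() {
  local not_after="$1" now="${2:-$(date +%s)}" end
  end=$(date -d "$not_after" +%s) || return 1
  echo $(( (end - now) / 86400 ))
}

# Hypothetical helper: read notAfter from a PEM certificate and report days left.
cert_days_left() {
  local cert="$1" not_after
  not_after=$(openssl x509 -enddate -noout -in "$cert") || return 1
  days_until_expiry "${not_after#notAfter=}"
}
```

Usage: `cert_days_left /etc/kubernetes/pki/apiserver.crt`. For the certificate above (valid from Feb 4 2020 to Feb 3 2021), that is 365 days on the day it was issued.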

28: Renew certificates

https://kubernetes.io/zh/docs/tasks/tls/certificate-rotation

[root@master ~]# kubeadm config view > /root/kubeadm.yaml
[root@master ~]# ll /root/kubeadm.yaml
-rw-r----- 1 root root 492 Feb 24 14:07 /root/kubeadm.yaml
[root@master ~]# cat /root/kubeadm.yaml
apiServer:
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.17.2
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
[root@master ~]# kubeadm alpha certs renew all --config=/root/kubeadm.yaml
W0224 14:08:21.385077 47490 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0224 14:08:21.385111 47490 validation.go:28] Cannot validate kubelet config - no validator is available
certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
certificate for serving the Kubernetes API renewed
certificate the apiserver uses to access etcd renewed
certificate for the API server to connect to kubelet renewed
certificate embedded in the kubeconfig file for the controller manager to use renewed
certificate for liveness probes to healthcheck etcd renewed
certificate for etcd nodes to communicate with each other renewed
certificate for serving etcd renewed
certificate for the front proxy client renewed
certificate embedded in the kubeconfig file for the scheduler manager to use renewed
[root@master ~]# kubeadm alpha certs check-expiration
[check-expiration] Reading configuration from the cluster...
[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
admin.conf                 Feb 23, 2021 06:08 UTC   364d                                    no
apiserver                  Feb 23, 2021 06:08 UTC   364d            ca                      no
apiserver-etcd-client      Feb 23, 2021 06:08 UTC   364d            etcd-ca                 no
apiserver-kubelet-client   Feb 23, 2021 06:08 UTC   364d            ca                      no
controller-manager.conf    Feb 23, 2021 06:08 UTC   364d                                    no
etcd-healthcheck-client    Feb 23, 2021 06:08 UTC   364d            etcd-ca                 no
etcd-peer                  Feb 23, 2021 06:08 UTC   364d            etcd-ca                 no
etcd-server                Feb 23, 2021 06:08 UTC   364d            etcd-ca                 no
front-proxy-client         Feb 23, 2021 06:08 UTC   364d            front-proxy-ca          no
scheduler.conf             Feb 23, 2021 06:08 UTC   364d                                    no
CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
ca                      Feb 01, 2030 13:26 UTC   9y              no
etcd-ca                 Feb 01, 2030 13:26 UTC   9y              no
front-proxy-ca          Feb 01, 2030 13:26 UTC   9y              no
[root@master ~]# docker ps |grep -E 'k8s_kube-apiserver|k8s_kube-controller-manager|k8s_kube-scheduler|k8s_etcd_etcd' | awk -F ' ' '{print $1}' |xargs docker restart
9d2d1fa8ce88
4182331c88c3
1620f3a3a4da
6904710a5e7d
[root@master ~]# cd /etc/kubernetes/pki/
[root@master pki]# openssl x509 -in apiserver.crt -noout -text |grep Not
            Not Before: Feb 4 13:26:53 2020 GMT
            Not After : Feb 23 06:08:21 2021 GMT
[root@master pki]#
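The docker ps | grep | awk | xargs pipeline above can be wrapped in a function so that the selected containers can be reviewed before anything is restarted. This is a sketch with a hypothetical name, control_plane_ids. The grep patterns are copied from the command above and assume Docker as the container runtime, where kubelet names containers k8s_<container>_<pod>_...; the patterns therefore skip the k8s_POD_ pause containers.

```shell
#!/usr/bin/env bash
# Sketch: read `docker ps` output on stdin and print the container IDs of the
# control-plane containers (kube-apiserver, kube-controller-manager,
# kube-scheduler, etcd). Patterns are copied from the command above.

control_plane_ids() {
  grep -E 'k8s_kube-apiserver|k8s_kube-controller-manager|k8s_kube-scheduler|k8s_etcd_etcd' |
    awk '{print $1}'   # first column of `docker ps` is CONTAINER ID
}
```

Run docker ps | control_plane_ids first to check the IDs, then docker ps | control_plane_ids | xargs -r docker restart. The -r flag of GNU xargs avoids calling docker restart with no arguments when nothing matches.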

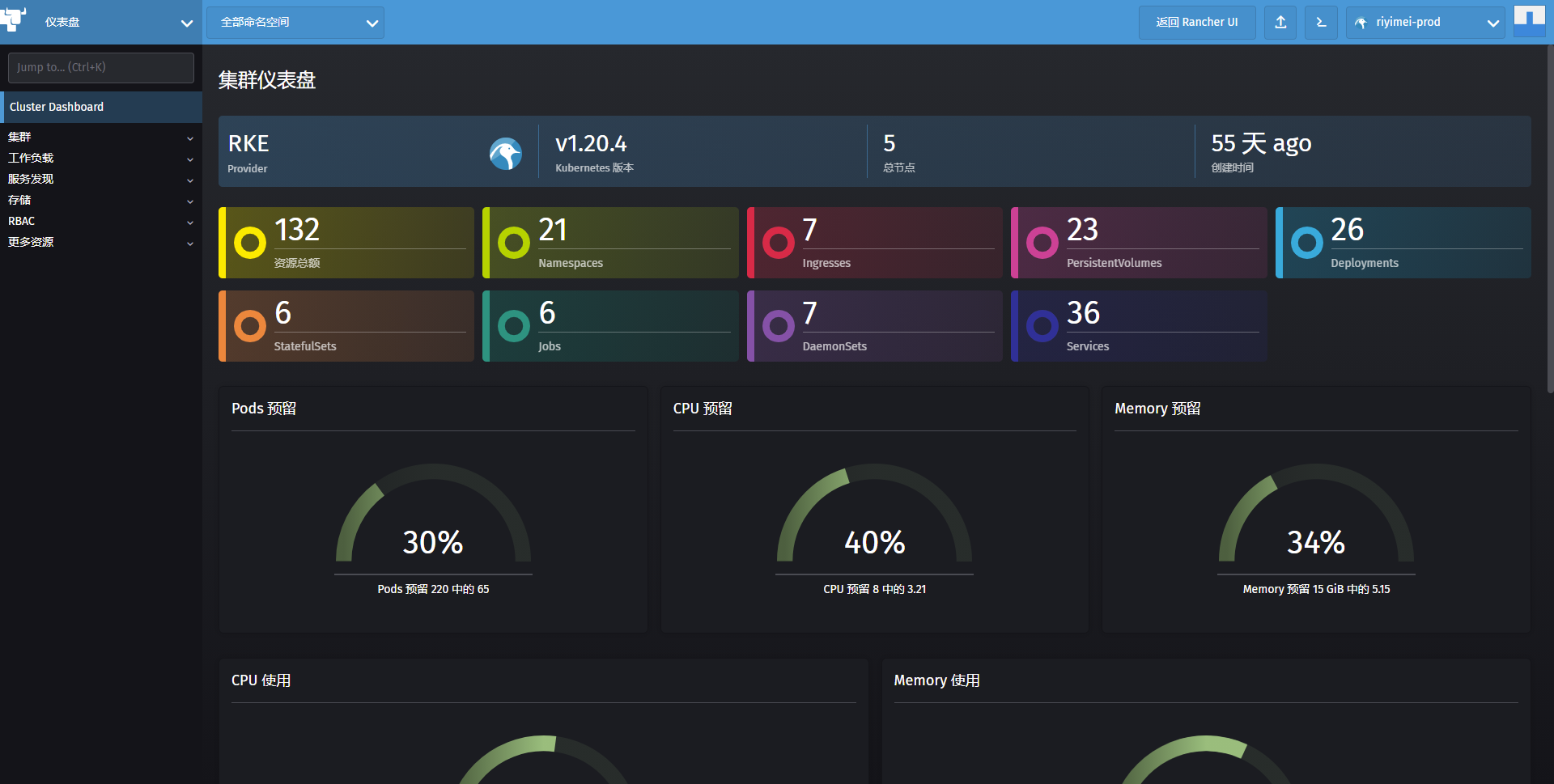

29: Kubernetes management platform

rancher

https://docs.rancher.cn/rancher2x/

30: Cluster upgrade from v1.17.2 to v1.17.4 (an upgrade cannot skip a minor version)

Check which versions are available with kubeadm upgrade plan:

[root@master ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.17.2
[upgrade/versions] kubeadm version: v1.17.2
I0326 09:28:34.816310 3805 version.go:251] remote version is much newer: v1.18.0; falling back to: stable-1.17
[upgrade/versions] Latest stable version: v1.17.4
[upgrade/versions] Latest version in the v1.17 series: v1.17.4
Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
Kubelet     3 x v1.17.2   v1.17.4
Upgrade to the latest version in the v1.17 series:
COMPONENT            CURRENT   AVAILABLE
API Server           v1.17.2   v1.17.4
Controller Manager   v1.17.2   v1.17.4
Scheduler            v1.17.2   v1.17.4
Kube Proxy           v1.17.2   v1.17.4
CoreDNS              1.6.5     1.6.5
Etcd                 3.4.3     3.4.3-0
You can now apply the upgrade by executing the following command:
kubeadm upgrade apply v1.17.4
Note: Before you can perform this upgrade, you have to update kubeadm to v1.17.4.
[root@master ~]#

[root@master ~]# kubectl -n kube-system get cm kubeadm-config -oyaml
apiVersion: v1
data:
  ClusterConfiguration: |
    apiServer:
      extraArgs:
        authorization-mode: Node,RBAC
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.18.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
  ClusterStatus: |
    apiEndpoints:
      master:
        advertiseAddress: 192.168.11.90
        bindPort: 6443
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterStatus
kind: ConfigMap
metadata:
  creationTimestamp: "2021-02-27T03:08:25Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:ClusterConfiguration: {}
        f:ClusterStatus: {}
    manager: kubeadm
    operation: Update
    time: "2021-02-27T03:08:25Z"
  name: kubeadm-config
  namespace: kube-system
  resourceVersion: "158"
  selfLink: /api/v1/namespaces/kube-system/configmaps/kubeadm-config
  uid: e7ba4014-ba35-47bd-b8fc-c4747c4b4603
[root@master ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Running cluster health checks
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.18.0
[upgrade/versions] kubeadm version: v1.18.0
I0328 01:13:38.358116 47799 version.go:252] remote version is much newer: v1.20.5; falling back to: stable-1.18
[upgrade/versions] Latest stable version: v1.18.17
[upgrade/versions] Latest stable version: v1.18.17
[upgrade/versions] Latest version in the v1.18 series: v1.18.17
[upgrade/versions] Latest version in the v1.18 series: v1.18.17
Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
Kubelet     3 x v1.18.0   v1.18.17
Upgrade to the latest version in the v1.18 series:
COMPONENT            CURRENT   AVAILABLE
API Server           v1.18.0   v1.18.17
Controller Manager   v1.18.0   v1.18.17
Scheduler            v1.18.0   v1.18.17
Kube Proxy           v1.18.0   v1.18.17
CoreDNS              1.6.7     1.6.7
Etcd                 3.4.3     3.4.3-0
You can now apply the upgrade by executing the following command:
kubeadm upgrade apply v1.18.17
Note: Before you can perform this upgrade, you have to update kubeadm to v1.18.17.
[root@master ~]# kubectl get node
NAME     STATUS                     ROLES    AGE   VERSION
master   Ready,SchedulingDisabled   master   28d   v1.18.0
node01   Ready                      <none>   28d   v1.18.0
node02   Ready                      <none>   28d   v1.18.0
[root@master ~]#
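In the outputs above, kubeadm upgrade plan proposes only the newest patch release of the cluster's current minor series, in line with the rule in the heading that minor versions cannot be skipped. A hypothetical helper, upgrade_allowed, sketches that rule as a pre-check before choosing a target version: it accepts a patch upgrade within the same minor series or a move to exactly the next minor version. It assumes plain vMAJOR.MINOR.PATCH strings without -rc or -beta suffixes.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if upgrading from CURRENT to TARGET stays within the
# same minor series (patch upgrade) or moves up exactly one minor version.
# Assumes plain vMAJOR.MINOR.PATCH or MAJOR.MINOR.PATCH version strings.

upgrade_allowed() {
  local cur=${1#v} tgt=${2#v}
  local cur_major cur_minor cur_patch tgt_major tgt_minor tgt_patch
  IFS=. read -r cur_major cur_minor cur_patch <<<"$cur"
  IFS=. read -r tgt_major tgt_minor tgt_patch <<<"$tgt"

  # Major versions must match
  [ "$cur_major" = "$tgt_major" ] || return 1
  if [ "$tgt_minor" -eq "$cur_minor" ]; then
    # Same minor series: only move forward (or stay) in patch level
    [ "$tgt_patch" -ge "$cur_patch" ]
  else
    # Different minor: must be exactly the next one
    [ "$tgt_minor" -eq $(( cur_minor + 1 )) ]
  fi
}
```

For example, upgrade_allowed v1.17.2 v1.17.4 and upgrade_allowed v1.17.4 v1.18.0 succeed, while upgrade_allowed v1.16.3 v1.18.0 fails.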

Upgrade

Upgrade the kubeadm, kubelet and kubectl packages:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

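To upgrade to a specific version instead of the latest one, append the version to each package name, for example kubeadm-1.17.4-0 (the same form used by the yum commands later in this section). Then reload systemd and restart kubelet: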

systemctl daemon-reload
systemctl restart kubelet.service
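The versioned package names follow the name-version-release pattern seen in the yum commands later in this section, such as kubeadm-1.18.1-0. A small helper (hypothetical name pinned_pkgs) can build the list for all three packages. The -0 release suffix is an assumption taken from those examples, so check yum list --showduplicates for the release that your repository actually carries.

```shell
#!/usr/bin/env bash
# Sketch: print pinned package names for kubelet, kubeadm and kubectl.
# VERSION may be given with or without a leading "v"; RELEASE defaults to 0,
# an assumption based on package names such as kubeadm-1.18.1-0.

pinned_pkgs() {
  local ver=${1#v} rel=${2:-0}
  echo "kubelet-$ver-$rel kubeadm-$ver-$rel kubectl-$ver-$rel"
}
```

Usage: yum install -y $(pinned_pkgs v1.17.4) --disableexcludes=kubernetes installs all three packages at 1.17.4. The $(...) is left unquoted on purpose so that the three names become separate arguments.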

[root@master ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.4", GitCommit:"8d8aa39598534325ad77120c120a22b3a990b5ea", GitTreeState:"clean", BuildDate:"2020-03-12T21:03:42Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.2", GitCommit:"59603c6e503c87169aea6106f57b9f242f64df89", GitTreeState:"clean", BuildDate:"2020-01-18T23:22:30Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}
[root@master ~]#

Do a dry run of the upgrade with the cluster's configuration file:

kubeadm upgrade apply v1.17.4 --config init.default.yaml --dry-run
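The dry run and the real upgrade can be chained so that the upgrade only runs when the dry run succeeds. A minimal sketch, assuming the same init.default.yaml file; the function name upgrade_after_dry_run and the KUBEADM override (added only so the function can be exercised without a cluster) are hypothetical. --yes is the kubeadm upgrade apply flag that skips the [y/N] confirmation seen in the transcript below.

```shell
#!/usr/bin/env bash
# Sketch: run `kubeadm upgrade apply --dry-run` first and perform the real
# upgrade only if the dry run exits successfully.
# KUBEADM lets the kubeadm binary be overridden (hypothetical, for testing).

upgrade_after_dry_run() {
  local version=$1 config=$2 kubeadm=${KUBEADM:-kubeadm}
  if ! "$kubeadm" upgrade apply "$version" --config "$config" --dry-run; then
    echo "dry run for $version failed; not upgrading" >&2
    return 1
  fi
  # --yes skips the interactive [y/N] confirmation prompt
  "$kubeadm" upgrade apply "$version" --config "$config" --yes
}
```

Usage: upgrade_after_dry_run v1.17.4 init.default.yaml. The --config warning printed by kubeadm in the transcript still applies.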

Upgrade to the specified version:

[root@master ~]# kubeadm upgrade apply v1.17.4 --config init.default.yaml
W0326 09:42:59.690575 25446 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0326 09:42:59.690611 25446 validation.go:28] Cannot validate kubelet config - no validator is available
[upgrade/config] Making sure the configuration is correct:
W0326 09:42:59.701115 25446 common.go:94] WARNING: Usage of the --config flag for reconfiguring the cluster during upgrade is not recommended!
W0326 09:42:59.701862 25446 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0326 09:42:59.701870 25446 validation.go:28] Cannot validate kubelet config - no validator is available
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade/version] You have chosen to change the cluster version to "v1.17.4"
[upgrade/versions] Cluster version: v1.17.2
[upgrade/versions] kubeadm version: v1.17.4
[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y
[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]
[upgrade/prepull] Prepulling image for component etcd.
[upgrade/prepull] Prepulling image for component kube-apiserver.
[upgrade/prepull] Prepulling image for component kube-controller-manager.
[upgrade/prepull] Prepulling image for component kube-scheduler.
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd
[upgrade/prepull] Prepulled image for component etcd.
[upgrade/prepull] Prepulled image for component kube-scheduler.
[upgrade/prepull] Prepulled image for component kube-apiserver.
[upgrade/prepull] Prepulled image for component kube-controller-manager.
[upgrade/prepull] Successfully prepulled the images for all the control plane components
[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.17.4"...
Static pod: kube-apiserver-master hash: 221485f981f15fee9c0123f34ca082cd
Static pod: kube-controller-manager-master hash: 8d9fdb8447a20709c28d62b361e21c5c
Static pod: kube-scheduler-master hash: 5fd6ddfbc568223e0845f80bd6fd6a1a
[upgrade/etcd] Upgrading to TLS for etcd
[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.17.4" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue
[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests668951726"
[upgrade/staticpods] Preparing for "kube-apiserver" upgrade
[upgrade/staticpods] Renewing apiserver certificate
[upgrade/staticpods] Renewing apiserver-kubelet-client certificate
[upgrade/staticpods] Renewing front-proxy-client certificate
[upgrade/staticpods] Renewing apiserver-etcd-client certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-apiserver.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-apiserver-master hash: 221485f981f15fee9c0123f34ca082cd
Static pod: kube-apiserver-master hash: 5fc5a9f3b46c1fd494c3e99e0c7d307c
[apiclient] Found 1 Pods for label selector component=kube-apiserver
[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade
[upgrade/staticpods] Renewing controller-manager.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-controller-manager.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-controller-manager-master hash: 8d9fdb8447a20709c28d62b361e21c5c
Static pod: kube-controller-manager-master hash: 31ffb42eb9357ac50986e0b46ee527f8
[apiclient] Found 1 Pods for label selector component=kube-controller-manager
[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-scheduler" upgrade
[upgrade/staticpods] Renewing scheduler.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-scheduler.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-scheduler-master hash: 5fd6ddfbc568223e0845f80bd6fd6a1a
Static pod: kube-scheduler-master hash: b265ed564e34d3887fe43a6a6210fbd4
[apiclient] Found 1 Pods for label selector component=kube-scheduler
[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[addons]: Migrating CoreDNS Corefile
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.17.4". Enjoy!
[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.
[root@master ~]#

The control-plane components were upgraded successfully.

Restart kubelet on the master node:

systemctl daemon-reload
systemctl restart kubelet

After a short wait, the master node reports the new version:

[root@master ~]# kubectl get pods -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-77c4b7448-9nrx2   1/1     Running   6          50d
calico-node-mc7s7                         0/1     Running   6          50d
calico-node-p8rm7                         1/1     Running   5          50d
calico-node-wwtkl                         1/1     Running   5          50d
coredns-9d85f5447-ht4c4                   1/1     Running   6          50d
coredns-9d85f5447-wvjlb                   1/1     Running   6          50d
etcd-master                               1/1     Running   6          50d
kube-apiserver-master                     1/1     Running   0          2m59s
kube-controller-manager-master            1/1     Running   0          2m55s
kube-proxy-5hdvx                          1/1     Running   0          2m1s
kube-proxy-7hdzf                          1/1     Running   0          2m7s
kube-proxy-v4hjt                          1/1     Running   0          2m17s
kube-scheduler-master                     1/1     Running   0          2m52s
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl restart kubelet
[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE   VERSION
master   NotReady   master   50d   v1.17.2
node01   Ready      <none>   50d   v1.17.2
node02   Ready      <none>   50d   v1.17.2
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   50d   v1.17.4
node01   Ready    <none>   50d   v1.17.2
node02   Ready    <none>   50d   v1.17.2
[root@master ~]#
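Instead of re-running kubectl get nodes by hand, the wait can be scripted. The sketch below (hypothetical name wait_for_kubelet_version; the KUBECTL and POLL_INTERVAL overrides and the 300-second default timeout are illustrative assumptions) polls the node's .status.nodeInfo.kubeletVersion field, which is the value shown in the VERSION column.

```shell
#!/usr/bin/env bash
# Sketch: poll until NODE reports kubelet version WANT, or give up after
# TIMEOUT seconds (default 300). Reads .status.nodeInfo.kubeletVersion,
# the field behind the VERSION column of `kubectl get nodes`.
# KUBECTL and POLL_INTERVAL are hypothetical overrides.

wait_for_kubelet_version() {
  local node=$1 want=$2 timeout=${3:-300} interval=${POLL_INTERVAL:-5}
  local kubectl=${KUBECTL:-kubectl} waited=0 got
  while :; do
    got=$("$kubectl" get node "$node" -o jsonpath='{.status.nodeInfo.kubeletVersion}' 2>/dev/null)
    [ "$got" = "$want" ] && return 0
    if [ "$waited" -ge "$timeout" ]; then
      echo "$node still reports ${got:-unknown}, expected $want" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}
```

Usage, after systemctl restart kubelet on the master: wait_for_kubelet_version master v1.17.4.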

Upgrade kubelet on the worker nodes:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
systemctl daemon-reload
systemctl restart kubelet.service
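The commands above only replace the packages and restart kubelet. The official kubeadm upgrade guide linked in this section additionally drains each worker, runs kubeadm upgrade node to update the node's kubelet configuration, and uncordons it afterwards. A sketch of that sequence, run from the master, is shown below. It assumes passwordless root SSH to each worker and the -0 package release; the function names run and upgrade_worker are hypothetical. With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: drain, upgrade and uncordon one worker node from the master.
# Assumptions: passwordless root SSH to the worker, the kubernetes yum repo
# configured there, and packages named <pkg>-<version>-0.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

upgrade_worker() {
  local node=$1 ver=${2#v}
  run kubectl drain "$node" --ignore-daemonsets
  # Upgrade kubeadm first, let it update the node config, then kubelet/kubectl
  run ssh "root@$node" "yum install -y kubeadm-$ver-0 --disableexcludes=kubernetes \
&& kubeadm upgrade node \
&& yum install -y kubelet-$ver-0 kubectl-$ver-0 --disableexcludes=kubernetes \
&& systemctl daemon-reload && systemctl restart kubelet"
  run kubectl uncordon "$node"
}
```

DRY_RUN=1 upgrade_worker node01 v1.17.4 prints the plan; without DRY_RUN the commands are executed. If pods on the node use emptyDir volumes, kubectl drain on v1.17 also needs --delete-local-data (renamed --delete-emptydir-data in later releases).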

Wait for the new node status to be recorded (the API server stores it in etcd); afterwards all nodes show as upgraded:

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   50d   v1.17.4
node01   Ready    <none>   50d   v1.17.2
node02   Ready    <none>   50d   v1.17.2
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   50d   v1.17.4
node01   Ready    <none>   50d   v1.17.4
node02   Ready    <none>   50d   v1.17.4
[root@master ~]#
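To confirm the result without reading the table by eye, the VERSION column can be checked mechanically. The awk-based function below is a sketch (hypothetical name nodes_not_at) that reads kubectl get nodes output, prints the nodes that do not report the expected version and exits non-zero if there are any. It assumes VERSION is the last column, as in the default output above.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes` output on stdin and print every node whose
# VERSION (last column) differs from the expected version.
# Exit status is 0 only when all nodes match.

nodes_not_at() {
  awk -v want="$1" '
    NR == 1 { next }                    # skip the header line
    $NF != want { print $1; bad = 1 }   # VERSION is the last column
    END { exit bad }'
}
```

Usage: kubectl get nodes | nodes_not_at v1.17.4 && echo "all nodes upgraded".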

Official documentation:

https://kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

yum list --showduplicates kubeadm --disableexcludes=kubernetes
yum install kubeadm-1.18.0-0 --disableexcludes=kubernetes
yum install kubelet-1.18.0-0 kubectl-1.18.0-0 --disableexcludes=kubernetes
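The first command lists every kubeadm build in the repository; picking the newest build in a given minor series can be automated. The sketch below (hypothetical name latest_in_series) assumes yum's three-column layout of name.arch, version and repository, and uses sort -V so that, for example, 1.18.10-0 sorts after 1.18.9-0.

```shell
#!/usr/bin/env bash
# Sketch: read `yum list --showduplicates kubeadm` output on stdin and print
# the newest version-release in the given minor series (e.g. 1.18).
# Assumes the "name.arch  version  repo" column layout of yum list.

latest_in_series() {
  local series=$1
  # Keep kubeadm rows whose version starts with "<series>."
  awk -v s="$series." '$1 ~ /^kubeadm\./ && index($2, s) == 1 { print $2 }' |
    sort -V | tail -n 1
}
```

Usage: ver=$(yum list --showduplicates kubeadm --disableexcludes=kubernetes | latest_in_series 1.18), then yum install -y "kubeadm-$ver" --disableexcludes=kubernetes.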

[root@master ~]# kubectl get pod -v=9
I0412 19:14:41.572613 118710 loader.go:375] Config loaded from file: /root/.kube/config
I0412 19:14:41.579296 118710 round_trippers.go:423] curl -k -v -XGET -H "User-Agent: kubectl/v1.18.0 (linux/amd64) kubernetes/9e99141" -H "Accept: application/json;as=Table;v=v1;g=meta.k8s.io,application/json;as=Table;v=v1beta1;g=meta.k8s.io,application/json" 'https://192.168.31.90:6443/api/v1/namespaces/default/pods?limit=500'
I0412 19:14:41.588188 118710 round_trippers.go:443] GET https://192.168.31.90:6443/api/v1/namespaces/default/pods?limit=500 200 OK in 8 milliseconds
I0412 19:14:41.588216 118710 round_trippers.go:449] Response Headers:
I0412 19:14:41.588220 118710 round_trippers.go:452]     Content-Type: application/json
I0412 19:14:41.588222 118710 round_trippers.go:452]     Content-Length: 2927
I0412 19:14:41.588224 118710 round_trippers.go:452]     Date: Sun, 12 Apr 2020 11:14:41 GMT
I0412 19:14:41.588317 118710 request.go:1068] Response Body: {"kind":"Table","apiVersion":"meta.k8s.io/v1","metadata":{"selfLink":"/api/v1/namespaces/default/pods","resourceVersion":"580226"},"columnDefinitions":[{"name":"Name","type":"string","format":"name","description":"Name must be unique within a namespace. Is required when creating resources, although some resources may allow a client to request the generation of an appropriate name automatically. Name is primarily intended for creation idempotence and configuration definition. Cannot be updated. More info: http://kubernetes.io/docs/user-guide/identifiers#names","priority":0},{"name":"Ready","type":"string","format":"","description":"The aggregate readiness state of this pod for accepting traffic.","priority":0},{"name":"Status","type":"string","format":"","description":"The aggregate status of the containers in this pod.","priority":0},{"name":"Restarts","type":"integer","format":"","description":"The number of times the containers in this pod have been restarted.","priority":0},{"name":"Age","type":"string","format":"","description":"CreationTimestamp is a timestamp representing the server time when this object was created. It is not guaranteed to be set in happens-before order across separate operations. Clients may not set this value. It is represented in RFC3339 form and is in UTC.\n\nPopulated by the system. Read-only. Null for lists. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata","priority":0},{"name":"IP","type":"string","format":"","description":"IP address allocated to the pod. Routable at least within the cluster. Empty if not yet allocated.","priority":1},{"name":"Node","type":"string","format":"","description":"NodeName is a request to schedule this pod onto a specific node. If it is non-empty, the scheduler simply schedules this pod onto that node, assuming that it fits resource requirements.","priority":1},{"name":"Nominated Node","type":"string","format":"","description":"nominatedNodeName is set only when this pod preempts other pods on the node, but it cannot be scheduled right away as preemption victims receive their graceful termination periods. This field does not guarantee that the pod will be scheduled on this node. Scheduler may decide to place the pod elsewhere if other nodes become available sooner. Scheduler may also decide to give the resources on this node to a higher priority pod that is created after preemption. As a result, this field may be different than PodSpec.nodeName when the pod is scheduled.","priority":1},{"name":"Readiness Gates","type":"string","format":"","description":"If specified, all readiness gates will be evaluated for pod readiness. A pod is ready when all its containers are ready AND all conditions specified in the readiness gates have status equal to \"True\" More info: https://git.k8s.io/enhancements/keps/sig-network/0007-pod-ready%2B%2B.md","priority":1}],"rows":[]}
No resources found in default namespace.
[root@master ~]#
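With -v=9, kubectl logs every API request it makes, including an equivalent curl command, the response headers and the full response body. The request summary line (method, URL and HTTP status) is often the most useful part, and it is already printed from -v=6 upward. A small filter, sketched below under the hypothetical name api_calls, extracts just those summaries. kubectl writes these logs to stderr, hence the 2>&1 in the usage example.

```shell
#!/usr/bin/env bash
# Sketch: extract "METHOD URL STATUS" summaries from kubectl's verbose log
# lines (e.g. the round_trippers.go line above). The curl line is not
# matched because its method is written as -XGET.

api_calls() {
  grep -oE '(GET|POST|PUT|PATCH|DELETE) https?://[^ ]+ [0-9]{3}'
}
```

Usage: kubectl get pod -v=9 2>&1 | api_calls.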

[root@master ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Running cluster health checks
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.18.0
[upgrade/versions] kubeadm version: v1.18.0
[upgrade/versions] Latest stable version: v1.18.1
[upgrade/versions] Latest stable version: v1.18.1
[upgrade/versions] Latest version in the v1.18 series: v1.18.1
[upgrade/versions] Latest version in the v1.18 series: v1.18.1
Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
Kubelet     3 x v1.18.0   v1.18.1
Upgrade to the latest version in the v1.18 series:
COMPONENT            CURRENT   AVAILABLE
API Server           v1.18.0   v1.18.1
Controller Manager   v1.18.0   v1.18.1
Scheduler            v1.18.0   v1.18.1
Kube Proxy           v1.18.0   v1.18.1
CoreDNS              1.6.7     1.6.7
Etcd                 3.4.3     3.4.3-0
You can now apply the upgrade by executing the following command:
kubeadm upgrade apply v1.18.1
Note: Before you can perform this upgrade, you have to update kubeadm to v1.18.1.
[root@master ~]#

[root@master ~]# yum install kubeadm-1.18.1-0 --disableexcludes=kubernetes
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
base | 3.6 kB 00:00:00
docker-ce-stable | 3.5 kB 00:00:00
Resolving Dependencies
--> Running transaction check
---> Package kubeadm.x86_64 0:1.18.0-0 will be updated
---> Package kubeadm.x86_64 0:1.18.1-0 will be an update
--> Finished Dependency Resolution
Dependencies Resolved
 Package   Arch     Version    Repository   Size
Updating:
 kubeadm   x86_64   1.18.1-0   kubernetes   8.8 M
Transaction Summary
Upgrade  1 Package
Total download size: 8.8 M
Is this ok [y/d/N]: y
Downloading packages:
Delta RPMs disabled because /usr/bin/applydeltarpm not installed.
a86b51d48af8df740035f8bc4c0c30d994d5c8ef03388c21061372d5c5b2859d-kubeadm-1.18.1-0.x86_64.rpm | 8.8 MB 00:00:01
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Updating   : kubeadm-1.18.1-0.x86_64   1/2
  Cleanup    : kubeadm-1.18.0-0.x86_64   2/2
  Verifying  : kubeadm-1.18.1-0.x86_64   1/2
  Verifying  : kubeadm-1.18.0-0.x86_64   2/2
Updated:
  kubeadm.x86_64 0:1.18.1-0
Complete!
[root@master ~]#

[root@master ~]# yum install kubelet-1.18.1-0 kubectl-1.18.1-0 --disableexcludes=kubernetes -y
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Resolving Dependencies
--> Running transaction check
---> Package kubectl.x86_64 0:1.18.0-0 will be updated
---> Package kubectl.x86_64 0:1.18.1-0 will be an update
---> Package kubelet.x86_64 0:1.18.0-0 will be updated
---> Package kubelet.x86_64 0:1.18.1-0 will be an update
--> Finished Dependency Resolution
Dependencies Resolved
 Package   Arch     Version    Repository   Size
Updating:
 kubectl   x86_64   1.18.1-0   kubernetes   9.5 M
 kubelet   x86_64   1.18.1-0   kubernetes   21 M
Transaction Summary
Upgrade  2 Packages
Total download size: 30 M
Downloading packages:
Delta RPMs disabled because /usr/bin/applydeltarpm not installed.
(1/2): 9b65a188779e61866501eb4e8a07f38494d40af1454ba9232f98fd4ced4ba935-kubectl-1.18.1-0.x86_64.rpm | 9.5 MB 00:00:02
(2/2): 39b64bb11c6c123dd502af7d970cee95606dbf7fd62905de0412bdac5e875843-kubelet-1.18.1-0.x86_64.rpm | 21 MB 00:00:03
Total 9.1 MB/s | 30 MB 00:00:03
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Updating   : kubectl-1.18.1-0.x86_64   1/4
  Updating   : kubelet-1.18.1-0.x86_64   2/4
  Cleanup    : kubectl-1.18.0-0.x86_64   3/4
  Cleanup    : kubelet-1.18.0-0.x86_64   4/4
  Verifying  : kubelet-1.18.1-0.x86_64   1/4
  Verifying  : kubectl-1.18.1-0.x86_64   2/4
  Verifying  : kubectl-1.18.0-0.x86_64   3/4
  Verifying  : kubelet-1.18.0-0.x86_64   4/4
Updated:
  kubectl.x86_64 0:1.18.1-0    kubelet.x86_64 0:1.18.1-0
Complete!
[root@master ~]#

[root@master ~]# kubeadm upgrade apply v1.18.1 --config init.default.yamlW0412 19:42:53.251523 28178 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io][upgrade/config] Making sure the configuration is correct:W0412 19:42:53.259480 28178 common.go:94] WARNING: Usage of the --config flag for reconfiguring the cluster during upgrade is not recommended!W0412 19:42:53.260162 28178 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io][preflight] Running pre-flight checks.[upgrade] Running cluster health checks[upgrade/version] You have chosen to change the cluster version to "v1.18.1"[upgrade/versions] Cluster version: v1.18.0[upgrade/versions] kubeadm version: v1.18.1[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd][upgrade/prepull] Prepulling image for component etcd.[upgrade/prepull] Prepulling image for component kube-apiserver.[upgrade/prepull] Prepulling image for component kube-controller-manager.[upgrade/prepull] Prepulling image for component kube-scheduler.[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd[upgrade/prepull] Prepulled image for component kube-apiserver.[upgrade/prepull] Prepulled image for component kube-controller-manager.[upgrade/prepull] Prepulled image for component kube-scheduler.[upgrade/prepull] Prepulled image for 
component etcd.[upgrade/prepull] Successfully prepulled the images for all the control plane components[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.18.1"...Static pod: kube-apiserver-master hash: b8bcd214f8b135af672715d5d8102bacStatic pod: kube-controller-manager-master hash: cfac61c0d2fc7bcd14585e430d31d4b3Static pod: kube-scheduler-master hash: ca2aa1b3224c37fa1791ef6c7d883bbe[upgrade/etcd] Upgrading to TLS for etcd[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.18.1" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests936160488"[upgrade/staticpods] Preparing for "kube-apiserver" upgrade[upgrade/staticpods] Renewing apiserver certificate[upgrade/staticpods] Renewing apiserver-kubelet-client certificate[upgrade/staticpods] Renewing front-proxy-client certificate[upgrade/staticpods] Renewing apiserver-etcd-client certificate[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-12-19-48-15/kube-apiserver.yaml"[upgrade/staticpods] Waiting for the kubelet to restart the component[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)Static pod: kube-apiserver-master hash: b8bcd214f8b135af672715d5d8102bac[upgrade/apply] FATAL: couldn't upgrade control plane. kubeadm has tried to recover everything into the earlier state. 
Errors faced: timed out waiting for the conditionTo see the stack trace of this error execute with --v=5 or higher[root@master ~]# kubeadm upgrade apply v1.18.1 --config init.default.yamlW0412 19:54:26.305065 46592 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io][upgrade/config] Making sure the configuration is correct:W0412 19:54:26.314312 46592 common.go:94] WARNING: Usage of the --config flag for reconfiguring the cluster during upgrade is not recommended!W0412 19:54:26.315231 46592 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io][preflight] Running pre-flight checks.[upgrade] Running cluster health checks[upgrade/version] You have chosen to change the cluster version to "v1.18.1"[upgrade/versions] Cluster version: v1.18.0[upgrade/versions] kubeadm version: v1.18.1[upgrade/confirm] Are you sure you want to proceed with the upgrade? 
[y/N]: y
[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]
[upgrade/prepull] Prepulling image for component etcd.
[upgrade/prepull] Prepulling image for component kube-apiserver.
[upgrade/prepull] Prepulling image for component kube-controller-manager.
[upgrade/prepull] Prepulling image for component kube-scheduler.
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd
[upgrade/prepull] Prepulled image for component kube-apiserver.
[upgrade/prepull] Prepulled image for component kube-controller-manager.
[upgrade/prepull] Prepulled image for component etcd.
[upgrade/prepull] Prepulled image for component kube-scheduler.
[upgrade/prepull] Successfully prepulled the images for all the control plane components
[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.18.1"...
Static pod: kube-apiserver-master hash: b8bcd214f8b135af672715d5d8102bac
Static pod: kube-controller-manager-master hash: cfac61c0d2fc7bcd14585e430d31d4b3
Static pod: kube-scheduler-master hash: ca2aa1b3224c37fa1791ef6c7d883bbe
[upgrade/etcd] Upgrading to TLS for etcd
[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.18.1" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue
[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests721958972"
[upgrade/staticpods] Preparing for "kube-apiserver" upgrade
[upgrade/staticpods] Renewing apiserver certificate
[upgrade/staticpods] Renewing apiserver-kubelet-client certificate
[upgrade/staticpods] Renewing front-proxy-client certificate
[upgrade/staticpods] Renewing apiserver-etcd-client certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-12-19-59-44/kube-apiserver.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-apiserver-master hash: b8bcd214f8b135af672715d5d8102bac
Static pod: kube-apiserver-master hash: 3199b786e3649f9b8b3aba73c0e5e082
[apiclient] Found 1 Pods for label selector component=kube-apiserver
[apiclient] Error getting Pods with label selector "component=kube-apiserver" [Get https://192.168.31.90:6443/api/v1/namespaces/kube-system/pods?labelSelector=component%3Dkube-apiserver: dial tcp 192.168.31.90:6443: connect: connection refused]
[apiclient] Error getting Pods with label selector "component=kube-apiserver" [Get https://192.168.31.90:6443/api/v1/namespaces/kube-system/pods?labelSelector=component%3Dkube-apiserver: dial tcp 192.168.31.90:6443: connect: connection refused]
[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade
[upgrade/staticpods] Renewing controller-manager.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-12-19-59-44/kube-controller-manager.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-controller-manager-master hash: cfac61c0d2fc7bcd14585e430d31d4b3
Static pod: kube-controller-manager-master hash: fbadd0eb537080d05354f06363a29760
[apiclient] Found 1 Pods for label selector component=kube-controller-manager
[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-scheduler" upgrade
[upgrade/staticpods] Renewing scheduler.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-12-19-59-44/kube-scheduler.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-scheduler-master hash: ca2aa1b3224c37fa1791ef6c7d883bbe
Static pod: kube-scheduler-master hash: 363a5bee1d59c51a98e345162db75755
[apiclient] Found 1 Pods for label selector component=kube-scheduler
[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.18.1". Enjoy!
[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.
[root@master ~]#
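The apply log is long, and the lines worth checking afterwards are the etcd "Non fatal issue" note and the final `[upgrade/successful]` banner. If the apply is run non-interactively (`kubeadm upgrade apply v1.18.1 -y 2>&1 | tee upgrade.log`), a small helper can pull those out of the saved log. This is a minimal sketch; `check_upgrade_log` and the log file name are hypothetical, and it only greps for the message texts seen in the transcript above.

```shell
#!/usr/bin/env bash
# check_upgrade_log FILE (hypothetical helper)
# Prints any "Non fatal issue" lines from a saved `kubeadm upgrade apply` log
# and returns 0 only if the success banner is present.
check_upgrade_log() {
  local log="$1"
  # Warnings such as the etcd "Won't downgrade etcd" note; no match is fine.
  grep 'Non fatal issue' "$log" || true
  if grep -q '^\[upgrade/successful\] SUCCESS!' "$log"; then
    echo "upgrade succeeded"
    return 0
  fi
  echo "upgrade did not report success" >&2
  return 1
}

# Example (assumed file name):
#   kubeadm upgrade apply v1.18.1 -y 2>&1 | tee /root/upgrade-v1.18.1.log
#   check_upgrade_log /root/upgrade-v1.18.1.log
```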

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   67d   v1.18.0
node01   Ready    <none>   67d   v1.18.0
node02   Ready    <none>   67d   v1.18.0
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl restart kubelet
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   67d   v1.18.0
node01   Ready    <none>   67d   v1.18.0
node02   Ready    <none>   67d   v1.18.0
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   67d   v1.18.1
node01   Ready    <none>   67d   v1.18.0
node02   Ready    <none>   67d   v1.18.0
[root@master ~]#
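As the transcript shows, the master's VERSION column only switched to v1.18.1 on a later `kubectl get nodes` after kubelet was restarted. Instead of re-running the command by hand, a generic retry loop can poll until the new version is reported. A minimal sketch; `wait_until` is a hypothetical helper, and the usage line assumes the node is named `master` as in this cluster (the VERSION column comes from `.status.nodeInfo.kubeletVersion`).

```shell
#!/usr/bin/env bash
# wait_until TIMEOUT INTERVAL CMD [ARGS...] (hypothetical helper)
# Re-runs CMD every INTERVAL seconds until it succeeds or TIMEOUT seconds pass.
# Returns 0 on success, 1 on timeout.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( $(date +%s) + timeout ))
  until "$@"; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
  return 0
}

# Example: poll every 5s, for up to 2 minutes, until master reports v1.18.1.
#   wait_until 120 5 sh -c \
#     'kubectl get node master -o jsonpath="{.status.nodeInfo.kubeletVersion}" | grep -qx v1.18.1'
```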

[root@node01 ~]# yum install kubeadm-1.18.1-0 kubelet-1.18.1-0 kubectl-1.18.1-0 --disableexcludes=kubernetes -y
Loaded plugins: fastestmirror
Determining fastest mirrors
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
base                                  | 3.6 kB  00:00:00
docker-ce-stable                      | 3.5 kB  00:00:00
epel                                  | 4.7 kB  00:00:00
extras                                | 2.9 kB  00:00:00
kubernetes/signature                  | 454 B   00:00:00
kubernetes/signature                  | 1.4 kB  00:00:00 !!!
updates                               | 2.9 kB  00:00:00
(1/5): epel/x86_64/group_gz           | 95 kB   00:00:00
(2/5): epel/x86_64/updateinfo         | 1.0 MB  00:00:00
(3/5): extras/7/x86_64/primary_db     | 165 kB  00:00:00
(4/5): kubernetes/primary             | 66 kB   00:00:00
(5/5): epel/x86_64/primary_db         | 6.8 MB  00:00:00
kubernetes 484/484
Resolving Dependencies
--> Running transaction check
---> Package kubeadm.x86_64 0:1.18.0-0 will be updated
---> Package kubeadm.x86_64 0:1.18.1-0 will be an update
---> Package kubectl.x86_64 0:1.18.0-0 will be updated
---> Package kubectl.x86_64 0:1.18.1-0 will be an update
---> Package kubelet.x86_64 0:1.18.0-0 will be updated
---> Package kubelet.x86_64 0:1.18.1-0 will be an update
--> Finished Dependency Resolution
Dependencies Resolved
=======================================================================================================================================
 Package         Arch         Version         Repository         Size
=======================================================================================================================================
Updating:
 kubeadm         x86_64       1.18.1-0        kubernetes         8.8 M
 kubectl         x86_64       1.18.1-0        kubernetes         9.5 M
 kubelet         x86_64       1.18.1-0        kubernetes         21 M
Transaction Summary
=======================================================================================================================================
Upgrade  3 Packages
Total download size: 39 M
Downloading packages:
Delta RPMs disabled because /usr/bin/applydeltarpm not installed.
(1/3): a86b51d48af8df740035f8bc4c0c30d994d5c8ef03388c21061372d5c5b2859d-kubeadm-1.18.1-0.x86_64.rpm | 8.8 MB 00:00:01
(2/3): 39b64bb11c6c123dd502af7d970cee95606dbf7fd62905de0412bdac5e875843-kubelet-1.18.1-0.x86_64.rpm | 21 MB 00:00:02
(3/3): 9b65a188779e61866501eb4e8a07f38494d40af1454ba9232f98fd4ced4ba935-kubectl-1.18.1-0.x86_64.rpm | 9.5 MB 00:00:04
---------------------------------------------------------------------------------------------------------------------------------------
Total                                 9.0 MB/s | 39 MB  00:00:04
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Updating   : kubectl-1.18.1-0.x86_64    1/6
  Updating   : kubelet-1.18.1-0.x86_64    2/6
  Updating   : kubeadm-1.18.1-0.x86_64    3/6
  Cleanup    : kubeadm-1.18.0-0.x86_64    4/6
  Cleanup    : kubectl-1.18.0-0.x86_64    5/6
  Cleanup    : kubelet-1.18.0-0.x86_64    6/6
  Verifying  : kubeadm-1.18.1-0.x86_64    1/6
  Verifying  : kubelet-1.18.1-0.x86_64    2/6
  Verifying  : kubectl-1.18.1-0.x86_64    3/6
  Verifying  : kubectl-1.18.0-0.x86_64    4/6
  Verifying  : kubelet-1.18.0-0.x86_64    5/6
  Verifying  : kubeadm-1.18.0-0.x86_64    6/6
Updated:
  kubeadm.x86_64 0:1.18.1-0    kubectl.x86_64 0:1.18.1-0    kubelet.x86_64 0:1.18.1-0
Complete!
[root@node01 ~]# systemctl daemon-reload
[root@node01 ~]# systemctl restart kubelet
[root@node01 ~]#
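On node01 the three packages were updated in one `yum install` and kubelet was restarted. The official kubeadm upgrade guide for worker nodes uses a slightly longer order: drain the node from the control plane, install the new kubeadm, run `kubeadm upgrade node` to refresh the local kubelet configuration, then upgrade kubelet and kubectl, restart kubelet, and uncordon. A minimal sketch of the on-node part, assuming the yum repo set up earlier; `run`, `upgrade_worker` and `DRY_RUN` are hypothetical names, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Run ON the worker (e.g. node01). Before it, from the control plane:
#   kubectl drain node01 --ignore-daemonsets
# After it, from the control plane:
#   kubectl uncordon node01

# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# upgrade_worker VERSION: VERSION is a yum version-release such as 1.18.1-0.
upgrade_worker() {
  local ver="$1"
  run yum install -y "kubeadm-${ver}" --disableexcludes=kubernetes
  run kubeadm upgrade node          # upgrade the local kubelet configuration
  run yum install -y "kubelet-${ver}" "kubectl-${ver}" --disableexcludes=kubernetes
  run systemctl daemon-reload
  run systemctl restart kubelet
}

# Preview the steps without changing anything:
#   DRY_RUN=1 upgrade_worker 1.18.1-0
```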

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   67d   v1.18.1
node01   Ready    <none>   67d   v1.18.1
node02   Ready    <none>   67d   v1.18.1
[root@master ~]#
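The final `kubectl get nodes` confirms that every node now reports v1.18.1. In a script the same check can be automated by reading `kubectl get nodes --no-headers`, whose fifth column is VERSION. A minimal sketch; `all_nodes_at` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# all_nodes_at VERSION (hypothetical helper)
# Reads `kubectl get nodes --no-headers` on stdin (NAME STATUS ROLES AGE VERSION).
# Prints each node whose VERSION differs from the target.
# Returns 0 if all match, 1 if any differ, 2 if no nodes were read.
all_nodes_at() {
  awk -v want="$1" '
    { n++ }
    $5 != want { print "pending: " $1 " is " $5; bad = 1 }
    END {
      if (n == 0) exit 2
      if (bad) exit 1
      exit 0
    }'
}

# Example:
#   kubectl get nodes --no-headers | all_nodes_at v1.18.1 && echo "all nodes on v1.18.1"
```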

Cross-version upgrade (to a newer minor release)

yum list kubelet kubeadm kubectl --showduplicates|sort -r
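The listing above prints every available build, newest first. To pick the newest patch release of one minor series (for example the latest 1.19.x), the `yum list` output can be filtered and version-sorted. A minimal sketch; `latest_patch` is a hypothetical helper that only looks at the kubeadm lines, and it relies on `sort -V` from GNU coreutils.

```shell
#!/usr/bin/env bash
# latest_patch SERIES (hypothetical helper)
# Reads `yum list ... --showduplicates` output on stdin and prints the highest
# kubeadm version-release in SERIES, e.g. 1.19 -> 1.19.x-0.
latest_patch() {
  # Column 1 is name.arch, column 2 is version-release (e.g. 1.19.2-0).
  awk -v s="$1." '$1 ~ /^kubeadm\./ && index($2, s) == 1 { print $2 }' \
    | sort -V | tail -n 1
}

# Example:
#   yum list kubeadm --showduplicates | latest_patch 1.19
```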

[root@master ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Running cluster health checks
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.18.16
[upgrade/versions] kubeadm version: v1.18.16
I0312 10:26:34.624848   24536 version.go:255] remote version is much newer: v1.20.4; falling back to: stable-1.18
[upgrade/versions] Latest stable version: v1.18.16
[upgrade/versions] Latest stable version: v1.18.16
[upgrade/versions] Latest version in the v1.18 series: v1.18.16
[upgrade/versions] Latest version in the v1.18 series: v1.18.16
Awesome, you're up-to-date! Enjoy!
[root@master ~]#
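The plan reports "up-to-date" because the newest release it found (v1.20.4) is two minor versions ahead of the installed kubeadm (v1.18.16), so kubeadm fell back to the stable-1.18 line. Skipping a minor release is not supported, so the path is v1.18 to v1.19 to v1.20, installing the matching kubeadm before each `kubeadm upgrade plan` / `kubeadm upgrade apply`. A minimal sketch of computing the next series; `next_minor` is hypothetical, and `TARGET` in the comments is a placeholder for the exact 1.19.x build you choose from `yum list`.

```shell
#!/usr/bin/env bash
# next_minor VERSION (hypothetical helper)
# Prints the minor series one step above VERSION, e.g. v1.18.16 -> 1.19.
next_minor() {
  local v="${1#v}"             # strip a leading "v"
  local major="${v%%.*}"       # "1"
  local rest="${v#*.}"         # "18.16"
  local minor="${rest%%.*}"    # "18"
  echo "${major}.$((minor + 1))"
}

# Sketch on the control-plane node (TARGET is a placeholder such as 1.19.x-0):
#   series=$(next_minor "$(kubeadm version -o short)")    # e.g. 1.19
#   yum list kubeadm --showduplicates | grep "$series"
#   yum install -y "kubeadm-${TARGET}" --disableexcludes=kubernetes
#   kubeadm upgrade plan
#   kubeadm upgrade apply "v${TARGET%-*}"
```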