Introduction

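A recent project deployment required OpenPose. For speed, I did not use any of the PyTorch reimplementations and instead used the original OpenPose repo, which is built on Caffe. I ran into many problems along the way and record them here.

GitHub code: https://github.com/CMU-Perceptual-Computing-Lab/openpose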

paper: https://ieeexplore.ieee.org/document/8765346

Environment: Ubuntu 18.04, built from the command line

CUDA: 11.1

cuDNN: 8.1.1

Installation

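On Windows, OpenPose can be installed from the portable release (https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/installation/0_index.md#windows-portable-demo). It can also be built from source, which is the usual route for further development and deployment.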

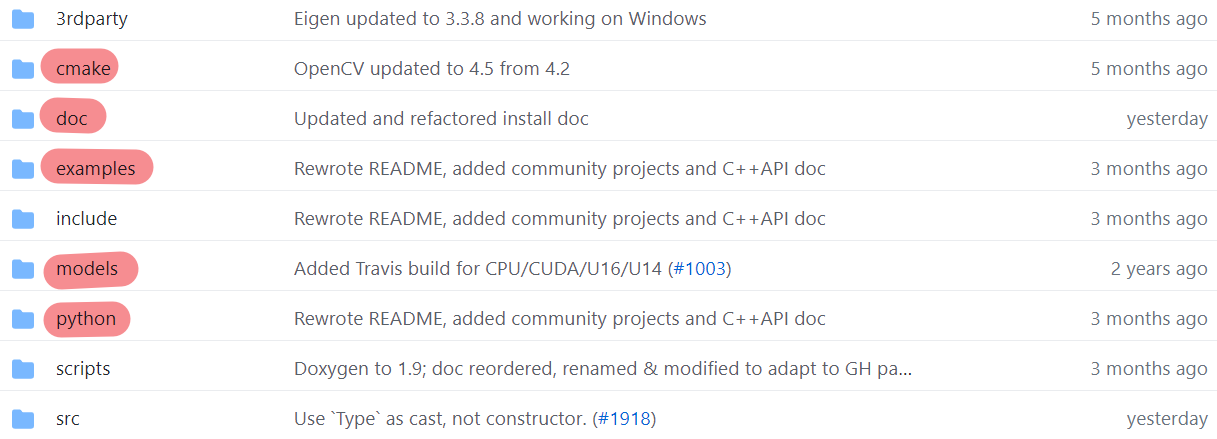

Project structure

Before installing, take a look at how the project is laid out.

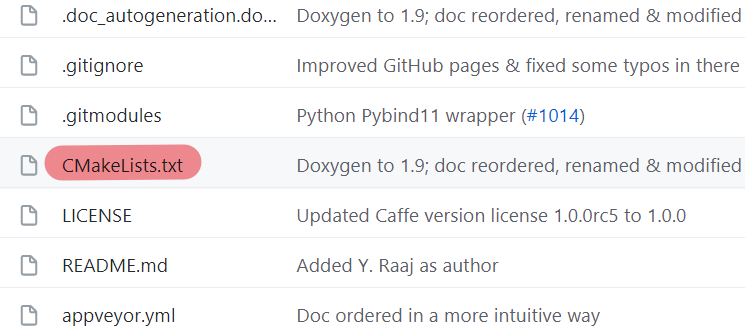

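The cmake folder holds the build-related scripts and usually needs no changes. Also related to cmake is CMakeLists.txt in the repo root, which contains all the build instructions and options that cmake executes.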

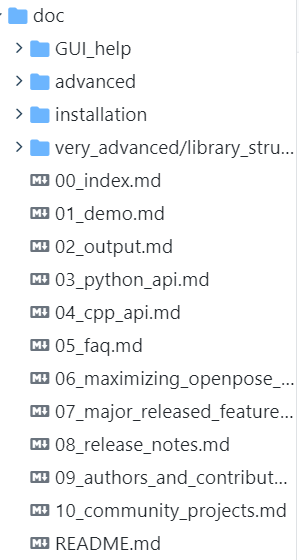

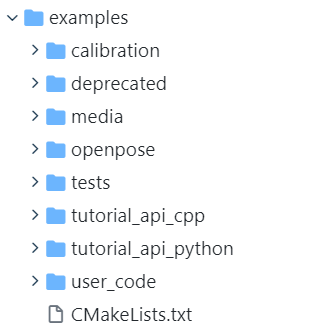

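doc contains the API, demo, and installation documentation. examples holds the sample programs; reading 03_python_api.md in doc alongside the Python files in tutorial_api_python is a quick way to get started with the Python API. models holds the pose, hand, and face models.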

Prerequisites

Follow https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/installation/1_prerequisites.md#ubuntu-prerequisites directly.

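Python environment

Anaconda is said to interfere with OpenPose, but in my testing it does not, as long as the same Python is used everywhere. Here I replaced the Python of the default environment.

Run whereis python

This prints a long list: every Python installed on the machine.

Then point the default python at the version you want: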

sudo ln -s

Run python to check the result.

Here I am using the Python from an Anaconda environment that I chose.
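To confirm which interpreter the `python` command actually resolves to after relinking, a small check along these lines may help. It is only a sketch: `describe_python` is a hypothetical helper name, and it relies on nothing beyond the standard library.

```python
import os
import shutil
import sys


def describe_python(command="python"):
    """Report where `command` lives on PATH and what it resolves to."""
    found = shutil.which(command)  # None if the command is not on PATH
    return {
        "command": command,
        "path_on_PATH": found,
        # realpath follows symlinks such as the one created with `ln -s`
        "resolved": os.path.realpath(found) if found else None,
        # The interpreter running this script, for comparison
        "running": os.path.realpath(sys.executable) if sys.executable else None,
    }


info = describe_python()
for key, value in info.items():
    print(f"{key}: {value}")
```

If `resolved` and `running` differ, the shell and the script are using different Pythons, which is the situation the build step later trips over.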

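CMake

The first problem: the OpenPose instructions default to cmake-gui, but my environment has no graphical interface, so I used cmake from the command line throughout.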

sudo apt-get install cmake

All the options are listed in: https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/CMakeLists.txt

CUDA & cuDNN

Next, check that CUDA and cuDNN are installed, and download them if they are missing.

https://developer.nvidia.com/cuda-11.1.1-download-archive

https://developer.nvidia.com/rdp/cudnn-archive
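A rough way to check the installed versions from Python is sketched below. The assumptions: `nvcc` is on PATH, and the cuDNN 8 version macros live in a `cudnn_version.h` header whose location depends on how cuDNN was installed (you have to supply the path yourself). The helper names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Extract (major, minor) from `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def installed_cuda_release():
    """Return the CUDA release reported by nvcc, or None if nvcc is absent."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"],
                            capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


def parse_cudnn_version(header_text):
    """Extract (major, minor, patch) from the text of cudnn_version.h, or None."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


print("CUDA release:", installed_cuda_release())
```

To check cuDNN, read the header file from wherever it is installed on your machine and pass its contents to `parse_cudnn_version`.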

Other dependencies

OpenCL: sudo apt-get install libviennacl-dev

OpenCV: sudo apt-get install libopencv-dev

Caffe: sudo bash ./scripts/ubuntu/install_deps.sh

Build

First download the source; this step causes no problems.

Then build. The instructions use cmake-gui, which I had to replace with cmake; see https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/installation/2_additional_settings.md#cmake-command-line-configuration-ubuntu-only

I also want the Python API, so -DBUILD_PYTHON=ON must be set:

mkdir build && cd build && cmake -DBUILD_PYTHON=ON -DPYTHON_EXECUTABLE=/usr/bin/python -DPYTHON_LIBRARY=/home/***/software/anaconda3/lib/libpython3.8.so .. && make -j `nproc`

To rebuild later from inside build:

cd .. && rm -r build || true && mkdir build && cd build && cmake -DBUILD_PYTHON=ON -DPYTHON_EXECUTABLE=/usr/bin/python -DPYTHON_LIBRARY=/home/***/software/anaconda3/lib/libpython3.8.so .. && make -j `nproc`
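The `-DPYTHON_EXECUTABLE` and `-DPYTHON_LIBRARY` values in the commands above have to describe the same interpreter. One way to reduce guesswork is to ask the interpreter itself, as in the sketch below. It assumes that `sysconfig` reports a usable `LIBDIR` and shared-library name for your Python build, which is not guaranteed (some builds are static); `python_cmake_flags` is a hypothetical helper.

```python
import os
import sys
import sysconfig


def python_cmake_flags():
    """Build cmake flags for the interpreter that runs this script."""
    flags = ["-DBUILD_PYTHON=ON", f"-DPYTHON_EXECUTABLE={sys.executable}"]
    libdir = sysconfig.get_config_var("LIBDIR")
    # INSTSONAME is usually the shared library; fall back to LDLIBRARY
    libname = (sysconfig.get_config_var("INSTSONAME")
               or sysconfig.get_config_var("LDLIBRARY"))
    if libdir and libname:
        candidate = os.path.join(libdir, libname)
        # Only emit the flag if the file really exists on disk
        if os.path.exists(candidate):
            flags.append(f"-DPYTHON_LIBRARY={candidate}")
    return flags


print(" ".join(python_cmake_flags()))
```

Run it with the Python you intend to use for OpenPose and compare the printed flags with the ones passed to cmake.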

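At this point the build either succeeds or fails with all kinds of errors.

These are the ones I hit:

- CUDA and cuDNN versions that do not match, or are missing

- The Python in Anaconda differing from the default Python; switch it as described above

- Caffe or OpenCV missing

Run the tests in the demo (https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/01_demo.md) to check that the build succeeded.

Use https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/03_python_api.md to check that the Python API works.

Because there is no monitor here, the display has to be turned off: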

python 01_body_from_image.py --display 0
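Before running the Python examples, it can be worth checking that the build actually produced the Python module. The sketch below assumes the compiled module lands in `build/python/openpose` (the same directory that gets appended to `sys.path` later in this post) and that its file name starts with `pyopenpose` and ends in `.so` on Linux; `find_pyopenpose` is a hypothetical helper.

```python
from pathlib import Path


def find_pyopenpose(build_dir):
    """List compiled pyopenpose modules under <build_dir>/python/openpose."""
    module_dir = Path(build_dir) / "python" / "openpose"
    # glob yields nothing if the directory does not exist
    return sorted(str(p) for p in module_dir.glob("pyopenpose*.so"))


matches = find_pyopenpose("build")
print(matches if matches else "pyopenpose not found; was -DBUILD_PYTHON=ON set?")
```

An empty result usually means the build ran without `-DBUILD_PYTHON=ON` or against a different Python than expected.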

Using the OpenPose Python API

Calling the API

For the main flags, see https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/01_demo.md#main-flags

All flags are listed at https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/advanced/demo_advanced.md#all-flags

To call OpenPose from another directory, add the build output to the module search path with sys.path.append('path_openpose/build/python/openpose')

and set the model path in params: params["model_folder"]=<path_openpose/models/>

The following two classes are the key ones when calling the API:
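These two settings can be derived from a single OpenPose root directory. A minimal sketch, assuming the directory layout described above; `openpose_paths` and `load_pyopenpose` are hypothetical helpers, and `/opt/openpose` is a placeholder path.

```python
import sys
from pathlib import Path


def openpose_paths(openpose_root):
    """Return (python module dir, model folder) for an OpenPose checkout."""
    root = Path(openpose_root)
    module_dir = str(root / "build" / "python" / "openpose")
    model_folder = str(root / "models") + "/"
    return module_dir, model_folder


def load_pyopenpose(openpose_root):
    """Append the module dir to sys.path and import pyopenpose, or return None."""
    module_dir, _ = openpose_paths(openpose_root)
    if module_dir not in sys.path:
        sys.path.append(module_dir)
    try:
        import pyopenpose as op
    except ImportError:
        return None  # not built, or built against a different Python
    return op


module_dir, model_folder = openpose_paths("/opt/openpose")  # placeholder root
params = {"model_folder": model_folder}
print(module_dir, params)
```

With a real checkout, `op = load_pyopenpose(root)` followed by `op.WrapperPython()` would then proceed as in the official Python examples.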

Wrapper class: https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/include/openpose/wrapper/wrapper.hpp

Datum Class: https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/include/openpose/core/datum.hpp

The C++ classes wrapped for Python:

https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/python/openpose/openpose_python.cpp#L194

Code template

    import sys
    import cv2
    import glob
    sys.path.append('/home/***/workspace/2dpose/openpose/build/python/openpose')
    import pyopenpose as op
    import argparse
    # Project-specific imports from my own codebase (not part of OpenPose)
    import _init_paths
    from _init_paths import get_path
    from utils.utilitys import plot_keypoint, PreProcess, write, load_json
    from config import cfg, update_config
    from utils.transforms import *
    from utils.inference import get_final_preds
    import models
    import numpy as np
    from tqdm import tqdm
    sys.path.pop(0)
    import logging
    logging.basicConfig(filename='logger.log', level=logging.CRITICAL)

    class Openpose_Human():
        def __init__(self):
            super(Openpose_Human, self).__init__()
            self.params = dict()
            self.params["model_folder"] = "/home/zjl/workspace/2dpose/openpose/models/"
            self.params['model_pose'] = 'COCO'
            self.params['num_gpu'] = 1
            self.params['num_gpu_start'] = 0
            self.params['display'] = 0
            # Starting OpenPose
            self.opWrapper = op.WrapperPython()
            self.opWrapper.configure(self.params)
            self.opWrapper.start()
            self.datum = op.Datum()

        def gen_image_kpts(self, img):
            self.datum.cvInputData = img
            # Process
            self.opWrapper.emplaceAndPop(op.VectorDatum([self.datum]))
            return self.datum.poseKeypoints

        def gen_video_kpts(self, video, max_num_person=256):
            cap = cv2.VideoCapture(video)
            assert cap.isOpened(), 'Cannot capture source'
            video_length = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
            # Collect keypoint coordinates
            print('Generating 2D pose ...')
            kpts_result = []
            scores_result = []
            coco_order_from_openpose = [0, 15, 14, 17, 16, 5, 2, 6, 3, 7, 4, 11, 8, 12, 9, 13, 10]
            for i in tqdm(range(video_length)):
                ret, frame = cap.read()
                if not ret:
                    continue
                kpts_op = self.gen_image_kpts(frame)  # shape: [num_person, 18, 3 (xy + score)]
                # logging.critical('kpts_op shape: ' + str(kpts_op.shape))
                kpts = np.zeros((max_num_person, 17, 2), dtype=np.float32)
                scores_kpts = np.zeros((max_num_person, 17), dtype=np.float32)
                # poseKeypoints may be None or empty when nobody is detected;
                # such frames keep their all-zero rows
                if kpts_op is not None and kpts_op.ndim == 3:
                    num_person = min(len(kpts_op), max_num_person)
                    kpts[:num_person, :, :] = kpts_op[:num_person, coco_order_from_openpose, :-1]
                    scores_kpts[:num_person, :] = kpts_op[:num_person, coco_order_from_openpose, -1]
                kpts_result.append(kpts)
                scores_result.append(scores_kpts)
            cap.release()
            keypoints = np.array(kpts_result)
            scores = np.array(scores_result)
            # logging.critical('keypoints shape: ' + str(keypoints.shape))
            # logging.critical('scores shape: ' + str(scores.shape))
            keypoints = keypoints.transpose(1, 0, 2, 3)  # (T, M, N, 2) --> (M, T, N, 2)
            scores = scores.transpose(1, 0, 2)  # (T, M, N) --> (M, T, N)
            return keypoints, scores

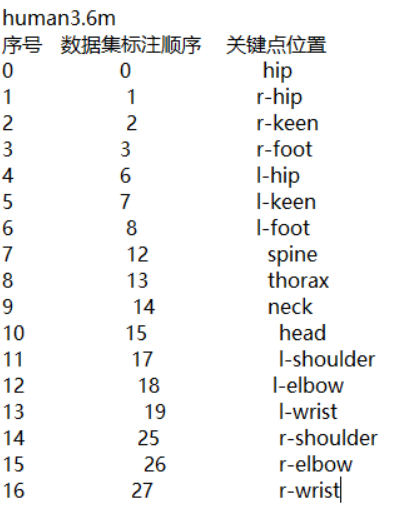

Output format

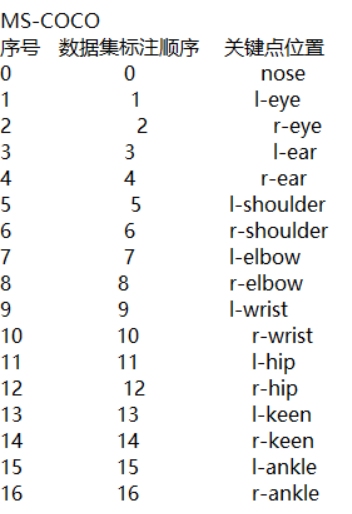

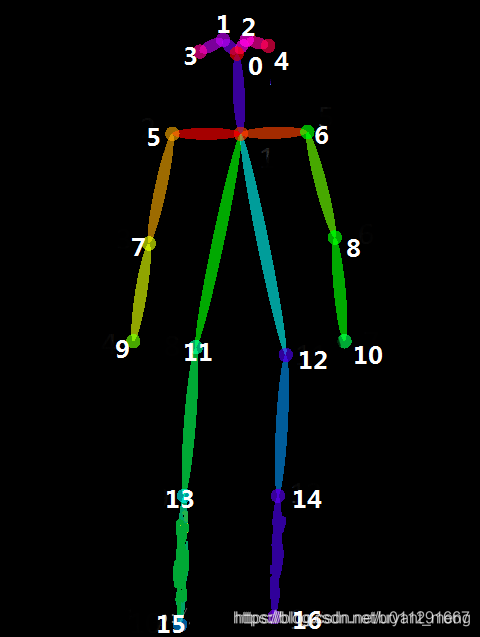

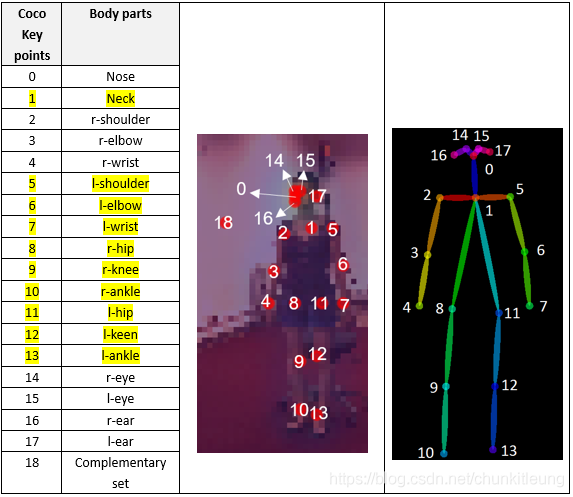

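The document https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/02_output.md describes OpenPose's COCO-style output, but it differs from the conventional COCO output.

Left: COCO annotation. Right: OpenPose annotation.

OpenPose's pose estimation is trained on the COCO dataset. COCO has 17 keypoints; OpenPose adds one more, a midpoint generated from the two shoulders that represents the neck. This is the body-center point numbered 1 in the OpenPose figure.

Getting the keypoint order wrong can cost you dearly.

Converting the keypoints produced by OpenPose to the COCO annotation order: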
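The geometric idea of the extra neck point can be written out as a midpoint of the two shoulders. This is only an illustration of that idea in plain Python, not a claim about how OpenPose computes the point internally; `neck_from_shoulders` is a hypothetical helper.

```python
def neck_from_shoulders(left_shoulder, right_shoulder):
    """Return the midpoint (x, y) of two shoulder keypoints."""
    (lx, ly), (rx, ry) = left_shoulder, right_shoulder
    return ((lx + rx) / 2.0, (ly + ry) / 2.0)


# Example pixel coordinates, chosen only for illustration
neck = neck_from_shoulders((100.0, 50.0), (60.0, 54.0))
print(neck)
```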

    keypoints = datum.poseKeypoints  # shape: [num_person, 18, 3 (x, y, score)]
    # coco_order_from_openpose = [0, 15, 14, 17, 16, 5, 2, 6, 3, 7, 4, 11, 8, 12, 9, 13, 10]
    coco_order_from_openpose = [0, 14, 15, 16, 17, 2, 5, 3, 6, 4, 7, 8, 11, 9, 12, 10, 13]
    # Reorder along the joint axis (axis 1) and drop the score channel
    coco_keypoints = keypoints[:, coco_order_from_openpose, :-1]
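Rather than typing the index list by hand, it can be derived from joint names, which makes the left/right convention explicit. The sketch below is my own transcription of the two orders (the OpenPose 18-keypoint COCO model as listed in 02_output.md, and the standard COCO order with left before right), so check both name lists against the figures above before relying on them. Under these assumptions the derived list equals the commented-out one; the other list has left and right swapped, so which one is appropriate depends on the convention your downstream code expects.

```python
import numpy as np

# Joint names in the order of OpenPose's 18-keypoint COCO model (assumed)
OPENPOSE_COCO18 = [
    "nose", "neck",
    "right_shoulder", "right_elbow", "right_wrist",
    "left_shoulder", "left_elbow", "left_wrist",
    "right_hip", "right_knee", "right_ankle",
    "left_hip", "left_knee", "left_ankle",
    "right_eye", "left_eye", "right_ear", "left_ear",
]

# Joint names in the standard COCO annotation order (17 keypoints)
COCO17 = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def coco_order_from_openpose():
    """For each COCO joint, the index of the same joint in OpenPose output."""
    return [OPENPOSE_COCO18.index(name) for name in COCO17]


def openpose_to_coco(keypoints):
    """Split [num_person, 18, 3] keypoints into COCO-ordered xy and scores."""
    keypoints = np.asarray(keypoints)
    order = coco_order_from_openpose()
    xy = keypoints[:, order, :2]      # [num_person, 17, 2]
    scores = keypoints[:, order, 2]   # [num_person, 17]
    return xy, scores


# Dummy input: two people, each joint's OpenPose index stored in the score slot
dummy = np.zeros((2, 18, 3), dtype=np.float32)
dummy[:, :, 2] = np.arange(18)
xy, scores = openpose_to_coco(dummy)
print(coco_order_from_openpose())
print(xy.shape, scores.shape)
```

The neck (OpenPose index 1) has no COCO counterpart and is dropped by the reordering.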