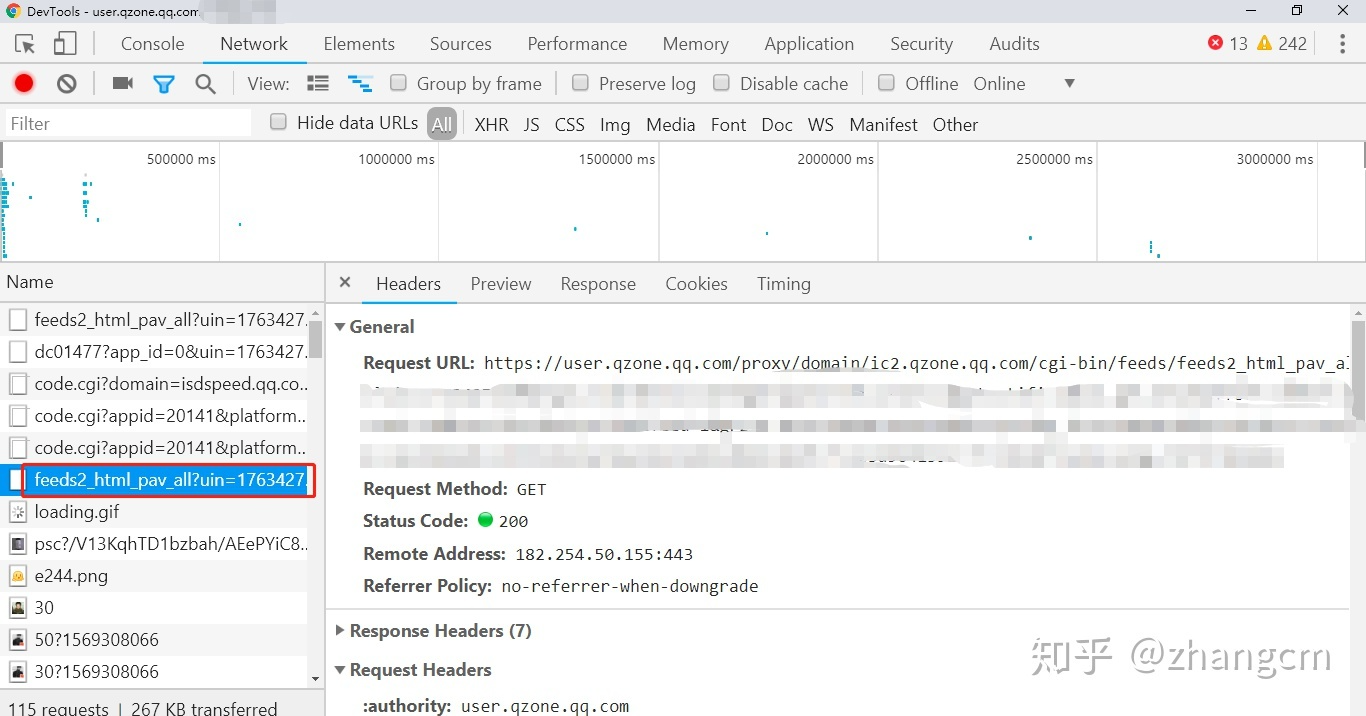

1.Cookie登录

直接去浏览器F12复制粘贴Cookie即可,只需在headers里面加入”Cookie”

2.多线程下载

最方便的多线程调用外部含参方法,每个线程传入的参数都不同

def func(id):os.makedirs(out_path+str(id*1000), exist_ok=True) # 输出目录for i in range(id*1000, (id+1)*1000):downloadfeed(i, './dynamics/'+str(id*1000)+'/')print(str(i))for i in range(0,16):t=threading.Thread(target=func,args=(i,)) # 此处格式需注意'''target=右边写方法名,不加括号,args=右边写传入方法的参数,最后要多加一个逗号'''t.start()

以下完整代码:分了16个线程,分的线程越多,下载速度越快

import osimport requestsimport threadingimport timeout_path = './dynamics/'cookie = 'pgv_pvid=1766022180; ptcz=d8ab098bd2c8905001b854755a03c3b97807c29b1d6f0dcf1ce7c0b7397eb355; RK=D+JFl04PN0; ptui_loginuin=1311738213; _qpsvr_localtk=0.44438202147946215; pgv_info=ssid=s9187442548; uin=o1311738213; skey=@VW09dxbUs; qz_screen=1366x768; 1311738213_todaycount=0; 1311738213_totalcount=25636; cpu_performance_v8=28; Loading=Yes; __Q_w_s__QZN_TodoMsgCnt=1; QZ_FE_WEBP_SUPPORT=0; p_uin=o1311738213; pt4_token=BpahtF7G7mhfAwMt-16gXq8WfAM-ywr9v3FTo--9VvM_; p_skey=12hehjj8HdNcNZt3hAmFTmBZF3apwcgcS--PW2bOy0k_'def download(file_path, url):headers = {"Cookie": cookie,"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE",}r = requests.get(url, headers=headers)if(len(r.content) > 100):with open(file_path, 'wb') as f:f.write(r.content)def downloadfeed(offset, path):left_url = 'https://user.qzone.qq.com/proxy/domain/ic2.qzone.qq.com/cgi-bin/feeds/feeds2_html_pav_all?uin=1311738213&begin_time=0&end_time=0&getappnotification=1&getnotifi=1&has_get_key=0&offset='right_url = '&set=0&count=10&useutf8=1&outputhtmlfeed=1&grz=0.8910795355675938&scope=1&g_tk=1626987046'url = url = left_url + str(offset) + right_urlfile_path = path + str(offset) + '.txt'download(file_path, url)def func(id):os.makedirs(out_path+str(id*1000), exist_ok=True) # 输出目录for i in range(id*1000, (id+1)*1000):downloadfeed(i, './dynamics/'+str(id*1000)+'/')print(str(i))for i in range(0,16):t=threading.Thread(target=func,args=(i,))t.start()

此脚本用于爬取所有QQ空间“关于我的”消息,直接爬到天荒地老。前提是需要先登录。

原理参考链接:https://www.zhihu.com/question/340649774/answer/1277723623

简单记录步骤:

打开“关于我的”页面下拉加载,找到上图数据,复制网址修改offset=的值即可,值越大距时间越早