# Compiling the LZO Library

- Download the LZO source package from the official website.

Install Maven.

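Point Maven's repository at the Aliyun mirror by adding the following inside the `<settings>` element of Maven's `settings.xml`: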

`<mirrors><mirror><id>nexus-aliyun</id><mirrorOf>central</mirrorOf><name>Nexus aliyun</name><url>http://maven.aliyun.com/nexus/content/groups/public</url></mirror></mirrors>`
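If you would rather not edit the file by hand, the mirror entry can be written with a heredoc. This is a minimal sketch: `write_maven_settings` is a hypothetical helper, it targets the user-level `~/.m2/settings.xml` by default, and it overwrites whatever that file already contains, so merge by hand if you have existing settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Maven settings.xml that routes
# "central" through the Aliyun mirror used in this guide.
# Usage: write_maven_settings [target-file]   (default: ~/.m2/settings.xml)
# WARNING: overwrites the target file.
write_maven_settings() {
  local target="${1:-$HOME/.m2/settings.xml}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
<settings>
  <mirrors>
    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>central</mirrorOf>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </mirror>
  </mirrors>
</settings>
EOF
}
```

Running `write_maven_settings` with no argument writes `~/.m2/settings.xml`, which Maven reads in addition to the global `conf/settings.xml`.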

Configure the environment variables so that Maven's `bin` directory is on `PATH`.
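A minimal sketch of those variables, assuming Maven was unpacked to `/opt/module/apache-maven` (a hypothetical path chosen to sit next to the `/opt/module/hadoop-2.7.2` install used later; change it to your own location):

```shell
#!/usr/bin/env bash
# Sketch: make the mvn command available in the current shell.
# /opt/module/apache-maven is a hypothetical install path; point MAVEN_HOME
# at wherever you actually unpacked Maven.
export MAVEN_HOME="${MAVEN_HOME:-/opt/module/apache-maven}"

# Prepend $MAVEN_HOME/bin to PATH only if it is not already there,
# so sourcing this file twice does not duplicate the entry.
case ":$PATH:" in
  *":$MAVEN_HOME/bin:"*) ;;
  *) export PATH="$MAVEN_HOME/bin:$PATH" ;;
esac
```

To make this permanent, append the lines to `/etc/profile` (or `~/.bashrc`), run `source /etc/profile`, and check with `mvn -v`, which should print the Maven version.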

- Install the build dependencies via yum: `yum -y install gcc-c++ lzo-devel zlib-devel autoconf automake libtool`

- From the unpacked LZO source directory, generate the Makefile: `./configure --prefix=/usr/local/hadoop/lzo/`

- Compile: `make`

- Install: `make install`
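The four steps above can be wrapped in one function. This is a sketch, not a required script: `build_lzo` and `run` are hypothetical helpers, the source directory is whatever you unpacked the LZO tarball into, and by default every command is only printed; set `DRY_RUN=0` to actually execute them (as root, since `yum` needs it).

```shell
#!/usr/bin/env bash
# Echo each command; execute it only when DRY_RUN=0 (default: print only).
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}

# Usage: build_lzo <unpacked-lzo-source-dir> [install-prefix]
# The body runs in a subshell so `cd` does not change the caller's
# working directory.
build_lzo() (
  src="$1"
  prefix="${2:-/usr/local/hadoop/lzo/}"
  run yum -y install gcc-c++ lzo-devel zlib-devel autoconf automake libtool || exit 1
  run cd "$src" || exit 1
  run ./configure --prefix="$prefix" || exit 1
  run make || exit 1
  run make install
)
```

Calling `build_lzo /path/to/lzo-source` first prints the plan; `DRY_RUN=0 build_lzo /path/to/lzo-source` runs it and stops at the first failing step.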

So far we have only installed the LZO library itself; next we compile and install hadoop-lzo, which depends on it.
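Before moving on, it can be worth confirming that `make install` put the headers and library under the prefix that `C_INCLUDE_PATH` and `LIBRARY_PATH` will point at in the next section. `check_lzo_install` is a hypothetical helper; the header `lzo/lzo1x.h` and the library name `liblzo2` are those of LZO 2.x, and the library check accepts either a static or a shared build.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check an LZO install prefix.
# Usage: check_lzo_install [prefix]   (default: /usr/local/hadoop/lzo)
check_lzo_install() {
  local prefix="${1:-/usr/local/hadoop/lzo}"
  # LZO 2.x installs its headers under include/lzo/
  if [ ! -f "$prefix/include/lzo/lzo1x.h" ]; then
    echo "missing header: $prefix/include/lzo/lzo1x.h" >&2
    return 1
  fi
  # Static (.a) or shared (.so*) depending on configure flags
  if ! ls "$prefix"/lib/liblzo2.* >/dev/null 2>&1; then
    echo "missing library: $prefix/lib/liblzo2.*" >&2
    return 1
  fi
  echo "LZO looks installed under $prefix"
}
```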

# Hadoop-LZO

- Download hadoop-lzo from its official site.

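- After unpacking, edit `pom.xml` and set the Hadoop version to match your cluster: `<hadoop.current.version>2.7.2</hadoop.current.version>`

- Declare two environment variables pointing at the LZO installed above: `export C_INCLUDE_PATH=/usr/local/hadoop/lzo/include` and `export LIBRARY_PATH=/usr/local/hadoop/lzo/lib`

- Build, skipping the tests: `mvn package -Dmaven.test.skip=true`

- Copy the generated `target/hadoop-lzo-0.4.20.jar` into `share/hadoop/common/` under the Hadoop root directory.

- Distribute the jar to the other nodes (if there are any).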

- Configure `core-site.xml` by adding the following properties inside `<configuration>`:

```xml
<property>
    <name>io.compression.codecs</name>
    <value>
        org.apache.hadoop.io.compress.GzipCodec,
        org.apache.hadoop.io.compress.DefaultCodec,
        org.apache.hadoop.io.compress.BZip2Codec,
        org.apache.hadoop.io.compress.SnappyCodec,
        com.hadoop.compression.lzo.LzoCodec,
        com.hadoop.compression.lzo.LzopCodec
    </value>
</property>

<property>
    <name>io.compression.codec.lzo.class</name>
    <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
```

- Distribute `core-site.xml` to all nodes and restart them.
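The steps above can be scripted roughly as follows. This is a sketch under several assumptions: `HADOOP_HOME` defaults to the `/opt/module/hadoop-2.7.2` path used in the test section, Hadoop lives at the same path on every node, the worker host names `hadoop3` and `hadoop4` are hypothetical (only `hadoop2` appears in the logs), and passwordless SSH is already set up for `scp`. All function names are made up for illustration, and each command is only printed unless `DRY_RUN=0` is set, so the plan can be reviewed before touching the cluster.

```shell
#!/usr/bin/env bash
HADOOP_HOME="${HADOOP_HOME:-/opt/module/hadoop-2.7.2}"
NODES="${NODES:-hadoop3 hadoop4}"   # hypothetical worker host names
LZO_JAR=hadoop-lzo-0.4.20.jar       # version built in this guide

# Echo each command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}

# Rewrite <hadoop.current.version> in a pom.xml (keeps a .bak copy).
set_hadoop_version() {
  sed -i.bak "s|<hadoop.current.version>[^<]*</hadoop.current.version>|<hadoop.current.version>$2</hadoop.current.version>|" "$1"
}

# Build hadoop-lzo from an unpacked source dir and copy the jar into Hadoop.
# Subshell body: the cd and the exports do not leak into the caller.
build_hadoop_lzo() (
  run cd "$1" || exit 1
  run set_hadoop_version pom.xml 2.7.2 || exit 1
  # Point the native build at the LZO installed in the previous section
  export C_INCLUDE_PATH=/usr/local/hadoop/lzo/include
  export LIBRARY_PATH=/usr/local/hadoop/lzo/lib
  run mvn package -Dmaven.test.skip=true || exit 1
  run cp "target/$LZO_JAR" "$HADOOP_HOME/share/hadoop/common/"
)

# Push the jar and core-site.xml to every other node.
distribute_lzo_setup() {
  for node in $NODES; do
    run scp "$HADOOP_HOME/share/hadoop/common/$LZO_JAR" "$node:$HADOOP_HOME/share/hadoop/common/" || return 1
    run scp "$HADOOP_HOME/etc/hadoop/core-site.xml" "$node:$HADOOP_HOME/etc/hadoop/" || return 1
  done
}

# Restart HDFS and YARN so every daemon picks up the new codec settings.
restart_cluster() {
  run "$HADOOP_HOME/sbin/stop-yarn.sh"
  run "$HADOOP_HOME/sbin/stop-dfs.sh"
  run "$HADOOP_HOME/sbin/start-dfs.sh"
  run "$HADOOP_HOME/sbin/start-yarn.sh"
}
```

A typical sequence would be `DRY_RUN=0 build_hadoop_lzo /path/to/hadoop-lzo`, then `DRY_RUN=0 distribute_lzo_setup` once `core-site.xml` is edited, then `DRY_RUN=0 restart_cluster` on the ResourceManager host, since in Hadoop 2.x `start-yarn.sh` starts the ResourceManager on the machine where it is run.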


# Testing

1. First, get an LZO test file. I've put mine on a cloud drive; if you need it, download it [here (extraction code: ricl)](https://pan.baidu.com/s/1UVqjkdmfmaCgUgBQQEBfpg).

2. Upload the test file to HDFS:

   1. Create a directory: `hadoop fs -mkdir /input`

   2. Upload the file: `hadoop fs -put /home/codeleven/bigtable.lzo /input`

3. Run the test: `hadoop jar /opt/module/hadoop-2.7.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /input /output1`

```bash
20/08/01 20:09:47 INFO client.RMProxy: Connecting to ResourceManager at hadoop2/192.168.127.102:8032
20/08/01 20:09:48 INFO input.FileInputFormat: Total input paths to process : 1
20/08/01 20:09:48 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries
20/08/01 20:09:48 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 52decc77982b58949890770d22720a91adce0c3f]
20/08/01 20:09:49 INFO mapreduce.JobSubmitter: number of splits:1
20/08/01 20:09:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1596283728425_0001
20/08/01 20:09:49 INFO impl.YarnClientImpl: Submitted application application_1596283728425_0001
20/08/01 20:09:49 INFO mapreduce.Job: The url to track the job: http://hadoop2:8088/proxy/application_1596283728425_0001/
20/08/01 20:09:49 INFO mapreduce.Job: Running job: job_1596283728425_0001
```
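The line to watch in this output is `number of splits:1`: without an index, the whole `.lzo` file goes to a single map task. When comparing runs, a small filter can pull that number out of a saved client log. `splits_from_log` is a hypothetical helper, sketched with plain `sed`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a MapReduce client log on stdin and print the
# N from every "number of splits:N" line (one line per submitted job).
splits_from_log() {
  sed -n 's/.*number of splits:\([0-9][0-9]*\).*/\1/p'
}
```

For example, run the job as `hadoop jar ... wordcount /input /output1 2>&1 | tee run1.log`, then `splits_from_log < run1.log` prints `1` for the log above. The `2>&1` is needed because Hadoop's default log4j console appender writes to stderr.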

Build the LZO index so the file can be split:

```bash
hadoop jar ../share/hadoop/common/hadoop-lzo-0.4.20.jar \
    com.hadoop.compression.lzo.DistributedLzoIndexer \
    /input/bigtable.lzo
```

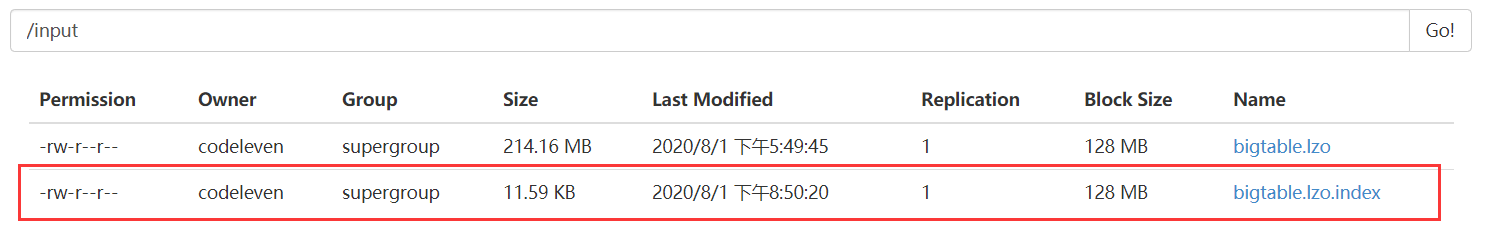

Once it succeeds, an extra file appears in HDFS next to `bigtable.lzo`: the index file `bigtable.lzo.index`.
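The indexing command uses the relative path `../share/...`, which only resolves when you run it from a directory one level below the Hadoop root (for example `$HADOOP_HOME/bin`). A sketch that builds the jar path from `HADOOP_HOME` instead (defaulting, as an assumption, to the `/opt/module/hadoop-2.7.2` install used in this guide) removes that dependency and then checks for the index with `hadoop fs -test -e`. hadoop-lzo also ships `com.hadoop.compression.lzo.LzoIndexer`, which builds the index inside the client process instead of as a MapReduce job; the `mode` argument of the hypothetical `index_lzo` helper picks between the two. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Echo each command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}

# Usage: index_lzo <hdfs-path-to-.lzo> [distributed|local]
index_lzo() {
  local hadoop_home="${HADOOP_HOME:-/opt/module/hadoop-2.7.2}"
  local jar="$hadoop_home/share/hadoop/common/hadoop-lzo-0.4.20.jar"
  local cls
  case "${2:-distributed}" in
    distributed) cls=com.hadoop.compression.lzo.DistributedLzoIndexer ;;  # MapReduce job
    local)       cls=com.hadoop.compression.lzo.LzoIndexer ;;             # in the client JVM
    *) echo "unknown mode: $2" >&2; return 1 ;;
  esac
  run hadoop jar "$jar" "$cls" "$1" || return 1
  # `hadoop fs -test -e` exits 0 when the path exists
  run hadoop fs -test -e "$1.index"
}
```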

Now run the test again: `hadoop jar /opt/module/hadoop-2.7.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /input /output3`

The output is shown below. Look at the fifth line: the job is now divided into two splits.

```bash
20/08/01 20:55:48 INFO client.RMProxy: Connecting to ResourceManager at hadoop2/192.168.127.102:8032
20/08/01 20:55:50 INFO input.FileInputFormat: Total input paths to process : 2
20/08/01 20:55:50 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries
20/08/01 20:55:50 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 52decc77982b58949890770d22720a91adce0c3f]
20/08/01 20:55:50 INFO mapreduce.JobSubmitter: number of splits:2
20/08/01 20:55:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1596283728425_0004
20/08/01 20:55:50 INFO impl.YarnClientImpl: Submitted application application_1596283728425_0004
20/08/01 20:55:50 INFO mapreduce.Job: The url to track the job: http://hadoop2:8088/proxy/application_1596283728425_0004/
20/08/01 20:55:50 INFO mapreduce.Job: Running job: job_1596283728425_0004
```
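One thing worth checking on your own cluster: this log also reports `Total input paths to process : 2`. The bundled `wordcount` example does not set an input format, so it uses the default `TextInputFormat`, which reads every file under `/input`; the second split may therefore be `bigtable.lzo.index` being processed as input rather than the `.lzo` data being divided in two. hadoop-lzo ships `com.hadoop.mapreduce.LzoTextInputFormat`, which by default skips non-`.lzo` files and uses the index to choose split points, and because `wordcount` parses generic options it can be selected with `-D mapreduce.job.inputformat.class=...`. The sketch below only assembles that command: `wordcount_lzo` is a hypothetical helper, `HADOOP_HOME` defaults to this guide's install path, and the output directory must be a new one because MapReduce refuses to write into an existing output path.

```shell
#!/usr/bin/env bash
# Echo each command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}

# Usage: wordcount_lzo <input-dir> <new-output-dir>
wordcount_lzo() {
  local hadoop_home="${HADOOP_HOME:-/opt/module/hadoop-2.7.2}"
  # -D goes after the program name "wordcount" and before the paths,
  # because WordCount's own GenericOptionsParser is what reads it.
  run hadoop jar "$hadoop_home/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar" \
    wordcount \
    -D mapreduce.job.inputformat.class=com.hadoop.mapreduce.LzoTextInputFormat \
    "$1" "$2"
}
```

For example, `DRY_RUN=0 wordcount_lzo /input /output4`, where `/output4` is just an unused path. With `LzoTextInputFormat`, the number of splits would be expected to follow the size of `bigtable.lzo` relative to the split size rather than the number of files in the directory.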