1. Repository

https://github.com/ververica/flink-cdc-connectors

2. Dependencies

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>1.12.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.12</artifactId>
            <version>1.12.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_2.12</artifactId>
            <version>1.12.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.49</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-planner-blink_2.12</artifactId>
            <version>1.12.0</version>
        </dependency>
        <dependency>
            <groupId>com.ververica</groupId>
            <artifactId>flink-connector-mysql-cdc</artifactId>
            <version>2.0.0</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.75</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <version>3.0.0</version>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

3、DataStream

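    import com.ververica.cdc.connectors.mysql.table.StartupOptions;
    import com.ververica.cdc.debezium.DebeziumSourceFunction;
    import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
    import org.apache.flink.api.common.restartstrategy.RestartStrategies;
    import org.apache.flink.runtime.state.filesystem.FsStateBackend;
    import org.apache.flink.streaming.api.CheckpointingMode;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.environment.CheckpointConfig;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    // Also import MySQLSource from the MySQL CDC connector; its package depends on the connector version.

    public class FlinkCDC {
        public static void main(String[] args) throws Exception {
            // 1. Create the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Flink CDC stores the binlog read position as state in checkpoints. To resume
            //    from where the job left off, restart it from a checkpoint or savepoint.
            // 2.1 Enable checkpointing, one checkpoint every 5 seconds
            env.enableCheckpointing(5000L);
            // 2.2 Set the checkpoint consistency semantics
            env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
            // 2.3 Retain the last checkpoint when the job is cancelled
            env.getCheckpointConfig().enableExternalizedCheckpoints(
                    CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
            // 2.4 Set the restart strategy used for automatic recovery from a checkpoint
            env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L));
            // 2.5 Set the state backend
            env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/flinkCDC"));
            // 2.6 Set the user name used to access HDFS
            System.setProperty("HADOOP_USER_NAME", "atguigu");

            // 3. Create the Flink MySQL CDC source. Startup options:
            //    initial (default): performs an initial snapshot of the monitored tables on first
            //        startup, then continues reading the latest binlog.
            //    latest-offset: never performs a snapshot on first startup; reads from the end of the
            //        binlog, so only changes made after the connector started are captured.
            //    timestamp: never performs a snapshot on first startup; reads the binlog from the
            //        specified timestamp. The consumer traverses the binlog from the beginning and
            //        ignores change events whose timestamp is smaller than the specified timestamp.
            //    specific-offset: never performs a snapshot on first startup; reads the binlog
            //        directly from the specified offset.
            DebeziumSourceFunction<String> mysqlSource = MySQLSource.<String>builder()
                    .hostname("hadoop102")
                    .port(3306)
                    .username("root")
                    .password("000000")
                    .databaseList("gmall-flink")
                    // Optional. If omitted, all tables of the databases listed above are read.
                    // Table names must be given in "db.table" form.
                    .tableList("gmall-flink.z_user_info")
                    .startupOptions(StartupOptions.initial())
                    .deserializer(new StringDebeziumDeserializationSchema())
                    .build();

            // 4. Read from MySQL through the CDC source
            DataStreamSource<String> mysqlDS = env.addSource(mysqlSource);

            // 5. Print the data
            mysqlDS.print();

            // 6. Execute the job
            env.execute();
        }
    }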

4. Flink SQL

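    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

    public class FlinkSQL_CDC {
        public static void main(String[] args) throws Exception {
            // 1. Create the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);
            StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

            // 2. Create the Flink MySQL CDC source table
            tableEnv.executeSql("CREATE TABLE user_info (" +
                    " id INT," +
                    " name STRING," +
                    " phone_num STRING" +
                    ") WITH (" +
                    " 'connector' = 'mysql-cdc'," +
                    " 'hostname' = 'hadoop102'," +
                    " 'port' = '3306'," +
                    " 'username' = 'root'," +
                    " 'password' = '000000'," +
                    " 'database-name' = 'gmall-flink'," +
                    " 'table-name' = 'z_user_info'" +
                    ")");

            // 3. Query the table and print the changelog. executeSql() submits the job itself,
            //    so no env.execute() call is needed (no DataStream operators are defined here).
            tableEnv.executeSql("select * from user_info").print();
        }
    }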

5. Deserialization

    new DebeziumDeserializationSchema<String>() { // custom deserializer
        @Override
        public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {
            // The topic carries the database and table name,
            // e.g. mysql_binlog_source.gmall-flink.z_user_info
            String topic = sourceRecord.topic();
            String[] arr = topic.split("\\.");
            String db = arr[1];
            String tableName = arr[2];

            // Operation type: READ, DELETE, UPDATE or CREATE
            Envelope.Operation operation = Envelope.operationFor(sourceRecord);

            // Get the record value and cast it to a Struct
            Struct value = (Struct) sourceRecord.value();

            // Get the row state after the change
            Struct after = value.getStruct("after");

            // JSON object holding the row data
            JSONObject data = new JSONObject();
            if (after != null) { // "after" is null for DELETE events
                for (Field field : after.schema().fields()) {
                    Object o = after.get(field);
                    data.put(field.name(), o);
                }
            }

            // JSON object wrapping the final result
            JSONObject result = new JSONObject();
            result.put("operation", operation.toString().toLowerCase());
            result.put("data", data);
            result.put("database", db);
            result.put("table", tableName);

            // Emit the record downstream
            collector.collect(result.toJSONString());
        }

        @Override
        public TypeInformation<String> getProducedType() {
            return TypeInformation.of(String.class);
        }
    })

6. Pain points of Flink CDC 1.0

7. Design

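For tables that have a primary key, the initial (snapshot) mode proceeds in five main stages:

1. Chunk splitting;

2. Chunk assignment (enables parallel reading and checkpointing);

3. Chunk reading (enables lock-free reading);

4. Chunk reporting;

5. Chunk assignment.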
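
The first two stages can be illustrated with a minimal sketch, assuming a numeric primary key whose minimum and maximum values are already known. The class and method names (`ChunkSplitSketch`, `split`, `assign`) are hypothetical, and this is not the connector's actual implementation; it only shows the idea of cutting the key range into chunks and handing them to parallel readers.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of chunk splitting and assignment; not Flink CDC code.
class ChunkSplitSketch {

    /** A half-open primary-key range [start, end). */
    static final class Chunk {
        final long start;
        final long end;

        Chunk(long start, long end) {
            this.start = start;
            this.end = end;
        }

        @Override
        public String toString() {
            return "[" + start + ", " + end + ")";
        }
    }

    /** Stage 1: split the key range [minKey, maxKey] into chunks of at most chunkSize keys. */
    static List<Chunk> split(long minKey, long maxKey, long chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<Chunk> chunks = new ArrayList<>();
        for (long start = minKey; start <= maxKey; start += chunkSize) {
            long end = Math.min(start + chunkSize, maxKey + 1);
            chunks.add(new Chunk(start, end));
        }
        return chunks;
    }

    /** Stage 2: assign chunks to readers round-robin so they can be read in parallel. */
    static List<List<Chunk>> assign(List<Chunk> chunks, int readers) {
        List<List<Chunk>> plan = new ArrayList<>();
        for (int i = 0; i < readers; i++) {
            plan.add(new ArrayList<>());
        }
        for (int i = 0; i < chunks.size(); i++) {
            plan.get(i % readers).add(chunks.get(i));
        }
        return plan;
    }

    public static void main(String[] args) {
        List<Chunk> chunks = split(1, 10, 4);
        System.out.println(chunks);            // [[1, 5), [5, 9), [9, 11)]
        System.out.println(assign(chunks, 2)); // [[[1, 5), [9, 11)], [[5, 9)]]
    }
}
```

Because each chunk is an independent key range, a reader that finishes one chunk can record that fact on its own, which is what makes chunk-level checkpointing possible.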

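8. Differences between Flink SQL and DataStream

The DataStream API can monitor an entire database or specific tables; Flink SQL can monitor only a single table.

With the DataStream API you have to write the deserializer yourself; with Flink SQL, deserialization is already handled for you.

Note: make sure the Flink version and the CDC connector version are compatible.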

II. Examples

1. MongoDB CDC in practice

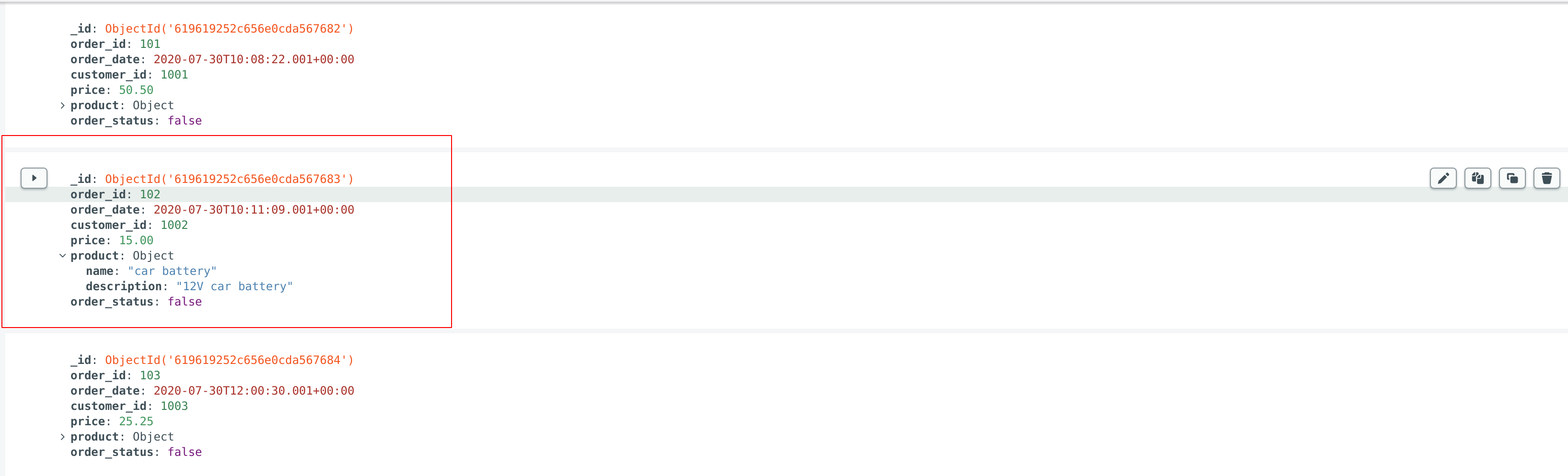

1) Raw data

2) CDC data: change streams

insert

{"_id": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}, \"copyingData\": true}","operationType": "insert","fullDocument": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}, \"order_id\": 102.0, \"order_date\": {\"$date\": 1596103869001}, \"customer_id\": 1002.0, \"price\": {\"$numberDecimal\": \"15.00\"}, \"product\": {\"name\": \"car battery\", \"description\": \"12V car battery\"}, \"order_status\": false}","source": {"ts_ms": 0,"snapshot": "true"},"ns": {"db": "hlh","coll": "orders"},"to": null,"documentKey": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}}","updateDescription": null,"clusterTime": null,"txnNumber": null,"lsid": null}

update

{"_id": "{\"_data\": \"8262A00E3D0000003F2B022C0100296E5A1004E80FAED9CBC34B7BAAF866694C9D24AB46645F69640064619619252C656E0CDA5676830004\"}","operationType": "update","fullDocument": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}, \"order_id\": 102.0, \"order_date\": {\"$date\": 1596103869001}, \"customer_id\": 1002.0, \"price\": {\"$numberDecimal\": \"16.00\"}, \"product\": {\"name\": \"car battery\", \"description\": \"12V car battery\"}, \"order_status\": false}","source": {"ts_ms": 1654656573000,"snapshot": null},"ns": {"db": "hlh","coll": "orders"},"to": null,"documentKey": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}}","updateDescription": {"updatedFields": "{\"price\": {\"$numberDecimal\": \"16.00\"}}","removedFields": []},"clusterTime": "{\"$timestamp\": {\"t\": 1654656573, \"i\": 63}}","txnNumber": null,"lsid": null}

delete

{"_id": "{\"_data\": \"8262A00F890000000A2B022C0100296E5A1004E80FAED9CBC34B7BAAF866694C9D24AB46645F69640064619619252C656E0CDA5676830004\"}","operationType": "delete","fullDocument": null,"source": {"ts_ms": 1654656905000,"snapshot": null},"ns": {"db": "hlh","coll": "orders"},"to": null,"documentKey": "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}}","updateDescription": null,"clusterTime": "{\"$timestamp\": {\"t\": 1654656905, \"i\": 10}}","txnNumber": null,"lsid": null}

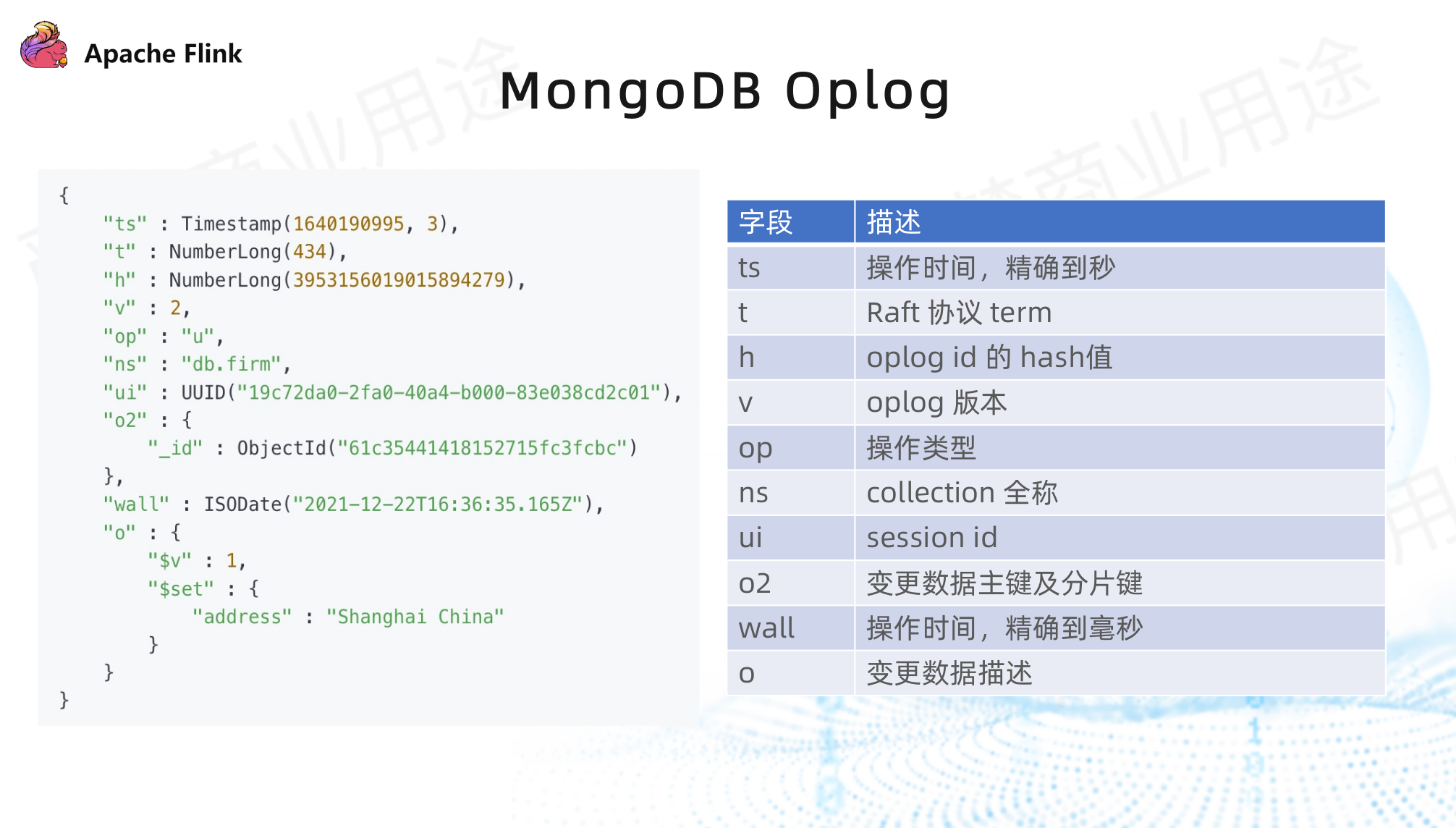

3)OpLog

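The MongoDB oplog does not store the complete document as it was before and after a change. The fullDocument field holds the latest state of the document that the change event refers to.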
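
One consequence is that an update event can only be treated as an upsert, since no "before" image is available, and a delete event carries only the document key. The sketch below shows one way the `operationType` values from the events above could be mapped to changelog kinds. The `RowKind` enum and `Change` class here are local, hypothetical types for illustration and are not the connector's classes.

```java
// Hypothetical mapping of MongoDB change stream events to changelog kinds; not connector code.
class ChangeStreamMappingSketch {

    enum RowKind { INSERT, UPDATE_AFTER, DELETE }

    static final class Change {
        final RowKind kind;
        final String documentKey;  // always present
        final String fullDocument; // null for deletes

        Change(RowKind kind, String documentKey, String fullDocument) {
            this.kind = kind;
            this.documentKey = documentKey;
            this.fullDocument = fullDocument;
        }
    }

    static Change toChange(String operationType, String documentKey, String fullDocument) {
        switch (operationType) {
            case "insert":
                return new Change(RowKind.INSERT, documentKey, fullDocument);
            case "update":
            case "replace":
                // No pre-image is available, so only the "after" side can be emitted.
                return new Change(RowKind.UPDATE_AFTER, documentKey, fullDocument);
            case "delete":
                // Only the key is known; the deleted document itself is not in the event.
                return new Change(RowKind.DELETE, documentKey, null);
            default:
                throw new IllegalArgumentException("Unsupported operationType: " + operationType);
        }
    }

    public static void main(String[] args) {
        String key = "{\"_id\": {\"$oid\": \"619619252c656e0cda567683\"}}";
        Change update = toChange("update", key, "{\"order_id\": 102.0}");
        Change delete = toChange("delete", key, null);
        System.out.println(update.kind + " " + update.fullDocument);
        System.out.println(delete.kind + " " + delete.documentKey);
    }
}
```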

When a Flink job is restored from a checkpoint or savepoint, heartbeat events can push the resumeToken forward so that it does not expire.

2. Flink CDC in practice

1) Raw data

- Full read (snapshot)

    {
        "PUSHKITTOKEN": "",
        "UPDATETIME": "2017-08-10 01:42:31",
        "PUSHTYPE": 0,
        "APNSTOKEN": "cea5bc9e4f5b74213424bada03a3959c821f230bdc1cc0e1ab66b809182170cf",
        "MOBILEOPERATOR": "movistar",
        "JAILBREAK": 0,
        "SPARE1": "",
        "RESERVE": "",
        "VERSION_STR": "",
        "DEVICEID": "7a3cc059f6d66bd77bbae29fbed291a123dcf6db",
        "SPARE2": 0,
        "OSVERSION": "10.3.3",
        "APPSTORECOUNTRY": "",
        "OSLANG": "en-GB",
        "USERID": 8051194,
        "VERSION": "2.3.6",
        "OSTYPE": 0,
        "DEVICEDETAIL": "iPhone7,2",
        "OPERATORCOUNTRY": "cl",
        "operation": "read", -- operation type
        "table": "HT_USER_TERMINAL"
    }