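Configuring log output

If the configuration directory does not take effect, modify the logging configuration file inside the jar.

Example

Open the jar, change info to debug in the logback.xml inside it, reproduce the error, check the log, and submit the log to the Mycat team or the author.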


Alternatively, add the following parameter at startup:

-Dlogback.configurationFile=xxxx\logback.xml

java -jar -Dlogback.configurationFile=xxx\logback.xml xxxx\mycat2-1.21-release-jar-with-dependencies-2021-1-12-1.jar

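The -D parameter must come before the jar path; anything after the jar path is passed to the application as a program argument instead of being set as a JVM system property.

You can also add this parameter to wrapper.conf: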

wrapper.java.additional.10=-Dlogback.configurationFile=./conf/logback.xml

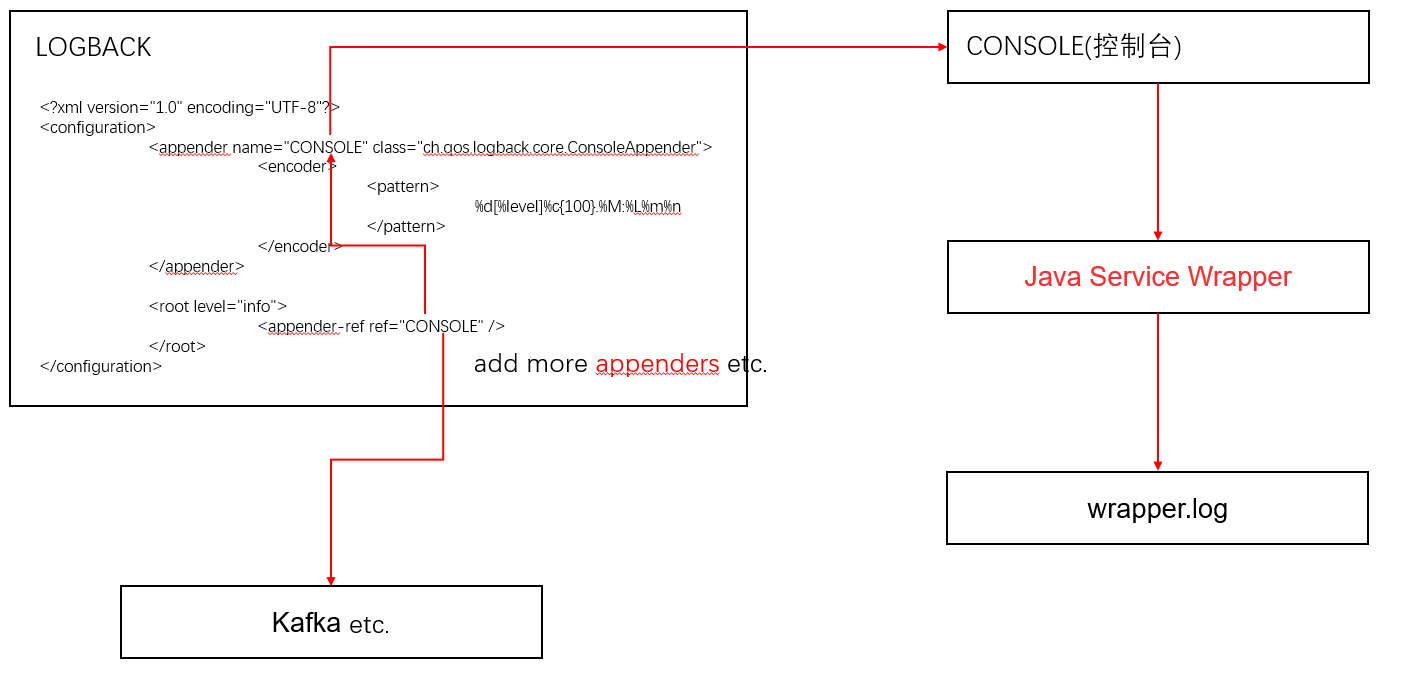

logback.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
      <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
        </encoder>
      </appender>
      <root level="info">
        <appender-ref ref="CONSOLE" />
      </root>
    </configuration>

The CONSOLE appender here writes to the console. Java Service Wrapper redirects console output into Wrapper.log, so if Wrapper.log grows too large, this appender is the place to change.
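One way to keep Wrapper.log from growing is to send Mycat's log to a size-limited rolling file instead of the console. The following is a minimal sketch, not a configuration shipped with Mycat2: the appender name FILE, the ./logs path, and all size and retention limits are illustrative assumptions, and it assumes a logback version that provides SizeAndTimeBasedRollingPolicy.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Hypothetical file appender: name, path and limits are assumptions -->
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>./logs/mycat.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
      <!-- Roll over daily, and also whenever the active file reaches maxFileSize -->
      <fileNamePattern>./logs/mycat.%d{yyyy-MM-dd}.%i.log</fileNamePattern>
      <maxFileSize>100MB</maxFileSize>
      <!-- Keep at most 7 days of archives, capped at 1GB in total -->
      <maxHistory>7</maxHistory>
      <totalSizeCap>1GB</totalSizeCap>
    </rollingPolicy>
    <encoder>
      <!-- Same pattern as the CONSOLE appender above -->
      <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
    </encoder>
  </appender>
  <root level="info">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

Because the root logger no longer references CONSOLE, Mycat's own log lines stop flowing into Wrapper.log; anything the JVM itself prints to the console still ends up there.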

Once the debug level is set, entries tagged [DEBUG] appear in the log file:

    2022-02-22 16:52:01,316[DEBUG]io.vertx.core.logging.LoggerFactory.?:?Using io.vertx.core.logging.SLF4JLogDelegateFactory
    2022-02-22 16:52:01,344[INFO]io.mycat.MycatCore.newMycatServer:214start VertxMycatServer
    2022-02-22 16:52:02,015[DEBUG]io.netty.util.internal.logging.InternalLoggerFactory.useSlf4JLoggerFactory:63Using SLF4J as the default logging framework
    2022-02-22 16:52:02,023[DEBUG]io.netty.util.internal.PlatformDependent0.explicitNoUnsafeCause0:460-Dio.netty.noUnsafe: false
    2022-02-22 16:52:02,023[DEBUG]io.netty.util.internal.PlatformDependent0.javaVersion0:954Java version: 8

The executed SQL statements are logged as well:

    2022-02-22 16:53:00,340[DEBUG]io.mycat.commands.MycatdbCommand.executeQuery:172SET NAMES utf8
    2022-02-22 16:53:00,387[DEBUG]io.mycat.commands.MycatdbCommand.executeQuery:172SELECT TIMEDIFF(NOW(), UTC_TIMESTAMP())
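If only the SQL statements are of interest, raising the root level to debug may produce more output than needed. The following is a minimal sketch that relies on logback's standard per-logger levels: the logger name io.mycat.commands.MycatdbCommand is taken from the sample output above, and whether other Mycat2 classes also log SQL at debug level is an open assumption.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
      <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
    </encoder>
  </appender>
  <!-- Enable debug only for the class that printed the SQL in the sample log -->
  <logger name="io.mycat.commands.MycatdbCommand" level="debug" />
  <!-- Everything else stays at info -->
  <root level="info">
    <appender-ref ref="CONSOLE" />
  </root>
</configuration>
```

The named logger inherits the root's appenders, so its debug lines go to CONSOLE without an extra appender-ref.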

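Mycat2 has used different logging frameworks over the course of its development.

Until 1.20, it used the slf4j API with simplelogger as the implementation (now obsolete).

Its settings are as follows: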

simplelogger.properties

    # SLF4J's SimpleLogger configuration file
    # Simple implementation of Logger that sends all enabled log messages, for all defined loggers, to System.err.

    # Default logging detail level for all instances of SimpleLogger.
    # Must be one of ("trace", "debug", "info", "warn", or "error").
    # If not specified, defaults to "info".
    org.slf4j.simpleLogger.defaultLogLevel=info

    # Logging detail level for a SimpleLogger instance named "xxxxx".
    # Must be one of ("trace", "debug", "info", "warn", or "error").
    # If not specified, the default logging detail level is used.
    #org.slf4j.simpleLogger.log.xxxxx=

    # Set to true if you want the current date and time to be included in output messages.
    # Default is false, and will output the number of milliseconds elapsed since startup.
    #org.slf4j.simpleLogger.showDateTime=false

    # The date and time format to be used in the output messages.
    # The pattern describing the date and time format is the same that is used in java.text.SimpleDateFormat.
    # If the format is not specified or is invalid, the default format is used.
    # The default format is yyyy-MM-dd HH:mm:ss:SSS Z.
    #org.slf4j.simpleLogger.dateTimeFormat=yyyy-MM-dd HH:mm:ss:SSS Z

    # Set to true if you want to output the current thread name.
    # Defaults to true.
    #org.slf4j.simpleLogger.showThreadName=true

    # Set to true if you want the Logger instance name to be included in output messages.
    # Defaults to true.
    #org.slf4j.simpleLogger.showLogName=true

    # Set to true if you want the last component of the name to be included in output messages.
    # Defaults to false.
    #org.slf4j.simpleLogger.showShortLogName=false

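To enable debug output, set the following in simplelogger.properties:

    org.slf4j.simpleLogger.defaultLogLevel=debug

If the configuration directory does not take effect, modify the simplelogger.properties file inside the jar.

The level can also be set with a Java startup parameter:

    -Dorg.slf4j.simpleLogger.defaultLogLevel=debug

In production, choose the level according to your situation, for example:

    -Dorg.slf4j.simpleLogger.defaultLogLevel=info

Performance differs by a factor of two between the debug and info levels.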

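Version 1.20, released on 2021-10-15, switched to logback and added a Kafka connector (contributed by lingkang and implemented by swapping the Maven dependency), which makes it easier to record and analyze production logs.

Its settings are as follows: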

logback.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
      <appender name="kafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
          <pattern>%message %n</pattern>
          <charset>utf8</charset>
        </encoder>
        <topic>applog-test</topic>
        <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.NoKeyKeyingStrategy"/>
        <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy"/>
        <producerConfig>bootstrap.servers=192.168.18.43:9092,192.168.18.44:9092</producerConfig>
        <producerConfig>linger.ms=1000</producerConfig>
        <producerConfig>acks=0</producerConfig>
        <producerConfig>client.id=localhost-time-collector-logback-relaxed</producerConfig>
        <producerConfig>max.block.ms=0</producerConfig>
      </appender>
      <logger name="org.apache.kafka.clients" level="error"></logger>
      <logger name="org.apache.kafka.clients.NetworkClient" level="error"></logger>
      <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
        </encoder>
      </appender>
      <root level="debug">
        <appender-ref ref="kafkaAppender"/>
        <appender-ref ref="CONSOLE" />
      </root>
    </configuration>

Here bootstrap.servers lists the addresses (IP and port) of the Kafka servers.

The configuration without Kafka is as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
      <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
        </encoder>
      </appender>
      <root level="debug">
        <appender-ref ref="CONSOLE" />
      </root>
    </configuration>

Configuration relationships

Filtering logs

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
      <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d[%level]%c{100}.%M:%L%m%n</pattern>
        </encoder>
        <filter class="ch.qos.logback.core.filter.EvaluatorFilter">
          <!-- Evaluator that performs the match -->
          <evaluator>
            <!-- Evaluator type; defaults to ch.qos.logback.classic.boolex.JaninoEventEvaluator -->
            <!-- Matches when the message contains either string -->
            <expression>return (message.contains("Connection reset by peer") || message.contains("远程主机强迫"));</expression>
          </evaluator>
          <!-- On match, drop the log event -->
          <OnMatch>DENY</OnMatch>
          <!-- On mismatch, let the event through -->
          <OnMismatch>ACCEPT</OnMismatch>
        </filter>
      </appender>
      <root level="info">
        <appender-ref ref="CONSOLE" />
      </root>
    </configuration>

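With this filter, log entries whose message contains "Connection reset by peer" or "远程主机强迫" are not output. Note that JaninoEventEvaluator requires the Janino library on the classpath.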