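Given a crosstalk (xiangsheng) script, the robot can now perform crosstalk with us, but only through text. Can we give the robot ears so it can listen and a mouth so it can speak? The former is speech recognition, the latter is speech synthesis. Before that, of course, the robot needs the corresponding organs: a mouth (a speaker) and ears (a microphone).

First, speech recognition, which means extracting the text from a piece of audio. We start by preparing a recording, hello.wav, which contains Chinese speech.

One option is the SpeechRecognition library, but it does not handle Chinese well, so here we use a speech recognition service from one of the big vendors, such as Baidu, Alibaba, or iFlytek. First register a speech developer account with Baidu (or another vendor) to get your own credential triple (APP_ID, API_KEY, SECRET_KEY). Then run pip install baidu-aip. The code is as follows: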

    from aip import AipSpeech

    # Credentials from your own Baidu developer account
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    # Read a file as bytes
    def get_file_content(filePath):
        with open(filePath, 'rb') as fp:
            return fp.read()

    # Recognize a local file
    test1 = client.asr(get_file_content('hello.wav'), 'pcm', 16000, {'dev_pid': 1536, })
    print(test1)

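We can now recognize a local audio file. To get real-time recognition, we use the microphone to record speech into a file and then immediately pass that file to the recognizer. The Python program for recording from the microphone is as follows.

It also needs a supporting library, installed with pip install pyaudio: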

    import pyaudio
    import wave

    input_filename = "input.wav"  # speech captured from the microphone
    input_filepath = "../src/"  # directory of the input file
    in_path = input_filepath + input_filename

    def get_audio(filepath):
        CHUNK = 256
        FORMAT = pyaudio.paInt16
        CHANNELS = 1  # number of channels
        RATE = 16000  # sample rate; matches the 16000 passed to client.asr
        RECORD_SECONDS = 5
        WAVE_OUTPUT_FILENAME = filepath
        p = pyaudio.PyAudio()

        stream = p.open(format=FORMAT,
                        channels=CHANNELS,
                        rate=RATE,
                        input=True,
                        frames_per_buffer=CHUNK)

        print("*"*10, "Recording: please speak within 5 seconds")
        frames = []
        for i in range(0, int(RATE / CHUNK * RECORD_SECONDS)):
            data = stream.read(CHUNK)
            frames.append(data)
        print("*"*10, "Recording finished\n")

        stream.stop_stream()
        stream.close()
        p.terminate()

        # Save the captured frames as a WAV file
        wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(p.get_sample_size(FORMAT))
        wf.setframerate(RATE)
        wf.writeframes(b''.join(frames))
        wf.close()

    get_audio(in_path)
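
Since the recognizer call declares a sample rate of 16000, it can be worth checking that a recorded file really has the parameters we think it has before uploading it. The following is a minimal sketch using only the standard wave module; the helper names wav_params and matches_recognizer are made up for this illustration, and the mono, 16-bit, 16000 Hz target is an assumption taken from the values used in this article.

```python
import wave


def wav_params(path):
    """Return (channels, sample_width_bytes, frame_rate, duration_seconds) of a WAV file."""
    with wave.open(path, "rb") as wf:
        channels = wf.getnchannels()
        width = wf.getsampwidth()
        rate = wf.getframerate()
        duration = wf.getnframes() / rate
    return channels, width, rate, duration


def matches_recognizer(path, expected_rate=16000):
    """True if the file is mono, 16-bit, and sampled at expected_rate (an assumed target)."""
    channels, width, rate, _ = wav_params(path)
    return channels == 1 and width == 2 and rate == expected_rate
```

Calling matches_recognizer("../src/input.wav") right after recording gives a quick yes or no before any network request is made.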

Then merge the two programs above:

    from aip import AipSpeech
    import pyaudio
    import wave

    input_filename = "input.wav"  # speech captured from the microphone
    input_filepath = "../src/"  # directory of the input file
    in_path = input_filepath + input_filename

    def get_audio(filepath):
        CHUNK = 256
        FORMAT = pyaudio.paInt16
        CHANNELS = 1  # number of channels
        RATE = 16000  # sample rate; matches the 16000 passed to client.asr
        RECORD_SECONDS = 5
        WAVE_OUTPUT_FILENAME = filepath
        p = pyaudio.PyAudio()

        stream = p.open(format=FORMAT,
                        channels=CHANNELS,
                        rate=RATE,
                        input=True,
                        frames_per_buffer=CHUNK)

        print("*"*10, "Recording: please speak within 5 seconds")
        frames = []
        for i in range(0, int(RATE / CHUNK * RECORD_SECONDS)):
            data = stream.read(CHUNK)
            frames.append(data)
        print("*"*10, "Recording finished\n")

        stream.stop_stream()
        stream.close()
        p.terminate()

        # Save the captured frames as a WAV file
        wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(p.get_sample_size(FORMAT))
        wf.setframerate(RATE)
        wf.writeframes(b''.join(frames))
        wf.close()

    get_audio(in_path)

    # Baidu speech recognition credentials
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    # Read a file as bytes
    def get_file_content(filePath):
        with open(filePath, 'rb') as fp:
            return fp.read()

    # Recognize a local file
    # path='/Users/alice/Documents/Blog/AI/语音识别/speechrecognition/audiofiles'
    test1 = client.asr(get_file_content(in_path), 'pcm', 16000, {'dev_pid': 1536, })
    print(test1)

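Now our speech is converted to text in real time. Next, we extract the useful information from the recognized text and hand it to the backend, which matches it to a reply and outputs it.

How do we turn that output text back into spoken audio? That is speech synthesis. It also needs a library; here we use the pyttsx3 speech synthesis module, installed with pip install pyttsx3: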

    import pyttsx3

    engine = pyttsx3.init()
    engine.say("风飘荡,雨濛茸,翠条柔弱花头重")
    engine.runAndWait()

Of course, we can also use Baidu's speech synthesis:

    from aip import AipSpeech

    """ Your APPID, AK, SK """
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    result = client.synthesis(text = '你好百度', options={'vol':5})

    # On success the result is audio bytes; on failure it is a dict describing the error
    if not isinstance(result,dict):
        with open('audio.mp3','wb') as f:
            f.write(result)
    else:
        print(result)
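
The isinstance check above is the part that decides whether synthesis succeeded, so it may be convenient to wrap it in a small function that reports success or failure to the caller. This is only a sketch: save_audio is a hypothetical helper name, and it relies on nothing beyond the behavior the program above already assumes (audio bytes on success, a dict on failure).

```python
def save_audio(result, path="audio.mp3"):
    """Write synthesized audio to path; return True on success, False on an error dict."""
    if isinstance(result, dict):
        # A dict means the service reported an error instead of returning audio
        print("synthesis failed:", result)
        return False
    with open(path, "wb") as f:
        f.write(result)
    return True
```

With this in place, the caller can skip playback when save_audio returns False instead of trying to play a file that was never written.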

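The text has now been converted to audio. To play it right away, we add code that plays an audio file from Python.

Baidu's speech synthesis produces MP3, so playing it from Python needs another audio library, installed with pip install playsound: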

    from playsound import playsound

    playsound("audio.mp3")

Merged with the Baidu speech synthesis program:

    from aip import AipSpeech
    from playsound import playsound

    """ Your APPID, AK, SK """
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    result = client.synthesis(text = '你好百度', options={'vol':5})

    # On success the result is audio bytes; on failure it is a dict describing the error
    if not isinstance(result,dict):
        with open('audio.mp3','wb') as f:
            f.write(result)
    else:
        print(result)

    playsound("audio.mp3")

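Remember pyaudio, the audio library from earlier? It can be used here too, but it plays WAV audio, so the MP3 file has to be converted to WAV before playback, and that conversion needs pydub and ffmpeg. Try it if you are interested.

At this point we have both speech recognition (speech to text) and speech synthesis (text to speech). Let's try them with the corpus we prepared earlier. Before that, one more advantage of Baidu's speech service: the voice can be changed. The client.synthesis call in the program above carries the Baidu synthesis options; adding a voice option there changes that line to: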

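    # per (String, optional): voice selection. 0 = female, 1 = male,
    # 3 = emotional synthesis 度逍遥, 4 = emotional synthesis 度丫丫;
    # the default is the standard female voice.
    result = client.synthesis(text = '你好百度', options={'vol':5,'per':4})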

The complete program is then:

    from aip import AipSpeech
    from playsound import playsound

    """ Your APPID, AK, SK """
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    # In the options below: vol ranges from 0 to 15; per can be 0, 1, 3, or 4
    result = client.synthesis(text = '你好百度', options={'vol':5,'per':4})

    # On success the result is audio bytes; on failure it is a dict describing the error
    if not isinstance(result,dict):
        with open('audio.mp3','wb') as f:
            f.write(result)
    else:
        print(result)

    playsound("audio.mp3")

Now let's bring in the corpus from before. Remember the text-only chat program?

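    with open("语料库.txt","r",encoding="utf-8") as lib:
        lists = lib.readlines()
        print(lists)
        print(len(lists))

    while True:
        ask = input("Start talking: ")
        a = 0  # 0 while we still need to search, 1 once the search is done
        b = 0  # set to 1 when a matching question is found
        while a==0:
            # Questions are on even lines, answers on the line that follows
            for i in range(0,len(lists),2):
                if lists[i].find(ask)!=-1:
                    print(lists[i+1])
                    b =1
                    break
            a = 1
            if b==0:
                print("That question is too deep for me; I haven't learned it yet. Try another one.")
                ask = input("Start talking: ")
                a = 0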
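
The lookup in the loop above can also be pulled out into a function, which makes it easier to reuse once the question comes from the microphone instead of the keyboard. The sketch below assumes the corpus file alternates a question line with its answer line, which is what the step of 2 and the i+1 index suggest; find_answer is a hypothetical name, and unlike the original loop it treats an empty question as "no match" rather than matching the first line.

```python
def find_answer(lines, ask):
    """Return the answer after the first question line containing `ask`, or None."""
    if not ask:
        return None
    # Questions sit on even indices, answers on the following odd indices.
    # Stopping at len(lines) - 1 avoids an IndexError on a trailing unanswered question.
    for i in range(0, len(lines) - 1, 2):
        if ask in lines[i]:
            return lines[i + 1].strip()
    return None
```

A caller would print the returned answer, or the fallback message when the function returns None.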

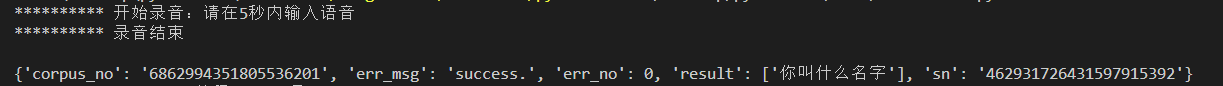

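First comes speech recognition: convert our speech to text and wrap that in a function. Note that the recognition result comes back as JSON data, which the SDK hands to us as a Python dict.

In other words, test1, the output of the recognition program above, is a whole structure of data, while all we want is the "你叫什么名字" inside result. So we need to pull that field out in Python: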

    # ***************** Speech recognition program starts here *****************
    from aip import AipSpeech
    import pyaudio
    import wave

    input_filename = "input.wav"  # speech captured from the microphone
    input_filepath = "../src/"  # directory of the input file
    in_path = input_filepath + input_filename

    def get_audio(filepath):
        CHUNK = 256
        FORMAT = pyaudio.paInt16
        CHANNELS = 1  # number of channels
        RATE = 16000  # sample rate; matches the 16000 passed to client.asr
        RECORD_SECONDS = 5
        WAVE_OUTPUT_FILENAME = filepath
        p = pyaudio.PyAudio()

        stream = p.open(format=FORMAT,
                        channels=CHANNELS,
                        rate=RATE,
                        input=True,
                        frames_per_buffer=CHUNK)

        print("*"*10, "Recording: please speak within 5 seconds")
        frames = []
        for i in range(0, int(RATE / CHUNK * RECORD_SECONDS)):
            data = stream.read(CHUNK)
            frames.append(data)
        print("*"*10, "Recording finished\n")

        stream.stop_stream()
        stream.close()
        p.terminate()

        # Save the captured frames as a WAV file
        wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(p.get_sample_size(FORMAT))
        wf.setframerate(RATE)
        wf.writeframes(b''.join(frames))
        wf.close()

    get_audio(in_path)

    # Baidu speech recognition credentials
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    # Read a file as bytes
    def get_file_content(filePath):
        with open(filePath, 'rb') as fp:
            return fp.read()

    # Recognize a local file
    # path='/Users/alice/Documents/Blog/AI/语音识别/speechrecognition/audiofiles'
    test1 = client.asr(get_file_content(in_path), 'pcm', 16000, {'dev_pid': 1536, })
    print(test1)
    # ***************** Speech recognition ends here *****************

    import json  # not strictly needed: test1 is already a dict

    print(test1["result"])

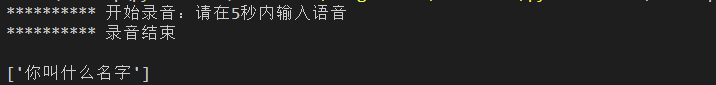

The output is now a list, so we only need to take the text out of the list, like this:

    a=["abc","123"]
    print(a[0])

Change the last line of the speech recognition program to:

    print(test1["result"][0])
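
Indexing test1["result"][0] directly works when recognition succeeds, but it raises an exception if the response has no result entry. A more defensive way to take the text out could look like the sketch below. It assumes the response dict carries an err_no field that is 0 on success, as Baidu's speech responses generally do; extract_text is a hypothetical helper name, and the sample dicts in the usage note are illustrative, not captured output.

```python
def extract_text(response):
    """Return the first recognized sentence, or None if recognition failed or was empty."""
    if not isinstance(response, dict):
        return None
    # Assumption: a non-zero err_no means the service reported an error
    if response.get("err_no", 0) != 0:
        return None
    results = response.get("result") or []
    if not results:
        return None
    text = results[0].strip()
    return text or None
```

For example, extract_text({"err_no": 0, "result": ["你叫什么名字"]}) gives back the sentence, while a response that only carries an error code gives None instead of crashing the program.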

Now we can get exactly the content we need, so we can keep wrapping things into functions:

    import json
    from aip import AipSpeech
    import pyaudio
    import wave

    input_filename = "input.wav"  # speech captured from the microphone
    input_filepath = "../src/"  # directory of the input file
    in_path = input_filepath + input_filename

    def get_audio(filepath):
        CHUNK = 256
        FORMAT = pyaudio.paInt16
        CHANNELS = 1  # number of channels
        RATE = 16000  # sample rate; matches the 16000 passed to client.asr
        RECORD_SECONDS = 5
        WAVE_OUTPUT_FILENAME = filepath
        p = pyaudio.PyAudio()

        stream = p.open(format=FORMAT,
                        channels=CHANNELS,
                        rate=RATE,
                        input=True,
                        frames_per_buffer=CHUNK)

        print("*"*10, "Recording: please speak within 5 seconds")
        frames = []
        for i in range(0, int(RATE / CHUNK * RECORD_SECONDS)):
            data = stream.read(CHUNK)
            frames.append(data)
        print("*"*10, "Recording finished\n")

        stream.stop_stream()
        stream.close()
        p.terminate()

        # Save the captured frames as a WAV file
        wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(p.get_sample_size(FORMAT))
        wf.setframerate(RATE)
        wf.writeframes(b''.join(frames))
        wf.close()

    get_audio(in_path)

    # Baidu speech recognition credentials
    APP_ID = '16835749'
    API_KEY = 'UnZlBOVhwYu8m5eNqwOPHt99'
    SECRET_KEY = '6jhCitggsR0Ew91fdC47oMa1qtibTrsK'

    client = AipSpeech(APP_ID, API_KEY, SECRET_KEY)

    # Read a file as bytes
    def get_file_content(filePath):
        with open(filePath, 'rb') as fp:
            return fp.read()

    def result_word():
        # Recognize a local file
        # path='/Users/alice/Documents/Blog/AI/语音识别/speechrecognition/audiofiles'
        test1 = client.asr(get_file_content(in_path), 'pcm', 16000, {'dev_pid': 1536, })
        # print(test1["result"][0])
        return test1["result"][0]

    print(result_word())

    with open("语料库.txt","r",encoding="utf-8") as lib:
        lists = lib.readlines()
        print(lists)
        print(len(lists))

    while True:
        print("Start talking:")
        ask = result_word()
        a = 0  # 0 while we still need to search, 1 once the search is done
        b = 0  # set to 1 when a matching question is found
        while a==0:
            # Questions are on even lines, answers on the line that follows
            for i in range(0,len(lists),2):
                if lists[i].find(ask)!=-1:
                    print(lists[i+1])
                    b =1
                    break
            a = 1
            if b==0:
                print("That question is too deep for me; I haven't learned it yet. Try another one.")
                print("Start talking:")
                ask = result_word()
                a = 0

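Testing shows, however, that it only recognizes speech once. That is because get_audio(in_path), the call that actually records from the microphone, was not placed inside the loop. After moving it to a suitable place, other problems appear: there are too many prompt messages and they are messy, and if we say nothing while the program is running, it records an empty file, which also interrupts the program. So now the debugging begins.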
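
As a starting point for that debugging, one possible shape for a single round of conversation is sketched below: record first, then recognize, then look up the answer, and return None when nothing was recognized so the caller can simply try again. This is not the final program. chat_turn is a hypothetical name, record and recognize are stand-ins for get_audio(in_path) and a version of result_word that returns None on failure, and the corpus is again assumed to alternate question and answer lines.

```python
def chat_turn(record, recognize, lines, fallback="换个问题吧"):
    """Run one record -> recognize -> lookup round and return the reply, or None."""
    record()            # record a fresh clip on every turn, not just once at startup
    ask = recognize()   # expected to return the recognized text, or None
    if not ask:
        return None     # silence or a failed recognition: let the caller retry
    for i in range(0, len(lines) - 1, 2):
        if ask in lines[i]:
            return lines[i + 1].strip()
    return fallback
```

Because the recording and recognition steps are passed in as functions, the loop logic can be exercised with fake inputs before a microphone or network connection is involved.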