I: Cluster Installation Prerequisites

https://dolphinscheduler.apache.org/zh-cn/docs/latest/user_doc/cluster-deployment.html

1: Set up the ZooKeeper cluster

See the ZooKeeper chapter.

2: Create the atguigu user on all 3 servers and configure passwordless SSH login

See the Hadoop chapter.
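Step 2 defers to the Hadoop chapter. The functions below are a rough sketch of what it involves. They push an SSH key to each host and then check that login no longer asks for a password. The sketch assumes the atguigu user already exists on every node and that the hostnames from step 3 resolve. The host list, the `run` helper and the `DRY_RUN` switch are also assumptions of this sketch. With the default `DRY_RUN=1`, it only prints the commands.

```shell
# Sketch: passwordless SSH for the deployment user (step 2).
# Assumptions: the user and host list below; run as that user.
DS_USER="${DS_USER:-atguigu}"
DS_HOSTS="${DS_HOSTS:-hadoop102 hadoop103 hadoop104}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1 (quoting is not preserved), else run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_passwordless() {
  local h
  # Generate an RSA key pair without a passphrase if none exists yet.
  [ -f "$HOME/.ssh/id_rsa" ] || run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  for h in $DS_HOSTS; do
    # Append the public key to ~/.ssh/authorized_keys on host $h.
    run ssh-copy-id "$DS_USER@$h"
  done
}

verify_passwordless() {
  local h
  for h in $DS_HOSTS; do
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    run ssh -o BatchMode=yes -o ConnectTimeout=5 "$DS_USER@$h" true || return 1
  done
}
```

Once the printed commands look right, run `DRY_RUN=0 setup_passwordless` and then `DRY_RUN=0 verify_passwordless` as atguigu on each machine that needs to reach the others.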

3: On each of the 3 servers, configure the hostname-to-IP mappings (in /etc/hosts)

    192.168.234.128 hadoop101
    192.168.234.129 hadoop102
    192.168.234.130 hadoop103
    192.168.234.131 hadoop104
    192.168.234.132 hadoop105
    192.168.234.133 hadoop106
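Step 3 is usually done by editing /etc/hosts by hand on each machine. Below is a minimal sketch that appends the mappings above and can be re-run safely, because a hostname that is already present is skipped. `HOSTS_FILE` is a hypothetical override so you can try it against a scratch file first. Writing the real /etc/hosts needs root.

```shell
# Sketch: idempotently add the step-3 mappings to a hosts file.
# HOSTS_FILE is a hypothetical override; the default needs root to write.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
  local ip="$1" name="$2"
  # Skip if this hostname already appears as a whole word in the file.
  if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

add_cluster_hosts() {
  local ip name
  # IP/hostname pairs copied from the list in step 3.
  while read -r ip name; do
    add_host_entry "$ip" "$name"
  done <<'EOF'
192.168.234.128 hadoop101
192.168.234.129 hadoop102
192.168.234.130 hadoop103
192.168.234.131 hadoop104
192.168.234.132 hadoop105
192.168.234.133 hadoop106
EOF
}
```

Try it with `HOSTS_FILE=/tmp/hosts.test add_cluster_hosts` first. Any existing line that maps one of these hostnames to a different IP is left untouched, so review the file afterwards.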

4: Create the software directories, unpack DolphinScheduler, and distribute it to the other machines

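    sudo mkdir -p /ruozedata/software
    sudo mkdir -p /ruozedata/app
    # /ruozedata is owned by root after sudo mkdir, so chmod needs sudo as well
    sudo chmod -R 777 /ruozedata
    tar -zxvf apache-dolphinscheduler-1.3.6-bin.tar.gz -C /ruozedata/software/
    cd /ruozedata/software
    mv apache-dolphinscheduler-1.3.6-bin/ dolphinscheduler-bin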
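Steps 4, 6, 7 and 9 all say to distribute files to the other machines, but none of them shows how. Below is a minimal rsync-based sketch of that step. The target host list is an assumption: run it on hadoop102 and list only the other nodes. It relies on the passwordless SSH from step 2. It also expects the parent directory (e.g. /ruozedata/software) to be writable on the targets. With the default `DRY_RUN=1`, it only prints the commands.

```shell
# Sketch: copy a directory to the same absolute path on other nodes.
# Assumptions: target hosts below, passwordless SSH (step 2), rsync installed.
DIST_HOSTS="${DIST_HOSTS:-hadoop103 hadoop104}"
DRY_RUN="${DRY_RUN:-1}"

distribute() {
  local abs parent h
  # Resolve to an absolute path so every node gets the identical location.
  abs="$(cd "$1" 2>/dev/null && pwd)" || { echo "not a directory: $1" >&2; return 1; }
  parent="$(dirname "$abs")"
  for h in $DIST_HOSTS; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh $h mkdir -p $parent && rsync -a $abs $h:$parent/"
    else
      # No trailing slash on $abs: rsync recreates the directory itself under $parent.
      ssh "$h" mkdir -p "$parent" && rsync -a "$abs" "$h:$parent/" || return 1
    fi
  done
}
```

For example, run `DRY_RUN=0 distribute /ruozedata/software/dolphinscheduler-bin` once the package has been unpacked and edited.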

5: Change the directory ownership on all three machines

sudo chown -R atguigu:atguigu /ruozedata/software/dolphinscheduler-bin/
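After the `chown`, you can use `find ! -user` to confirm on each machine that nothing under the directory is still owned by someone else. Root ownership, for instance, can be left behind by the earlier `sudo` commands. A sketch, with a hypothetical function name:

```shell
# Sketch: fail if any file under DIR is not owned by USER.
check_owner() {
  local dir="$1" user="$2" bad
  # find prints every path whose owner differs; keep at most 5 of them.
  bad="$(find "$dir" ! -user "$user" -print | head -n 5)"
  if [ -n "$bad" ]; then
    echo "not owned by $user (first entries):"
    echo "$bad"
    return 1
  fi
}
```

For example, `check_owner /ruozedata/software/dolphinscheduler-bin atguigu`, using the deployment user from install_config.conf.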

6: Edit the data source configuration, put the MySQL client (JDBC driver) jar into lib/, and distribute

    cd conf/
    vi datasource.properties

Contents:

    # mysql
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.datasource.url=jdbc:mysql://192.168.234.129:3306/dolphinscheduler?useUnicode=true&characterEncoding=UTF-8
    spring.datasource.username=dol
    spring.datasource.password=dol

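After saving the file, go back to the dolphinscheduler-bin directory (cd ..). Then run the script in the script directory that creates the tables and imports the base data: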

sh script/create-dolphinscheduler.sh
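Before you run the table-creation script, it can be worth checking two things. First, that the properties were saved as intended. Second, that a MySQL JDBC driver jar is actually in lib/, as step 6 requires. The sketch below reads `key=value` pairs with grep and sed. The jar name pattern `mysql-connector-java-*.jar` is an assumption about how the driver file is named.

```shell
# Sketch: read values from a .properties file and check for the JDBC driver jar.
get_prop() {
  local file="$1" key="$2"
  # Match "key=value" at line start (comment lines never match); the last match wins.
  # Only the first '=' is stripped, so the '=' signs inside the JDBC URL are kept.
  grep -E "^[[:space:]]*${key}[[:space:]]*=" "$file" | tail -n 1 | sed -E 's/^[^=]*=[[:space:]]*//'
}

check_datasource() {
  local conf_dir="$1" lib_dir="$2" props
  props="$conf_dir/datasource.properties"
  echo "driver=$(get_prop "$props" spring.datasource.driver-class-name)"
  echo "url=$(get_prop "$props" spring.datasource.url)"
  echo "user=$(get_prop "$props" spring.datasource.username)"
  # The driver jar is added by hand in step 6; the file name pattern is assumed.
  if ! ls "$lib_dir"/mysql-connector-java-*.jar >/dev/null 2>&1; then
    echo "no mysql-connector-java-*.jar in $lib_dir" >&2
    return 1
  fi
}
```

From the dolphinscheduler-bin directory, `check_datasource conf lib` should print the three values and return 0.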

7: On hadoop102, cd into conf/env and edit the environment variable file dolphinscheduler_env.sh there, then distribute it

    export HADOOP_HOME=/opt/module/hadoop-3.1.3
    export HADOOP_CONF_DIR=/opt/module/hadoop-3.1.3/etc/hadoop
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export HIVE_HOME=/opt/module/hive
    export PATH=$HADOOP_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH
    ######################################
    # Do not configure the lines below; they are this machine's existing environment variables, shown for reference
    #JAVA_HOME
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export PATH=$PATH:$JAVA_HOME/bin
    #HADOOP_HOME
    export HADOOP_HOME=/opt/module/hadoop-3.1.3
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin
    #HIVE_HOME
    HIVE_HOME=/opt/module/hive
    PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin
    export PATH JAVA_HOME HADOOP_HOME HIVE_HOME
    #KAFKA_HOME
    export KAFKA_HOME=/opt/module/kafka
    export PATH=$PATH:$KAFKA_HOME/bin
    ########################################
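This file is copied to every node. A quick check on each node can catch a mismatched JDK or Hadoop directory before any task runs: verify that each path the file exports really exists, and that `java` resolves via the resulting PATH. The sketch below assumes that the four variables set above are the ones that matter. It sources the file in a subshell so your current shell is not modified.

```shell
# Sketch: source an env file in a subshell and verify the paths it sets.
# Assumption: only the four variables used in step 7 are checked.
check_env_file() {
  (
    . "$1" || exit 1
    rc=0
    for var in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR HIVE_HOME; do
      eval "val=\${$var:-}"   # indirect lookup of the variable named $var
      if [ -z "$val" ]; then
        echo "$var is not set"; rc=1
      elif [ ! -d "$val" ]; then
        echo "$var=$val: directory not found"; rc=1
      fi
    done
    # java has to be reachable through the PATH that the file builds.
    command -v java >/dev/null 2>&1 || { echo "java not found on PATH"; rc=1; }
    exit "$rc"
  )
}
```

Run it on each node after distributing. For example, from the dolphinscheduler-bin directory: `check_env_file ./conf/env/dolphinscheduler_env.sh`.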

8: Create a symbolic link for java on all three machines

sudo ln -s /opt/module/jdk1.8.0_212/bin/java /usr/bin/java
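Running `ln -s` a second time fails once /usr/bin/java exists, and an existing link may point at a different JDK. Below is a re-runnable sketch with a hypothetical function name. It creates the link only when needed, and it reports a conflicting link instead of overwriting it.

```shell
# Sketch: create LINK -> TARGET unless an equivalent link already exists.
ensure_link() {
  local target="$1" link="$2"
  if [ -L "$link" ]; then
    # Already a symlink: accept it only if it points at the expected target.
    [ "$(readlink "$link")" = "$target" ] && return 0
    echo "$link points to $(readlink "$link"), expected $target" >&2
    return 1
  elif [ -e "$link" ]; then
    echo "$link exists and is not a symlink; leaving it alone" >&2
    return 1
  fi
  ln -s "$target" "$link"
}
```

From a root shell on each machine: `ensure_link /opt/module/jdk1.8.0_212/bin/java /usr/bin/java`.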

9: Edit the parameters in the one-click deployment config file conf/config/install_config.conf, paying particular attention to the ones below, then distribute it

    #
    # Licensed to the Apache Software Foundation (ASF) under one or more
    # contributor license agreements. See the NOTICE file distributed with
    # this work for additional information regarding copyright ownership.
    # The ASF licenses this file to You under the Apache License, Version 2.0
    # (the "License"); you may not use this file except in compliance with
    # the License. You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #

    # NOTICE : If the following config has special characters in the variable `.*[]^${}\+?|()@#&`, Please escape, for example, `[` escape to `\[`
    # postgresql or mysql
    dbtype="mysql"

    # db config
    # db address and port
    dbhost="192.168.234.129:3306"

    # db username
    username="dol"

    # database name
    dbname="dolphinscheduler"

    # db password
    # NOTICE: if there are special characters, please use the \ to escape, for example, `[` escape to `\[`
    password="dol"

    # zk cluster
    zkQuorum="hadoop102:2181,hadoop103:2181,hadoop104:2181"

    # Note: the target installation path for dolphinscheduler, please not config as the same as the current path (pwd)
    installPath="/ruozedata/app/dolphinscheduler"

    # deployment user
    # Note: the deployment user needs to have sudo privileges and permissions to operate hdfs. If hdfs is enabled, the root directory needs to be created by itself
    deployUser="atguigu"

    # alert config
    # mail server host
    mailServerHost="smtp.qq.com"

    # mail server port
    # note: Different protocols and encryption methods correspond to different ports, when SSL/TLS is enabled, make sure the port is correct.
    mailServerPort="25"

    # sender
    mailSender="505543479@qq.com"

    # user
    mailUser="505543479@qq.com"

    # sender password
    # note: The mail.passwd is email service authorization code, not the email login password.
    mailPassword="zyeeixgwjmuvcafc"

    # TLS mail protocol support
    starttlsEnable="true"

    # SSL mail protocol support
    # only one of TLS and SSL can be in the true state.
    sslEnable="false"

    #note: sslTrust is the same as mailServerHost
    sslTrust="smtp.qq.com"

    # resource storage type: HDFS, S3, NONE
    #resourceStorageType="NONE"

    # if resourceStorageType is HDFS, defaultFS write namenode address, HA you need to put core-site.xml and hdfs-site.xml in the conf directory.
    # if S3, write S3 address, HA, for example: s3a://dolphinscheduler,
    # Note, s3 be sure to create the root directory /dolphinscheduler
    #defaultFS="hdfs://mycluster:8020"

    # if resourceStorageType is S3, the following three configuration is required, otherwise please ignore
    #s3Endpoint="http://192.168.xx.xx:9010"
    #s3AccessKey="xxxxxxxxxx"
    #s3SecretKey="xxxxxxxxxx"

    # if resourcemanager HA is enabled, please set the HA IPs; if resourcemanager is single, keep this value empty
    #yarnHaIps="192.168.xx.xx,192.168.xx.xx"

    # if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single, you only need to replace ds1 to actual resourcemanager hostname
    #singleYarnIp="yarnIp1"

    # resource store on HDFS/S3 path, resource file will store to this hadoop hdfs path, self configuration, please make sure the directory exists on hdfs and have read write permissions. "/dolphinscheduler" is recommended
    resourceUploadPath="/rzdolphinscheduler"

    # who have permissions to create directory under HDFS/S3 root path
    # Note: if kerberos is enabled, please config hdfsRootUser=
    hdfsRootUser="atguigu"

    # kerberos config
    # whether kerberos starts, if kerberos starts, following four items need to config, otherwise please ignore
    #kerberosStartUp="false"
    # kdc krb5 config file path
    #krb5ConfPath="$installPath/conf/krb5.conf"
    # keytab username
    #keytabUserName="hdfs-mycluster@ESZ.COM"
    # username keytab path
    #keytabPath="$installPath/conf/hdfs.headless.keytab"

    # api server port
    apiServerPort="12345"

    # install hosts
    # Note: install the scheduled hostname list. If it is pseudo-distributed, just write a pseudo-distributed hostname
    ips="hadoop102,hadoop103,hadoop104"

    # ssh port, default 22
    # Note: if ssh port is not default, modify here
    sshPort="22"

    # run master machine
    # Note: list of hosts hostname for deploying master
    masters="hadoop102,hadoop103"

    # run worker machine
    # note: need to write the worker group name of each worker, the default value is "default"
    workers="hadoop102:default,hadoop103:default,hadoop104:default"

    # run alert machine
    # note: list of machine hostnames for deploying alert server
    alertServer="hadoop102"

    # run api machine
    # note: list of machine hostnames for deploying api server
    apiServers="hadoop102,hadoop103"

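Every host named in `masters`, `workers`, `alertServer` and `apiServers` should presumably also appear in `ips`, the list of install hosts. install_config.conf consists of plain shell assignments, so a sketch can source it in a subshell and cross-check the lists. The consistency rule is this sketch's reading of the comments above. It is not an official validator.

```shell
# Sketch: check that every role host in install_config.conf is listed in ips.
check_install_config() {
  (
    . "$1" || exit 1
    rc=0
    # workers entries look like host:group; keep only the host part.
    worker_hosts="$(printf '%s\n' "$workers" | tr ',' '\n' | cut -d: -f1)"
    for h in $(printf '%s' "$masters,$apiServers,$alertServer" | tr ',' ' ') $worker_hosts; do
      case ",$ips," in
        *",$h,"*) ;;
        *) echo "$h is used by a role but missing from ips=\"$ips\""; rc=1 ;;
      esac
    done
    exit "$rc"
  )
}
```

From the dolphinscheduler-bin directory: `check_install_config ./conf/config/install_config.conf`.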
If HDFS is used for resource storage, pay attention to the following parameters:

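    # Where resource files used by jobs (e.g. SQL files) are uploaded: HDFS, S3 or NONE.
    # On a single machine, to use the local file system, set HDFS, because HDFS supports the local file system.
    # Choose NONE if you do not need resource uploads. Note: using the local file system does not require deploying Hadoop.
    resourceStorageType="HDFS"
    # To keep uploaded resources on Hadoop when the NameNode has HA enabled, put Hadoop's core-site.xml and hdfs-site.xml
    # into the conf directory under the installation path (for the installPath in this guide, /ruozedata/app/dolphinscheduler/conf)
    # and set the NameNode cluster name here; if the NameNode is not HA, just replace mycluster with the actual IP or hostname.
    defaultFS="hdfs://mycluster:8020"
    # If YARN is not used, keep the default below. If the ResourceManager is HA, set the IPs or hostnames of the
    # active and standby ResourceManager nodes, e.g. "192.168.xx.xx,192.168.xx.xx"; for a single ResourceManager, set yarnHaIps="".
    yarnHaIps="192.168.xx.xx,192.168.xx.xx"
    # If the ResourceManager is HA or YARN is not used, keep the default; for a single ResourceManager,
    # set the real ResourceManager hostname or IP.
    singleYarnIp="yarnIp1"
    # Root path for uploaded resources; HDFS and S3 are supported. When the local file system is used (HDFS supports it),
    # make sure the local directory exists and is readable and writable.
    resourceUploadPath="/data/dolphinscheduler"
    # User with permission to create resourceUploadPath
    hdfsRootUser="hdfs"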
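In the HA case described in the defaultFS comment, the two Hadoop client files have to be copied into DolphinScheduler's conf directory. Below is a small sketch (hypothetical function name) that copies them and fails loudly if either file is missing. Both directory arguments are yours to choose. For this cluster, HADOOP_CONF_DIR from step 7 is /opt/module/hadoop-3.1.3/etc/hadoop.

```shell
# Sketch: copy the Hadoop client configs needed for an HA defaultFS.
copy_hadoop_conf() {
  local hadoop_conf_dir="$1" ds_conf_dir="$2" f
  for f in core-site.xml hdfs-site.xml; do
    if [ ! -f "$hadoop_conf_dir/$f" ]; then
      echo "missing $hadoop_conf_dir/$f" >&2
      return 1
    fi
    cp "$hadoop_conf_dir/$f" "$ds_conf_dir/" || return 1
  done
}
```

For example: `copy_hadoop_conf /opt/module/hadoop-3.1.3/etc/hadoop /ruozedata/app/dolphinscheduler/conf`. The config comments also require resourceUploadPath to exist on HDFS with read/write access. With the HDFS client on PATH, `hdfs dfs -mkdir -p /rzdolphinscheduler` creates the path used in step 9.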

10: One-click deployment

Switch to the atguigu user and, from the dolphinscheduler-bin directory, run:

sh install.sh

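When the script finishes, it starts the following 5 services. Each host runs only the roles assigned to it in install_config.conf. Use the jps command (shipped with the JDK) to check that they are running: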

    MasterServer         ----- master service
    WorkerServer         ----- worker service
    LoggerServer         ----- logger service
    ApiApplicationServer ----- API service
    AlertServer          ----- alert service
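Checking `jps` output by eye on three machines is error-prone. The sketch below reads `jps` output on standard input and reports which of the expected services are missing. By default, it expects all five services listed above. Each host only runs its assigned roles, so pass the subset you expect on that host.

```shell
# Sketch: check jps output (read from stdin) for DolphinScheduler services.
check_ds_services() {
  local running s rc=0
  # With no arguments, expect all five services.
  if [ "$#" -eq 0 ]; then
    set -- MasterServer WorkerServer LoggerServer ApiApplicationServer AlertServer
  fi
  # jps prints "<pid> <main class>"; keep only the class names.
  running="$(awk '{print $2}')"
  for s in "$@"; do
    if printf '%s\n' "$running" | grep -qx "$s"; then
      echo "OK      $s"
    else
      echo "MISSING $s"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, run `jps | check_ds_services` on hadoop102. On hadoop104, which the config above lists only under workers, run `jps | check_ds_services WorkerServer`.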

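After a successful deployment, you can check the logs. They are all stored in the logs directory under the installation path, /ruozedata/app/dolphinscheduler/logs: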

    logs/
    ├── dolphinscheduler-alert-server.log
    ├── dolphinscheduler-master-server.log
    ├── dolphinscheduler-worker-server.log
    ├── dolphinscheduler-api-server.log
    └── dolphinscheduler-logger-server.log

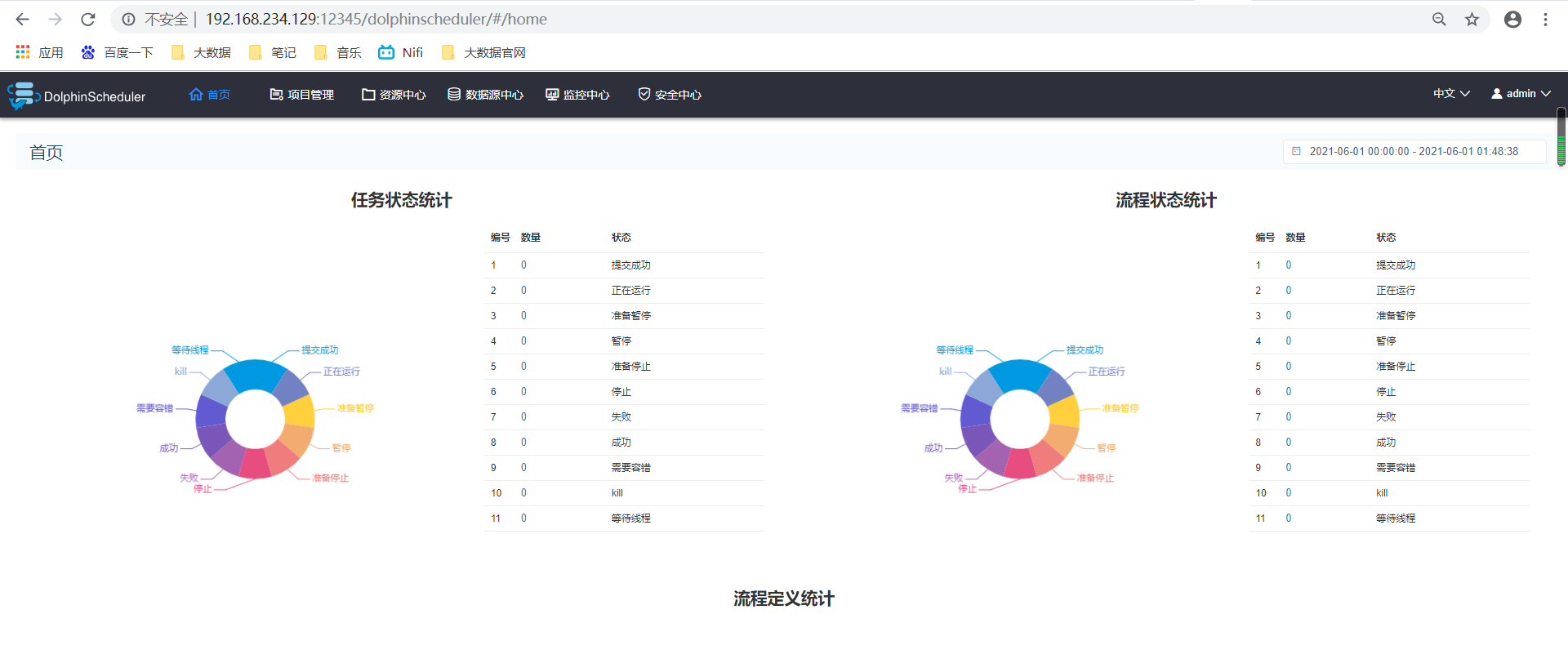
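When a service is missing from `jps`, its log in the directory above is the first place to look. Below is a sketch of a quick per-file count of ERROR lines. It assumes the log level appears literally as `ERROR` in each line.

```shell
# Sketch: count lines containing "ERROR" in each DolphinScheduler log file.
summarize_logs() {
  local log_dir="$1" f n
  for f in "$log_dir"/dolphinscheduler-*.log; do
    [ -f "$f" ] || continue                # the glob matched nothing
    n="$(grep -c 'ERROR' "$f" || true)"    # grep -c exits 1 when the count is 0
    echo "$(basename "$f") $n"
  done
}
```

Pass it the logs directory mentioned above. Then inspect the files with a non-zero count, for example with `tail -n 200`.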

11: Log in

Open the web UI in a browser, replacing the IP with your own API server's address: http://192.168.234.129:12345/dolphinscheduler

Username: admin, password: dolphinscheduler123
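Right after a deployment or restart, the API server may take a little while before the page responds. Below is a sketch, with hypothetical function names, that waits until the API port accepts TCP connections and then prints the UI address. It relies on bash's `/dev/tcp` feature and on coreutils `timeout`.

```shell
# Sketch: wait for the API server port, then print the UI URL.
# Requires bash (for /dev/tcp) and coreutils timeout.
ds_ui_url() {
  # The default port 12345 matches apiServerPort in install_config.conf.
  echo "http://$1:${2:-12345}/dolphinscheduler"
}

wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    # Opening /dev/tcp/HOST/PORT succeeds only if something is listening.
    if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example: `wait_for_port 192.168.234129 12345 && ds_ui_url 192.168.234.129`.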

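12: Start and stop services

Stop all services in the cluster with one command:

sh ./bin/stop-all.sh

Start all services in the cluster with one command:

sh ./bin/start-all.sh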

Start/stop the Master:

    sh ./bin/dolphinscheduler-daemon.sh start master-server
    sh ./bin/dolphinscheduler-daemon.sh stop master-server

Start/stop the Worker:

    sh ./bin/dolphinscheduler-daemon.sh start worker-server
    sh ./bin/dolphinscheduler-daemon.sh stop worker-server

Start/stop the API server:

    sh ./bin/dolphinscheduler-daemon.sh start api-server
    sh ./bin/dolphinscheduler-daemon.sh stop api-server

Start/stop the Logger:

    sh ./bin/dolphinscheduler-daemon.sh start logger-server
    sh ./bin/dolphinscheduler-daemon.sh stop logger-server

Start/stop the Alert server:

    sh ./bin/dolphinscheduler-daemon.sh start alert-server
    sh ./bin/dolphinscheduler-daemon.sh stop alert-server
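The per-service commands above differ only in the action and the service name, so one helper can wrap them. The sketch below assumes that `DS_HOME` is the directory those `./bin/...` commands are run from; it defaults to the installPath from step 9. Unless `DRY_RUN=0`, it only prints the commands. The function name is hypothetical.

```shell
# Sketch: start or stop DolphinScheduler services via dolphinscheduler-daemon.sh.
# Assumption: DS_HOME is the directory the ./bin/... commands are run from.
DS_HOME="${DS_HOME:-/ruozedata/app/dolphinscheduler}"
DRY_RUN="${DRY_RUN:-1}"

ds_service() {
  local action="$1" svc
  case "$action" in
    start|stop) ;;
    *) echo "usage: ds_service start|stop master|worker|api|logger|alert ..." >&2; return 2 ;;
  esac
  shift
  for svc in "$@"; do
    case "$svc" in
      master|worker|api|logger|alert) ;;
      *) echo "unknown service: $svc" >&2; return 2 ;;
    esac
    if [ "$DRY_RUN" = "1" ]; then
      echo "cd $DS_HOME && sh ./bin/dolphinscheduler-daemon.sh $action $svc-server"
    else
      (cd "$DS_HOME" && sh ./bin/dolphinscheduler-daemon.sh "$action" "$svc-server") || return 1
    fi
  done
}
```

For example, `DRY_RUN=0 ds_service stop worker logger` stops both services on a worker host. Running the same call with `start` afterwards amounts to a restart.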