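I. Three officially supported deployment methods

1. minikube

Minikube is a tool that quickly runs a single-node Kubernetes cluster on a local machine. It is intended only for trying out Kubernetes or for day-to-day development. Deployment guide: https://kubernetes.io/docs/setup/minikube/

2. kubeadm

Kubeadm is also a tool. It provides the kubeadm init and kubeadm join commands for quickly deploying a Kubernetes cluster. Deployment guide: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

3. Binary packages

Recommended. Download the release binaries from the official repository and deploy each component by hand to assemble a Kubernetes cluster. Download: https://github.com/kubernetes/kubernetes/releases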

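II. Deploying a Kubernetes cluster with kubeadm

1. Installation requirements

Before you start, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running CentOS 7.x (x86_64)

- Hardware: at least 2 GB of RAM, at least 2 CPUs, and at least 30 GB of disk

- Full network connectivity between all machines in the cluster

- Internet access, which is needed to pull images

- Swap disabled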
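The requirements above can be checked with a small script before installing anything. The following is a minimal sketch, not part of the original procedure: the function names `meets_minimums` and `swap_is_off` are hypothetical, and the thresholds are simply the nominal figures from the list.

```shell
# Hypothetical helper: succeeds only if the given resources meet the minimums
# from the list above (2 GB RAM, 2 CPUs, 30 GB disk).
# Arguments: memory in MB, CPU count, disk size in GB (plain integers).
meets_minimums() {
  mem_mb=$1; cpus=$2; disk_gb=$3
  [ "$mem_mb" -ge 2048 ] && [ "$cpus" -ge 2 ] && [ "$disk_gb" -ge 30 ]
}

# Hypothetical helper: succeeds if no swap device is active.
# Reads a file in /proc/swaps format: one header line, then one line per device.
swap_is_off() {
  awk 'NR > 1 { n++ } END { exit (n > 0) }' "${1:-/proc/swaps}"
}
```

On a node, the inputs could come from `/proc/meminfo` and `nproc`, for example `meets_minimums "$(awk '/MemTotal/ {print int($2/1024)}' /proc/meminfo)" "$(nproc)" 30 && swap_is_off`. Note that the kernel reports slightly less memory than the nominal RAM size, so a machine sold as 2 GB may need a lower threshold.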

2. Prepare the environment

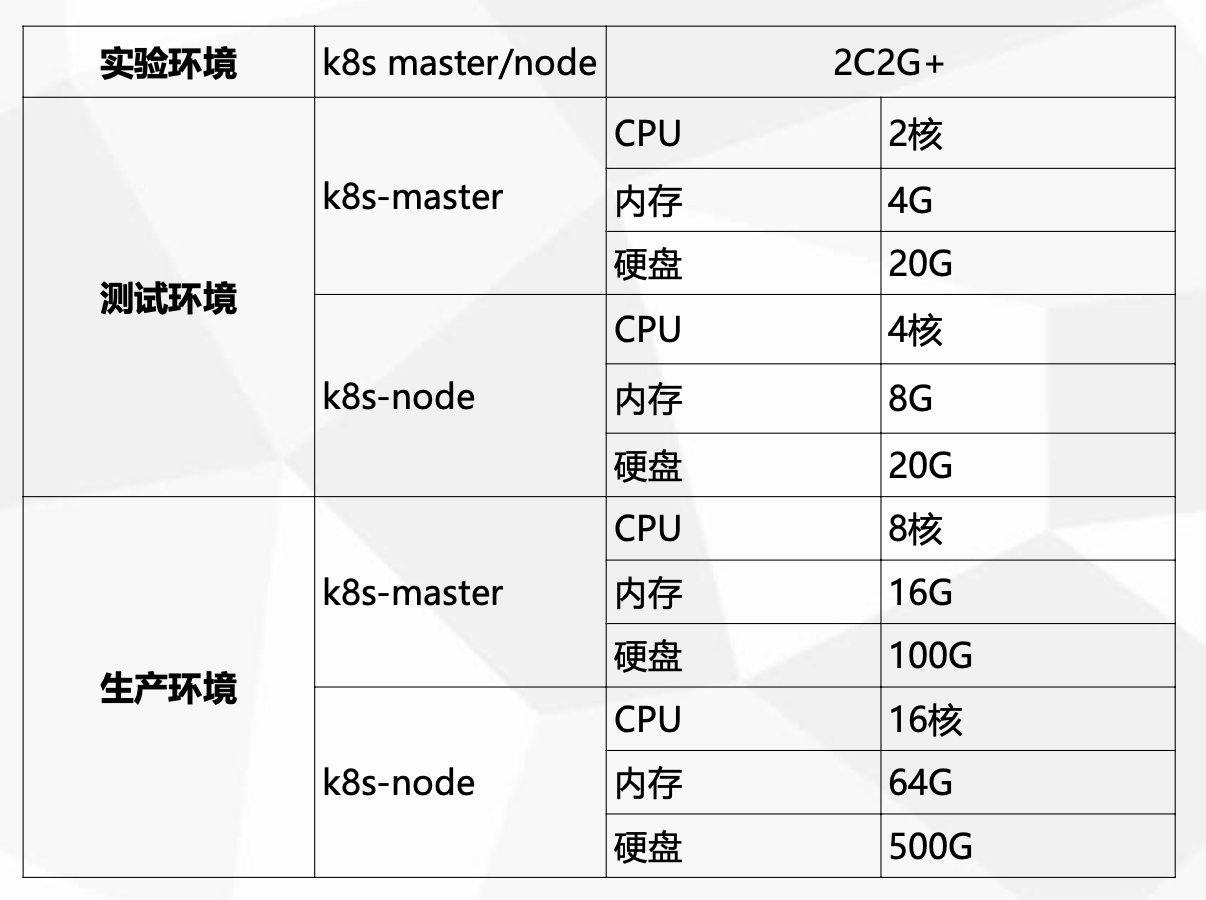

Server plan (roles and IP addresses):

| Role | IP |
|---|---|
| k8s-master | 192.168.31.61 |
| k8s-node1 | 192.168.31.62 |
| k8s-node2 | 192.168.31.63 |

Disable the firewall:

$ systemctl stop firewalld
$ systemctl disable firewalld

Disable SELinux:

$ sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent
$ setenforce 0 # temporary

Disable swap:

$ swapoff -a # temporary
$ vim /etc/fstab # permanent: comment out the swap entry

Set the hostname:

$ hostnamectl set-hostname <hostname>

Add hosts entries on the master:

$ cat >> /etc/hosts << EOF
192.168.31.61 k8s-master
192.168.31.62 k8s-node1
192.168.31.63 k8s-node2
EOF

Pass bridged IPv4 traffic to the iptables chains:

$ cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
$ sysctl --system # apply

Synchronize the time:

$ yum install ntpdate -y
$ ntpdate time.windows.com
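The permanent swap change above is made by hand in an editor. For scripted setups, the same change can be sketched as a text filter. The function name `comment_out_swap` is hypothetical, and the sketch assumes swap entries follow the usual fstab layout in which `swap` appears as a whitespace-separated field.

```shell
# Hypothetical helper: comment out active swap entries in fstab-formatted text.
# Reads from stdin and writes to stdout, so the original file is never touched.
# Lines that are already commented out are left unchanged.
comment_out_swap() {
  sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/'
}
```

A cautious way to use it is `comment_out_swap < /etc/fstab > /tmp/fstab.new`, then review the result with `diff /etc/fstab /tmp/fstab.new` before copying it into place.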

3. Install Docker, kubeadm, and kubelet on all nodes

In the Kubernetes version deployed here (v1.18), the default container runtime (CRI) is Docker, so install Docker first.

1. Install Docker

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
$ yum -y install docker-ce-18.06.1.ce-3.el7
$ systemctl enable docker && systemctl start docker
$ docker --version
Docker version 18.06.1-ce, build e68fc7a

Configure a registry mirror, then restart Docker:

$ cat > /etc/docker/daemon.json << EOF
{"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]}
EOF
$ systemctl restart docker

2. Add the Alibaba Cloud YUM repository

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

3. Install kubeadm, kubelet, and kubectl

Because new versions are released frequently, pin the version explicitly:

$ yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
$ systemctl enable kubelet
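Since all three packages should stay on the same version, the pin can be kept in one place. The sketch below builds the package list from a single version string; `k8s_packages` is a hypothetical helper name, not something provided by yum or Kubernetes.

```shell
# Hypothetical helper: print the three package names pinned to one version,
# so kubelet, kubeadm, and kubectl cannot drift apart.
k8s_packages() {
  version=$1
  echo "kubelet-$version kubeadm-$version kubectl-$version"
}
```

It could then be used as `yum install -y $(k8s_packages 1.18.0)`, which expands to the same command as above.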

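4. Deploy the Kubernetes master

Run the following on 192.168.31.61 (the master).

$ kubeadm init \
  --apiserver-advertise-address=192.168.31.61 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

The command takes a while to finish. When it completes, the related files are generated under /etc/kubernetes.

The default image registry, k8s.gcr.io, is not reachable from mainland China, so the command points to the Alibaba Cloud mirror instead.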
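The Service range and the Pod range passed to kubeadm init should not overlap each other or the node network. As a rough illustration, the check can be done with integer arithmetic in plain shell. All three function names below are hypothetical, and the sketch handles IPv4 only.

```shell
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split a.b.c.d into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: print "first last" (as integers) for a CIDR block.
cidr_range() {
  ip=${1%/*}; bits=${1#*/}
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  last=$(( first | (mask ^ 0xFFFFFFFF) ))
  echo "$first $last"
}

# Hypothetical helper: succeeds if the two CIDR blocks share any address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # a_first a_last b_first b_last
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

With the values used here, `cidrs_overlap 10.96.0.0/12 10.244.0.0/16` fails (no overlap), because 10.96.0.0/12 ends at 10.111.255.255.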

Set up kubectl:

$ mkdir -p $HOME/.kube
$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config
$ kubectl get nodes

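5. Install a Pod network add-on (CNI)

Download the flannel manifest:

$ wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Switch to an image repository that is reachable from mainland China:

$ sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.11.0-amd64#g" kube-flannel.yml

After the change, the image lines read:

image: lizhenliang/flannel:v0.11.0-amd64
image: lizhenliang/flannel:v0.11.0-amd64

Install the Pod network:

$ kubectl apply -f kube-flannel.yml

Notes on the CNI network configuration:

net-conf.json: |
{
"Network": "10.244.0.0/16", # must match the range given to --pod-network-cidr
"Backend": {
"Type": "vxlan" # change this to the network mode you need
}
}

1. flannel (vxlan is a tunnel mode; host-gw is a routing mode and has the best performance)

2. calico (ipip, bgp)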
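Switching the flannel backend means changing the "Type" value in net-conf.json before applying the manifest. The filter below is a minimal sketch of that edit. `set_flannel_backend` is a hypothetical name, and the sketch assumes the manifest contains a `"Type": "..."` entry in the form shown above.

```shell
# Hypothetical helper: rewrite the "Type" value in flannel's net-conf.json.
# Reads the manifest on stdin and writes the modified manifest to stdout.
set_flannel_backend() {
  backend=$1
  sed -E 's/("Type"[[:space:]]*:[[:space:]]*)"[^"]*"/\1"'"$backend"'"/'
}
```

For example, `set_flannel_backend host-gw < kube-flannel.yml > kube-flannel-hostgw.yml` would produce a copy that uses the routing mode. host-gw relies on direct layer-2 connectivity between the nodes, so it is only suitable when all nodes share one subnet.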

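6. Join the Kubernetes nodes

Run the following on 192.168.31.62 and 192.168.31.63 (the nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

$ kubeadm join 192.168.31.61:6443 --token esce21.q6hetwm8si29qxwn \
  --discovery-token-ca-cert-hash sha256:00603a05805807501d7181c3d60b478788408cfe6cedefedb1f97569708be9c5

The default token is valid for 24 hours and cannot be used after it expires. In that case, create a new token as follows: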

$ kubeadm token create

$ kubeadm token list

Output of the commands above:

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

nuja6n.o3jrhsffiqs9swnu 11h 2020-07-15T22:51:51+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

Note down the token: nuja6n.o3jrhsffiqs9swnu

Compute the SHA-256 hash of the CA certificate's public key:

$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Output of the command above:

63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

Note down the hash: 63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

Alternatively, create a new token and print the complete join command in one step:

$ kubeadm token create --print-join-command

Then run the join command on the node:

$ kubeadm join 192.168.31.61:6443 --token nuja6n.o3jrhsffiqs9swnu --discovery-token-ca-cert-hash sha256:63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

Parameters:

--token: the token shown by kubeadm token list, for example nuja6n.o3jrhsffiqs9swnu

--discovery-token-ca-cert-hash: the hash printed by the openssl command, prefixed with sha256:, for example 63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

Reference: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-join/
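When the token and the hash are collected by hand as described above, the join command can be assembled from them. The sketch below also rejects tokens that do not follow the bootstrap token format (six lowercase alphanumeric characters, a dot, then sixteen more). `valid_bootstrap_token` and `build_join_command` are hypothetical helper names.

```shell
# Hypothetical helper: succeed if the argument looks like a bootstrap token,
# i.e. 6 characters, a dot, then 16 characters from [a-z0-9].
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: print the kubeadm join command for the given
# API server endpoint, token, and CA certificate hash (without the sha256: prefix).
build_join_command() {
  endpoint=$1; token=$2; ca_hash=$3
  if ! valid_bootstrap_token "$token"; then
    echo "invalid token format: $token" >&2
    return 1
  fi
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
}
```

Calling `build_join_command 192.168.31.61:6443 nuja6n.o3jrhsffiqs9swnu 63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924` prints the join command shown above.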

7. Test the Kubernetes cluster

Create a pod in the cluster and verify that it runs correctly:

$ kubectl create deployment nginx --image=nginx

$ kubectl expose deployment nginx --port=80 --type=NodePort

$ kubectl get pod,svc

Access URL: http://NodeIP:Port (NodeIP is the IP address of any node; Port is the NodePort shown by kubectl get svc)
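The port to use in the URL is the second number in the PORT(S) column of kubectl get svc, which has the form port:nodePort/protocol. The sketch below extracts it with plain parameter expansion. `node_port_of` is a hypothetical helper, and the value 30744 in the comment is only an illustrative sample, not output from this cluster.

```shell
# Hypothetical helper: extract the NodePort from a PORT(S) value such as
# "80:30744/TCP" (service port, then node port, then protocol).
node_port_of() {
  ports=$1
  ports=${ports#*:}      # drop everything up to and including the first colon
  echo "${ports%%/*}"    # drop the protocol suffix
}
```

kubectl can also return the value directly, for instance with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.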

8. Deploy the Dashboard

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

Access URL: https://NodeIP:30001
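As an alternative to editing the manifest, the same Service change could be applied as a patch. The sketch below only generates the patch document and checks that the port lies in the default NodePort range (30000-32767). `dashboard_nodeport_patch` is a hypothetical helper, and patching is a different technique from the manifest edit the text describes.

```shell
# Hypothetical helper: print a JSON patch body that switches the Dashboard
# Service to NodePort and pins the node port. Fails for ports outside the
# default NodePort range.
dashboard_nodeport_patch() {
  node_port=$1
  if [ "$node_port" -lt 30000 ] || [ "$node_port" -gt 32767 ]; then
    echo "nodePort $node_port is outside the default 30000-32767 range" >&2
    return 1
  fi
  printf '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":%s}]}}\n' "$node_port"
}
```

The output could then be passed to kubectl, for example `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p "$(dashboard_nodeport_patch 30001)"`.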

Note: the Dashboard's default SSL certificate is not trusted, so most browsers refuse to open the page. Currently only Firefox can open it.

To make the Dashboard reachable from other browsers, proceed as follows:

# Binary deployment

# Mind the namespace the Dashboard is deployed in (older releases default to kube-system, newer ones use kubernetes-dashboard)

1. Delete the default secret and create a new one from a self-signed certificate

kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

kubectl create secret generic kubernetes-dashboard-certs \
  --from-file=/opt/kubernetes/ssl/server-key.pem --from-file=/opt/kubernetes/ssl/server.pem -n kubernetes-dashboard

2. Edit the Dashboard manifest and add the two certificate lines under args

args:
  # PLATFORM-SPECIFIC ARGS HERE
  - --auto-generate-certificates
  - --tls-key-file=server-key.pem
  - --tls-cert-file=server.pem

kubectl apply -f kubernetes-dashboard.yaml

# kubeadm deployment

# Mind the namespace the Dashboard is deployed in (older releases default to kube-system, newer ones use kubernetes-dashboard)

1. Delete the default secret and create a new one from an existing certificate

kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

kubectl create secret generic kubernetes-dashboard-certs \
  --from-file=/etc/kubernetes/pki/apiserver.key --from-file=/etc/kubernetes/pki/apiserver.crt -n kubernetes-dashboard

Note: for convenience, this test environment reuses the Kubernetes apiserver certificate for the Dashboard. In production, use a dedicated self-signed SSL certificate instead, generated for example with cfssl or openssl.

2. Edit the Dashboard manifest and add the two certificate lines under args

args:
  # PLATFORM-SPECIFIC ARGS HERE
  - --auto-generate-certificates
  - --tls-key-file=apiserver.key
  - --tls-cert-file=apiserver.crt

kubectl apply -f kubernetes-dashboard.yaml
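The steps above mention openssl as one way to produce a self-signed certificate. A minimal sketch of that step follows. The function name `make_self_signed_cert`, the file names dashboard.key and dashboard.crt, and the 2048-bit RSA key size are all assumptions chosen for illustration, not values from the original procedure.

```shell
# Hypothetical helper: create a self-signed key/certificate pair with openssl.
# Arguments: output directory, certificate common name, validity in days (default 365).
# File names dashboard.key / dashboard.crt are illustrative only.
make_self_signed_cert() {
  out_dir=$1; common_name=$2; days=${3:-365}
  openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$out_dir/dashboard.key" -out "$out_dir/dashboard.crt" \
    -days "$days" -subj "/CN=$common_name" 2>/dev/null
}
```

If these files were used for the secret, the --from-file paths and the --tls-key-file and --tls-cert-file arguments would need to refer to the same file names.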

Create a service account and bind it to the built-in cluster-admin cluster role:

# Create the dashboard-admin account

kubectl create serviceaccount dashboard-admin -n kube-system

# Grant permissions to the dashboard-admin account

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

# Get the token of the dashboard-admin account

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Log in to the Dashboard with the token from the output.
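The describe output contains several fields, and only the value on the token: line is needed for login. A small filter can pull it out. `extract_token` is a hypothetical helper that assumes the field is printed as `token:` followed by whitespace and the value on one line.

```shell
# Hypothetical helper: print only the token value from
# `kubectl describe secrets` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

It would be appended to the command above as a pipe, that is `kubectl describe secrets ... | extract_token`.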

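9. Removing a node from the cluster and adding it back

Scenario: a node was deleted by mistake from an existing Kubernetes cluster and needs to be added back. If the machine is a fresh server, Docker and the Kubernetes base components must be installed on it first.

1. List the nodes and delete a node (on the master)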

[root@k8s-master k8s-yaml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 8d v1.18.0

k8s-node1 Ready <none> 8d v1.18.0

k8s-node2 Ready <none> 8d v1.18.0

[root@k8s01 ~]# kubectl delete nodes k8s-node1

node "k8s-node1" deleted

[root@k8s-master k8s-yaml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 8d v1.18.0

k8s-node2 Ready <none> 8d v1.18.0

2. Clear the cluster state on the deleted node

[root@k8s-node1 ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0725 23:06:48.855827 16215 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

3. Print the join command with a new token on the master

[root@k8s-master k8s-yaml]# kubeadm token create --print-join-command

W0725 23:07:01.816101 51812 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

kubeadm join 192.168.118.138:6443 --token esgw9t.l5iutdzt9zceqj59 --discovery-token-ca-cert-hash sha256:ed8b8602ef2c193d16da8b0e13134c80fda8063b4d144d7b1b62728869f68cc9

4. Add the node back to the cluster

[root@k8s-node1 ~]# kubeadm join 192.168.118.138:6443 --token esgw9t.l5iutdzt9zceqj59 --discovery-token-ca-cert-hash sha256:ed8b8602ef2c193d16da8b0e13134c80fda8063b4d144d7b1b62728869f68cc9

W0725 23:07:27.918381 16230 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8s-node1" could not be reached

[WARNING Hostname]: hostname "k8s-node1": lookup k8s-node1 on 192.168.118.2:53: server misbehaving

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

5. Check the status of the whole cluster

[root@k8s-master k8s-yaml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 8d v1.18.0

k8s-node1 Ready <none> 4m16s v1.18.0

k8s-node2 Ready <none> 8h v1.18.0
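The final check above is done by reading the STATUS column. For scripted use, the same check can be sketched as a filter over the kubectl get nodes output. `all_nodes_ready` is a hypothetical helper that assumes the default column layout with STATUS as the second column.

```shell
# Hypothetical helper: succeed only if every node listed on stdin
# (in `kubectl get nodes` default format) has STATUS exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 && $2 != "Ready" { bad = 1 } END { exit bad + 0 }'
}
```

A typical call would be `kubectl get nodes | all_nodes_ready && echo "cluster healthy"`.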

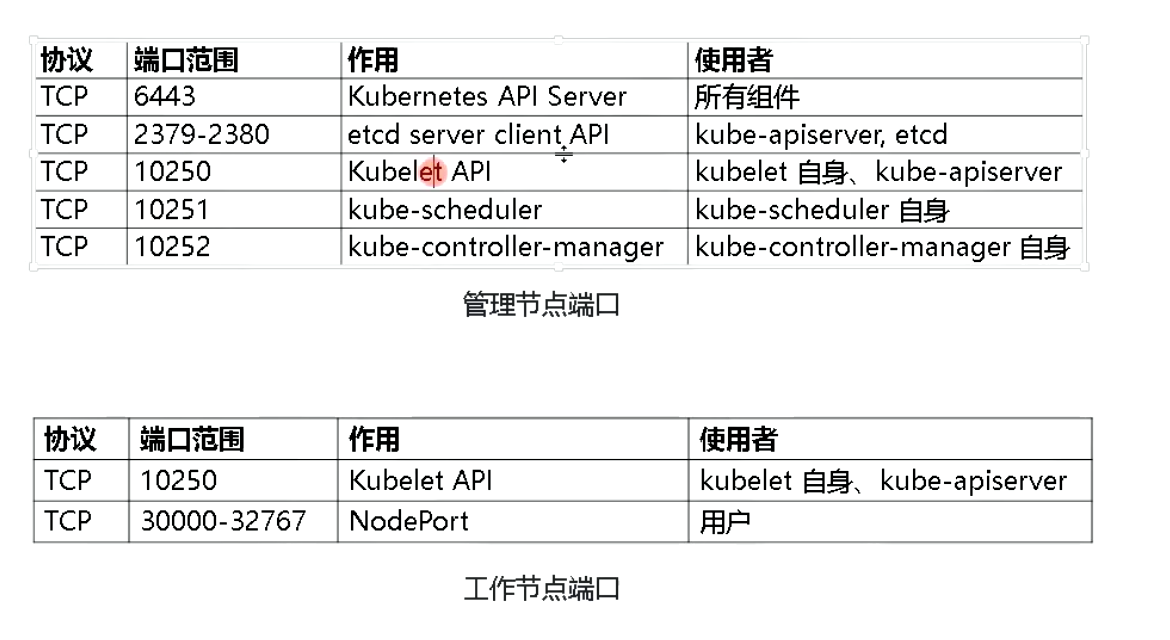

10. Ports exposed by Kubernetes