- I. Prerequisites

- II. Deployment topology

- III. Docker images

- IV. Kubernetes deployment

- V. Initializing the dashboard

- VI. Running a test

I. Prerequisites

Kubernetes > 1.16

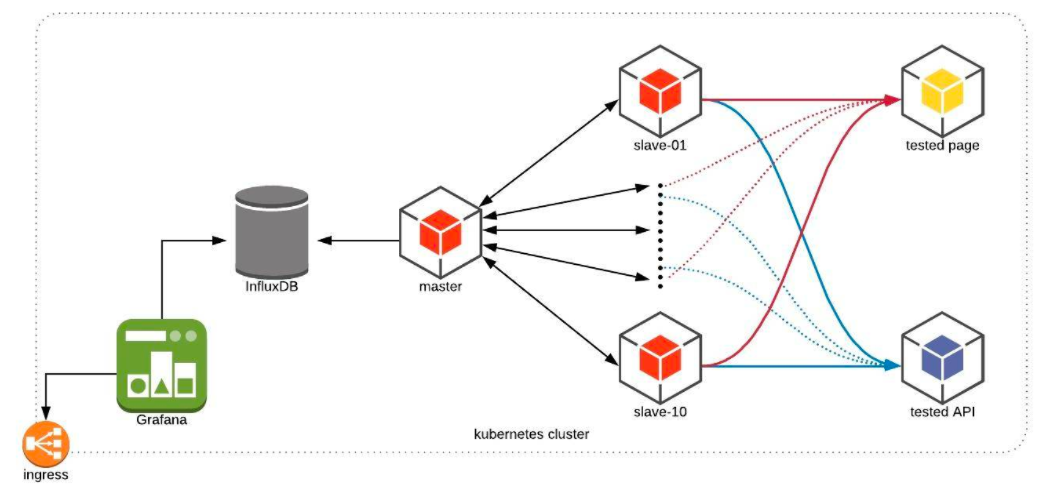

II. Deployment topology

Tests are launched from the master node, which sends the test script to the slave nodes. The slave pods/nodes do the actual load generation.

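Deployment files:

jmeter_cluster_create.sh: prompts for a unique namespace, then creates the namespace and all components (JMeter master, slaves, InfluxDB and Grafana).

Note: before running it, set the number of slave replicas in jmeter_slaves_deploy.yaml. The replica count should normally match the number of worker nodes you have.

jmeter_master_configmap.yaml: application configuration for the JMeter master.

jmeter_master_deploy.yaml: deployment manifest for the JMeter master.

jmeter_slaves_deploy.yaml: deployment manifest for the JMeter slaves.

jmeter_slaves_svc.yaml: service manifest for the JMeter slaves. It is a headless service, so looking up the service name returns the IP address of every slave pod directly instead of a single load-balanced (round-robin) cluster IP. This makes it easy to hand the slave pod IP addresses straight to the JMeter master.

jmeter_influxdb_configmap.yaml: application configuration for the InfluxDB deployment. It configures InfluxDB to expose port 2003 in addition to the default InfluxDB port, so that the graphite storage method can be used. The InfluxDB deployment can therefore serve both JMeter Backend Listener methods (graphite and influxdb).

jmeter_influxdb_deploy.yaml: deployment manifest for InfluxDB.

jmeter_influxdb_svc.yaml: service manifest for InfluxDB.

jmeter_grafana_deploy.yaml: deployment manifest for Grafana.

jmeter_grafana_svc.yaml: service manifest for the Grafana deployment. It uses NodePort by default. When running in a public cloud you can change it to LoadBalancer (and set up a CNAME to get a shorter FQDN).

dashboard.sh: this script automatically creates the following:

(1) an InfluxDB database (jmeter) in the influxdb pod

(2) a data source (jmeterdb) in Grafana

start_test.sh: runs a JMeter test script automatically, without logging in to the JMeter master shell by hand. It asks for the location of the test script, copies it to the JMeter master pod, and starts the test against the JMeter slaves.

jmeter_stop.sh: stops a running test.

GrafanaJMeterTemplate.json: a pre-built JMeter dashboard for Grafana.

Dockerfile-base: builds the JMeter base image.

Dockerfile-master: builds the JMeter master image.

Dockerfile-slave: builds the JMeter slave image.

dockerimages.sh: builds all the Docker images in one go.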

III. Docker images

1. Build the Docker images

Run the script to build the images:

    ./dockerimages.sh

List the images:

    $ docker images

Push an image to the registry:

    $ sudo docker login --username=xxxx registry.cn-beijing.aliyuncs.com
    $ sudo docker tag [ImageId] registry.cn-beijing.aliyuncs.com/7d/jmeter-base:[image version]
    $ sudo docker push registry.cn-beijing.aliyuncs.com/7d/jmeter-base:[image version]
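The commands above push only the base image, while the master and slave images need the same treatment. A minimal sketch that prints the tag and push commands for all three images so they can be reviewed before running; the helper name `print_push_cmds` and the version value are assumptions for illustration, and the repository names are the ones used in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the tag and push commands for the three images.
REGISTRY="registry.cn-beijing.aliyuncs.com/7d"

print_push_cmds() {
    version="$1"   # image version to publish (assumed value supplied by the caller)
    for image in jmeter-base jmeter-master jmeter-slave; do
        echo "docker tag $REGISTRY/$image:latest $REGISTRY/$image:$version"
        echo "docker push $REGISTRY/$image:$version"
    done
}
```

After checking the output, something like `print_push_cmds 5.2.1 | sh` would execute the commands.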

2. Build files

Dockerfile-base (builds the JMeter base image):

    FROM alpine:latest

    LABEL MAINTAINER 7DGroup

    ARG JMETER_VERSION=5.2.1

    # Time zone
    ENV TZ=Asia/Shanghai

    RUN apk update && \
        apk upgrade && \
        apk add --update openjdk8-jre wget tar bash && \
        mkdir /jmeter && cd /jmeter/ && \
        wget https://mirrors.tuna.tsinghua.edu.cn/apache/jmeter/binaries/apache-jmeter-${JMETER_VERSION}.tgz && \
        tar -xzf apache-jmeter-$JMETER_VERSION.tgz && rm apache-jmeter-$JMETER_VERSION.tgz && \
        cd /jmeter/apache-jmeter-$JMETER_VERSION/ && \
        wget -q -O /tmp/JMeterPlugins-Standard-1.4.0.zip https://jmeter-plugins.org/downloads/file/JMeterPlugins-Standard-1.4.0.zip && unzip -n /tmp/JMeterPlugins-Standard-1.4.0.zip && rm /tmp/JMeterPlugins-Standard-1.4.0.zip && \
        wget -q -O /jmeter/apache-jmeter-$JMETER_VERSION/lib/ext/pepper-box-1.0.jar https://github.com/raladev/load/blob/master/JARs/pepper-box-1.0.jar?raw=true && \
        cd /jmeter/apache-jmeter-$JMETER_VERSION/ && \
        wget -q -O /tmp/bzm-parallel-0.7.zip https://jmeter-plugins.org/files/packages/bzm-parallel-0.7.zip && \
        unzip -n /tmp/bzm-parallel-0.7.zip && rm /tmp/bzm-parallel-0.7.zip && \
        ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo "$TZ" > /etc/timezone

    ENV JMETER_HOME /jmeter/apache-jmeter-$JMETER_VERSION/

    ENV PATH $JMETER_HOME/bin:$PATH

    # Main JMeter configuration file
    ADD jmeter.properties $JMETER_HOME/bin/jmeter.properties

Dockerfile-master (builds the JMeter master image):

    FROM registry.cn-beijing.aliyuncs.com/7d/jmeter-base:latest

    MAINTAINER 7DGroup

    EXPOSE 60000

Dockerfile-slave (builds the JMeter slave image):

    FROM registry.cn-beijing.aliyuncs.com/7d/jmeter-base:latest

    MAINTAINER 7DGroup

    EXPOSE 1099 50000

    ENTRYPOINT $JMETER_HOME/bin/jmeter-server \
    -Dserver.rmi.localport=50000 \
    -Dserver_port=1099 \
    -Jserver.rmi.ssl.disable=true

dockerimages.sh (builds all the Docker images in one go):

    #!/bin/bash -e

    docker build --tag="registry.cn-beijing.aliyuncs.com/7d/jmeter-base:latest" -f Dockerfile-base .
    docker build --tag="registry.cn-beijing.aliyuncs.com/7d/jmeter-master:latest" -f Dockerfile-master .
    docker build --tag="registry.cn-beijing.aliyuncs.com/7d/jmeter-slave:latest" -f Dockerfile-slave .

IV. Kubernetes deployment

1. Deploy the components

Run jmeter_cluster_create.sh and enter a unique namespace when prompted:

    ./jmeter_cluster_create.sh

After a short wait, check the state of the pods:

    kubectl get pods -n 7dgroup
    NAME                               READY   STATUS    RESTARTS   AGE
    influxdb-jmeter-584cf69759-j5m85   1/1     Running   2          5m
    jmeter-grafana-6d5b75b7f6-57dxj    1/1     Running   1          5m
    jmeter-master-84bfd5d96d-kthzm     1/1     Running   0          5m
    jmeter-slaves-b5b75757-dxkxz       1/1     Running   0          5m
    jmeter-slaves-b5b75757-n58jw       1/1     Running   0          5m
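Instead of waiting and re-running the pod check by hand, the wait can be scripted with `kubectl wait`. A minimal sketch, assuming a kubectl version that provides `kubectl wait`; the function name `wait_for_pods`, the `KUBECTL` override variable and the 300s default timeout are assumptions for illustration.

```bash
#!/usr/bin/env bash
# KUBECTL can be overridden (for example with "echo") to preview the command.
KUBECTL="${KUBECTL:-kubectl}"

# Block until every pod in the namespace reports the Ready condition,
# or fail once the timeout expires.
wait_for_pods() {
    namespace="$1"
    timeout="${2:-300s}"   # assumed default, adjust to your cluster
    "$KUBECTL" wait --for=condition=Ready pods --all -n "$namespace" --timeout="$timeout"
}
```

For the namespace used in this article the call would be `wait_for_pods 7dgroup`.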

2. Manifests

2.1 Main script

jmeter_cluster_create.sh (creates the namespace and all components: JMeter master, slaves, InfluxDB and Grafana):

    #!/usr/bin/env bash
    # Create multiple Jmeter namespaces on an existing kubernetes cluster
    # Started On January 23, 2018

    working_dir=`pwd`

    echo "checking if kubectl is present"

    if ! hash kubectl 2>/dev/null
    then
        echo "'kubectl' was not found in PATH"
        echo "Kindly ensure that you can access an existing kubernetes cluster via kubectl"
        exit 1
    fi

    kubectl version --short

    echo "Current list of namespaces on the kubernetes cluster:"
    echo
    kubectl get namespaces | grep -v NAME | awk '{print $1}'
    echo

    echo "Enter the name of the new tenant unique name, this will be used to create the namespace"
    read tenant
    echo

    # Check if the namespace exists
    kubectl get namespace $tenant > /dev/null 2>&1
    if [ $? -eq 0 ]
    then
        echo "Namespace $tenant already exists, please select a unique name"
        echo "Current list of namespaces on the kubernetes cluster"
        sleep 2
        kubectl get namespaces | grep -v NAME | awk '{print $1}'
        exit 1
    fi

    echo
    echo "Creating Namespace: $tenant"
    kubectl create namespace $tenant
    echo "Namespace $tenant has been created"
    echo

    echo "Creating Jmeter slave nodes"
    nodes=`kubectl get no | egrep -v "master|NAME" | wc -l`
    echo
    echo "Number of worker nodes on this cluster is " $nodes
    echo
    #echo "Creating $nodes Jmeter slave replicas and service"
    echo
    kubectl create -n $tenant -f $working_dir/jmeter_slaves_deploy.yaml
    kubectl create -n $tenant -f $working_dir/jmeter_slaves_svc.yaml

    echo "Creating Jmeter Master"
    kubectl create -n $tenant -f $working_dir/jmeter_master_configmap.yaml
    kubectl create -n $tenant -f $working_dir/jmeter_master_deploy.yaml

    echo "Creating Influxdb and the service"
    kubectl create -n $tenant -f $working_dir/jmeter_influxdb_configmap.yaml
    kubectl create -n $tenant -f $working_dir/jmeter_influxdb_deploy.yaml
    kubectl create -n $tenant -f $working_dir/jmeter_influxdb_svc.yaml

    echo "Creating Grafana Deployment"
    kubectl create -n $tenant -f $working_dir/jmeter_grafana_deploy.yaml
    kubectl create -n $tenant -f $working_dir/jmeter_grafana_svc.yaml

    echo "Printout Of the $tenant Objects"
    echo
    kubectl get -n $tenant all

    # Save the namespace so the other scripts can read it back
    echo namespace = $tenant > $working_dir/tenant_export
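The last line of jmeter_cluster_create.sh is what ties the scripts together: it saves the namespace in tenant_export, and dashboard.sh, start_test.sh and jmeter_stop.sh read it back with `awk '{print $NF}'`. A small sketch of that round trip, using a temporary file in place of the real tenant_export; the helper name `read_tenant` is an assumption for illustration.

```bash
#!/usr/bin/env bash
# Write the namespace the way jmeter_cluster_create.sh does
# (a temporary file stands in for tenant_export).
export_file="$(mktemp)"
tenant="7dgroup"
echo namespace = $tenant > "$export_file"

# Read it back the way the other scripts do: keep only the last field.
read_tenant() {
    awk '{print $NF}' "$1"
}

read_tenant "$export_file"   # prints 7dgroup
```

Because only the last whitespace-separated field is used, the file must contain a single line ending in the namespace name.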

2.2 jmeter_slaves

jmeter_slaves_deploy.yaml (deployment manifest for the JMeter slaves):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jmeter-slaves
      labels:
        jmeter_mode: slave
    spec:
      replicas: 2
      selector:
        matchLabels:
          jmeter_mode: slave
      template:
        metadata:
          labels:
            jmeter_mode: slave
        spec:
          containers:
          - name: jmslave
            image: registry.cn-beijing.aliyuncs.com/7d/jmeter-slave:latest
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 1099
            - containerPort: 50000
            resources:
              limits:
                cpu: 4000m
                memory: 4Gi
              requests:
                cpu: 500m
                memory: 512Mi
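The manifest hard-codes `replicas: 2`, while jmeter_cluster_create.sh counts the worker nodes but only prints the number. A minimal sketch of how the two could be connected, using the same node filter as that script; the helper names `count_workers` and `scale_cmd` are assumptions for illustration, and on clusters whose control-plane nodes are not labelled "master" the filter would need adjusting.

```bash
#!/usr/bin/env bash
# Count worker nodes from `kubectl get no` output read on stdin,
# with the same filter jmeter_cluster_create.sh uses.
count_workers() {
    grep -Ev "master|NAME" | wc -l | tr -d ' '
}

# Print (not run) the command that would set the slave replica count.
scale_cmd() {
    namespace="$1"
    replicas="$2"
    echo "kubectl scale deployment jmeter-slaves -n $namespace --replicas=$replicas"
}
```

A possible use is `scale_cmd 7dgroup "$(kubectl get no | count_workers)"`, reviewing the printed command before running it.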

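jmeter_slaves_svc.yaml (service manifest for the JMeter slaves):

    apiVersion: v1
    kind: Service
    metadata:
      name: jmeter-slaves-svc
      labels:
        jmeter_mode: slave
    spec:
      clusterIP: None
      ports:
        - port: 1099
          name: first
          targetPort: 1099
        - port: 50000
          name: second
          targetPort: 50000
      # Match the slave pods so the headless service resolves to their IPs
      selector:
        jmeter_mode: slave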

2.3 jmeter_master

jmeter_master_configmap.yaml (application configuration for the JMeter master):

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: jmeter-load-test
      labels:
        app: influxdb-jmeter
    data:
      load_test: |
        #!/bin/bash
        #Script created to invoke jmeter test script with the slave POD IP addresses
        #Script should be run like: ./load_test "path to the test script in jmx format"
        /jmeter/apache-jmeter-*/bin/jmeter -n -t $1 -R `getent ahostsv4 jmeter-slaves-svc | cut -d' ' -f1 | sort -u | awk -v ORS=, '{print $1}' | sed 's/,$//'`
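The backtick pipeline in load_test is what turns the headless service into a list of remote engines. A small sketch of the same pipeline run on sample input, so its effect can be seen without a cluster; the function name `join_slave_ips` is an assumption, and the sample lines only imitate the shape of `getent ahostsv4` output (address first), using the slave IPs from the test log in this article.

```bash
#!/usr/bin/env bash
# Same pipeline as in load_test: keep the first column (the IP),
# drop duplicates, join with commas, strip the trailing comma.
join_slave_ips() {
    cut -d' ' -f1 | sort -u | awk -v ORS=, '{print $1}' | sed 's/,$//'
}

# Sample input: each address is listed several times, once per socket type.
printf '%s\n' \
    '10.100.167.173 STREAM jmeter-slaves-svc' \
    '10.100.167.173 DGRAM' \
    '10.100.113.31 STREAM' \
    '10.100.113.31 DGRAM' | join_slave_ips
# prints 10.100.113.31,10.100.167.173
```

The resulting comma-separated list is the form JMeter expects for its `-R` (remote hosts) option.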

jmeter_master_deploy.yaml (deployment manifest for the JMeter master):

    apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
    kind: Deployment
    metadata:
      name: jmeter-master
      labels:
        jmeter_mode: master
    spec:
      replicas: 1
      selector:
        matchLabels:
          jmeter_mode: master
      template:
        metadata:
          labels:
            jmeter_mode: master
        spec:
          containers:
          - name: jmmaster
            image: registry.cn-beijing.aliyuncs.com/7d/jmeter-master:latest
            imagePullPolicy: IfNotPresent
            command: [ "/bin/bash", "-c", "--" ]
            args: [ "while true; do sleep 30; done;" ]
            volumeMounts:
              - name: loadtest
                mountPath: /load_test
                subPath: "load_test"
            ports:
            - containerPort: 60000
            resources:
              limits:
                cpu: 4000m
                memory: 4Gi
              requests:
                cpu: 500m
                memory: 512Mi
          volumes:
          - name: loadtest
            configMap:
              name: jmeter-load-test

2.4 influxdb

jmeter_influxdb_configmap.yaml (application configuration for InfluxDB):

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: influxdb-config
      labels:
        app: influxdb-jmeter
    data:
      influxdb.conf: |
        [meta]
          dir = "/var/lib/influxdb/meta"

        [data]
          dir = "/var/lib/influxdb/data"
          engine = "tsm1"
          wal-dir = "/var/lib/influxdb/wal"

        # Configure the graphite api
        [[graphite]]
        enabled = true
        bind-address = ":2003" # If not set, is actually set to bind-address.
        database = "jmeter" # store graphite data in this database

jmeter_influxdb_deploy.yaml (deployment manifest for InfluxDB):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: influxdb-jmeter
      labels:
        app: influxdb-jmeter
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: influxdb-jmeter
      template:
        metadata:
          labels:
            app: influxdb-jmeter
        spec:
          containers:
            - image: influxdb
              imagePullPolicy: IfNotPresent
              name: influxdb
              volumeMounts:
              - name: config-volume
                mountPath: /etc/influxdb
              ports:
                - containerPort: 8083
                  name: influx
                - containerPort: 8086
                  name: api
                - containerPort: 2003
                  name: graphite
          volumes:
          - name: config-volume
            configMap:
              name: influxdb-config

jmeter_influxdb_svc.yaml (service manifest for the InfluxDB deployment):

    apiVersion: v1
    kind: Service
    metadata:
      name: jmeter-influxdb
      labels:
        app: influxdb-jmeter
    spec:
      ports:
        - port: 8083
          name: http
          targetPort: 8083
        - port: 8086
          name: api
          targetPort: 8086
        - port: 2003
          name: graphite
          targetPort: 2003
      selector:
        app: influxdb-jmeter

2.5 grafana

jmeter_grafana_deploy.yaml (deployment manifest for Grafana):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jmeter-grafana
      labels:
        app: jmeter-grafana
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jmeter-grafana
      template:
        metadata:
          labels:
            app: jmeter-grafana
        spec:
          containers:
          - name: grafana
            image: grafana/grafana:5.2.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 3000
              protocol: TCP
            env:
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_USERS_ALLOW_ORG_CREATE
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              value: Admin
            - name: GF_SERVER_ROOT_URL
              # If you're only using the API Server proxy, set this value instead:
              # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
              value: /

jmeter_grafana_svc.yaml (service manifest for the Grafana deployment):

    apiVersion: v1
    kind: Service
    metadata:
      name: jmeter-grafana
      labels:
        app: jmeter-grafana
    spec:
      ports:
        - port: 3000
          targetPort: 3000
      selector:
        app: jmeter-grafana
      type: NodePort
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/service-weight: 'jmeter-grafana: 100'
      name: jmeter-grafana-ingress
    spec:
      rules:
      # Layer-7 host name
      - host: grafana-jmeter.7d.com
        http:
          paths:
          # Context path
          - path: /
            backend:
              serviceName: jmeter-grafana
              servicePort: 3000

V. Initializing the dashboard

1. Run the dashboard script

    $ ./dashboard.sh

Check that the services are deployed:

    $ kubectl get svc -n 7dgroup
    NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
    jmeter-grafana      NodePort    10.96.6.201    <none>        3000:31801/TCP               10m
    jmeter-influxdb     ClusterIP   10.96.111.60   <none>        8083/TCP,8086/TCP,2003/TCP   10m
    jmeter-slaves-svc   ClusterIP   None           <none>        1099/TCP,50000/TCP           10m

Grafana is now reachable at http://<any node IP>:31801/
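The NodePort (31801 in the output above) is assigned by the cluster and will differ between installations. A minimal sketch that looks it up and builds the Grafana URL; the function name `grafana_url` and the `KUBECTL` override variable are assumptions for illustration, and the node IP has to be supplied by the caller.

```bash
#!/usr/bin/env bash
# KUBECTL can be overridden, which also makes the function easy to try without a cluster.
KUBECTL="${KUBECTL:-kubectl}"

# Build the Grafana URL from the NodePort assigned to the jmeter-grafana service.
grafana_url() {
    namespace="$1"
    node_ip="$2"   # IP address of any cluster node
    port="$("$KUBECTL" get svc jmeter-grafana -n "$namespace" -o jsonpath='{.spec.ports[0].nodePort}')"
    echo "http://$node_ip:$port/"
}
```

For example, `grafana_url 7dgroup <node IP>` would print the address to open in a browser.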

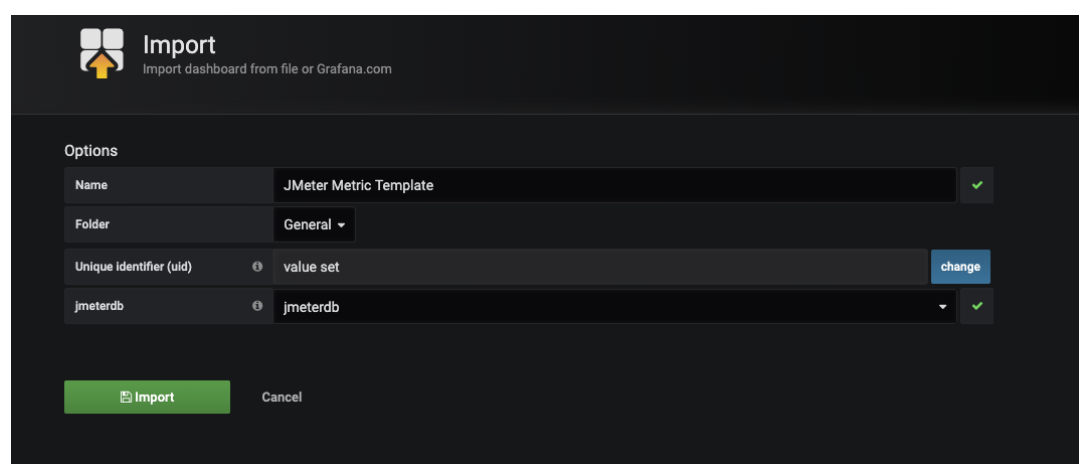

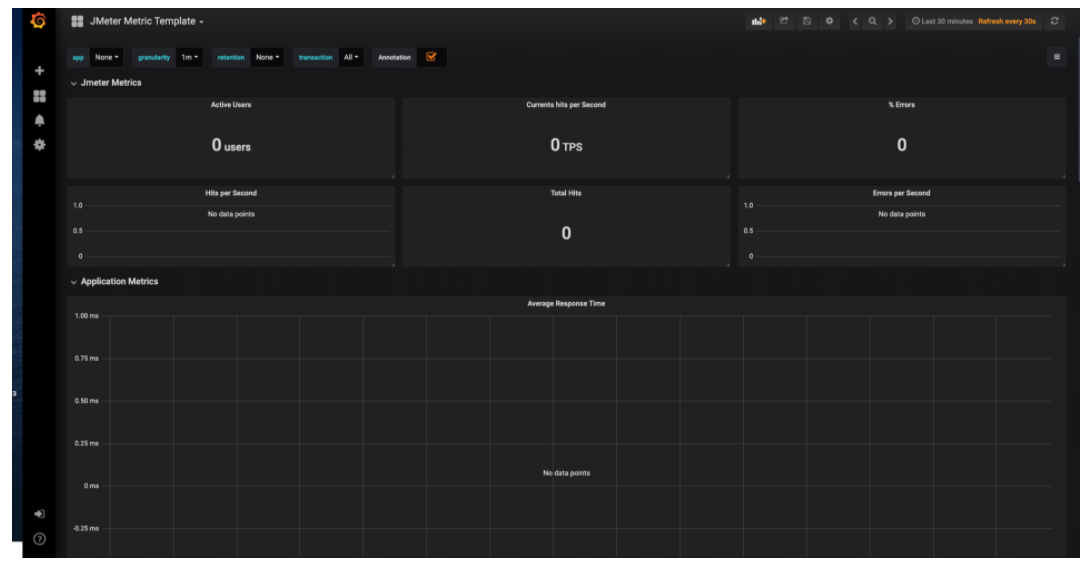

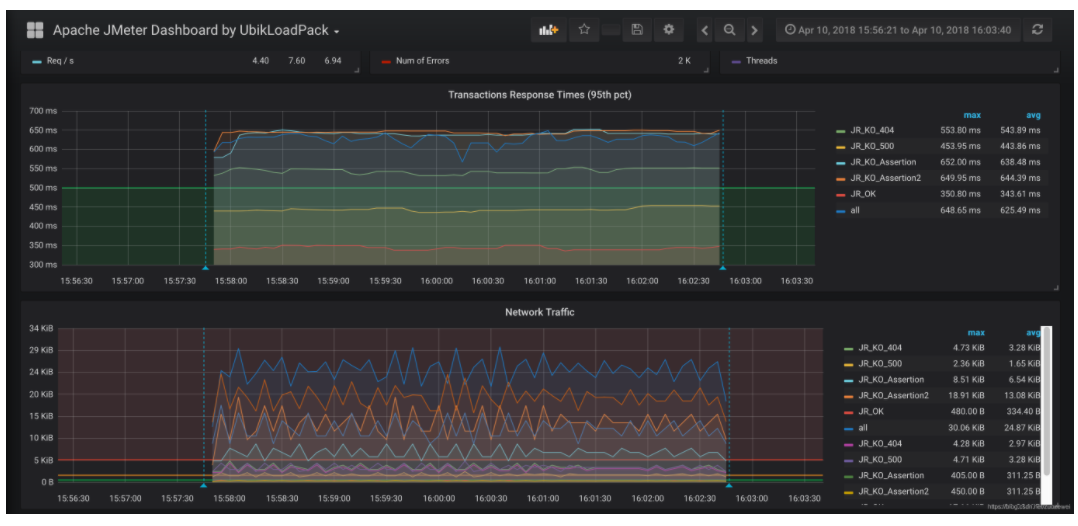

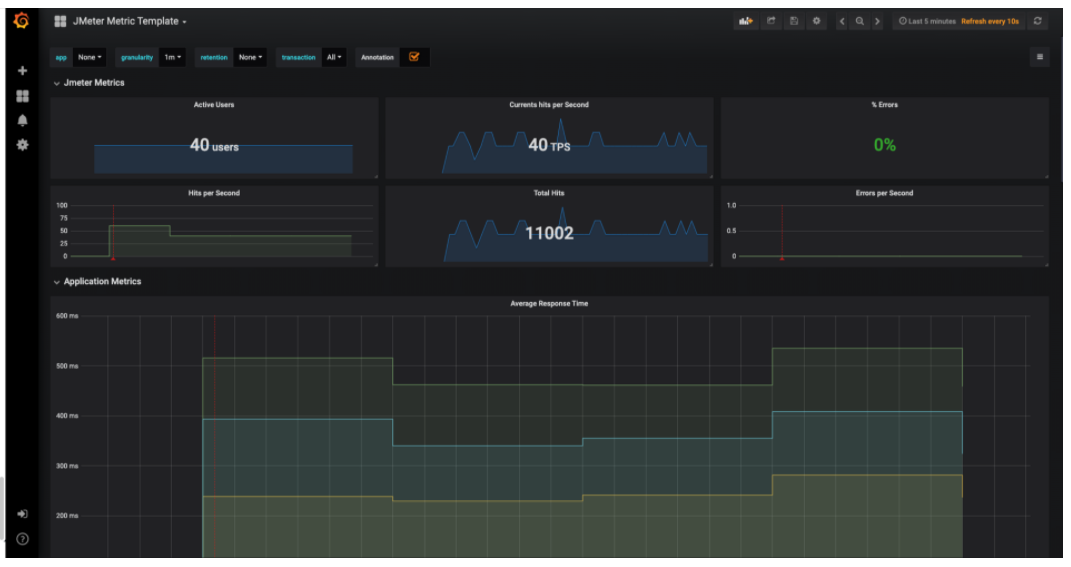

最后,我们在 grafana 导入 dashborad 模版:

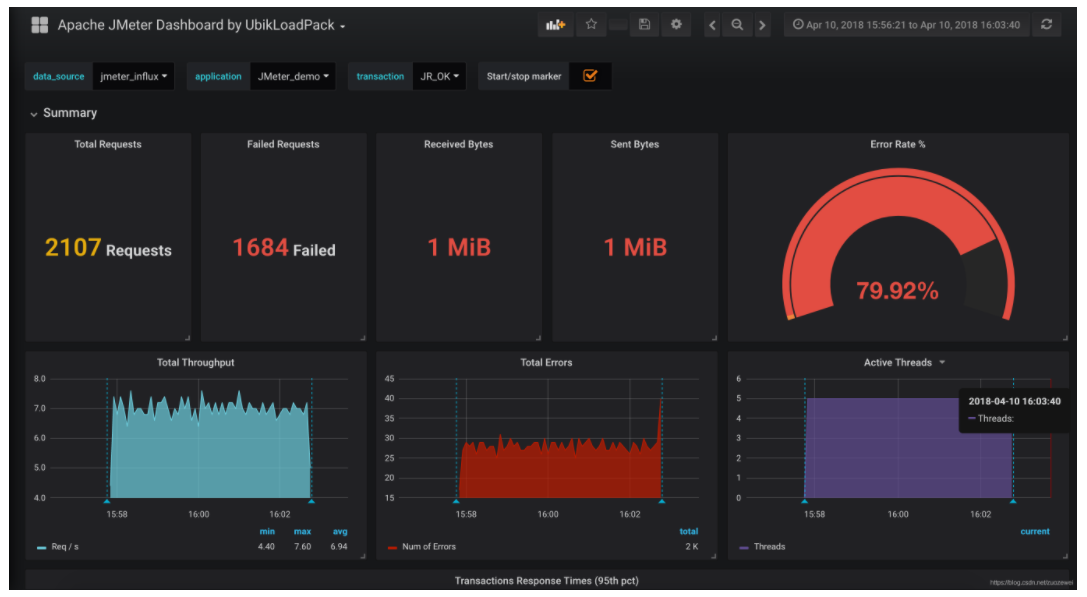

如果你不喜欢这个模版,也可以导入热门模版:5496

2. Script listing

dashboard.sh automatically creates the following:

- (1) an InfluxDB database (jmeter) in the influxdb pod

- (2) a data source (jmeterdb) in Grafana

    #!/usr/bin/env bash

    working_dir=`pwd`

    # Get namespace variable
    tenant=`awk '{print $NF}' $working_dir/tenant_export`

    ## Create jmeter database automatically in Influxdb
    echo "Creating Influxdb jmeter Database"

    ##Wait until Influxdb Deployment is up and running
    ##influxdb_status=`kubectl get po -n $tenant | grep influxdb-jmeter | awk '{print $2}' | grep Running

    influxdb_pod=`kubectl get po -n $tenant | grep influxdb-jmeter | awk '{print $1}'`
    kubectl exec -ti -n $tenant $influxdb_pod -- influx -execute 'CREATE DATABASE jmeter'

    ## Create the influxdb datasource in Grafana
    echo "Creating the Influxdb data source"
    grafana_pod=`kubectl get po -n $tenant | grep jmeter-grafana | awk '{print $1}'`

    ## Make load test script in Jmeter master pod executable
    # Get Master pod details
    master_pod=`kubectl get po -n $tenant | grep jmeter-master | awk '{print $1}'`
    kubectl exec -ti -n $tenant $master_pod -- cp -r /load_test /jmeter/load_test
    kubectl exec -ti -n $tenant $master_pod -- chmod 755 /jmeter/load_test

    ##kubectl cp $working_dir/influxdb-jmeter-datasource.json -n $tenant $grafana_pod:/influxdb-jmeter-datasource.json

    kubectl exec -ti -n $tenant $grafana_pod -- curl 'http://admin:admin@127.0.0.1:3000/api/datasources' -X POST -H 'Content-Type: application/json;charset=UTF-8' --data-binary '{"name":"jmeterdb","type":"influxdb","url":"http://jmeter-influxdb:8086","access":"proxy","isDefault":true,"database":"jmeter","user":"admin","password":"admin"}'
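dashboard.sh leaves its "wait until Influxdb is up" step commented out, so the `CREATE DATABASE` call can fail if the pod is not ready yet. One way to cover that gap is a generic retry wrapper; this is a sketch under assumptions, not part of the original scripts, and the name `retry` and its argument order are made up for illustration.

```bash
#!/usr/bin/env bash
# Run a command until it succeeds, at most <attempts> times,
# sleeping <delay> seconds between tries. Returns 1 if every try fails.
retry() {
    attempts="$1"
    delay="$2"
    shift 2
    n=1
    while ! "$@"; do
        [ "$n" -ge "$attempts" ] && return 1
        n=$((n + 1))
        sleep "$delay"
    done
}
```

In dashboard.sh it could wrap the database creation, for example `retry 30 5 kubectl exec -n $tenant $influxdb_pod -- influx -execute 'CREATE DATABASE jmeter'`, which is safe to repeat because creating an existing database is a no-op in InfluxDB 1.x.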

VI. Running a test

1. Run the script

A test script is required. This example uses web-test.jmx:

    $ ./start_test.sh

The test output looks like this:

    $ ./start_test.sh
    Enter path to the jmx file web-test.jmx
    ''
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/jmeter/apache-jmeter-5.0/lib/log4j-slf4j-impl-2.11.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/jmeter/apache-jmeter-5.0/lib/ext/pepper-box-1.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Jul 25, 2020 11:30:58 AM java.util.prefs.FileSystemPreferences$1 run
    INFO: Created user preferences directory.
    Creating summariser <summary>
    Created the tree successfully using web-test.jmx
    Configuring remote engine: 10.100.113.31
    Configuring remote engine: 10.100.167.173
    Starting remote engines
    Starting the test @ Sat Jul 25 11:30:59 UTC 2020 (1595676659540)
    Remote engines have been started
    Waiting for possible Shutdown/StopTestNow/Heapdump message on port 4445
    summary + 803 in 00:00:29 = 27.5/s Avg: 350 Min: 172 Max: 1477 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary + 1300 in 00:00:29 = 45.3/s Avg: 367 Min: 172 Max: 2729 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 2103 in 00:00:58 = 36.4/s Avg: 361 Min: 172 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:31 = 45.4/s Avg: 342 Min: 160 Max: 2145 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 3503 in 00:01:29 = 39.5/s Avg: 353 Min: 160 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:31 = 45.2/s Avg: 352 Min: 169 Max: 2398 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 4903 in 00:02:00 = 41.0/s Avg: 353 Min: 160 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:30 = 46.8/s Avg: 344 Min: 151 Max: 1475 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 6303 in 00:02:30 = 42.1/s Avg: 351 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1200 in 00:00:28 = 43.5/s Avg: 354 Min: 163 Max: 2018 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 7503 in 00:02:57 = 42.3/s Avg: 351 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1300 in 00:00:30 = 43.7/s Avg: 456 Min: 173 Max: 2401 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 8803 in 00:03:27 = 42.5/s Avg: 367 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:31 = 44.9/s Avg: 349 Min: 158 Max: 2128 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 10203 in 00:03:58 = 42.8/s Avg: 364 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:32 = 44.3/s Avg: 351 Min: 166 Max: 1494 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 11603 in 00:04:30 = 43.0/s Avg: 363 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:30 = 46.9/s Avg: 344 Min: 165 Max: 2075 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 13003 in 00:05:00 = 43.4/s Avg: 361 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1300 in 00:00:28 = 46.0/s Avg: 352 Min: 159 Max: 1486 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 14303 in 00:05:28 = 43.6/s Avg: 360 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 1400 in 00:00:31 = 45.6/s Avg: 339 Min: 163 Max: 2042 Err: 0 (0.00%) Active: 40 Started: 40 Finished: 0
    summary = 15703 in 00:05:58 = 43.8/s Avg: 358 Min: 151 Max: 2729 Err: 0 (0.00%)
    summary + 494 in 00:00:07 = 69.0/s Avg: 350 Min: 171 Max: 1499 Err: 0 (0.00%) Active: 0 Started: 40 Finished: 40
    summary = 16197 in 00:06:06 = 44.3/s Avg: 358 Min: 151 Max: 2729 Err: 0 (0.00%)
    Tidying up remote @ Sat Jul 25 11:37:09 UTC 2020 (1595677029361)
    ... end of run
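In this output the `summary +` lines report each interval and the `summary =` lines report running totals, so the last `summary =` line is the overall result. A small sketch that pulls the headline numbers out of that line; the function name `parse_summary` and the output field names are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Read JMeter console output on stdin and report the totals
# from the last cumulative "summary =" line.
parse_summary() {
    awk '$1 == "summary" && $2 == "=" {
        sub("/s", "", $7)   # turn "44.3/s" into "44.3"
        printf "samples=%s throughput=%s avg_ms=%s errors=%s\n", $3, $7, $9, $15
    }' | tail -n 1
}

echo 'summary = 16197 in 00:06:06 = 44.3/s Avg: 358 Min: 151 Max: 2729 Err: 0 (0.00%)' | parse_summary
# prints samples=16197 throughput=44.3 avg_ms=358 errors=0
```

Piping a saved console log through `parse_summary` gives a one-line result that is easy to compare between runs.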

2. Script listings

start_test.sh (runs a JMeter test script automatically, without logging in to the JMeter master shell by hand; it asks for the location of the test script, copies it to the JMeter master pod, and starts the test against the JMeter slaves):

```bash
#!/usr/bin/env bash
# Script created to launch Jmeter tests directly from the current terminal without accessing the jmeter master pod.
# It requires that you supply the path to the jmx file
# After execution, test script jmx file may be deleted from the pod itself but not locally.

working_dir="$(pwd)"

# Get namespace variable
tenant=`awk '{print $NF}' "$working_dir/tenant_export"`

# Take the jmx path from the first argument, or ask for it
jmx="$1"
[ -n "$jmx" ] || read -p 'Enter path to the jmx file ' jmx

if [ ! -f "$jmx" ];
then
    echo "Test script file was not found in PATH"
    echo "Kindly check and input the correct file path"
    exit 1
fi

test_name="$(basename "$jmx")"

# Get master pod details
master_pod=`kubectl get po -n $tenant | grep jmeter-master | awk '{print $1}'`

# Copy the test script into the master pod
kubectl cp "$jmx" -n $tenant "$master_pod:/$test_name"

# Start the JMeter load test
kubectl exec -ti -n $tenant $master_pod -- /bin/bash /load_test "$test_name"
```

jmeter_stop.sh (stops a running test):

```bash
#!/usr/bin/env bash
# Script written to stop a running jmeter master test
# Kindly ensure you have the necessary kubeconfig

working_dir=`pwd`

# Get namespace variable
tenant=`awk '{print $NF}' $working_dir/tenant_export`

master_pod=`kubectl get po -n $tenant | grep jmeter-master | awk '{print $1}'`

# The glob is expanded inside the pod, so the path does not depend on the JMeter version
kubectl -n $tenant exec -it $master_pod -- bash -c "/jmeter/apache-jmeter-*/bin/stoptest.sh"
```

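VII. Summary

Problems with traditional JMeter:

When the required concurrency exceeds what a single node can deliver, configuring and maintaining a multi-node environment is complicated;

With the default configuration, several tests cannot run in parallel. The configuration has to be changed to start extra processes;

It is hard to meet the need for elastic scaling of test resources in cloud environments.

What Kubernetes-JMeter changes:

Load-generating nodes are installed with a single command;

Multiple projects and multiple tests can share one pool of test resources in parallel, as long as the maximum concurrency allows. Kubernetes also provides management features such as RBAC and namespaces, so several users can share one cluster with resource limits enforced, which improves resource utilization;

With Kubernetes HPA, load-generating nodes can be started and released automatically according to the load.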
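As a rough illustration of the HPA point, `kubectl autoscale` can attach an autoscaler to the jmeter-slaves deployment. This is only a sketch under assumptions: the standard HPA created this way reacts to CPU utilization rather than to JMeter thread counts, the thresholds are placeholders, and the helper name `autoscale_cmd` is made up. The helper prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Print (not run) a kubectl autoscale command for the slave deployment.
autoscale_cmd() {
    namespace="$1"
    min="$2"   # minimum number of slave pods
    max="$3"   # maximum number of slave pods
    cpu="$4"   # target average CPU utilization in percent (placeholder value)
    echo "kubectl autoscale deployment jmeter-slaves -n $namespace --min=$min --max=$max --cpu-percent=$cpu"
}
```

Note that load_test resolves the slave IPs once, when a test starts, so pods added while a test is running would only be picked up by the next run.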

Source code:

https://github.com/zuozewei/blog-example/tree/master/Kubernetes/k8s-jmeter-cluster