Understanding the ReplicationController

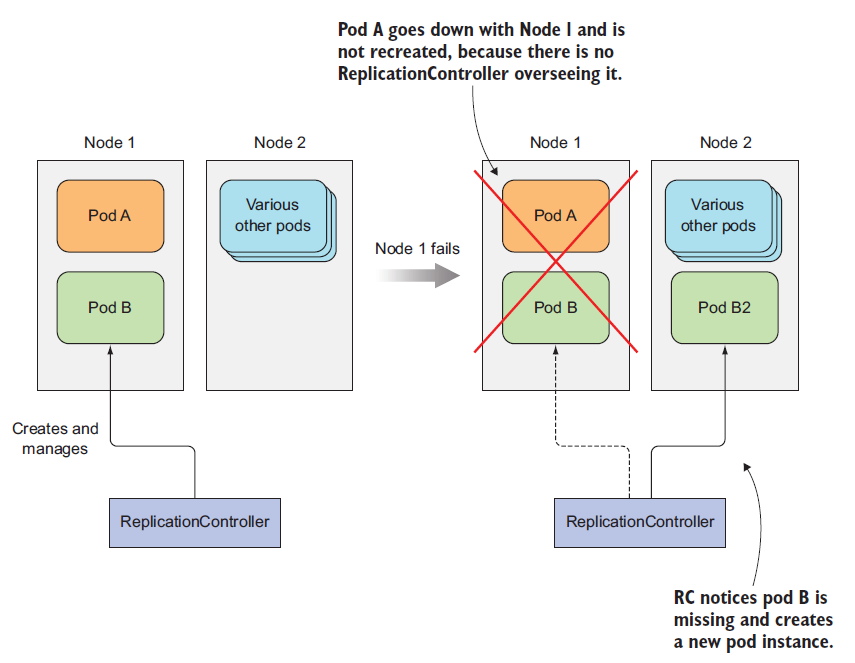

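A ReplicationController (RC for short) is a Kubernetes resource that ensures its pods are always kept running. If a pod disappears for any reason, for example because its node drops out of the cluster or because the pod is evicted from the node, the ReplicationController notices the missing pod and creates a replacement.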

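Consider what happens when a node running two pods suddenly goes offline. Pod A was created directly, so it is an unmanaged pod, while pod B is managed by a ReplicationController. After the node fails, the ReplicationController creates a new pod (pod B2) to replace the missing pod B. Pod A is lost for good, because nothing is responsible for recreating it.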

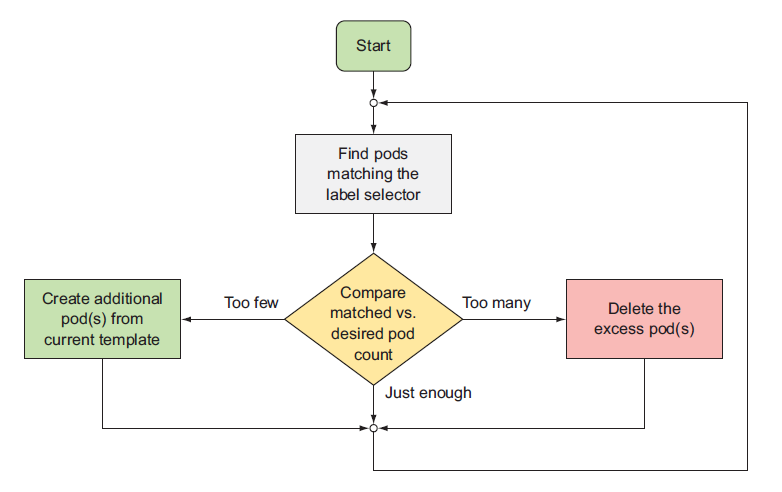

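The ReplicationController continuously monitors the list of running pods and makes sure that the number of pods of the matching "type" equals the desired count. If too few pods are running, it creates new replicas from the pod template. If too many are running, it deletes the surplus replicas.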
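The reconciliation described above comes down to comparing two numbers. The Python sketch below is a simplified model, not the actual controller code. The names `reconcile` and `ReconcileAction` are hypothetical, and the sketch assumes the caller already knows which pods match the selector.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReconcileAction:
    to_create: int = 0                                   # pods to create from the template
    to_delete: List[str] = field(default_factory=list)   # surplus pods to delete


def reconcile(desired: int, matching_pods: List[str]) -> ReconcileAction:
    """Compare the desired replica count with the pods matched by the selector."""
    diff = desired - len(matching_pods)
    if diff > 0:
        # Too few pods: create the missing replicas from the pod template.
        return ReconcileAction(to_create=diff)
    if diff < 0:
        # Too many pods: delete the surplus. This sketch simply drops the last
        # ones in the list; the real controller ranks the candidates first.
        return ReconcileAction(to_delete=matching_pods[diff:])
    # Exactly the desired number: nothing to do.
    return ReconcileAction()


print(reconcile(3, ["myapp-rc-2nh88"]))      # ReconcileAction(to_create=2, to_delete=[])
print(reconcile(3, ["a", "b", "c", "d"]))    # ReconcileAction(to_create=0, to_delete=['d'])
```

The real controller re-runs this comparison whenever it observes a change to its pods or to the RC itself. A deleted pod or a lost node therefore shows up as a positive difference on the next pass.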

How a ReplicationController works

A ReplicationController has three main parts:

- label selector: determines which pods are in the ReplicationController's scope

- replica count: specifies how many pods should be running

- pod template: used to create new pod replicas
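An RC's label selector is equality-based. A pod is in scope when it carries every `key=value` pair of the selector, and any extra labels on the pod are ignored.

Here is a minimal Python sketch of the three parts and of that matching rule. `RCModel` and `selector_matches` are hypothetical names, the pod template is reduced to its labels, and the pod names `pod-a`, `pod-b` and `pod-c` are made up.

```python
from dataclasses import dataclass
from typing import Dict, List

Labels = Dict[str, str]


def selector_matches(selector: Labels, labels: Labels) -> bool:
    """True if every key=value pair of the selector is present on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass
class RCModel:
    selector: Labels         # label selector: which pods are in scope
    replicas: int            # replica count: how many pods should run
    template_labels: Labels  # pod template (only its labels are modelled here)

    def pods_in_scope(self, pods: Dict[str, Labels]) -> List[str]:
        """Names of the pods this RC counts, sorted for stable output."""
        return sorted(n for n, l in pods.items() if selector_matches(self.selector, l))


rc = RCModel(selector={"app": "myapp", "type": "rc"}, replicas=3,
             template_labels={"app": "myapp", "type": "rc"})
pods = {
    "pod-a": {"app": "myapp", "type": "rc"},
    "pod-b": {"app": "myapp", "type": "rc", "release": "beta"},  # extra label is fine
    "pod-c": {"app": "myapp"},                                   # missing "type"
}
print(rc.pods_in_scope(pods))  # ['pod-a', 'pod-b']
```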

[root@master01 ~]# kubectl explain ReplicationController
KIND:     ReplicationController
VERSION:  v1

DESCRIPTION:
     ReplicationController represents the configuration of a replication
     controller.

FIELDS:
   apiVersion   <string>
     APIVersion defines the versioned schema of this representation of an
     object. Servers should convert recognized schemas to the latest internal
     value, and may reject unrecognized values. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

   kind   <string>
     Kind is a string value representing the REST resource this object
     represents. Servers may infer this from the endpoint the client submits
     requests to. Cannot be updated. In CamelCase. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

   metadata   <Object>
     If the Labels of a ReplicationController are empty, they are defaulted to
     be the same as the Pod(s) that the replication controller manages. Standard
     object's metadata. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

   spec   <Object>
     Spec defines the specification of the desired behavior of the replication
     controller. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

   status   <Object>
     Status is the most recently observed status of the replication controller.
     This data may be out of date by some window of time. Populated by the
     system. Read-only. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

[root@master01 ~]# kubectl explain ReplicationController.spec
KIND:     ReplicationController
VERSION:  v1

RESOURCE: spec <Object>

DESCRIPTION:
     Spec defines the specification of the desired behavior of the replication
     controller. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

     ReplicationControllerSpec is the specification of a replication controller.

FIELDS:
   minReadySeconds   <integer>
     Minimum number of seconds for which a newly created pod should be ready
     without any of its container crashing, for it to be considered available.
     Defaults to 0 (pod will be considered available as soon as it is ready)

   replicas   <integer>
     Replicas is the number of desired replicas. This is a pointer to
     distinguish between explicit zero and unspecified. Defaults to 1. More info:
     https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller#what-is-a-replicationcontroller

   selector   <map[string]string>
     Selector is a label query over pods that should match the Replicas count.
     If Selector is empty, it is defaulted to the labels present on the Pod
     template. Label keys and values that must match in order to be controlled
     by this replication controller, if empty defaulted to labels on Pod
     template. More info:
     https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#label-selectors

   template   <Object>
     Template is the object that describes the pod that will be created if
     insufficient replicas are detected. This takes precedence over a
     TemplateRef. More info:
     https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller#pod-template
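The `explain` output above documents several defaults:

- `replicas` defaults to 1.
- `minReadySeconds` defaults to 0.
- An empty `selector` is defaulted to the pod template's labels.
- Empty RC labels are defaulted to the labels of the managed pods (per the `metadata` description).

The sketch below models those rules on a plain dict, taking "the pods' labels" to mean the template's labels. `apply_rc_defaults` is a hypothetical helper; in a real cluster the API server does this defaulting.

```python
import copy
from typing import Any, Dict


def apply_rc_defaults(rc: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of an RC manifest with the documented defaults filled in."""
    rc = copy.deepcopy(rc)  # leave the caller's manifest untouched
    spec = rc.setdefault("spec", {})
    template_labels = spec.get("template", {}).get("metadata", {}).get("labels", {})

    # replicas: "Defaults to 1"; an explicit 0 must be kept, hence the None check.
    if spec.get("replicas") is None:
        spec["replicas"] = 1
    # minReadySeconds: "Defaults to 0".
    spec.setdefault("minReadySeconds", 0)
    # selector: "If Selector is empty, it is defaulted to the labels present on the Pod template."
    if not spec.get("selector"):
        spec["selector"] = dict(template_labels)
    # metadata.labels: empty RC labels default to the pods' labels (modelled as the template's).
    metadata = rc.setdefault("metadata", {})
    if not metadata.get("labels"):
        metadata["labels"] = dict(template_labels)
    return rc


minimal = {"spec": {"template": {"metadata": {"labels": {"app": "myapp"}}}}}
print(apply_rc_defaults(minimal)["spec"]["selector"])  # {'app': 'myapp'}
```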

Create the RC

cat > myapp-rc.yaml <<EOF
apiVersion: v1
kind: ReplicationController
metadata:
  name: myapp-rc
  labels:
    app: myapp
    type: rc
spec:
  replicas: 3
  selector:
    app: myapp
    type: rc
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: rc
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80
EOF

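About the YAML file above:

- apiVersion: v1

- kind: ReplicationController

- spec.replicas: the number of pod replicas to run; defaults to 1

- spec.selector: the label selector the RC uses to pick the pods it controls

- spec.template: the pod definition used earlier, except that apiVersion and kind are no longer needed

- spec.template.metadata.labels: these pod labels must match spec.selector so that the RC controls the pods created from this template. Every key/value in the selector must appear among these labels; in this example the two are identical.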
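The matching rule in the last bullet can be checked before running kubectl apply. The sketch below does three things:

- rebuilds the same manifest as a Python dict;
- reports any selector entries the template labels do not satisfy;
- prints the manifest as JSON, which kubectl also accepts.

The helpers `build_myapp_rc` and `selector_mismatches` are hypothetical. The API server performs its own validation and, as far as I know, rejects an RC whose selector does not match its template labels.

```python
import json
from typing import Any, Dict


def build_myapp_rc() -> Dict[str, Any]:
    """The myapp-rc manifest from myapp-rc.yaml, expressed as a dict."""
    labels = {"app": "myapp", "type": "rc"}
    return {
        "apiVersion": "v1",
        "kind": "ReplicationController",
        "metadata": {"name": "myapp-rc", "labels": dict(labels)},
        "spec": {
            "replicas": 3,
            "selector": dict(labels),
            "template": {
                "metadata": {"name": "myapp-pod", "labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": "myapp",
                        "image": "ikubernetes/myapp:v1",
                        "ports": [{"name": "http", "containerPort": 80}],
                    }],
                },
            },
        },
    }


def selector_mismatches(rc: Dict[str, Any]) -> Dict[str, str]:
    """Selector entries not satisfied by the template labels (an empty dict means OK)."""
    selector = rc["spec"]["selector"]
    template_labels = rc["spec"]["template"]["metadata"]["labels"]
    return {k: v for k, v in selector.items() if template_labels.get(k) != v}


rc = build_myapp_rc()
assert not selector_mismatches(rc), selector_mismatches(rc)
print(json.dumps(rc, indent=2))
```

Saving that output as, say, myapp-rc.json (a hypothetical file name) and running kubectl apply -f myapp-rc.json should be equivalent to applying the YAML file.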

kubectl apply -f myapp-rc.yaml

[root@master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-rc-2nh88 1/1 Running 0 43s 10.244.186.204 node03 <none> <none>

myapp-rc-dl8px 1/1 Running 0 43s 10.244.196.139 node01 <none> <none>

myapp-rc-vhbm7 1/1 Running 0 43s 10.244.140.71 node02 <none> <none>

View the RC

[root@master01 ~]# kubectl get rc

NAME DESIRED CURRENT READY AGE

myapp-rc 3 3 3 3m59s

View the RC's details

[root@master01 ~]# kubectl describe rc myapp-rc

Name: myapp-rc

Namespace: default

Selector: app=myapp,type=rc

Labels: app=myapp

type=rc

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"ReplicationController","metadata":{"annotations":{},"labels":{"app":"myapp","type":"rc"},"name":"myapp-rc","nam...

Replicas: 3 current / 3 desired

Pods Status: 3 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=myapp

type=rc

Containers:

myapp:

Image: ikubernetes/myapp:v1

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 4m47s replication-controller Created pod: myapp-rc-dl8px

Normal SuccessfulCreate 4m47s replication-controller Created pod: myapp-rc-2nh88

Normal SuccessfulCreate 4m46s replication-controller Created pod: myapp-rc-vhbm7

Modify the RC (change replicas from 3 to 5 and apply the file again)

vim myapp-rc.yaml

....

replicas: 5

kubectl apply -f myapp-rc.yaml

[root@master01 ~]# kubectl get rc

NAME DESIRED CURRENT READY AGE

myapp-rc 5 5 5 7m41s

[root@master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-rc-2nh88 1/1 Running 0 7m50s 10.244.186.204 node03 <none> <none>

myapp-rc-dl8px 1/1 Running 0 7m50s 10.244.196.139 node01 <none> <none>

myapp-rc-f4qzt 1/1 Running 0 21s 10.244.186.205 node03 <none> <none>

myapp-rc-rqs74 1/1 Running 0 21s 10.244.196.140 node01 <none> <none>

myapp-rc-vhbm7 1/1 Running 0 7m50s 10.244.140.71 node02 <none> <none>

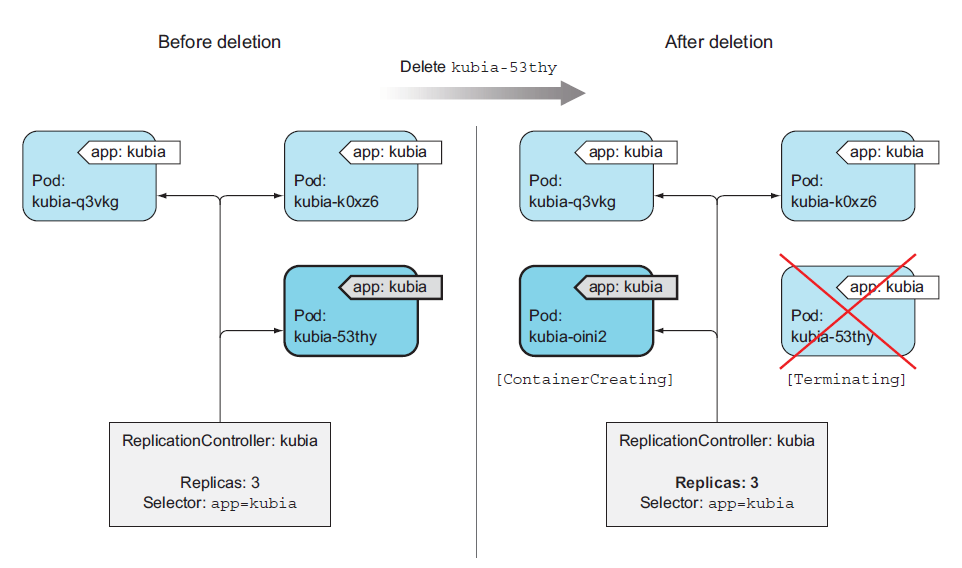

Delete one of the RC's pods

[root@master01 ~]# kubectl delete pod myapp-rc-2nh88

pod "myapp-rc-2nh88" deleted

[root@master01 ~]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

myapp-rc-2nh88 1/1 Running 0 10m

myapp-rc-dl8px 1/1 Running 0 10m

myapp-rc-f4qzt 1/1 Running 0 3m2s

myapp-rc-rqs74 1/1 Running 0 3m2s

myapp-rc-vhbm7 1/1 Running 0 10m

myapp-rc-2nh88 1/1 Terminating 0 10m

myapp-rc-29rwv 0/1 Pending 0 0s

myapp-rc-29rwv 0/1 Pending 0 0s

myapp-rc-29rwv 0/1 ContainerCreating 0 0s

myapp-rc-29rwv 0/1 ContainerCreating 0 1s

myapp-rc-29rwv 1/1 Running 0 2s
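The replacement pod gets a fresh name (myapp-rc-29rwv) because the RC creates pods with a generated name: the RC name, a hyphen, and a 5-character random suffix. The sketch below models that naming together with the replacement step.

The suffix alphabet is, to my knowledge, the one used by Kubernetes' random-string helper, but treat it as an assumption. `generate_pod_name` and `replace_deleted` are hypothetical names.

```python
import random
import re
from typing import Set

# Assumed suffix alphabet: no vowels and no 0/1/3 (which resemble vowels),
# reportedly to avoid forming words. Every suffix in this article fits it.
ALPHANUMS = "bcdfghjklmnpqrstvwxz2456789"


def generate_pod_name(rc_name: str, rng: random.Random) -> str:
    """Mimic generateName: '<rc-name>-' followed by a 5-character random suffix."""
    return rc_name + "-" + "".join(rng.choice(ALPHANUMS) for _ in range(5))


def replace_deleted(rc_name: str, desired: int, pods: Set[str],
                    deleted: str, rng: random.Random) -> Set[str]:
    """Remove the deleted pod, then create new pods until the count is restored."""
    remaining = set(pods) - {deleted}
    while len(remaining) < desired:
        remaining.add(generate_pod_name(rc_name, rng))  # a name clash just loops again
    return remaining


before = {"myapp-rc-2nh88", "myapp-rc-dl8px", "myapp-rc-f4qzt",
          "myapp-rc-rqs74", "myapp-rc-vhbm7"}
after = replace_deleted("myapp-rc", 5, before, "myapp-rc-2nh88", random.Random(0))
print(sorted(after - before))  # one new, randomly named pod
```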

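Simulate a node failure

Take node03's network interface offline:

[root@node03 ~]# ifconfig eth0 down

Watch whether the pods that were on node03 are replaced on other nodes: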

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready master 44h v1.16.4

master02 Ready master 44h v1.16.4

master03 Ready master 44h v1.16.4

node01 Ready node 44h v1.16.4

node02 Ready node 44h v1.16.4

node03 NotReady node 44h v1.16.4

[root@master01 ~]# kubectl get pod -w -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-rc-29rwv 1/1 Running 0 8m19s 10.244.186.206 node03 <none> <none>

myapp-rc-dl8px 1/1 Running 0 19m 10.244.196.139 node01 <none> <none>

myapp-rc-f4qzt 1/1 Running 0 11m 10.244.186.205 node03 <none> <none>

myapp-rc-rqs74 1/1 Running 0 11m 10.244.196.140 node01 <none> <none>

myapp-rc-vhbm7 1/1 Running 0 19m 10.244.140.71 node02 <none> <none>

myapp-rc-f4qzt 1/1 Terminating 0 14m 10.244.186.205 node03 <none> <none>

myapp-rc-29rwv 1/1 Terminating 0 11m 10.244.186.206 node03 <none> <none>

myapp-rc-tsgjs 0/1 Pending 0 0s <none> <none> <none> <none>

myapp-rc-tsgjs 0/1 Pending 0 0s <none> node02 <none> <none>

myapp-rc-nz9sj 0/1 Pending 0 0s <none> <none> <none> <none>

myapp-rc-nz9sj 0/1 Pending 0 0s <none> node01 <none> <none>

myapp-rc-tsgjs 0/1 ContainerCreating 0 0s <none> node02 <none> <none>

myapp-rc-nz9sj 0/1 ContainerCreating 0 0s <none> node01 <none> <none>

myapp-rc-nz9sj 0/1 ContainerCreating 0 1s <none> node01 <none> <none>

myapp-rc-tsgjs 0/1 ContainerCreating 0 1s <none> node02 <none> <none>

myapp-rc-tsgjs 1/1 Running 0 2s 10.244.140.72 node02 <none> <none>

myapp-rc-nz9sj 1/1 Running 0 2s 10.244.196.141 node01 <none> <none>

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-rc-dl8px 1/1 Running 0 23m 10.244.196.139 node01 <none> <none>

myapp-rc-nz9sj 1/1 Running 0 95s 10.244.196.141 node01 <none> <none>

myapp-rc-rqs74 1/1 Running 0 16m 10.244.196.140 node01 <none> <none>

myapp-rc-tsgjs 1/1 Running 0 95s 10.244.140.72 node02 <none> <none>

myapp-rc-vhbm7 1/1 Running 0 23m 10.244.140.71 node02 <none> <none>
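What happened on node03 can be modelled in a few lines. Pods on a node that is no longer Ready stop counting once they are evicted, and the RC creates replacements on healthy nodes.

This Python sketch uses the pod-to-node placement from the first watch listing above. `replacements_needed` is a hypothetical helper. It ignores the eviction delay: the node03 pods were only marked Terminating after a while, because the control plane waits for a timeout before evicting pods from an unreachable node.

```python
from typing import Dict, List, Tuple


def replacements_needed(desired: int, pod_nodes: Dict[str, str],
                        node_ready: Dict[str, bool]) -> Tuple[List[str], int]:
    """Return (pods lost with their NotReady node, number of new pods to create)."""
    lost = sorted(pod for pod, node in pod_nodes.items() if not node_ready.get(node, False))
    healthy = len(pod_nodes) - len(lost)
    return lost, max(desired - healthy, 0)


pod_nodes = {
    "myapp-rc-29rwv": "node03",
    "myapp-rc-dl8px": "node01",
    "myapp-rc-f4qzt": "node03",
    "myapp-rc-rqs74": "node01",
    "myapp-rc-vhbm7": "node02",
}
node_ready = {"node01": True, "node02": True, "node03": False}
print(replacements_needed(5, pod_nodes, node_ready))
# (['myapp-rc-29rwv', 'myapp-rc-f4qzt'], 2)
```

The two replacements match the transcript: myapp-rc-tsgjs and myapp-rc-nz9sj were started on node02 and node01.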

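Moving pods into or out of a ReplicationController's scope

Pods created by a ReplicationController are not permanently tied to it. At any moment, a ReplicationController manages exactly the pods that match its label selector. By changing a pod's labels, you can add it to or remove it from the ReplicationController's scope; a pod can even be moved from one ReplicationController to another.

If you change a pod's labels so that it no longer matches the ReplicationController's label selector, the pod becomes just like any manually created pod: nothing manages it any more. If the node running that pod fails, the pod is not rescheduled. Keep in mind, too, that as soon as you change the pod's labels, the ReplicationController sees that one of its pods is missing and starts a new pod to replace it.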
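The effect of each kind of label change on scope can be checked with the same equality-based rule. In this sketch, `relabel` is a hypothetical helper that mimics kubectl label:

- `key=value` adds a label;
- `key=value --overwrite` changes an existing one;
- `key-` removes one.

The selector is the one from myapp-rc. The sketch does not model kubectl's refusal to change an existing value without --overwrite.

```python
from typing import Dict, Iterable, Optional

Labels = Dict[str, str]
SELECTOR: Labels = {"app": "myapp", "type": "rc"}


def in_scope(labels: Labels, selector: Labels = SELECTOR) -> bool:
    """Equality-based selector: every selector pair must be present on the pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def relabel(labels: Labels, set_labels: Optional[Labels] = None,
            remove: Iterable[str] = ()) -> Labels:
    """Return new labels: drop keys in `remove`, then add/overwrite `set_labels`."""
    removed = set(remove)
    result = {k: v for k, v in labels.items() if k not in removed}
    result.update(set_labels or {})
    return result


original = {"app": "myapp", "type": "rc"}
print(in_scope(relabel(original, {"release": "beta"})))  # True: extra label, still managed
print(in_scope(relabel(original, remove=["type"])))      # False: like `type-`
print(in_scope(relabel(original, {"app": "myapp01"})))  # False: like `app=myapp01 --overwrite`
```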

Adding a label

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-dl8px 1/1 Running 0 30m app=myapp,type=rc

myapp-rc-nz9sj 1/1 Running 0 8m39s app=myapp,type=rc

myapp-rc-rqs74 1/1 Running 0 23m app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 8m39s app=myapp,type=rc

myapp-rc-vhbm7 1/1 Running 0 30m app=myapp,type=rc

[root@master01 ~]# kubectl label pod myapp-rc-dl8px release=beta

pod/myapp-rc-dl8px labeled

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-dl8px 1/1 Running 0 31m app=myapp,release=beta,type=rc

myapp-rc-nz9sj 1/1 Running 0 9m33s app=myapp,type=rc

myapp-rc-rqs74 1/1 Running 0 24m app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 9m33s app=myapp,type=rc

myapp-rc-vhbm7 1/1 Running 0 31m app=myapp,type=rc

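After adding a label to one of the pods, listing all pods again shows the same five pods as before. From the ReplicationController's point of view nothing has changed: the pod still carries app=myapp and type=rc, so it still matches the selector.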

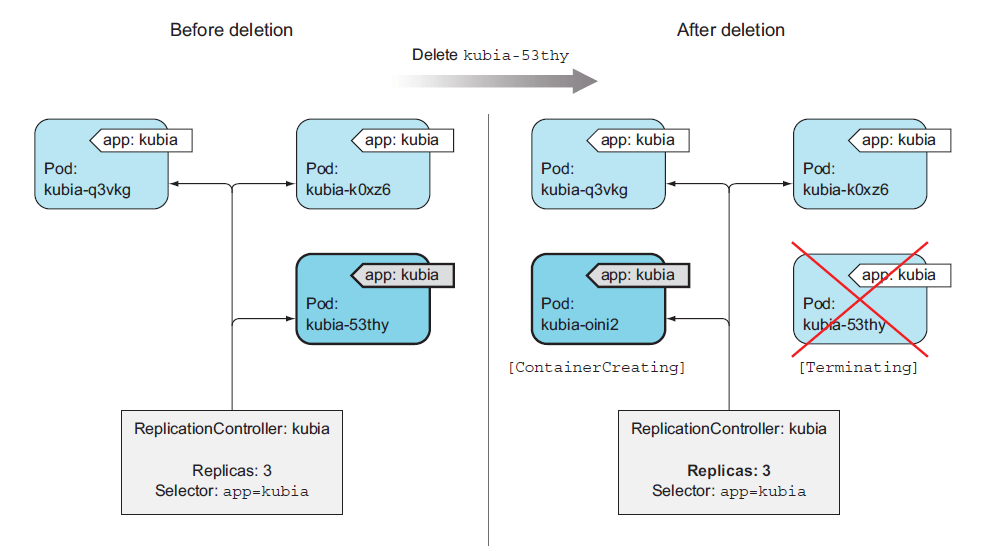

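Removing a label

Now remove a label. The pod no longer matches the ReplicationController's label selector, which leaves only 4 matching pods, so the ReplicationController starts a new pod to bring the count back to 5: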

[root@master01 ~]# kubectl label pod myapp-rc-nz9sj type-

pod/myapp-rc-nz9sj labeled

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-dl8px 1/1 Running 0 33m app=myapp,release=beta,type=rc

myapp-rc-nz9sj 1/1 Running 0 11m app=myapp

myapp-rc-rqs74 1/1 Running 0 26m app=myapp,type=rc

myapp-rc-rtm8c 1/1 Running 0 2s app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 11m app=myapp,type=rc

myapp-rc-vhbm7 1/1 Running 0 33m app=myapp,type=rc

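Changing a label

Next, change a label's value. With app=myapp01 the pod no longer matches the selector either, so again only 4 matching pods remain and the ReplicationController starts a new pod to bring the count back to 5: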

[root@master01 ~]# kubectl label pod myapp-rc-tsgjs app=myapp01 --overwrite

pod/myapp-rc-tsgjs labeled

[root@master01 ~]# kubectl get pod --show-labels -w

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-dl8px 1/1 Running 0 38m app=myapp,release=beta,type=rc

myapp-rc-nz9sj 1/1 Running 0 16m app=myapp

myapp-rc-rqs74 1/1 Running 0 30m app=myapp,type=rc

myapp-rc-rtm8c 1/1 Running 0 4m39s app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 16m app=myapp,type=rc

myapp-rc-vhbm7 1/1 Running 0 38m app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 16m app=myapp01,type=rc

myapp-rc-tsgjs 1/1 Running 0 16m app=myapp01,type=rc

myapp-rc-27gd6 0/1 Pending 0 0s app=myapp,type=rc

myapp-rc-27gd6 0/1 Pending 0 0s app=myapp,type=rc

myapp-rc-27gd6 0/1 ContainerCreating 0 0s app=myapp,type=rc

myapp-rc-27gd6 0/1 ContainerCreating 0 1s app=myapp,type=rc

myapp-rc-27gd6 1/1 Running 0 3s app=myapp,type=rc

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-27gd6 1/1 Running 0 66s app=myapp,type=rc

myapp-rc-dl8px 1/1 Running 0 40m app=myapp,release=beta,type=rc

myapp-rc-nz9sj 1/1 Running 0 17m app=myapp

myapp-rc-rqs74 1/1 Running 0 32m app=myapp,type=rc

myapp-rc-rtm8c 1/1 Running 0 6m26s app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 17m app=myapp01,type=rc

myapp-rc-vhbm7 1/1 Running 0 40m app=myapp,type=rc
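Replaying the three label operations of this section against the pod list shows why the RC ended up with seven pods, five of them managed. This is a simplified simulation with a few assumptions:

- The starting labels come from the listings above.
- `replay` is a hypothetical helper.
- New pods get the template labels, which equal the selector in myapp-rc.
- New pods get placeholder names (`new-1`, `new-2`) instead of random suffixes.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]
SELECTOR: Labels = {"app": "myapp", "type": "rc"}
DESIRED = 5


def matches(labels: Labels) -> bool:
    return all(labels.get(k) == v for k, v in SELECTOR.items())


def replay(pods: Dict[str, Labels],
           ops: List[Tuple[str, Labels, List[str]]]) -> Dict[str, Labels]:
    """Apply (pod, labels_to_set, keys_to_remove) ops; after each, let the RC top up."""
    pods = {name: dict(labels) for name, labels in pods.items()}
    created = 0
    for name, to_set, to_remove in ops:
        for key in to_remove:
            pods[name].pop(key, None)
        pods[name].update(to_set)
        # Reconcile step: create pods from the template until DESIRED match again.
        while sum(matches(l) for l in pods.values()) < DESIRED:
            created += 1
            pods[f"new-{created}"] = dict(SELECTOR)
    return pods


start = {n: {"app": "myapp", "type": "rc"} for n in
         ["myapp-rc-dl8px", "myapp-rc-nz9sj", "myapp-rc-rqs74",
          "myapp-rc-tsgjs", "myapp-rc-vhbm7"]}
ops = [
    ("myapp-rc-dl8px", {"release": "beta"}, []),  # kubectl label pod ... release=beta
    ("myapp-rc-nz9sj", {}, ["type"]),             # kubectl label pod ... type-
    ("myapp-rc-tsgjs", {"app": "myapp01"}, []),   # kubectl label pod ... app=myapp01 --overwrite
]
final = replay(start, ops)
managed = sorted(n for n, l in final.items() if matches(l))
unmanaged = sorted(n for n, l in final.items() if not matches(l))
print(len(final), len(managed), unmanaged)
# 7 5 ['myapp-rc-nz9sj', 'myapp-rc-tsgjs']
```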

Scale the RC up

[root@master01 ~]# kubectl scale rc myapp-rc --replicas=10

replicationcontroller/myapp-rc scaled

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-rc-27gd6 1/1 Running 0 6m25s app=myapp,type=rc

myapp-rc-5p7ws 1/1 Running 0 15s app=myapp,type=rc

myapp-rc-66ggv 1/1 Running 0 15s app=myapp,type=rc

myapp-rc-dl8px 1/1 Running 0 45m app=myapp,release=beta,type=rc

myapp-rc-kdw6p 1/1 Running 0 15s app=myapp,type=rc

myapp-rc-nz9sj 1/1 Running 0 23m app=myapp

myapp-rc-pqqk4 1/1 Running 0 15s app=myapp,type=rc

myapp-rc-rqs74 1/1 Running 0 38m app=myapp,type=rc

myapp-rc-rtm8c 1/1 Running 0 11m app=myapp,type=rc

myapp-rc-tsgjs 1/1 Running 0 23m app=myapp01,type=rc

myapp-rc-vhbm7 1/1 Running 0 45m app=myapp,type=rc

myapp-rc-w655s 1/1 Running 0 15s app=myapp,type=rc
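The listing shows 12 pods although the RC asks for 10. The reason is that myapp-rc-nz9sj and myapp-rc-tsgjs no longer match the selector, so the RC neither counts nor manages them.

The sketch below parses the LABELS column into dicts and evaluates the selector string shown by kubectl describe (app=myapp,type=rc) against it. The helpers `parse_labels` and `select` are hypothetical and only handle the plain `key=value` form. On the cluster itself, kubectl get pods -l app=myapp,type=rc should list just the ten managed pods.

```python
from typing import Dict, List

# NAME and LABELS columns copied from the listing above.
LISTING = """\
myapp-rc-27gd6 app=myapp,type=rc
myapp-rc-5p7ws app=myapp,type=rc
myapp-rc-66ggv app=myapp,type=rc
myapp-rc-dl8px app=myapp,release=beta,type=rc
myapp-rc-kdw6p app=myapp,type=rc
myapp-rc-nz9sj app=myapp
myapp-rc-pqqk4 app=myapp,type=rc
myapp-rc-rqs74 app=myapp,type=rc
myapp-rc-rtm8c app=myapp,type=rc
myapp-rc-tsgjs app=myapp01,type=rc
myapp-rc-vhbm7 app=myapp,type=rc
myapp-rc-w655s app=myapp,type=rc
"""


def parse_labels(text: str) -> Dict[str, str]:
    """Turn 'a=b,c=d' into {'a': 'b', 'c': 'd'}."""
    return dict(pair.split("=", 1) for pair in text.split(",") if pair)


def select(listing: str, selector: str) -> List[str]:
    """Names of pods whose labels satisfy an equality-based selector string."""
    wanted = parse_labels(selector)
    names = []
    for line in listing.splitlines():
        name, labels = line.split()
        have = parse_labels(labels)
        if all(have.get(k) == v for k, v in wanted.items()):
            names.append(name)
    return names


managed = select(LISTING, "app=myapp,type=rc")
print(len(managed))  # 10
```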

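Rolling update

kubectl rolling-update is no longer recommended. The RC has been superseded by the Deployment, and Deployments are what is used directly nowadays. As the first line of the output below shows, the command is deprecated in favour of kubectl rollout, and it was removed in later kubectl releases.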
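Since Deployments replace RCs, the same workload would normally be written as a Deployment. The main manifest differences are:

- the API group (`apps/v1`);
- the kind;
- the selector, which a Deployment must state explicitly as `selector.matchLabels`.

The converter below is a hypothetical sketch that only handles equality-based RC selectors. The Deployment name `myapp-deploy` is also an assumption.

```python
import copy
import json
from typing import Any, Dict


def rc_to_deployment(rc: Dict[str, Any], name: str) -> Dict[str, Any]:
    """Build an apps/v1 Deployment manifest from an equality-based v1 RC manifest."""
    spec = rc["spec"]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(rc["metadata"].get("labels", {}))},
        "spec": {
            "replicas": spec.get("replicas", 1),
            # The RC selector {k: v} becomes matchLabels; apps/v1 requires a selector.
            "selector": {"matchLabels": dict(spec["selector"])},
            # The pod template carries over unchanged (deep-copied).
            "template": copy.deepcopy(spec["template"]),
        },
    }


rc = {
    "apiVersion": "v1",
    "kind": "ReplicationController",
    "metadata": {"name": "myapp-rc", "labels": {"app": "myapp", "type": "rc"}},
    "spec": {
        "replicas": 3,
        "selector": {"app": "myapp", "type": "rc"},
        "template": {
            "metadata": {"labels": {"app": "myapp", "type": "rc"}},
            "spec": {"containers": [{"name": "myapp", "image": "ikubernetes/myapp:v1",
                                     "ports": [{"name": "http", "containerPort": 80}]}]},
        },
    },
}
print(json.dumps(rc_to_deployment(rc, "myapp-deploy"), indent=2))
```

With a Deployment, the image change that rolling-update performs below would instead be done with `kubectl set image deployment/myapp-deploy myapp=ikubernetes/myapp:v2`. Progress can then be followed with `kubectl rollout status deployment/myapp-deploy`.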

[root@master01 ~]# kubectl rolling-update myapp-rc --image=ikubernetes/myapp:v2

Command "rolling-update" is deprecated, use "rollout" instead

Found existing update in progress (myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3), resuming.

Continuing update with existing controller myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3.

Scaling up myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 from 1 to 10, scaling down myapp-rc from 10 to 0 (keep 10 pods available, don't exceed 11 pods)

Scaling myapp-rc down to 9

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 2

Scaling myapp-rc down to 8

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 3

Scaling myapp-rc down to 7

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 4

Scaling myapp-rc down to 6

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 5

Scaling myapp-rc down to 5

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 6

Scaling myapp-rc down to 4

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 7

Scaling myapp-rc down to 3

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 8

Scaling myapp-rc down to 2

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 9

Scaling myapp-rc down to 1

Scaling myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 up to 10

Scaling myapp-rc down to 0

Update succeeded. Deleting old controller: myapp-rc

Renaming myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3 to myapp-rc

replicationcontroller/myapp-rc rolling updated
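The log follows a simple pattern. With 10 desired pods, kubectl keeps at least 10 available and never exceeds 11, so it alternates between removing one old pod and adding one new pod.

The Python sketch below is a simplified model that assumes every new pod becomes ready immediately; it is not kubectl's actual implementation. Starting from the resumed state (old RC at 10 pods, new RC at 1), it reproduces the order of the Scaling messages above. `rolling_update` is a hypothetical name.

```python
from typing import List, Tuple

OLD = "myapp-rc"
NEW = "myapp-rc-9545d61ba1b2ce3cbe6eef6a8e8abef3"


def rolling_update(old: int, new: int, desired: int) -> List[Tuple[str, int, int]]:
    """Return (message, old, new) per step, keeping desired <= old + new <= desired + 1
    (assuming the starting total is at least `desired`)."""
    steps = []
    while old > 0 or new < desired:
        if old > 0 and old + new > desired:
            old -= 1   # one pod over the target: retire an old pod
            steps.append((f"Scaling {OLD} down to {old}", old, new))
        elif new < desired:
            new += 1   # at or below the target: start one new pod (surge of 1)
            steps.append((f"Scaling {NEW} up to {new}", old, new))
        else:
            break      # defensive; not reached for valid inputs
    return steps


for message, _, _ in rolling_update(10, 1, 10):
    print(message)  # the same 19 Scaling lines as in the log above
```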

Delete the RC

[root@master01 ~]# kubectl delete rc myapp-rc

replicationcontroller "myapp-rc" deleted