I. Preface

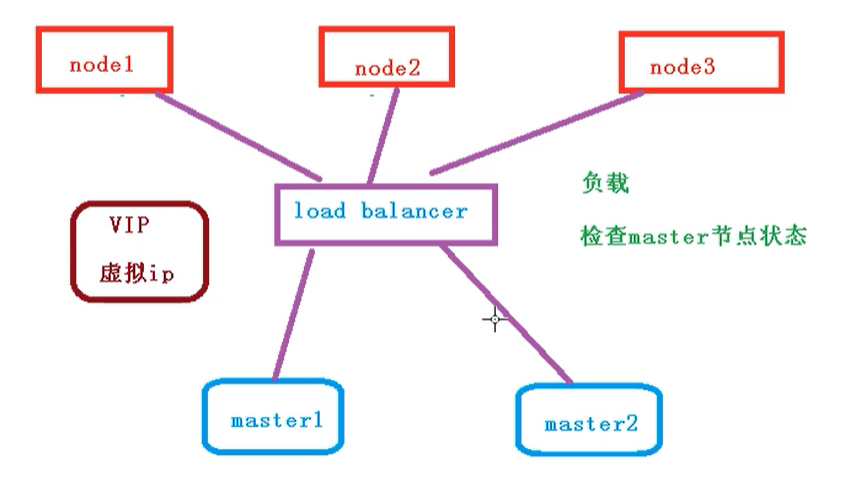

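The cluster we built earlier had only one master node. Because the master manages the whole cluster, once it goes down the worker nodes can no longer reach the control plane and the cluster as a whole stops providing service.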

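II. High-availability cluster

Next we build a high-availability cluster with multiple master nodes, which removes the single point of failure.

Between the worker nodes and the master nodes there has to be a load balancer component, which does two things:

- Balances load across the master nodes

- Checks the state of the master nodes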

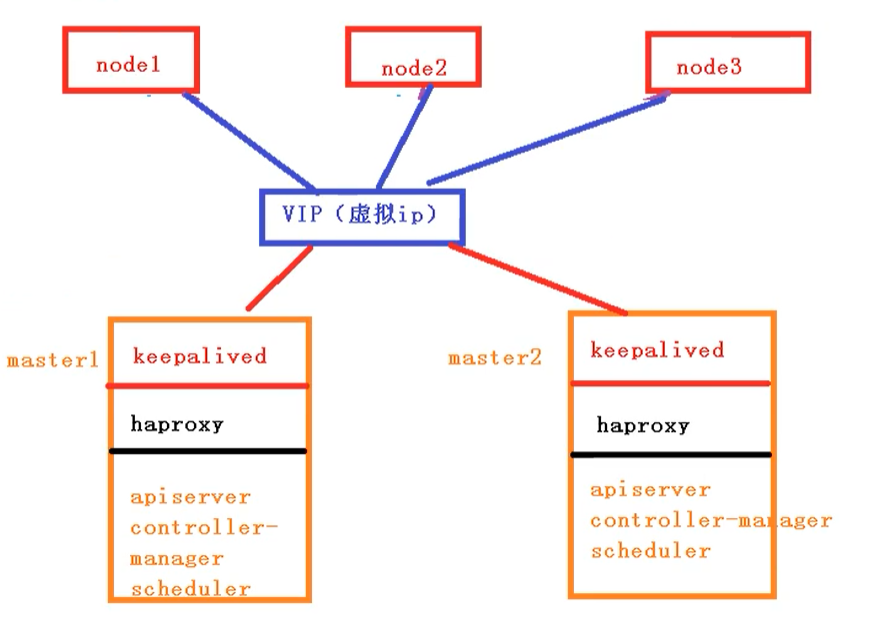
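The health-checking half of the load balancer's job can be sketched in a few lines of shell. This is only an illustration, not how haproxy or keepalived implement it: `healthy_backends`, `probe_apiserver` and `probe_stub` are hypothetical helper names, and the assumption that the apiserver answers `/healthz` over HTTPS without credentials depends on the cluster's default settings.

```shell
#!/bin/sh
# Hypothetical helper: print every backend for which the probe succeeds.
# $1 is the name of a probe command or function, the rest are host:port backends.
healthy_backends() {
    probe="$1"
    shift
    for backend in "$@"; do
        if "$probe" "$backend"; then
            echo "$backend"
        fi
    done
}

# Example probe (assumption): the apiserver answers /healthz over HTTPS.
# -f makes curl fail on HTTP errors, -k skips certificate verification,
# which is acceptable only for a quick manual check.
probe_apiserver() {
    curl -fsk --max-time 3 "https://$1/healthz" >/dev/null 2>&1
}

# Stub probe for demonstration: pretends only the first master is up.
probe_stub() {
    [ "$1" = "192.168.44.155:6443" ]
}

# prints 192.168.44.155:6443
healthy_backends probe_stub 192.168.44.155:6443 192.168.44.156:6443
```

On a real network, passing `probe_apiserver` instead of `probe_stub` would list the masters that currently respond; a load balancer forwards traffic only to that set.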

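III. Technical details of the high-availability cluster

The high-availability cluster relies on the following components:

- keepalived: configures the virtual IP (VIP) and checks node state

- haproxy: load-balancing service (similar to nginx)

- apiserver: the API entry point of the cluster; this is what haproxy balances requests across

- controller-manager: runs the controllers that keep the cluster in its desired state

- scheduler: assigns pods to nodes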

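IV. Steps for building the high-availability cluster

We use two master nodes and one worker node to build the high-availability cluster. The table below lists what has to be done on each node.

| Node | IP | Tasks |
|---|---|---|
| master1 | 10.4.104.169 | 1. Deploy keepalived 2. Deploy haproxy 3. Initialization 4. Install Docker and the network plugin |
| master2 | 10.4.104.170 | 1. Deploy keepalived 2. Deploy haproxy 3. Join master2 to the cluster 4. Install Docker and the network plugin |
| node1 | 10.4.104.171 | 1. Join the cluster 2. Install Docker and the network plugin |
| VIP | | |

Note that the commands in the following sections use 192.168.44.155 (master1), 192.168.44.156 (master2), 192.168.44.157 (node1) and 192.168.44.158 (VIP) rather than the addresses in this table. Substitute the addresses of your own environment.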

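4.1 Initialization

Run the following on all three nodes, except where a step names a specific node.

    # Disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld

    # Disable SELinux
    # Permanently
    sed -i 's/enforcing/disabled/' /etc/selinux/config
    # For the current boot
    setenforce 0

    # Disable swap
    # For the current boot
    swapoff -a
    # Permanently
    sed -ri 's/.*swap.*/#&/' /etc/fstab

    # Set the hostname according to the plan (run on master1)
    hostnamectl set-hostname master1
    # Set the hostname according to the plan (run on master2)
    hostnamectl set-hostname master2
    # Set the hostname according to the plan (run on node1)
    hostnamectl set-hostname node1

    # Add hosts entries
    # master.k8s.io must resolve to the VIP because it is used as
    # controlPlaneEndpoint in 4.5.1
    cat >> /etc/hosts << EOF
    192.168.44.158 master.k8s.io k8smaster
    192.168.44.155 master01.k8s.io master1
    192.168.44.156 master02.k8s.io master2
    192.168.44.157 node01.k8s.io node1
    EOF

    # Pass bridged IPv4 traffic to the iptables chains (run on all 3 nodes)
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    # Apply the settings
    sysctl --system

    # Synchronize the time
    yum install ntpdate -y
    ntpdate time.windows.com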
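Since kubelet refuses to start by default while swap is active, it can be worth confirming that both swap steps took effect before moving on. The following is a minimal sketch; `swap_is_off` and `fstab_swap_commented` are hypothetical helper names, and the check assumes the usual layout of `/proc/swaps` (one header line followed by one line per active swap device).

```shell
#!/bin/sh
# Succeeds when the given /proc/swaps-style file lists no active swap device.
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    # The first line is a header; count the non-empty lines after it.
    active=$(awk 'NR > 1 && NF { n++ } END { print n + 0 }' "$swaps_file")
    [ "$active" -eq 0 ]
}

# Succeeds when every line mentioning swap in the fstab file is commented out.
fstab_swap_commented() {
    fstab_file="${1:-/etc/fstab}"
    awk '!/^[ \t]*#/ && /swap/ { found = 1 } END { exit found + 0 }' "$fstab_file"
}

# On a node, after the initialization steps:
#   swap_is_off && fstab_swap_commented && echo "swap is disabled"
```

Both functions accept an optional file argument so they can be tried against a copy of the file instead of the live system.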

4.2 Deploy keepalived

keepalived has to be deployed on all master nodes (master1 and master2).

4.2.1 Install the packages

    # Install supporting tools
    yum install -y conntrack-tools libseccomp libtool-ltdl
    # Install keepalived
    yum install -y keepalived

4.2.2 Configure the master nodes

Configuration for master1:

    cat > /etc/keepalived/keepalived.conf <<EOF
    ! Configuration File for keepalived

    global_defs {
        router_id k8s
    }

    vrrp_script check_haproxy {
        script "killall -0 haproxy"
        interval 3
        weight -2
        fall 10
        rise 2
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 51
        priority 250
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass ceb1b3ec013d66163d6ab
        }
        virtual_ipaddress {
            192.168.44.158
        }
        track_script {
            check_haproxy
        }
    }
    EOF

Configuration for master2, which differs only in `state` and `priority`:

    cat > /etc/keepalived/keepalived.conf <<EOF
    ! Configuration File for keepalived

    global_defs {
        router_id k8s
    }

    vrrp_script check_haproxy {
        script "killall -0 haproxy"
        interval 3
        weight -2
        fall 10
        rise 2
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 51
        priority 200
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass ceb1b3ec013d66163d6ab
        }
        virtual_ipaddress {
            192.168.44.158
        }
        track_script {
            check_haproxy
        }
    }
    EOF
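Because the two files differ only in `state` and `priority`, they can also be produced from one template. The sketch below is one way to do that; `render_keepalived_conf` is a hypothetical helper, and the interface name and VIP defaults are simply the values used in this article.

```shell
#!/bin/sh
# render_keepalived_conf STATE PRIORITY [INTERFACE] [VIP]
# Prints a keepalived.conf with the same settings as the two files above.
render_keepalived_conf() {
    state="$1"
    priority="$2"
    interface="${3:-ens33}"
    vip="${4:-192.168.44.158}"
    cat <<EOF
! Configuration File for keepalived

global_defs {
    router_id k8s
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state ${state}
    interface ${interface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ceb1b3ec013d66163d6ab
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Show only the lines that differ between the two masters.
render_keepalived_conf BACKUP 200 | grep -E 'state|priority'
```

On master1 the output of `render_keepalived_conf MASTER 250` could be redirected to `/etc/keepalived/keepalived.conf`. One thing to be aware of with these values: a failing `check_haproxy` lowers master1's priority by only 2 (250 to 248), which is still above master2's 200, so the VIP moves when keepalived or the node itself goes down but not when haproxy alone fails. A larger negative `weight` would be needed if that case should trigger a failover too.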

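4.2.3 Start and check

Run the following on both master nodes:

    # Start keepalived
    systemctl start keepalived.service
    # Enable it at boot
    systemctl enable keepalived.service
    # Check its status
    systemctl status keepalived.service

After it has started, look at the network interface on the masters. The VIP 192.168.44.158 should appear on the node that currently holds the MASTER role:

    ip a s ens33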
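To turn that visual check into something scriptable, the output of `ip a s` can be searched for the VIP. This is a minimal sketch: `has_vip` is a hypothetical helper, and the sample text below only imitates what the command prints on a node holding the VIP.

```shell
#!/bin/sh
# Succeeds when the `ip a s <iface>` output on stdin lists the given address.
has_vip() {
    vip="$1"
    # -F treats the dots in the address literally instead of as wildcards.
    grep -Fq "inet ${vip}/"
}

# Hypothetical sample output from a node that currently holds the VIP.
sample='    inet 192.168.44.155/24 brd 192.168.44.255 scope global ens33
    inet 192.168.44.158/32 scope global ens33'

if printf '%s\n' "$sample" | has_vip 192.168.44.158; then
    echo "VIP present"
fi

# On a real master:
#   ip a s ens33 | has_vip 192.168.44.158 && echo "this node holds the VIP"
```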

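4.3 Deploy haproxy

haproxy does the load balancing: it spreads requests for the apiserver across the master nodes.

4.3.1 Install

Install haproxy on both master nodes:

    # Install haproxy
    yum install -y haproxy
    # Start haproxy
    systemctl start haproxy
    # Enable it at boot
    systemctl enable haproxy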

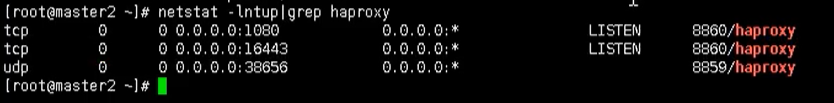

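The default configuration does not listen on port 16443. After writing the configuration in 4.3.2, restart haproxy with `systemctl restart haproxy` and then check that the listening ports include 16443:

    netstat -tunlp | grep haproxy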

4.3.2 Configure

The configuration is identical on both master nodes. It declares the two master servers as backends and sets the port haproxy listens on to 16443, so port 16443 is the entry point of the cluster.

    cat > /etc/haproxy/haproxy.cfg << EOF
    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        # to have these messages end up in /var/log/haproxy.log you will
        # need to:
        # 1) configure syslog to accept network log events. This is done
        #    by adding the '-r' option to the SYSLOGD_OPTIONS in
        #    /etc/sysconfig/syslog
        # 2) configure local2 events to go to the /var/log/haproxy.log
        #    file. A line like the following can be added to
        #    /etc/sysconfig/syslog
        #
        #    local2.*                       /var/log/haproxy.log
        #
        log         127.0.0.1 local2

        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8
        option                  redispatch
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000

    #---------------------------------------------------------------------
    # kubernetes apiserver frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend kubernetes-apiserver
        mode                 tcp
        bind                 *:16443
        option               tcplog
        default_backend      kubernetes-apiserver

    #---------------------------------------------------------------------
    # round robin balancing between the various backends
    #---------------------------------------------------------------------
    backend kubernetes-apiserver
        mode        tcp
        balance     roundrobin
        server      master01.k8s.io   192.168.44.155:6443 check
        server      master02.k8s.io   192.168.44.156:6443 check

    #---------------------------------------------------------------------
    # collection haproxy statistics message
    #---------------------------------------------------------------------
    listen stats
        bind                 *:1080
        stats auth           admin:awesomePassword
        stats refresh        5s
        stats realm          HAProxy\ Statistics
        stats uri            /admin?stats
    EOF
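The part of this file that changes when masters are added or removed is the `backend` section. As an illustration, it could be generated from a list of masters; `render_backend` is a hypothetical helper, and the `name=ip` argument format is an assumption made for this sketch only.

```shell
#!/bin/sh
# render_backend NAME=IP [NAME=IP ...]
# Prints a haproxy backend section with one server line per master,
# each pointing at the apiserver port 6443 with health checks enabled.
render_backend() {
    echo "backend kubernetes-apiserver"
    echo "    mode        tcp"
    echo "    balance     roundrobin"
    for entry in "$@"; do
        name="${entry%%=*}"   # text before the first '='
        addr="${entry#*=}"    # text after the first '='
        echo "    server      ${name} ${addr}:6443 check"
    done
}

render_backend master01.k8s.io=192.168.44.155 master02.k8s.io=192.168.44.156

# After editing /etc/haproxy/haproxy.cfg, the file can be validated before a
# restart with haproxy's check mode:
#   haproxy -c -f /etc/haproxy/haproxy.cfg
```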

4.4 Install Docker, kubeadm, kubelet and kubectl

Install Docker, kubeadm, kubelet and kubectl on all nodes. In the Kubernetes version used here (1.18) the default container runtime is Docker, so Docker is installed first.

4.4.1 Install Docker

First configure the Aliyun yum repository for Docker:

    cat >/etc/yum.repos.d/docker.repo<<EOF
    [docker-ce-edge]
    name=Docker CE Edge - \$basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/\$basearch/edge
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    EOF

Then install Docker with yum:

    # Install with yum
    yum -y install docker-ce
    # Check the Docker version
    docker --version
    # Enable and start Docker
    systemctl enable docker
    systemctl start docker

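Configure a registry mirror for Docker. The file is written with `>` rather than appended to, because appending to an existing `daemon.json` would produce invalid JSON:

    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
    }
    EOF

Then restart Docker:

    systemctl restart docker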

4.4.2 Add the Kubernetes repository

Next, configure the yum repository for Kubernetes:

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

4.4.3 Install kubeadm, kubelet and kubectl

Because new versions are released frequently, a specific version is pinned here:

    # Install kubelet, kubeadm and kubectl at a fixed version
    yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
    # Enable kubelet at boot
    systemctl enable kubelet

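4.5 Deploy the Kubernetes master (master nodes)

4.5.1 Create the kubeadm configuration file

Run the initialization on the master that holds the VIP, which here is master1:

    # Create the directory
    mkdir /usr/local/kubernetes/manifests -p
    # Change into the manifests directory
    cd /usr/local/kubernetes/manifests/
    # Create the YAML file
    vi kubeadm-config.yaml

The YAML content is as follows: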

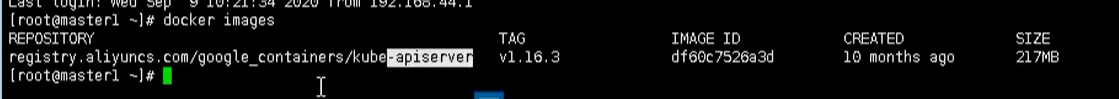

    apiServer:
      certSANs:
        - master1
        - master2
        - master.k8s.io
        - 192.168.44.158
        - 192.168.44.155
        - 192.168.44.156
        - 127.0.0.1
      extraArgs:
        authorization-mode: Node,RBAC
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta1
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: "master.k8s.io:16443"
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.18.0
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.1.0.0/16
    scheduler: {}

`kubernetesVersion` is set to v1.18.0 so that it matches the kubeadm 1.18.0 packages installed in 4.4.3. `controlPlaneEndpoint` points at the haproxy port 16443 behind the VIP, so `master.k8s.io` has to resolve to the VIP on every node.

Then run the following on master1:

    kubeadm init --config kubeadm-config.yaml

kubeadm now starts pulling the required images, which can take a while.

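Following the hints in the output, set up the kubeconfig so that kubectl can be used:

    # Run the commands below
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    # List the nodes
    kubectl get nodes
    # List the pods
    kubectl get pods -n kube-system

Also save the join command from the output, since it is needed shortly. The token and hash will differ in your environment:

    kubeadm join master.k8s.io:16443 --token jv5z7n.3y1zi95p952y9p65 \
        --discovery-token-ca-cert-hash sha256:403bca185c2f3a4791685013499e7ce58f9848e2213e27194b75a2e3293d8812 \
        --control-plane

`--control-plane`: this flag is used only when adding a master node.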
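The join commands for masters and for worker nodes share the same endpoint, token and hash and differ only in that flag. A small sketch of assembling them; `build_join_command` is a hypothetical helper, and the token and hash in the example are placeholders rather than real values.

```shell
#!/bin/sh
# build_join_command ROLE ENDPOINT TOKEN CA_HASH
# ROLE is "master" or "node"; only masters get --control-plane.
build_join_command() {
    role="$1"
    endpoint="$2"
    token="$3"
    ca_hash="$4"
    cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash ${ca_hash}"
    if [ "$role" = "master" ]; then
        cmd="${cmd} --control-plane"
    fi
    echo "$cmd"
}

# Placeholder token and hash, for illustration only.
build_join_command master master.k8s.io:16443 abcdef.0123456789abcdef sha256:PLACEHOLDER
build_join_command node   master.k8s.io:16443 abcdef.0123456789abcdef sha256:PLACEHOLDER
```

If the original output was lost or the token has expired, running `kubeadm token create --print-join-command` on master1 prints a fresh worker join command.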

Check the cluster status:

    # Check component status
    kubectl get cs
    # List the pods
    kubectl get pods -n kube-system

4.6 Install the cluster network

Fetch the flannel YAML from the official location and run the following on master1:

    # Create a directory
    mkdir flannel
    cd flannel
    # Download the YAML file
    wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Install the flannel network:

    kubectl apply -f kube-flannel.yml

Check:

    kubectl get pods -n kube-system
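To see at a glance which pods are not up yet, the output of that check can be filtered by its STATUS column. This is a rough sketch: `pods_not_ready` is a hypothetical helper, the pod names in the sample are made up, and the column position assumes the single-namespace output format (with `--all-namespaces` an extra leading column shifts STATUS to the fourth field).

```shell
#!/bin/sh
# Reads `kubectl get pods -n <namespace>` output on stdin and prints the names
# of pods whose STATUS column (3rd field) is neither Running nor Completed.
pods_not_ready() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Hypothetical sample output; prints coredns-abc
printf '%s\n' \
    'NAME                  READY   STATUS    RESTARTS   AGE' \
    'coredns-abc           0/1     Pending   0          2m' \
    'kube-flannel-ds-xyz   1/1     Running   0          1m' | pods_not_ready

# On master1:
#   kubectl get pods -n kube-system | pods_not_ready
```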

4.7 Join master2 to the cluster

4.7.1 Copy the keys and related files

Copy the keys and related files from master1 to master2. Run the following on master1 as root:

    ssh root@192.168.44.156 mkdir -p /etc/kubernetes/pki/etcd
    scp /etc/kubernetes/admin.conf root@192.168.44.156:/etc/kubernetes
    scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.44.156:/etc/kubernetes/pki
    scp /etc/kubernetes/pki/etcd/ca.* root@192.168.44.156:/etc/kubernetes/pki/etcd
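The globs in those commands expand to a small, fixed set of files created by kubeadm. Spelling them out makes it easier to review what is about to be copied, or to repeat the step for another master. The sketch below only prints the commands and executes nothing; `print_copy_commands` is a hypothetical helper.

```shell
#!/bin/sh
# print_copy_commands TARGET_IP
# Prints (does not run) the ssh/scp commands needed to copy the shared
# certificates and keys from this master to another master.
print_copy_commands() {
    target="$1"
    pki=/etc/kubernetes/pki
    echo "ssh root@${target} mkdir -p ${pki}/etcd"
    echo "scp /etc/kubernetes/admin.conf root@${target}:/etc/kubernetes"
    # Cluster CA, service account key pair and front-proxy CA.
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
        echo "scp ${pki}/${f} root@${target}:${pki}"
    done
    # etcd CA.
    for f in ca.crt ca.key; do
        echo "scp ${pki}/etcd/${f} root@${target}:${pki}/etcd"
    done
}

print_copy_commands 192.168.44.156
```

The printed lines can be reviewed and then piped to `sh` on master1. As an alternative to copying by hand, kubeadm can distribute these files itself via `kubeadm init --upload-certs` together with `--certificate-key` on the join side, though that is not the approach used in this article.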

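4.7.2 Join master2 to the cluster

On master2, run the join command printed by `kubeadm init` on master1. It must include `--control-plane`, which tells kubeadm to join the machine as a control-plane (master) node. Use the token and hash from your own output; the values below are examples.

    kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b \
        --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba \
        --control-plane

Check the status:

    kubectl get node
    kubectl get pods --all-namespaces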

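4.8 Join the Kubernetes worker node

Run the following on node1.

To add a new node to the cluster, run the `kubeadm join` command printed by `kubeadm init`, this time without `--control-plane`:

    kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b \
        --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba

flannel runs as a DaemonSet, so it is normally scheduled onto the new node automatically. If the node stays NotReady, apply `kube-flannel.yml` again from master1.

Check the status:

    kubectl get node
    kubectl get pods --all-namespaces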

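4.9 Test the Kubernetes cluster

Create a pod in the cluster and verify that it runs correctly:

    # Create an nginx deployment
    kubectl create deployment nginx --image=nginx
    # Expose the port
    kubectl expose deployment nginx --port=80 --type=NodePort
    # Check the status
    kubectl get pod,svc

The nginx page is then reachable through the IP of any node, on the NodePort shown in the PORT(S) column of the service.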
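The NodePort appears in the PORT(S) column in the form `80:<nodePort>/TCP`. A minimal sketch of extracting it for use in a test request; `nodeport_from_ports` is a hypothetical helper and the port number 31234 is an invented example, since the real value is assigned by the cluster.

```shell
#!/bin/sh
# nodeport_from_ports "80:31234/TCP"  ->  31234
# Fails when the value does not have the port:nodePort/protocol form.
nodeport_from_ports() {
    case "$1" in
        *:*/*)
            rest="${1#*:}"        # drop everything up to the first ':'
            echo "${rest%%/*}"    # drop the '/TCP' suffix
            ;;
        *)
            return 1
            ;;
    esac
}

nodeport_from_ports "80:31234/TCP"   # prints 31234

# kubectl can also return the port directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
# Then request the page through any node, for example:
#   curl http://192.168.44.157:<nodePort>
```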