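Preface

- Kubernetes has two kinds of role
  - Role: access permissions within a specific namespace
  - ClusterRole: access permissions across all namespaces
- Role bindings
  - RoleBinding: binds a Role to subjects
  - ClusterRoleBinding: binds a ClusterRole to subjects
- Subjects
  - User: a user
  - Group: a group of users
  - ServiceAccount: a service account
- A role binding can name its subject in two ways:
  - binding with kind ServiceAccount
  - binding with kind User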

I. Getting the token inside the cluster

1.1 Create the namespace sademo

```shell
# Create the namespace
kubectl create ns sademo
```

1.2 Create the role sa-role

```shell
# Create the role
# Write the Role manifest
vim sa-role.yaml
# Apply it
kubectl apply -f sa-role.yaml
```

sa-role.yaml

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: sademo  # name of the namespace; can be changed
  name: sa-role      # name of the role; can be changed
rules:
- apiGroups: [""]    # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
```

1.3 Create the role binding user-rolebinding

```shell
# Create the ServiceAccount (no -n flag, so it is created in the current namespace)
kubectl create serviceaccount sa-sa
# Write the RoleBinding manifest and apply it
vim user-rolebinding.yaml
kubectl apply -f user-rolebinding.yaml
```

1.5 ServiceAccount binding (kind is ServiceAccount)

YAML for the ServiceAccount binding

sa-rolebinding.yaml

```yaml
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: sa-rolebinding
  namespace: sademo
subjects:
- kind: ServiceAccount
  name: sa-sa        # Name is case sensitive
  namespace: sademo
roleRef:
  kind: Role         # this must be Role or ClusterRole
  name: sa-role      # this must match the name of the Role or ClusterRole you wish to bind to
  apiGroup: rbac.authorization.k8s.io
```

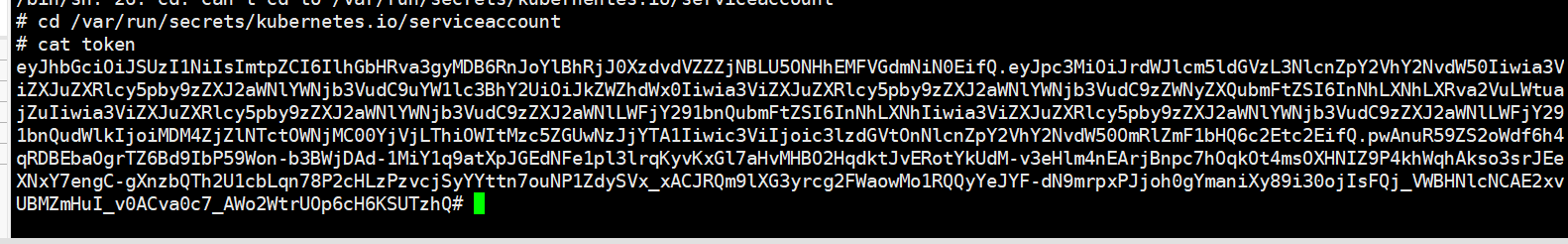
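To make the relationship between a Role, its rules, and a RoleBinding more concrete, here is a toy model in Go. It is only an illustration under simplifying assumptions: the types `Rule`, `Role`, `Subject`, `Binding`, and the function `Allowed` are hypothetical names invented for this sketch, and the real API server evaluates RBAC in a more involved way (API groups, resource names, and ClusterRoleBindings are left out here).

```go
package main

import "fmt"

// Rule mirrors one entry under "rules:" in a Role manifest (API groups omitted).
type Rule struct {
	Resources []string
	Verbs     []string
}

// Role is a namespaced set of rules, like sa-role in the sademo namespace.
type Role struct {
	Namespace string
	Name      string
	Rules     []Rule
}

// Subject is the account a binding refers to (User, Group, or ServiceAccount).
type Subject struct {
	Kind string
	Name string
}

// Binding attaches one Role to a list of subjects, like a RoleBinding.
type Binding struct {
	Role     Role
	Subjects []Subject
}

func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

// Allowed reports whether any binding grants sub the verb on the resource
// in the given namespace.
func Allowed(bindings []Binding, sub Subject, namespace, verb, resource string) bool {
	for _, b := range bindings {
		if b.Role.Namespace != namespace {
			continue // a Role only applies inside its own namespace
		}
		bound := false
		for _, s := range b.Subjects {
			if s == sub {
				bound = true
			}
		}
		if !bound {
			continue
		}
		for _, r := range b.Role.Rules {
			if contains(r.Resources, resource) && contains(r.Verbs, verb) {
				return true
			}
		}
	}
	return false
}

func main() {
	sa := Subject{Kind: "ServiceAccount", Name: "sa-sa"}
	role := Role{
		Namespace: "sademo",
		Name:      "sa-role",
		Rules:     []Rule{{Resources: []string{"pods"}, Verbs: []string{"get", "watch", "list"}}},
	}
	bindings := []Binding{{Role: role, Subjects: []Subject{sa}}}

	fmt.Println(Allowed(bindings, sa, "sademo", "list", "pods"))   // true
	fmt.Println(Allowed(bindings, sa, "sademo", "delete", "pods")) // false: verb not granted
	fmt.Println(Allowed(bindings, sa, "default", "list", "pods"))  // false: other namespace
}
```

The third call is the case worth noticing: a Role in sademo grants nothing in default, so a request against another namespace needs its own Role and binding there, or a ClusterRole.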

1.5.1 Use the service account in a pod

```shell
# Create the pod
vim sa-nginx-pod.yaml
# Apply it
kubectl apply -f sa-nginx-pod.yaml
# Show the pod details
kubectl describe pod sa-nginx-pod
# Open a shell inside the pod
# sa-nginx-pod is the name of the newly created pod; it can be looked up with kubectl get pod
kubectl exec -it sa-nginx-pod -- /bin/bash
# Point curl at the CA bundle and read the token
root@sa-nginx-pod:/# export CURL_CA_BUNDLE=/var/run/secrets/kubernetes.io/serviceaccount/ca.crt
root@sa-nginx-pod:/# TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)
# List the pods with curl
root@sa-nginx-pod:/# curl -H "Authorization: Bearer $TOKEN" https://10.4.104.169:6443/api/v1/namespaces/default/pods

# Alternatively, print the token directly
kubectl exec -it sa-nginx-pod -- /bin/sh
cd /var/run/secrets/kubernetes.io/serviceaccount
cat token
```

sa-nginx-pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sa-nginx-pod
spec:
  serviceAccountName: sa-sa
  containers:
  - image: nginx:latest
    name: nginx
```

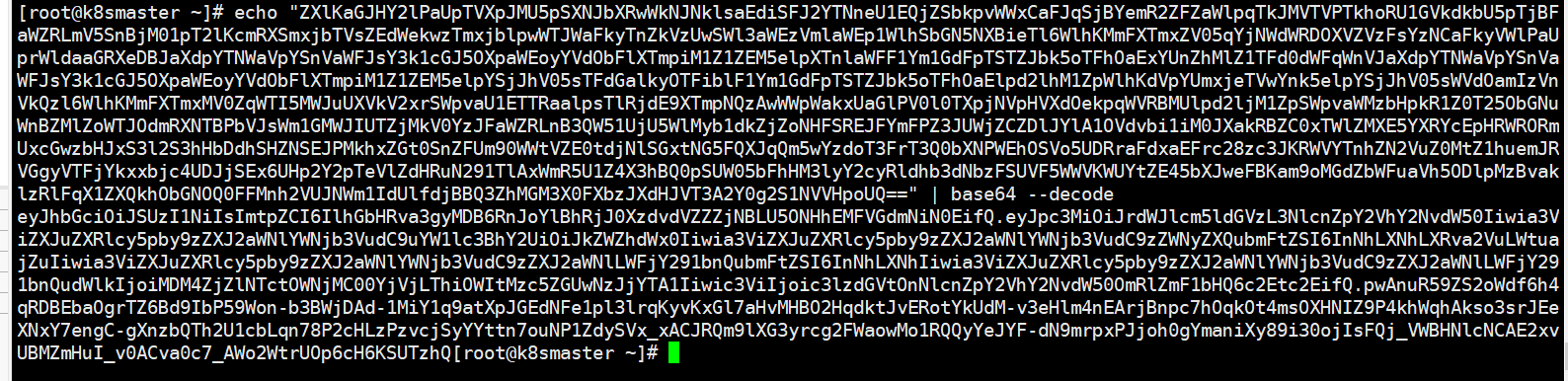
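The curl call made inside the pod can also be sketched in Go with only the standard library. This is a minimal sketch, not the author's method: the helper names `newClient` and `newPodListRequest` are made up for illustration, and the API server address is simply the one used in the curl command. Outside a pod the mounted files do not exist, so `main` just reports that and returns.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"net/http"
	"os"
	"strings"
)

// Directory where Kubernetes mounts the service account credentials in a pod.
const saDir = "/var/run/secrets/kubernetes.io/serviceaccount"

// newClient returns an HTTP client that trusts only the given PEM CA bundle,
// which plays the role of CURL_CA_BUNDLE in the curl example.
func newClient(caPEM []byte) (*http.Client, error) {
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no certificates found in CA bundle")
	}
	tr := &http.Transport{TLSClientConfig: &tls.Config{RootCAs: pool}}
	return &http.Client{Transport: tr}, nil
}

// newPodListRequest builds GET <apiServer>/api/v1/namespaces/<namespace>/pods
// with the token sent as a bearer token.
func newPodListRequest(apiServer, namespace, token string) (*http.Request, error) {
	url := fmt.Sprintf("%s/api/v1/namespaces/%s/pods", strings.TrimRight(apiServer, "/"), namespace)
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Bearer "+strings.TrimSpace(token))
	return req, nil
}

func main() {
	token, err := os.ReadFile(saDir + "/token")
	if err != nil {
		fmt.Println("service account token not found (not running in a pod?):", err)
		return
	}
	caPEM, err := os.ReadFile(saDir + "/ca.crt")
	if err != nil {
		fmt.Println("CA bundle not found:", err)
		return
	}
	client, err := newClient(caPEM)
	if err != nil {
		fmt.Println(err)
		return
	}
	req, err := newPodListRequest("https://10.4.104.169:6443", "default", string(token))
	if err != nil {
		fmt.Println(err)
		return
	}
	resp, err := client.Do(req)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()
	fmt.Println("status:", resp.Status)
}
```

A 403 status here would mean the token was accepted but the bound role does not allow listing pods in that namespace.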

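II. Checking whether the token can be obtained outside the cluster

```shell
# List the service accounts
kubectl get serviceaccount
# Show the details of the service account
kubectl describe sa sa-sa
# Show the secret as JSON (SECRET_NAME is the name of the secret)
kubectl get secret SECRET_NAME -o json
# Decode the token (TOKEN is the base64 value of data.token)
echo "TOKEN" | base64 --decode
```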
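The last two steps, decoding the base64 value from the secret and comparing it with the token read inside the pod, can be sketched in Go as follows. The helper names `decodeSecretToken` and `sameToken` and the placeholder token value are assumptions made for this example.

```go
package main

import (
	"crypto/subtle"
	"encoding/base64"
	"fmt"
	"strings"
)

// decodeSecretToken decodes the base64 string stored under data.token of a Secret.
func decodeSecretToken(b64 string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(strings.TrimSpace(b64))
	if err != nil {
		return "", fmt.Errorf("decode secret token: %w", err)
	}
	return string(raw), nil
}

// sameToken compares two tokens in constant time, ignoring surrounding whitespace
// such as the trailing newline left by cat or echo.
func sameToken(a, b string) bool {
	a, b = strings.TrimSpace(a), strings.TrimSpace(b)
	return subtle.ConstantTimeCompare([]byte(a), []byte(b)) == 1
}

func main() {
	// Placeholder values standing in for the real token.
	podToken := "header.payload.signature\n"
	secretValue := base64.StdEncoding.EncodeToString([]byte("header.payload.signature"))

	decoded, err := decodeSecretToken(secretValue)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("tokens match:", sameToken(podToken, decoded))
}
```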

III. Checking whether the tokens are the same

According to the screenshots, they are identical. √
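A service account token of this kind is a JWT, so its middle segment can be decoded to see which namespace and service account it identifies. The sketch below is a hedged illustration: `parseClaims` and `sampleToken` are hypothetical helpers, the sample payload is built in the code rather than taken from a real cluster, and the signature is not verified.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// claims holds a few fields from the payload of a service account token.
type claims struct {
	Issuer         string `json:"iss"`
	Namespace      string `json:"kubernetes.io/serviceaccount/namespace"`
	ServiceAccount string `json:"kubernetes.io/serviceaccount/service-account.name"`
	Subject        string `json:"sub"`
}

// parseClaims decodes the payload (second segment) of a JWT.
// It does NOT verify the signature; it is for inspection only.
func parseClaims(token string) (claims, error) {
	var c claims
	parts := strings.Split(strings.TrimSpace(token), ".")
	if len(parts) != 3 {
		return c, errors.New("token is not a three-part JWT")
	}
	payload, err := base64.RawURLEncoding.DecodeString(parts[1])
	if err != nil {
		return c, fmt.Errorf("decode payload: %w", err)
	}
	if err := json.Unmarshal(payload, &c); err != nil {
		return c, fmt.Errorf("parse payload: %w", err)
	}
	return c, nil
}

// sampleToken wraps a JSON payload in a dummy header and signature for the demo.
func sampleToken(payloadJSON string) string {
	enc := base64.RawURLEncoding
	return enc.EncodeToString([]byte(`{"alg":"none"}`)) + "." +
		enc.EncodeToString([]byte(payloadJSON)) + ".sig"
}

func main() {
	token := sampleToken(`{
		"iss": "kubernetes/serviceaccount",
		"kubernetes.io/serviceaccount/namespace": "default",
		"kubernetes.io/serviceaccount/service-account.name": "sa-sa",
		"sub": "system:serviceaccount:default:sa-sa"
	}`)
	c, err := parseClaims(token)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("namespace=%s serviceaccount=%s sub=%s\n", c.Namespace, c.ServiceAccount, c.Subject)
}
```

The `sub` claim has the form `system:serviceaccount:<namespace>:<name>`, which is the user name the API server uses for the service account when it evaluates role bindings.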

"eyJhbGciOiJSUzI1NiIsImtpZCI6IlhGbHRva3gyMDB6RnJoYlBhRjJ0XzdvdVZZZjNBLU5ONHhEMFVGdmNiN0EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InNhLXNhLXRva2VuLXZ0YjJ0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InNhLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODljOGVlYWEtZGY5Mi00NjI2LWJkMzUtOTczZmRlODlhYWUyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6c2Etc2EifQ.DdhVDUO6azpa9bY-803SvFm-YAyz4pSOspFBLts91mnxaQEH9AY-yTJt1DR8QBMVz4XnIY0bckkq3SJoIq_ZgOdcBl31EM7srvPl67hkjYF1562i3YmOfDj3D7b3X7hrB3xKdpbK9youlqAtalfR9DnvP3c9H4n5asI2Nu37IoxBuEPLv7Ke_mizfb638sV4rNyhKzx7WWeyLIxmebk-_C7_F6rZlGLgG3245OwLwvYu_HSiZtcRMeK9-pM417PXLqAVOJ5g1VKgn30jXXs6U0ht0DMylziv2en1q_iQfT3YAPfVenqjtaBxmlnZlGuiA9rXGFuCuBxiCWe7viP6Qw"

IV. Testing with code
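The program in this section fetches the mysql Deployment through the dynamic client, addressed by group, version, and resource. As a rough sketch of the REST path such a lookup corresponds to, the standard-library example below builds it by hand. The `GVR` type and `resourcePath` function are illustrative names, not part of client-go.

```go
package main

import (
	"fmt"
	"path"
)

// GVR mirrors the three fields of a group/version/resource triple.
type GVR struct {
	Group    string
	Version  string
	Resource string
}

// resourcePath returns the API server path of a namespaced resource.
// The core group ("") lives under /api/<version>; every other group lives
// under /apis/<group>/<version>. An empty name yields the collection path.
func resourcePath(gvr GVR, namespace, name string) string {
	prefix := path.Join("/apis", gvr.Group, gvr.Version)
	if gvr.Group == "" {
		prefix = path.Join("/api", gvr.Version)
	}
	// path.Join skips empty elements, so name == "" is handled naturally.
	return path.Join(prefix, "namespaces", namespace, gvr.Resource, name)
}

func main() {
	deployments := GVR{Group: "apps", Version: "v1", Resource: "deployments"}
	pods := GVR{Group: "", Version: "v1", Resource: "pods"}

	fmt.Println(resourcePath(deployments, "default", "mysql"))
	fmt.Println(resourcePath(pods, "default", ""))
}
```

The second path is the same one the curl command requests from inside the pod.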

```go
package main

import (
	"context"
	"fmt"
	"log"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime/schema"
	"k8s.io/client-go/dynamic"
	"k8s.io/client-go/rest"
)

func getConfig() *rest.Config {
	config := rest.Config{
		// Host: "https://10.2.238.171:6443",
		Host: "https://10.4.104.169:6443",
		// ContentConfig: rest.ContentConfig{
		// 	GroupVersion:         &v1.SchemeGroupVersion,
		// 	NegotiatedSerializer: scheme.Codecs.WithoutConversion(),
		// },
		TLSClientConfig: rest.TLSClientConfig{
			// Skips verification of the server certificate; for testing only.
			Insecure: true,
		},
		// BearerToken: "eyJhbGciOiJSUzI1NiIsImtpZCI6IlhlazJtR3QxTGQ0OEhrN1ZoZWE2d2NuenZEbEc4WjNSeXV6RnlvUEpobEUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImN2YmFja3VwLXRva2VuLTg5OHQ3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImN2YmFja3VwIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZDRjYTE0NzQtNTM5Yy00Y2FhLTllNDMtNmM3OWUwNDQwYTExIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6Y3ZiYWNrdXAifQ.GY4UVEtXufBvDGuqhxZAzqTcdGh-wUpJ9bHYsCu5ds0uFqMNmu4iQBueW6aJJRnAl1ziiRFYANvMIg5PMGlszg1H7EuDTg79dUD0j1PNE7JgDCeP0yIzqEKXJlWQKK7W8WIa-KSMK3JyHjfN3h2tOMgilWbxweKBc5lzxHWJlyXI2caoV_ihx6pWRBIyadUBl1ptgc7GrkMnlEcstgbLtUcq7z5pgptTFXFRFi-4_SwsSo9QiCkDi0QxiuziESnbbzPUGkdqb87uL_yKhT0SvWTFAJ3N4gLq4Zi76hQvgcUT5i2zSV8T7BPgMA_cSOodRqyJqJyfHqqhMYuQU-sGlg",
		BearerToken: "eyJhbGciOiJSUzI1NiIsImtpZCI6IlhGbHRva3gyMDB6RnJoYlBhRjJ0XzdvdVZZZjNBLU5ONHhEMFVGdmNiN0EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InNhLXNhLXRva2VuLXZ0YjJ0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InNhLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODljOGVlYWEtZGY5Mi00NjI2LWJkMzUtOTczZmRlODlhYWUyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6c2Etc2EifQ.DdhVDUO6azpa9bY-803SvFm-YAyz4pSOspFBLts91mnxaQEH9AY-yTJt1DR8QBMVz4XnIY0bckkq3SJoIq_ZgOdcBl31EM7srvPl67hkjYF1562i3YmOfDj3D7b3X7hrB3xKdpbK9youlqAtalfR9DnvP3c9H4n5asI2Nu37IoxBuEPLv7Ke_mizfb638sV4rNyhKzx7WWeyLIxmebk-_C7_F6rZlGLgG3245OwLwvYu_HSiZtcRMeK9-pM417PXLqAVOJ5g1VKgn30jXXs6U0ht0DMylziv2en1q_iQfT3YAPfVenqjtaBxmlnZlGuiA9rXGFuCuBxiCWe7viP6Qw",
	}
	return &config
}

func main() {
	// Set the group, version, and resource
	gvr := schema.GroupVersionResource{Group: "apps", Version: "v1", Resource: "deployments"}
	// Build the config object
	config := getConfig()
	// Create the dynamic client
	dynamicClient, err := dynamic.NewForConfig(config)
	if err != nil {
		log.Fatalf("create dynamic client: %v", err)
	}
	// Fetch the deployment resource
	resStruct, err := dynamicClient.Resource(gvr).Namespace("default").Get(context.TODO(), "mysql", metav1.GetOptions{})
	if err != nil {
		log.Fatalf("get deployment: %v", err)
	}
	// Serialize the unstructured object to JSON
	j, err := resStruct.MarshalJSON()
	if err != nil {
		log.Fatalf("marshal JSON: %v", err)
	}
	// Print the JSON
	fmt.Println(string(j))
}
```

Result:

Appendix (a record of failed attempts)

I. Trying without creating a user on Linux

Binding by user name (kind is User)

YAML for the role binding

user-rolebinding.yaml

```yaml
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: user-rolebinding  # name of the RoleBinding; can be changed
  namespace: sademo
subjects:
- kind: User
  name: lucy              # Name is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role              # this must be Role or ClusterRole
  name: sa-role           # this must match the name of the Role or ClusterRole you wish to bind to
  apiGroup: rbac.authorization.k8s.io
```

1.4.1 Create a directory for the user

```shell
mkdir /usr/local/k8s/lucy
vim lucy-csr.json
```

lucy-csr.json (the "O" field is the group)

```json
{
  "CN": "lucy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```
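With X.509 client certificates, Kubernetes takes the user name from the subject's CN and the groups from its O fields, which is why the CSR above sets CN to lucy and O to k8s. The Go sketch below builds an equivalent signing request with the standard library instead of cfssl and reads the subject back. It is an illustration only: `newCSR` and `subjectOf` are hypothetical helper names, and the request is never sent to a CA.

```go
package main

import (
	"crypto/rand"
	"crypto/rsa"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"errors"
	"fmt"
)

// newCSR generates an RSA key and a PEM-encoded certificate signing request
// whose subject carries the user name (CN) and the group (O).
func newCSR(user, group string, bits int) (csrPEM, keyPEM []byte, err error) {
	key, err := rsa.GenerateKey(rand.Reader, bits)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.CertificateRequest{
		Subject: pkix.Name{
			CommonName:         user,
			Organization:       []string{group},
			OrganizationalUnit: []string{"System"},
			Country:            []string{"CN"},
			Province:           []string{"BeiJing"},
			Locality:           []string{"BeiJing"},
		},
	}
	der, err := x509.CreateCertificateRequest(rand.Reader, tmpl, key)
	if err != nil {
		return nil, nil, err
	}
	csrPEM = pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE REQUEST", Bytes: der})
	keyPEM = pem.EncodeToMemory(&pem.Block{Type: "RSA PRIVATE KEY", Bytes: x509.MarshalPKCS1PrivateKey(key)})
	return csrPEM, keyPEM, nil
}

// subjectOf parses a PEM CSR and returns its CN and O values.
func subjectOf(csrPEM []byte) (string, []string, error) {
	block, _ := pem.Decode(csrPEM)
	if block == nil {
		return "", nil, errors.New("no PEM block found")
	}
	csr, err := x509.ParseCertificateRequest(block.Bytes)
	if err != nil {
		return "", nil, err
	}
	return csr.Subject.CommonName, csr.Subject.Organization, nil
}

func main() {
	csrPEM, _, err := newCSR("lucy", "k8s", 2048)
	if err != nil {
		fmt.Println(err)
		return
	}
	cn, org, err := subjectOf(csrPEM)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("user (CN)=%s groups (O)=%v\n", cn, org)
}
```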

1.4.2 Download the certificate tools:

```shell
mkdir /usr/local/bin
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod a+x *
```

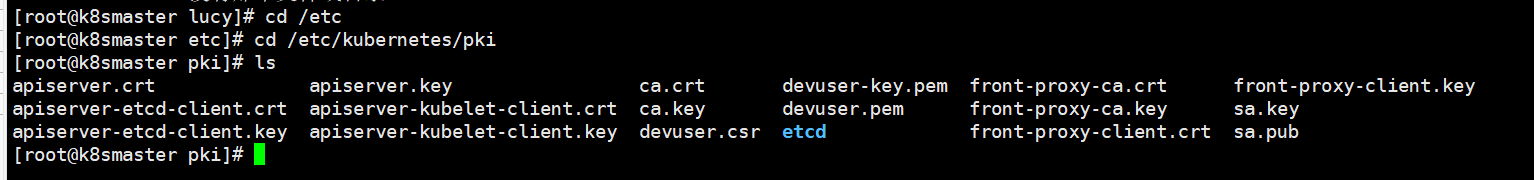

From the lucy directory, inspect the Kubernetes PKI files:

ls /usr/local/k8s/lucy/etc/kubernetes/pki

1.4.3 Generate the credentials and set the parameters

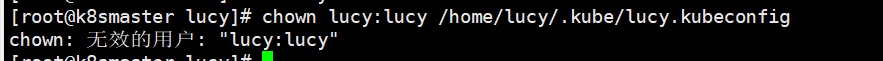

```shell
# Generate the certificate and key
cfssl gencert -ca=ca.crt -ca-key=ca.key -profile=kubernetes /usr/local/k8s/lucy/lucy-csr.json | cfssljson -bare lucy
# ls now shows the generated lucy-key.pem and lucy.pem

# Go to the lucy directory and set the cluster parameters
cd /usr/local/k8s/lucy
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.crt \
  --embed-certs=true \
  --server=https://10.4.104.169:6443 \
  --kubeconfig=lucy.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials lucy \
  --client-key=/etc/kubernetes/pki/lucy-key.pem \
  --client-certificate=/etc/kubernetes/pki/lucy.pem \
  --embed-certs=true \
  --kubeconfig=lucy.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=lucy \
  --namespace=sademo \
  --kubeconfig=lucy.kubeconfig

# Set the default context (optional)
kubectl config use-context default --kubeconfig=lucy.kubeconfig

# Bind the role in the namespace
kubectl create rolebinding devuser-admin-binding \
  --clusterrole=admin \
  --user=lucy \
  --namespace=sademo

# Create the .kube directory in lucy's home directory
mkdir /home/lucy/.kube
# Copy the kubeconfig into it
cp lucy.kubeconfig /home/lucy/.kube
# Make lucy the owner of the file
chown lucy:lucy /home/lucy/.kube/lucy.kubeconfig
```
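The three `kubectl config set-*` commands each fill in one section of the kubeconfig file: a cluster, a user, and a context that ties the two together with a namespace. As a rough picture of the resulting structure, the Go sketch below builds it and prints it as JSON. The type names and `buildKubeconfig` are invented for this example, and it uses the file-path form of the fields; with `--embed-certs=true` kubectl instead stores base64 data under `certificate-authority-data`, `client-certificate-data`, and `client-key-data`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type clusterEntry struct {
	Name    string `json:"name"`
	Cluster struct {
		Server               string `json:"server"`
		CertificateAuthority string `json:"certificate-authority"`
	} `json:"cluster"`
}

type userEntry struct {
	Name string `json:"name"`
	User struct {
		ClientCertificate string `json:"client-certificate"`
		ClientKey         string `json:"client-key"`
	} `json:"user"`
}

type contextEntry struct {
	Name    string `json:"name"`
	Context struct {
		Cluster   string `json:"cluster"`
		User      string `json:"user"`
		Namespace string `json:"namespace"`
	} `json:"context"`
}

type kubeconfig struct {
	APIVersion     string         `json:"apiVersion"`
	Kind           string         `json:"kind"`
	Clusters       []clusterEntry `json:"clusters"`
	Users          []userEntry    `json:"users"`
	Contexts       []contextEntry `json:"contexts"`
	CurrentContext string         `json:"current-context"`
}

// buildKubeconfig mirrors set-cluster, set-credentials, set-context, and use-context.
func buildKubeconfig(server, caPath, user, certPath, keyPath, namespace string) kubeconfig {
	var c clusterEntry // kubectl config set-cluster kubernetes ...
	c.Name = "kubernetes"
	c.Cluster.Server = server
	c.Cluster.CertificateAuthority = caPath

	var u userEntry // kubectl config set-credentials <user> ...
	u.Name = user
	u.User.ClientCertificate = certPath
	u.User.ClientKey = keyPath

	var ctx contextEntry // kubectl config set-context default ...
	ctx.Name = "default"
	ctx.Context.Cluster = c.Name
	ctx.Context.User = user
	ctx.Context.Namespace = namespace

	return kubeconfig{
		APIVersion:     "v1",
		Kind:           "Config",
		Clusters:       []clusterEntry{c},
		Users:          []userEntry{u},
		Contexts:       []contextEntry{ctx},
		CurrentContext: ctx.Name, // kubectl config use-context default
	}
}

func main() {
	cfg := buildKubeconfig(
		"https://10.4.104.169:6443",
		"/etc/kubernetes/pki/ca.crt",
		"lucy",
		"/etc/kubernetes/pki/lucy.pem",
		"/etc/kubernetes/pki/lucy-key.pem",
		"sademo",
	)
	out, err := json.MarshalIndent(cfg, "", "  ")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(out))
}
```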

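II. Test token procedure provided by CV

This does the same as the steps above but more concisely; it is intended for testing.

(The following test commands are provided by CV.)

```shell
# Create a Kubernetes service account (for example, cvbackup)
kubectl create serviceaccount cvbackup

# To ensure the service account has sufficient privileges to perform data protection
# operations, add it to the default-sa-crb cluster role binding
kubectl create clusterrolebinding default-sa-crb \
  --clusterrole=cluster-admin \
  --serviceaccount=default:cvbackup

# Extract the service account token needed to configure the Kubernetes cluster for data protection
kubectl get secrets -o jsonpath="{.items[?(@.metadata.annotations['kubernetes\.io/service-account\.name']=='cvbackup')].data.token}" | base64 --decode
```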
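The jsonpath expression in the last command selects the secret annotated with the service account name and prints its `data.token`, which is then base64-decoded. The same filter can be sketched in Go over the JSON printed by `kubectl get secrets -o json`. This is a minimal sketch: `tokenFor` is a hypothetical helper, and the secret list in `main` is made-up sample data, not output from a real cluster.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
)

// Annotation that links a token secret to its service account.
const saNameAnnotation = "kubernetes.io/service-account.name"

// secretList holds only the fields of a secret list that the filter needs.
type secretList struct {
	Items []struct {
		Metadata struct {
			Name        string            `json:"name"`
			Annotations map[string]string `json:"annotations"`
		} `json:"metadata"`
		Data map[string]string `json:"data"`
	} `json:"items"`
}

// tokenFor returns the decoded token of the first secret annotated with the
// given service account name.
func tokenFor(listJSON []byte, serviceAccount string) (string, error) {
	var list secretList
	if err := json.Unmarshal(listJSON, &list); err != nil {
		return "", fmt.Errorf("parse secret list: %w", err)
	}
	for _, s := range list.Items {
		if s.Metadata.Annotations[saNameAnnotation] != serviceAccount {
			continue
		}
		b64, ok := s.Data["token"]
		if !ok {
			continue
		}
		raw, err := base64.StdEncoding.DecodeString(b64)
		if err != nil {
			return "", fmt.Errorf("decode token of %s: %w", s.Metadata.Name, err)
		}
		return string(raw), nil
	}
	return "", errors.New("no token secret found for " + serviceAccount)
}

func main() {
	// Sample data; "c2FtcGxlLXRva2Vu" is base64 for "sample-token".
	sample := []byte(`{"items":[
		{"metadata":{"name":"other","annotations":{}},"data":{}},
		{"metadata":{"name":"cvbackup-token-sample",
		  "annotations":{"kubernetes.io/service-account.name":"cvbackup"}},
		 "data":{"token":"c2FtcGxlLXRva2Vu"}}
	]}`)
	token, err := tokenFor(sample, "cvbackup")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(token)
}
```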