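1. Overview

kubeadm is a widely used way to install Kubernetes clusters and can deploy highly available clusters in production. Note that the certificates it issues are valid for only one year, so they must be rotated regularly.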
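Because expiry is easy to overlook, the remaining lifetime of a certificate can be checked ahead of time. The following is a minimal sketch rather than part of the original setup: the helper names `days_between` and `cert_days_left` are hypothetical, and it assumes `openssl` and GNU `date` are available on the control-plane node. `kubeadm certs check-expiration` and `kubeadm certs renew all` are the kubeadm subcommands for reporting and renewing in recent releases.

```shell
#!/usr/bin/env bash

# days_between NOW_EPOCH END_EPOCH
#   Print the number of whole days from NOW_EPOCH to END_EPOCH (negative if past).
days_between() {
  echo $(( ($2 - $1) / 86400 ))
}

# cert_days_left CERT_FILE
#   Print the days left before the certificate's notAfter date.
#   Assumes openssl and GNU date (date -d) are installed.
cert_days_left() {
  local end
  end=$(openssl x509 -noout -enddate -in "$1" | cut -d= -f2)
  days_between "$(date +%s)" "$(date -d "$end" +%s)"
}

# Intended use on a control-plane node (not executed here):
#   cert_days_left /etc/kubernetes/pki/apiserver.crt
#   kubeadm certs check-expiration   # kubeadm's own report
#   kubeadm certs renew all          # renew, then restart the control-plane static pods
```

Running the check from cron and alerting when the value drops below some threshold (for example 30 days) would be one way to avoid a surprise outage.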

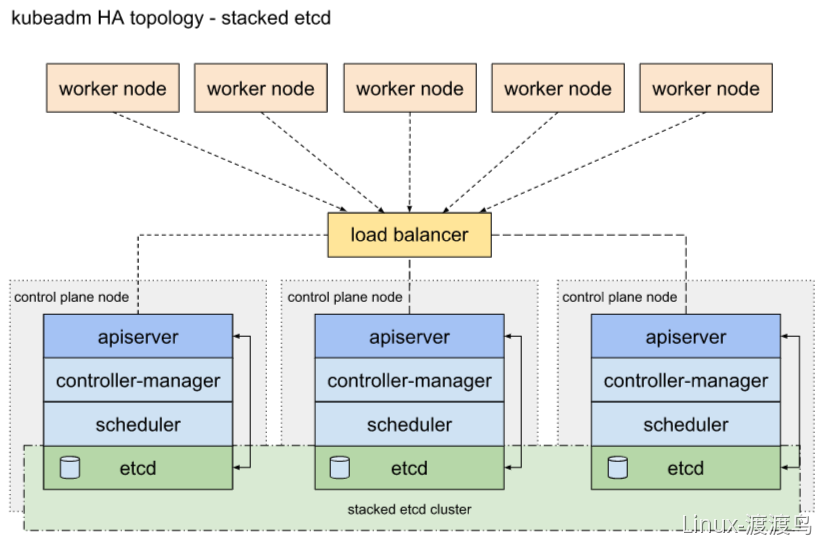

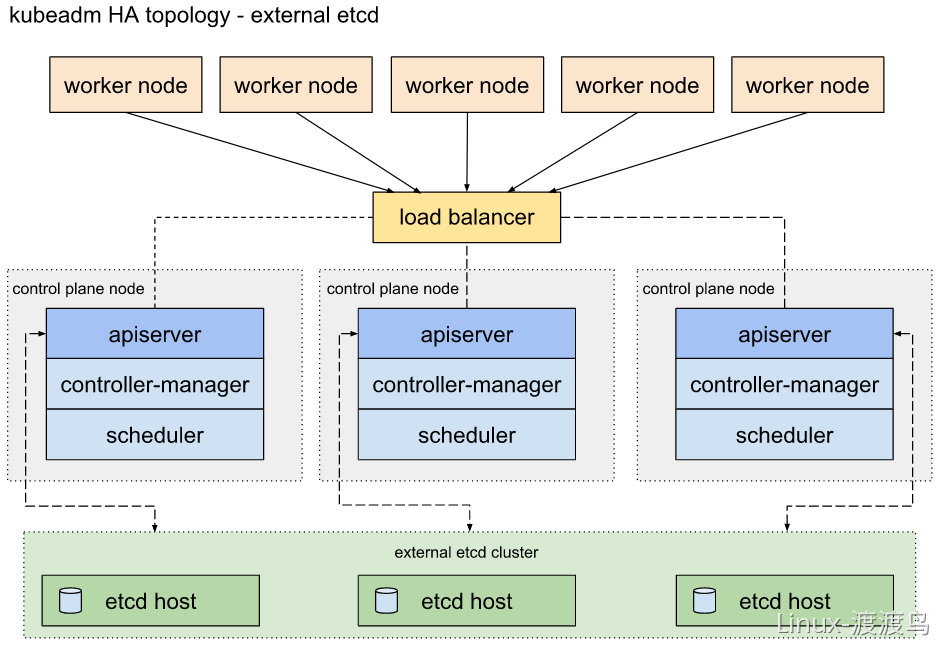

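When deploying a highly available cluster with kubeadm, the etcd cluster can either run on the master nodes or be an external etcd cluster. The former saves resources and is easier to manage; the latter offers higher availability.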

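2. Node configuration

For this experiment we use VMware to create a set of virtual machines and deploy a Kubernetes test environment on them.

Only one Master is configured here, mainly because the laptop has only 64 GB of memory, which is not enough for three Master nodes; besides, a single Master is sufficient for a small internal development environment.

The LB is likewise a single Nginx instance. As long as it stays up, one Nginx is very unlikely to become a bottleneck in a test environment.

We leave more resources to the Worker nodes so that monitoring, CI/CD, Istio, Knative and other components can be deployed later.

A separate Middleware node is also set up. It sits outside the cluster and hosts middleware services for the cluster to consume, simulating public cloud services.

Tip: take VM snapshots if you can.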

| Name | IP | OS | Kernel | CPU (cores) | Mem (GB) | Disk /data (GB) | Description |
|---|---|---|---|---|---|---|---|
| LB-80 | 10.4.7.80 | CentOS 7 | 5.4 | 2 | 4 | 0 | LoadBalancer |
| Master-81 | 10.4.7.81 | CentOS 7 | 5.4 | 2 | 4 | 100 | Kubernetes Master |
| Worker-84 | 10.4.7.84 | CentOS 7 | 5.4 | 4 | 8 | 100 | Kubernetes Worker |
| Worker-85 | 10.4.7.85 | CentOS 7 | 5.4 | 4 | 8 | 100 | Kubernetes Worker |
| Worker-86 | 10.4.7.86 | CentOS 7 | 5.4 | 4 | 8 | 100 | Kubernetes Worker |
| Worker-87 | 10.4.7.87 | CentOS 7 | 5.4 | 4 | 8 | 100 | Kubernetes Worker |
| Worker-88 | 10.4.7.88 | CentOS 7 | 5.4 | 4 | 8 | 100 | Kubernetes Worker |
| Middleware-89 | 10.4.7.89 | CentOS 7 | 5.4 | 4 | 8 | 200 (SSD) | Middleware Service (MySQL, Redis, MQ…) |

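2.1. Tool preparation

Because this is a manual deployment that involves running shell commands remotely in batches and distributing files in batches, we use the batch tool gosh together with several IP list files: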

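all.ip: the IP addresses of all 8 nodes in the table above

node.ip: the IP addresses of the Master and Worker nodes

master.ip: the IP address of the Master node

worker.ip: the IP addresses of the Worker nodes

2.2. CheckList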
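The four IP list files used throughout this checklist can be derived from a single inventory so that they never drift apart. This is a sketch under assumptions: the `inventory.txt` layout is hypothetical, and it assumes gosh reads one IP address per line, which the original does not state.

```shell
#!/usr/bin/env bash
# Work in a scratch directory so nothing in the current directory is overwritten.
workdir=$(mktemp -d)

# Hypothetical inventory: "<role> <ip>" per line, taken from the node table.
cat > "$workdir/inventory.txt" <<'EOF'
lb 10.4.7.80
master 10.4.7.81
worker 10.4.7.84
worker 10.4.7.85
worker 10.4.7.86
worker 10.4.7.87
worker 10.4.7.88
middleware 10.4.7.89
EOF

# all.ip: every node; node.ip: Master + Workers; master.ip / worker.ip: one role each.
awk '{print $2}' "$workdir/inventory.txt" > "$workdir/all.ip"
awk '$1 == "master" || $1 == "worker" {print $2}' "$workdir/inventory.txt" > "$workdir/node.ip"
awk '$1 == "master" {print $2}' "$workdir/inventory.txt" > "$workdir/master.ip"
awk '$1 == "worker" {print $2}' "$workdir/inventory.txt" > "$workdir/worker.ip"

# Show how many addresses ended up in each list.
wc -l "$workdir"/*.ip
```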

Note which IP list file each command uses: some use node.ip and others use all.ip.

2.2.1. Ensure swap is disabled

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "free -g | grep -i swap"

10.4.7.81

Swap: 0 0 0

10.4.7.86

Swap: 0 0 0

10.4.7.85

Swap: 0 0 0

10.4.7.87

Swap: 0 0 0

10.4.7.88

Swap: 0 0 0

10.4.7.84

Swap: 0 0 0
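If a node reports non-zero swap, it has to be turned off, since kubelet refuses to start with swap enabled under its default settings. A minimal sketch, assuming swap is configured through `/etc/fstab`; the function name `disable_swap_in_fstab` is hypothetical:

```shell
#!/usr/bin/env bash

# disable_swap_in_fstab FILE
#   Comment out every uncommented line in FILE that has a whitespace-delimited
#   "swap" field, keeping a backup copy in FILE.bak.
disable_swap_in_fstab() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Intended use on a node (not executed here):
#   swapoff -a                        # turn swap off immediately
#   disable_swap_in_fstab /etc/fstab  # keep it off after a reboot
```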

2.2.2. Ensure MAC addresses are unique

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "ifconfig ens32 | grep ether | awk '{print \$2}'"|xargs -n 2

10.4.7.88 00:0c:29:a7:59:43

10.4.7.87 00:0c:29:22:7d:e8

10.4.7.85 00:0c:29:e7:8a:01

10.4.7.81 00:0c:29:94:80:98

10.4.7.86 00:0c:29:bb:02:5b

10.4.7.84 00:0c:29:c9:84:19

2.2.3. Ensure product_uuid is unique

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "cat /sys/class/dmi/id/product_uuid"|xargs -n 2

10.4.7.85 bed34d56-4d2f-ac5f-9bb8-c11329e78a01

10.4.7.86 5dfe4d56-85ff-96c5-3ede-4e0f4bbb025b

10.4.7.81 070c4d56-9d67-ba2f-16b8-e13ca2948098

10.4.7.84 c7674d56-bcee-4fb2-73fb-7a22b4c98419

10.4.7.87 8d7a4d56-cbca-9f2b-5cbc-6059bc227de8

10.4.7.88 a25b4d56-bd53-ce8b-cd04-26b0e2a75943

2.2.4. Ensure the firewall and SELinux are disabled

```
[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip --print.field stdout --print.field ip "systemctl is-active firewalld; systemctl is-enabled firewalld" | xargs -n 3
10.4.7.85 unknown disabled
10.4.7.84 unknown disabled
10.4.7.88 unknown disabled
10.4.7.87 unknown disabled
10.4.7.80 unknown disabled
10.4.7.81 unknown disabled
10.4.7.86 unknown disabled
10.4.7.89 unknown disabled
[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip "getenforce;grep '^SELINUX=' /etc/selinux/config"|xargs -n 3
10.4.7.81 Disabled SELINUX=disabled
10.4.7.85 Disabled SELINUX=disabled
10.4.7.89 Disabled SELINUX=disabled
10.4.7.88 Disabled SELINUX=disabled
10.4.7.86 Disabled SELINUX=disabled
10.4.7.84 Disabled SELINUX=disabled
10.4.7.87 Disabled SELINUX=disabled
10.4.7.80 Disabled SELINUX=disabled
```

2.2.5. Ensure the time zone is correct and time synchronization is enabled

```
[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip "date +'%F %T %:z'"
10.4.7.85
2022-07-02 21:50:50 +08:00
10.4.7.81
2022-07-02 21:50:50 +08:00
10.4.7.80
2022-07-02 21:50:50 +08:00
10.4.7.86
2022-07-02 21:50:50 +08:00
10.4.7.89
2022-07-02 21:50:50 +08:00
10.4.7.88
2022-07-02 21:50:50 +08:00
10.4.7.87
2022-07-02 21:50:50 +08:00
10.4.7.84
2022-07-02 21:50:50 +08:00
[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip "systemctl is-active chronyd;systemctl is-enabled chronyd"|xargs -n 3
10.4.7.81 active enabled
10.4.7.80 active enabled
10.4.7.87 active enabled
10.4.7.84 active enabled
10.4.7.88 active enabled
10.4.7.85 active enabled
10.4.7.89 active enabled
10.4.7.86 active enabled
[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip "chronyc sources stats"
```

2.2.6. Configure kernel parameters

```
[root@duduniao deploy-kubernetes]# cat conf/k8s-sysctl.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
[root@duduniao deploy-kubernetes]# gosh push -i node.ip conf/k8s-sysctl.conf /etc/sysctl.d/
[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "sysctl --system"
```

net.core.netdev_max_backlog = 65535

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_max_syn_backlog = 65535

net.core.somaxconn = 65535

net.ipv4.tcp_max_tw_buckets = 8192

net.ipv4.ip_local_port_range = 10240 65000

vm.swappiness = 0

2.2.7. Enable the ipvs and br_netfilter kernel modules

Reference: https://github.com/kubernetes/kubernetes/blob/master/pkg/proxy/ipvs/README.md

Check the kernel version first: on kernel 4.19 and later, load nf_conntrack instead of nf_conntrack_ipv4.

[root@duduniao deploy-kubernetes]# cat conf/k8s-module.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

br_netfilter

[root@duduniao deploy-kubernetes]# gosh push -i node.ip conf/k8s-module.conf /etc/modules-load.d/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "awk '{print \"modprobe --\",\$1}' /etc/modules-load.d/k8s-module.conf|bash"

# Make sure all of the modules above have been loaded successfully

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "cut -f1 -d ' ' /proc/modules | grep -E 'ip_vs|nf_conntrack|br_netfilter'"|xargs -n 7

10.4.7.81 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

10.4.7.88 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

10.4.7.86 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

10.4.7.85 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

10.4.7.87 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

10.4.7.84 br_netfilter ip_vs_sh ip_vs_wrr ip_vs_rr ip_vs nf_conntrack

# Install the ipvsadm client tool

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip --print.status failed "yum install -y ipset ipvsadm"
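One ordering detail is worth checking at this point: the `net.bridge.*` keys only exist once `br_netfilter` is loaded, so if `sysctl --system` ran before the modules were loaded, those settings may not have been applied and the command may need to be run again. The sketch below compares a sysctl file with the live values. The function names are hypothetical, and `read_sysctl` is kept as a separate function only so that it can be stubbed out when testing.

```shell
#!/usr/bin/env bash

# read_sysctl KEY
#   Print the live value of KEY (empty if the key does not exist).
read_sysctl() {
  sysctl -n "$1" 2>/dev/null
}

# verify_sysctl_conf FILE
#   Compare every "key = value" line in FILE with the live value.
#   Prints one line per mismatch and returns non-zero if anything differs.
verify_sysctl_conf() {
  local key value actual rc=0
  while IFS='=' read -r key value; do
    key=$(echo "$key" | tr -d '[:space:]')
    [ -z "$key" ] && continue
    case "$key" in \#*) continue ;; esac      # skip comment lines
    value=$(echo "$value" | xargs)            # trim and normalise whitespace
    actual=$(read_sysctl "$key" | xargs)
    if [ "$actual" != "$value" ]; then
      echo "MISMATCH $key: expected '$value', got '$actual'"
      rc=1
    fi
  done < "$1"
  return $rc
}

# Intended use on a node (not executed here):
#   sysctl --system
#   verify_sysctl_conf /etc/sysctl.d/k8s-sysctl.conf
```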

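3. Install dependent services

3.1. Deploy the Nginx LB

The Nginx LB mainly load-balances traffic for the Ingress Controller and for the API servers of multiple Master nodes. The current cluster has a single Master, so a load balancer in front of the API server is not strictly necessary, but we configure one anyway to make later expansion easier.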

[root@duduniao deploy-kubernetes]# gosh cmd -H 10.4.7.80 "yum install -y nginx nginx-mod-stream"

[root@duduniao deploy-kubernetes]# gosh cmd -H 10.4.7.80 "mkdir /etc/nginx/conf.d/stream -p"

[root@duduniao deploy-kubernetes]# gosh push -H 10.4.7.80 conf/nginx/nginx.conf /etc/nginx/

[root@duduniao deploy-kubernetes]# gosh push -H 10.4.7.80 conf/nginx/apiserver.conf /etc/nginx/conf.d/stream/

[root@duduniao deploy-kubernetes]# gosh cmd -H 10.4.7.80 "nginx -t"

10.4.7.80

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@duduniao deploy-kubernetes]# gosh cmd -H 10.4.7.80 "systemctl start nginx;systemctl enable nginx"

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 4096;

}

http {

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE

ssl_prefer_server_ciphers on;

log_format access '$time_local|$remote_addr|$upstream_addr|$status|'

'$upstream_connect_time|$bytes_sent|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

access_log /var/log/nginx/access.log access;

error_log /var/log/nginx/error.log;

gzip on;

map $http_upgrade $connection_upgrade {

default upgrade;

'' close;

}

include /etc/nginx/conf.d/http/*.conf;

}

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

access_log /var/log/nginx/stream_access.log proxy;

error_log /var/log/nginx/stream_error.log;

include /etc/nginx/conf.d/stream/*.conf;

}

upstream kube-apiserver {

server 10.4.7.81:6443 max_fails=1 fail_timeout=60s ;

# server 10.4.7.82:6443 max_fails=1 fail_timeout=60s ;

# server 10.4.7.83:6443 max_fails=1 fail_timeout=60s ;

}

server {

listen 0.0.0.0:6443 ;

allow 192.168.0.0/16;

allow 10.0.0.0/8;

deny all;

proxy_connect_timeout 2s;

proxy_next_upstream on;

proxy_next_upstream_timeout 5;

proxy_next_upstream_tries 1;

proxy_pass kube-apiserver;

access_log /var/log/nginx/kube-apiserver.log proxy;

}
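Before relying on the LB, it can help to confirm that every active upstream in the stream config is actually reachable. The following sketch is an illustration only: the function names are hypothetical, the parser only understands the simple one-`server`-per-line layout shown above, and the TCP probe relies on bash's `/dev/tcp` feature and the coreutils `timeout` command.

```shell
#!/usr/bin/env bash

# list_upstreams FILE
#   Print host:port for every uncommented "server host:port ..." line in FILE.
#   "server {" block openers and commented-out servers are ignored.
list_upstreams() {
  awk '$1 == "server" && $2 ~ /:[0-9]+;?$/ { sub(/;$/, "", $2); print $2 }' "$1"
}

# port_open HOST PORT
#   Succeed if a TCP connection to HOST:PORT can be opened within 2 seconds.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# check_upstreams FILE
#   Report "open" or "closed" for each upstream found in FILE.
check_upstreams() {
  local target
  for target in $(list_upstreams "$1"); do
    if port_open "${target%:*}" "${target##*:}"; then
      echo "$target open"
    else
      echo "$target closed"
    fi
  done
}

# Intended use on the LB host once the API server is running (not executed here):
#   check_upstreams /etc/nginx/conf.d/stream/apiserver.conf
```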

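3.2. Deploy containerd

3.2.1. Deploy the containerd server

containerd can be installed in two ways: from the docker-ce repository, or by downloading the binary packages and installing them manually. We choose the latter, which is more work.

Detailed installation instructions for containerd are on its GitHub page. Note that we install the CNI plugins from the Kubernetes repository instead.

containerd can be downloaded from its releases page; we use version 1.6.4, because 1.6.3 has a bug.

runc can be downloaded from its releases page; we use version 1.1.1.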

[root@duduniao deploy-kubernetes]# cd containerd/

[root@duduniao containerd]# wget https://github.com/containerd/containerd/releases/download/v1.6.4/containerd-1.6.4-linux-amd64.tar.gz

[root@duduniao containerd]# wget -O runc https://github.com/opencontainers/runc/releases/download/v1.1.1/runc.amd64

[root@duduniao containerd]# tar -tf containerd-1.6.4-linux-amd64.tar.gz

bin/

bin/containerd-stress

bin/ctr

bin/containerd-shim-runc-v1

bin/containerd

bin/containerd-shim

bin/containerd-shim-runc-v2

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/containerd-1.6.4-linux-amd64.tar.gz /tmp/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "tar -xf /tmp/containerd-1.6.4-linux-amd64.tar.gz -C /usr/local"

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/containerd.service /usr/lib/systemd/system/ # file contents are shown in the next code block

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "mkdir /etc/containerd"

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/config.toml /etc/containerd/

[root@duduniao deploy-kubernetes]# chmod +x containerd/runc

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/runc /usr/local/bin/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "systemctl daemon-reload; systemctl start containerd ; systemctl enable containerd "

# Copyright The containerd Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

# overlay is loaded explicitly here, which is why it was not added to k8s-module.conf above

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

The containerd configuration file already includes a docker.io registry mirror, SystemdCgroup = true, and sandbox_image.

disabled_plugins = []

imports = []

oom_score = 0

plugin_dir = ""

required_plugins = []

root = "/var/lib/containerd"

state = "/run/containerd"

temp = ""

version = 2

[cgroup]

path = ""

[debug]

address = ""

format = ""

gid = 0

level = ""

uid = 0

[grpc]

address = "/run/containerd/containerd.sock"

gid = 0

max_recv_message_size = 16777216

max_send_message_size = 16777216

tcp_address = ""

tcp_tls_ca = ""

tcp_tls_cert = ""

tcp_tls_key = ""

uid = 0

[metrics]

address = ""

grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]

deletion_threshold = 0

mutation_threshold = 100

pause_threshold = 0.02

schedule_delay = "0s"

startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]

device_ownership_from_security_context = false

disable_apparmor = false

disable_cgroup = false

disable_hugetlb_controller = true

disable_proc_mount = false

disable_tcp_service = true

enable_selinux = false

enable_tls_streaming = false

enable_unprivileged_icmp = false

enable_unprivileged_ports = false

ignore_image_defined_volumes = false

max_concurrent_downloads = 3

max_container_log_line_size = 16384

netns_mounts_under_state_dir = false

restrict_oom_score_adj = false

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

selinux_category_range = 1024

stats_collect_period = 10

stream_idle_timeout = "4h0m0s"

stream_server_address = "127.0.0.1"

stream_server_port = "0"

systemd_cgroup = false

tolerate_missing_hugetlb_controller = true

unset_seccomp_profile = ""

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/opt/cni/bin"

conf_dir = "/etc/cni/net.d"

conf_template = ""

ip_pref = ""

max_conf_num = 1

[plugins."io.containerd.grpc.v1.cri".containerd]

default_runtime_name = "runc"

disable_snapshot_annotations = true

discard_unpacked_layers = false

ignore_rdt_not_enabled_errors = false

no_pivot = false

snapshotter = "overlayfs"

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

[plugins."io.containerd.grpc.v1.cri".image_decryption]

key_model = "node"

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://q2gr04ke.mirror.aliyuncs.com"]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]

tls_cert_file = ""

tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]

path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]

interval = "10s"

[plugins."io.containerd.internal.v1.tracing"]

sampling_ratio = 1.0

service_name = "containerd"

[plugins."io.containerd.metadata.v1.bolt"]

content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]

no_prometheus = false

[plugins."io.containerd.runtime.v1.linux"]

no_shim = false

runtime = "runc"

runtime_root = ""

shim = "containerd-shim"

shim_debug = false

[plugins."io.containerd.runtime.v2.task"]

platforms = ["linux/amd64"]

sched_core = false

[plugins."io.containerd.service.v1.diff-service"]

default = ["walking"]

[plugins."io.containerd.service.v1.tasks-service"]

rdt_config_file = ""

[plugins."io.containerd.snapshotter.v1.aufs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.btrfs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.devmapper"]

async_remove = false

base_image_size = ""

discard_blocks = false

fs_options = ""

fs_type = ""

pool_name = ""

root_path = ""

[plugins."io.containerd.snapshotter.v1.native"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.overlayfs"]

root_path = ""

upperdir_label = false

[plugins."io.containerd.snapshotter.v1.zfs"]

root_path = ""

[plugins."io.containerd.tracing.processor.v1.otlp"]

endpoint = ""

insecure = false

protocol = ""

[proxy_plugins]

[stream_processors]

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]

accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar"

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]

accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]

"io.containerd.timeout.bolt.open" = "0s"

"io.containerd.timeout.shim.cleanup" = "5s"

"io.containerd.timeout.shim.load" = "5s"

"io.containerd.timeout.shim.shutdown" = "3s"

"io.containerd.timeout.task.state" = "2s"

[ttrpc]

address = ""

gid = 0

uid = 0
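Because the configuration file is long, the customised settings are easy to lose track of. A small helper can print just the keys of interest so they can be compared across nodes. This is a sketch with a hypothetical function name. `SystemdCgroup = true` matters because kubeadm defaults the kubelet to the systemd cgroup driver from v1.22 on, so the runtime should use the same driver.

```shell
#!/usr/bin/env bash

# show_key FILE KEY
#   Print every "KEY = value" line for KEY in FILE with surrounding whitespace
#   trimmed. The match is case-sensitive, so SystemdCgroup and systemd_cgroup
#   are reported separately.
show_key() {
  grep -E "^[[:space:]]*$2[[:space:]]*=" "$1" \
    | sed -E 's/^[[:space:]]+//; s/[[:space:]]+$//'
}

# Intended use on a node (not executed here):
#   show_key /etc/containerd/config.toml SystemdCgroup
#   show_key /etc/containerd/config.toml sandbox_image
```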

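3.2.2. Configure the crictl client

crictl is a container debugging tool compatible with the CRI interface. Because the default ctr is not very convenient and containerd lacks a client as capable as docker's, deploying crictl is recommended in containerd environments. For debugging Kubernetes nodes with crictl, see the Kubernetes documentation; the official README also covers it. Here we use version 1.23.0.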

[root@duduniao deploy-kubernetes]# wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.23.0/crictl-v1.23.0-linux-amd64.tar.gz -O containerd/crictl-v1.23.0-linux-amd64.tar.gz

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/crictl-v1.23.0-linux-amd64.tar.gz /tmp/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "tar -xf /tmp/crictl-v1.23.0-linux-amd64.tar.gz -C /usr/local/bin"

[root@duduniao deploy-kubernetes]# gosh push -i node.ip containerd/crictl.yaml /etc/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "crictl completion bash > /etc/bash_completion.d/crictl"

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false # set to true when debugging

4. Deploy Kubernetes

4.1. Install the cluster

4.1.1. Configure hostname resolution

10.4.7.81 master-81

10.4.7.84 worker-84

10.4.7.85 worker-85

10.4.7.86 worker-86

10.4.7.87 worker-87

10.4.7.88 worker-88

[root@duduniao deploy-kubernetes]# gosh push -i node.ip conf/hosts.dns /tmp/

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "cat /tmp/hosts.dns >> /etc/hosts"
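Appending with `>>` adds duplicate entries if the step is ever run twice. An idempotent variant could look like the sketch below; the function name `append_missing_hosts` is hypothetical, and it only treats exact line matches as duplicates.

```shell
#!/usr/bin/env bash

# append_missing_hosts SRC DST
#   Append each non-empty line of SRC to DST unless DST already contains
#   exactly that line, so re-running the step does not create duplicates.
append_missing_hosts() {
  local line
  while IFS= read -r line; do
    [ -z "$line" ] && continue
    grep -qxF "$line" "$2" || echo "$line" >> "$2"
  done < "$1"
}

# Intended use on a node (not executed here):
#   append_missing_hosts /tmp/hosts.dns /etc/hosts
```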

4.1.2. Install the binaries

This experiment installs Kubernetes 1.23.6.

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-$basearch

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

[root@duduniao deploy-kubernetes]# gosh push -i node.ip yum.repos.d/kubernetes.repo /etc/yum.repos.d/

# Install version 1.23.6; pick a different version if you need one

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "yum search kubelet kubeadm kubectl --disableexcludes=kubernetes --showduplicates|grep 1.23.6"

10.4.7.81

kubelet-1.23.6-0.x86_64 : Container cluster management

kubeadm-1.23.6-0.x86_64 : Command-line utility for administering a Kubernetes

kubectl-1.23.6-0.x86_64 : Command-line utility for interacting with a Kubernetes

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "yum install -y --disableexcludes=kubernetes kubelet-1.23.6-0.x86_64 kubeadm-1.23.6-0.x86_64 kubectl-1.23.6-0.x86_64"

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "systemctl start kubelet ; systemctl enable kubelet"

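# Installing kubelet also installs the CNI plugins; if you installed a newer CNI binary following the official documentation while installing containerd, there may be a conflict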

[root@duduniao deploy-kubernetes]# gosh cmd -i node.ip "ls /opt/cni"

4.1.3. Initialize the control plane

[root@master-81 ~]# kubeadm init --control-plane-endpoint "10.4.7.80:6443" --pod-network-cidr 10.200.0.0/16 --service-cidr 10.100.0.0/16 --image-repository registry.aliyuncs.com/google_containers --upload-certs --apiserver-cert-extra-sans k8s-local-01.huanle.com --apiserver-cert-extra-sans k8s-local.huanle.com

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.4.7.80:6443 --token uyl0l1.j6a6b6bng2ofacnd \

--discovery-token-ca-cert-hash sha256:8d53c43c27b24cd882683e53a6be0e21a7d59b0bc4e70feee0244a2a60c61b1c \

--control-plane --certificate-key 493949abeee92288565f34526541dece387aa23ac4c58d1fecebd55de02a5426

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.4.7.80:6443 --token uyl0l1.j6a6b6bng2ofacnd \

--discovery-token-ca-cert-hash sha256:8d53c43c27b24cd882683e53a6be0e21a7d59b0bc4e70feee0244a2a60c61b1c

4.1.4. Initialize the data plane

[root@duduniao deploy-kubernetes]# gosh cmd -i worker.ip "kubeadm join 10.4.7.80:6443 --token uyl0l1.j6a6b6bng2ofacnd --discovery-token-ca-cert-hash sha256:8d53c43c27b24cd882683e53a6be0e21a7d59b0bc4e70feee0244a2a60c61b1c"

4.1.5. Configure kube-proxy in ipvs mode

[root@duduniao deploy-kubernetes]# mkdir ~/.kube

[root@duduniao deploy-kubernetes]# scp 10.4.7.81:/etc/kubernetes/admin.conf ~/.kube/config

[root@duduniao deploy-kubernetes]# scp 10.4.7.81:/usr/bin/kubectl /usr/local/bin/

[root@duduniao deploy-kubernetes]# kubectl edit -n kube-system configmaps kube-proxy # set mode to ipvs in config.conf

[root@duduniao deploy-kubernetes]# kubectl get pod -n kube-system | awk '$1 ~ /^kube-proxy/{print "kubectl delete pod -n kube-system",$1}'|bash # recreate the pods

# Run ipvsadm -ln on any node to verify that ipvs rules exist
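The interactive edit can also be scripted, which is handy when rebuilding the environment from a snapshot. The sketch below is one possible approach, not the author's method: the function name `set_ipvs_mode` is hypothetical, and it assumes the ConfigMap still contains the kubeadm default line `mode: ""`.

```shell
#!/usr/bin/env bash

# set_ipvs_mode
#   Read a kube-proxy ConfigMap manifest on stdin and write it to stdout with
#   an empty proxy mode replaced by "ipvs". Lines with any other mode value
#   are left untouched.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode:[[:space:]]*""[[:space:]]*$/\1mode: "ipvs"/'
}

# Intended use from the deployment host (not executed here):
#   kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl apply -f -
#   kubectl -n kube-system rollout restart daemonset kube-proxy
```

`kubectl rollout restart` recreates the kube-proxy pods one node at a time, which has the same effect as deleting them by hand.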

4.1.6. Verify the cluster status

# The nodes report NotReady because no CNI plugin has been deployed yet

[root@duduniao deploy-kubernetes]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master-81 NotReady control-plane,master 6m31s v1.23.6 10.4.7.81 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-84 NotReady <none> 2m35s v1.23.6 10.4.7.84 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-85 NotReady <none> 2m35s v1.23.6 10.4.7.85 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-86 NotReady <none> 2m35s v1.23.6 10.4.7.86 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-87 NotReady <none> 2m35s v1.23.6 10.4.7.87 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-88 NotReady <none> 2m35s v1.23.6 10.4.7.88 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

[root@duduniao deploy-kubernetes]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d8c4cb4d-g2ztc 0/1 Pending 0 6m23s

kube-system coredns-6d8c4cb4d-nxr8r 0/1 Pending 0 6m23s

kube-system etcd-master-81 1/1 Running 1 6m33s

kube-system kube-apiserver-master-81 1/1 Running 1 6m40s

kube-system kube-controller-manager-master-81 1/1 Running 1 6m33s

kube-system kube-proxy-c5mf5 1/1 Running 0 2m44s

kube-system kube-proxy-k9n8n 1/1 Running 0 6m23s

kube-system kube-proxy-nrf75 1/1 Running 0 2m44s

kube-system kube-proxy-nt8kp 1/1 Running 0 2m44s

kube-system kube-proxy-rrsk9 1/1 Running 0 2m44s

kube-system kube-proxy-xh7rl 1/1 Running 0 2m45s

kube-system kube-scheduler-master-81 1/1 Running 1 6m38s

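4.2. Deploy plugins and essential services

4.2.1. Deploy the CNI plugin

There are many CNI plugins to choose from. In clusters that are not large-scale, we can usually be confident that all nodes sit in the same VPC and can reach each other without crossing a router; for that scenario I personally recommend the simple flannel plugin in host-gw mode.

For convenience, Flannel is installed here as the plugin. GitHub: https://github.com/coreos/flannel

Installation guide: https://github.com/coreos/flannel/blob/master/Documentation/kubernetes.md

Flannel network backends: https://github.com/flannel-io/flannel/blob/master/Documentation/backends.md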

[root@duduniao flannel]# wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

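# The following need to be modified:

# 1. Network must match the Pod CIDR specified at kubeadm init

# 2. Type is the flannel backend; host-gw is recommended

# 3. The flannel images: the current version defaults to the rancher repository, so they are left unchanged here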

[root@duduniao flannel]# grep -Ew "Type|image|Network" kube-flannel.yml

"Network": "10.200.0.0/16",

"Type": "host-gw"

#image: flannelcni/flannel-cni-plugin:v1.0.1 for ppc64le and mips64le (dockerhub limitations may apply)

image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1

#image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)

image: rancher/mirrored-flannelcni-flannel:v0.17.0

#image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)

image: rancher/mirrored-flannelcni-flannel:v0.17.0

[root@duduniao flannel]# kubectl apply -f kube-flannel.yml

[root@duduniao deploy-kubernetes]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master-81 Ready control-plane,master 9h v1.23.6 10.4.7.81 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-84 Ready <none> 9h v1.23.6 10.4.7.84 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-85 Ready <none> 9h v1.23.6 10.4.7.85 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-86 Ready <none> 9h v1.23.6 10.4.7.86 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-87 Ready <none> 9h v1.23.6 10.4.7.87 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

worker-88 Ready <none> 9h v1.23.6 10.4.7.88 <none> CentOS Linux 7 (Core) 5.4.203-1.el7.elrepo.x86_64 containerd://1.6.4

[root@duduniao deploy-kubernetes]# kubectl get pod -n kube-system | grep core # coredns is now running normally

coredns-6d8c4cb4d-mqgk4 1/1 Running 0 25m

coredns-6d8c4cb4d-nxr8r 1/1 Running 0 9h
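The two manifest edits (Network and Type) can be applied with `sed` instead of by hand. This is a sketch with a hypothetical function name; it assumes the manifest keeps the `"Network": "..."` and `"Type": "..."` JSON keys in the layout shown in the `grep` output above.

```shell
#!/usr/bin/env bash

# patch_flannel FILE POD_CIDR BACKEND
#   Rewrite the "Network" and "Type" values in the net-conf.json section of a
#   kube-flannel.yml manifest in place, keeping a backup in FILE.bak.
patch_flannel() {
  sed -i.bak -E \
    -e "s#\"Network\": \"[^\"]*\"#\"Network\": \"$2\"#" \
    -e "s#\"Type\": \"[^\"]*\"#\"Type\": \"$3\"#" \
    "$1"
}

# Intended use before applying the manifest (not executed here):
#   patch_flannel kube-flannel.yml 10.200.0.0/16 host-gw
#   kubectl apply -f kube-flannel.yml
```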

4.2.2. Deploy the ingress controller

There are many Ingress Controllers (Istio gateway, nginx, traefik and so on). Here nginx is used as the Ingress Controller; for deployment, refer to the blog post below.

05-3-1-nginx

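4.2.3. Deploy metrics-server

In Kubernetes, the metrics that HPA autoscaling relies on and the resource usage shown by kubectl top can be obtained from metrics-server, but it is not suitable as an accurate source of monitoring data.

Project page: https://github.com/kubernetes-sigs/metrics-server. In most cases a Deployment with a single replica is enough; it supports up to 5000 nodes, and each node consumes 3m CPU and 3 MB of memory.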

[root@duduniao metrics-server]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

[root@duduniao metrics-server]# grep -w -E "image|kubelet-insecure-tls" components.yaml # 1. replacing the image registry is recommended; 2. add the --kubelet-insecure-tls startup flag to skip certificate verification

- --kubelet-insecure-tls

# image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.1

[root@duduniao metrics-server]# kubectl apply -f components.yaml

[root@duduniao metrics-server]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master-81 166m 8% 1089Mi 28%

worker-84 74m 1% 333Mi 4%

worker-85 55m 1% 283Mi 3%

worker-86 64m 1% 331Mi 4%

worker-87 50m 1% 252Mi 3%

worker-88 59m 1% 268Mi 3%

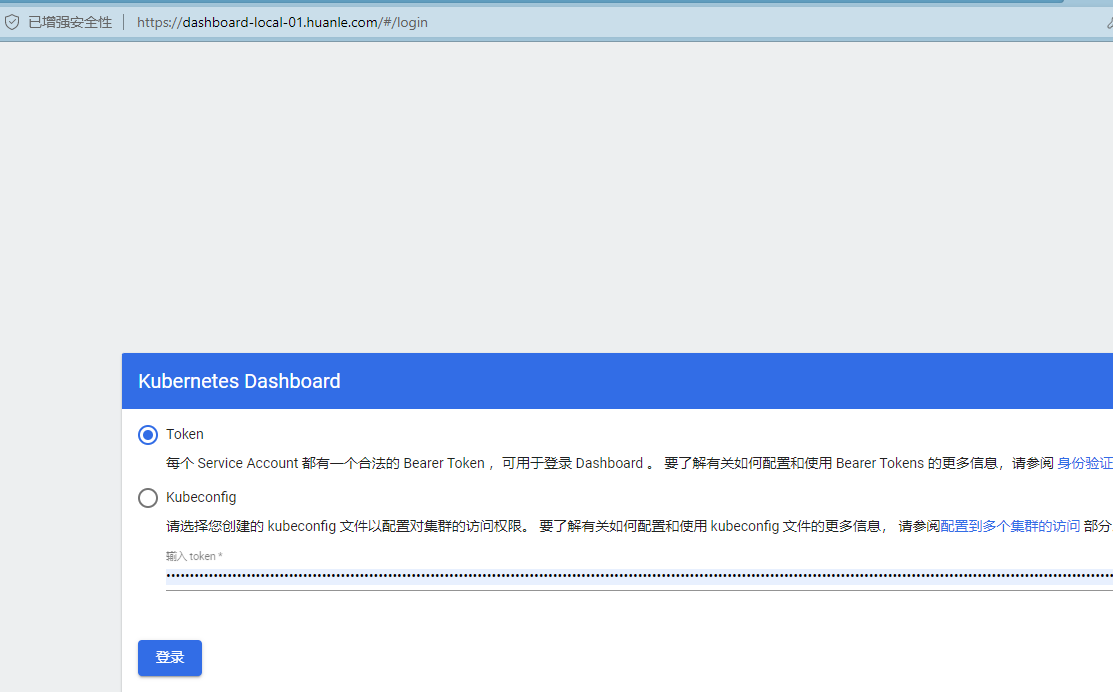

4.2.4. Install kubernetes-dashboard

The default dashboard YAML uses an HTTPS endpoint. The dashboard GitHub page: https://github.com/kubernetes/dashboard

I adjusted the manifest to add an administrator account and an ingress resource.

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.5.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

---

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  annotations:
    # nginx.ingress.kubernetes.io/secure-backends:
    nginx.org/ssl-services: "kubernetes-dashboard"
spec:
  ingressClassName: nginx
  rules:
    - host: dashboard-local-01.huanle.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443
  tls:
    - hosts: ["dashboard-local-01.huanle.com"]

---

# admin.yaml: administrator account
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard-admin
    namespace: kube-system
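
Logging in to the Dashboard with this administrator account requires its bearer token. The helper below is a minimal sketch and not part of the original setup: the function name `dashboard_admin_token` is hypothetical, and it assumes `kubectl` is already configured for this cluster. It first tries `kubectl create token` (available from Kubernetes 1.24), and otherwise falls back to reading the token Secret that older releases generate automatically for each ServiceAccount.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a login token for the kubernetes-dashboard-admin
# ServiceAccount defined in admin.yaml.
dashboard_admin_token() {
  local ns="kube-system" sa="kubernetes-dashboard-admin"
  local token secret

  # Kubernetes >= 1.24: request a short-lived token through the TokenRequest API.
  if token=$(kubectl -n "$ns" create token "$sa" 2>/dev/null); then
    printf '%s\n' "$token"
    return 0
  fi

  # Older clusters: look up the auto-generated token Secret and decode it.
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  [ -n "$secret" ] || return 1
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}

# Usage: paste the printed value into the Dashboard login page.
#   dashboard_admin_token
```

Because the account is bound to `cluster-admin`, the token grants full control of the cluster and should be handled accordingly.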

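4.2.5. Install nfs-storage-class

A Kubernetes cluster usually needs a storage class. NFS is the most common choice in test environments, so NFS is used as the example here. GitHub repository: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

# middle-89 provides the NFS storage and every node mounts it, so all of these nodes need the NFS utilities installed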

[root@duduniao deploy-kubernetes]# gosh cmd -i all.ip "yum install -y nfs-utils"

[root@middle-89 ~]# echo '/data/nfs 10.4.7.0/24(rw,sync,no_wdelay,no_root_squash)' > /etc/exports

[root@middle-89 ~]# mkdir /data/nfs

[root@middle-89 ~]# systemctl start nfs

[root@middle-89 ~]# systemctl enable nfs

[root@middle-89 ~]# showmount -e

Export list for middle-89:

/data/nfs 10.4.7.0/24
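
Before deploying the provisioner, it can help to confirm that the export is also visible from the cluster nodes, not only from the NFS server itself. The following is a small sketch: the function name `nfs_export_visible` is made up for illustration, and it only assumes that `showmount` from nfs-utils is installed on the node where it runs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <server> exports exactly <path>.
nfs_export_visible() {
  local server="$1" path="$2"
  # `showmount -e` prints a header line, then one "<path> <allowed clients>" line per export.
  showmount -e "$server" | awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Usage on any node (values taken from the setup above):
#   nfs_export_visible 10.4.7.89 /data/nfs && echo "export is visible"
```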

---

apiVersion: v1
kind: Namespace
metadata:
  name: infra-storage

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: infra-storage
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: linuxduduniao/nfs-subdir-external-provisioner:v4.0.1
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: default-nfs-provisioner
            - name: NFS_SERVER
              value: 10.4.7.89
            - name: NFS_PATH
              value: /data/nfs
            - name: TZ
              value: Asia/Shanghai
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.4.7.89
            path: /data/nfs

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: infra-storage

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: infra-storage
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: infra-storage
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: infra-storage
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: infra-storage
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: default-nfs-provisioner
parameters:
  archiveOnDelete: "false"
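
Once these manifests are applied, it is worth confirming that the provisioner is running and the StorageClass is registered before creating any claims. This is a minimal sketch, assuming `kubectl` points at this cluster; the function name `check_nfs_provisioner` is hypothetical, while the namespace, Deployment and StorageClass names come from the manifests above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for the provisioner and show which provisioner
# name the StorageClass is registered with.
check_nfs_provisioner() {
  # Block until the Deployment has rolled out, or give up after two minutes.
  kubectl -n infra-storage rollout status deployment/nfs-client-provisioner --timeout=120s || return 1
  # Print the StorageClass's provisioner field; it should match PROVISIONER_NAME.
  kubectl get storageclass managed-nfs-storage -o jsonpath='{.provisioner}'
  echo
}

# Usage:
#   check_nfs_provisioner
```

The value on the last line should equal the `PROVISIONER_NAME` environment variable of the Deployment (`default-nfs-provisioner` here); if the two differ, claims against the class stay in `Pending`.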

Verify the NFS storage

# test-claim.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

# test-pod.yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: gcr.io/google_containers/busybox:1.24
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim

[root@duduniao nfs-provisorner]# kubectl apply -f test-claim.yaml -f test-pod.yaml

[root@duduniao nfs-provisorner]# kubectl get pod

NAME READY STATUS RESTARTS AGE

test-pod 0/1 Completed 0 17s

[root@duduniao nfs-provisorner]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim Bound pvc-baf4603c-fea5-4ea6-93ac-b3387a1f150c 1Mi RWX managed-nfs-storage 34s
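
The provisioner stores each volume in a subdirectory of the export named `${namespace}-${pvcName}-${pvName}`, so the `SUCCESS` file written by the test pod can also be checked directly on the NFS server. The sketch below only builds that path; the function name `nfs_pv_dir` is hypothetical, and it assumes the claim lives in the `default` namespace, since the test manifests do not set one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the directory the provisioner uses for a claim,
# following its ${namespace}-${pvcName}-${pvName} naming scheme.
nfs_pv_dir() {
  local export_root="$1" namespace="$2" pvc="$3" pv="$4"
  # Strip a trailing slash from the export root so the path has no "//".
  printf '%s/%s-%s-%s\n' "${export_root%/}" "$namespace" "$pvc" "$pv"
}

# Usage on middle-89, with the volume name taken from `kubectl get pvc`:
#   ls "$(nfs_pv_dir /data/nfs default test-claim pvc-baf4603c-fea5-4ea6-93ac-b3387a1f150c)"
```

Because the StorageClass sets `archiveOnDelete: "false"`, deleting the claim removes this directory as well.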