- 1. Pod Network

- 2. Service Network

- 3. DNS

1. Pod Network

1.1. CNI Plugins

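CNI (Container Network Interface) is an open-source CNCF project. It consists of a specification and libraries for configuring network interfaces in containers, plus a set of plugins. Kubernetes components have the CNI code built in. A CNI plugin is responsible for the following: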

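- Setting up networking inside the container's network namespace (the namespace itself is created by the container runtime before the plugin is invoked)

- Assigning each container (Pod) an IP address that is unique within the cluster

- Creating network devices and maintaining the routing table of the Pod network

- Storing each node's subnet information in a datastore (the Kubernetes API or etcd)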

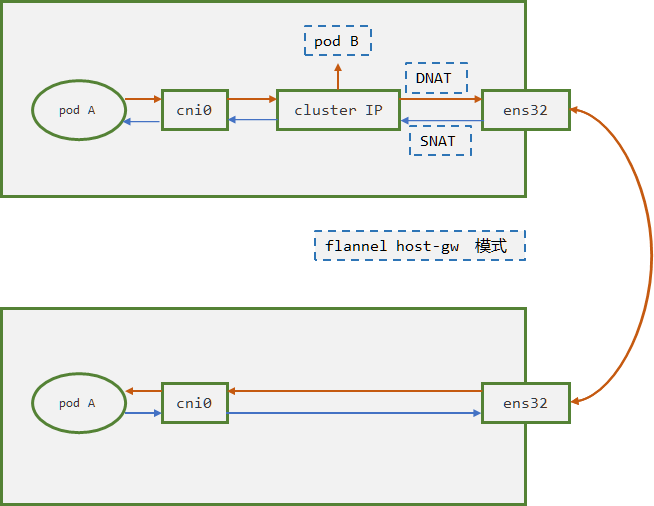
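The CNI contract works like this. The runtime executes the plugin binary, passes parameters in environment variables (`CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, `CNI_IFNAME`, ...) and sends the network configuration as JSON on stdin. The plugin then prints a JSON result. The toy sketch below models only the IP-allocation part of an `ADD` call.

It rests on several assumptions:
- The function `toy_cni_add`, the in-memory `allocated` set and the sample values are hypothetical.
- The result only loosely follows the CNI 0.3.x result format.
- A real plugin would also create the interface inside `CNI_NETNS`, install routes and persist its allocations.

```python
import ipaddress
import json


def toy_cni_add(env: dict, stdin_config: str, subnet: str, allocated: set) -> dict:
    """Hypothetical toy model of a CNI ADD call: allocate one IP and return a result dict.

    Parameters arrive as environment variables and the network config as JSON
    (normally read from stdin). No namespace, veth pair or route is created here.
    """
    if env.get("CNI_COMMAND") != "ADD":
        raise ValueError("this sketch only handles CNI_COMMAND=ADD")
    config = json.loads(stdin_config)
    net = ipaddress.ip_network(subnet)
    hosts = list(net.hosts())
    gateway = hosts[0]  # first usable address, e.g. 172.16.4.1 on the cni0 bridge
    for ip in hosts[1:]:  # skip the gateway, hand out the lowest free address
        if ip not in allocated:
            allocated.add(ip)
            # Loosely modeled on the CNI 0.3.x result format
            return {
                "cniVersion": config["cniVersion"],
                "ips": [{"version": "4", "address": f"{ip}/{net.prefixlen}", "gateway": str(gateway)}],
                # default route via the bridge, comparable to "isDefaultGateway": true
                "routes": [{"dst": "0.0.0.0/0", "gw": str(gateway)}],
            }
    raise RuntimeError(f"no free address left in {subnet}")
```

In this model, two consecutive `ADD` calls against `172.16.4.0/24` return `172.16.4.2/24` and then `172.16.4.3/24`, while `172.16.4.1` is reserved as the gateway.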

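If all cluster nodes are on the same layer-2 network and there are no complex feature requirements, Flannel's host-gw backend is recommended. If the network environment is more complex, consider Calico. These two are currently the most widely used. For benchmark results of common CNI plugins, see: https://itnext.io/benchmark-results-of-kubernetes-network-plugins-cni-over-10gbit-s-network-36475925a560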

1.2. Flannel

1.2.1. Two Modes

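Flannel is a lightweight layer-3 CNI plugin designed for Kubernetes that focuses on Pod-to-Pod network connectivity. From the Pod address pool, Flannel assigns each host its own subnet, so Pods created on the same node share one subnet. Flannel stores its network configuration in Kubernetes or etcd. It supports several network modes (officially called backends), which should not be changed at runtime. The recommended ones are vxlan and host-gw; see the official documentation for more information.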

- vxlan

vxlan is Flannel's default backend. It encapsulates the Pod's packets in UDP datagrams. Related configuration parameters:

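`Type`: `vxlan`; `DirectRouting`: whether to enable direct routing. When nodes are on the same layer-2 network, traffic between them is forwarded using plain routes, without UDP encapsulation.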
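The encapsulation itself is small. A VXLAN header is 8 bytes: a flags byte with the I bit (0x08) set, 24 reserved bits, a 24-bit VNI and 8 more reserved bits. The sketch below packs and parses such a header and derives the MTU left for the Pod's packet.

It makes these assumptions:
- VNI 1 is Flannel's default on Linux. It matches `instance 1` in the captures later in this document.
- Flannel's default UDP port on Linux is 8472; the IANA-assigned VXLAN port is 4789.
- The 1500-byte underlay MTU of the node NIC is an assumption.
- The helper names are illustrative.

```python
import struct

VXLAN_FLAG_I = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Return the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set: not a valid VXLAN header")
    return vni_word >> 8


def inner_mtu(underlay_mtu: int = 1500) -> int:
    """MTU left for the Pod's IP packet after VXLAN encapsulation over IPv4 (no IP options).

    The outer IPv4 + UDP + VXLAN headers and the inner Ethernet header all have
    to fit inside the underlay MTU.
    """
    outer_ipv4, outer_udp, vxlan, inner_ethernet = 20, 8, 8, 14
    return underlay_mtu - outer_ipv4 - outer_udp - vxlan - inner_ethernet
```

`inner_mtu(1500)` returns 1450, the same value as `FLANNEL_MTU` in the node's `subnet.env`. With `DirectRouting` enabled, traffic between nodes on the same L2 segment skips this overhead entirely.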

- host-gw

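This backend performs better. It does no UDP encapsulation and only installs routes to the destination hosts, but it requires all nodes to be on the same layer-2 network. Configuration parameter:

`Type`: `host-gw`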
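In host-gw mode, every node holds one route per remote node: that node's Pod subnet, reached via that node's IP on the shared L2 segment. The sketch below derives those routes and performs a longest-prefix-match lookup the way the kernel picks a route for a destination Pod IP.

The node IPs 10.4.7.54/55 and the subnets 172.16.3.0/24 and 172.16.4.0/24 come from the examples in this document. The uplink name `ens32` and the helper names are assumptions for illustration.

```python
import ipaddress

# node name -> (node IP on the shared L2 network, Pod subnet leased to that node)
NODES = {
    "centos-7-54": ("10.4.7.54", "172.16.3.0/24"),
    "centos-7-55": ("10.4.7.55", "172.16.4.0/24"),
}


def host_gw_routes(local_node: str, nodes: dict, dev: str = "ens32") -> list:
    """Routes a host-gw node needs: local subnet on cni0, each remote subnet via the remote node IP."""
    routes = []
    for node, (node_ip, subnet) in nodes.items():
        net = ipaddress.ip_network(subnet)
        if node == local_node:
            routes.append((net, None, "cni0"))  # directly connected through the bridge
        else:
            routes.append((net, ipaddress.ip_address(node_ip), dev))
    return routes


def lookup(routes: list, dst: str):
    """Longest-prefix match over the route list; None if nothing matches."""
    ip = ipaddress.ip_address(dst)
    matches = [r for r in routes if ip in r[0]]
    if not matches:
        return None
    return max(matches, key=lambda r: r[0].prefixlen)


def as_ip_route(route) -> str:
    """Render a route in `ip route` style."""
    net, gw, dev = route
    return f"{net} dev {dev}" if gw is None else f"{net} via {gw} dev {dev}"
```

On centos-7-55, a lookup for `172.16.3.188` yields `172.16.3.0/24 via 10.4.7.54 dev ens32`, so the packet leaves unencapsulated with the other node as the next hop.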

1.2.2. Flannel Deployment

1.2.2.1. Deployment Guide

https://www.yuque.com/duduniao/k8s/togtwi#1EeCk

1.2.2.2. Configuration

ConfigMap: holds the CNI plugin configuration and the Pod network address pool. Its `data` section contains:

`cni-conf.json`: `{ "name": "cbr0", "cniVersion": "0.3.1", "plugins": [ { "type": "flannel", "delegate": { "hairpinMode": true, "isDefaultGateway": true } }, { "type": "portmap", "capabilities": { "portMappings": true } } ] }`

`net-conf.json`: `{ "Network": "172.16.0.0/16", "Backend": { "Type": "vxlan" } }`

DaemonSet:

It runs two containers. The initContainer copies `cni-conf.json` from the ConfigMap into `/etc/cni/net.d/` on the node.

The kube-flannel container runs the flanneld process, which maintains the Pod network.
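The `Network` value in `net-conf.json` is the cluster-wide Pod pool. flanneld leases each node its own slice of it (a /24 by default), which is why Pods on the same node share a subnet. Below is a minimal sketch of that split, assuming a simple in-order assignment.

The function names and the node order are hypothetical; the real leases are stored in the Kubernetes API or etcd. The order is chosen so that centos-7-54 and centos-7-55 receive the 172.16.3.0/24 and 172.16.4.0/24 subnets seen in this document.

```python
import ipaddress


def assign_node_subnets(pod_cidr: str, nodes: list, subnet_len: int = 24) -> dict:
    """Hypothetical sketch: give each node the next free /subnet_len slice of the Pod CIDR."""
    leases = ipaddress.ip_network(pod_cidr).subnets(new_prefix=subnet_len)  # consecutive subnets
    result = {}
    for node in nodes:
        try:
            result[node] = next(leases)
        except StopIteration:
            raise RuntimeError(f"{pod_cidr} has no room left for node {node}") from None
    return result


def node_of(pod_ip: str, leases: dict) -> str:
    """Find the node whose subnet contains pod_ip."""
    ip = ipaddress.ip_address(pod_ip)
    for node, subnet in leases.items():
        if ip in subnet:
            return node
    raise LookupError(f"{pod_ip} is not in any node subnet")
```

With the node order `["node-0", "node-1", "node-2", "centos-7-54", "centos-7-55"]`, `node_of("172.16.3.188", ...)` returns `centos-7-54`. A /16 pool split into /24s leaves room for 256 nodes.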

- Flannel-related configuration on the node

```yaml

[root@centos-7-55 ~]# cat /etc/cni/net.d/10-flannel.conflist # cni 配置文件

{

“name”: “cbr0”,

“cniVersion”: “0.3.1”,

“plugins”: [

{

}, {"type": "flannel", "delegate": { "hairpinMode": true, "isDefaultGateway": true }

} ] }"type": "portmap", "capabilities": { "portMappings": true }

[root@centos-7-55 ~]# cat /var/run/flannel/subnet.env # 当前节点的Pod地址池和网络配置 FLANNEL_NETWORK=172.16.0.0/16 FLANNEL_SUBNET=172.16.4.1/24 FLANNEL_MTU=1450 FLANNEL_IPMASQ=true

[root@centos-7-55 ~]# route -n # 注意路由配置 Kernel IP routing table Destination Gateway Genmask Flags Metric Ref Use Iface 0.0.0.0 10.4.7.254 0.0.0.0 UG 100 0 0 ens32 10.4.7.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32 172.16.0.0 172.16.0.0 255.255.255.0 UG 0 0 0 flannel.1 172.16.1.0 172.16.1.0 255.255.255.0 UG 0 0 0 flannel.1 172.16.2.0 172.16.2.0 255.255.255.0 UG 0 0 0 flannel.1 172.16.3.0 172.16.3.0 255.255.255.0 UG 0 0 0 flannel.1 172.16.4.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0 172.16.5.0 172.16.5.0 255.255.255.0 UG 0 0 0 flannel.1 172.24.20.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

<a name="IgZxA"></a>

### 1.2.3. vxlan通信原理

<a name="w2S8p"></a>

#### 1.2.3.1. 原理描述

以两个Pod跨节点 通信为例,使用以下deployment做验证

```yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: slb-deploy

namespace: apps

spec:

replicas: 3

selector:

matchLabels:

app: slb

template:

metadata:

labels:

app: slb

spec:

containers:

- name: slb-demo

image: linuxduduniao/nginx:v1.0.0

[root@centos-7-51 ~]# kubectl get pod -n apps -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

slb-deploy-86b95846-b29pf 1/1 Running 3 4d1h 172.16.4.39 centos-7-55 <none> <none>

slb-deploy-86b95846-dj9n9 1/1 Running 3 4d1h 172.16.3.188 centos-7-54 <none> <none>

slb-deploy-86b95846-r6wjg 1/1 Running 3 4d1h 172.16.4.41 centos-7-55 <none> <none>

[root@centos-7-51 ~]# kubectl exec -it -n apps slb-deploy-86b95846-b29pf -- ping -c 1 -w 1 172.16.3.188

PING 172.16.3.188 (172.16.3.188) 56(84) bytes of data.

64 bytes from 172.16.3.188: icmp_seq=1 ttl=62 time=0.646 ms

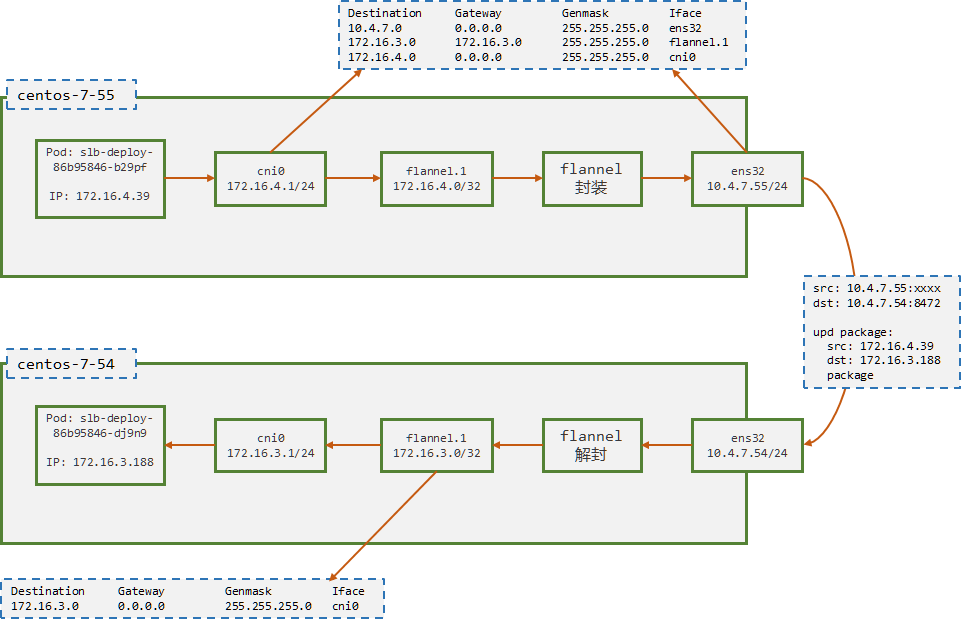

- centos-7-55 流转过程:

- Pod 的出口网关为 cni0,icmp 报文首先流向 cni0 设备

- icmp 报文到达 cni0 后,根据路由的下一跳地址,将icmp 报文转到 flannel.1

- flannel 程序在 flannel.1 收到包文后,将其放入 upd 数据层,并打上 udp 报文头部

- 宿主机根据udp的目标IP地址,将udp报文通过ens32发给centos-7-54的flannel服务(监听8472端口 )

centos-7-54 流转过程

centos-7-55 客户端容器准备

[root@centos-7-51 ~]# kubectl exec -it -n apps slb-deploy-86b95846-b29pf -- bash [root@slb-deploy-86b95846-b29pf /]# ping 172.16.3.188 # 开启长ping模式centos-7-55 cni0 抓包

[root@centos-7-55 ~]# tcpdump -i cni0 dst 172.16.3.188 and icmp # ICMP 包, 172.16.4.39 > 172.16.3.188 23:25:48.438193 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 92, length 64 23:25:49.439716 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 93, length 64centos-7-55 flannel.1 抓包

[root@centos-7-55 ~]# tcpdump -i flannel.1 dst 172.16.3.188 and icmp # ICMP 包, 172.16.4.39 > 172.16.3.188 23:27:16.504208 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 180, length 64 23:27:17.505122 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 181, length 64centos-7-55 ens32 抓包

[root@centos-7-55 ~]# tcpdump -i ens32 dst 10.4.7.54 and udp and port 8472 -n # 注意此处, upd包文中包含了一层 icmp 包,这就是overlay network 23:28:41.568671 IP 10.4.7.55.48053 > 10.4.7.54.otv: OTV, flags [I] (0x08), overlay 0, instance 1 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 265, length 64 23:28:42.570468 IP 10.4.7.55.48053 > 10.4.7.54.otv: OTV, flags [I] (0x08), overlay 0, instance 1 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 266, length 64centos-7-54 ens32 抓包

[root@centos-7-54 ~]# tcpdump -i ens32 udp and src 10.4.7.55 -n # 从centos-7-55来的upd包中,包含了一层 icmp 包 23:31:31.700409 IP 10.4.7.55.48053 > 10.4.7.54.otv: OTV, flags [I] (0x08), overlay 0, instance 1 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 435, length 64 23:31:32.701438 IP 10.4.7.55.48053 > 10.4.7.54.otv: OTV, flags [I] (0x08), overlay 0, instance 1 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 436, length 64centos-7-54 flannel 抓包

[root@centos-7-54 ~]# tcpdump -i flannel.1 icmp and dst 172.16.3.188 # 注意此处,flannel解包后,报文从 flannel.1 流出 23:35:16.862788 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 660, length 64 23:35:17.862577 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 661, length 64centos-7-54 cni0 抓包

[root@centos-7-54 ~]# tcpdump -i cni0 icmp and dst 172.16.3.188 # 从flannel.1到cni0是通过路由指定 23:37:54.979933 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 818, length 64 23:37:55.980852 IP 172.16.4.39 > 172.16.3.188: ICMP echo request, id 60, seq 819, length 641.2.4. host-gw通信过程

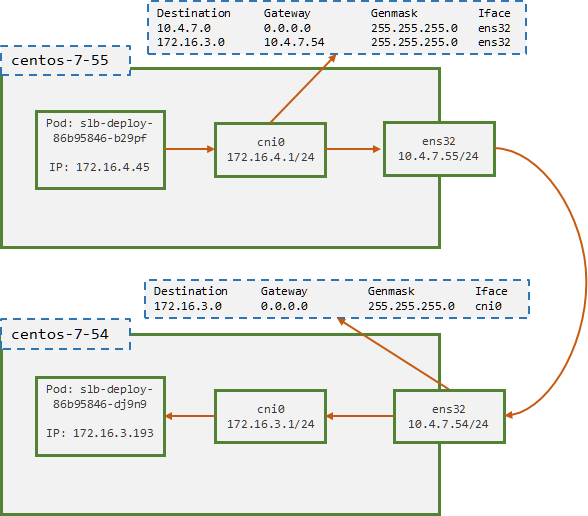

以上述的Deployment创建的Pod为例,以

slb-deploy-86b95846-b29pf跨节点pingslb-deploy-86b95846-dj9n9进行分析[root@duduniao local-k8s-yaml]# kubectl get pod -n apps -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES slb-deploy-86b95846-b29pf 1/1 Running 5 4d23h 172.16.4.45 centos-7-55 <none> <none> slb-deploy-86b95846-dj9n9 1/1 Running 5 4d23h 172.16.3.193 centos-7-54 <none> <none> slb-deploy-86b95846-r6wjg 1/1 Running 5 4d23h 172.16.4.46 centos-7-55 <none> <none>

在host-gw模式下,不涉及 flannel.1 的封包,性能损失较少,在节点规模较少的时候使用是个不错的选择。由于网络通信仅仅设计路由的下一跳,此处不做抓包分析。

2. Service网络

2.1. Service的介绍

参考 服务发现 章节

2.2. iptables和ipvs模式详解

2.2.1. iptables模式

2.2.1.1. iptables入门

分析 iptables 规则之前,需要对 iptables 的规则基本掌握,可参考: https://www.yuque.com/duduniao/notes/yg3qq0

2.2.1.2. 流量路线分析

iptables 的模式下,kubeproxy主要修改了 nat 和 filter 表,自定义了很多的链,流程较为复杂,此处通过案例进行逐步分析,此处需要对iptables规则比较熟悉,需要知道四表五链,以下 iptables 规则是通过命令 iptables-save 获得。

创建Pod和对应的Service,用于分析流量走过的路线

[root@duduniao ~]# kubectl get pod -n apps -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES slb-deploy-86b95846-b29pf 1/1 Running 12 52d 172.16.4.66 centos-7-55 <none> <none> slb-deploy-86b95846-dj9n9 1/1 Running 12 52d 172.16.3.214 centos-7-54 <none> <none> slb-deploy-86b95846-r6wjg 1/1 Running 12 52d 172.16.4.68 centos-7-55 <none> <none> [root@duduniao ~]# kubectl get -n apps svc NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE slb ClusterIP 10.96.149.22 <none> 80/TCP 46dcentos-7-54节点上: 节点直接访问 svc:

curl 10.96.149.22```1. 从nat表的output链匹配,下一条规则KUBE-SERVICES

-A OUTPUT -m comment —comment “kubernetes service portals” -j KUBE-SERVICES

2. 由于目标地址为 10.96.149.22,被以下规则捕获

-A KUBE-SERVICES -d 10.96.149.22/32 -p tcp -m comment —comment “apps/slb:http cluster IP” -m tcp —dport 80 -j KUBE-SVC-FOTIPUBY5PEWL5CQ

3. 这里实现了rr的负载均衡,iptables规则从上到下匹配,第一条匹配概率为1/3,第二条为1/2,第三条为1

-A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment —comment “apps/slb:http” -m statistic —mode random —probability 0.33333333349 -j KUBE-SEP-BYOK3ZVVD33HLWVA -A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment —comment “apps/slb:http” -m statistic —mode random —probability 0.50000000000 -j KUBE-SEP-JRNBZIV4KI6QJ6NQ -A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment —comment “apps/slb:http” -j KUBE-SEP-J2PNN7GCFZDFCIQQ

```
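Why do 1/3, 1/2 and 1 produce an even split? Each `statistic --mode random` rule only sees the packets that fell through the rules above it. The i-th of n rules (counting from 0) therefore gets probability 1/(n-i), and every endpoint ends up with 1/n of new connections overall. The sketch below checks this with exact fractions and a seeded simulation. The function names are illustrative, not kube-proxy code, and kube-proxy writes the probabilities as rounded decimals such as `0.33333333349`.

```python
import random
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Per-rule probabilities for n endpoints: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(probs: list) -> list:
    """Overall share of traffic each rule receives when rules are tried top to bottom."""
    remaining = Fraction(1)
    shares = []
    for p in probs:
        shares.append(remaining * p)  # reaches this rule AND matches it
        remaining *= 1 - p            # falls through to the next rule
    return shares


def simulate(probs: list, packets: int, seed: int = 0) -> list:
    """Monte-Carlo check: count which rule each new connection lands on."""
    rng = random.Random(seed)
    counts = [0] * len(probs)
    for _ in range(packets):
        for i, p in enumerate(probs):
            if rng.random() < p:  # the last rule has p == 1, so it always matches
                counts[i] += 1
                break
    return counts
```

`effective_shares(rule_probabilities(3))` returns three equal shares of `Fraction(1, 3)`. This is why the three slb endpoints receive roughly the same number of connections even though the rules carry different probabilities.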

```
# 4. Assuming the first rule in step 3 was matched, the chain below is evaluated next. The source is 10.4.7.54 rather than
#    172.16.3.214, so its first rule (KUBE-MARK-MASQ) does not match and the second rule, the DNAT, is applied
-A KUBE-SEP-BYOK3ZVVD33HLWVA -s 172.16.3.214/32 -m comment --comment "apps/slb:http" -j KUBE-MARK-MASQ
-A KUBE-SEP-BYOK3ZVVD33HLWVA -p tcp -m comment --comment "apps/slb:http" -m tcp -j DNAT --to-destination 172.16.3.214:80

# 5. Enter the filter table's OUTPUT chain (now src: 10.4.7.54, dst: 172.16.3.214)
-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A OUTPUT -j KUBE-FIREWALL
-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP
-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

# 6. Enter the nat table's POSTROUTING chain
-A POSTROUTING -m comment --comment "CNI portfwd requiring masquerade" -j CNI-HOSTPORT-MASQ

# 7. Packets carrying mark 0x2000/0x2000 would be SNATed (MASQUERADE) here, but this packet does not match
-A CNI-HOSTPORT-MASQ -m mark --mark 0x2000/0x2000 -j MASQUERADE

# 8. The request follows the route to its next hop (src: 10.4.7.54, dst: 172.16.3.214)
172.16.3.0      0.0.0.0         255.255.255.0   U     0      0        0 cni0
```
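Several of these steps depend on packet marks, and both mark operations have simple bitwise semantics:
- `MARK --set-xmark value/mask` zeroes the bits given by mask and then XORs value into the mark.
- `-m mark --mark value/mask` matches when `mark & mask == value`.

The sketch below models just these two operations. The function and constant names are illustrative. It assumes, as step 7 states, that nothing has marked the host-originated packet, so its mark is 0.

```python
MASK32 = 0xFFFFFFFF


def set_xmark(mark: int, value: int, mask: int = MASK32) -> int:
    """MARK --set-xmark value/mask: zero the bits given by mask, then XOR value into the mark."""
    return ((mark & ~mask) ^ value) & MASK32


def mark_matches(mark: int, value: int, mask: int = MASK32) -> bool:
    """-m mark --mark value/mask: AND the packet mark with mask and compare the result to value."""
    return (mark & mask) == value


# (value, mask) pairs taken from the rules in this section
KUBE_MARK_MASQ = (0x4000, 0x4000)     # -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
FIREWALL_DROP = (0x8000, 0x8000)      # KUBE-FIREWALL: -m mark --mark 0x8000/0x8000 -j DROP
CNI_HOSTPORT_MASQ = (0x2000, 0x2000)  # CNI-HOSTPORT-MASQ: -m mark --mark 0x2000/0x2000 -j MASQUERADE
```

A packet with mark 0 matches neither the 0x8000 DROP rule nor the 0x2000 MASQUERADE rule, which is consistent with steps 5 and 7. A packet that went through KUBE-MARK-MASQ carries 0x4000, and any other bits already set in its mark are preserved.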

- Accessing slb from a Pod on centos-7-54

```
# 1. Enter the nat table's PREROUTING chain
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

# 2. The destination 10.96.149.22 is captured by this rule
-A KUBE-SERVICES -d 10.96.149.22/32 -p tcp -m comment --comment "apps/slb:http cluster IP" -m tcp --dport 80 -j KUBE-SVC-FOTIPUBY5PEWL5CQ

# 3. Equal-weight random load balancing (statistic --mode random, not strict round robin): rules are evaluated top to bottom;
#    the first matches with probability 1/3, the second with 1/2, the third with 1
-A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment --comment "apps/slb:http" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-BYOK3ZVVD33HLWVA
-A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment --comment "apps/slb:http" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-JRNBZIV4KI6QJ6NQ
-A KUBE-SVC-FOTIPUBY5PEWL5CQ -m comment --comment "apps/slb:http" -j KUBE-SEP-J2PNN7GCFZDFCIQQ

# 4. Assuming the first rule above was matched, the chain below is evaluated next. Its first rule only matches when the
#    source is 172.16.3.214 itself (the endpoint Pod reaching itself through the Service); the packet then goes to
#    KUBE-MARK-MASQ before the DNAT rule is applied
-A KUBE-SEP-BYOK3ZVVD33HLWVA -s 172.16.3.214/32 -m comment --comment "apps/slb:http" -j KUBE-MARK-MASQ
-A KUBE-SEP-BYOK3ZVVD33HLWVA -p tcp -m comment --comment "apps/slb:http" -m tcp -j DNAT --to-destination 172.16.3.214:80

# 5. Mark the traffic
-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

# 6.
```

### 2.2.2. ipvs Mode

Starting with version 1.11, Kubernetes supports ipvs mode for in-cluster Service load balancing. The goal is better Service load-balancing performance in large clusters. In iptables mode the number of rules keeps growing as Services are added, so both updating the rules and the kernel's sequential traversal of them get slower and seriously hurt performance. ipvs is built on hash tables, so its performance barely degrades as the number of Services grows.

#### 2.2.2.1. ipvs Mode Configuration

```yaml
# Change the kube-proxy startup parameters; on kubeadm clusters, edit the config file config.conf instead.
# config.conf is used as the example here (kubectl get cm -n kube-system kube-proxy -o yaml)
mode: ipvs               # switch the proxy mode to ipvs
ipvs:
  excludeCIDRs: null     # CIDRs to leave alone when cleaning up ipvs rules, so other LVS rules on the host are not removed
  minSyncPeriod: 0s      # minimum interval between ipvs rule refreshes
  scheduler: "rr"        # ipvs scheduler: round robin (rr) by default; also least connection (lc), destination hashing (dh),
                         # source hashing (sh), never queue (nq), shortest expected delay (sed)
  strictARP: false       # whether to enable strict ARP
  syncPeriod: 0s         # interval at which ipvs rules are refreshed, default 30s, must be greater than 0
  tcpFinTimeout: 0s      # timeout for ipvs TCP sessions after a FIN is received; 0 keeps the system's current value
  tcpTimeout: 0s         # timeout for idle ipvs TCP sessions; 0 keeps the system's current value
  udpTimeout: 0s         # timeout for ipvs UDP sessions; 0 keeps the system's current value
```

2.2.2.2. Testing ClusterIP Mode

- Deploy the test resources

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slb-deploy
  namespace: apps
spec:
  replicas: 3
  selector:
    matchLabels:
      app: slb
  template:
    metadata:
      labels:
        app: slb
    spec:
      containers:
      - name: slb-demo
        image: linuxduduniao/nginx:v1.0.0
---
apiVersion: v1
kind: Service
metadata:
  name: slb
  namespace: apps
spec:
  selector:
    app: slb
  ports:
  - name: http
    port: 80
    targetPort: 80
```

```bash
slb-deploy-86b95846-dj9n9   1/1   Running   7   6d12h   172.16.3.199   centos-7-54
slb-deploy-86b95846-r6wjg   1/1   Running   7   6d12h   172.16.4.51    centos-7-55

[root@duduniao local-k8s-yaml]# kubectl describe svc -n apps slb   # note the Endpoints
Name:              slb
Namespace:         apps
Labels:
Annotations:
Selector:          app=slb
Type:              ClusterIP
IP:                10.96.149.22
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         172.16.3.199:80,172.16.4.51:80,172.16.4.53:80
Session Affinity:  None
Events:

[root@centos-7-51 ~]# ipvsadm -l -n
TCP  10.96.149.22:80 rr
  -> 172.16.3.199:80              Masq    1      0          0
  -> 172.16.4.51:80               Masq    1      0          0
  -> 172.16.4.53:80               Masq    1      0          0
```
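The `ipvsadm` output maps onto a simple structure. Each virtual service is keyed by (protocol, VIP, port) in a hash table and holds a list of real servers plus a scheduler. The sketch below mimics this with a Python dict and a round-robin iterator. It is a conceptual model of what `ipvsadm -l -n` shows, not the kernel implementation. The class and function names are hypothetical, and weights are ignored because plain `rr` does not use them.

```python
from itertools import cycle


class VirtualService:
    """Conceptual model of one IPVS virtual service using the rr scheduler."""

    def __init__(self, real_servers: list):
        self.real_servers = list(real_servers)
        self._next = cycle(self.real_servers)  # round robin: hand out real servers in turn

    def schedule(self) -> str:
        return next(self._next)


# Keyed by (protocol, virtual IP, port): one average O(1) dict lookup per new connection,
# however many Services exist, instead of walking a long iptables chain.
ipvs_table = {
    ("TCP", "10.96.149.22", 80): VirtualService(
        ["172.16.3.199:80", "172.16.4.51:80", "172.16.4.53:80"]
    ),
}


def pick_backend(proto: str, vip: str, port: int):
    """Return the real server for a new connection, or None if no virtual service matches."""
    service = ipvs_table.get((proto, vip, port))
    return None if service is None else service.schedule()
```

Six consecutive calls to `pick_backend("TCP", "10.96.149.22", 80)` cycle through the three endpoints twice, in the order `ipvsadm` lists them.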

- Analyze the traffic path