1. CPU and memory limits

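In production, Pods are generally required to set CPU and memory limits. A Pod without limits can consume too much memory and disrupt the other Pods running alongside it. For namespace-level resource limits, Kubernetes offers three dimensions (CPU, memory, and GPU), each with configurable default, maximum, and minimum values. Once these are configured, every container's resource settings are checked when its Pod is created: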

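- If a container does not specify resource requests or limits, the configured defaults are used.

- If a container's CPU or memory limit exceeds the `max` setting, creation fails with an error.

- If a container's CPU or memory request is below the `min` setting, creation fails with an error.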
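The three rules above can be modeled in a few lines of Python. This is a simplified sketch, not the real LimitRanger admission plugin: quantities are plain integers (CPU in millicores, memory in MiB), only `cpu` and `memory` are handled, and `admit_container` and `APPS_LIMIT_RANGE` are hypothetical names. It also models the API-server defaulting seen in case 1.2.4 (a container that sets only a limit gets a request equal to that limit) and the check that a request may not exceed its limit.

```python
# Simplified model of LimitRange defaulting and validation for one container.
# Assumption: CPU is in millicores and memory in MiB (plain ints), not
# Kubernetes resource.Quantity strings.

# Values from the 1.3.1 LimitRange example, converted to integers.
APPS_LIMIT_RANGE = {
    "default": {"cpu": 500, "memory": 1024},
    "defaultRequest": {"cpu": 100, "memory": 128},
    "max": {"cpu": 1000, "memory": 2048},
    "min": {"cpu": 50, "memory": 64},
}


def admit_container(requests, limits, limit_range):
    """Return the effective (requests, limits) or raise ValueError."""
    requests, limits = dict(requests), dict(limits)
    for res in ("cpu", "memory"):
        # API-server defaulting: a limit without a request -> request = limit.
        if res in limits and res not in requests:
            requests[res] = limits[res]
        # LimitRange defaults fill in whatever is still missing.
        if res not in limits and res in limit_range.get("default", {}):
            limits[res] = limit_range["default"][res]
        if res not in requests and res in limit_range.get("defaultRequest", {}):
            requests[res] = limit_range["defaultRequest"][res]

        req, lim = requests.get(res), limits.get(res)
        if req is not None and lim is not None and req > lim:
            raise ValueError(f"{res}: request {req} is greater than limit {lim}")
        upper = limit_range.get("max", {}).get(res)
        if upper is not None and lim is not None and lim > upper:
            raise ValueError(f"{res}: limit {lim} exceeds max {upper}")
        lower = limit_range.get("min", {}).get(res)
        if lower is not None and req is not None and req < lower:
            raise ValueError(f"{res}: request {req} is below min {lower}")
    return requests, limits


if __name__ == "__main__":
    # Nothing set: both defaults apply.
    print(admit_container({}, {}, APPS_LIMIT_RANGE))
    # Only limits set: the requests copy the limits.
    print(admit_container({}, {"cpu": 200, "memory": 200}, APPS_LIMIT_RANGE))
```

The defaulting happens before the min/max checks, which is why a container that sets nothing still has to satisfy `min` and `max` through the default values.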

1.1. LimitRange fields

```
apiVersion: v1
kind: LimitRange
metadata
  name <string>                         # must be unique within a namespace
  namespace <string>                    # target namespace; defaults to "default"
  labels <map[string]string>            # labels
  annotations <map[string]string>       # annotations
spec
  limits <[]Object>
    default <map[string]string>         # default limit
    defaultRequest <map[string]string>  # default request
    max <map[string]string>             # upper bound for declared limits
    min <map[string]string>             # lower bound for declared requests
    type <string> -required-            # kind of object constrained, e.g. Container
```

1.2. Default value examples

1.2.1. Setting the default request and limit

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: apps
---
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-resource
  namespace: apps
spec:
  limits:
  # set the default request and limit
  - default:
      memory: 1024Mi
      cpu: 500m
    defaultRequest:
      memory: 128Mi
      cpu: 100m
    type: Container
```

The namespace description then shows:

```
Annotations:
Status:       Active

No resource quota.

Resource Limits
 Type       Resource  Min  Max  Default Request  Default Limit  Max Limit/Request Ratio
 Container  memory    -    -    128Mi            1Gi            -
 Container  cpu       -    -    100m             500m           -
```
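The output prints the `1024Mi` default as `1Gi` because Kubernetes stores resource quantities in a canonical form. The sketch below is a simplified Python approximation of that normalization, covering only the plain number plus suffix forms used in this article; `parse_quantity`, `format_memory`, and `format_cpu` are hypothetical helper names, not part of any Kubernetes client library.

```python
from fractions import Fraction

# Binary (power-of-two) and decimal suffixes used in resource quantities.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"m": Fraction(1, 1000), "k": 10**3, "M": 10**6, "G": 10**9,
           "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(q: str) -> Fraction:
    """Exact value of a quantity string, e.g. '500m' -> 1/2, '1Gi' -> 2**30."""
    for table in (BINARY, DECIMAL):  # check two-letter binary suffixes first
        for suffix, factor in table.items():
            if q.endswith(suffix):
                return Fraction(q[: -len(suffix)]) * factor
    return Fraction(q)


def format_memory(q: str) -> str:
    """Re-express bytes with the largest binary suffix that divides evenly."""
    n = parse_quantity(q)
    for suffix in ("Ei", "Pi", "Ti", "Gi", "Mi", "Ki"):
        factor = BINARY[suffix]
        if n >= factor and n % factor == 0:
            return f"{n // factor}{suffix}"
    return str(n)


def format_cpu(q: str) -> str:
    """Whole cores print as plain integers; anything else in millicores."""
    cores = parse_quantity(q)
    if cores.denominator == 1:
        return str(cores.numerator)
    return f"{int(cores * 1000)}m"  # assumes a whole number of millicores
```

With these helpers, `format_memory("2048Mi")` gives `2Gi` and `format_cpu("1000m")` gives `1`, which is how the `max` values of the 1.3.1 example appear in its describe output.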


1.2.2. Neither request nor limit specified

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
```

```
# The container gets the default values
[root@duduniao local-k8s-yaml]# kubectl describe pod -n apps nginx-demo-1
    Limits:
      cpu:     500m
      memory:  1Gi
    Requests:
      cpu:     100m
      memory:  128Mi
```

1.2.3. Request specified, limit not specified

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      requests:
        memory: 200Mi
        cpu: 200m
```

```
# When requests are specified they are used as given, and the limit falls back to the default limit.
# If the request exceeds the default limit, the Pod is rejected, because a request may not exceed its limit (try it yourself).
[root@duduniao local-k8s-yaml]# kubectl describe pod -n apps nginx-demo-1
    Limits:
      cpu:     500m
      memory:  1Gi
    Requests:
      cpu:     200m
      memory:  200Mi
    Environment:  <none>
```

1.2.4. Limit specified, request not specified

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      limits:
        memory: 200Mi
        cpu: 200m
```

```
# When only limits are specified, both the requests and the limits take the specified limit values.
# No error occurs even if the specified limit is lower than the default request, because the request is copied from the limit.
[root@duduniao local-k8s-yaml]# kubectl describe pod -n apps nginx-demo-1
    Limits:
      cpu:     200m
      memory:  200Mi
    Requests:
      cpu:     200m
      memory:  200Mi
```

1.2.5. Both request and limit specified

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      limits:
        memory: 500Mi
        cpu: 500m
      requests:
        memory: 400Mi
        cpu: 200m
```

```
# When both requests and limits are specified, the user's values are used as-is
[root@duduniao local-k8s-yaml]# kubectl describe pod -n apps nginx-demo-1
    Limits:
      cpu:     500m
      memory:  500Mi
    Requests:
      cpu:     200m
      memory:  400Mi
```

1.3. Minimum and maximum values

1.3.1. Setting the minimum and maximum

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-resource
  namespace: apps
spec:
  limits:
  - default:
      memory: 1024Mi
      cpu: 500m
    defaultRequest:
      memory: 128Mi
      cpu: 100m
    max:
      memory: 2048Mi
      cpu: 1000m
    min:
      memory: 64Mi
      cpu: 50m
    type: Container
```

```
[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

No resource quota.

Resource Limits
 Type       Resource  Min   Max  Default Request  Default Limit  Max Limit/Request Ratio
 ----       --------  ---   ---  ---------------  -------------  -----------------------
 Container  cpu       50m   1    100m             500m           -
 Container  memory    64Mi  2Gi  128Mi            1Gi            -
```

1.3.2. Exceeding the maximum

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      limits:
        memory: 4096Mi
        cpu: 500m
      requests:
        memory: 400Mi
        cpu: 200m
```

```
[root@duduniao local-k8s-yaml]# kubectl apply -f pod-demo.yaml
Error from server (Forbidden): error when creating "pod-demo.yaml": pods "nginx-demo-1" is forbidden: maximum memory usage per Container is 2Gi, but limit is 4Gi
```

1.3.3. Falling below the minimum

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      limits:
        memory: 2048Mi
        cpu: 500m
      requests:
        memory: 32Mi
        cpu: 200m
```

```
[root@duduniao local-k8s-yaml]# kubectl apply -f pod-demo.yaml
Error from server (Forbidden): error when creating "pod-demo.yaml": pods "nginx-demo-1" is forbidden: minimum memory usage per Container is 64Mi, but request is 32Mi
```

1.3.4. A typical valid configuration

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-1
  namespace: apps
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo-1
    image: linuxduduniao/nginx:v1.0.0
    resources:
      limits:
        memory: 2048Mi
        cpu: 500m
      requests:
        memory: 512Mi
        cpu: 200m
```

```
[root@duduniao local-k8s-yaml]# kubectl describe pod -n apps nginx-demo-1
    Limits:
      cpu:     500m
      memory:  2Gi
    Requests:
      cpu:     200m
      memory:  512Mi
```

2. Namespace quotas

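The CPU and memory limits above apply to individual containers. A quota, by contrast, caps the total resources consumed by all matching Pods in a namespace. When different business lines run in different namespaces, quotas keep any one of them from taking an outsized share of the cluster. For example, when development and testing share one Kubernetes cluster and are separated by namespace, setting a quota on each namespace is recommended.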
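Conceptually, the quota check at Pod creation adds the new Pod's requests and limits to what the namespace already uses and rejects the Pod if any `hard` value would be exceeded. A minimal sketch, assuming integer units (millicores, MiB); `pod_usage` and `exceeded_quota_keys` are hypothetical names. The real system additionally rejects a Pod that omits requests or limits for a quota-tracked resource unless a LimitRange supplies defaults, and it evaluates scopes; neither is modeled here.

```python
# Simplified model of ResourceQuota admission for compute resources.
# Assumption: CPU in millicores, memory in MiB; scopes are ignored.


def pod_usage(containers):
    """Aggregate a Pod's containers into quota keys such as 'requests.cpu'."""
    usage = {"pods": 1}  # every Pod also counts once against a "pods" quota
    for container in containers:
        for kind in ("requests", "limits"):
            for res, value in container.get(kind, {}).items():
                key = f"{kind}.{res}"
                usage[key] = usage.get(key, 0) + value
    return usage


def exceeded_quota_keys(hard, used, new_pod):
    """Quota keys the new Pod would push over the hard limit (empty list = admitted)."""
    return [key for key, cap in hard.items()
            if used.get(key, 0) + new_pod.get(key, 0) > cap]


# Hypothetical quota and Pod, in the same units as above.
HARD = {"requests.cpu": 1000, "requests.memory": 2048,
        "limits.cpu": 6000, "limits.memory": 8192}
SLB_POD = pod_usage([{"requests": {"cpu": 500, "memory": 1024},
                      "limits": {"cpu": 500, "memory": 1024}}])
```

Keys present in the Pod's usage but absent from `hard` (such as `pods` here) are simply not enforced, mirroring how a quota only limits the resources it lists.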

2.1. Fields

```
apiVersion: v1
kind: ResourceQuota
metadata: <Object>
spec:
  hard <map[string]string>          # quota values; many resource types are supported
  scopeSelector <Object>            # only count Pods matching the selector (e.g. a PriorityClass)
    matchExpressions <[]Object>
      scopeName <string> -required-
      operator <string> -required-
      values <[]string>
  scopes <[]string>                 # only count Pods matching all listed scopes

# Usually only the hard field is set. Supported quota types include:
# Compute resources: https://kubernetes.io/zh/docs/tasks/administer-cluster/manage-resources/quota-memory-cpu-namespace/
requests.cpu
requests.memory
limits.cpu
limits.memory
# API objects (common ones): https://kubernetes.io/zh/docs/tasks/administer-cluster/quota-api-object/
pods
services
secrets
services.nodeports
configmaps
persistentvolumeclaims
```

2.2. Compute resource quotas

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: computer-resource
  namespace: apps
spec:
  hard:
    requests.cpu: 1000m
    requests.memory: 2048Mi
    limits.cpu: 6000m
    limits.memory: 8192Mi
```

```
[root@duduniao local-k8s-yaml]# kubectl describe ns apps   # Used is non-zero because the earlier pod-demo Pod still exists
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used   Hard
 --------         ---    ---
 limits.cpu       500m   6
 limits.memory    2Gi    8Gi
 requests.cpu     200m   1
 requests.memory  512Mi  2Gi

Resource Limits
 Type       Resource  Min   Max  Default Request  Default Limit  Max Limit/Request Ratio
 ----       --------  ---   ---  ---------------  -------------  -----------------------
 Container  cpu       50m   1    100m             500m           -
 Container  memory    64Mi  2Gi  128Mi            1Gi            -
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slb-deploy
  namespace: apps
spec:
  replicas: 1
  selector:
    matchLabels:
      app: slb
  template:
    metadata:
      labels:
        app: slb
    spec:
      containers:
      - name: slb-demo
        image: linuxduduniao/nginx:v1.0.0
        resources:
          limits:
            memory: 1024Mi
            cpu: 500m
```

```
[root@duduniao local-k8s-yaml]# kubectl get deployments.apps -n apps   # with 1 replica the Pod runs
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
slb-deploy   1/1     1            1           6m2s

# Only limits are set, so each Pod's requests equal its limits (500m CPU, 1Gi memory)
[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used  Hard
 --------         ---   ---
 limits.cpu       500m  6
 limits.memory    1Gi   8Gi
 requests.cpu     500m  1
 requests.memory  1Gi   2Gi

[root@duduniao local-k8s-yaml]# kubectl get deployments.apps -n apps   # with 2 replicas the requests quota is fully used
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
slb-deploy   2/2     2            2           6m51s

[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used  Hard
 --------         ---   ---
 limits.cpu       1     6
 limits.memory    2Gi   8Gi
 requests.cpu     1     1
 requests.memory  2Gi   2Gi

[root@duduniao local-k8s-yaml]# kubectl get deployments.apps -n apps   # the third replica would exceed the requests quota, so it cannot start
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
slb-deploy   2/3     2            2           10m

[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used  Hard
 --------         ---   ---
 limits.cpu       1     6
 limits.memory    2Gi   8Gi
 requests.cpu     1     1
 requests.memory  2Gi   2Gi
```
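The replica count can be checked by hand: since the Deployment sets only limits, each Pod's requests equal its limits (500m CPU, 1024Mi memory), so `requests.cpu` (1000m) and `requests.memory` (2048Mi) each allow exactly two Pods, while the limits quotas alone would allow 12 and 8. The helper below is a hypothetical sketch in integer units (millicores, MiB) that finds the binding constraint for a set of identical Pods.

```python
import math


def max_replicas(hard, per_pod, used=None):
    """Largest number of identical Pods that fit under every hard quota value."""
    used = used or {}
    fits = []
    for key, cap in hard.items():
        need = per_pod.get(key, 0)
        if need:  # keys the Pod does not consume never constrain it
            fits.append((cap - used.get(key, 0)) // need)
    # No constraining key at all means the quota never limits these Pods.
    return max(0, min(fits, default=math.inf))


# Quota and per-Pod usage from this section (requests copied from limits).
HARD_2_2 = {"requests.cpu": 1000, "requests.memory": 2048,
            "limits.cpu": 6000, "limits.memory": 8192}
POD_2_2 = {"requests.cpu": 500, "requests.memory": 1024,
           "limits.cpu": 500, "limits.memory": 1024, "pods": 1}

if __name__ == "__main__":
    print(max_replicas(HARD_2_2, POD_2_2))
```

The same helper applies when the quota also counts Pods (a `pods` key in `hard`), since each Pod consumes exactly one unit of it.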

2.3. API object quotas

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: computer-resource
  namespace: apps
spec:
  hard:
    requests.cpu: 1000m
    requests.memory: 2048Mi
    limits.cpu: 6000m
    limits.memory: 8192Mi
    pods: 3
```

```
[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used  Hard
 --------         ---   ---
 limits.cpu       0     6
 limits.memory    0     8Gi
 pods             0     3
 requests.cpu     0     1
 requests.memory  0     2Gi

Resource Limits
 Type       Resource  Min   Max  Default Request  Default Limit  Max Limit/Request Ratio
 ----       --------  ---   ---  ---------------  -------------  -----------------------
 Container  cpu       50m   1    100m             500m           -
 Container  memory    64Mi  2Gi  128Mi            1Gi            -
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slb-deploy
  namespace: apps
spec:
  replicas: 2
  selector:
    matchLabels:
      app: slb
  template:
    metadata:
      labels:
        app: slb
    spec:
      containers:
      - name: slb-demo
        image: linuxduduniao/nginx:v1.0.0
```

```
[root@duduniao local-k8s-yaml]# kubectl get deployments.apps -n apps slb-deploy   # with 2 replicas everything runs normally
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
slb-deploy   2/2     2            2           11s

# No resources are set, so each Pod gets the LimitRange defaults (requests 100m/128Mi, limits 500m/1Gi)
[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used   Hard
 --------         ---    ---
 limits.cpu       1      6
 limits.memory    2Gi    8Gi
 pods             2      3
 requests.cpu     200m   1
 requests.memory  256Mi  2Gi

Resource Limits
 Type       Resource  Min   Max  Default Request  Default Limit  Max Limit/Request Ratio
 ----       --------  ---   ---  ---------------  -------------  -----------------------
 Container  cpu       50m   1    100m             500m           -
 Container  memory    64Mi  2Gi  128Mi            1Gi            -

[root@duduniao local-k8s-yaml]# kubectl get deployments.apps -n apps slb-deploy   # with more than 3 replicas (5 here), the Pods beyond the quota cannot start
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
slb-deploy   3/5     3            3           38s

[root@duduniao local-k8s-yaml]# kubectl describe ns apps
Name:         apps
Labels:       <none>
Annotations:
Status:       Active

Resource Quotas
 Name:            computer-resource
 Resource         Used   Hard
 --------         ---    ---
 limits.cpu       1500m  6
 limits.memory    3Gi    8Gi
 pods             3      3
 requests.cpu     300m   1
 requests.memory  384Mi  2Gi

Resource Limits
 Type       Resource  Min   Max  Default Request  Default Limit  Max Limit/Request Ratio
 ----       --------  ---   ---  ---------------  -------------  -----------------------
 Container  cpu       50m   1    100m             500m           -
 Container  memory    64Mi  2Gi  128Mi            1Gi            -
```

3. Kubernetes cluster resource requirements

3.1. Kubernetes cluster size limits

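Kubernetes is designed for clusters up to the following sizes (reference: the Kubernetes documentation page "Building large clusters"):

- No more than 5,000 nodes

- No more than 150,000 Pods in total

- No more than 300,000 containers in total

- No more than 110 Pods per node (older versions of this guidance said 100); the kubelet's default limit is also 110 and can be changed with `--max-pods`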

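These are the maximums Kubernetes recommends. However, when I ran density tests with clusterloader from perf-tests and pushed a single node to 400 Pods, the cluster became very unstable: nodes went NotReady or even crashed completely, accompanied by the error `Failed to activate service 'org.freedesktop.systemd1': timed out`. This happened on both 2-core/4 GB and 4-core/16 GB nodes. With lightweight workloads, 200 Pods per node caused no problems, but I still do not recommend that density for production.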
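The documented thresholds from the list above can be encoded as a quick sanity check. This is a hypothetical sketch: `check_cluster_size` is an illustrative name, the per-node check uses only the average Pods per node (an unevenly loaded node can still exceed it), and `max_pods_per_node` defaults to 110, the kubelet's default `--max-pods`, which you may want to lower given the density results described above.

```python
# Documented upper bounds for a single cluster, copied from the list above.
CLUSTER_LIMITS = {
    "nodes": 5000,
    "pods": 150_000,
    "containers": 300_000,
}


def check_cluster_size(nodes, pods, containers, max_pods_per_node=110):
    """Return a warning string for every documented limit that is exceeded."""
    actual = {"nodes": nodes, "pods": pods, "containers": containers}
    warnings = [f"{key}: {actual[key]} > {limit}"
                for key, limit in CLUSTER_LIMITS.items()
                if actual[key] > limit]
    # Average density only; a single hot node can exceed this unnoticed.
    if nodes and pods / nodes > max_pods_per_node:
        warnings.append(f"pods per node: {pods / nodes:.0f} > {max_pods_per_node}")
    return warnings


if __name__ == "__main__":
    print(check_cluster_size(nodes=10, pods=4000, containers=8000))
```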

3.2. Kubernetes cluster configuration

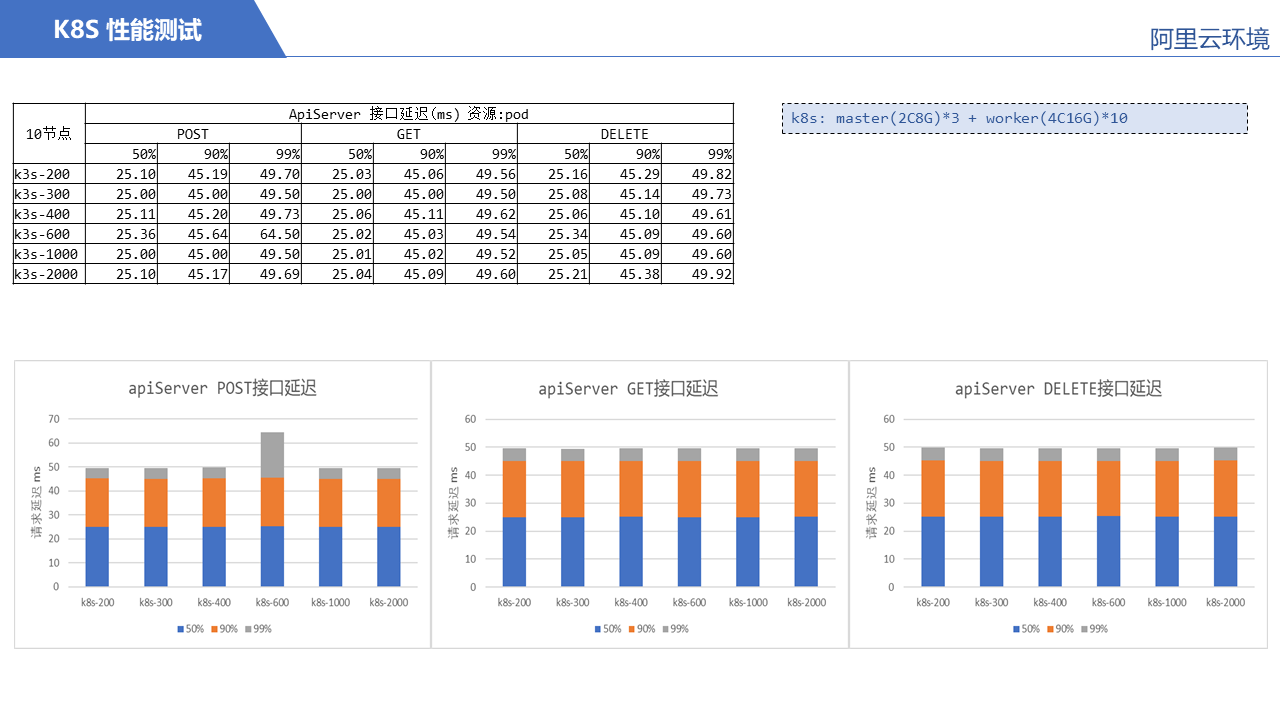

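In a Kubernetes cluster, kube-scheduler and kube-controller-manager use leader election: only the elected leader actually processes work. kube-apiserver, by contrast, is stateless and can be scaled out horizontally; its performance (that is, API latency) depends almost entirely on the etcd cluster. Test results for a 10-node cluster running 200 to 2000 Pods are provided for reference only (test tool: perf-tests/clusterloader).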

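As the number of nodes grows and the scheduler and controller-manager reach their performance limits, these components can be moved to dedicated, higher-performance machines.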

Reference control-plane sizes for clusters of different sizes (sources: Kubernetes documentation, Google Cloud machine types, AWS instance types):

| Cluster nodes | GCE machine type | AWS instance type |
|---|---|---|
| 1-5 | n1-standard-1 (1 vCPU, 3.75 GB) | m3.medium (1 vCPU, 3.75 GB) |
| 6-10 | n1-standard-2 (2 vCPU, 7.5 GB) | m3.large (2 vCPU, 7.5 GB) |
| 11-100 | n1-standard-4 (4 vCPU, 15 GB) | m3.xlarge (4 vCPU, 15 GB) |
| 101-250 | n1-standard-8 (8 vCPU, 30 GB) | m3.2xlarge (8 vCPU, 30 GB) |
| 251-500 | n1-standard-16 (16 vCPU, 60 GB) | c4.4xlarge (16 vCPU, 30 GB) |
| 500+ | n1-standard-32 (32 vCPU, 120 GB) | c4.8xlarge (36 vCPU, 60 GB) |
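The table maps directly onto a lookup. A minimal sketch: the tier boundaries and machine types are copied from the table (a cluster of exactly 500 nodes is treated as the 251-500 tier), and `control_plane_size` is a hypothetical helper name.

```python
import bisect

# (upper bound on node count, GCE machine type, AWS instance type), from the table.
TIERS = [
    (5, "n1-standard-1", "m3.medium"),
    (10, "n1-standard-2", "m3.large"),
    (100, "n1-standard-4", "m3.xlarge"),
    (250, "n1-standard-8", "m3.2xlarge"),
    (500, "n1-standard-16", "c4.4xlarge"),
]
LARGEST = ("n1-standard-32", "c4.8xlarge")  # the "500+" row


def control_plane_size(node_count: int) -> tuple[str, str]:
    """Return (GCE machine type, AWS instance type) for a given node count."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    bounds = [upper for upper, _, _ in TIERS]
    # bisect_left finds the first tier whose upper bound is >= node_count.
    i = bisect.bisect_left(bounds, node_count)
    if i == len(TIERS):
        return LARGEST
    _, gce, aws = TIERS[i]
    return gce, aws


if __name__ == "__main__":
    print(control_plane_size(42))
```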