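The previous section covered the implementation of CrawlerProcess. A single CrawlerProcess can control several Crawlers, running multiple crawl jobs at the same time. CrawlerProcess starts each job by calling the Crawler's crawl method and keeps track of the active crawls in its _active set.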

This section analyzes the Crawler source code in detail.

```python
import logging
import pprint

from scrapy import signals, Spider
from scrapy.extension import ExtensionManager
from scrapy.settings import overridden_settings, Settings
from scrapy.signalmanager import SignalManager
from scrapy.utils.log import (
    LogCounterHandler,
    get_scrapy_root_handler,
    install_scrapy_root_handler,
)
from scrapy.utils.misc import load_object

logger = logging.getLogger(__name__)


class Crawler:

    def __init__(self, spidercls, settings=None):
        if isinstance(spidercls, Spider):
            raise ValueError('The spidercls argument must be a class, not an object')

        if isinstance(settings, dict) or settings is None:
            settings = Settings(settings)

        self.spidercls = spidercls
        self.settings = settings.copy()
        # Let the spider class apply its own custom_settings
        self.spidercls.update_settings(self.settings)

        # Create a SignalManager. It is built on the open-source Python library
        # pydispatch and handles sending and routing messages. Scrapy uses it to
        # deliver key events (crawl started, crawl finished, ...) to interested
        # receivers: messages are sent with send_catch_log_deferred, and
        # handlers are registered with connect.
        self.signals = SignalManager(self)
        self.stats = load_object(self.settings['STATS_CLASS'])(self)

        handler = LogCounterHandler(self, level=self.settings.get('LOG_LEVEL'))
        logging.root.addHandler(handler)

        d = dict(overridden_settings(self.settings))
        logger.info("Overridden settings:\n%(settings)s",
                    {'settings': pprint.pformat(d)})

        if get_scrapy_root_handler() is not None:
            # scrapy root handler already installed: update it with new settings
            install_scrapy_root_handler(self.settings)
        # lambda is assigned to Crawler attribute because this way it is not
        # garbage collected after leaving __init__ scope
        self.__remove_handler = lambda: logging.root.removeHandler(handler)
        # Register a handler for the engine_stopped signal
        self.signals.connect(self.__remove_handler, signals.engine_stopped)

        lf_cls = load_object(self.settings['LOG_FORMATTER'])
        self.logformatter = lf_cls.from_crawler(self)
        self.extensions = ExtensionManager.from_crawler(self)

        self.settings.freeze()
        self.crawling = False
        self.spider = None
        self.engine = None
```
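The SignalManager comments in Crawler.__init__ describe a publish/subscribe mechanism: receivers register for a signal with connect, and a sender later notifies every registered receiver. Below is a minimal sketch of that idea in plain Python. It uses no pydispatch and no Deferreds, and MiniSignalManager is a hypothetical class, not Scrapy's API. It shows how registering `__remove_handler` for `engine_stopped` works in principle.

```python
import logging
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

logger = logging.getLogger(__name__)


class MiniSignalManager:
    """Hypothetical stand-in for a signal manager: maps signals to receivers."""

    def __init__(self) -> None:
        self._receivers: DefaultDict[object, List[Callable[..., Any]]] = defaultdict(list)

    def connect(self, receiver: Callable[..., Any], signal: object) -> None:
        # Register a receiver that is interested in `signal`
        self._receivers[signal].append(receiver)

    def send(self, signal: object, **kwargs: Any) -> List[Any]:
        # Call every receiver of `signal`; log receiver errors instead of raising
        results = []
        for receiver in self._receivers[signal]:
            try:
                results.append(receiver(**kwargs))
            except Exception:
                logger.exception("Error caught in signal receiver %r", receiver)
        return results


# Signals are plain sentinel objects (scrapy.signals defines them the same way)
engine_stopped = object()

removed = []
manager = MiniSignalManager()
manager.connect(lambda: removed.append("log handler"), engine_stopped)
manager.send(engine_stopped)
print(removed)  # ['log handler']
```

The sender never needs to know who is listening. This is why Crawler can attach its log-handler cleanup to `engine_stopped` without the engine knowing about logging at all.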

The previous section covered Crawler's crawl method. Now we look in detail at the other modules and functions it calls.

First, Crawler.crawl creates the spider:

```python
# Inside Crawler.crawl:
self.spider = self._create_spider(*args, **kwargs)

def _create_spider(self, *args, **kwargs):
    # Delegate construction to the spider class itself
    return self.spidercls.from_crawler(self, *args, **kwargs)
```

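crawl first calls _create_spider to build the spider object. The key piece here is the class method from_crawler, which many Scrapy classes implement. As its name suggests, it builds an instance of the class from a crawler object. This gives those classes access to the crawler's key methods and data, which is essentially a form of dependency injection.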
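Here is a minimal sketch of the from_crawler pattern in plain Python. HypotheticalCrawler and HypotheticalComponent are illustrative stand-ins, not Scrapy classes. The component's constructor stays free of framework objects, and the class method injects the crawler and its settings after construction.

```python
class HypotheticalCrawler:
    """Minimal stand-in for a crawler: it only carries settings."""

    def __init__(self, settings):
        self.settings = settings


class HypotheticalComponent:
    def __init__(self, name):
        self.name = name

    @classmethod
    def from_crawler(cls, crawler, *args, **kwargs):
        # Build the object normally, then inject the crawler and its settings
        obj = cls(*args, **kwargs)
        obj.crawler = crawler
        obj.settings = crawler.settings
        return obj


crawler = HypotheticalCrawler({"LOG_LEVEL": "INFO"})
component = HypotheticalComponent.from_crawler(crawler, "demo")
print(component.name, component.settings["LOG_LEVEL"])  # demo INFO
```

The caller only needs to know the uniform entry point `from_crawler(crawler, ...)`. Each class decides for itself what to pull out of the crawler.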

Here is the implementation in the Spider base class:

`scrapy/spiders/__init__.py`:

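```python
# Method of the Spider base class.
# Dependency injection: this gives the spider its crawler and settings attributes
@classmethod
def from_crawler(cls, crawler, *args, **kwargs):
    spider = cls(*args, **kwargs)
    spider._set_crawler(crawler)
    return spider
```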

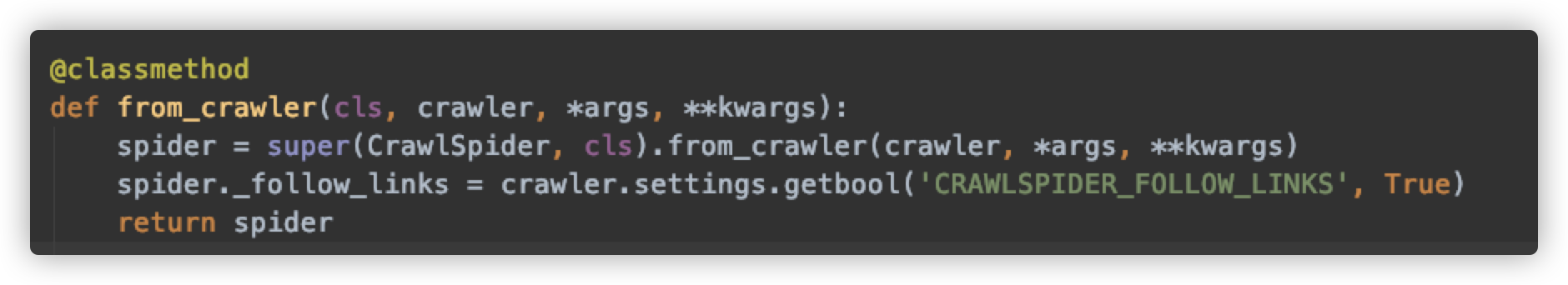

Now look at CrawlSpider, the link-following spider that this series focuses on, and what it adds:

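Apart from calling the parent class's from_crawler, it only reads the CRAWLSPIDER_FOLLOW_LINKS setting to decide whether links found in pages should be followed. In other words, each spider class overrides this method when it needs its own customization.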
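The override pattern described above can be sketched as follows. A subclass reuses the parent's from_crawler through `super()` and then adds its own setting-driven step. All classes here are hypothetical stand-ins, not Scrapy's real Spider, CrawlSpider, or Settings. The setting name CRAWLSPIDER_FOLLOW_LINKS matches the one CrawlSpider reads, but this getbool is a simplified version written for this example.

```python
class SimpleSettings(dict):
    """Hypothetical minimal settings: a dict with a simplified getbool helper."""

    def getbool(self, name, default=False):
        value = self.get(name, default)
        if isinstance(value, str):
            return value.strip().lower() in ("1", "true", "yes")
        return bool(value)


class SimpleCrawler:
    def __init__(self, settings):
        self.settings = settings


class BaseSpider:
    @classmethod
    def from_crawler(cls, crawler, *args, **kwargs):
        spider = cls(*args, **kwargs)
        spider.crawler = crawler
        spider.settings = crawler.settings
        return spider


class LinkFollowingSpider(BaseSpider):
    @classmethod
    def from_crawler(cls, crawler, *args, **kwargs):
        # Reuse the parent's construction and injection...
        spider = super().from_crawler(crawler, *args, **kwargs)
        # ...then add the subclass-specific part: should links be followed?
        spider._follow_links = crawler.settings.getbool("CRAWLSPIDER_FOLLOW_LINKS", True)
        return spider


default = LinkFollowingSpider.from_crawler(SimpleCrawler(SimpleSettings()))
disabled = LinkFollowingSpider.from_crawler(
    SimpleCrawler(SimpleSettings(CRAWLSPIDER_FOLLOW_LINKS="False"))
)
print(default._follow_links, disabled._follow_links)  # True False
```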

Next, Crawler.crawl creates the execution engine:

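```python
# Inside Crawler.crawl:
self.engine = self._create_engine()

def _create_engine(self):
    # The second argument is the spider-closed callback:
    # it ignores the spider passed in and stops the crawler
    return ExecutionEngine(self, lambda _: self.stop())
```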
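The lambda passed to ExecutionEngine decouples the engine from Crawler. The engine simply calls whatever callback it was given once the spider is closed. Below is a stripped-down sketch of that wiring. MiniEngine and MiniCrawler are hypothetical classes, and the real Crawler.stop also stops the engine through a Deferred, which is omitted here.

```python
class MiniEngine:
    """Hypothetical engine that only knows how to report 'spider closed'."""

    def __init__(self, crawler, spider_closed_callback):
        self.crawler = crawler
        self._spider_closed_callback = spider_closed_callback

    def close_spider(self, spider):
        # (a real engine would shut down the downloader, scraper, etc. here)
        # Finally, notify whoever created the engine
        self._spider_closed_callback(spider)


class MiniCrawler:
    def __init__(self):
        self.crawling = False
        self.engine = None

    def _create_engine(self):
        # The callback receives the closed spider, ignores it, and stops the crawler
        return MiniEngine(self, lambda _: self.stop())

    def crawl(self):
        self.crawling = True
        self.engine = self._create_engine()

    def stop(self):
        self.crawling = False


crawler = MiniCrawler()
crawler.crawl()
print(crawler.crawling)  # True
crawler.engine.close_spider("demo_spider")
print(crawler.crawling)  # False
```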

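```python
from scrapy.core.scraper import Scraper
from scrapy.utils.misc import load_object


class ExecutionEngine:

    def __init__(self, crawler, spider_closed_callback):
        self.crawler = crawler
        self.settings = crawler.settings
        # Reuse the crawler's signal manager for sending and registering signals
        self.signals = crawler.signals
        self.logformatter = crawler.logformatter
        self.slot = None
        self.spider = None
        self.running = False
        self.paused = False
        # Load the scheduler class named by the SCHEDULER setting
        # (default: scrapy.core.scheduler.Scheduler); only the class is loaded here
        self.scheduler_cls = load_object(self.settings['SCHEDULER'])
        # Load the downloader class named by the DOWNLOADER setting and instantiate it
        # (default: scrapy.core.downloader.Downloader)
        downloader_cls = load_object(self.settings['DOWNLOADER'])
        self.downloader = downloader_cls(crawler)
        # Create the Scraper, covered in an earlier article: it processes the
        # downloaded results and handles the extracted data
        self.scraper = Scraper(crawler)
        # Callback invoked when the spider is closed
        self._spider_closed_callback = spider_closed_callback
```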
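ExecutionEngine relies on load_object to turn setting values such as `'scrapy.core.scheduler.Scheduler'` into actual classes. Below is a from-scratch sketch of that idea using only the standard library. `load_by_path` is a hypothetical helper that roughly mirrors what scrapy.utils.misc.load_object does. A standard-library class stands in for the scheduler so the example runs without Scrapy.

```python
import importlib


def load_by_path(path: str):
    """Hypothetical helper: turn 'package.module.Name' into the object it names."""
    module_path, _, name = path.rpartition(".")
    if not module_path:
        raise ValueError(f"Not a full dotted path: {path!r}")
    module = importlib.import_module(module_path)
    try:
        return getattr(module, name)
    except AttributeError:
        raise NameError(f"Module {module_path!r} has no object named {name!r}") from None


# A stdlib class stands in for a scheduler class path in the settings
settings = {"SCHEDULER": "collections.OrderedDict"}
scheduler_cls = load_by_path(settings["SCHEDULER"])
print(scheduler_cls)  # <class 'collections.OrderedDict'>
```

Because components are named by dotted path in settings, a project can swap in its own scheduler or downloader just by changing a setting, without touching the engine code.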

After that, crawl calls the engine's open_spider and start methods. The engine's source is analyzed in detail in a later section.

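```python
# Inside Crawler.crawl (a generator decorated with @defer.inlineCallbacks):
start_requests = iter(self.spider.start_requests())
# open_spider prepares the crawl and creates the engine's key components
yield self.engine.open_spider(self.spider, start_requests)
# This start does not actually begin crawling: as the previous section explained,
# crawling really starts when CrawlerProcess.start runs the reactor
yield defer.maybeDeferred(self.engine.start)
```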
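Why is engine.start() not the moment crawling begins? Here is an analogy that uses asyncio's event loop in place of Twisted's reactor. This is a swapped-in technique, and MiniEngine and its methods are hypothetical, only loosely modeled on the real engine. open_spider schedules work on the loop and start only flips a flag. Requests are processed only once the loop runs, just as Scrapy's reactor drives the crawl only after CrawlerProcess.start().

```python
import asyncio


class MiniEngine:
    """Hypothetical engine: open_spider schedules work, start() only flips a flag."""

    def __init__(self, loop):
        self.loop = loop
        self.running = False
        self.crawled = []

    def open_spider(self, start_requests):
        self.start_requests = start_requests
        # Schedule the first processing step on the event loop
        self.loop.call_soon(self._next_request)

    def start(self):
        # Only marks the engine as running; no request is processed here
        self.running = True

    def _next_request(self):
        url = next(self.start_requests, None)
        if url is None:
            self.loop.stop()  # nothing left to do: stop the loop
            return
        self.crawled.append(url)
        self.loop.call_soon(self._next_request)


loop = asyncio.new_event_loop()
engine = MiniEngine(loop)
engine.open_spider(iter(["https://example.com/a", "https://example.com/b"]))
engine.start()
print(engine.running, engine.crawled)  # True []  (nothing crawled yet)
# Running the loop plays the role of CrawlerProcess.start() running the reactor
loop.run_forever()
loop.close()
print(engine.crawled)  # ['https://example.com/a', 'https://example.com/b']
```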