[01] Demo.java

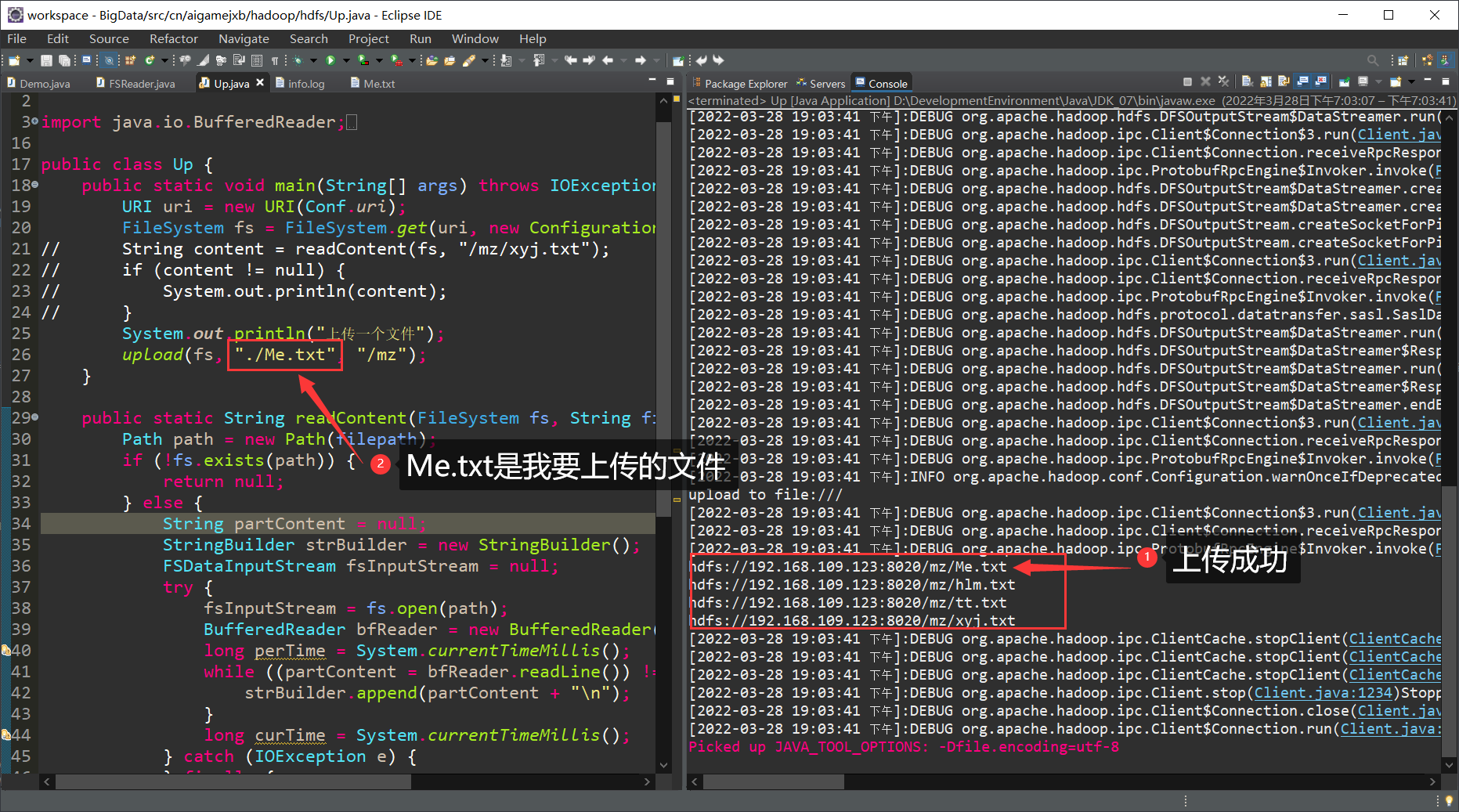

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStreamReader;
    import java.net.URI;
    import java.net.URISyntaxException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    import cn.aigamejxb.hadoop.hdfs.conf.Conf;

    public class Demo {

        public static void main(String[] args) throws IOException, URISyntaxException {
            URI uri = new URI(Conf.uri);
            FileSystem fs = FileSystem.get(uri, new Configuration());

            // To try reading a file, uncomment this block.
            // readContent closes only its own stream, so fs stays usable for the upload below.
            // String content = readContent(fs, "/mz/xyj.txt");
            // if (content != null) {
            //     System.out.println(content);
            // }

            // Upload experiment. Change the arguments to suit your setup:
            // 1: the local path of the file to upload; 2: the target directory in HDFS.
            System.out.println("Uploading a file");
            upload(fs, "./Me.txt", "/mz");

            // Close the FileSystem once, after all operations are done.
            fs.close();
        }

        // Returns the whole file as a string, or null if the path does not exist.
        public static String readContent(FileSystem fs, String filepath) throws IOException {
            Path path = new Path(filepath);
            if (!fs.exists(path)) {
                return null;
            }
            StringBuilder strBuilder = new StringBuilder();
            // try-with-resources closes the stream and reader, not the FileSystem.
            try (FSDataInputStream fsInputStream = fs.open(path);
                 BufferedReader bfReader = new BufferedReader(new InputStreamReader(fsInputStream))) {
                String partContent;
                while ((partContent = bfReader.readLine()) != null) {
                    strBuilder.append(partContent).append('\n');
                }
            }
            return strBuilder.toString();
        }

        public static void upload(FileSystem fs, String localFile, String hdfsPath) throws IOException {
            Path src = new Path(localFile); // local file to upload
            Path dst = new Path(hdfsPath);  // destination path in HDFS
            fs.copyFromLocalFile(src, dst);
            // Default file system name from the client configuration
            // (here just an empty Configuration created with new).
            System.out.println("upload to " + fs.getConf().get("fs.default.name"));
            // Status of every file under the destination path.
            FileStatus[] files = fs.listStatus(dst);
            for (FileStatus file : files) {
                System.out.println(file.getPath());
            }
        }
    }

[02] log4j.properties

For convenience, here is my log4j configuration as well:

    # priority :debug<info<warn<error
    #you cannot specify every priority with different file for log4j
    log4j.rootLogger=debug,stdout,info,debug,warn,error

    #console
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern= [%d{yyyy-MM-dd HH:mm:ss a}]:%p %l%m%n

    #info log
    log4j.logger.info=info
    log4j.appender.info=org.apache.log4j.DailyRollingFileAppender
    log4j.appender.info.DatePattern='_'yyyy-MM-dd'.log'
    log4j.appender.info.File=./src/cn/aigamejxb/hadoop/hdfs/log/info.log
    log4j.appender.info.Append=true
    log4j.appender.info.Threshold=INFO
    log4j.appender.info.layout=org.apache.log4j.PatternLayout
    log4j.appender.info.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss a} [Thread: %t][ Class:%c >> Method: %l ]%n%p:%m%n

    #debug log
    log4j.logger.debug=debug
    log4j.appender.debug=org.apache.log4j.DailyRollingFileAppender
    log4j.appender.debug.DatePattern='_'yyyy-MM-dd'.log'
    log4j.appender.debug.File=./src/cn/aigamejxb/hadoop/hdfs/log/debug.log
    log4j.appender.debug.Append=true
    log4j.appender.debug.Threshold=DEBUG
    log4j.appender.debug.layout=org.apache.log4j.PatternLayout
    log4j.appender.debug.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss a} [Thread: %t][ Class:%c >> Method: %l ]%n%p:%m%n

    #warn log
    log4j.logger.warn=warn
    log4j.appender.warn=org.apache.log4j.DailyRollingFileAppender
    log4j.appender.warn.DatePattern='_'yyyy-MM-dd'.log'
    log4j.appender.warn.File=./src/cn/aigamejxb/hadoop/hdfs/log/warn.log
    log4j.appender.warn.Append=true
    log4j.appender.warn.Threshold=WARN
    log4j.appender.warn.layout=org.apache.log4j.PatternLayout
    log4j.appender.warn.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss a} [Thread: %t][ Class:%c >> Method: %l ]%n%p:%m%n

    #error
    log4j.logger.error=error
    log4j.appender.error = org.apache.log4j.DailyRollingFileAppender
    log4j.appender.error.DatePattern='_'yyyy-MM-dd'.log'
    log4j.appender.error.File = ./src/cn/aigamejxb/hadoop/hdfs/log/error.log
    log4j.appender.error.Append = true
    log4j.appender.error.Threshold = ERROR
    log4j.appender.error.layout = org.apache.log4j.PatternLayout
    log4j.appender.error.layout.ConversionPattern = %d{yyyy-MM-dd HH:mm:ss a} [Thread: %t][ Class:%c >> Method: %l ]%n%p:%m%n

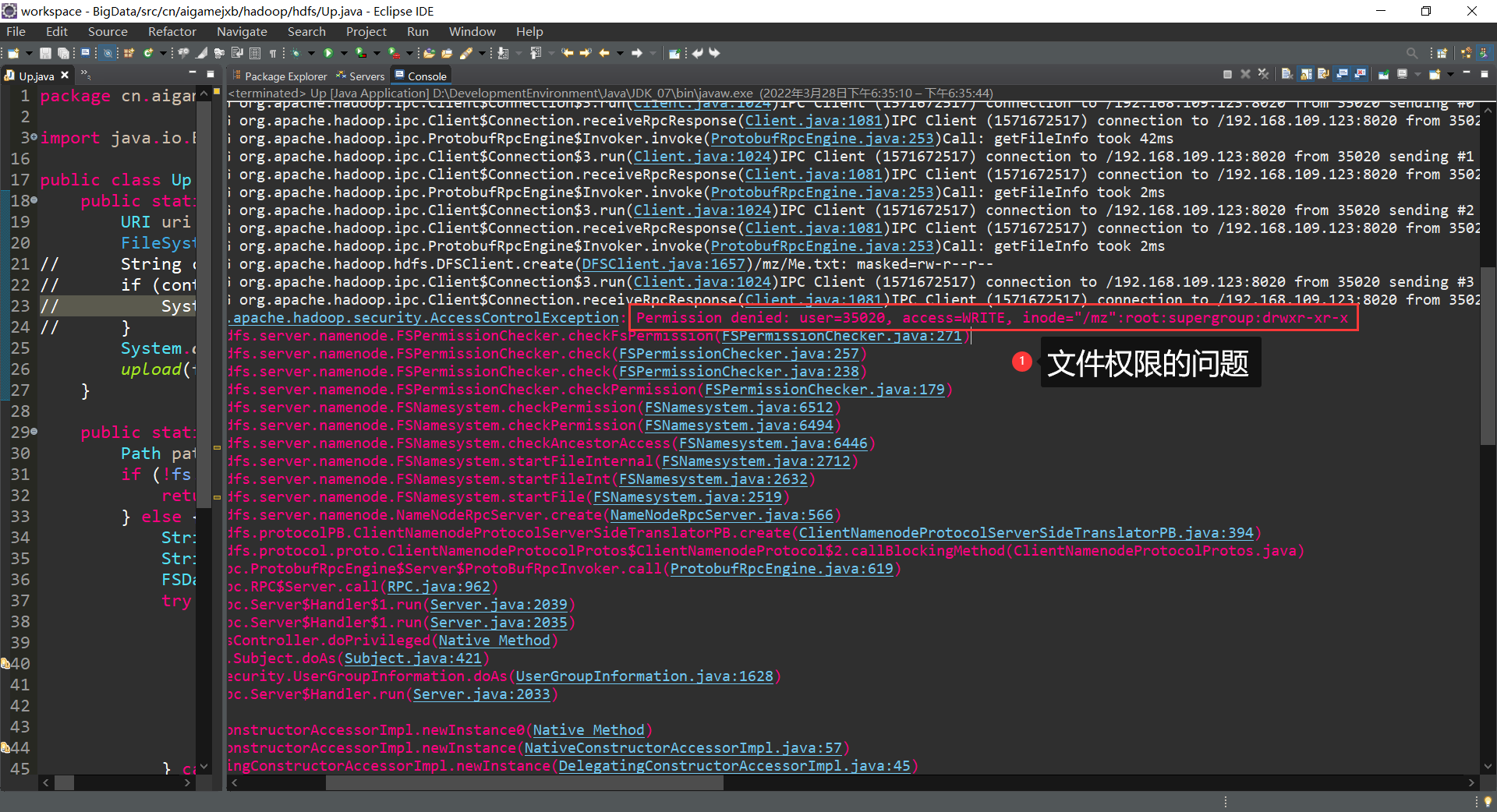

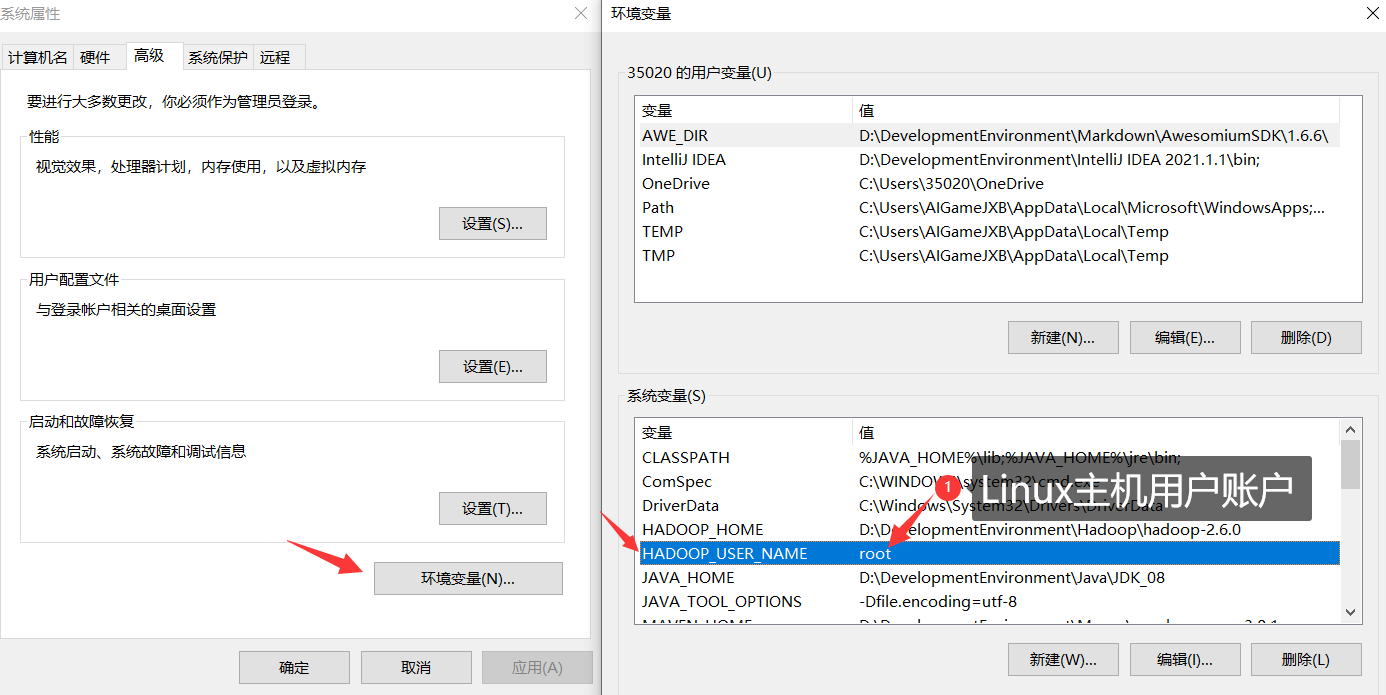

[03] Fixing the Permission denied error

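Add the system environment variable HADOOP_USER_NAME=root. Set the value to match your own account; my Linux account, for example, is root.

Then restart Eclipse and run the program again.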
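If changing the system environment is inconvenient, a possible alternative is to set HADOOP_USER_NAME as a JVM system property before the first FileSystem.get call. As far as I know, with simple (non-Kerberos) authentication the Hadoop client looks at the environment variable first and falls back to the system property of the same name. The sketch below uses only the JDK to mirror that lookup order; the class name `HadoopUserCheck` and the method `resolveUser` are hypothetical helpers for illustration, not part of Hadoop, and `root` is just the account used in this post.

```java
import java.util.Map;
import java.util.Properties;

public class HadoopUserCheck {

    static final String KEY = "HADOOP_USER_NAME";

    // Hypothetical helper: returns the user name from the environment if present,
    // otherwise from the system properties, otherwise null.
    public static String resolveUser(Map<String, String> env, Properties props) {
        String user = env.get(KEY);
        if (user == null) {
            user = props.getProperty(KEY);
        }
        return user;
    }

    public static void main(String[] args) {
        // Assumption: the HDFS account is root, as in the post. Replace with your own.
        if (System.getenv(KEY) == null) {
            System.setProperty(KEY, "root");
        }
        System.out.println("HDFS user: " + resolveUser(System.getenv(), System.getProperties()));
        // Create the FileSystem only after this point, e.g.
        // FileSystem fs = FileSystem.get(uri, new Configuration());
    }
}
```

The property has to be set before the FileSystem is created, because the client resolves the user once at login. Setting it in code also avoids restarting Eclipse, at the cost of hard-coding the account name.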