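Today I am setting up Logstash as the client-side log collector. Combined with the setup from the previous installment, this completes an ELK log collection system. I mainly collect Tomcat logs here, so Logstash is installed on the same server as Tomcat.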

1. Download

I am using version 7.4.0. The download URL is:

https://artifacts.elastic.co/downloads/logstash/logstash-7.4.0.rpm

2. Install

Command:

rpm -ivh logstash-7.4.0.rpm

3. Edit the configuration file

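Create the log and data directories and hand them over to the `logstash` user:

    # mkdir -p /data/logs/logstash
    # mkdir -p /data/logstash_data
    # chown -R logstash:logstash /data/logs/logstash/
    # chown -R logstash:logstash /data/logstash_data/

Then open the settings file:

    [root@VMTest soft]# vi /etc/logstash/logstash.yml

Change the following entries to your own directories:

    path.data: /data/logstash_data
    path.logs: /data/logs/logstash

Be sure to run the `chown` commands above: when Logstash runs as the `logstash` user (as it does as a service in step 7), it cannot write to these directories otherwise.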

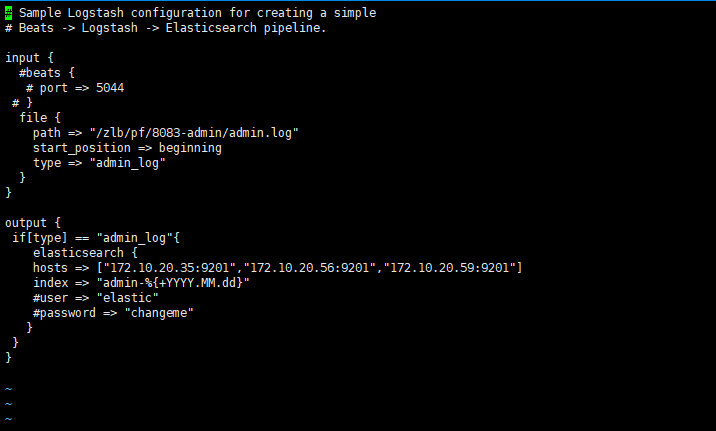

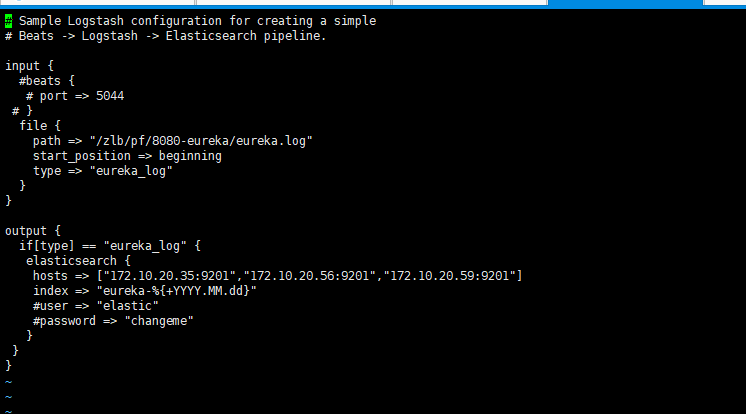

4. Add the log files to watch

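Pipeline config files go in `/etc/logstash/conf.d`. For example, this host has:

    -rw-r--r--. 1 root root 488 Aug 24 18:10 logstash-admin.conf
    -rw-r--r--. 1 root root 492 Aug 24 18:12 logstash-eureka.conf

The key settings in each file's `file` input are:

- `path`: the log file to watch.
- `start_position`: where to start reading a file the first time Logstash sees it, `beginning` or `end` (the default).
- `type`: a label attached to every event. With several config files it tells the applications' logs apart, and the output can use it to send each application's logs to its own Elasticsearch index.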
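A minimal sketch of what one of these pipeline files could contain, written as a small shell helper so the same file can be generated for each Tomcat application. Assumptions: the helper name `write_pipeline` and the daily index name `<type>-YYYY.MM.dd` are hypothetical; the log paths and Elasticsearch hosts are the ones that appear in the startup log in step 5. The `if [type] == ...` guard is there because `-f /etc/logstash/conf.d/` merges all files into a single pipeline, so without it each `elasticsearch` output would also receive the other application's events.

```shell
# Hypothetical helper: writes one Logstash pipeline file per Tomcat application.
# Usage: write_pipeline <conf_dir> <type> <log_path>
write_pipeline() {
  local conf_dir="$1" app_type="$2" log_path="$3"
  # Unquoted EOF: ${app_type} and ${log_path} are expanded by the shell, while
  # %{+YYYY.MM.dd} is passed through for Logstash to fill in from the event date.
  cat > "${conf_dir}/logstash-${app_type}.conf" <<EOF
input {
  file {
    # Log file to watch
    path => "${log_path}"
    # For files Logstash has not seen before, read from the start (default: "end")
    start_position => "beginning"
    # Label every event so the output below can tell the applications apart
    type => "${app_type}"
  }
}

output {
  # All files under conf.d end up in one pipeline, so only ship this app's events here
  if [type] == "${app_type}" {
    elasticsearch {
      # Nodes taken from the startup log in step 5
      hosts => ["172.10.20.35:9201", "172.10.20.56:9201", "172.10.20.59:9201"]
      # Hypothetical index name: one daily index per application
      index => "${app_type}-%{+YYYY.MM.dd}"
    }
  }
}
EOF
}
```

On the Logstash host, `write_pipeline /etc/logstash/conf.d admin /zlb/pf/8083-admin/admin.log` and `write_pipeline /etc/logstash/conf.d eureka /zlb/pf/8080-eureka/eureka.log` would produce the two files listed above. Writing the files by hand in `vi` works just as well; the helper only avoids copying the same block twice.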

5. Start Logstash

Start it from the Logstash installation directory:

    [root@node1 logstash]# pwd
    /usr/share/logstash
    # -f /etc/logstash/conf.d/ loads every config file under conf.d (merged into one pipeline)
    bin/logstash -f /etc/logstash/conf.d/

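Log of a successful start. Note the first WARNING: because `--path.settings` is not passed, this run does not read `/etc/logstash/logstash.yml` and falls back to default settings, which is why the sincedb files are created under `/usr/share/logstash/data`.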

    [root@node1 logstash]# bin/logstash -f /etc/logstash/conf.d/
    Thread.exclusive is deprecated, use Thread::Mutex
    WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults
    Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console
    [WARN ] 2021-08-24 17:44:23.957 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified
    [INFO ] 2021-08-24 17:44:23.987 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.4.0"}
    [INFO ] 2021-08-24 17:44:28.911 [Converge PipelineAction::Create<main>] Reflections - Reflections took 66 ms to scan 1 urls, producing 20 keys and 40 values
    [INFO ] 2021-08-24 17:44:31.370 [[main]-pipeline-manager] elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://172.10.20.35:9201/, http://172.10.20.56:9201/, http://172.10.20.59:9201/]}}
    [WARN ] 2021-08-24 17:44:31.817 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.35:9201/"}
    [INFO ] 2021-08-24 17:44:32.148 [[main]-pipeline-manager] elasticsearch - ES Output version determined {:es_version=>7}
    [WARN ] 2021-08-24 17:44:32.163 [[main]-pipeline-manager] elasticsearch - Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}
    [WARN ] 2021-08-24 17:44:32.709 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.56:9201/"}
    [WARN ] 2021-08-24 17:44:32.936 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.59:9201/"}
    [INFO ] 2021-08-24 17:44:33.052 [[main]-pipeline-manager] elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//172.10.20.35:9201", "//172.10.20.56:9201", "//172.10.20.59:9201"]}
    [INFO ] 2021-08-24 17:44:33.157 [[main]-pipeline-manager] elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://172.10.20.35:9201/, http://172.10.20.56:9201/, http://172.10.20.59:9201/]}}
    [WARN ] 2021-08-24 17:44:33.194 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.35:9201/"}
    [INFO ] 2021-08-24 17:44:33.236 [[main]-pipeline-manager] elasticsearch - ES Output version determined {:es_version=>7}
    [WARN ] 2021-08-24 17:44:33.246 [[main]-pipeline-manager] elasticsearch - Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}
    [INFO ] 2021-08-24 17:44:33.320 [Ruby-0-Thread-5: :1] elasticsearch - Using default mapping template
    [WARN ] 2021-08-24 17:44:33.393 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.56:9201/"}
    [INFO ] 2021-08-24 17:44:33.430 [Ruby-0-Thread-5: :1] elasticsearch - Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}
    [WARN ] 2021-08-24 17:44:33.463 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://172.10.20.59:9201/"}
    [INFO ] 2021-08-24 17:44:33.479 [[main]-pipeline-manager] elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//172.10.20.35:9201", "//172.10.20.56:9201", "//172.10.20.59:9201"]}
    [INFO ] 2021-08-24 17:44:33.511 [Ruby-0-Thread-7: :1] elasticsearch - Using default mapping template
    [WARN ] 2021-08-24 17:44:33.687 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.specialized.RubyArrayOneObject) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.
    [INFO ] 2021-08-24 17:44:33.709 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, :thread=>"#<Thread:0x477f34a3 run>"}
    [INFO ] 2021-08-24 17:44:34.482 [[main]-pipeline-manager] file - No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_3f53c4419c0da8df2bf7fae30007a5ce", :path=>["/zlb/pf/8080-eureka/eureka.log"]}
    [INFO ] 2021-08-24 17:44:34.568 [[main]-pipeline-manager] file - No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_073e1c290c495b18ebbcb26f522ecfe1", :path=>["/zlb/pf/8083-admin/admin.log"]}
    [INFO ] 2021-08-24 17:44:34.606 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}
    [INFO ] 2021-08-24 17:44:34.727 [[main]<file] observingtail - START, creating Discoverer, Watch with file and sincedb collections
    [INFO ] 2021-08-24 17:44:34.728 [[main]<file] observingtail - START, creating Discoverer, Watch with file and sincedb collections
    [INFO ] 2021-08-24 17:44:34.753 [Ruby-0-Thread-7: :1] elasticsearch - Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}
    [INFO ] 2021-08-24 17:44:34.862 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
    [INFO ] 2021-08-24 17:44:35.935 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

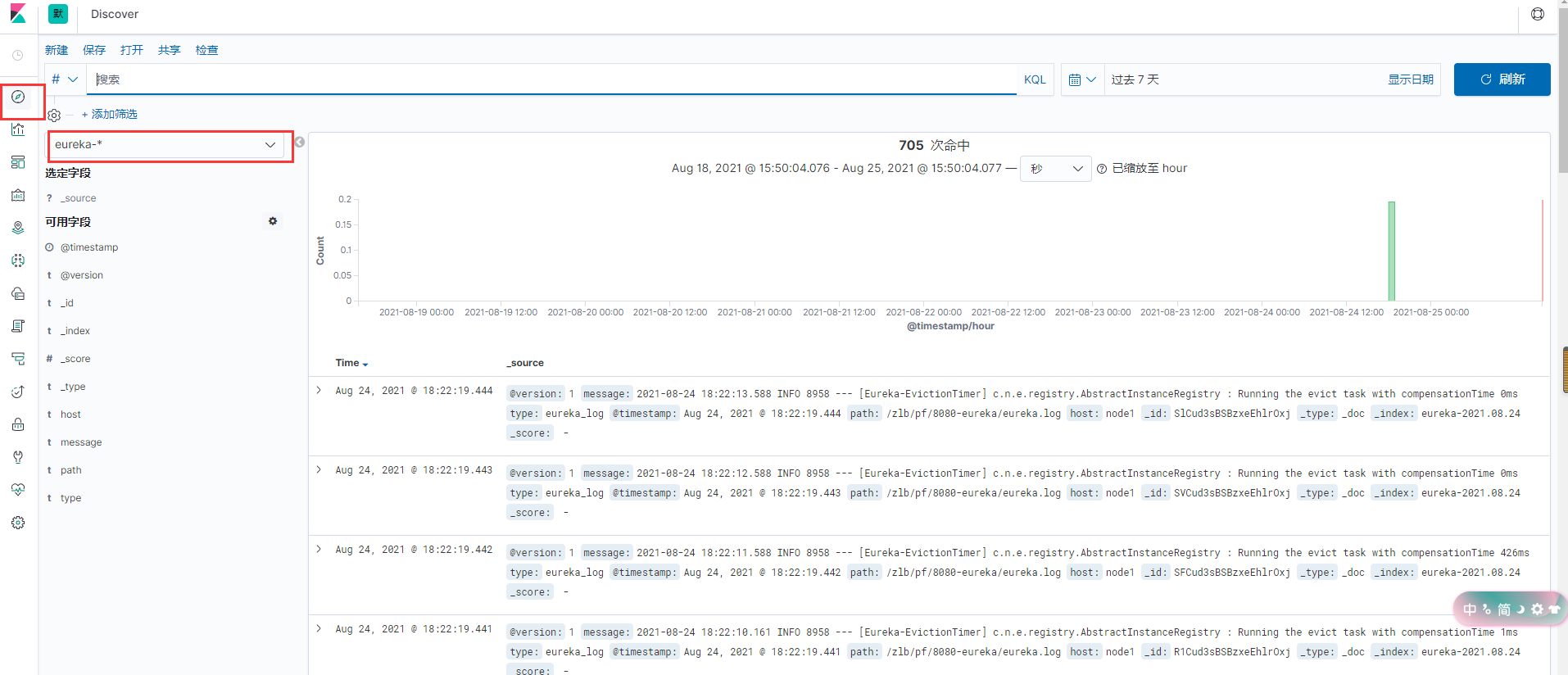
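A manual start that first validates the configuration and also reads `/etc/logstash/logstash.yml` could look like the sketch below. `--path.settings` and `--config.test_and_exit` are standard Logstash command-line options; running the commands as the `logstash` user through `sudo` is an assumption about this host, chosen so that files under `/data/logstash_data` and `/data/logs/logstash` are not created as root, which could later stop the service in step 7 from writing to them.

```shell
cd /usr/share/logstash

# Parse and validate every file under conf.d, then exit without starting a pipeline
sudo -u logstash bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/ --config.test_and_exit

# If the check reports the configuration as OK, run in the foreground (Ctrl+C stops it)
sudo -u logstash bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/
```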

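6. Results

View the results in Kibana.

First create an index pattern. The Elasticsearch index has already been created automatically.

The Tomcat logs have been written to it.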
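Besides Kibana, the indices can also be checked directly with the Elasticsearch `_cat/indices` API. The node address below is one of those in the startup log in step 5; which index names appear depends on the `index` setting of your `elasticsearch` output.

```shell
# List all indices with column headers (v), sorted by index name (s=index)
curl -s 'http://172.10.20.35:9201/_cat/indices?v&s=index'
```

The `docs.count` column for the Tomcat index should grow as the application writes new log lines.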

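7. Run Logstash as a service

So far Logstash has been started directly with the `logstash` command. It can instead be run as a system service by editing the `logstash.service` unit file installed with the package: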

[root@192.168.118.14 ~]#vim /etc/systemd/system/logstash.service

…

ExecStart=/usr/share/logstash/bin/logstash "--path.settings" "/etc/logstash" "-f" "/etc/logstash/conf.d"

…

Reload systemd and start the service:

[root@192.168.118.14 ~]#systemctl daemon-reload

[root@192.168.118.14 ~]#systemctl start logstash
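`systemctl start` only starts Logstash until the next reboot. To start it at boot and confirm it is running, the standard systemd commands apply. The log file name `logstash-plain.log` is the usual default from the `log4j2.properties` shipped with Logstash 7.x, and the directory is the `path.logs` set in step 3; adjust the path if you changed either.

```shell
# Start Logstash automatically at boot
systemctl enable logstash

# Confirm the service is active and see its most recent output
systemctl status logstash

# Follow Logstash's own log under path.logs (/data/logs/logstash from step 3)
tail -f /data/logs/logstash/logstash-plain.log
```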