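Problem description

At work I recently got a task: build a GPU-enabled TensorFlow environment with Docker, then package the container for other people to use. Some other related libraries were involved too, which I won't go into here. The client's demands along the way left me a bit speechless, and I ran into plenty of problems, so I'm writing them down. That way I won't be at a loss the next time similar work comes up.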

Environment

Operating system: CentOS Linux release 7.7.1908 (Core)

- Command used to check:

cat /etc/redhat-release

Docker version: Docker version 18.09.8, build 0dd43dd87f

- Command used to check:

docker --version

GPU model: NVIDIA GeForce GTX 1080 Ti

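Setting up the environment

From some searching I learned that to use the GPU driver inside a Docker container, the first step is to install nvidia-docker, which describes itself as: "Build and run Docker containers leveraging NVIDIA GPUs". nvidia-docker is currently in its second generation, and the default is nvidia-docker2. Honestly, no installation tutorial beats reading the official documentation in the official nvidia-docker repository. According to that documentation, using nvidia-docker now only requires the GPU driver on the host machine; CUDA no longer needs to be installed there.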

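Installing the GPU driver

The first option is to go to NVIDIA's official driver download page, pick the version that matches your card, download it, and run the installer. I did not use that method, though; I installed the driver online instead: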

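# Import the ELRepo signing key and enable the ELRepo repository
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
# Install nvidia-detect and let it report which driver fits the card
yum install nvidia-detect
nvidia-detect -v
# Confirm that the card is visible on the PCI bus
lspci | grep -i NVIDIA
# Find and install the matching kernel-module driver package
yum search kmod-nvidia
yum install kmod-nvidia.x86_64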

Installing nvidia-docker

To install nvidia-docker, just follow the instructions in the README of the official repository:

# Get the distribution identifier
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
# Download the repo file with curl
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo
# Install with yum
sudo yum install -y nvidia-container-toolkit
# Restart docker
sudo systemctl restart docker
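To make the first shell line less opaque, here is a minimal Python sketch of what it computes: it reads the `ID` and `VERSION_ID` fields from os-release text and joins them to form the repo URL. The function names `parse_os_release` and `nvidia_docker_repo_url` are made up for illustration and are not part of any NVIDIA tooling.

```python
def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be quoted, e.g. ID="centos"
        values[key] = value.strip().strip('"').strip("'")
    return values


def nvidia_docker_repo_url(os_release_text):
    """Build the repo URL the same way the shell line does: $ID$VERSION_ID."""
    info = parse_os_release(os_release_text)
    distribution = info["ID"] + info["VERSION_ID"]
    return f"https://nvidia.github.io/nvidia-docker/{distribution}/nvidia-docker.repo"


# Sample fields in the shape CentOS 7 uses; on a real host, pass in the
# contents of /etc/os-release instead.
sample = 'NAME="CentOS Linux"\nID="centos"\nVERSION_ID="7"\n'
print(nvidia_docker_repo_url(sample))
```

On the CentOS 7 host described above, the identifier comes out as `centos7`, which is the directory name the curl command requests.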

Modifying the Docker configuration file

To use the NVIDIA GPU inside a container, first edit Docker's configuration file:

# Edit the configuration file
vim /etc/docker/daemon.json
# Write the following configuration
{
    "default-runtime": "nvidia",
    "registry-mirrors": ["https://registry.docker-cn.com"],
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    }
}
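If the host already has a daemon.json with other settings, pasting the block above over it would discard them. The following is a small sketch, not an official tool, that merges the nvidia runtime into an existing configuration held as a Python dict. `with_nvidia_runtime` is a hypothetical helper name.

```python
import json


def with_nvidia_runtime(config):
    """Return a copy of a daemon.json dict with the nvidia runtime set as default."""
    merged = dict(config)
    # Keep any runtimes that are already registered
    runtimes = dict(merged.get("runtimes", {}))
    runtimes["nvidia"] = {"path": "nvidia-container-runtime", "runtimeArgs": []}
    merged["runtimes"] = runtimes
    merged["default-runtime"] = "nvidia"
    return merged


# Example: a config that so far only contains the registry mirror
existing = {"registry-mirrors": ["https://registry.docker-cn.com"]}
print(json.dumps(with_nvidia_runtime(existing), indent=4))
```

The printed JSON can be written back to /etc/docker/daemon.json, after which Docker needs a restart for the change to take effect.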

Using the GPU inside a container

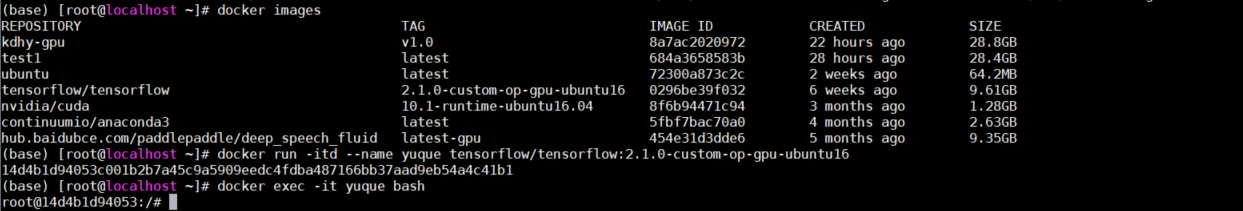

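The first step is pulling an image, and this is the first pitfall: an ordinary image won't work; you need a GPU-enabled NVIDIA image. For TensorFlow, that means pulling a prebuilt TensorFlow image from Docker Hub. This job had a somewhat unusual requirement: the operating system had to be Ubuntu 16.04: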

docker pull tensorflow/tensorflow:2.1.0-custom-op-gpu-ubuntu16

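In TensorFlow 1.x the CPU and GPU builds were separate, but in the latest TensorFlow they are no longer split, so you can pull the image directly. Next, start the container with a plain docker command: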

docker run -itd --name container-name -p 22000:22 tensorflow/tensorflow:2.1.0-custom-op-gpu-ubuntu16

Then enter the container:

docker exec -it container-name bash

Inside the container, check that the NVIDIA GPU is usable with nvidia-smi:

root@14d4b1d94053:~# nvidia-smi
Thu Mar 12 06:39:51 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.59       Driver Version: 440.59       CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce GTX 108...  Off  | 00000000:01:00.0 Off |                  N/A |
| 22%   40C    P0    53W / 250W |      0MiB / 11178MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
root@14d4b1d94053:~# nvcc -V
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2019 NVIDIA Corporation
Built on Sun_Jul_28_19:07:16_PDT_2019
Cuda compilation tools, release 10.1, V10.1.243
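The two outputs report different CUDA numbers: nvidia-smi shows the highest CUDA version the host driver supports (10.2), while nvcc shows the toolkit shipped in the image (10.1). A rough sketch of checking that the toolkit does not exceed what the driver supports could look like this. The helper names are invented for illustration, and the regular expressions assume the output layout shown above.

```python
import re


def driver_cuda_versions(smi_output):
    """Extract (driver version, highest supported CUDA version) from the nvidia-smi banner."""
    match = re.search(r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)", smi_output)
    if match is None:
        raise ValueError("no nvidia-smi banner found")
    return match.group(1), match.group(2)


def toolkit_release(nvcc_output):
    """Extract the toolkit release (for example '10.1') from `nvcc -V` output."""
    match = re.search(r"release\s+([\d.]+)", nvcc_output)
    if match is None:
        raise ValueError("no release line found")
    return match.group(1)


def as_tuple(version):
    """Turn '10.2' into (10, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


banner = "| NVIDIA-SMI 440.59       Driver Version: 440.59       CUDA Version: 10.2     |"
nvcc = "Cuda compilation tools, release 10.1, V10.1.243"
driver, max_cuda = driver_cuda_versions(banner)
# True when the image's toolkit is no newer than what the driver supports
print(as_tuple(toolkit_release(nvcc)) <= as_tuple(max_cuda))
```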

Next, verify that TensorFlow can actually use the GPU, with the command from the official TensorFlow page:

python -c "import tensorflow as tf;print(tf.reduce_sum(tf.random.normal([1000, 1000])))"

root@14d4b1d94053:~# python -c "import tensorflow as tf;print(tf.reduce_sum(tf.random.normal([1000, 1000])))"
2020-03-12 06:42:45.846888: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libnvinfer.so.6
2020-03-12 06:42:45.848669: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libnvinfer_plugin.so.6
2020-03-12 06:42:51.977544: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcuda.so.1
2020-03-12 06:42:52.259782: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.260348: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1555] Found device 0 with properties:
pciBusID: 0000:01:00.0 name: GeForce GTX 1080 Ti computeCapability: 6.1
coreClock: 1.582GHz coreCount: 28 deviceMemorySize: 10.92GiB deviceMemoryBandwidth: 451.17GiB/s
2020-03-12 06:42:52.260423: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1
2020-03-12 06:42:52.260475: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10
2020-03-12 06:42:52.381636: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcufft.so.10
2020-03-12 06:42:52.428450: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcurand.so.10
2020-03-12 06:42:52.437360: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusolver.so.10
2020-03-12 06:42:52.441722: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusparse.so.10
2020-03-12 06:42:52.441909: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudnn.so.7
2020-03-12 06:42:52.442232: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.443970: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.445548: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1697] Adding visible gpu devices: 0
2020-03-12 06:42:52.446925: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
2020-03-12 06:42:52.587060: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3408000000 Hz
2020-03-12 06:42:52.588038: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x618e180 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
2020-03-12 06:42:52.588173: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version
2020-03-12 06:42:52.674842: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.675414: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x6190c60 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:
2020-03-12 06:42:52.675443: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): GeForce GTX 1080 Ti, Compute Capability 6.1
2020-03-12 06:42:52.675688: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.676130: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1555] Found device 0 with properties:
pciBusID: 0000:01:00.0 name: GeForce GTX 1080 Ti computeCapability: 6.1
coreClock: 1.582GHz coreCount: 28 deviceMemorySize: 10.92GiB deviceMemoryBandwidth: 451.17GiB/s
2020-03-12 06:42:52.676179: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1
2020-03-12 06:42:52.676196: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10
2020-03-12 06:42:52.676217: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcufft.so.10
2020-03-12 06:42:52.676237: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcurand.so.10
2020-03-12 06:42:52.676252: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusolver.so.10
2020-03-12 06:42:52.676273: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusparse.so.10
2020-03-12 06:42:52.676290: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudnn.so.7
2020-03-12 06:42:52.676347: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.676748: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.677095: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1697] Adding visible gpu devices: 0
2020-03-12 06:42:52.684803: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1
2020-03-12 06:42:52.896910: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1096] Device interconnect StreamExecutor with strength 1 edge matrix:
2020-03-12 06:42:52.896955: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1102] 0
2020-03-12 06:42:52.896983: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] 0: N
2020-03-12 06:42:52.907562: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.909553: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-03-12 06:42:52.910387: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1241] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 10435 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1080 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)
tf.Tensor(-186.68738, shape=(), dtype=float32)
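The log is long, and the line that matters is the one saying a TensorFlow device was created on the physical GPU. A shorter check, sketched here on the assumption that the image ships TensorFlow 2.1 or later (where `tf.config.list_physical_devices` is available), is to ask TensorFlow directly which GPUs it sees. `visible_gpus` is an illustrative name, and the import guard only exists so the snippet also runs where TensorFlow is absent.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or [] if TensorFlow is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


# An empty list means TensorFlow would run on the CPU only
print(visible_gpus())
```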

This job also required some other libraries, so the GPU can be verified through PyTorch as well:

python -c "import torch;print(torch.cuda.is_available())"

(base) root@aa09995aa171:/# python -c "import torch;print(torch.cuda.is_available())"
True

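Optimizing the container

First of all, for a Python developer, managing Python environments is basic groundwork, and this job required it too. We use Anaconda for environment management, and its installer script can be fetched with wget. Because the container is based on the TensorFlow image, basic tools such as wget and vim are already installed. If they were not, we would have to install them ourselves.

Switching Ubuntu to the Aliyun mirror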

vim /etc/apt/sources.list
# Write the following configuration
deb http://mirrors.aliyun.com/ubuntu/ xenial main
deb-src http://mirrors.aliyun.com/ubuntu/ xenial main
deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main
deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates main
deb http://mirrors.aliyun.com/ubuntu/ xenial universe
deb-src http://mirrors.aliyun.com/ubuntu/ xenial universe
deb http://mirrors.aliyun.com/ubuntu/ xenial-updates universe
deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates universe
deb http://mirrors.aliyun.com/ubuntu/ xenial-security main
deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security main
deb http://mirrors.aliyun.com/ubuntu/ xenial-security universe
deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security universe
# Update the package index
apt-get update
# Install wget
apt-get install wget
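The twelve entries follow a regular pattern: three suites (release, updates, security), two components (main, universe), and a deb plus deb-src line for each. As an illustrative sketch only, the same set of lines can be generated instead of typed by hand. `sources_list` is a hypothetical helper, and its output order differs slightly from the listing above, which does not matter to apt.

```python
MIRROR = "http://mirrors.aliyun.com/ubuntu/"


def sources_list(codename="xenial", components=("main", "universe")):
    """Generate deb/deb-src entries for the release, updates and security suites."""
    lines = []
    for suffix in ("", "-updates", "-security"):
        for component in components:
            for kind in ("deb", "deb-src"):
                lines.append(f"{kind} {MIRROR} {codename}{suffix} {component}")
    return "\n".join(lines) + "\n"


# Print the entries; redirect into /etc/apt/sources.list to use them
print(sources_list(), end="")
```

Passing a different `codename` would adapt the file to another Ubuntu release, assuming the mirror carries it.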

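Installing Anaconda

Downloading from the official Anaconda site with wget is very slow from inside China, so download from the Tsinghua mirror instead (the Anaconda installer download page on the Tsinghua mirror), then install Anaconda: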

bash Anaconda3-2019.10-Linux-x86_64.sh

Accept the defaults during installation. Once it finishes, run:

source .bashrc

This drops you into the conda environment. Then switch Anaconda's channels to the Tsinghua mirror; otherwise creating environments is very slow:

# Initialize the conda configuration
conda config
# Edit the configuration
vim .condarc
# Write the following configuration
channels:
  - defaults
show_channel_urls: true
channel_alias: https://mirrors.tuna.tsinghua.edu.cn/anaconda
default_channels:
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/r
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/pro
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/msys2
custom_channels:
  conda-forge: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  msys2: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  bioconda: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  menpo: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  pytorch: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  simpleitk: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud

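If you have no heavy scientific-computing needs, installing Miniconda is enough.

Changing the pip index

Installing third-party libraries with pip can be very slow, so switch the pip index to the Aliyun mirror: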

mkdir .pip
vim .pip/pip.conf
# Write the following configuration
[global]
index-url = https://mirrors.aliyun.com/pypi/simple/
[install]
trusted-host=mirrors.aliyun.com
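When the image is built by a script rather than by hand, the same pip.conf can be rendered without opening an editor. This is a sketch using Python's standard `configparser`. `pip_conf` is a made-up helper name, and writing the result to `.pip/pip.conf` in the home directory is left to the caller.

```python
import configparser
import io


def pip_conf(index_url, trusted_host):
    """Render pip.conf text with a custom index URL and a trusted host."""
    parser = configparser.ConfigParser()
    parser["global"] = {"index-url": index_url}
    parser["install"] = {"trusted-host": trusted_host}
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


# Same values as the hand-written file above
print(pip_conf("https://mirrors.aliyun.com/pypi/simple/", "mirrors.aliyun.com"))
```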

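Exporting the container

Honestly, this was the part of the whole job that caused me the most trouble. Docker has two pairs of commands for exporting and importing: docker export with docker import, and docker save with docker load. Both docker import and docker load produce an image, from which you then run a container. The differences between the two deserve an article of their own, so only briefly: docker export writes a snapshot of the container's filesystem and does not preserve metadata. With that approach, even a container I had created on my own machine broke after being exported and imported again: it could no longer use the GPU, presumably because the image's metadata, such as its environment variables, had been lost. That one cost me a long time. So if you want other people to use your environment, use docker save. docker save works on images, so first commit the configured container as an image: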

docker commit container-name image-name:version

Then export it with docker save:

docker save -o image-name.tar image-name:version
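Since the commit and save steps must run in that order and against the same tag, they are easy to wrap in a small script. The sketch below only builds the two command lines from the post and can optionally run them. `export_commands` and `run_all` are illustrative names, and the container and image names are the placeholders used above.

```python
import subprocess


def export_commands(container, image, version):
    """Return the docker argv lists for commit then save, in the order they must run."""
    tag = f"{image}:{version}"
    return [
        ["docker", "commit", container, tag],
        ["docker", "save", "-o", f"{image}.tar", tag],
    ]


def run_all(commands):
    """Run each command in turn, stopping at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)


# Print the commands; call run_all(...) instead to execute them
for argv in export_commands("container-name", "image-name", "version"):
    print(" ".join(argv))
```

On the receiving machine, the tar file is imported with `docker load -i image-name.tar`, which restores the image together with its tag.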

And that's it.

For the differences between docker export and docker save, see the article: The difference between docker export and docker save