Scraping a Joke Website

Code

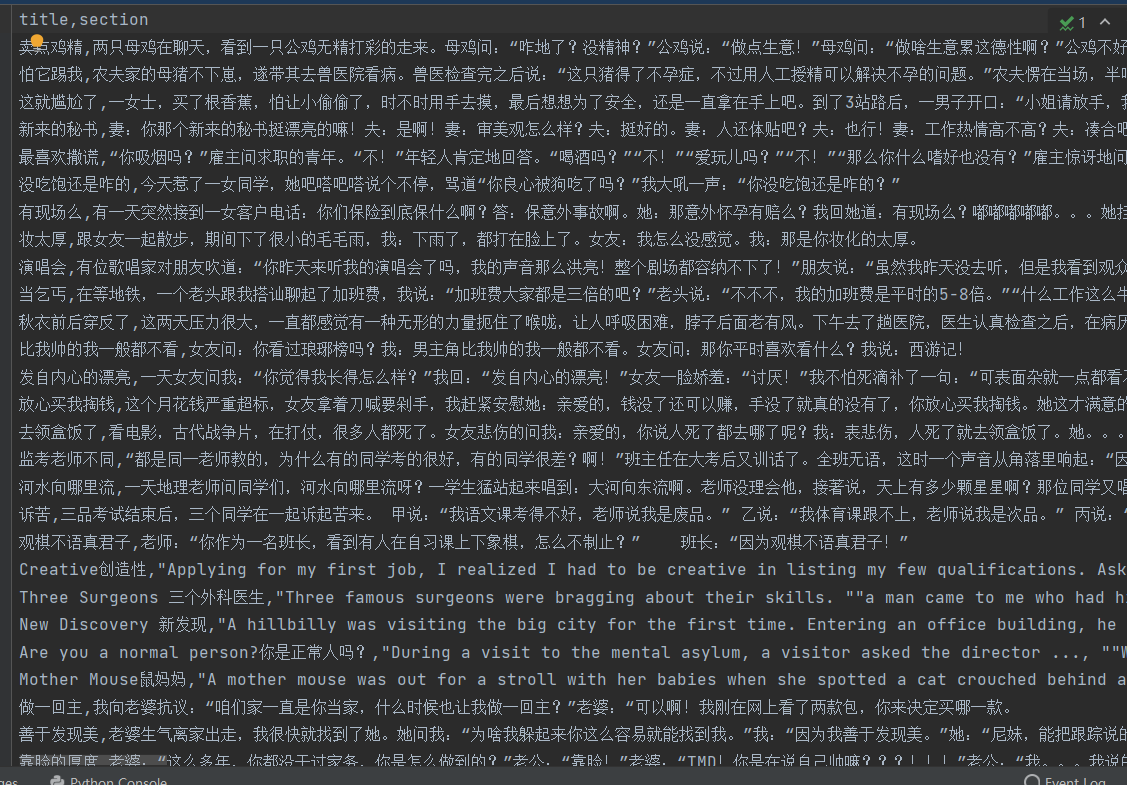

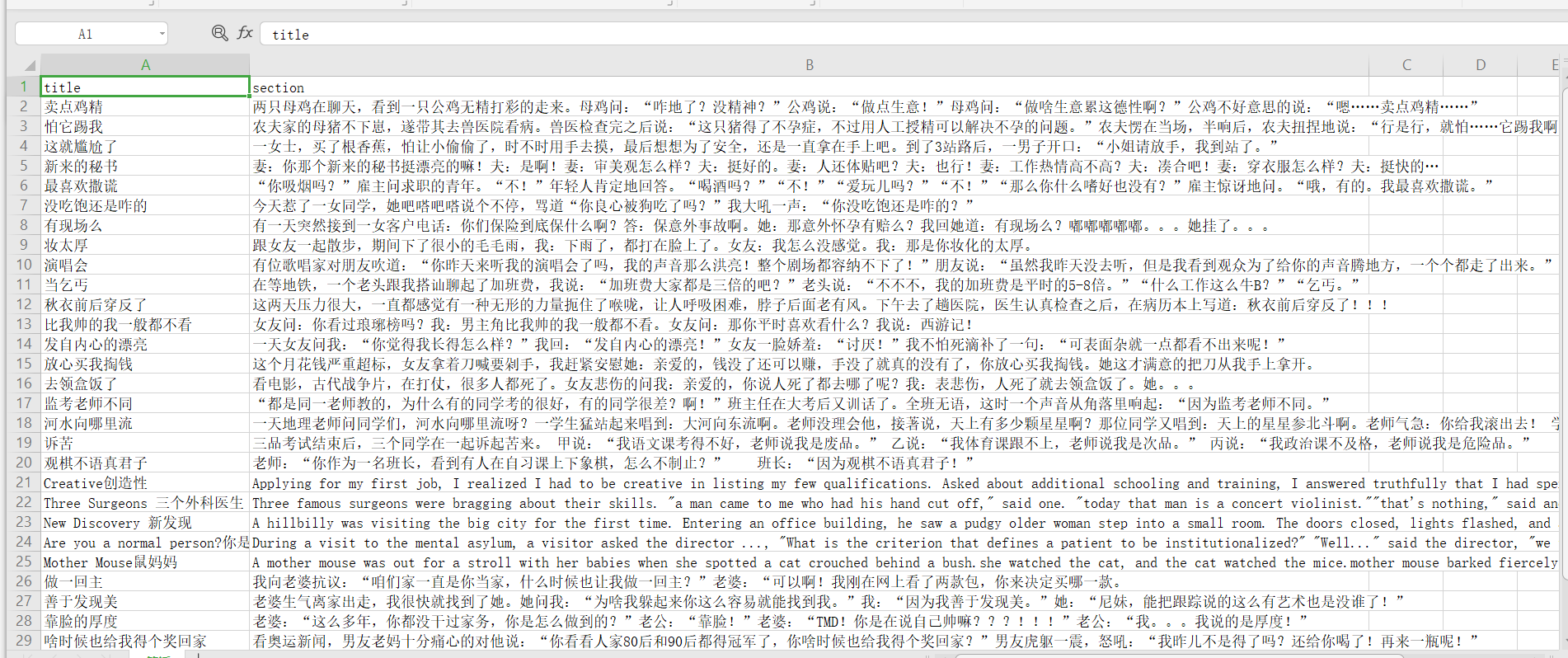

    from lxml import etree
    import requests
    import re
    import csv


    class XiaoHua:
        # Initializer
        def __init__(self):
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36'
            }
            self.url = 'http://www.17989.com/xiaohua/1.htm'
            self.li = []
            self.header = ['title', 'section']

        # Send the request
        def read_url(self, url):
            # Pass headers by keyword: the second positional argument of
            # requests.get is params, not headers.
            res = requests.get(url, headers=self.headers)
            html = res.content.decode('utf-8')
            # print(html)
            return html

        # Parse the data
        def parse_html(self, html):
            tree = etree.HTML(html)
            li_tags = tree.xpath('//div[@class="module articlelist"]//li')
            # print(li_tags)
            for li_tag in li_tags:
                pat = '\r\n'
                item = {}
                title = li_tag.xpath('./div[@class="hd"]/text()')[0].strip()
                item['title'] = title
                section = li_tag.xpath('./pre/text()')[0].strip()
                # Remove line breaks inside the joke text
                item['section'] = re.sub(pat, '', section)
                # print(item)
                self.li.append(item)

        # Save the data
        def write_data(self):
            with open('笑话.csv', 'w', encoding='utf-8', newline='') as f:
                w = csv.DictWriter(f, self.header)
                w.writeheader()
                w.writerows(self.li)
            print("Saved successfully")

        # Main function
        def main(self):
            # Send the request
            html = self.read_url(self.url)
            # Parse the data with XPath
            self.parse_html(html)
            # print(self.li)
            # Pagination
            # # 1. Crawl every page
            # while True:
            #     tree2 = etree.HTML(html)
            #     next_url = 'http://www.17989.com/' + tree2.xpath('//a[text()="下一页"]/@href')[0]
            #     if next_url:
            #         # Send a request to the new URL
            #         html = self.read_url(next_url)
            #         # Parse the data
            #         self.parse_html(html)
            #         print(next_url)
            #     else:
            #         break
            # 2. Crawl a chosen number of pages, since crawling everything is too much
            num = int(input("Enter the number of pages to crawl: "))
            for i in range(num - 1):
                tree2 = etree.HTML(html)
                next_hrefs = tree2.xpath('//a[text()="下一页"]/@href')
                # Stop early when the page has no "next page" (下一页) link
                if not next_hrefs:
                    break
                next_url = 'http://www.17989.com/' + next_hrefs[0]
                html = self.read_url(next_url)
                self.parse_html(html)
                print(next_url)
            # Save
            self.write_data()


    # Entry point
    if __name__ == '__main__':
        xh = XiaoHua()
        xh.main()
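
Following the 下一页 ("next page") link until it disappears needs an explicit stopping condition: on the last page the link lookup finds nothing, and indexing an empty result with `[0]` raises `IndexError`. The sketch below shows one way the pagination loop could be made safe. It is not the code used above: it swaps the lxml XPath lookup for a standard-library regular expression so that it runs without a network connection or third-party packages, and the names `NEXT_LINK`, `find_next_url`, `crawl`, and `fake_site`, as well as the `example.com` URLs, are hypothetical. It also assumes the link is written as a plain `<a href="...">下一页</a>` tag.

```python
import re
from urllib.parse import urljoin

# Assumption: the "next page" link is a plain <a href="...">下一页</a> tag.
NEXT_LINK = re.compile(r'<a\b[^>]*\bhref="([^"]+)"[^>]*>\s*下一页\s*</a>')


def find_next_url(page_url, html):
    """Return the absolute URL of the next page, or None on the last page."""
    match = NEXT_LINK.search(html)
    if match is None:
        return None
    # urljoin resolves relative, root-relative, and absolute hrefs alike.
    return urljoin(page_url, match.group(1))


def crawl(start_url, fetch, max_pages):
    """Visit at most max_pages pages by following "next page" links.

    fetch is any callable that maps a URL to its HTML text.
    Returns the visited (url, html) pairs in order.
    """
    visited = []
    url = start_url
    while url is not None and len(visited) < max_pages:
        html = fetch(url)
        visited.append((url, html))
        url = find_next_url(url, html)
    return visited


# Hypothetical in-memory "site" standing in for real HTTP responses.
fake_site = {
    'http://example.com/xiaohua/1.htm': '<a href="/xiaohua/2.htm">下一页</a>',
    'http://example.com/xiaohua/2.htm': '<a href="3.htm">下一页</a>',
    'http://example.com/xiaohua/3.htm': '<p>last page</p>',
}

pages = crawl('http://example.com/xiaohua/1.htm', fake_site.__getitem__, max_pages=10)
print([url for url, _ in pages])
```

In the scraper itself, `fetch` would be a method like `read_url`, and `max_pages` plays the role of the page count typed in by the user. The cap also keeps the loop finite if the links ever form a cycle.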

Output