Starting the environment

node02: apache-hive-2.3.7-bin.tar.gz, mysql-connector-java. Hadoop (HDFS and MapReduce) must be started first.
node02~04: ./zkServer.sh start
node01: hadoop-daemon.sh start journalnode
node01: hadoop-daemon.sh start namenode
node02: hdfs namenode -bootstrapStandby
node01: start-dfs.sh
node01: start-yarn.sh
node03~04: yarn-daemon.sh start resourcemanager
node03: hive --service hiveserver2
node04: connect with beeline

Inside beeline: !connect jdbc:hive2://node03:10000/default root 123456
Or in one step: beeline -u jdbc:hive2://node03:10000/default -n root -p 123456
Exit with: !quit

Stopping the environment

node02~04: cd /opt/bigdata/zookeeper-3.4.6/bin, then ./zkServer.sh stop
node01: hadoop-daemon.sh stop journalnode
node01: hadoop-daemon.sh stop namenode
node01: stop-dfs.sh
node01: stop-yarn.sh
node03~04: yarn-daemon.sh stop resourcemanager

I: SerDe

1: Serialization and deserialization

2: Decouples data storage from the execution engine

3: Use cases

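1): Reading data with regular expressions

Hive mainly stores structured data. When that structure is complex or nested, a SerDe can read it by matching each row against a regular expression. For example, a table with the fields: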

id,name,map

2): Filtering data, for example:

192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-upper.png HTTP/1.1" 304 -

If the displayed data should not include the [] or "" characters, consider using a SerDe.

4: Syntax

row_format
  : DELIMITED [FIELDS TERMINATED BY char [ESCAPED BY char]] [COLLECTION ITEMS TERMINATED BY char]
        [MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char]
  | SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]
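The DELIMITED branch splits each line on the field terminator, then splits collection and map values on the collection-item terminator, and separates each map key from its value with the map-key terminator (when none are given, Hive defaults to \001, \002 and \003). The Python sketch below mimics that splitting for a hypothetical table (id INT, name STRING, props MAP<STRING,STRING>) declared with FIELDS TERMINATED BY ',', COLLECTION ITEMS TERMINATED BY '-' and MAP KEYS TERMINATED BY ':'. It only illustrates the semantics and is not Hive's LazySimpleSerDe code; parse_delimited_row and the sample row are made up for this example.

```python
def parse_delimited_row(line, field_sep=",", item_sep="-", kv_sep=":"):
    """Split one text row into (id, name, props) for the hypothetical table
    (id INT, name STRING, props MAP<STRING,STRING>)."""
    fields = line.split(field_sep)
    # Roughly like Hive: missing trailing fields read as NULL (None),
    # and fields beyond the declared columns are ignored.
    fields += [None] * (3 - len(fields))
    id_str, name, props_str = fields[:3]

    # An INT column whose text cannot be parsed reads as NULL.
    try:
        row_id = int(id_str)
    except (TypeError, ValueError):
        row_id = None

    props = None
    if props_str is not None:
        props = {}
        # Map entries are separated by the collection-item terminator;
        # each key is separated from its value by the map-key terminator.
        for entry in props_str.split(item_sep):
            key, _, value = entry.partition(kv_sep)
            props[key] = value
    return row_id, name, props


print(parse_delimited_row("1,zhangsan,sex:man-age:18"))
# → (1, 'zhangsan', {'sex': 'man', 'age': '18'})
```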

5: Example

1): Data file

192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-upper.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-nav.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /asf-logo.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-button.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-middle.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET / HTTP/1.1" 200 11217
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET / HTTP/1.1" 200 11217
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /asf-logo.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-middle.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-button.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-nav.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-upper.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET / HTTP/1.1" 200 11217
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET / HTTP/1.1" 200 11217
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-button.png HTTP/1.1" 304 -
192.168.57.4 - - [29/Feb/2019:18:14:36 +0800] "GET /bg-upper.png HTTP/1.1" 304 -

2): Steps

Create the table

CREATE TABLE logtbl (
  host STRING,
  identity STRING,
  t_user STRING,
  time STRING,
  request STRING,
  referer STRING,
  agent STRING
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'
WITH SERDEPROPERTIES (
  "input.regex" = "([^ ]*) ([^ ]*) ([^ ]*) \\[(.*)\\] \"(.*)\" (-|[0-9]*) (-|[0-9]*)"
)
STORED AS TEXTFILE;
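To see what the input.regex pattern extracts from each row, here is a minimal Python sketch that applies the same pattern to log lines. Assumptions: Python's re behaves like java.util.regex for this pattern (it uses only character classes, groups and greedy .*), and RegexSerDe requires the whole row to match, so re.fullmatch stands in for Java's Matcher.matches(); non-matching rows are modeled as NULL in every column. The brackets and quotes sit outside the capture groups, which is how the [] and "" get filtered out. Note that groups 6 and 7 actually hold the HTTP status code and the response size, even though the table calls those columns referer and agent. LOG_PATTERN, COLUMNS and parse_log_line are names made up for this sketch.

```python
import re

# Same pattern as "input.regex", with the HiveQL string escapes removed:
# \\[ becomes \[ and \" becomes ".
LOG_PATTERN = re.compile(
    r'([^ ]*) ([^ ]*) ([^ ]*) \[(.*)\] "(.*)" (-|[0-9]*) (-|[0-9]*)'
)
COLUMNS = ["host", "identity", "t_user", "time", "request", "referer", "agent"]


def parse_log_line(line):
    """Map one log line to the logtbl columns.

    fullmatch mirrors RegexSerDe, which needs the whole row to match;
    a non-matching row yields None (NULL) for every column.
    """
    m = LOG_PATTERN.fullmatch(line)
    if m is None:
        return dict.fromkeys(COLUMNS)
    return dict(zip(COLUMNS, m.groups()))


line = '192.168.57.4 - - [29/Feb/2019:18:14:35 +0800] "GET /bg-upper.png HTTP/1.1" 304 -'
print(parse_log_line(line))
```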

Load the data

load data local inpath '/root/data/log' into table logtbl;

Query

select * from logtbl;

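II: HiveServer2

1: HiveServer2

HiveServer2 is the server-side interface that lets clients connect to Hive remotely and retrieve data; for remote access, HiveServer2 must be running. Its current implementation is based on Thrift RPC. It is an enhanced version of HiveServer that, among other things, supports multi-client concurrency and authentication. From the user's point of view, HiveServer2 is the server side.

HiveServer is an optional service that allows a remote client to submit queries.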

2: Startup

1) Server

node03

hive --service hiveserver2
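HiveServer2 can take a while after launch before it starts listening, so connecting from beeline too early fails. A quick way to check from node04 whether node03 is accepting connections on port 10000 (the default of hive.server2.thrift.port) is a plain TCP probe, sketched below in Python. port_is_open is a made-up helper; it only shows that something is listening and does not perform the Thrift/JDBC handshake that beeline does.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within timeout.

    Only proves that something is listening; no Thrift/JDBC handshake.
    """
    try:
        # create_connection resolves the host name and tries each address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


# Example, run on node04 once HiveServer2 has been started on node03:
#   print(port_is_open("node03", 10000))
```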

2) Client

node04

beeline

# Connect to the server (beeline then prompts for the user name and password)
!connect jdbc:hive2://node03:10000/default

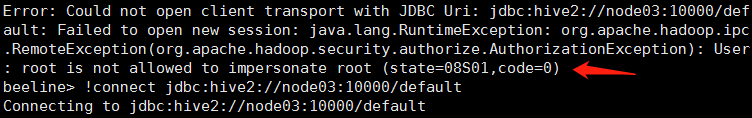

Or connect in one step: beeline -u jdbc:hive2://node03:10000/default -n root -p 123456

The connection fails with an error. To fix it:

node01:

cd /opt/bigdata/hadoop-2.6.5/etc/hadoop

vi core-site.xml and add the following properties (inside the <configuration> element):

<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
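These two properties let the superuser root impersonate users in any group, connecting from any host. HiveServer2 needs this because, by default (hive.server2.enable.doAs=true), it runs each query as the connecting user; a missing proxy-user entry is a common cause of this kind of connection error. Before copying the file to the other nodes, one could sanity-check it with a small Python script like the sketch below. read_hadoop_props and root_proxyuser_ok are made-up helper names, and the check covers only the two properties added here.

```python
import xml.etree.ElementTree as ET


def read_hadoop_props(xml_text):
    """Parse a Hadoop *-site.xml document (str or bytes) into {name: value}."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def root_proxyuser_ok(props):
    """True if root may impersonate users of any group from any host ('*')."""
    return (props.get("hadoop.proxyuser.root.hosts") == "*"
            and props.get("hadoop.proxyuser.root.groups") == "*")


# Example, run in /opt/bigdata/hadoop-2.6.5/etc/hadoop:
#   with open("core-site.xml", "rb") as f:
#       print(root_proxyuser_ok(read_hadoop_props(f.read())))
```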

Copy it to the other servers (into the same directory):

scp core-site.xml node02:$(pwd)

scp core-site.xml node03:$(pwd)

scp core-site.xml node04:$(pwd)

Reload the proxy-user settings online on the NameNodes node01 and node02 (no restart needed):

node01: hdfs dfsadmin -fs hdfs://node01:8020 -refreshSuperUserGroupsConfiguration

node02: hdfs dfsadmin -fs hdfs://node02:8020 -refreshSuperUserGroupsConfiguration

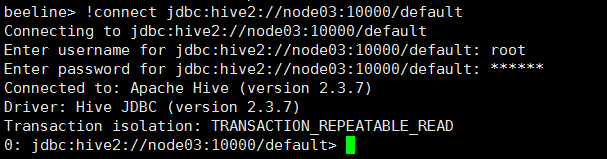

Connecting again with !connect jdbc:hive2://node03:10000/default root 123456 now succeeds:

3) Common commands

4) beeline

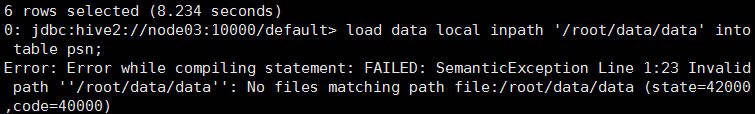

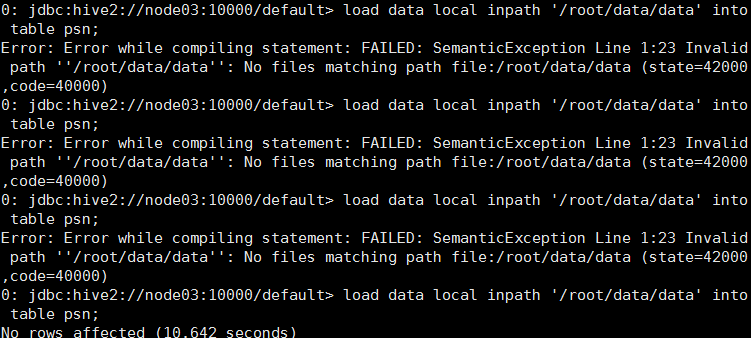

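In this setup, the beeline client is generally used only for queries; insert, delete and update operations fail with a file-path error, for example:

load data local inpath '/root/data/data' into table psn;

This is because LOCAL in LOAD DATA LOCAL INPATH refers to the filesystem of the HiveServer2 host, not the machine running beeline. To make it work, the file must be placed on the server (node03); even then the load still fails, this time with a write-permission error.

So when users only need to run queries, use beeline; the server exposes port 10000.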