环境:

hadoop: 3.0.0-cdh6.3.2

背景:

刚把CDH的报警接入到钉钉群,不接不知道,一接吓一跳,时不时蹦出一个NameNode RPC延迟报警惊扰到大家,所以决定一定要把延迟的元凶揪出来。

问题定位:

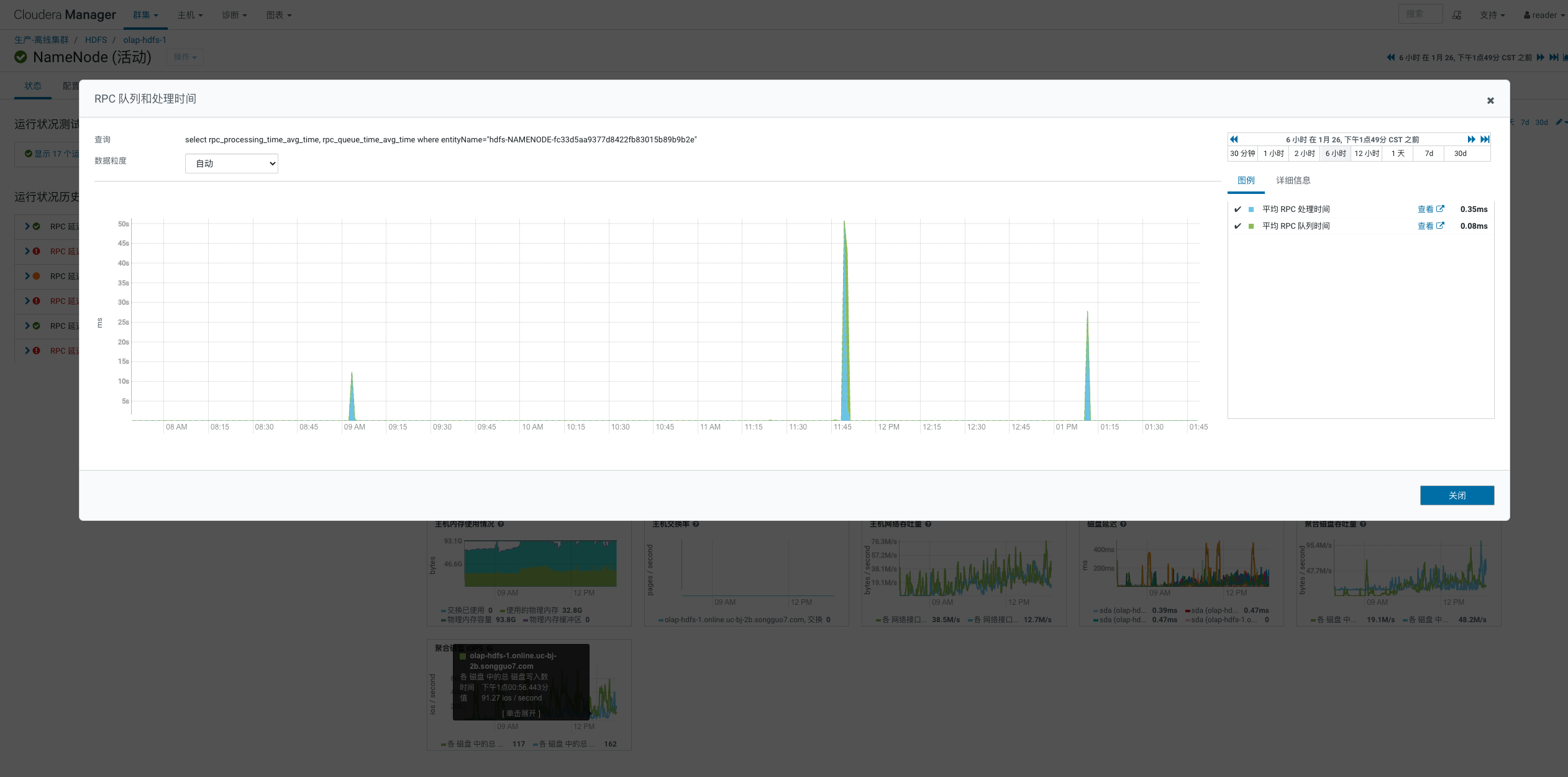

- NameNode RPC延迟

- 可以看到上面3段时间的延迟情况,查看Active NameNode 对应时间的日志:

2021-01-26 09:02:39,014 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Roll Edit Log from 10.xx.xx.197 2021-01-26 09:02:39,014 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Rolling edit logs 2021-01-26 09:02:39,014 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Ending log segment 1252312052, 1252312467 2021-01-26 09:02:39,949 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 420 Total time for transactions(ms): 21 Number of t ransactions batched in Syncs: 32 Number of syncs: 388 SyncTimes(ms): 445 15210 2021-01-26 09:02:39,954 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Finalizing edits file /data/dfs/nn/current/edits_inprogress_000000 0001252312052 -> /data/dfs/nn/current/edits_0000000001252312052-0000000001252312471 2021-01-26 09:02:39,955 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Starting log segment at 1252312472 2021-01-26 09:02:40,672 INFO org.apache.sentry.hdfs.SentryAuthorizationInfo: Received updates from Sentry Server. Size of PathUpdates 1 PermUpdates 0 2021-01-26 09:02:40,672 INFO org.apache.sentry.hdfs.UpdateableAuthzPaths: Applying Path update. Adding path for authz object cloudera_manager_metastore_ca nary_test_db_hive_hivemetastore_140d02447c5fd9c8e3f975f0bc0f78e5.cm_test_table authz path [[user, hue, .cloudera_manager_hive_metastore_canary, hive_HIVEM ETASTORE_140d02447c5fd9c8e3f975f0bc0f78e5, cm_test_table, p1=p0, p2=420]] 2021-01-26 09:03:05,679 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: FSNamesystem write lock held for 26663 ms via java.lang.Thread.getStackTrace(Thread.java:1559) org.apache.hadoop.util.StringUtils.getStackTrace(StringUtils.java:1032) org.apache.hadoop.hdfs.server.namenode.FSNamesystemLock.writeUnlock(FSNamesystemLock.java:263) org.apache.hadoop.hdfs.server.namenode.FSNamesystem.writeUnlock(FSNamesystem.java:1604) org.apache.hadoop.hdfs.server.namenode.FSNamesystem.rollEditLog(FSNamesystem.java:4606) org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.rollEditLog(NameNodeRpcServer.java:1276) org.apache.hadoop.hdfs.protocolPB.NamenodeProtocolServerSideTranslatorPB.rollEditLog(NamenodeProtocolServerSideTranslatorPB.java:146) org.apache.hadoop.hdfs.protocol.proto.NamenodeProtocolProtos$NamenodeProtocolService$2.callBlockingMethod(NamenodeProtocolProtos.java:12974) org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523) org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991) org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869) org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815) java.security.AccessController.doPrivileged(Native Method) javax.security.auth.Subject.doAs(Subject.java:422) org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)

Number of suppressed write-lock reports: 0Longest write-lock held interval: 266632021-01-26 09:03:05,683 WARN org.apache.hadoop.ipc.Server: IPC Server handler 44 on 8022, call Call#25095 Retry#0 org.apache.hadoop.hdfs.server.protocol.N

amenodeProtocol.rollEditLog from 10.xx.xx.197:44732: output error

2021-01-26 09:03:05,684 INFO org.apache.hadoop.ipc.Server: IPC Server handler 44 on 8022 caught an exception

java.nio.channels.ClosedChannelException

at sun.nio.ch.SocketChannelImpl.ensureWriteOpen(SocketChannelImpl.java:270)at sun.nio.ch.SocketChannelImpl.write(SocketChannelImpl.java:461)at org.apache.hadoop.ipc.Server.channelWrite(Server.java:3242)at org.apache.hadoop.ipc.Server.access$1700(Server.java:137)at org.apache.hadoop.ipc.Server$Responder.processResponse(Server.java:1466)at org.apache.hadoop.ipc.Server$Responder.doRespond(Server.java:1536)at org.apache.hadoop.ipc.Server$Connection.sendResponse(Server.java:2586)at org.apache.hadoop.ipc.Server$Connection.access$300(Server.java:1608)at org.apache.hadoop.ipc.Server$RpcCall.doResponse(Server.java:933)at org.apache.hadoop.ipc.Server$Call.sendResponse(Server.java:767)at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:878)at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)

- 再来看看StandBy NameNode 的日志:

2021-01-26 09:02:39,011 INFO org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Triggering log roll on remote NameNode

2021-01-26 09:02:40,201 INFO org.apache.sentry.hdfs.SentryAuthorizationInfo: Received updates from Sentry Server. Size of PathUpdates 1 PermUpdates 0

2021-01-26 09:02:40,201 INFO org.apache.sentry.hdfs.UpdateableAuthzPaths: Applying Path update. Adding path for authz object cloudera_manager_metastore_ca

nary_test_db_hive_hivemetastore_140d02447c5fd9c8e3f975f0bc0f78e5.cm_test_table authz path [[user, hue, .cloudera_manager_hive_metastore_canary, hive_HIVEM

ETASTORE_140d02447c5fd9c8e3f975f0bc0f78e5, cm_test_table, p1=p0, p2=420]]

2021-01-26 09:02:59,035 WARN org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Exception from remote name node RemoteNameNodeInfo [nnId=namenode106

, ipcAddress=olap-hdfs-1.online.xx.com/10.xx.xx.11:8022, httpAddress=http://olap-hdfs-1.online.xx.com:9870], try next.

java.net.SocketTimeoutException: Call From olap-hdfs-2.online.xx.com/10.xx.xx.197 to olap-hdfs-1.online.xx.com:8022 failed

on socket timeout exception: java.net.SocketTimeoutException: 20000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels

.SocketChannel[connected local=/10.xx.xx.197:44732 remote=olap-hdfs-1.online.xx.com/10.xx.xx.11:8022]; For more details see: http://wi

ki.apache.org/hadoop/SocketTimeout

at sun.reflect.GeneratedConstructorAccessor23.newInstance(Unknown Source)at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)at java.lang.reflect.Constructor.newInstance(Constructor.java:423)at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:824)at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:774)at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1503)at org.apache.hadoop.ipc.Client.call(Client.java:1445)at org.apache.hadoop.ipc.Client.call(Client.java:1355)at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)at com.sun.proxy.$Proxy23.rollEditLog(Unknown Source)at org.apache.hadoop.hdfs.protocolPB.NamenodeProtocolTranslatorPB.rollEditLog(NamenodeProtocolTranslatorPB.java:150)at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$2.doWork(EditLogTailer.java:365)at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$2.doWork(EditLogTailer.java:362)at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$MultipleNameNodeProxy.call(EditLogTailer.java:504)at java.util.concurrent.FutureTask.run(FutureTask.java:266)at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)at java.lang.Thread.run(Thread.java:748)Caused by: java.net.SocketTimeoutException: 20000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.xx.xx.197:44732 remote=olap-hdfs-1.online.xx.com/10.xx.xx.11:8022]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)at java.io.FilterInputStream.read(FilterInputStream.java:133)at java.io.BufferedInputStream.fill(BufferedInputStream.java:246)at java.io.BufferedInputStream.read(BufferedInputStream.java:265)at java.io.FilterInputStream.read(FilterInputStream.java:83)at java.io.FilterInputStream.read(FilterInputStream.java:83)at org.apache.hadoop.ipc.Client$Connection$PingInputStream.read(Client.java:554)at java.io.DataInputStream.readInt(DataInputStream.java:387)at org.apache.hadoop.ipc.Client$IpcStreams.readResponse(Client.java:1804)at org.apache.hadoop.ipc.Client$Connection.receiveRpcResponse(Client.java:1166)at org.apache.hadoop.ipc.Client$Connection.run(Client.java:1062)

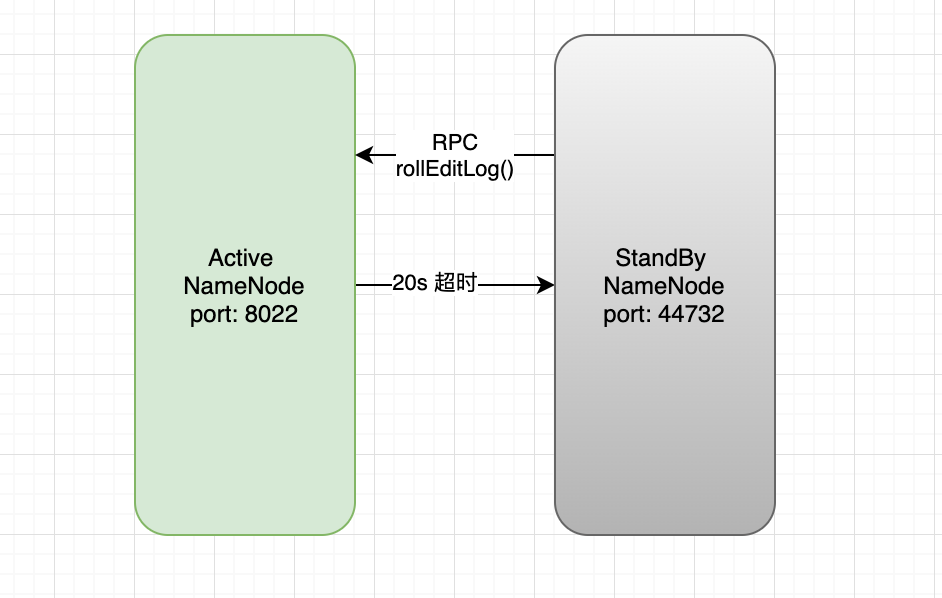

- 分析日志 StandBy NameNode 触发了 Active NameNode 的edit log 的日志滚动,让我们看看源码:

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer

/*** Trigger the active node to roll its logs.*/@VisibleForTestingvoid triggerActiveLogRoll() {LOG.info("Triggering log roll on remote NameNode");Future<Void> future = null;try {//利用线程池提交getNameNodeProxy(),接下来看下此方法future = rollEditsRpcExecutor.submit(getNameNodeProxy());future.get(rollEditsTimeoutMs, TimeUnit.MILLISECONDS);lastRollTriggerTxId = lastLoadedTxnId;} catch (ExecutionException e) {Throwable cause = e.getCause();if (cause instanceof RemoteException) {IOException ioe = ((RemoteException) cause).unwrapRemoteException();if (ioe instanceof StandbyException) {LOG.info("Skipping log roll. Remote node is not in Active state: " +ioe.getMessage().split("\n")[0]);return;}}LOG.warn("Unable to trigger a roll of the active NN", e);} catch (TimeoutException e) {if (future != null) {future.cancel(true);}LOG.warn(String.format("Unable to finish rolling edits in %d ms", rollEditsTimeoutMs));} catch (InterruptedException e) {LOG.warn("Unable to trigger a roll of the active NN", e);}}/*** NameNodeProxy factory method.* @return a Callable to roll logs on remote NameNode.*/@VisibleForTestingCallable<Void> getNameNodeProxy() {return new MultipleNameNodeProxy<Void>() {@Overrideprotected Void doWork() throws IOException {//利用Active NameNode的代理远程调用rollEditLog()方法cachedActiveProxy.rollEditLog();return null;}};}

它首先会获得Active NameNode的代理,并调用其rollEditLog()方法滚动编辑日志,然后将上次StandBy NameNode加载的最高事务ID,即lastLoadedTxnId,赋值给上次编辑日志滚动开始时的最新事务ID,即lastRollTriggerTxId,这么做是为了方便进行日志回滚以及逻辑判断。

- 画个图理清下调用关系:

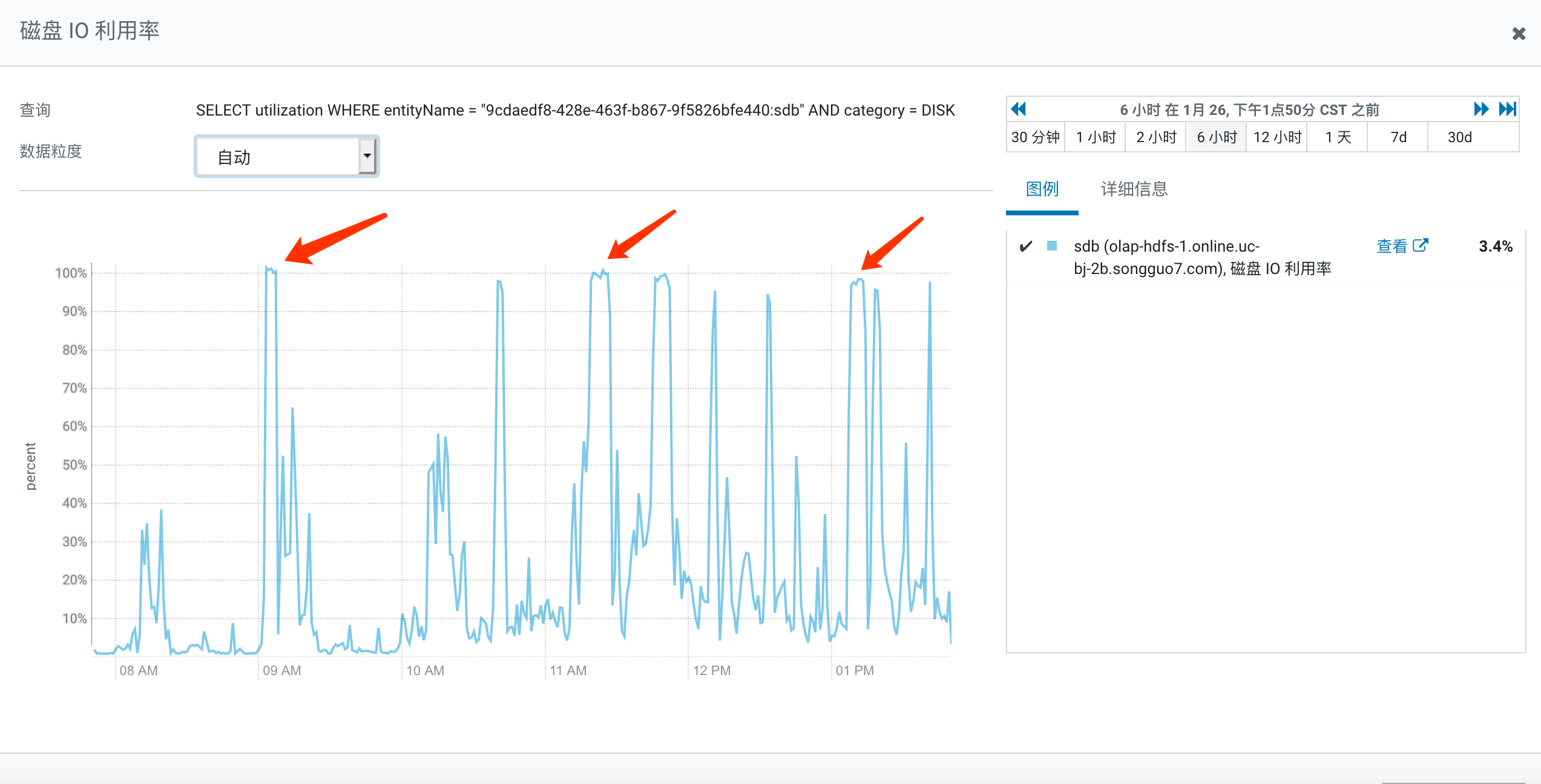

- 接下来我们只需要关心Active NameNode 在执行rollEditLog() 方法为什么会发生超时即可,首先查看了机器负载/CPU/内存/服务GC/网络IO均表示很无辜,因为NameNode的 dfs.namenode.name.dir =/data/dfs/nn; 所以我们看下/data所属磁盘的IO利用率,喔呵果然这里有猫腻,发现RPC延迟的时间都能和util 100%对上;

解决方式:

将NameNode的 dfs.namenode.name.dir 的目录放到一个IO利用率较正常的磁盘上;

后续思考:

因为作者的集群是混合部署,存在资源竞争的问题,所以在这里也建议大家生产环境的混部一定要谨慎,如果非混部不可的话,也一定要梳理好对磁盘的依赖,不要像作者一样将一块磁盘供各个组件使用;

参考文档:

https://www.cnblogs.com/RunnyHao/articles/5400729.html HDFS 的 RPC 机制

https://blog.csdn.net/dengxing1234/article/details/60956951 Hadoop-2.X HA模式下的FSImage和EditsLog合并过程

https://www.cnblogs.com/jirimutu01/p/5556232.html HDFS源码分析之EditLogTailer

https://developer.ibm.com/zh/articles/os-cn-hadoop-name-node/ Hadoop NameNode 高可用 (High Availability) 实现解析