Environment:

Hadoop 3.1.1.3.0.1.0-187

Spark 2.x (Spark on YARN)

Background:

In the test environment, a job submitted with spark-submit failed with an error in its logs. The submit command was:

spark-submit --master yarn --deploy-mode cluster --executor-memory 512m --executor-cores 1 --keytab /data/ec_project/dts-executor/keytab/fasdfkhjas.keytab --principal fasdugfasyu --queue fkasjhdfhjka --name di-datacheck-20220127-616-7809 --class com.kkk.dam.spark.job.quality.QualityCheckJob --conf spark.dynamicAllocation.enabled=true --conf spark.shuffle.service.enabled=true --conf spark.speculation=true --conf spark.hadoop.mapreduce.input.fileinputformat.input.dir.recursive=true --conf spark.hive.mapred.supports.subdirectories=true /data/ec_project/dts-executor/plugins/dam-plugin/dataQualityCheck/lib/spark-job-package-1.0-SNAPSHOT.jar plan.param==xxxppp== job.origin.instance.id==7809 job.instance.id==7809 job.id==0 es.nodes==dam-es1.db.sdns.xxx.cloud:19201,dam-es2.db.sdns.xxx.cloud:19201,dam-es3.db.sdns.xxx.cloud:19201

The YARN application log was as follows:

Application application_1643285672038_0001 failed 2 times due to AM Container for appattempt_1643285672038_0001_000002 exited with exitCode: 1
Failing this attempt.Diagnostics: [2022-01-27 20:17:43.382]Exception from container-launch.
Container id: container_e47_1643285672038_0001_02_000001
Exit code: 1
Exception message: Launch container failed
Shell output: main : command provided 1
main : run as user is C708AFFE5644909F5906693E8F7F08EF
main : requested yarn user is C708AFFE5644909F5906693E8F7F08EF
Getting exit code file...
Creating script paths...
Writing pid file...
Writing to tmp file /data/hadoop/yarn/local/nmPrivate/application_1643285672038_0001/container_e47_1643285672038_0001_02_000001/container_e47_1643285672038_0001_02_000001.pid.tmp
Writing to cgroup task files...
Creating local dirs...
Launching container...
Getting exit code file...
Creating script paths...
[2022-01-27 20:17:43.383]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
Last 4096 bytes of prelaunch.err :
Last 4096 bytes of stderr :
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data/hadoop/yarn/local/filecache/19/spark2-hdp-yarn-archive.tar.gz/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data/hadoop/yarn/local/filecache/19/spark2-hdp-yarn-archive.tar.gz/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console. Set system property 'log4j2.debug' to show Log4j2 internal initialization logging.
Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hive.shims.HadoopShims.getHadoopConfNames()Ljava/util/Map;
at org.apache.hadoop.hive.conf.HiveConf$ConfVars.<clinit>(HiveConf.java:368)
at org.apache.hadoop.hive.conf.HiveConf.<clinit>(HiveConf.java:105)
at org.apache.spark.deploy.security.HiveDelegationTokenProvider.hiveConf(HiveDelegationTokenProvider.scala:49)
at org.apache.spark.deploy.security.HiveDelegationTokenProvider.delegationTokensRequired(HiveDelegationTokenProvider.scala:72)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$obtainDelegationTokens$2.apply(HadoopDelegationTokenManager.scala:131)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$obtainDelegationTokens$2.apply(HadoopDelegationTokenManager.scala:130)
at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)
at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)
at scala.collection.Iterator$class.foreach(Iterator.scala:891)
at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)
at scala.collection.MapLike$DefaultValuesIterable.foreach(MapLike.scala:206)
at scala.collection.TraversableLike$class.flatMap(TraversableLike.scala:241)
at scala.collection.AbstractTraversable.flatMap(Traversable.scala:104)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager.obtainDelegationTokens(HadoopDelegationTokenManager.scala:130)
at org.apache.spark.deploy.yarn.security.YARNHadoopDelegationTokenManager.obtainDelegationTokens(YARNHadoopDelegationTokenManager.scala:59)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$4.run(AMCredentialRenewer.scala:168)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$4.run(AMCredentialRenewer.scala:165)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.obtainTokensAndScheduleRenewal(AMCredentialRenewer.scala:165)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.org$apache$spark$deploy$yarn$security$AMCredentialRenewer$startInternal(AMCredentialRenewer.scala:108)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$2.run(AMCredentialRenewer.scala:91)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$2.run(AMCredentialRenewer.scala:89)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.start(AMCredentialRenewer.scala:89)
at org.apache.spark.deploy.yarn.ApplicationMaster.<init>(ApplicationMaster.scala:113)
at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:803)
at org.apache.spark.deploy.yarn.ApplicationMaster.main(ApplicationMaster.scala)
[2022-01-27 20:17:43.384]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
Last 4096 bytes of prelaunch.err :
Last 4096 bytes of stderr :
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data/hadoop/yarn/local/filecache/19/spark2-hdp-yarn-archive.tar.gz/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data/hadoop/yarn/local/filecache/19/spark2-hdp-yarn-archive.tar.gz/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console. Set system property 'log4j2.debug' to show Log4j2 internal initialization logging.
Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hive.shims.HadoopShims.getHadoopConfNames()Ljava/util/Map;
at org.apache.hadoop.hive.conf.HiveConf$ConfVars.<clinit>(HiveConf.java:368)
at org.apache.hadoop.hive.conf.HiveConf.<clinit>(HiveConf.java:105)
at org.apache.spark.deploy.security.HiveDelegationTokenProvider.hiveConf(HiveDelegationTokenProvider.scala:49)
at org.apache.spark.deploy.security.HiveDelegationTokenProvider.delegationTokensRequired(HiveDelegationTokenProvider.scala:72)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$obtainDelegationTokens$2.apply(HadoopDelegationTokenManager.scala:131)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$obtainDelegationTokens$2.apply(HadoopDelegationTokenManager.scala:130)
at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)
at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)
at scala.collection.Iterator$class.foreach(Iterator.scala:891)
at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)
at scala.collection.MapLike$DefaultValuesIterable.foreach(MapLike.scala:206)
at scala.collection.TraversableLike$class.flatMap(TraversableLike.scala:241)
at scala.collection.AbstractTraversable.flatMap(Traversable.scala:104)
at org.apache.spark.deploy.security.HadoopDelegationTokenManager.obtainDelegationTokens(HadoopDelegationTokenManager.scala:130)
at org.apache.spark.deploy.yarn.security.YARNHadoopDelegationTokenManager.obtainDelegationTokens(YARNHadoopDelegationTokenManager.scala:59)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$4.run(AMCredentialRenewer.scala:168)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$4.run(AMCredentialRenewer.scala:165)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.obtainTokensAndScheduleRenewal(AMCredentialRenewer.scala:165)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.org$apache$spark$deploy$yarn$security$AMCredentialRenewer$startInternal(AMCredentialRenewer.scala:108)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$2.run(AMCredentialRenewer.scala:91)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer$$anon$2.run(AMCredentialRenewer.scala:89)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.spark.deploy.yarn.security.AMCredentialRenewer.start(AMCredentialRenewer.scala:89)
at org.apache.spark.deploy.yarn.ApplicationMaster.<init>(ApplicationMaster.scala:113)
at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:803)
at org.apache.spark.deploy.yarn.ApplicationMaster.main(ApplicationMaster.scala)
For more detailed output, check the application tracking page: http://kde-offline1.sdns.xx.cloud:8088/cluster/app/application_1643285672038_0001 Then click on links to logs of each attempt.
. Failing the application.

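Locating the problem:

A NoSuchMethodError usually points to a jar conflict, so I first searched the job's own jar for the classes named in the stack trace. None of them were there, which meant the classes were being loaded from jars provided by the cluster.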

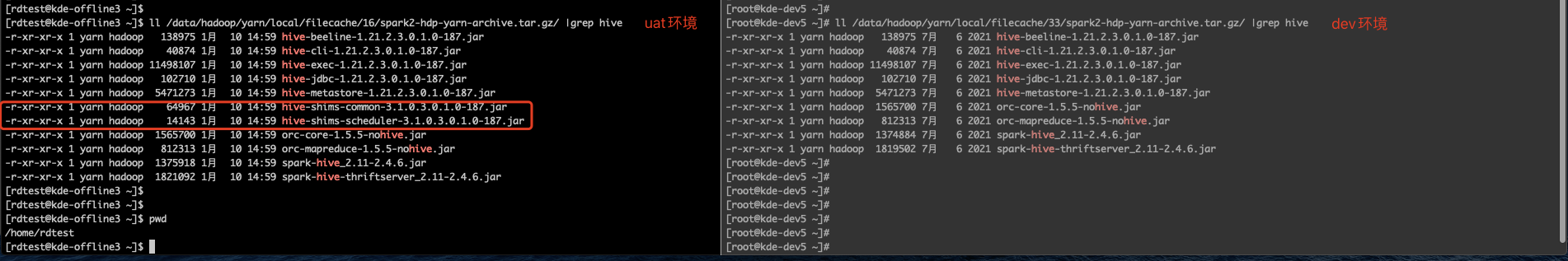
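The search described above can be scripted. The following is a minimal sketch, assuming `unzip` is installed; `jar_has_class` and `jars_with_class` are hypothetical helper names, not part of any Hadoop or Spark tooling.

```shell
# Hypothetical helper: succeed if the jar (a zip file) lists the given class.
# Usage: jar_has_class JAR FULLY.QUALIFIED.ClassName
jar_has_class() {
  # Convert org.example.Foo into the entry path org/example/Foo.class
  entry="$(printf '%s' "$2" | tr '.' '/').class"
  unzip -l "$1" 2>/dev/null | grep -qF "$entry"
}

# Hypothetical helper: print every jar directly under DIR that contains the class.
# Usage: jars_with_class DIR FULLY.QUALIFIED.ClassName
jars_with_class() {
  for jar in "$1"/*.jar; do
    [ -f "$jar" ] || continue
    if jar_has_class "$jar" "$2"; then
      printf '%s\n' "$jar"
    fi
  done
}
```

For this case, `jar_has_class spark-job-package-1.0-SNAPSHOT.jar org.apache.hadoop.hive.shims.HadoopShims` would be expected to fail, while running `jars_with_class` over a directory of cluster-provided jars should list the candidates worth comparing.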

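Because the job runs normally in the development environment, I compared the jars that the two clusters provide to Spark.

The comparison showed that the test environment has two extra jars, and their names made it clear that these two jars were the cause of the conflict.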
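A comparison like this can be done with `comm` on two sorted listings. This is a minimal sketch: `extra_jars` is a hypothetical helper, and it assumes each cluster's Spark jars have already been copied or extracted into a local directory.

```shell
# Hypothetical helper: print file names present in TEST_DIR but missing from DEV_DIR.
# Usage: extra_jars DEV_DIR TEST_DIR
extra_jars() {
  dev_list=$(mktemp) || return 1
  test_list=$(mktemp) || return 1
  ls "$1" | sort > "$dev_list"
  ls "$2" | sort > "$test_list"
  # comm -13 hides lines unique to the first file and lines common to both,
  # leaving only the names that exist in the second (test) listing.
  comm -13 "$dev_list" "$test_list"
  rm -f "$dev_list" "$test_list"
}
```

Swapping the two arguments shows jars that exist only in the development environment.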

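Solution:

First, work out where the two jars come from. The following explanation is taken from a blog post I consulted:

In an Ambari cluster, HDFS holds an archive at /hdp/apps/2.6.2.0-205/spark2/spark2-hdp-yarn-archive.tar.gz. After a Spark on YARN job is submitted, this file is downloaded to the local node. As far as I can tell, only a restart of the Spark History Server packages the jars under the local /usr/hdp/2.6.2.0-205/spark2/jars/ directory into spark2-hdp-yarn-archive.tar.gz and uploads it. To add new third-party jars, you can build and upload the archive by hand.

I pulled spark2-hdp-yarn-archive.tar.gz from HDFS to a local directory, removed the two extra jars, repacked the archive, and uploaded it back to HDFS. After that the problem was resolved.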
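The repack step can be sketched as below. `repack_without` is a hypothetical helper, the jar names and the `<hdp-version>` path segment are placeholders to fill in for your cluster, and the sketch assumes the jars sit at the root of the archive (as the filecache paths in the log suggest). Keep a backup of the original archive before overwriting it.

```shell
# Hypothetical helper: rebuild a .tar.gz archive without the named entries.
# Usage: repack_without IN.tar.gz OUT.tar.gz NAME...
repack_without() {
  src=$1
  dst=$2
  shift 2
  work=$(mktemp -d) || return 1
  tar -xzf "$src" -C "$work" || return 1
  for name in "$@"; do
    rm -f "$work/$name"
  done
  # Pack from inside the work directory so the jars stay at the archive root.
  tar -czf "$dst" -C "$work" . || return 1
  rm -rf "$work"
}

# Surrounding HDFS steps, shown as comments because paths and jar names are placeholders:
#   hdfs dfs -get /hdp/apps/<hdp-version>/spark2/spark2-hdp-yarn-archive.tar.gz original.tar.gz
#   repack_without original.tar.gz spark2-hdp-yarn-archive.tar.gz extra-1.jar extra-2.jar
#   hdfs dfs -put -f spark2-hdp-yarn-archive.tar.gz /hdp/apps/<hdp-version>/spark2/
```

Extracting and repacking is used here because tar cannot delete members from a compressed archive in place.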