- Initialize the servers

- Network issues & kernel upgrade

- Download kk

- Install the cluster

- Configuration file for installing k8s and KubeSphere

- Uninstall pluggable components after installation

- Delete a namespace

- One-stop deployment with KubeSphere

- Issue log

- check file permission: directory "/var/lib/etcd" exist, but the permission is "drwxr-xr-x". The recommended permission is "-rwx------" to prevent possible unprivileged access to the data.

- "Error listing backups in backup store" backupLocation=default controller=backup-sync error="rpc error: code = Unknown desc = RequestTimeTooSkewed: The difference between the request time and the server's time is too large

- References

Initialize the servers

    # Open the ports required by k8s
    firewall-cmd --permanent --add-port=6443/tcp
    firewall-cmd --permanent --add-port=2379/tcp
    firewall-cmd --permanent --add-port=2380/tcp
    firewall-cmd --permanent --add-port=10250/tcp
    firewall-cmd --permanent --add-port=10251/tcp
    firewall-cmd --permanent --add-port=10252/tcp
    firewall-cmd --permanent --add-port=30000-32767/tcp
    firewall-cmd --permanent --add-port=8472/udp
    firewall-cmd --permanent --add-port=443/tcp
    firewall-cmd --permanent --add-port=9099/tcp
    firewall-cmd --permanent --add-port=22/tcp
    firewall-cmd --permanent --add-port=179/tcp
    firewall-cmd --permanent --add-port=6666-6667/tcp
    firewall-cmd --permanent --add-port=68/tcp
    firewall-cmd --permanent --add-port=53/tcp
    firewall-cmd --permanent --add-port=67/tcp
    # Enable IP masquerading in firewalld: without it IP forwarding does not work and the DNS plugin fails
    firewall-cmd --add-masquerade --permanent
    firewall-cmd --reload
    # Disable SELinux
    sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent
    # Time synchronization
    systemctl start chronyd
    systemctl enable chronyd
    yum install ntpdate -y
    ntpdate time.windows.com
    # Disable swap
    swapoff -a
    sed -ri 's/.*swap.*/#&/' /etc/fstab
    # Let iptables see bridged traffic
    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    br_netfilter
    EOF
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sudo sysctl --system
    # Reboot
    # reboot
    # Install the required tools on every node
    yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git
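The port list above is easier to review and reuse when it lives in one variable. The following is a minimal sketch, not part of the original notes: the function name `print_firewall_cmds` and the variable `K8S_PORTS` are made up for illustration, and the function only prints the commands so they can be checked before anything touches the firewall.

```shell
#!/usr/bin/env bash
# Ports copied from the commands above; adjust them for your own CNI and services.
K8S_PORTS="6443/tcp 2379/tcp 2380/tcp 10250/tcp 10251/tcp 10252/tcp 30000-32767/tcp 8472/udp 443/tcp 9099/tcp 22/tcp 179/tcp 6666-6667/tcp 68/tcp 53/tcp 67/tcp"

# Print (do not run) one firewall-cmd line per port, then masquerade and reload.
print_firewall_cmds() {
  local port
  for port in $K8S_PORTS; do
    echo "firewall-cmd --permanent --add-port=${port}"
  done
  echo "firewall-cmd --add-masquerade --permanent"
  echo "firewall-cmd --reload"
}

print_firewall_cmds
```

Once the printed list looks right, it can be applied with `print_firewall_cmds | sudo bash`.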

Network issues & kernel upgrade

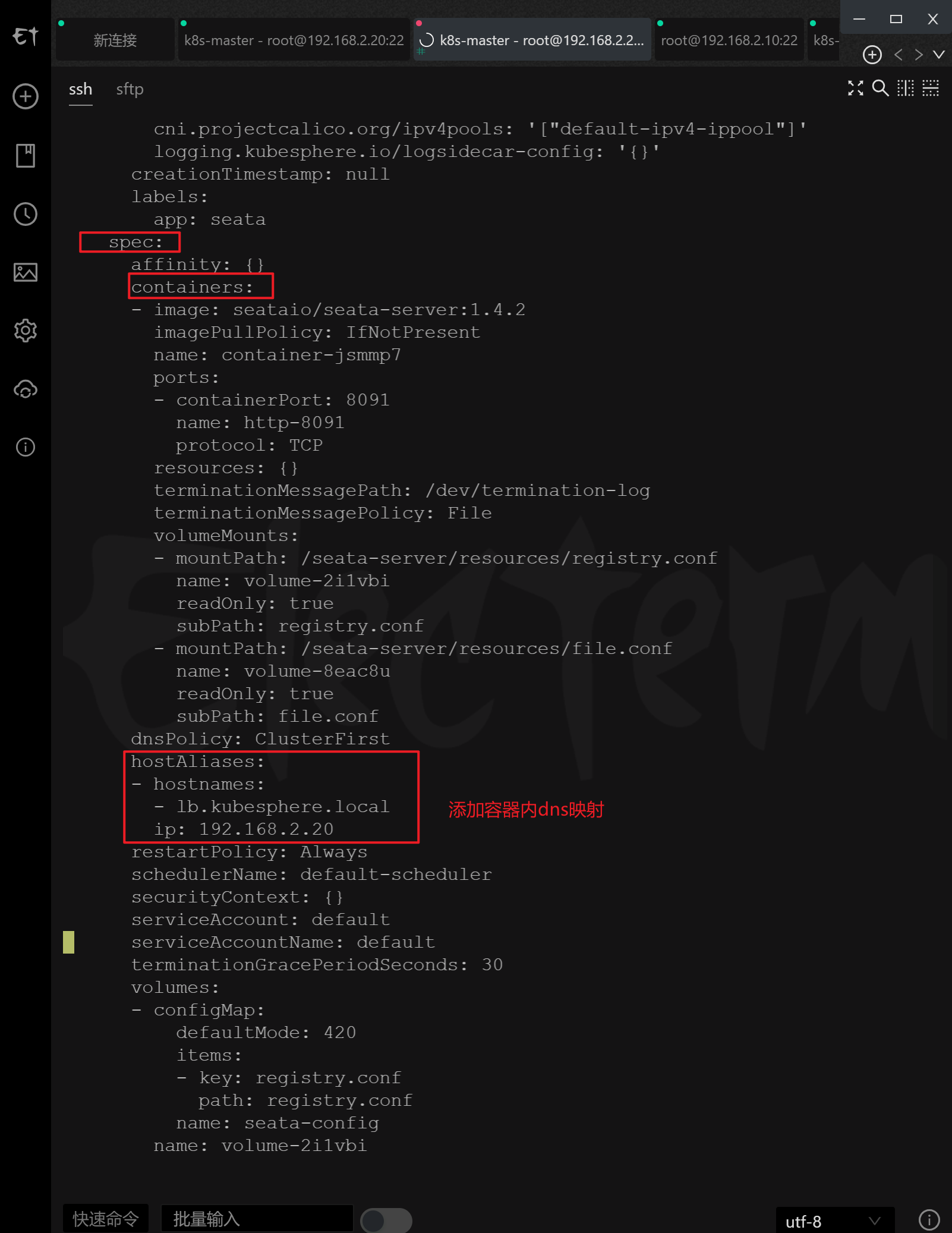

kubectl edit statefulset.apps/seata -n mall-xian

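Configure the DNS mapping inside the container

    hostAliases:
      - hostnames:
          - lb.kubesphere.local
        ip: 192.168.2.20

The service registry hands out internal IPs, and the local machine cannot reach the internal IPs of the k8s machines.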

Switch to ipvs: https://www.jianshu.com/p/31b161b99dc6

Why upgrade the kernel: https://blog.csdn.net/cljdsc/article/details/115701562

How to upgrade it: https://www.cnblogs.com/varden/p/15178853.html

Upgrade the kernel

    lsmod | grep ip_vs
    uname -r
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    systemctl stop kubelet
    systemctl stop etcd
    systemctl stop docker
    yum install -y https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm
    yum list available --disablerepo=* --enablerepo=elrepo-kernel
    yum install -y kernel-lt-5.4.189-1.el7.elrepo --enablerepo=elrepo-kernel
    cat /boot/grub2/grub.cfg | grep menuentry
    grub2-editenv list
    grub2-set-default "CentOS Linux (5.4.189-1.el7.elrepo.x86_64) 7 (Core)"
    grub2-editenv list
    reboot
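After the reboot it is worth confirming that the node really booted into the new kernel. The sketch below is an illustration only: `kernel_at_least` is a hypothetical helper, the 5.4 threshold is an assumption taken from the `kernel-lt-5.4.189` package above, and the comparison relies on GNU `sort -V`.

```shell
#!/usr/bin/env bash
# Return 0 when kernel release $2 is at least version $1.
kernel_at_least() {
  local min="$1"
  local cur="${2%%-*}"   # drop a suffix such as "-1.el7.elrepo.x86_64"
  # sort -V orders version strings; if the minimum sorts first, the kernel is new enough.
  [ "$(printf '%s\n%s\n' "$min" "$cur" | sort -V | head -n 1)" = "$min" ]
}

if kernel_at_least 5.4 "$(uname -r)"; then
  echo "kernel $(uname -r) is new enough"
else
  echo "kernel $(uname -r) is older than 5.4; check the grub default entry"
fi
```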

Download kk

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.0.0 sh -

chmod +x kk

Install the cluster

    ./kk create config --with-kubesphere v3.2.1 --with-kubernetes v1.21.5
    ./kk create cluster -f config-sample.yaml

Configuration file for installing k8s and KubeSphere

conf.yaml

    apiVersion: kubekey.kubesphere.io/v1alpha1
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: master, address: 192.168.2.20, internalAddress: 192.168.2.20, user: root, password: root}
      - {name: node1, address: 192.168.2.21, internalAddress: 192.168.2.21, user: root, password: root}
      - {name: node2, address: 192.168.2.22, internalAddress: 192.168.2.22, user: root, password: root}
      roleGroups:
        etcd:
        - master
        master:
        - master
        worker:
        - master
        - node1
        - node2
      controlPlaneEndpoint:
        ## Internal loadbalancer for apiservers
        internalLoadbalancer: haproxy
        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.20.4
        imageRepo: kubesphere
        clusterName: cluster.local
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
      registry:
        registryMirrors: []
        insecureRegistries: []
      addons: []
    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.1
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      etcd:
        monitoring: true
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        redis:
          enabled: false
        redisVolumSize: 2Gi
        openldap:
          enabled: false
        openldapVolumeSize: 2Gi
        minioVolumeSize: 20Gi
        monitoring:
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
        es:
          elasticsearchMasterVolumeSize: 4Gi
          elasticsearchDataVolumeSize: 20Gi
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true
        port: 30880
      # Alerting is a key part of observability, closely tied to monitoring and logging.
      # The alerting system in KubeSphere works with its Proactive Failure Notification
      # system so that users can follow activities of interest through alerting policies.
      alerting:
        enabled: true
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      # The auditing log keeps a chronological, security-related record of activities
      # tied to individual users, administrators, or other system components.
      auditing:
        enabled: true
      devops:
        enabled: true
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      # The events system tracks what happens inside the cluster, such as node scheduling
      # status and image pull results, and shows the reason, status, and message in the web console.
      events:
        enabled: true # by default, KubeKey installs the built-in Elasticsearch when events are enabled
        ruler:
          enabled: true
          replicas: 2
      logging:
        enabled: true # by default, KubeKey installs the built-in Elasticsearch when logging is enabled
        logsidecar:
          enabled: true
          replicas: 2
      # Supports the Horizontal Pod Autoscaler (HPA) for deployments.
      # In KubeSphere, Metrics Server controls whether HPA is enabled.
      metrics_server:
        enabled: true
      monitoring:
        storageClass: ""
        prometheusMemoryRequest: 400Mi
        prometheusVolumeSize: 20Gi
      multicluster:
        clusterRole: none
      network:
        networkpolicy: # network policies provide network isolation within the same cluster
          enabled: true
        ippool:
          type: calico # change "none" to "calico"
        # The service topology integrates Weave Scope (a visualization and monitoring tool
        # for Docker and Kubernetes). Weave Scope collects information through established
        # APIs and builds a topology of applications and containers. The topology is shown
        # in your project and visualizes the connections between services.
        topology:
          type: weave-scope # change "none" to "weave-scope"
      openpitrix: # a Helm-based app store for application lifecycle management
        store:
          enabled: true
      # The KubeSphere service mesh is based on Istio and visualizes microservice governance
      # and traffic management. It includes circuit breaking, blue-green deployment, canary
      # release, traffic mirroring, tracing, observability, and traffic control.
      servicemesh:
        enabled: true
      kubeedge: # KubeEdge is an open-source system that extends containerized application orchestration to hosts at the edge
        enabled: false
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress:
            - ""
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []
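The `kubePodsCIDR` and `kubeServiceCIDR` values in the Cluster spec must not overlap each other. A small sanity check can be sketched in bash as follows; the helper names `ip_to_int`, `cidr_bounds`, and `cidrs_overlap` are invented for this example and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address (as integers) of an IPv4 CIDR block.
cidr_bounds() {
  local ip="${1%/*}" bits="${1#*/}" base size first
  base=$(ip_to_int "$ip")
  size=$(( 1 << (32 - bits) ))
  first=$(( base & ~(size - 1) ))
  echo "$first $(( first + size - 1 ))"
}

# Return 0 when the two CIDR blocks share at least one address.
cidrs_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_bounds "$1")"
  read -r b_first b_last <<< "$(cidr_bounds "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

# Values from the config above.
if cidrs_overlap 10.233.64.0/18 10.233.0.0/18; then
  echo "pod and service CIDRs overlap"
else
  echo "pod and service CIDRs do not overlap"
fi
```

The same check can be run against the node subnet (192.168.2.0/24 in these notes) before the cluster is created.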

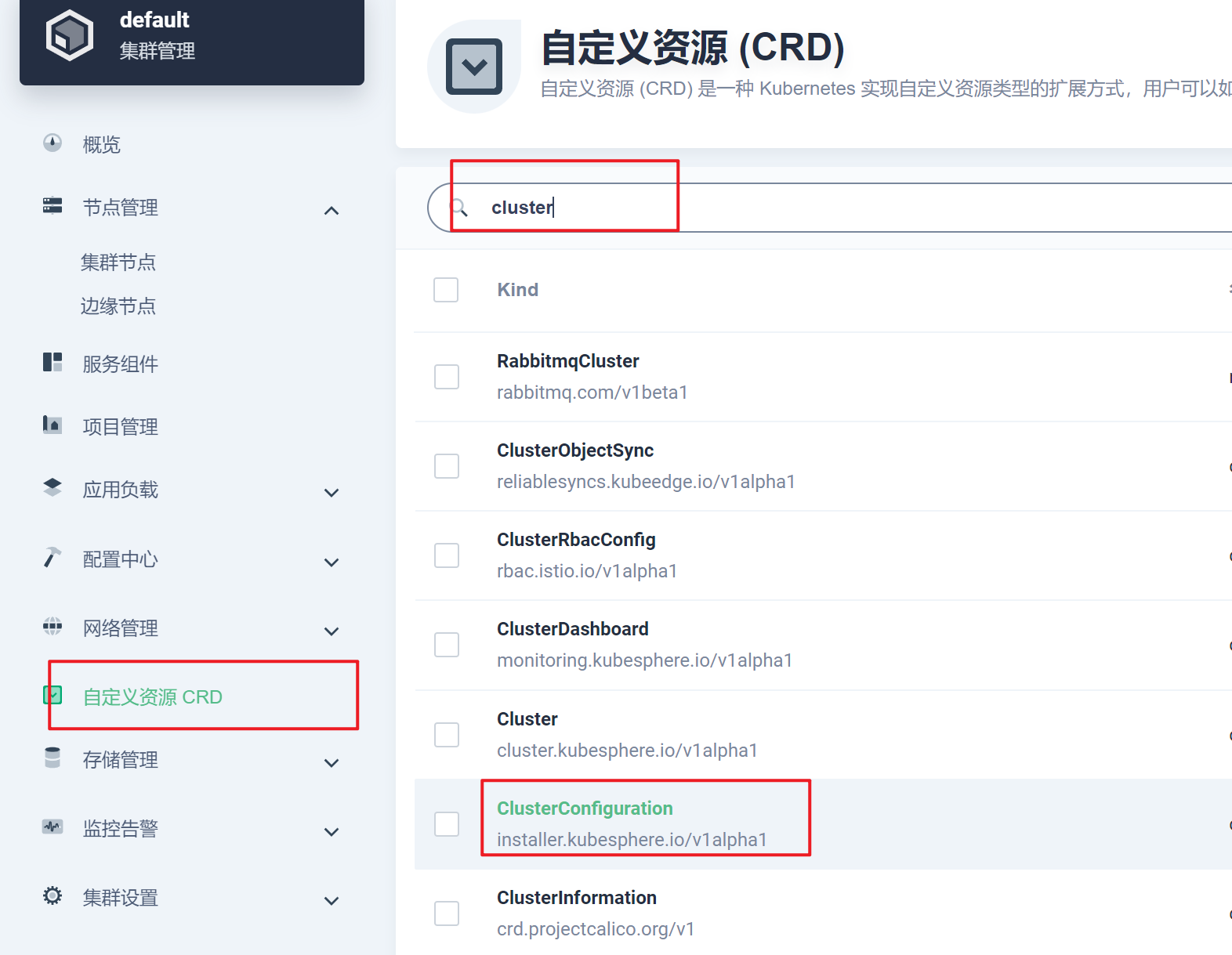

Uninstall pluggable components after installation

kubectl -n kubesphere-system edit clusterconfiguration ks-installer

Or do it through the web console

    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: >
          {"apiVersion":"installer.kubesphere.io/v1alpha1","kind":"ClusterConfiguration","metadata":{"annotations":{},"labels":{"version":"v3.1.1"},"name":"ks-installer","namespace":"kubesphere-system"},"spec":{"alerting":{"enabled":false},"auditing":{"enabled":false},"authentication":{"jwtSecret":""},"common":{"es":{"basicAuth":{"enabled":false,"password":"","username":""},"elasticsearchDataVolumeSize":"20Gi","elasticsearchMasterVolumeSize":"4Gi","elkPrefix":"logstash","externalElasticsearchPort":"","externalElasticsearchUrl":"","logMaxAge":7},"minioVolumeSize":"20Gi","monitoring":{"endpoint":"http://prometheus-operated.kubesphere-monitoring-system.svc:9090"},"openldap":{"enabled":false},"openldapVolumeSize":"2Gi","redis":{"enabled":false},"redisVolumSize":"2Gi"},"console":{"enableMultiLogin":true,"port":30880},"devops":{"enabled":false,"jenkinsJavaOpts_MaxRAM":"2g","jenkinsJavaOpts_Xms":"512m","jenkinsJavaOpts_Xmx":"512m","jenkinsMemoryLim":"2Gi","jenkinsMemoryReq":"1500Mi","jenkinsVolumeSize":"8Gi"},"etcd":{"endpointIps":"192.168.2.20","monitoring":false,"port":2379,"tlsEnable":true},"events":{"enabled":false,"ruler":{"enabled":true,"replicas":2}},"kubeedge":{"cloudCore":{"cloudHub":{"advertiseAddress":[""],"nodeLimit":"100"},"cloudhubHttpsPort":"10002","cloudhubPort":"10000","cloudhubQuicPort":"10001","cloudstreamPort":"10003","nodeSelector":{"node-role.kubernetes.io/worker":""},"service":{"cloudhubHttpsNodePort":"30002","cloudhubNodePort":"30000","cloudhubQuicNodePort":"30001","cloudstreamNodePort":"30003","tunnelNodePort":"30004"},"tolerations":[],"tunnelPort":"10004"},"edgeWatcher":{"edgeWatcherAgent":{"nodeSelector":{"node-role.kubernetes.io/worker":""},"tolerations":[]},"nodeSelector":{"node-role.kubernetes.io/worker":""},"tolerations":[]},"enabled":false},"logging":{"enabled":false,"logsidecar":{"enabled":true,"replicas":2}},"metrics_server":{"enabled":false},"monitoring":{"prometheusMemoryRequest":"400Mi","prometheusVolumeSize":"20Gi","storageClass":""},"multicluster":{"clusterRole":"none"},"network":{"ippool":{"type":"none"},"networkpolicy":{"enabled":false},"topology":{"type":"none"}},"openpitrix":{"store":{"enabled":false}},"persistence":{"storageClass":""},"servicemesh":{"enabled":false},"zone":"cn"}}
      labels:
        version: v3.1.1
      name: ks-installer
      namespace: kubesphere-system
    spec:
      alerting:
        enabled: false
      auditing:
        enabled: false
      authentication:
        jwtSecret: ''
      common:
        es:
          basicAuth:
            enabled: false
            password: ''
            username: ''
          elasticsearchDataVolumeSize: 20Gi
          elasticsearchMasterVolumeSize: 4Gi
          elkPrefix: logstash
          externalElasticsearchPort: ''
          externalElasticsearchUrl: ''
          logMaxAge: 7
        minioVolumeSize: 20Gi
        monitoring:
          endpoint: 'http://prometheus-operated.kubesphere-monitoring-system.svc:9090'
        openldap:
          enabled: false
        openldapVolumeSize: 2Gi
        redis:
          enabled: false
        redisVolumSize: 2Gi
      console:
        enableMultiLogin: true
        port: 30880
      devops:
        enabled: true
        jenkinsJavaOpts_MaxRAM: 2g
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
      etcd:
        endpointIps: 192.168.2.20
        monitoring: true
        port: 2379
        tlsEnable: true
      events:
        enabled: false
        ruler:
          enabled: false
          replicas: 2
      kubeedge:
        cloudCore:
          cloudHub:
            advertiseAddress:
              - ''
            nodeLimit: '100'
          cloudhubHttpsPort: '10002'
          cloudhubPort: '10000'
          cloudhubQuicPort: '10001'
          cloudstreamPort: '10003'
          nodeSelector:
            node-role.kubernetes.io/worker: ''
          service:
            cloudhubHttpsNodePort: '30002'
            cloudhubNodePort: '30000'
            cloudhubQuicNodePort: '30001'
            cloudstreamNodePort: '30003'
            tunnelNodePort: '30004'
          tolerations: []
          tunnelPort: '10004'
        edgeWatcher:
          edgeWatcherAgent:
            nodeSelector:
              node-role.kubernetes.io/worker: ''
            tolerations: []
          nodeSelector:
            node-role.kubernetes.io/worker: ''
          tolerations: []
        enabled: false
      logging:
        enabled: false
        logsidecar:
          enabled: false
          replicas: 3
      metrics_server:
        enabled: true
      monitoring:
        prometheusMemoryRequest: 400Mi
        prometheusVolumeSize: 20Gi
        storageClass: ''
      multicluster:
        clusterRole: none
      network:
        ippool:
          type: calico
        networkpolicy:
          enabled: true
        topology:
          type: weave-scope
      openpitrix:
        store:
          enabled: false
      persistence:
        storageClass: ''
      servicemesh:
        enabled: false
      zone: cn
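Instead of opening the whole object in an editor, a single switch can be flipped with a JSON merge patch. This is only a sketch: `component_patch` and `set_component` are hypothetical helper names, and the patch shape fits components whose `enabled` flag sits directly under `spec.<name>` (for example `devops`, `logging`, `events`), not nested ones such as `openpitrix.store`. Setting a component to `false` may not remove resources that are already installed, so check the KubeSphere uninstall notes for each component.

```shell
#!/usr/bin/env bash
# Build a JSON merge patch that sets spec.<component>.enabled.
component_patch() {
  local component="$1" enabled="$2"
  printf '{"spec":{"%s":{"enabled":%s}}}\n' "$component" "$enabled"
}

# Apply the patch to ks-installer (needs kubectl access to the cluster).
set_component() {
  kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
    --type merge -p "$(component_patch "$1" "$2")"
}

# Show the patch that would be sent for disabling devops.
component_patch devops false
```

With cluster access, `set_component devops false` sends that patch.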

Delete a namespace

    # 1. A namespace is normally deleted with:
    kubectl delete namespaces <YOUR-NAMESPACE-NAME>
    # If it then stays in Terminating:
    #**********************************
    # Note: in steps 2-5 the string after grep must be a known keyword related to the
    # namespace being deleted. These four steps are optional; you can go straight
    # from step 1 to step 6.
    # 2. Delete PVs in the Released state (PVs are not namespaced)
    kubectl get pv | grep "Released" | awk '{print $1}' | xargs kubectl delete pv
    # 3. Delete CRDs (this deletes every match, be careful!)
    #kubectl get crd | grep kubesphere | awk '{print $1}' | xargs kubectl delete crd
    # 4. Delete clusterroles (this deletes every match, be careful!)
    #kubectl get clusterrole | grep kubesphere | cut -d ' ' -f 1 | xargs kubectl delete clusterrole
    # 5. Delete clusterrolebindings (this deletes every match, be careful!)
    #kubectl get clusterrolebindings.rbac.authorization.k8s.io | grep kubesphere | cut -d ' ' -f 1 | xargs kubectl delete clusterrolebindings.rbac.authorization.k8s.io
    #**********************************
    # 6. Check which resources are left in the namespace
    kubectl get all -n <YOUR-NAMESPACE-NAME>
    # "No resources found" is the result you want
    # Now check the namespace status
    kubectl get ns
    # If it still shows Terminating:
    # 7. Force the deletion
    kubectl delete ns <YOUR-NAMESPACE-NAME> --grace-period=0 --force
    kubectl get ns
    # If it still shows Terminating
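One caveat for step 6: `kubectl get all` covers only a subset of resource types, so a namespace can look empty while custom resources are still holding it in Terminating. A minimal sketch for listing everything that is left, assuming `kubectl` is on the PATH (the function name `list_ns_leftovers` is made up here):

```shell
#!/usr/bin/env bash
# List every namespaced object still present in the given namespace.
list_ns_leftovers() {
  local ns="$1" kind
  # Ask the API server for all listable, namespaced resource types...
  kubectl api-resources --verbs=list --namespaced -o name |
    while read -r kind; do
      # ...and print whatever objects of that type remain.
      kubectl get "$kind" -n "$ns" --ignore-not-found -o name
    done
}

# Usage (needs cluster access):
#   list_ns_leftovers <YOUR-NAMESPACE-NAME>
```

Whatever this prints is a candidate for manual deletion before retrying step 7.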

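One-stop deployment with KubeSphere

Configure credentials

Configure the Maven repository address

- Log in to KubeSphere as admin

- Go to Cluster Management

- Go to Configurations

- Find ConfigMaps

- ks-devops-agent

- Edit this ConfigMap and add the Alibaba Cloud Maven mirror address

Remote private Maven repository: https://packages.aliyun.com/repos/2212269-snapshot-Sxd58p/packages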

    <?xml version="1.0" encoding="UTF-8"?>
    <settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
              xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
      <mirrors>
        <mirror>
          <id>mirror</id>
          <mirrorOf>central,jcenter,!rdc-releases,!rdc-snapshots</mirrorOf>
          <name>mirror</name>
          <url>https://maven.aliyun.com/nexus/content/groups/public</url>
        </mirror>
      </mirrors>
      <servers>
        <server>
          <id>rdc-releases</id>
          <username>624c4b6ad12a1c6958d94bcb</username>
          <password>zhZm4kFQFp_q</password>
        </server>
        <server>
          <id>rdc-snapshots</id>
          <username>624c4b6ad12a1c6958d94bcb</username>
          <password>zhZm4kFQFp_q</password>
        </server>
      </servers>
      <profiles>
        <profile>
          <id>rdc</id>
          <properties>
            <altReleaseDeploymentRepository>rdc-releases::default::https://packages.aliyun.com/maven/repository/2212269-release-QNwAAi/</altReleaseDeploymentRepository>
            <altSnapshotDeploymentRepository>rdc-snapshots::default::https://packages.aliyun.com/maven/repository/2212269-snapshot-Sxd58p/</altSnapshotDeploymentRepository>
          </properties>
          <repositories>
            <repository>
              <id>central</id>
              <url>https://maven.aliyun.com/nexus/content/groups/public</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>false</enabled></snapshots>
            </repository>
            <repository>
              <id>snapshots</id>
              <url>https://maven.aliyun.com/nexus/content/groups/public</url>
              <releases><enabled>false</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </repository>
            <repository>
              <id>rdc-releases</id>
              <url>https://packages.aliyun.com/maven/repository/2212269-release-QNwAAi/</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>false</enabled></snapshots>
            </repository>
            <repository>
              <id>rdc-snapshots</id>
              <url>https://packages.aliyun.com/maven/repository/2212269-snapshot-Sxd58p/</url>
              <releases><enabled>false</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </repository>
          </repositories>
          <pluginRepositories>
            <pluginRepository>
              <id>central</id>
              <url>https://maven.aliyun.com/nexus/content/groups/public</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>false</enabled></snapshots>
            </pluginRepository>
            <pluginRepository>
              <id>snapshots</id>
              <url>https://maven.aliyun.com/nexus/content/groups/public</url>
              <releases><enabled>false</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </pluginRepository>
            <pluginRepository>
              <id>rdc-releases</id>
              <url>https://packages.aliyun.com/maven/repository/2212269-release-QNwAAi/</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>false</enabled></snapshots>
            </pluginRepository>
            <pluginRepository>
              <id>rdc-snapshots</id>
              <url>https://packages.aliyun.com/maven/repository/2212269-snapshot-Sxd58p/</url>
              <releases><enabled>false</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </pluginRepository>
          </pluginRepositories>
        </profile>
      </profiles>
      <activeProfiles>
        <activeProfile>rdc</activeProfile>
      </activeProfiles>
    </settings>

Configure the Gitee account credential

Configure the Docker account credential

docker-hub-token

DOCKER_USERNAME

DOCKER_PASSWORD

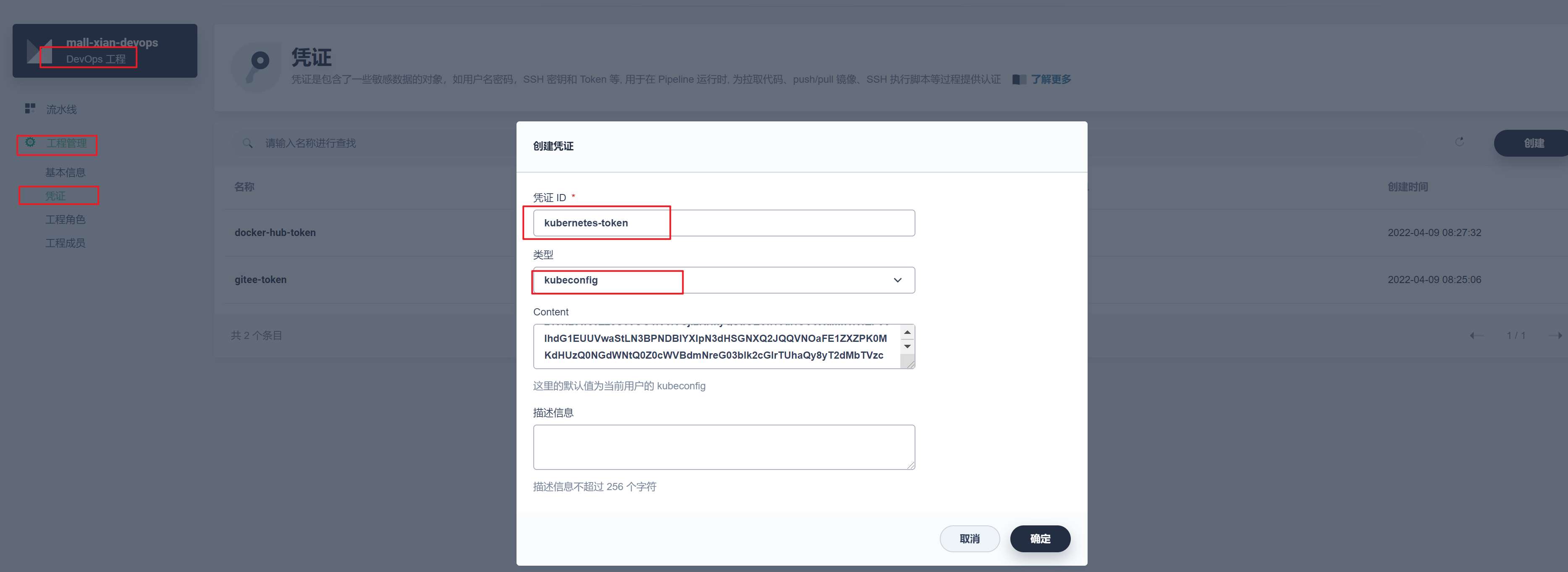

Configure the k8s credential

kubernetes-token

Package with Maven

    sh 'ls'
    sh 'mvn clean package -Dmaven.test.skip=true'
    sh 'ls hospital-manage/target'

Log in to Docker, build, and push

    sh 'docker build -f Dockerfile -t $DOCKERHUB_NAMESPACE/$APP_NAME:SNAPSHOT-$BRANCH_NAME-$BUILD_NUMBER .'
    withCredentials([usernamePassword(credentialsId : 'docker-hub-token' ,passwordVariable : 'DOCKER_PASSWORD' ,usernameVariable : 'DOCKER_USERNAME' ,)]) {
        sh 'echo "$DOCKER_PASSWORD" | docker login $REGISTRY -u "$DOCKER_USERNAME" --password-stdin'
        sh 'docker push $DOCKERHUB_NAMESPACE/$APP_NAME:SNAPSHOT-$BRANCH_NAME-$BUILD_NUMBER'
    }
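The image tag above embeds `$BRANCH_NAME` directly. Docker tags only allow letters, digits, `_`, `.`, and `-`, so a branch such as `feature/login` would produce an invalid tag. The sketch below shows one way to build a safe reference inside a pipeline `sh` step; `image_ref` and the sample values are hypothetical and not taken from the original pipeline.

```shell
#!/usr/bin/env bash
# Compose <namespace>/<app>:SNAPSHOT-<branch>-<build>, replacing characters
# that are not valid in a Docker tag with "-".
image_ref() {
  local namespace="$1" app="$2" branch="$3" build="$4"
  local safe_branch="${branch//[^A-Za-z0-9_.-]/-}"
  echo "${namespace}/${app}:SNAPSHOT-${safe_branch}-${build}"
}

# Sample values for illustration only.
image_ref myteam hospital-manage feature/login 42
```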

Back up and restore etcd

https://www.cnblogs.com/yuhaohao/p/13214515.html

    # Stop the services on every node
    systemctl stop kubelet && systemctl stop etcd
    cd /var/backups/kube_etcd
    rm -rf /var/lib/etcd
    etcdctl snapshot restore etcd-2022-04-06-21-30-01/snapshot.db --data-dir /var/lib/etcd
    # Start the services on every node
    systemctl start kubelet && systemctl start etcd
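The restore command above hard-codes one snapshot directory. Because the directory names carry a sortable timestamp (`etcd-2022-04-06-21-30-01`), the newest snapshot can be picked automatically. This is a sketch under that naming assumption; `latest_etcd_snapshot` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Print the path of the newest snapshot.db under the backup directory.
# Relies on directory names like etcd-YYYY-MM-DD-HH-MM-SS sorting chronologically.
latest_etcd_snapshot() {
  local backup_dir="${1:-/var/backups/kube_etcd}" newest
  newest="$(find "$backup_dir" -mindepth 1 -maxdepth 1 -type d -name 'etcd-*' 2>/dev/null | sort | tail -n 1)"
  [ -n "$newest" ] && [ -f "$newest/snapshot.db" ] && echo "$newest/snapshot.db"
}

# Usage on an etcd node:
#   snap="$(latest_etcd_snapshot)" && etcdctl snapshot restore "$snap" --data-dir /var/lib/etcd
```

A more cautious variant of the procedure would move `/var/lib/etcd` aside with `mv` rather than deleting it, so the old data can be put back if the restore fails.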

Set up MinIO file storage

    # Install docker
    yum install docker-ce -y && systemctl enable docker && service docker start
    # Create the mount directory
    mkdir -p /private/mnt/data
    # Run minio; the password must be at least 8 characters
    docker pull minio/minio
    firewall-cmd --permanent --add-port=9000-9001/tcp
    firewall-cmd --reload
    docker run -di -p 9000:9000 \
      -p 9001:9001 \
      --name minio2 --restart=always \
      -v /private/mnt/data:/data \
      -e "MINIO_ACCESS_KEY=minio" \
      -e "MINIO_SECRET_KEY=minio123" \
      minio/minio server /data \
      --console-address ":9001"

Create a bucket:
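Since MinIO refuses to start with a secret shorter than 8 characters, it can help to validate the values before running the container. A minimal sketch follows; `check_minio_credentials` is a hypothetical helper, and the 3-character minimum for the access key is stated here from memory of MinIO's rules, so treat it as an assumption. Newer MinIO releases also prefer `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD` over the two variables used above.

```shell
#!/usr/bin/env bash
# Validate credential lengths before starting the container.
check_minio_credentials() {
  local access_key="$1" secret_key="$2"
  if [ "${#access_key}" -lt 3 ]; then
    echo "access key must be at least 3 characters" >&2
    return 1
  fi
  if [ "${#secret_key}" -lt 8 ]; then
    echo "secret key must be at least 8 characters" >&2
    return 1
  fi
}

# The values used in these notes pass the check.
check_minio_credentials minio minio123 && echo "credentials look valid"
```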

backup

Install velero on every master node

It is used to sync the etcd data of each machine

    # Download velero
    wget https://github.com/vmware-tanzu/velero/releases/download/v1.7.2/velero-v1.7.2-linux-amd64.tar.gz
    kubectl delete namespace/velero clusterrolebinding/velero
    kubectl delete crds -l component=velero
    cd /root/velero-v1.5.2-linux-amd64
    \cp -rf velero /usr/local/bin/
    # Create the MinIO credentials
    cat > credentials-velero << EOF
    [default]
    aws_access_key_id = minio
    aws_secret_access_key = minio123
    EOF
    velero install \
      --provider aws \
      --plugins velero/velero-plugin-for-aws:v1.1.0 \
      --bucket backup \
      --use-restic \
      --secret-file ./credentials-velero \
      --use-volume-snapshots=false \
      --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://192.168.2.10:9000
    kubectl get all -n velero

    # The same steps with the velero v1.7.2 directory and AWS plugin v1.3.1
    kubectl delete namespace/velero clusterrolebinding/velero
    kubectl delete crds -l component=velero
    cd /root/velero-v1.7.2-linux-amd64
    \cp -rf velero /usr/local/bin/
    # Create the MinIO credentials
    cat > credentials-velero << EOF
    [default]
    aws_access_key_id = minio
    aws_secret_access_key = minio123
    EOF
    velero install \
      --provider aws \
      --plugins velero/velero-plugin-for-aws:v1.3.1 \
      --bucket backup \
      --use-restic \
      --secret-file ./credentials-velero \
      --use-volume-snapshots=false \
      --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://192.168.2.10:9000
    kubectl get all -n velero

References: https://blog.csdn.net/weixin_42143049/article/details/115757747

https://zhuanlan.zhihu.com/p/441954396

velero commands

    kubectl get backupstoragelocations.velero.io -n velero
    kubectl describe backupstoragelocations.velero.io -n velero
    velero get backup-locations
    velero backup get
    velero version
    velero backup -h
    velero backup logs
    velero backup logs back-test
    kubectl logs -f deploy/velero -n velero
    # Create a scheduled backup, every 6 hours
    velero schedule create <SCHEDULE_NAME> \
      --include-namespaces <NAMESPACE> \
      --include-resources='deployments.apps,replicasets.apps,deployments.extensions,replicasets.extensions,pods,Service,StatefulSet,Ingress,ConfigMap,Secret' \
      --schedule="@every 6h"
    # Set up a daily backup
    velero schedule create <SCHEDULE NAME> --schedule "0 7 * * *"
    # Create a backup from a schedule
    velero backup create --from-schedule example-schedule
    # Create a backup
    velero backup create backup-demo1 --include-namespaces test --default-volumes-to-restic --include-cluster-resources
    # *** Before restoring, stop kubelet, etcd, and docker, in that order, on every machine in the cluster ***
    systemctl stop kubelet
    systemctl stop etcd
    systemctl stop docker
    # Restore
    velero restore create --from-backup <BACKUP_NAME> --namespace-mappings <NAMESPACE>:bak-<NAMESPACE>
    # *** After restoring, start docker, etcd, and kubelet, in that order, on every machine in the cluster ***
    systemctl start docker
    systemctl start etcd
    systemctl start kubelet
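`velero backup create` returns before the backup has finished, so a restore attempted right away can pick up an incomplete backup. A minimal polling sketch, assuming velero runs in the `velero` namespace as installed above; the function name `wait_for_backup` and its retry defaults are made up for this example. It reads the `status.phase` field of the Backup custom resource.

```shell
#!/usr/bin/env bash
# Poll a velero Backup until it completes, fails, or the retries run out.
# Arguments: backup name, number of tries (default 30), delay in seconds (default 10).
wait_for_backup() {
  local name="$1" tries="${2:-30}" delay="${3:-10}" phase i
  for ((i = 0; i < tries; i++)); do
    phase="$(kubectl get backups.velero.io "$name" -n velero -o jsonpath='{.status.phase}')"
    case "$phase" in
      Completed)
        echo "backup $name completed"
        return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "backup $name ended in phase $phase" >&2
        return 1 ;;
    esac
    sleep "$delay"
  done
  echo "timed out waiting for backup $name" >&2
  return 1
}

# Usage (needs cluster access):
#   velero backup create backup-demo1 --include-namespaces test && wait_for_backup backup-demo1
```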

Start or stop all cluster nodes in batch

    # Stop the cluster
    nodes='master node1 node2'
    for node in ${nodes[@]}
    do
      echo "==== Stop docker on $node ===="
      ssh root@$node systemctl stop docker
    done

    # Start the cluster
    nodes='master node1 node2'
    for node in ${nodes[@]}
    do
      echo "==== Start docker on $node ===="
      ssh root@$node systemctl start docker
    done
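The two loops above only handle docker, while the restore notes stop kubelet, etcd, and docker in that order and start them in reverse. One possible generalization is sketched below; `cluster_ctl`, `NODES`, and `DRY_RUN` are names invented for this example, and running it for real assumes passwordless SSH as root. In this cluster etcd runs only on master, so the etcd step will report an error on the other nodes.

```shell
#!/usr/bin/env bash
NODES="master node1 node2"   # host names from the loops above

# Start or stop the cluster services on every node, in a safe order.
# With DRY_RUN=1 the ssh commands are printed instead of executed.
cluster_ctl() {
  local action="$1" services node svc
  case "$action" in
    stop)  services="kubelet etcd docker" ;;
    start) services="docker etcd kubelet" ;;
    *) echo "usage: cluster_ctl start|stop" >&2; return 2 ;;
  esac
  for node in $NODES; do
    for svc in $services; do
      if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "ssh root@$node systemctl $action $svc"
      else
        ssh "root@$node" systemctl "$action" "$svc"
      fi
    done
  done
}

# Preview what a full stop would run.
DRY_RUN=1 cluster_ctl stop
```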

Issue log

check file permission: directory "/var/lib/etcd" exist, but the permission is "drwxr-xr-x". The recommended permission is "-rwx------" to prevent possible unprivileged access to the data.

chmod -R 700 /var/lib/etcd
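To confirm the fix, or to apply it only when needed, the directory mode can be read back with `stat`. A small sketch, assuming GNU `stat` (the `-c '%a'` format); `dir_mode` and `ensure_mode_700` are hypothetical helper names, and unlike the recursive command above this touches only the directory itself.

```shell
#!/usr/bin/env bash
# Print the octal permission bits of a path, for example 755 or 700.
dir_mode() {
  stat -c '%a' "$1"
}

# Tighten the directory to 700 only if it is not already set that way.
ensure_mode_700() {
  local dir="$1"
  [ "$(dir_mode "$dir")" = "700" ] || chmod 700 "$dir"
}

# Usage on an etcd node:
#   ensure_mode_700 /var/lib/etcd && dir_mode /var/lib/etcd
```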

"Error listing backups in backup store" backupLocation=default controller=backup-sync error="rpc error: code = Unknown desc = RequestTimeTooSkewed: The difference between the request time and the server's time is too large

The clocks on the machines are out of sync.

# Time synchronization

systemctl start chronyd

systemctl enable chronyd

yum install ntpdate -y

ntpdate time.windows.com
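To see whether a node is still drifting after the sync, its clock can be compared with the machine that hosts MinIO. The sketch below is illustrative: the helper names are invented, the 900-second limit is an assumption based on the 15-minute tolerance that S3-compatible servers commonly apply to request timestamps, and the remote check assumes SSH access as root.

```shell
#!/usr/bin/env bash
MAX_SKEW=900   # assumption: 15-minute tolerance, in seconds

# Absolute difference between two epoch timestamps.
clock_skew() {
  local d=$(( $1 - $2 ))
  echo "${d#-}"
}

# Return 0 when the two timestamps are within MAX_SKEW seconds of each other.
skew_ok() {
  [ "$(clock_skew "$1" "$2")" -le "$MAX_SKEW" ]
}

# Compare the local clock with a remote node's clock over SSH.
check_node_clock() {
  local node="$1" remote_ts local_ts
  remote_ts="$(ssh "root@$node" date +%s)" || return 1
  local_ts="$(date +%s)"
  echo "$node skew: $(clock_skew "$local_ts" "$remote_ts")s"
  skew_ok "$local_ts" "$remote_ts"
}

# Usage: check_node_clock 192.168.2.10
```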

References

Installing KubeSphere on-premises: https://kubesphere.com.cn/docs/installing-on-kubernetes/on-prem-kubernetes/install-ks-on-linux-airgapped/

Enabling the logging system: https://kubesphere.com.cn/docs/pluggable-components/logging/

KubeSphere documentation (KubeKey README): https://github.com/kubesphere/kubekey/blob/master/README_zh-CN.md

k8s guide on network issues: https://kubernetes.feisky.xyz/troubleshooting/network