使用docker部署

docker run --name seata-server \-p 8091:8091 \-e SEATA_CONFIG_NAME=file:/root/seata-config/registry \-v /PATH/TO/CONFIG_FILE:/root/seata-config \seataio/seata-server:1.4.2

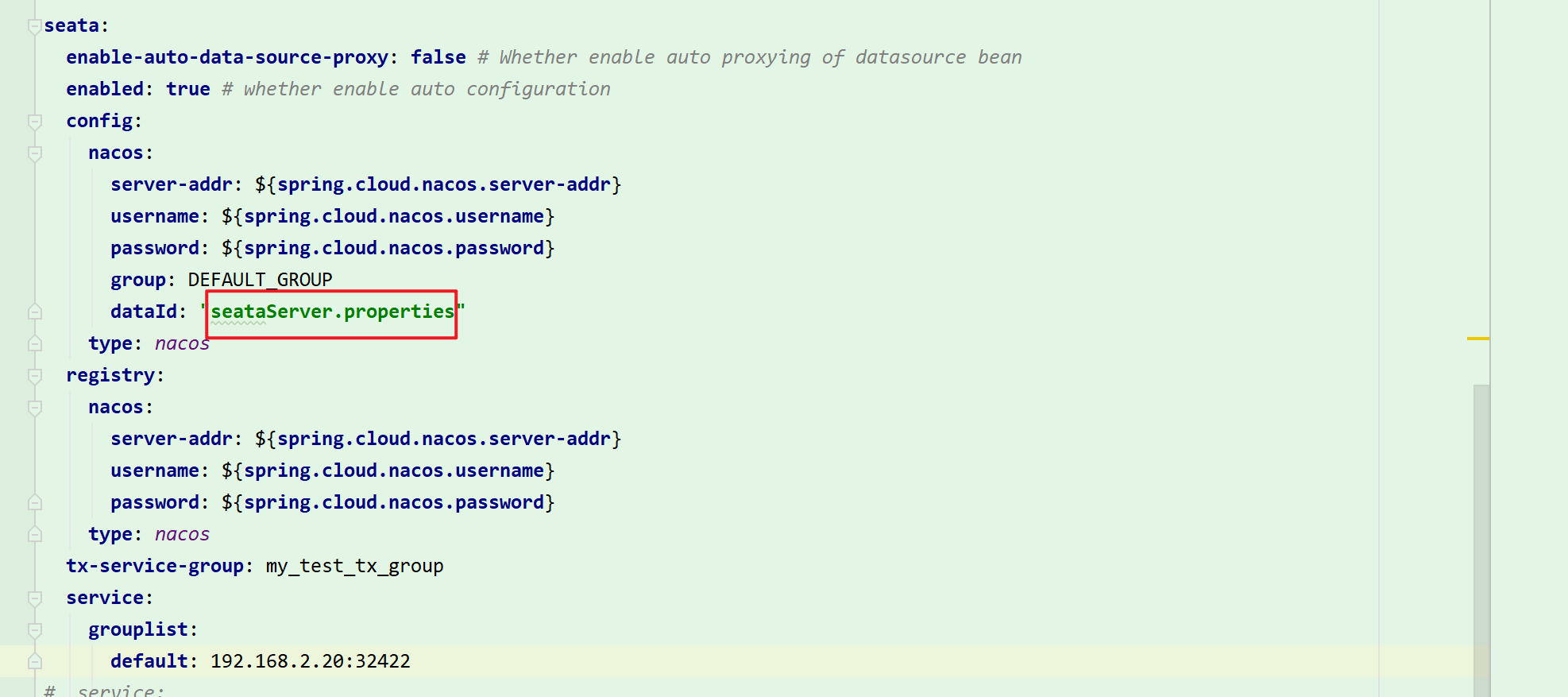

在nacos配置中心配置

seataServer.properties

transport.type=TCPtransport.server=NIOtransport.heartbeat=truetransport.enableClientBatchSendRequest=falsetransport.threadFactory.bossThreadPrefix=NettyBosstransport.threadFactory.workerThreadPrefix=NettyServerNIOWorkertransport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandlertransport.threadFactory.shareBossWorker=falsetransport.threadFactory.clientSelectorThreadPrefix=NettyClientSelectortransport.threadFactory.clientSelectorThreadSize=1transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThreadtransport.threadFactory.bossThreadSize=1transport.threadFactory.workerThreadSize=defaulttransport.shutdown.wait=3service.vgroupMapping.my_test_tx_group=defaultservice.default.grouplist=192.168.2.20:32422service.enableDegrade=falseservice.disableGlobalTransaction=falseclient.rm.asyncCommitBufferLimit=10000client.rm.lock.retryInterval=10client.rm.lock.retryTimes=30client.rm.lock.retryPolicyBranchRollbackOnConflict=trueclient.rm.reportRetryCount=5client.rm.tableMetaCheckEnable=falseclient.rm.tableMetaCheckerInterval=60000client.rm.sqlParserType=druidclient.rm.reportSuccessEnable=falseclient.rm.sagaBranchRegisterEnable=falseclient.tm.commitRetryCount=5client.tm.rollbackRetryCount=5client.tm.defaultGlobalTransactionTimeout=60000client.tm.degradeCheck=falseclient.tm.degradeCheckAllowTimes=10client.tm.degradeCheckPeriod=2000store.mode=dbstore.publicKey=store.file.dir=file_store/datastore.file.maxBranchSessionSize=16384store.file.maxGlobalSessionSize=512store.file.fileWriteBufferCacheSize=16384store.file.flushDiskMode=asyncstore.file.sessionReloadReadSize=100store.db.datasource=druidstore.db.dbType=mysqlstore.db.driverClassName=com.mysql.jdbc.Driverstore.db.url=jdbc:mysql://192.168.2.20:30569/seata?useUnicode=true&rewriteBatchedStatements=truestore.db.user=rootstore.db.password=rootstore.db.minConn=5store.db.maxConn=30store.db.globalTable=global_tablestore.db.branchTable=branch_tablestore.db.queryLimit=100store.db.lockTable=lock_tablestore.db.maxWait=5000store.redis.mode=singlestore.redis.single.host=127.0.0.1store.redis.single.port=6379store.redis.sentinel.masterName=store.redis.sentinel.sentinelHosts=store.redis.maxConn=10store.redis.minConn=1store.redis.maxTotal=100store.redis.database=0store.redis.password=store.redis.queryLimit=100server.recovery.committingRetryPeriod=1000server.recovery.asynCommittingRetryPeriod=1000server.recovery.rollbackingRetryPeriod=1000server.recovery.timeoutRetryPeriod=1000server.maxCommitRetryTimeout=-1server.maxRollbackRetryTimeout=-1server.rollbackRetryTimeoutUnlockEnable=falseclient.undo.dataValidation=trueclient.undo.logSerialization=jacksonclient.undo.onlyCareUpdateColumns=trueserver.undo.logSaveDays=7server.undo.logDeletePeriod=86400000client.undo.logTable=undo_logclient.undo.compress.enable=trueclient.undo.compress.type=zipclient.undo.compress.threshold=64klog.exceptionRate=100transport.serialization=seatatransport.compressor=nonemetrics.enabled=falsemetrics.registryType=compactmetrics.exporterList=prometheusmetrics.exporterPrometheusPort=9898

使用kubesphere部署

dockerhub

资源分配

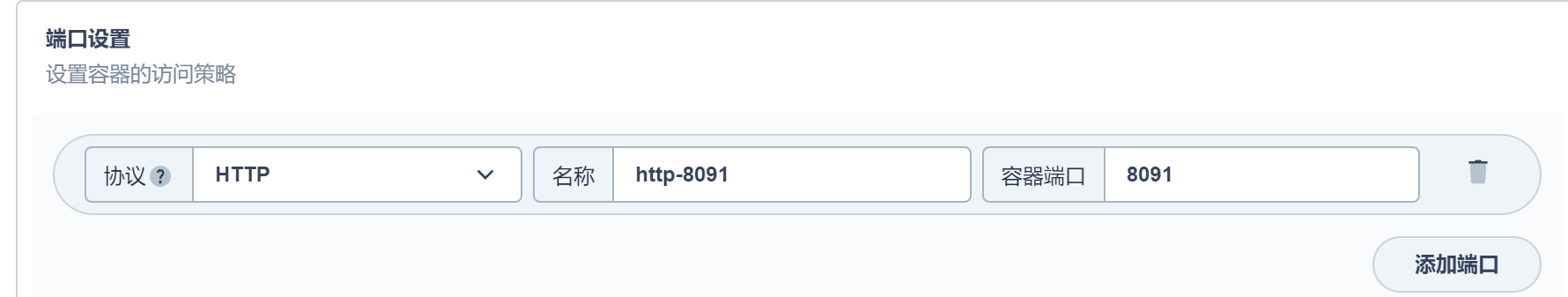

端口配置

同步主机时区

配置文件子路径挂载

(* 默认使用路径挂载会覆盖容器内所有配置,所以如果只需要覆盖个别配置可以使用子路径)

/seata-server/resources/registry.conf

/seata-server/resources/file.conf

configMap

seata-conf

file.conf

sql脚本在seata源码seata-1.4.2\script\server\db中

## transaction log store, only used in seata-serverstore {## store mode: file、db、redismode = "db"## rsa decryption public keypublicKey = ""## file store propertyfile {## store location dirdir = "sessionStore"# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptionsmaxBranchSessionSize = 16384# globe session size , if exceeded throws exceptionsmaxGlobalSessionSize = 512# file buffer size , if exceeded allocate new bufferfileWriteBufferCacheSize = 16384# when recover batch read sizesessionReloadReadSize = 100# async, syncflushDiskMode = async}service {#transaction service group mappingvgroupMapping.my_test_tx_group = "default"#only support when registry.type=file, please don't set multiple addressesdefault.grouplist = "192.168.2.20:32422"#degrade, current not supportenableDegrade = false#disable seatadisableGlobalTransaction = false}## database store propertydb {## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.datasource = "druid"## mysql/oracle/postgresql/h2/oceanbase etc.dbType = "mysql"driverClassName = "com.mysql.jdbc.Driver"## if using mysql to store the data, recommend add rewriteBatchedStatements=true in jdbc connection paramurl = "jdbc:mysql://192.168.2.20:30569/seata?rewriteBatchedStatements=true"user = "root"password = "root"minConn = 5maxConn = 100globalTable = "global_table"branchTable = "branch_table"lockTable = "lock_table"queryLimit = 100maxWait = 5000}## redis store propertyredis {## redis mode: single、sentinelmode = "single"## single mode propertysingle {host = "127.0.0.1"port = "6379"}## sentinel mode propertysentinel {masterName = ""## such as "10.28.235.65:26379,10.28.235.65:26380,10.28.235.65:26381"sentinelHosts = ""}password = ""database = "0"minConn = 1maxConn = 10maxTotal = 100queryLimit = 100}}

registry.conf

registry {# file 、nacos 、eureka、redis、zk、consul、etcd3、sofatype = "nacos"nacos {application = "seata-server"serverAddr = "192.168.2.20:30010"group = "SEATA_GROUP"namespace = ""cluster = "default"username = "nacos"password = "nacos"}eureka {serviceUrl = "http://localhost:8761/eureka"application = "default"weight = "1"}redis {serverAddr = "localhost:6379"db = 0password = ""cluster = "default"timeout = 0}zk {cluster = "default"serverAddr = "127.0.0.1:2181"sessionTimeout = 6000connectTimeout = 2000username = ""password = ""}consul {cluster = "default"serverAddr = "127.0.0.1:8500"aclToken = ""}etcd3 {cluster = "default"serverAddr = "http://localhost:2379"}sofa {serverAddr = "127.0.0.1:9603"application = "default"region = "DEFAULT_ZONE"datacenter = "DefaultDataCenter"cluster = "default"group = "SEATA_GROUP"addressWaitTime = "3000"}file {name = "file.conf"}}config {# file、nacos 、apollo、zk、consul、etcd3type = "nacos"nacos {serverAddr = "192.168.2.20:30010"namespace = ""group = "SEATA_GROUP"username = "nacos"password = "nacos"dataId = "seataServer.properties"}consul {serverAddr = "127.0.0.1:8500"aclToken = ""}apollo {appId = "seata-server"## apolloConfigService will cover apolloMetaapolloMeta = "http://192.168.1.204:8801"apolloConfigService = "http://192.168.1.204:8080"namespace = "application"apolloAccesskeySecret = ""cluster = "seata"}zk {serverAddr = "127.0.0.1:2181"sessionTimeout = 6000connectTimeout = 2000username = ""password = ""nodePath = "/seata/seata.properties"}etcd3 {serverAddr = "http://localhost:2379"}file {name = "file.conf"}}